Cycle 1 Reflection: Looking Across, Moving Inside

Posted: December 10, 2017 Filed under: Uncategorized

Cycle 1 was a chance to pivot in how I was approaching this project. At this point I had a working understanding of what the installation was going to do, but performing it in front of my colleagues revealed methodological challenges.

Specifically, the rotoscoping process I was using to remove dancers from the background of prerecorded videos was far too time-consuming. This was how the process worked:

- Acquire short films of dancers performing or rehearsing (gathered from friends or shot myself)

- Import videos into After Effects

- Use the After Effects rotoscoping tool to cut each dancer out of the video onto their own composition layer. The tool attempts to intelligently locate the edge of the object you are trying to isolate in each frame, but its success depends on how strongly the object contrasts with the background, how fast the object is moving, and how many of its parts move in different directions. A dancer in sweats against a white wall with many other objects around is obviously not a good candidate for predictive rotoscoping, so each frame must be cleaned up by hand. Additionally, when dancers pass in front of or behind each other, or make contact, it is virtually impossible to separate them.

- Once every frame is cleaned up, each dancer is exported to a standalone video with an alpha channel

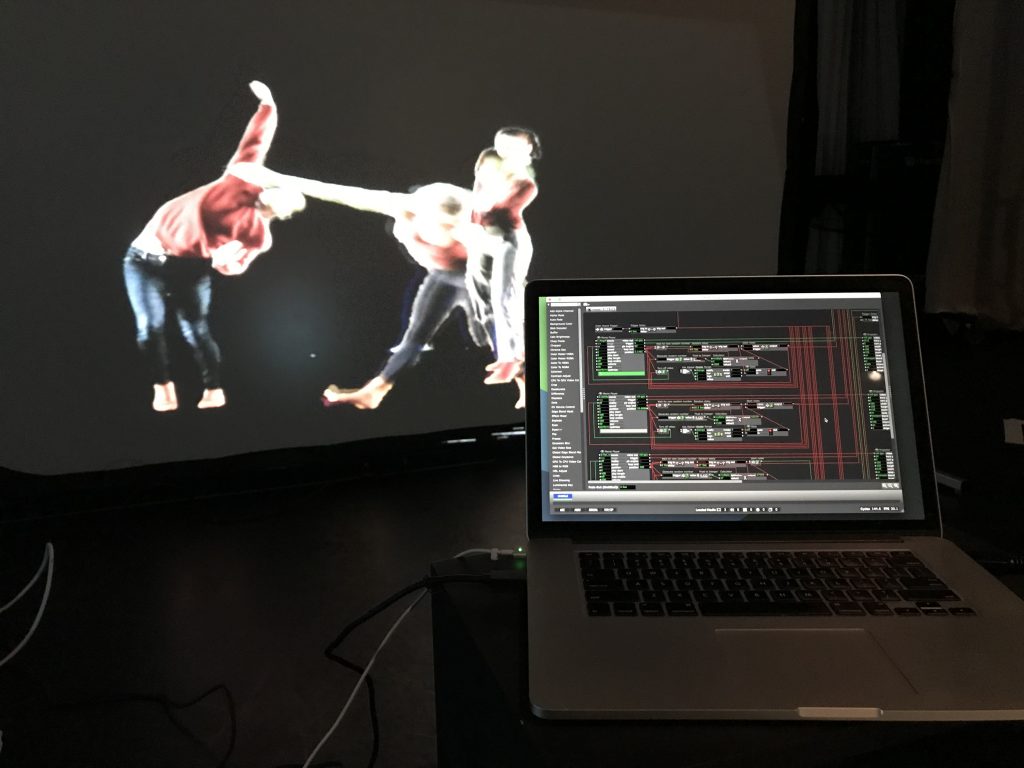

This is a very short test I did with a live dancer and a rotoscoped dancer; it represents at least eight hours of rotoscoping. It looks good, but the process is incredibly inefficient.

Switching to a green-screen capture model should make this process more efficient, allowing me to spend more time on the actual interaction between the video and the live dancer and less on simply compiling the required media. Additionally, the resolution and depth of field of the original Kinect were too low and too shallow for the live participant to interact with the prerecorded dancer in a meaningful way. Alex suggested upgrading to the Kinect 2, which has higher resolution and greater depth of field.

Attached is the initial Isadora patch for placing a silhouette of a live person alongside a video:

https://www.dropbox.com/s/54qn8bfmoc2o5hg/Cycle-1.izz?dl=0

Final Project: Looking Across, Moving Inside

Posted: December 10, 2017 Filed under: Final Project, Uncategorized

What are different ways that we can experience a performance? Erin Manning suggests that topologies of experience, or relationscapes, reveal the relationships between making, performing, and witnessing: for example, the relationships among writing a script or score, assembling performers, rehearsing, performing, and engaging the audience. Understanding these associations is an interactive and potentially immersive process that allows one to look across and move inside a work in a way that witnessing alone does not. For this project, I created an immersive, interactive installation that allows an audience member to look across and move inside a dance. The installation explores the potential for reintroducing the dimension of depth to prerecorded video on a flat screen.

Hardware and Software

The required hardware and software include a large screen that supports rear projection, a digital projector, an Xbox Kinect 2, a flat-panel display with speakers, and two laptops (one running Isadora, the other running PowerPoint). These are arranged as follows:

A screen is set up in the center of the space with the projector and one laptop behind it. The Kinect 2 sensor is slipped under the screen, pointing at an approximately six-foot by six-foot space delineated by tape on the floor. The flat-panel display and second laptop are on a stand next to the screen, angled toward the taped area.

The laptop behind the screen is running the Isadora patch and the second laptop simply displays a PowerPoint slide that instructs the participant to “Step inside the box, move inside the dance.”

Media

Generally, projection in dance performance places the live dancers either in front of or behind the projected image. A live dancer cannot move into the foreground or background at will unless when and where they will move has been predetermined. In this installation, the live dancer can move upstage or downstage at will. To achieve this, the prerecorded dancers must be filmed individually, isolated with an alpha channel, and then composited together.

I first attempted to rotoscope individual dancers out of their backgrounds in prerecorded dance videos. Despite helpful tools in After Effects designed to speed up this process, each frame of video must still be manually corrected, and when two dancers overlap the process becomes extremely time-consuming. One minute of rotoscoped video takes approximately four hours of work. This is an initial test using a dancer rotoscoped from a video shot in a dance studio:

Abandoning that approach, and with the help of Sarah Lawler, I recorded ten dancers moving individually in front of a green screen. This process, while still requiring post-processing in After Effects, was significantly faster. These ten alpha-channeled videos then comprised the pre-recorded media necessary for the work. An audio track was added for background music. Here is an example of a green-screen dancer:

Programming

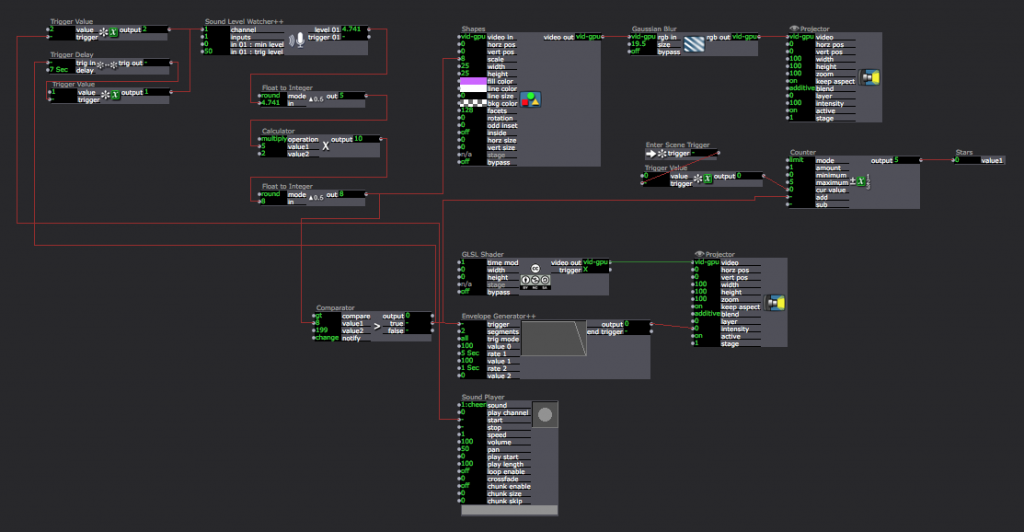

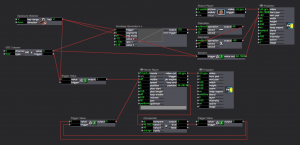

The Isadora patch was divided into three main functions:

Videos

The Projector for each prerecorded video was placed on an odd-numbered layer. When a video ends, a random number of seconds passes before that dancer re-enters the stage. A Gate actor prevents more than three dancers from being on stage at once by keeping track of how many videos are currently playing.
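The scheduling rule above (random re-entry delay, at most three dancers on stage) can be modeled outside Isadora as a small simulation. This is a hypothetical Python sketch, not the patch itself: the delay range and clip lengths are assumed values, and the Gate actor is approximated by a simple count of playing clips.

```python
import random
from dataclasses import dataclass

MAX_ON_STAGE = 3            # the Gate's limit on simultaneous dancers
MIN_WAIT, MAX_WAIT = 2, 8   # hypothetical re-entry delay range, in seconds


@dataclass
class Clip:
    layer: int            # odd-numbered layer for a prerecorded dancer
    length: int           # clip duration in seconds (assumed)
    playing: bool = False
    ends_at: int = 0
    ready_at: int = 0     # earliest second this dancer may re-enter


def step(clips, now, rng):
    """Advance the simulation to second `now`; return layers on stage."""
    # Finished clips leave the stage and wait a random number of seconds.
    for c in clips:
        if c.playing and now >= c.ends_at:
            c.playing = False
            c.ready_at = now + rng.randint(MIN_WAIT, MAX_WAIT)
    # Gate: start waiting clips only while fewer than MAX_ON_STAGE play.
    for c in clips:
        if sum(1 for k in clips if k.playing) >= MAX_ON_STAGE:
            break
        if not c.playing and now >= c.ready_at:
            c.playing = True
            c.ends_at = now + c.length
    return [c.layer for c in clips if c.playing]


rng = random.Random(1)
# Ten dancers on layers 1, 3, 5, ..., 19.
clips = [Clip(layer=2 * i + 1, length=rng.randint(5, 12)) for i in range(10)]
for t in range(60):
    active = step(clips, t, rng)
    assert len(active) <= MAX_ON_STAGE
```

Keeping the count check inside the start loop means the limit holds even when several dancers become ready in the same second.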

The Live Dancer

Brightness data was captured from the Kinect 2 (upgraded from the original Kinect for greater resolution and depth of field) via Syphon and fed through several filters in order to isolate the body of the participant.
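In this setup a brighter pixel means a surface closer to the Kinect, so one simple way to isolate a body is to keep only pixels inside a brightness band. The sketch below assumes an 8-bit grayscale frame given as a list of rows, with hypothetical `low`/`high` thresholds; it stands in for, and is not a copy of, the filter chain in the patch.

```python
def isolate_body(frame, low, high):
    """Return a binary mask keeping pixels whose brightness is in [low, high].

    Pixels darker than `low` (far walls, background) and brighter than
    `high` (assumed noise very close to the sensor) are discarded.
    """
    return [[1 if low <= px <= high else 0 for px in row] for row in frame]


def mean_brightness(frame, mask):
    """Average brightness of the kept pixels, or 0.0 if none were kept."""
    kept = [px for row, mrow in zip(frame, mask)
            for px, m in zip(row, mrow) if m]
    return sum(kept) / len(kept) if kept else 0.0


frame = [
    [10, 12, 11, 10],
    [10, 140, 150, 11],
    [12, 145, 155, 10],
]
mask = isolate_body(frame, low=100, high=250)
print(mean_brightness(frame, mask))
```

The average brightness of the kept pixels gives a single number that tracks how far forward the participant is standing.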

Calculating Depth

The Isadora logic was set up so that as the participant moved forward (increasing brightness), the layer on which they were projected increased in steps of two, always staying on an even number. As they moved backward, the layer number decreased. For example, the live dancer might start on layer 2, behind the prerecorded dancers on layers 3, 5, 7, and 9. When the live dancer moves forward to layer 6, they are in front of the prerecorded dancers on layers 3 and 5, but behind those on layers 7 and 9.
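The depth-to-layer rule can be written as a small function. This sketch assumes the participant's brightness arrives as a 0-255 value, that higher layers draw in front (as in the example above), and that the prerecorded dancers sit on layers 3, 5, 7, and 9. The brightness breakpoints are hypothetical and would need tuning for a given room.

```python
# Hypothetical brightness breakpoints: darker (farther) -> lower layer.
BREAKPOINTS = [60, 100, 140, 180]   # 0-255 scale, tuned per space
BASE_LAYER = 2                      # rearmost layer for the live dancer


def live_layer(brightness):
    """Map the participant's brightness to an even layer number.

    Each breakpoint crossed moves the live dancer up two layers, so they
    always sit between two odd-numbered prerecorded layers.
    """
    steps = sum(1 for b in BREAKPOINTS if brightness >= b)
    return BASE_LAYER + 2 * steps


def in_front_of(live, recorded_layers):
    """Prerecorded layers that the live dancer currently covers."""
    return [layer for layer in recorded_layers if layer < live]


print(in_front_of(live_layer(110), [3, 5, 7, 9]))
```

Stepping by two guarantees the live dancer never shares a layer with a prerecorded one, which keeps the stacking order unambiguous.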

Download the patch here: https://www.dropbox.com/s/6anzklc0z80k7z4/Depth%20Study-5-KinectV2.izz?dl=0

In Practice

Watching people interact with the installation was extremely satisfying. There is a moment of “oh!” when they realize that they can move in and around the dancers on the screen. People experimented with jumping forward and back, getting low to the floor, mimicking the movement of the dancers, leaving the stage and coming back on, and more. Here are some examples of people interacting with the dancers:

Devising Experiential Media from Benny Simon on Vimeo.

Future Questions

Is it possible for the live participant to be on more than one layer at a time? In other words, could they curve their body around a prerecorded dancer’s body? This would require a more complex method of capturing movement in real time than the Kinect can provide.

What else can happen in a virtual environment when dancers move in and around each other? What configurations of movement can trigger effects or behaviors that are not possible in a physical world?

Pressure Project #3: Paper Telephone Superstar!

Posted: December 10, 2017 Filed under: Pressure Project 3

Paper Telephone Superstar! is a simple but interactive riff on the old Telephone game, in which the meaning of media is blurred through transmission. My goals for this project were to create a gaming experience that was familiar, required only simple instructions, and included a responsive digital element that enhanced play.

The rules of the game were distributed to players, along with a blank piece of paper:

- Sit in a circle

- Write a short phrase at the top of the paper

- Pass your paper to the person on your right

- Draw a picture of what is written, then fold the paper down to cover the writing

- Pass the paper to the person on your right

- Write a short phrase that describes the picture, then fold the paper down to cover the picture

- Pass the paper to the person on your right

- Repeat until everyone has their original paper back

- Unfold your paper and take turns sharing the hilarious results! Clap loudly for the best or funniest results! Whoever gets the spinny star wins!

I watched the players read the rules and then settle themselves on the floor in a circle. A screen mounted on the wall above the players pulsed slowly with a purple light that grew or shrank depending on how much noise the players were making.

After the first round, when everyone had gotten their original papers back, the players took turns unfolding them and laughing at the results. The biggest laughs triggered the Isadora patch to erupt with fireworks and cheering, and a star dropped down on the screen. The person who earned the fifth star won the game, celebrated with a gigantic spinning star, more fireworks, and louder cheering!

The patch itself is straightforward: it routes audio from the live capture input into a Sound Level Watcher actor, and then into a Comparator that determines what actions should happen and when. A counter keeps track of how many stars have fallen and when to kick off the final animation that designates the winner.
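The Sound Level Watcher, Comparator, and counter chain amounts to a threshold check with a tally. Here is a minimal Python model of that chain, assuming the watcher reports a 0-100 level. `STAR_THRESHOLD` is a hypothetical stand-in for the Comparator value, and `STARS_TO_WIN` is five, per the description above. One addition is an assumption of mine, not something the post describes: the level must drop below the threshold before another star can fall, so one long laugh counts only once.

```python
STAR_THRESHOLD = 70   # hypothetical Comparator value (0-100 level scale)
STARS_TO_WIN = 5


class LaughMeter:
    def __init__(self, threshold=STAR_THRESHOLD, stars_to_win=STARS_TO_WIN):
        self.threshold = threshold
        self.stars_to_win = stars_to_win
        self.stars = 0        # the counter
        self.armed = True     # assumed re-arm so one laugh = one star

    def feed(self, level):
        """Process one sound-level reading; return the event it triggers."""
        if level < self.threshold:
            self.armed = True
            return None
        if not self.armed or self.stars >= self.stars_to_win:
            return None
        self.armed = False
        self.stars += 1
        if self.stars == self.stars_to_win:
            return "winner"   # giant spinning star, more fireworks
        return "star"         # fireworks, cheering, a star drops


meter = LaughMeter()
readings = [20, 85, 90, 30, 75, 10, 95, 5, 80, 5, 99, 5, 99]
events = [meter.feed(x) for x in readings]
```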

The most challenging part of this project was gauging the appropriate sound level to trigger a star. This varied with the room, the distance from the microphone, the number of people, and how loud they were. I did a lot of yelling and clapping in my apartment to find an approximate level, and then surreptitiously checked my classmates’ sound level during a busy time in the Motion Lab. I also spent time finessing the animation of the falling stars, adding a slight bounce at the end.

This game is replayable, but only once the sound levels are correctly set for a given environment. One way to resolve this would be to include a sound calibration step in the Isadora patch that sets a high and a low level before the game starts. Another option would be to swap sound for movement; for example, players could wave their arms in the air to indicate how funny the results of the game are.
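One way to prototype the calibration idea is to record a quiet baseline and a deliberately loud moment before play, then express each later reading as a fraction of that range. `calibrate`, `normalized`, and `triggers_star` are hypothetical helpers, and the 0.8 trigger fraction is an arbitrary starting point.

```python
def calibrate(quiet_samples, loud_samples):
    """Derive a (low, high) range from readings taken before the game."""
    low = sum(quiet_samples) / len(quiet_samples)
    high = max(loud_samples)
    if high <= low:
        raise ValueError("loud samples must exceed the quiet baseline")
    return low, high


def normalized(level, low, high):
    """Map a raw level into 0.0-1.0 relative to the calibrated range."""
    return min(1.0, max(0.0, (level - low) / (high - low)))


def triggers_star(level, low, high, fraction=0.8):
    """True when a reading reaches the chosen fraction of the range."""
    return normalized(level, low, high) >= fraction


low, high = calibrate([8, 10, 12], [60, 70, 65])
```

Because the threshold becomes a fraction of the room's own range, the same patch could adapt to a different room or group size without retuning by hand.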

Pressure Project #2: Alligators in the Sewers!

Posted: October 8, 2017 Filed under: Uncategorized

This audio exploration is designed to evoke sensation through sound in a whimsical way. Working from the myth of the alligators that live in the New York City sewers, I sketched out a one-minute narrative encounter that would be recognizable (especially to a city-dweller), a little creepy, and just a bit funny.

- A regular day on a busy New York City street

- The clatter of a manhole cover being opened

- Climbing down into the dark sewer

- Walking slowly in the dark through running water (and who knows what else)

- A sound in the distance . . . a pause

- The roar of an alligator!

Assembling these sounds was simply a matter of scouring YouTube, but mixing them proved to be a bit more challenging. I layered each track in GarageBand, paying special attention to the transition from the busy world above to the dark, industrial swamp below. I also worked to differentiate the sound of stepping down a ladder from the sound of walking through the tunnel. An overlay of swamp and sewer sounds created the atmosphere belowground, and a random water drop built the tension. It was important to stop the footsteps immediately after the listener hears the soft roar of the alligator in the distance, pause, and then bring in the loudest sequence of alligator roars I could find.

The minute-long requirement was tricky to work with because of the relationship between time and tension. I think the project succeeds in general, but an extra minute or two could really ratchet up the sensations of moving from safety, to curiosity, to trepidation, to terror. Take a listen for yourself! I recommend turning off all the lights and lying on the floor.

Pressure Project #1: (Mostly) Bad News

Posted: September 16, 2017 Filed under: Pressure Project I, Uncategorized

My concept for this first Pressure Project emerged from research I am currently engaged in concerning structures of representation. The tarot is an on-the-nose example of just such a structure, with its Derridean ordering of signs and signifiers.

I began by sketching out a few goals:

The experience must evoke a sense of mystery punctuated by a sense of relief through humor or the macabre.

The experience must be narrative, in that the user can move through it without external instructions.

The experience must provide an embodied experience consistent with a tarot reading.

I began by creating a scene that suggests the beginning of a tarot reading. I found images of tarot cards and built a reusable “Card Spinner” user actor. Using wave generators, I moved the rotating cards along the x and y axes and simulated the z axis by zooming the cards in and out.
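The Card Spinner’s motion can be described as three periodic signals: sine waves for horizontal and vertical position, and a third wave driving zoom so that the card seems to move toward and away from the viewer. Here is a rough sketch of that arithmetic. The amplitudes, the period, and the quarter-cycle offset between x and y are assumed values, not the ones used in the patch.

```python
import math


def card_pose(t, period=6.0, radius=30.0, base_zoom=100.0, zoom_depth=25.0):
    """Position and zoom of a spinning card at time t (seconds).

    x and y are sine waves a quarter cycle apart, so the card traces a
    circle; zoom oscillates with twice the period to fake depth (z).
    """
    phase = 2 * math.pi * t / period
    x = radius * math.sin(phase)
    y = radius * math.sin(phase + math.pi / 2)   # equivalent to cosine
    zoom = base_zoom + zoom_depth * math.sin(phase / 2)
    return x, y, zoom


for t in (0.0, 1.5, 3.0):
    print(card_pose(t))
```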

Next I built the second scene, which suggests the laying of specific cards on the table. Originally I planned for the displayed cards to be random, but because of the time required to create an individual scene for each card, I opted to simply display the same three cards.

Finally, I worked to construct a scene for each card that signified the physical (well, virtual) card that the user chose. Here I deliberately moved away from the actual process of tarot in order to evoke a felt sensation in the user.

I wrote a short, rhyming fortune for each card:

The Queen of Swords Card – Happiness/Laughter

The Queen of Swords

with magic wards

doth cast a special spell:

“May all your moments

be filled with donuts

and honeyed milk, as well.”

The scene for The Queen of Swords card obviously needed to incorporate donuts, so I found a GLSL shader of animated donuts. It ran very slowly, and after looking at the code I determined that the way the sprinkles were animated was extremely inefficient, so I removed that function. Pharrell’s upbeat “Happy” worked perfectly as the soundtrack, and I carefully timed the fade-in of the fortune using trigger delays.

Judgment Card – Shame

I judge you shamed

now bear the blame

for deeds so foul and rotten!

Whence comes the bell

you’ll rot in hell

forlorn and fast forgotten!

The Judgment card scene is fairly straightforward, consisting of a background video of fire, an audio track of bells tolling over ominous music, and a timed fade-in of the fortune.

Wheel of Fortune – Macabre

With spiny spikes

a crown of thorns

doth lie atop your head.

Weep tears of grief

in sad motif

‘cuz now your dog is dead.

The Wheel of Fortune card scene was more complicated. At first I wanted upside-down puppies to drop randomly from the top of the screen, collecting at the bottom and eventually filling the entire screen. I could not figure out how to do this without having a large number of Picture Player actors sitting out of sight above the stage, which seemed inelegant, so I opted instead to have puppies simply drop from the top of the stage and off the bottom at random. Is there a way to instantiate actors programmatically? It seems like there should be.
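Outside Isadora, the original pile-up idea is usually handled with a growing list of sprite records instead of a fixed set of pre-placed players. Each drop appends a new record, and a record stops moving when it lands on the floor or on the stack beneath it. This is a minimal sketch that assumes a screen divided into a hypothetical number of columns and sprites of one fixed height. It does not answer the Isadora question; it only illustrates the intended behavior.

```python
import random

SCREEN_H = 100   # screen height in arbitrary units (assumed)
SPRITE_H = 10    # height of one puppy sprite (assumed)
COLUMNS = 8      # hypothetical landing columns across the screen


class PuppyPile:
    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        self.sprites = []               # one record per puppy, made on demand
        self.heights = [0] * COLUMNS    # stacked height in each column

    def drop(self):
        """Create a new puppy in a random column, just above the screen."""
        col = self.rng.randrange(COLUMNS)
        self.sprites.append({"col": col, "y": -SPRITE_H, "landed": False})

    def update(self, speed=5):
        """Move falling puppies down (y is the sprite's top edge)."""
        for s in self.sprites:
            if s["landed"]:
                continue
            floor = SCREEN_H - self.heights[s["col"]] - SPRITE_H
            s["y"] = min(s["y"] + speed, floor)
            if s["y"] == floor:         # touched the floor or the pile
                s["landed"] = True
                self.heights[s["col"]] += SPRITE_H

    def full(self):
        return all(h >= SCREEN_H for h in self.heights)


pile = PuppyPile(random.Random(0))
pile.drop()
for _ in range(50):
    pile.update()
```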

Now that I had the basics of each scene working, I turned to the logic of the user interaction. I did this in two phases:

In phase one I used keyboard watchers to move from one scene to the next or to go back to the beginning. On the selector scene, the 1, 2, and 3 keys were hooked up to choose a card. Using the keyboard as the main interface was a simple way to fine-tune the transitions between scenes and to ensure that the overall logic of the game made sense.
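The phase-one control scheme is essentially a small state machine over scenes. Below is a Python sketch of that logic. The scene names and the choice of the space bar to advance and `r` to restart are hypothetical; the post specifies only that 1, 2, and 3 choose a card on the selector scene.

```python
CARD_SCENES = {"1": "Queen of Swords", "2": "Judgment", "3": "Wheel of Fortune"}


class Reading:
    """Scene state: 'spinner' -> 'selector' -> one card scene."""

    def __init__(self):
        self.scene = "spinner"

    def key(self, k):
        """Apply one key press and return the resulting scene."""
        if k == "r":                                        # restart (assumed key)
            self.scene = "spinner"
        elif self.scene == "spinner" and k == " ":          # advance (assumed key)
            self.scene = "selector"
        elif self.scene == "selector" and k in CARD_SCENES:  # 1, 2, 3 pick a card
            self.scene = CARD_SCENES[k]
        return self.scene                                   # other keys are ignored
```

Keeping every transition in one place makes it easy to swap the triggers later (for example, OSC messages instead of key presses) without touching the scenes.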

The biggest challenge I ran into during this phase was in the Wheel of Fortune scene. I created a Puppy Dropper user actor that was activated by pressing the “d” key. When activated, a puppy would drop from the top of the screen at a random horizontal point. However, I ran into a few problems:

- I had to use a couple of calculators between the envelope generator and the projector to scale the vertical position correctly, so that the puppy would fall from the top of the screen to the bottom

- Because the sound the puppy made when falling was a movie, I had to use a comparator to reset the movie after each puppy drop. My solution for this is convoluted, and I now see that using the “loop end” trigger on the movie player would have been more efficient.
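The two calculators amount to a linear rescale: multiply the envelope’s output by a scale factor, then add an offset, so that the envelope’s start and end values land just above the top edge and just below the bottom edge. This sketch assumes the envelope runs from 0 to 100 and the vertical position runs from +60 (above the top) to -60 (below the bottom) on a hypothetical centered scale. The real numbers depend on the Projector settings and the image size.

```python
def linear_map(value, in_lo, in_hi, out_lo, out_hi):
    """Rescale value from [in_lo, in_hi] to [out_lo, out_hi].

    Equivalent to two chained calculators: multiply by `scale`,
    then add `offset`.
    """
    scale = (out_hi - out_lo) / (in_hi - in_lo)
    offset = out_lo - in_lo * scale
    return value * scale + offset


# Envelope 0 -> start above the top edge; envelope 100 -> end below the bottom.
for env in (0, 50, 100):
    print(env, linear_map(env, 0, 100, 60, -60))
```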

Phase two replaced the keyboard controls with the Leap controller, which provides a more embodied experience: waving your hands in a mystical way versus pressing a button.

Setting up the Leap itself was simple, but for whatever reason I could not get ManosOSC to talk to Isadora. I didn’t want to waste too much time, so I switched to Geco and was up and running in just a few minutes.

I then went through each scene and replaced the keyboard watchers with OSC listeners. I ran into several challenges here:

- The somewhat scattershot triggering of the OSC listeners sometimes confused Isadora. To solve this I inserted a few trigger delays, which slowed Isadora’s response enough that errant triggers didn’t interfere with the system. I think that with more precise calibration of the Leap and more narrowly defined listeners in Isadora I could eliminate this issue.

- Geco does not allow for recognition of individual fingers (the reason I wanted to use ManosOSC). Therefore, I had to leave the selector scene set up with keyboard watchers.
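The trigger delays act as a crude debounce. A more explicit version ignores any repeat of the same gesture that arrives within a short window after the last accepted one. This sketch assumes timestamps in seconds, hypothetical gesture names, and a 0.5-second window. It shows a generic debounce pattern, not the patch’s actual wiring.

```python
class Debouncer:
    """Accept a gesture only if enough time has passed since the last one."""

    def __init__(self, window=0.5):
        self.window = window
        self.last_accepted = {}   # gesture name -> time of last accepted trigger

    def accept(self, gesture, t):
        last = self.last_accepted.get(gesture)
        if last is not None and t - last < self.window:
            return False          # errant repeat inside the window: ignore
        self.last_accepted[gesture] = t
        return True


d = Debouncer(window=0.5)
stream = [("swipe_right", 0.00), ("swipe_right", 0.08), ("swipe_right", 0.21),
          ("swipe_left", 0.30), ("swipe_right", 0.90)]
accepted = [(g, t) for g, t in stream if d.accept(g, t)]
```

Unlike a fixed delay, this passes the first trigger through immediately and only filters the bursts that follow it.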

The last step in the process was to add user instructions in each scene so that it was clear how to progress through the experience. For example, “Thy right hand begins thy journey . . .”

My main takeaway from this project is that building individual scenes first and connecting them afterward saves a lot of time. Had I tried to build the interactivity between scenes up front, I would have had to do a lot of reworking as the scenes themselves evolved. In a sense, the individual scenes in this project are resources in and of themselves, ready to be employed experientially however I want. I could easily go back, redo only the parameters of the interactions between scenes, and create an entirely new experience. There is also plenty of room in this experience for more cues to help users move through the scenes, and for an element of randomness so that each user has a more individual experience.

Click to download the Isadora patch, Geco configuration, and supporting resource files:

http://bit.ly/2xHZHTO