cycle three: something that tingles ~

Posted: May 1, 2024 Filed under: Final Project | Tags: Cycle 3

In this iteration, I began with the intention to:

– scale up what I had in cycle 2 (e.g., the number of sensors/motors, and imagery?)

– check out the depth camera (will it be more satisfying than webcam tracking?)

– try another score for audience participation, based on the feedback from cycle 2

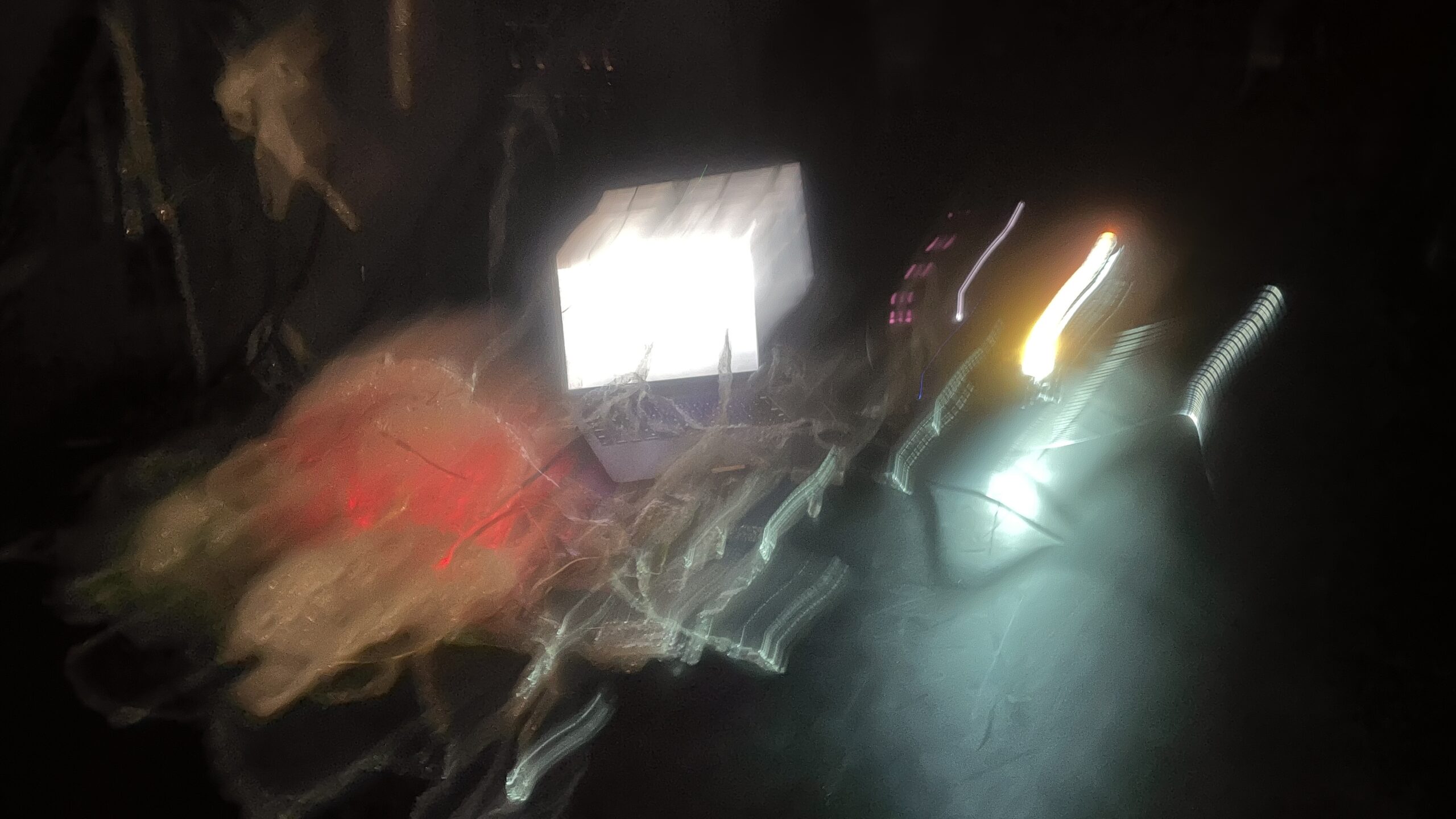

– add some touches to the space design with more bubble wrap.

Here is how those went…

/scale up/

I added more servo motors. This went pretty smoothly, and the effect was instant: the number of wiggling servos gives it more of a sense of a little creature.

I also attempted to add more flex/force sensors, but the data communication got very stuck. At times, Arduino told me that my board was disconnected, and the data did not flow into Isadora smoothly at all. What I decided: keep the sensors, and accept that the function is not going to be stable. At least they serve as tactile tentacles for touching, whether or not they activate the visuals.

I also tried adding a few more images to create multiple scenes beyond the oceanic scene I have been working with since the first cycle. I did make three new sets of imagery, but it started to feel like too much information packed in, and I couldn’t decide on their sequence and relationship, so I decided to leave them out for now and stick with my oceanic scene for the final cycle.

/depth cam?/

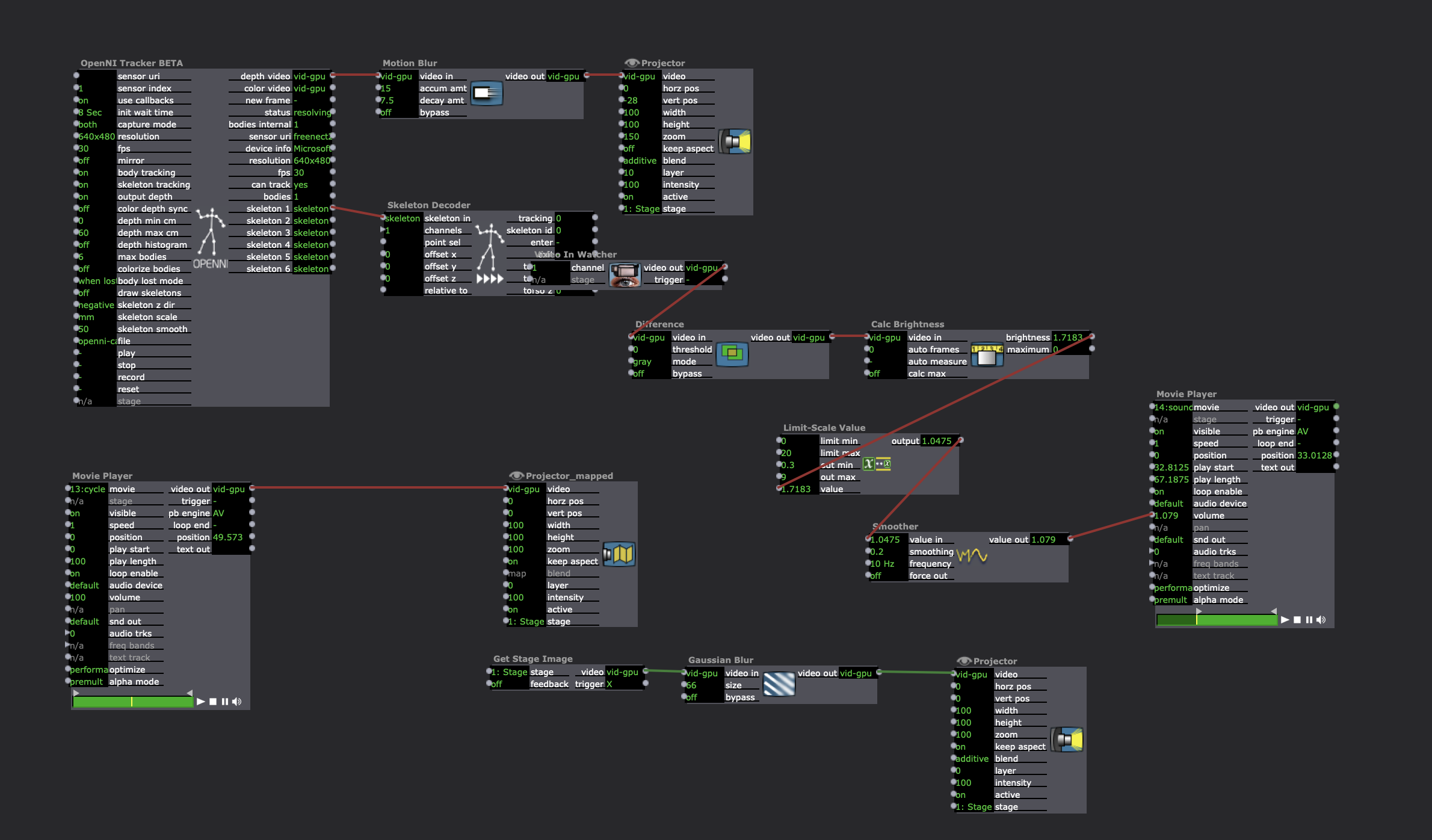

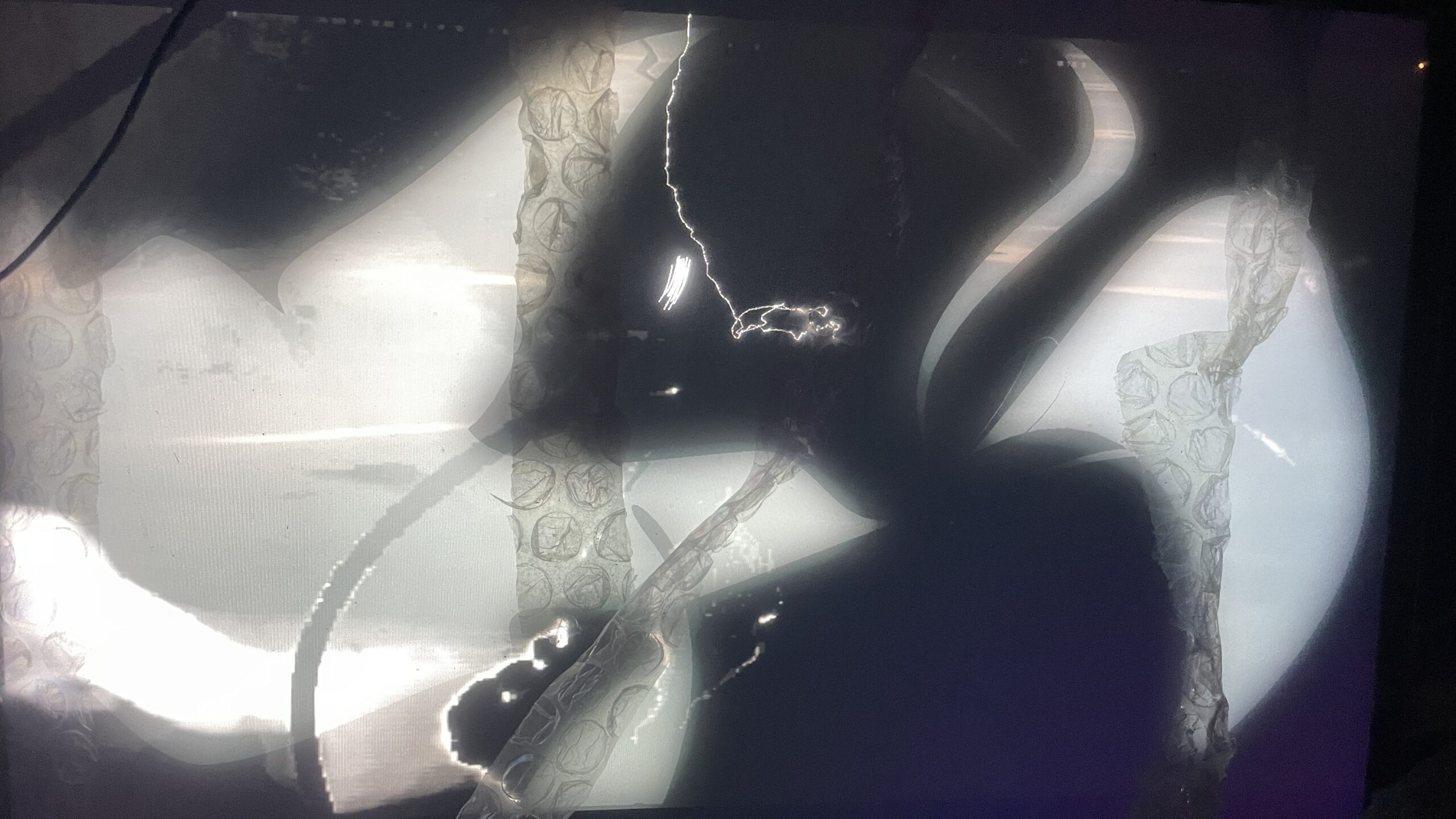

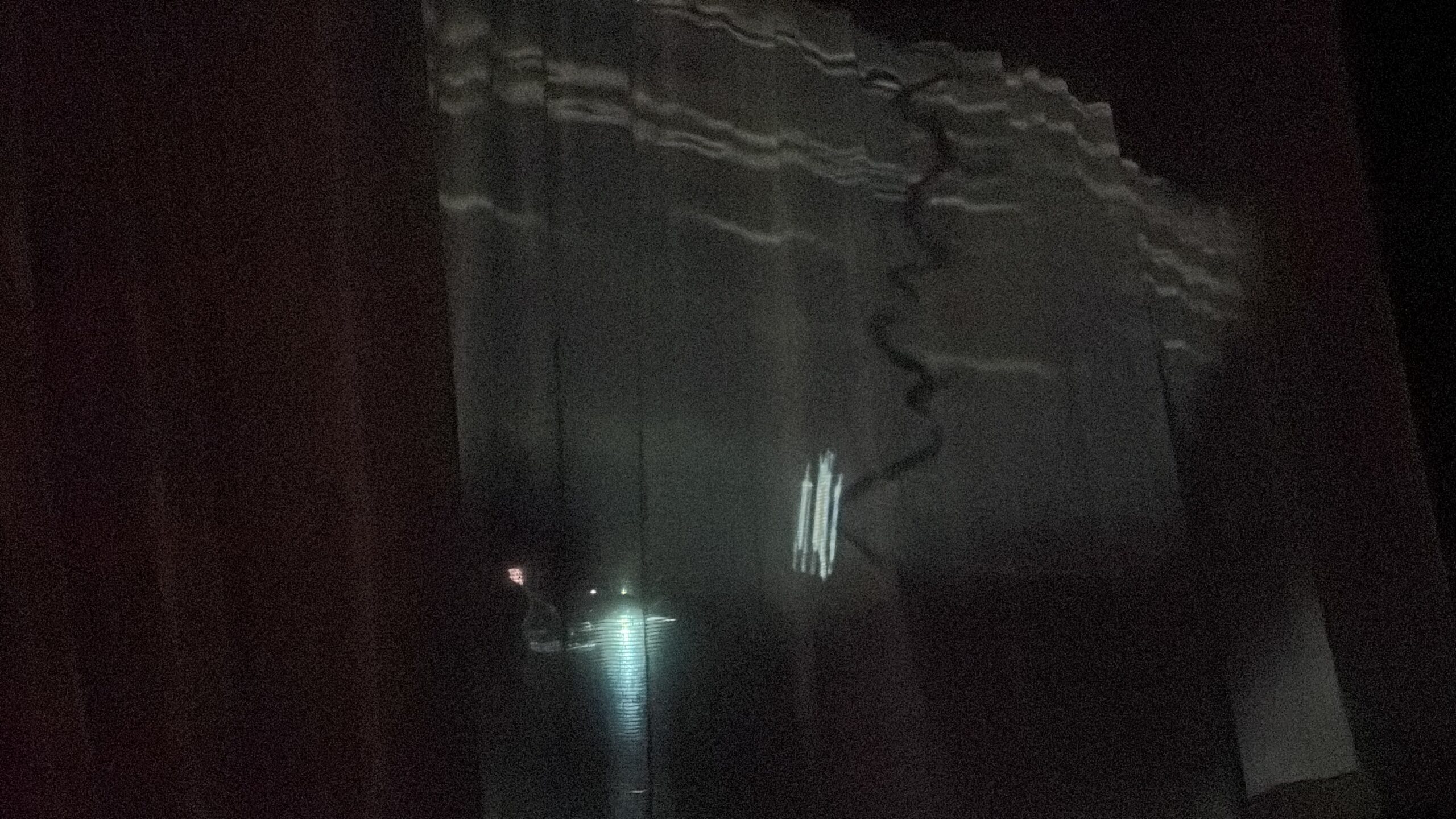

What I noticed about the depth cam at first is that it kept crashing Isadora, which was a bit frustrating and pushed me to work with it “lightly”. My initial intention was to see whether it would serve better than the webcam for tracking body position to animate the rope in my scene. But I also noticed that accurate tracking doesn’t seem to matter much in this work, so I just wanted to see what the depth cam’s potential was. It does give more accurate tracking, but the downside is that you have to stand at a certain distance, with your feet in the frame, before the cam starts tracking your skeleton position; in that sense it is less flexible than the Eyes++ actor. What I find interesting about the depth camera, though, is the white, ghostly body imagery it gives, so I ended up layering that on the video. It works especially well in a dark environment.
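A depth camera reports a distance for each pixel, and one common way to get a white body silhouette like this is to keep only the pixels that fall inside a near/far distance band. The sketch below is a minimal illustration under assumptions: depth frames are 2D lists of millimetre readings, 0 means “no reading”, and the function name and band limits are hypothetical. It is not how Isadora’s depth camera actor works internally.

```python
def depth_band_mask(depth_mm, near_mm=800, far_mm=2500):
    """Return a 0/255 mask: white where a pixel's depth lies in [near_mm, far_mm].

    depth_mm is a list of rows of integer millimetre readings (hypothetical
    format). A reading of 0 means "no depth data" and is treated as background.
    """
    mask = []
    for row in depth_mm:
        # A pixel is "body" only if it has a reading and sits inside the band.
        mask.append([255 if d and near_mm <= d <= far_mm else 0 for d in row])
    return mask


# A tiny frame: a body about 1.5 m away in front of a wall at 4 m.
frame = [
    [4000, 1500, 1500, 4000],
    [4000, 1480, 1520, 0],
]
print(depth_band_mask(frame))
```

Layering a mask like this over other video in a dark room is what produces the glowing-figure look; moving the band limits decides how much of the space counts as “body”.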

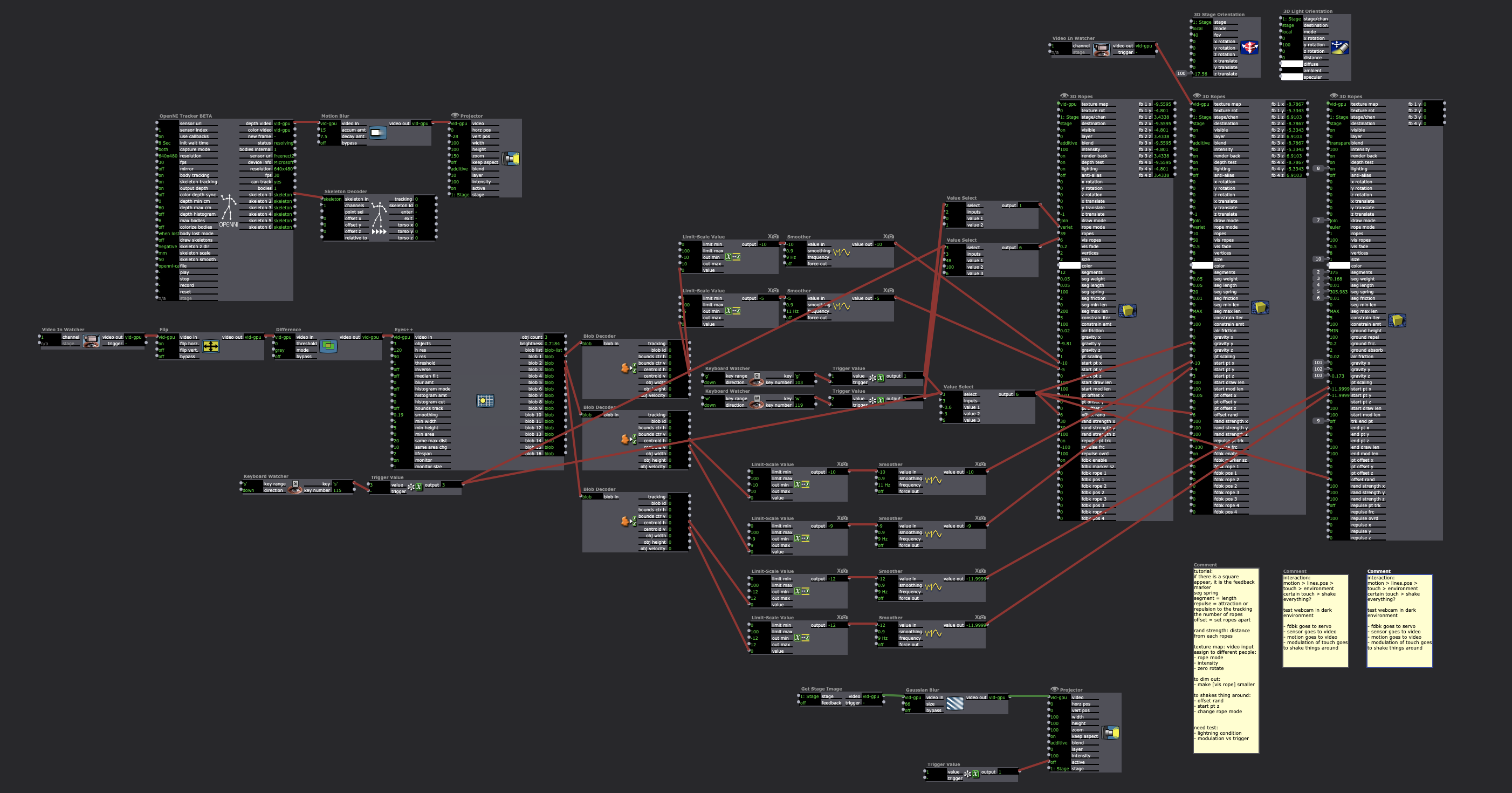

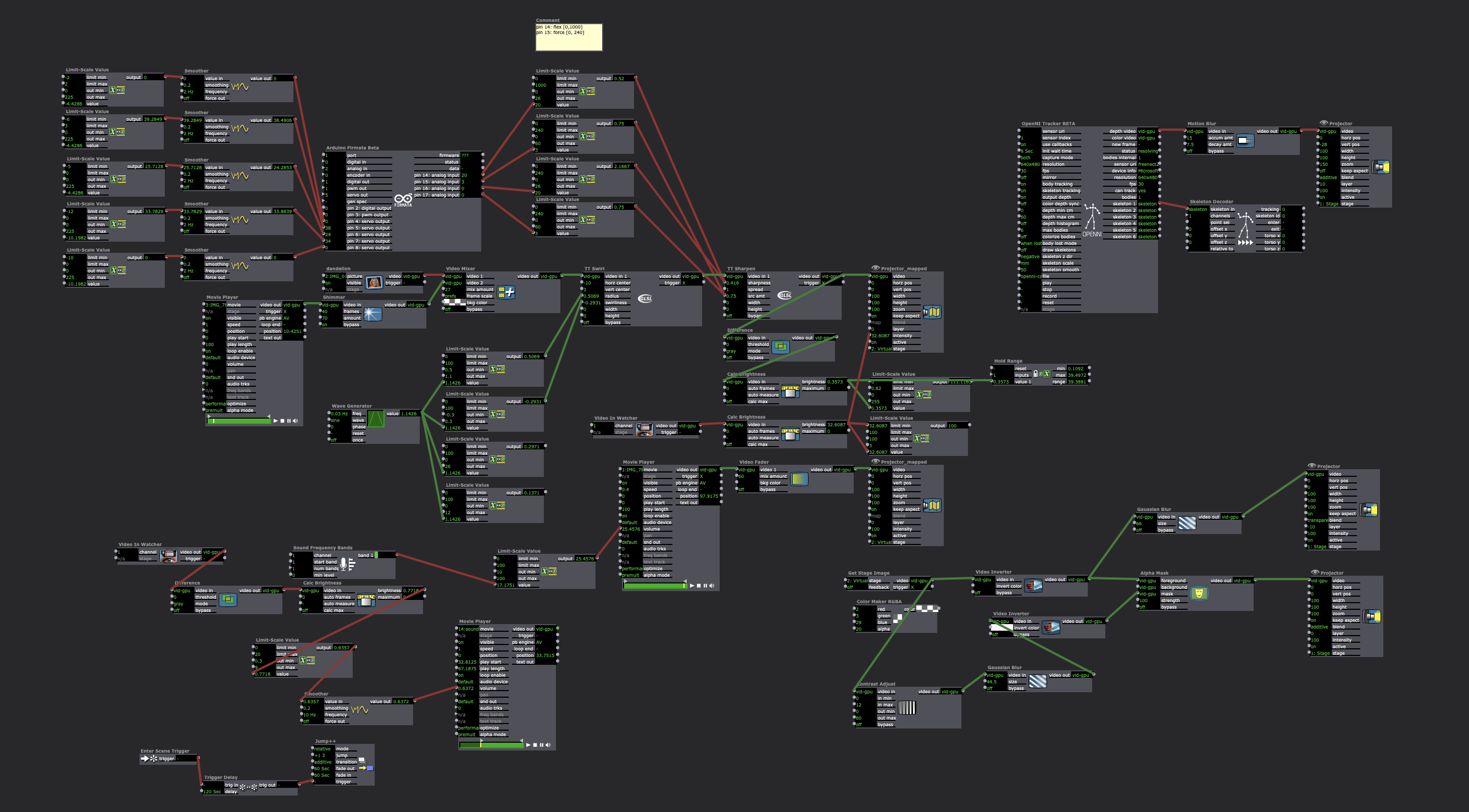

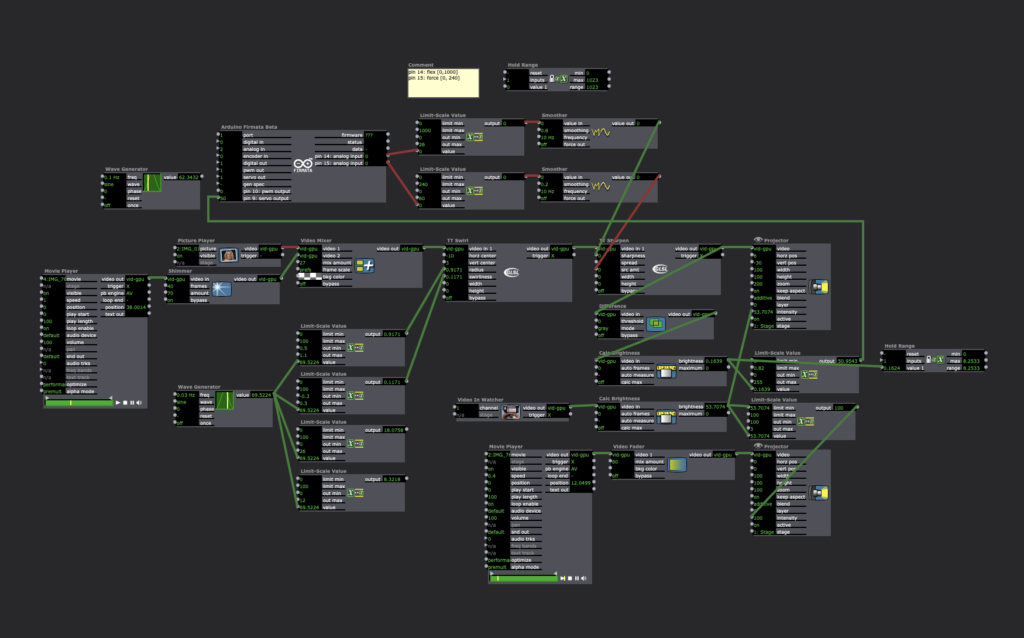

Here are the final Isadora patches:

/audience participation/

This time the score I decided to play with is: two people at a time explore it. The rest are observers who can give two verbal cues to the people exploring: “pause” and “reverse”. Everyone can move around, close by or at a distance, at any time.

/space design/

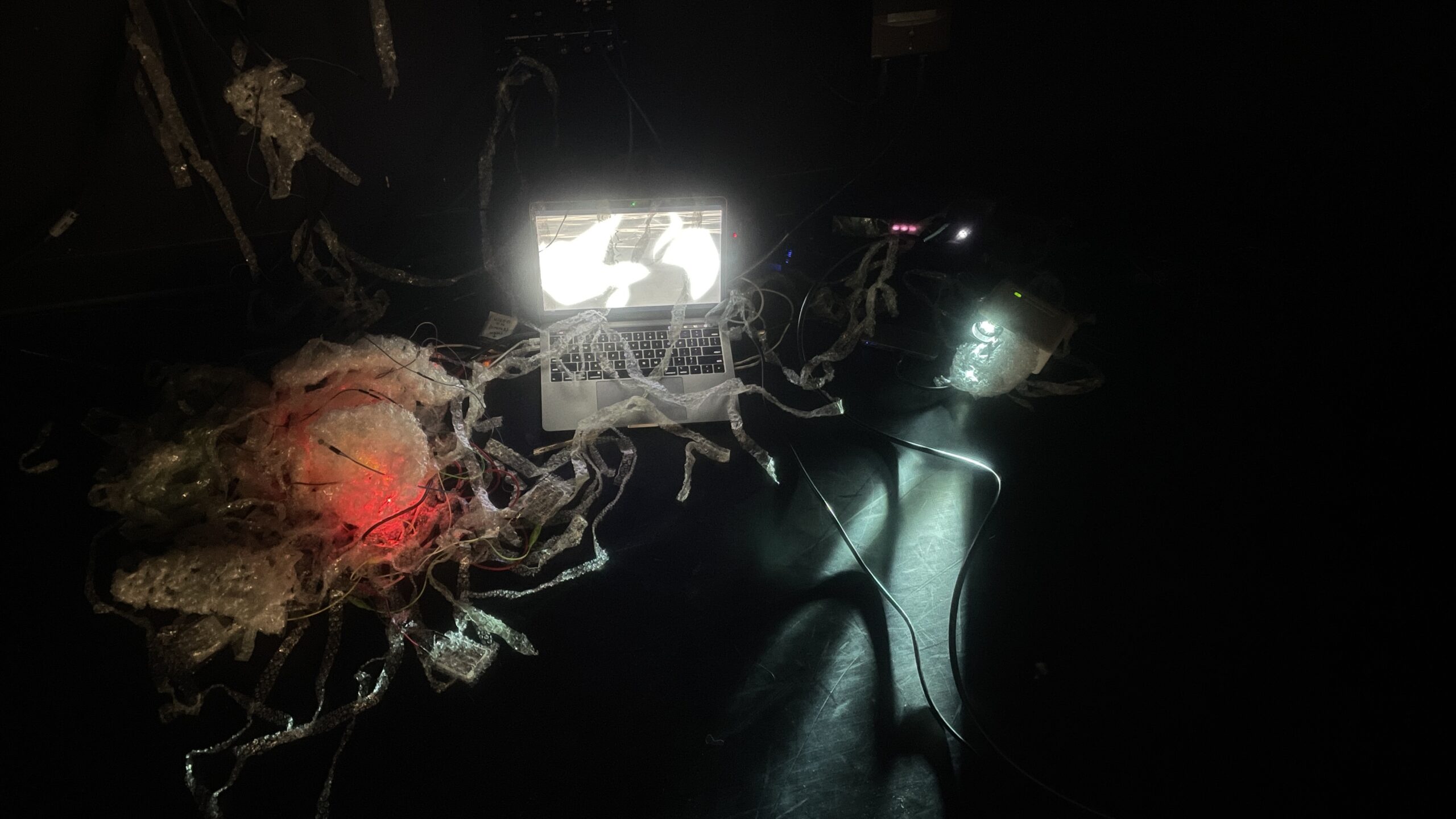

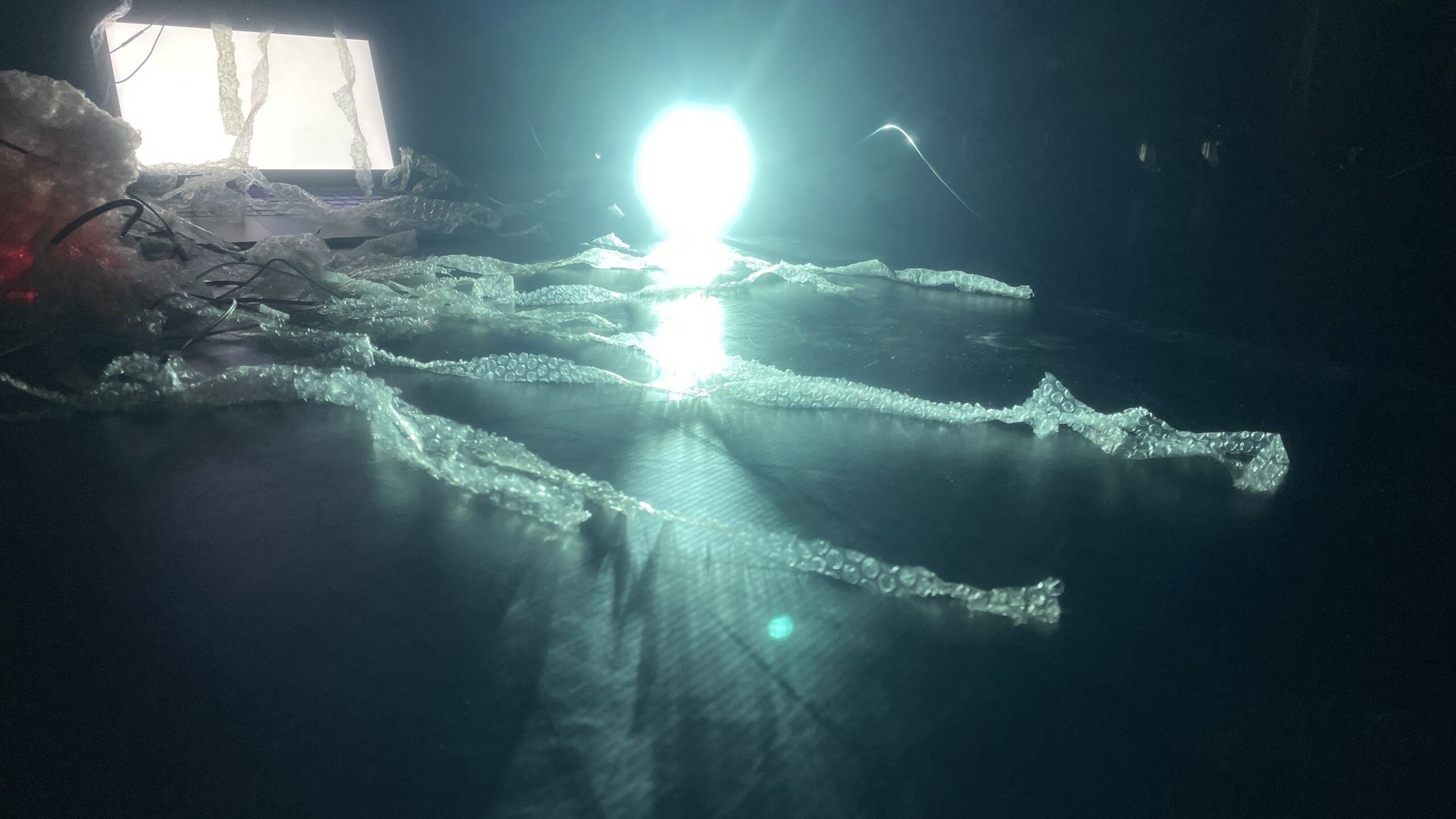

I wrapped and crocheted more bubble-wrap creatures into the space, tangling them through the wires, the wall, the charger, whatever happened to be in that corner that day. It’s like mycelium growing on whatever environment is there, leaking out of the constructed space.

Feedback from folks and future iterations?

I really appreciate everyone’s engagement with this work and the discussions. Several people touched on the feeling of “jellyfish”, “little creature”, “fragile”, “desire to touch with care”, “a bit creepy?”. I am interested in all of those visceral responses. At the beginning of cycle one, I was really interested in the modulation of touch, especially at a subtle scale, which I then found hard to incite with a particular technological mechanism; so it is delightful to hear that the way the materials are composed actually evokes the kind of touch I was looking for. I am also interested in what Alex mentioned about it being like a “visual ASMR”, which I am going to look into further: how to make the visual/audio tactile is something that really intrigues me. Also, as I mentioned earlier, an idea I am working with in my MFA research is “feral fringe”, which is more of a sensation-imagery that comes to me, and making works around this idea is actually helping me get closer to what “feral fringe” refers to for me. I noticed that a lot of the choices I made in this work were very intuitive (more “feel so” than “think so”), e.g., the corner, the position of the curtain, the layered imagery, the tilted projector. Hearing people point those out helps me delve further into: what is a palpable sense of “feral fringe”? ~

cycle two

Posted: May 1, 2024 Filed under: Final Project | Tags: Cycle 2

I started cycle 2 by exploring the 3D rope actor. I was very curious about it but didn’t get to delve into it in cycle 1, so I caught up on that here. I began with this tutorial, which was really helpful.

The imagery of wildly dangling strings is really intriguing, echoing the video imagery I had in cycle 1. To make it more engaging for participants, I came up with using the Eyes++ actor to track the motion of multiple participants and let that affect the location of the strings.
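Eyes++ does the blob tracking inside Isadora, so no code is needed for this piece. Purely to illustrate the idea of motion moving the strings, here is a minimal sketch of frame differencing plus a centroid: pixels whose brightness changed by more than a threshold between two grayscale frames count as motion, and their average position, normalized to 0..1, could be scaled to the rope’s x/y location. The function name and threshold are hypothetical, and it merges everyone into a single point; following several participants separately needs multi-blob tracking of the kind Eyes++ provides.

```python
def motion_centroid(prev, curr, threshold=30):
    """Return the normalized (x, y) centroid of changed pixels, or None.

    prev and curr are same-sized 2D lists of grayscale values (0-255).
    A pixel counts as moving when its absolute change exceeds threshold.
    """
    height, width = len(curr), len(curr[0])
    sum_x = sum_y = count = 0
    for y in range(height):
        for x in range(width):
            if abs(curr[y][x] - prev[y][x]) > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no motion: leave the strings where they are
    # Normalize to 0..1 so the result can be scaled to any scene coordinates.
    nx = sum_x / count / max(width - 1, 1)
    ny = sum_y / count / max(height - 1, 1)
    return nx, ny
```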

Along with the sensors connected to the Arduino from cycle one, I also made some touch sensors from Makey Makey alligator-clip wires, foil, foam, and slices of bubble wrap to give them a squeezy feeling. I used crocheted bubble wrap to wrap my Arduino and Makey Makey kit inside, with the wires dangling out along thin slices of bubble wrap like a jellyfish’s tentacles; the intention is to give it the vibe of an amoeba-like creature.

I played with projection mapping and the space setup in the molab during Tuesday’s class, but I need more time to mess around with it.

The observations and feedback from the audience were really helpful and interesting 🙂 I didn’t come up with a satisfying idea for audience instructions, so I decided to let the audience (two at a time) freely explore the space. I like how people got down crawling on the ground; I notice that this bodily perspective is interesting for exploring this work. I also love Alex’s feedback that it was interesting to watch the two people’s silhouettes figuring out what was happening, and to hear their murmuring; and I appreciate Alex pointing out the deliberate choice of having the projector set in a corner, hidden and tilted. I am really interested in the idea of reimagining optimal/normal functionality, and instead what I might call “tuning to the glitches” (by which I am not referring to the aesthetic of glitch, but to a condition of unpredictability, instability, and the “feral”).

Thinking along these lines, I relate this to the mode of audiencing in this artist’s work:

https://artscenter.duke.edu/event/amendment-a-social-choreography-by-michael-klien

For the final cycle, I am going to:

– now that I have the sensors and servos working, consider increasing their number, to “scale up” a little bit

– incorporate sound

– fine-tune webcam tracking

– play with the set design and projection mapping in molab

– continue wondering about modes of audience participation

cycle one

Posted: March 28, 2024 Filed under: Final Project

For cycle one, I kept my goal simple: to explore my various resources and figure out the combined possibilities of Arduino (and sensors), Isadora, and Makey Makey.

I learned how to connect Arduino with Isadora and explored touch-related sensors and actuators. I started with capacitive touch because it can be achieved just by connecting wires to the pins and doesn’t require purchasing extra sensors, but it turned out that, because it does not give an analog output, it is hard to send its value to Isadora with the Firmata actor. (I did find that it might be possible to use serial communication instead of the Firmata actor, but that seems to require more coding, which I think is too much for now; I may return to it in the future.) So I decided to use a force sensor, a flex sensor, and a servo.
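For a possible return to serial communication, a common pattern is for the Arduino sketch to print one line of comma-separated readings per loop (with `Serial.print` and `Serial.println`) and for the receiving side to split each line. The Python below only illustrates that parsing step; the `touch,force,flex` line format and the function name are assumptions made up for this example. It drops incomplete or garbled lines, which often appear when a port is opened mid-stream.

```python
def parse_sensor_line(line, fields=("touch", "force", "flex")):
    """Parse one 'touch,force,flex' line (hypothetical format) into a dict of ints.

    Returns None if the line has the wrong number of values or a
    non-numeric value, e.g. a half-received first line.
    """
    parts = line.strip().split(",")
    if len(parts) != len(fields):
        return None
    try:
        values = [int(p) for p in parts]
    except ValueError:
        return None
    return dict(zip(fields, values))


for raw in ["512,300,780\n", "12,30", "abc,1,2\r\n"]:
    print(repr(raw), "->", parse_sensor_line(raw))
```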

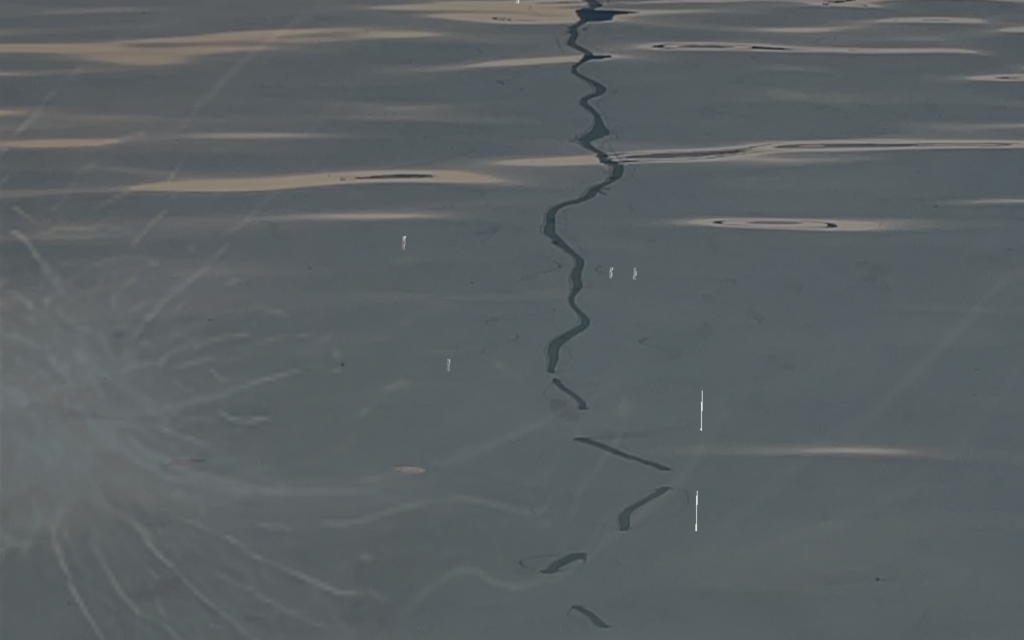

I played with layering some videos together (some of the footage I collected), all of which share something in common (an aesthetic I am looking for): the meandering/stochastic motion of feral fringe. I tested out some parameters that allow subtle changes to the visuals. Then I tried sending the values from my sensors to drive those subtle changes.

Some tutorials that I found helpful:

(Thanks to Takahiro for sharing this one about Firmata in his blog post!)
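One way to keep sensor-driven changes subtle is to rescale the raw reading into a narrow parameter range and smooth it, so the visual drifts rather than jumps. This sketch assumes a 10-bit reading (0 to 1023, the range of `analogRead` on an Arduino Uno); the class name, output range, and smoothing factor are illustrative assumptions, not values from the patch.

```python
class SubtleMapper:
    """Map a raw sensor reading to a small output range, with smoothing.

    Exponential smoothing (alpha in (0, 1]) damps jitter from force/flex
    sensors. All ranges here are example values only.
    """

    def __init__(self, in_min=0, in_max=1023, out_min=0.0, out_max=10.0, alpha=0.2):
        self.in_min, self.in_max = in_min, in_max
        self.out_min, self.out_max = out_min, out_max
        self.alpha = alpha
        self.value = None  # last smoothed output

    def update(self, raw):
        # Clamp to the input range, then rescale linearly into the output range.
        raw = min(max(raw, self.in_min), self.in_max)
        t = (raw - self.in_min) / (self.in_max - self.in_min)
        target = self.out_min + t * (self.out_max - self.out_min)
        # Move only a fraction (alpha) of the way toward the new target.
        if self.value is None:
            self.value = target
        else:
            self.value += self.alpha * (target - self.value)
        return self.value
```

A smaller `alpha` makes the response slower and softer, which may suit the “subtle change” intention better than raw readings.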

https://www.instructables.com/Arduino-Installing-Standard-Firmata/

https://www.instructables.com/How-To-Use-Touch-Sensors-With-Arduino/

https://docs.arduino.cc/learn/electronics/servo-motors/

Force sensor: https://learn.sparkfun.com/tutorials/force-sensitive-resistor-hookup-guide/all

Flex sensor: https://learn.sparkfun.com/tutorials/flex-sensor-hookup-guide/all

I was also thinking about a score for the audience that supports the intention of [shared agency, haptic experience (with the wires as well), subtle sensations]. I really love the sensation of the wires dangling and twitching like tentacles ~ I would love for people to engage with it:

In pairs, one person closes their eyes and the other guides them through the space. While roaming around, the person with closed eyes explores the space through touch, which then affects the visuals/sounds/sensations in the space.

This comes from an activity we did in Mexico, and I also found another artist who did something similar. I find this mode of audiencing quite effective: with eyes closed, people don’t select and manipulate objects by judging their appearance or function, which lets a new “worlding” happen. And with eyes closed, there is an extra layer of care in our touch and pace. This also resonates with what I studied in dance artist Nita Little’s theory of “chunky attention” versus “thin-sliced attention”. With eyes closed, we don’t recognize a thing as a chunky whole and assume we already know it; instead we are constantly researching every bit of it as our touch moves through it. With the pair format, we are also constantly framing the “scene” for each other.

Envisioning space use for the final cycle:

– in molab

– a projector (I am thinking of using my own tiny projector because it’s light and easy to move; I probably want the audience to touch/move it as well)

– space-wise, I am thinking of the area where the curtain track goes out (see the sketch below, the light green area), but I am also flexible. In the next cycles, I need to test how to hook up my wires and how long the power cables need to be, in order to figure out a realistic spatial design…

Cycle 3: The Sound Station

Posted: December 11, 2023 Filed under: Arvcuken Noquisi, Final Project, Isadora | Tags: Au23, Cycle 3

Hello again. My work culminates in cycle 3 as The Sound Station:

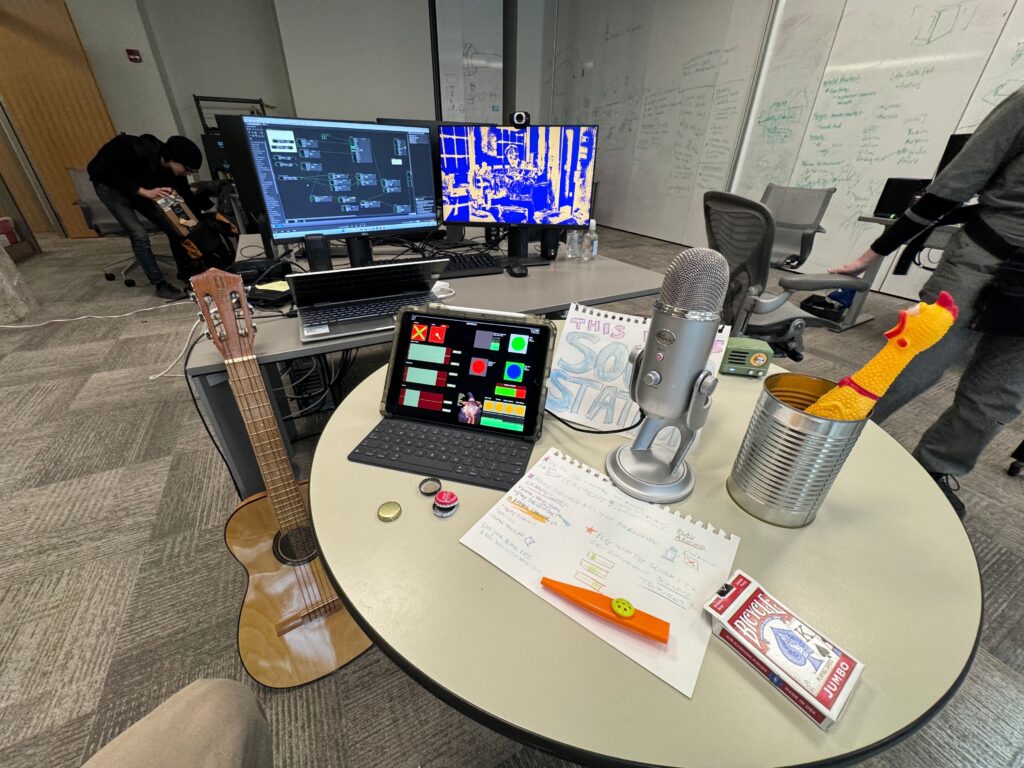

The MaxMSP granular synthesis patch runs on my laptop, while the Isadora video response runs on the ACCAD desktop – the MaxMSP patch sends OSC over to Isadora via Alex’s router (it took some finagling to get around the ACCAD desktop’s firewall, with some help from IT folks).
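For context on what travels over the network here: an OSC message is an address string and a type-tag string, each null-terminated and padded to a multiple of 4 bytes, followed by the arguments (32-bit floats are big-endian). In Max, the `udpsend` object does this encoding for you. The Python sketch below encodes float-only messages following the OSC 1.0 layout and sends one over UDP; the function names are hypothetical, and the address must match whatever the Isadora side is listening for.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", f) for f in floats)  # big-endian float32
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_osc(host: str, port: int, address: str, *floats: float) -> None:
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *floats), (host, port))
```

For example, `send_osc("192.168.1.20", 1234, "/isadora/1", 0.5)` would send one float; the IP address, port, and OSC address there are placeholders to replace with the values from your own router and Isadora OSC settings.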

I used the Mira app on my iPad to create an interface for interacting with the MaxMSP patch. This gave me the chance to make the digital aspect of my work seem more inviting and to encourage more experimentation. I faced a bit of a challenge, though, because some important MaxMSP objects do not actually appear in the Mira app on the iPad. I spent a lot of time rearranging and rewording parts of the Mira interface to avoid confusing the user. Additionally, I wrote out a little guide page to set on the table, in case people needed more information to understand the interface and what they were “allowed” to do with it.

Video 1:

The Isadora video is responsive to both the microphone input and the granular synthesis output. The microphone input alters the colors of the stylized webcam feed to parallel the loudness of the sound, going from red to green to blue with especially loud sounds. This helps the audience mentally connect the video feed to the sounds they are making. The granular synthesis output appears as the floating line in the middle of the screen: it elongates into a circle/oval with the loudness of the granular synthesis output, creating a dancing inversion of the webcam colors. I also added a little slider to the iPad interface to change the color of the non-mic-responsive half of the video, to draw audience focus toward the computer screen so that they would recognize the relationship between the screen and the sounds they were making.

The video aspect of this project does personally feel a little arbitrary; I would definitely focus more on it in a potential cycle 4. I would need to make the video feed larger (on a bigger screen) and more responsive for it to have any real impact on the audience. I feel like the audience focuses so much more on the instruments, microphone, and iPad interface that the video feed doesn’t feel necessary, but I wanted to keep it as part of my project to illustrate the capacity MaxMSP and Isadora have to work together on separate devices.
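The mapping described above (quiet stays red, louder passes through green, loudest reaches blue) can be expressed as a loudness measure plus a two-segment color blend. This is a minimal sketch, not the actual Isadora patch: it assumes samples in -1.0..1.0 and a 0..1 loudness scale, uses RMS as the loudness measure, and the linear blend and function names are assumptions.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of samples in the range -1.0..1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudness_to_rgb(level):
    """Map a 0..1 loudness to an (r, g, b) color running red -> green -> blue."""
    t = min(max(level, 0.0), 1.0)
    if t < 0.5:
        return (1.0 - 2.0 * t, 2.0 * t, 0.0)  # red fading into green
    return (0.0, 2.0 - 2.0 * t, 2.0 * t - 1.0)  # green fading into blue
```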

Video 2:

Overall, I wanted my project to incite playfulness and experimentation in its audience. I brought my flat guitar (a “skinned” guitar), a kazoo, a can full of bottle caps, and a deck of cards, and miraculously found a rubber chicken in the classroom to add to the array of instruments I offered at The Sound Station. The curiosity and novelty of the objects serve the playfulness of the space.

Before our group critique, we had one visitor go around for what were essentially one-on-one project presentations. I took a hands-off approach with this individual, partially because I didn’t want to be watching over their shoulder telling them how to use my project correctly. While they found some entertainment in engaging with my work, I felt like they were missing essential context that would have enabled more interaction with the granular synthesis and the instruments. In stark contrast, I tried to be very active in presenting my project to the larger group. I led them to The Sound Station, showed them how to use the flat guitar, and joined in making sounds and moving the iPad controls with the whole group. This was a fascinating exploration of how group dynamics and human presence within a media system can enable greater activity. I served as an example for the audience to mirror: my actions and presence served as permission for everyone else to become more involved with the project. This definitely made me think more about what direction I would take this project in future cycles, depending on whether it is for group use or personal use (since I plan on using the MaxMSP patch for a solo musical performance). I wonder how I would have started this project differently if I had thought of it not as a personal tool but as something directly intended for group/cooperative play. I probably would have spent much more time on the user interface and removed the video feed entirely!

Cycle 2: MaxMSP Granular Synthesis + Isadora

Posted: November 26, 2023 Filed under: Arvcuken Noquisi, Final Project

For Cycle 2 I focused on the MaxMSP portion of my project: I made a granular synthesis patch, which cuts an audio sample into small grains that are then altered and distorted.
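To make “cuts an audio sample into small grains” concrete, here is a minimal granular synthesis sketch in Python rather than Max: it repeatedly copies short windowed grains from random positions in a source buffer and overlap-adds them into the output. The grain length, hop size, Hann window, and random reversal (standing in for “altered and distorted”) are illustrative assumptions, not the settings of the patch in the clips.

```python
import math
import random


def hann(n):
    """Hann window of length n, used to fade each grain in and out."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, grain_len=441, hop=220, out_len=None, seed=0):
    """Overlap-add windowed grains taken from random positions in source.

    source is a list of float samples; grain_len and hop are in samples
    (441 samples is 10 ms at 44.1 kHz). Each grain is reversed with
    probability 0.5 as one simple example of altering a grain.
    """
    rng = random.Random(seed)
    out_len = out_len or len(source)
    out = [0.0] * out_len
    if len(source) < grain_len:
        return out  # not enough material for a single grain
    window = hann(grain_len)
    for start in range(0, out_len - grain_len + 1, hop):
        pos = rng.randrange(0, len(source) - grain_len + 1)
        grain = source[pos:pos + grain_len]
        if rng.random() < 0.5:
            grain = grain[::-1]
        for i, (s, w) in enumerate(zip(grain, window)):
            out[start + i] += s * w
    return out
```

Shorter grains and random positions give the smeared, cloud-like texture; longer grains keep more of the original sample recognizable.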

2 demonstration clips, using different samples:

I had some setbacks working on this patch. I had to start over from scratch a week before Cycle 2 was due, because my patch suddenly stopped sending audio. Recreating the patch at least helped me better understand the MaxMSP objects I was using and what role they played in creating the granular synthesis.

Once I had the MaxMSP patch built, I added some test sends to see whether the patch would cooperate with Isadora. For now I’m just sending the granular synthesis amplitude to an altered version of the Isadora patch I used in Cycle 1. This was a quick, efficient way to determine how the MaxMSP outputs would work in Isadora.

I still have quite a few things to work on for Cycle 3:

- Router setup. I need to test the router network between my laptop (MaxMSP) and one of the ACCAD computers (Isadora).

- Isadora patch. I plan on re-working the Isadora patch, so that it’s much more responsive to the audio data.

- Interactivity. I’ll need to pilfer the MOLA closet for a good microphone and some sound-making objects. I want Cycle 3 to essentially be a sound-making station for folks to play with. I will have to make sure the station is inviting enough and has enough information/instructions that individuals will actually interact with it.

- Sample recording. Alongside interactivity, I will need to adjust my MaxMSP patch so that it plays back recorded samples instead of pulled files. According to Marc Ainger this shouldn’t be a challenge at all, but I’ll need to make sure I don’t miss anything when altering my patch (don’t want to break anything!).

Final Mission: three travelers

Posted: December 11, 2019 Filed under: Emily Craver, Final Project | Tags: choose your own adventure, dance, Isadora

photo by Alex Oliszewski

During Cycle 3 of Choose Your Own Adventure: Live Performance Edition, I explored how to allow for more timelines. I realized that the audience’s moments of failure provide excitement and raise the stakes of the performance. How do I make a system that encourages and gives feedback to the volunteers while also challenging them?

I feel most creative and most myself when creating pieces that play with stakes. I love dance and theatre that encourage heightened reactions to ridiculous situations. The roles of the three travelers started to sink in for me the more we rehearsed. They needed to be both helpless adventurers somewhere distant in time and space and all-knowing, somewhat questionably trustworthy, narrator-like Greek chorus assistants. Tara, Yildiz, and I added cheering on the volunteers to blur the lines of where and who we are.

photo by Alex Oliszewski

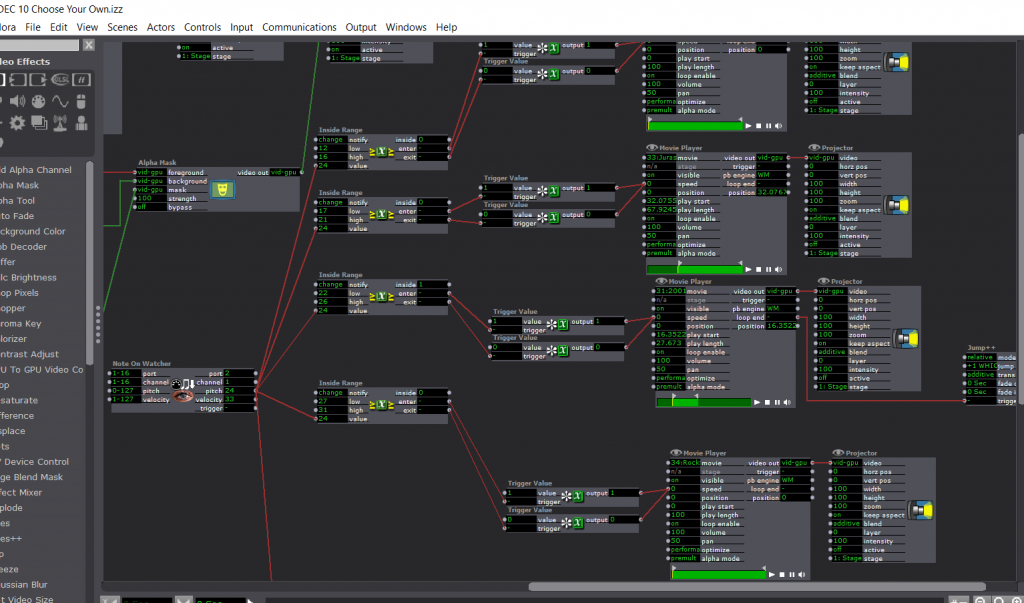

The new system for Choose Your Own Adventure included: a MIDI keyboard as a controller, a live webcam for a live feed of the adventurers and photo capture of successes, a Focusrite audio hookup for sound input and sound level watching, GLSL shaders of all colors and shapes, and Send MIDI Show Control to trigger light cues.

The new system provided more direct signs of sound level watching and cues to the volunteers. The voice-overs were louder and aided by flashing text reiterating what the audience should be doing. The three travelers became side coaches for the volunteers as well as self-aware performers trying to gain trust. I found myself fully comfortable with the way the volunteers were being taken care of, and started to question and wonder about the audience who was observing all of this. How can an audience be let in while others are physically engaging with the material? Perhaps close camera work on the decisions being made at the keyboard? Earlier suggestions (shout-out to Alex Christmas for this one) included an applause-o-meter to allow the non-volunteers to have a say from their seats. A “Who Wants to Be a Millionaire” style of audience interaction comes to mind, with options for volunteers to choose how to interact and have the audience come to their aid. What does giving the audience a voice look like? How can it be respectful, careful, and challenging all at once?
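Isadora’s MIDI Show Control sending handles the byte layout for you, but for reference, an MSC GO message is a SysEx string: `F0 7F <device ID> 02 <command format> <command> <data> F7`, where `01` as the command format means Lighting (General), `01` as the command means GO, and the data is the cue number in ASCII, optionally followed by `00` and a cue list number. The sketch below builds those bytes; the function name is hypothetical, and the device ID and cue numbers are assumptions that depend on how the lighting console is configured.

```python
GO = 0x01                # MSC command: GO
LIGHTING_GENERAL = 0x01  # MSC command format: Lighting (General)
ALL_CALL = 0x7F          # device ID that every MSC receiver responds to


def msc_go(cue, cue_list=None, device_id=ALL_CALL, command_format=LIGHTING_GENERAL):
    """Build a MIDI Show Control GO message as raw SysEx bytes.

    Layout: F0 7F <device_id> 02 <command_format> <command> <data> F7,
    where data is the cue number in ASCII, optionally followed by a
    0x00 delimiter and the cue list number.
    """
    data = cue.encode("ascii")
    if cue_list is not None:
        data += b"\x00" + cue_list.encode("ascii")
    return bytes([0xF0, 0x7F, device_id, 0x02, command_format, GO]) + data + b"\xF7"
```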

Final Project – Werewolf

Posted: December 14, 2018 Filed under: Final Project, Uncategorized

For this final project, many aspects changed over its development. Initially, I started work on a voting game of sorts. I wanted everyone taking part to have an app, written in React Native, loaded onto their mobile device, giving each of them an interface for participating in the game. Through research, I found that what I wanted to create is known as a Crowd Game.

Further reading: http://stalhandske.dk/Crowd_Game_Design.pdf

Through much of my development time, I worked with the concept of a voting game and how to get people to form coalitions. Ultimately, I found it difficult to design something around this concept, because it was hard to evoke strong emotions without serious content or without the experience just revolving around collecting points. Shortly before the final few days of development, I had the idea to change course completely and base the experience on the party game known as Mafia or Werewolf (Rules example: https://www.playwerewolf.co/rules/). This change better reflected my original desire to have a Crowd Game, but with added intimacy and interaction between the players themselves, as opposed to with the technology. If people are together to play a game, it should leverage the fact that the people are together.

Client / Mobile App

– Written in JavaScript (React) using the React Native and Expo frameworks. An excellent choice for development: written in a common web language for Android and iOS, and able to access the system camera, vibration, etc. https://facebook.github.io/react-native/ https://expo.io/

– Each client has a unique ID. The game client would display and scan QR codes so players can select players to kill automatically, from a distance, with consensus. It also randomly assigns roles to all players.

– Expo allowed me to upload code to their site and load it onto any device. A website serving HTML/JS would be easier to use if one did not intend to use all the phone functions.

– This part of development went smoothly and was fairly predictable in terms of time sink. I would recommend it.
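As an illustration of the game logic described above, random role assignment and the “consensus” check for a vote can be sketched as follows. This is a hypothetical Python sketch, not the project’s React Native or Java code: the role names, the strict-majority rule, and the function names are assumptions, and the voter/target IDs stand in for the IDs read from scanned QR codes.

```python
import random
from collections import Counter


def assign_roles(player_ids, n_werewolves=2, seed=None):
    """Randomly assign 'werewolf' or 'villager' to each player ID."""
    if n_werewolves >= len(player_ids):
        raise ValueError("need more players than werewolves")
    roles = ["werewolf"] * n_werewolves + ["villager"] * (len(player_ids) - n_werewolves)
    random.Random(seed).shuffle(roles)
    return dict(zip(player_ids, roles))


def consensus_target(votes, living_players):
    """Return the player a strict majority of living players voted for, else None.

    votes maps voter ID -> target ID. Votes cast by, or aimed at,
    players who are no longer alive are ignored.
    """
    valid = [t for v, t in votes.items() if v in living_players and t in living_players]
    if not valid:
        return None
    target, count = Counter(valid).most_common(1)[0]
    return target if count > len(living_players) / 2 else None
```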

Isadora

– The Isadora patch ran in the Motion Lab. Easy to set up after learning the software in class.

– Night/Day cycle for the game with 3 projectors.

LAN Wi-Fi Router

– Ran from a laptop connected to the server over Ethernet. Ideal for setups that need high speed or heavy traffic.

Game Server

– Written in Java, by far the most taxing part of the project.

– Contains game logic, handling rounds, players, etc.

– Connects to clients via WebSockets with the Jetty library. I could get individual connections up and running, but I could not fix the one-to-many outgoing server messages, and that became a roadblock to using the system during the performance.

– This had a very high learning curve for me, and I would recommend that someone use a ready system like Colyseus for short-term projects like this final. http://colyseus.io/ https://github.com/gamestdio/colyseus
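The one-to-many problem above is usually solved with a broadcast hub: the server keeps a registry of connected clients and gives each its own outgoing queue, so a single game event can be pushed to every phone without one slow connection blocking the rest. The asyncio sketch below shows only that fan-out pattern; the class and method names are hypothetical, it does not use Jetty or Colyseus, and the per-connection task that would drain each queue into a WebSocket is left out.

```python
import asyncio


class Broadcaster:
    """Fan one outgoing message out to every registered client.

    Each client gets its own asyncio.Queue; a separate per-connection task
    (not shown) would read from that queue and write to the client's socket.
    """

    def __init__(self):
        self.clients = {}  # client_id -> asyncio.Queue

    def register(self, client_id):
        queue = asyncio.Queue()
        self.clients[client_id] = queue
        return queue

    def unregister(self, client_id):
        self.clients.pop(client_id, None)

    def broadcast(self, message, exclude=None):
        # Iterate over a snapshot so clients can unregister during delivery.
        for client_id, queue in list(self.clients.items()):
            if client_id != exclude:
                queue.put_nowait(message)  # unbounded queue: never blocks
```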

During the final performance, I only used the game rules and the Isadora system setup in the Motion Lab, but I feel as though people really enjoyed playing. It was certainly more effective than the first game iteration I had, even with the technology fully working. My greatest takeaway, and the advice I would give to anyone starting a project like this, is to just get your hands dirty. The sooner you fully immerse yourself in the process, the sooner you can begin to see all it could be.

Final Schematic

Posted: November 6, 2018 Filed under: Final Project

My schematic for the final project is in the link.

Final Proposed Planning

Posted: November 6, 2018 Filed under: Final Project

Proposed Planning

11/8 – Work in Drake

11/13 – Work in ACCAD

11/15 – Work in Motion Lab 4-5:20pm

11/20 – Projector Hang/Focus – Critique

11/27 – Determined by outcome of 11/20 – Work in Motion Lab

11/29 – Split Motion Lab time

12/4 – Last Minute Problem Solving

12/6 or 12/7 – Perform Final (TBD)