‘You make me feel like…’ Taylor – Cycle 3, Claire Broke it!

Posted: December 14, 2016 Filed under: Final Project, Isadora, Taylor

Towards Interactive Installation. The name of my patch is ‘You make me feel like…’

Okay, I have determined how/why Claire broke it and what I could have done to fix the problem. And I am kidding when I say she broke it. I think she and Peter had a lot of fun, which is the point!

So, all in all, I think everyone who participated with my system had a good time with another person, moved around a bit, then watched themselves, and most got even more excited by that! In these ways I would say the performances of my patch were very successful, but I still see areas of the system that I could fine-tune to make it more robust.

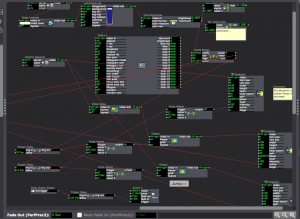

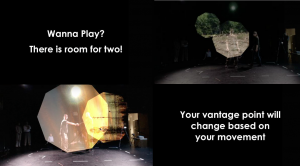

My system: two nine-sided shapes tracked two participants through space, revealing the images/videos that are alpha-masked onto the shapes as the participants move. It gives the appearance that you are peering through a frame or scope, and you can explore your vantage point through your movement. Once participants are moving around, they are instructed to connect to see more of the images and to come closer to see a new perspective. This last cue uses a brightness trigger to switch to projectors showing the live feed and video-delayed footage, which plays back the performers’ movement choices and lets them watch themselves watching, or watch themselves dancing with their previous selves.

The day before we presented, with Alex’s guidance, I decided to switch to the Kinect in the grid and do my tracking from the top down, for an interaction that was more 1:1. Unfortunately, this Kinect is not hung center/center of the space, but I set everything else center/center of the space and used Luminance Keying and Crop to match the space to what the Kinect saw. However, I based the switch from shapes-following-you to live feed on brightness. When a participant was tracked at the side farthest from the Kinect, their image was darker (a video inverter was used), and the brightness window that triggered the switch was already very narrow, so the switch could miss them there. To fix this, I think shifting the position of the Kinect or changing how the space was laid out could have helped. Adding a third participant could also have made it even more fun, widened the brightness window, and increased the trigger range, so that the far side would no longer be a problem.
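The brightness trigger described above boils down to an inside-range test on how bright the tracked image is. The Python sketch below is a minimal, hypothetical illustration: it assumes frames are 8-bit grayscale lists of rows, that the trigger reads the mean brightness of the whole frame, and that the window bounds `LOW`/`HIGH` and the pixel values are invented (they are not numbers from the patch). It shows how the same body can drop out of a narrow window when inversion makes it read darker at the far side of the space.

```python
# Hypothetical sketch of a brightness-window trigger (not Isadora code).
# Frames are 8-bit grayscale images stored as lists of rows (0 = black, 255 = white).

LOW, HIGH = 40.0, 60.0  # invented trigger window on mean frame brightness


def mean_brightness(frame):
    """Average pixel value over the whole frame."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def inside_range(value, low=LOW, high=HIGH):
    """True when value falls inside the closed window [low, high]."""
    return low <= value <= high


def make_frame(width, height, blob_pixels, blob_value):
    """Black frame with `blob_pixels` pixels set to `blob_value` (a tracked body)."""
    flat = [blob_value if i < blob_pixels else 0 for i in range(width * height)]
    return [flat[r * width:(r + 1) * width] for r in range(height)]


# Same body size, but the far-side body reads darker after inversion.
near = make_frame(10, 10, blob_pixels=25, blob_value=200)  # mean 50.0
far = make_frame(10, 10, blob_pixels=25, blob_value=140)   # mean 35.0

print(inside_range(mean_brightness(near)))  # True: switch to live feed
print(inside_range(mean_brightness(far)))   # False: switch never fires
```

In this toy version, the two levers are the ones the paragraph points to: change what the camera sees (placement, layout) so the far-side reading rises, or loosen `LOW`/`HIGH` so the window is less narrow.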

I wonder if I should have left the text running for longer bouts of time, but coming in quicker succession? I kept cutting the time back, thinking people would read it straight away, but it took people longer than that to take everything in. I think this is because they are put on display a bit as performers while they are trying to read, decipher, and remember the instructions.

The bout that worked best, going in order all the way through as planned, was the third go-round. I’ll post a video of this one on my blog and link to it once I can get things loaded. This tells me my system needs to accommodate more movement, because there was a wide range of movement between the performers (maybe with more space, or more clearly defined space). It also needs to account for the time taken exploring, which I mentioned above.

Cycle 2_Taylor

Posted: December 3, 2016 Filed under: Assignments, Isadora, Taylor

This patch was created for use in my rehearsal, to give the dancers a look at themselves while experimenting with improvisation to create movement. During this phase of the process we were exploring habits in relation to limitations and anxiety. I asked the dancers to think about how their anxieties manifest physically, calling up feelings of anxiety and using the patch to view themselves in real time, in delayed time, and in various freeze frames. From this exploration, the dancers were asked to create solos.

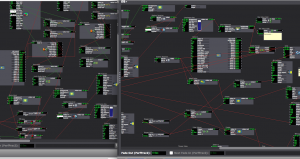

For cycle two, I worked out the bugs from the first cycle.

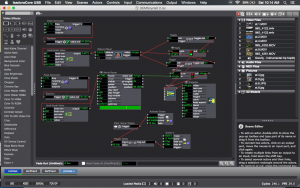

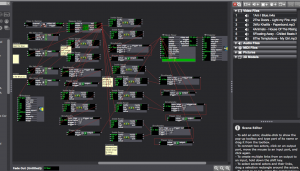

The main difference in this cycle was working with the Kinect, using its depth camera to track the space and shift from the scenic footage to the live video feed, instead of using the live feed and tracking brightness levels.

For the first scene, I also connected toggles and gates to a multimix to switch between pictures and video, using pulse generators to randomize the images being played. For rehearsal purposes, these images are content provided from the dancers’ lives, a technological representation of their memories. I am glad I was able to fix this glitch with the images and videos; before, they were not alternating. I was also able to use this in my previous patch, along with the Kinect depth camera, to switch the images based on movement instead of pulse generators.
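The toggle/gate/pulse arrangement above can be summarized as: each pulse flips a toggle, the toggle decides which player’s gate is open, and the open player jumps to a random item. Below is a minimal Python sketch of that logic under stated assumptions: the `AlternatingPlayers` class, the placeholder file names, and the use of Python’s `random` module are hypothetical stand-ins for the Isadora actors, not the patch itself.

```python
import random

# Hypothetical sketch (not Isadora actors): a pulse flips a toggle, the toggle
# opens one of two gates, and whichever player is open jumps to a random item.
# The file names are placeholders.
PICTURES = ["picture_1.jpg", "picture_2.jpg", "picture_3.jpg"]
MOVIES = ["movie_1.mov", "movie_2.mov"]


class AlternatingPlayers:
    def __init__(self, rng):
        self.rng = rng
        self.toggle = False  # False: picture gate open; True: movie gate open

    def on_pulse(self):
        """Handle one pulse; return (player, item) for the player now showing."""
        self.toggle = not self.toggle  # flipping every pulse makes them alternate
        if self.toggle:
            return ("movie", self.rng.choice(MOVIES))
        return ("picture", self.rng.choice(PICTURES))


players = AlternatingPlayers(random.Random(0))
for _ in range(4):
    print(players.on_pulse())  # movie, picture, movie, picture (items vary)
```

In the Kinect version described above, a movement trigger rather than a pulse would be what calls `on_pulse`; the alternation logic stays the same.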

Cycle 2 responses:

The participants thought that not knowing the system was candidly recording them was cool. This was nice to hear, because I had changed the amount of video delay so that the past self would come as more of a surprise. I felt that if participants were unaware of the delayed playback, their interaction with the images/videos at the beginning would be more natural and less conscious of being watched (even though our class is also watching the interaction).

Participants (classmates) also thought that more surprises would be interesting, like adding filters such as dots or exploding to the live video feed, but I don’t know how this would fit into my original premise for creating the patch.

Another comment I wrote down, though I am still a little unsure what they meant, dealt with the placement of performers and asked whether multiple screens might be effective. I did use the patch projecting on multiple screens in my rehearsal. It was interesting how the performers were very concerned with the stages being produced, letting that drive their movement, but were also able to stay connected with the group in the real space because they could see the stages from multiple angles. This allowed them to be present in both the virtual and the real space during their performance.

I was also excited about the movement that was generated in participants. I think I am becoming more and more interested in getting people to move in ways they normally would not, and I think that with more development this system could help achieve that.

link to cycle 2 and rehearsal patches: https://osu.box.com/s/5qv9tixqv3pcuma67u2w95jr115k5p0o (also has unedited rehearsal footage from showing)

Cycle 1… more like cycle crash (Taylor)

Posted: December 3, 2016 Filed under: Isadora, Taylor, Uncategorized

The struggle.

So, I was disappointed that I couldn’t get the full version (with the projector projecting, the camera, and a full-bodied person being ‘captured’) up and running. Just last week I did a special tech run two days before my rehearsal using two cameras (an HDMI-connected camera and a webcam). I got everything up, running, and attuned to the correct brightness on Wednesday, and then on Friday it was struggle-bus city for Chewie; then Izzy was saying my files were corrupted and didn’t want to stay open. Hopefully, I can figure out this wireless thing for cycle 2, or maybe start working with a Kinect and a Cam?…

The patch.

This patch was formulated from/in conjunction with PP(2). It starts with a movie player and a picture player switching on and off (alternating) while randomly jumping through videos/images, although I have recently realized that it is only doing one or the other… so I have been working on how the switching back and forth between the two players works (suggestions for easier ways to do this are welcome). When a certain range of brightness (or amount of motion) is detected from the Video In (fed through Difference), the image/video projector switches off and the three other projectors switch on [connected to Video In – Freeze, Video Delay – Freeze, and another Video In (when the other two are frozen)]. After a certain amount of time, the scene jumps to a duplicate scene, ‘resetting’ the patch. To me, these images represent our past and present selves, but they also provide the ability to take a step back, or step outside of yourself, to observe. In the context of my rehearsal, for which I am developing these patches, this serves as another way of researching our tendencies/habits in relation to inscriptions/incorporations on our bodies and the general nature of our performative selves.
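The Video Delay piece of this patch can be pictured as a fixed-length buffer of frames: each new frame goes in, and the frame from a set number of frames ago comes out. The Python sketch below is a minimal, hypothetical illustration of that idea; the `FrameDelay` class and its `freeze` flag are invented for this example, integers stand in for video frames, and it is not how Isadora’s Video Delay actor is implemented.

```python
from collections import deque


class FrameDelay:
    """Hypothetical delay line: outputs the frame pushed `delay` frames earlier."""

    def __init__(self, delay):
        # Keep the newest frame plus `delay` older ones.
        self.buffer = deque(maxlen=delay + 1)
        self.held = None  # frame shown while frozen

    def push(self, frame, freeze=False):
        """Add the newest frame and return what the delayed output should show."""
        self.buffer.append(frame)  # deque drops the oldest frame once full
        if len(self.buffer) < self.buffer.maxlen:
            return None  # not enough history yet
        if freeze:
            if self.held is None:
                self.held = self.buffer[0]  # grab the current delayed frame once
            return self.held
        self.held = None  # unfreezing resumes the delayed stream
        return self.buffer[0]


# Frames are stand-in integers (frame numbers) rather than images.
delay = FrameDelay(delay=3)
print([delay.push(t) for t in range(6)])  # [None, None, None, 0, 1, 2]
```

A longer `delay` means the past self shows up later; while `freeze` is set, the output holds one delayed frame until it is released.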

The first cycle.

Some comments that I received from this first cycle showing were: “I was able to shake hands with my past self”, “I felt like I was painting with my body”, and people were surprised by their past selves. These are all in line with what I was going for; I even adjusted the frame rate of the Video Delay by doubling it right before presenting because I wanted this past/second self to come as more of a surprise. Another comment I received was that the timing of the images/videos was too quick, but as people experimented and the scene regenerated, they gained more familiarity with the images. I am still wondering about this one. I made the images quick on purpose so that the dancers could only ‘grab’ what they could from each image in this flash of time (which is more about spurring a feeling than digesting the image). Also, the images used are all sourced from the performers, so they are familiar and already have certain meanings for them… I don’t quite know how spectators will relate, or how to direct their meaning-making in these instances… (ideas on this are also welcome). I want to set up the systems used in the creation of the work as an installation that spectators can interact with before the performers perform, and I am still stewing on the through line between systems… although I know it’s already there.

Thanks for playing, friends!!!

Also, everyone is invited to view the Performance Practice we are working on. It is on Fridays 9-10 in Mola (this Friday is rescheduled for Mon 11.14, through Dec. 2). Please come play with us… and let me know if you are planning to!

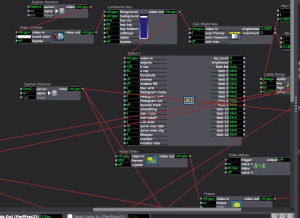

Pressure Project 3 Rubik’s Cube Shuffle

Posted: November 5, 2016 Filed under: Pressure Project 3, Taylor, Uncategorized

For this project, I learned the difficulties of chroma detection. I was trying to create a patch that would play a certain song every time the die (a Rubik’s cube) landed on a certain color. Since this computer vision logic depends highly on lighting, I tried to work in the same space (spiking the table and camera) so that my color ranges would be specific enough to achieve my goal. With Oded’s guidance, I decided to use a Simultaneity actor that would combine the Inside Range checks on two different chroma values from the Color Measure actor, which was connected to a Video In Watcher. I duplicated this setup six times, trying to use the most meaningful RGB color combinations for each side of the Rubik’s cube. The Simultaneity actor was plugged into a Trigger Value that triggered the songs through a Movie Player and Projector. Later in the process, I wanted to use just specific parts of songs, since I figured there would not be a lot of time between dice rolls and I should put the meaning or connection up front. I did not have enough time to figure out multiple video players and toggles, and I did not have time to edit the music outside of Isadora either, so I picked a starting point in each song that worked relatively well to get the point across. However, this was more challenging when wrong colors were triggering songs. I feel like a little panache was lost to the system’s malfunctions, but I think the struggle was mostly with the webcam’s constant refocusing, which forced me to use larger ranges. I am also wondering if a white background might have worked better lighting-wise. (Putting breaks of silence between the songs might also have helped people process the connections between colors and songs.) Still, I think people had a relatively good time.
I had also wanted the video of the die to spin when a song was played, but readjusting the numbers for lighting conditions (done with Min/Max Value Holds to detect the range numbers) was enough to keep me busy. I chose not to write in my notebook in the dark, and I do not process aurally very well, so I don’t remember others’ comments.
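The chroma setup above combines two ideas: calibrating each face’s color range with a min/max hold, and firing a song only when two channel checks are in range at once. The Python sketch below shows both under loudly stated assumptions: the `MinMaxHold`, `calibrate`, and `matching_faces` names, the 0 to 100 scale, the channel pairs, the margins, and every reading are invented for illustration; they are not Isadora’s Color Measure, Inside Range, or Simultaneity actors, and only three of the six faces are shown.

```python
# Hypothetical sketch, not Isadora code: calibrate per-face channel ranges with a
# min/max hold, then identify a cube face only when both of its channel readings
# are inside their ranges at once (the role the Simultaneity actor played).
# All readings, channels, and margins below are invented for illustration.


class MinMaxHold:
    """Remembers the lowest and highest value seen, like a min/max value hold."""

    def __init__(self):
        self.low = None
        self.high = None

    def update(self, value):
        self.low = value if self.low is None else min(self.low, value)
        self.high = value if self.high is None else max(self.high, value)

    def padded_range(self, margin):
        """Held span widened by `margin` on each side."""
        if self.low is None:
            raise ValueError("no readings yet")
        return (self.low - margin, self.high + margin)


def calibrate(samples, channels, margin):
    """Build {channel: (low, high)} from (r, g, b) samples of one face."""
    index = {"r": 0, "g": 1, "b": 2}
    ranges = {}
    for ch in channels:
        hold = MinMaxHold()
        for sample in samples:
            hold.update(sample[index[ch]])
        ranges[ch] = hold.padded_range(margin)
    return ranges


def matching_faces(rgb, face_ranges):
    """Every face whose ranges all contain the reading (both checks must agree)."""
    reading = dict(zip("rgb", rgb))
    return [
        face
        for face, ranges in face_ranges.items()
        if all(lo <= reading[ch] <= hi for ch, (lo, hi) in ranges.items())
    ]


# Invented readings (0-100 scale) taken while each face was held up to the camera.
faces = {
    "blue": calibrate([(20, 30, 80), (25, 35, 75)], channels=("b", "r"), margin=5),
    "yellow": calibrate([(85, 80, 30), (90, 84, 25)], channels=("r", "g"), margin=5),
    "white": calibrate([(88, 86, 85), (92, 90, 91)], channels=("r", "b"), margin=5),
}

print(matching_faces((22, 33, 78), faces))  # ['blue']
print(matching_faces((90, 85, 88), faces))  # ['yellow', 'white']: ranges overlap
```

Because the yellow face is only checked on red and green here, a bright, whitish reading lands inside both the yellow and white ranges. Wider ranges (for example, from a webcam that keeps refocusing) make this kind of overlap more likely, which is one plausible way wrong colors could end up triggering songs.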

Here are the songs (I was trying to go for different genres and good songs):

Blue – Am I Blue? – Billie Holiday

Red – Light My Fire (Live Extended Version) – The Doors

Green – Paperbond – Wiz Khalifa

Orange – House of the Rising Sun – The Animals

White – Floating Away (Chill Beats Mix) – compiled by Fluidify

Yellow – My Girl – The Temptations

Also, Rubik’s Cube chroma detection is not a good idea for use in automating vehicles.

https://osu.box.com/s/5qv9tixqv3pcuma67u2w95jr115k5p0o PresProj3(1)

PP2_Taylor__E.T. Touch/Don’t Touch__little green light…

Posted: October 4, 2016 Filed under: Assignments, Pressure Project 2, Taylor

So… I still don’t think my brightness component is firing correctly, but feel free to try it out by shining your flashlight at the camera to see if it works for you. pp2-taylor-izz

I felt that the Makey Makey was just as limiting as it was full of possibilities… I’m not the biggest fan. Maybe if the cords were longer and the grounding simpler (like maybe I could have just made a bracelet).

So, I believe the first response to my system from the peanut gallery was “What happens if you touch them at the same time?” My answer to this: I don’t know… it will probably explode your computer, but feel free to try.

Other responses: an Alice in Wonderland feel, the grounding is reminiscent of a birthday hat, and voyeuristic (watching the individual interact with the system and seeing their facial reactions from behind).

I wonder what reactions would be like if people interacted with it in singular experiences… like just walking up not really knowing anything. I was thinking about the power of the green light, and whether it would compel people to follow or break the rules (our class is always just looking to break some shit – oh that’s what it does… what if…). All of the E.T. references came about from me just thinking about touch: what’s a cool touch?

And the whole thing was a bastardization of a patch I was working on for my rehearsal… not done yet, but here is the beginning of that: pp2-play4pp-izz

Pressure Project 1 __ Taylor

Posted: September 22, 2016 Filed under: Assignments, Isadora, Pressure Project I, Reading Responses, Taylor | Tags: #frogger:)

Under Pressure, dun dun dun dadah dun dun

Alright… so I got super frustrated fiddling around in Isadora, and my system ended up being built from what I learned from my failures in the first go-round. That was nice, because I was able to plan based on what I couldn’t and didn’t want to do, which led me to make simpler choices. It seemed like everyone was enthusiastically trying to grok how to interact with the system, and it felt like it kept everyone entertained and pretty engaged for more than 3 minutes. Some of the physical responses to my system were getting up on their feet, flailing about, moving left to right and using the depth of the space, clapping, whistling, and waving. Some of the aesthetic responses were that the image reminded them of a microscope or a city. I tried to use slightly random and short intervals of time between scenes and to build off of simple rules and random generators (and slight variations of the like), in an attempt to distract the brain from connecting the pattern. For a time this seemed successful, but after many cycles, once people found out the complexities were more perceived than programmed, enthusiasm waned. I really enjoyed this project and the ideas of scoring and iterations that accompanied it.
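The “slightly random and short intervals of time between scenes” idea can be sketched as drawing each scene’s duration from a small window. This Python example is hypothetical: the scene names and the 4 to 9 second bounds are invented, and `random.Random.uniform` stands in for Isadora’s random generators rather than reproducing them.

```python
import random

# Hypothetical sketch: every scene gets a short duration drawn at random from a
# window, so the cut points do not settle into a predictable rhythm.
# The scene names and the 4 to 9 second window are invented, not the patch's values.
MIN_SECONDS, MAX_SECONDS = 4.0, 9.0


def scene_schedule(scenes, rng, cycles=1):
    """Return (scene, seconds) pairs, stepping through `scenes` `cycles` times."""
    schedule = []
    for _ in range(cycles):
        for scene in scenes:
            schedule.append((scene, rng.uniform(MIN_SECONDS, MAX_SECONDS)))
    return schedule


for scene, seconds in scene_schedule(["dots", "blur", "mirror"], random.Random(7), cycles=2):
    print(f"{scene}: {seconds:.1f} s")
```

Only the durations vary here; the scene order repeats every cycle, which is the kind of underlying pattern an audience can eventually spot.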

Actors I jotted down that I liked in others’ processes: {user input/output / create inside user actor} {counter / certain # of things can trigger} {alpha channel / alpha mask} {chroma keying / color tracking} {motion blur / can create pathways with rate of decay?} {gate / can turn things on/off} {trigger value}

Reading things:

media object- representation

interaction, character, performer

scene, prop, Actor, costume, and mirror

space & time | here, there, or virtual / now or then

location anchored to media (aural possibilities), instrumental relationship, autonomous agent/responsive, merging to define identity (cyborg tech), “the medium not only reflects back, but also refracts what is given” (love this). “The interplay between dramatic function / space / time is the real power”: expansive range of performative possibilities… (pp. 107/8ish, maybe)