Scrying – Cycle 3

Posted: December 15, 2023 Filed under: Uncategorized

For Cycle 3, I made a few expansions/updates!

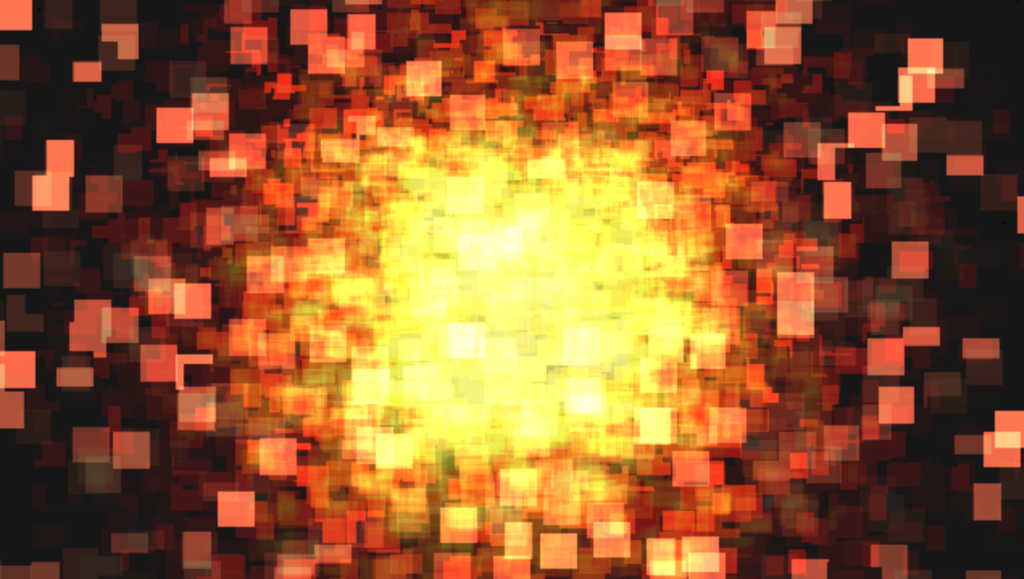

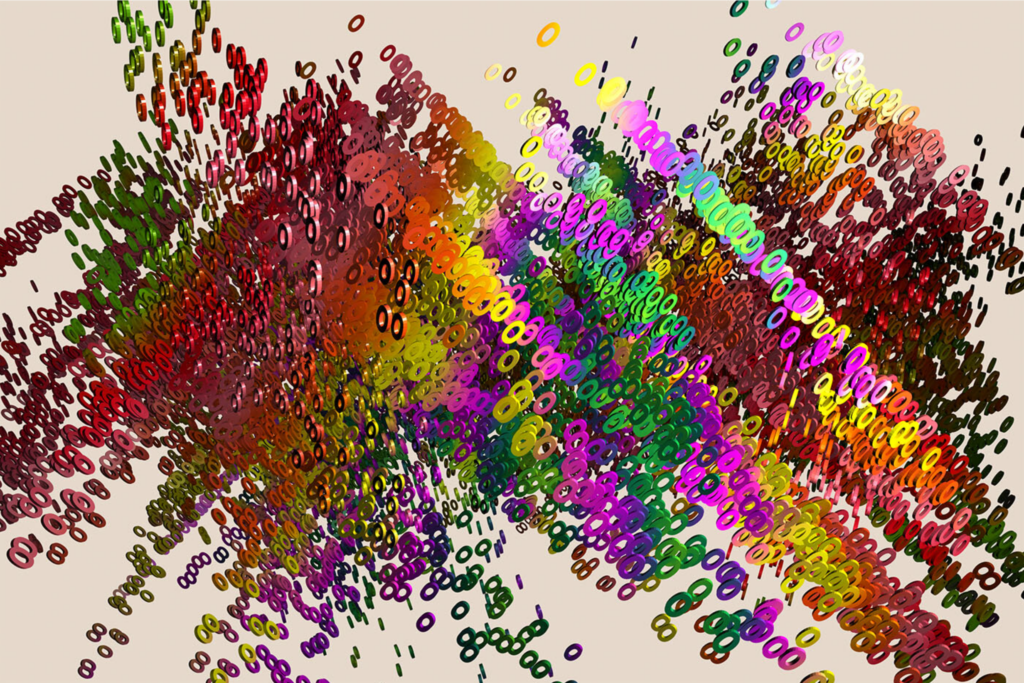

Firstly, I expanded the project from one to two crystal balls. This meant creating more visuals and more music. My goal was to contrast the slow, cool, foggy blue feeling of the first scene. I eventually settled on red as the primary color, and decided to make the visuals sharper and faster moving than those of the first scene:

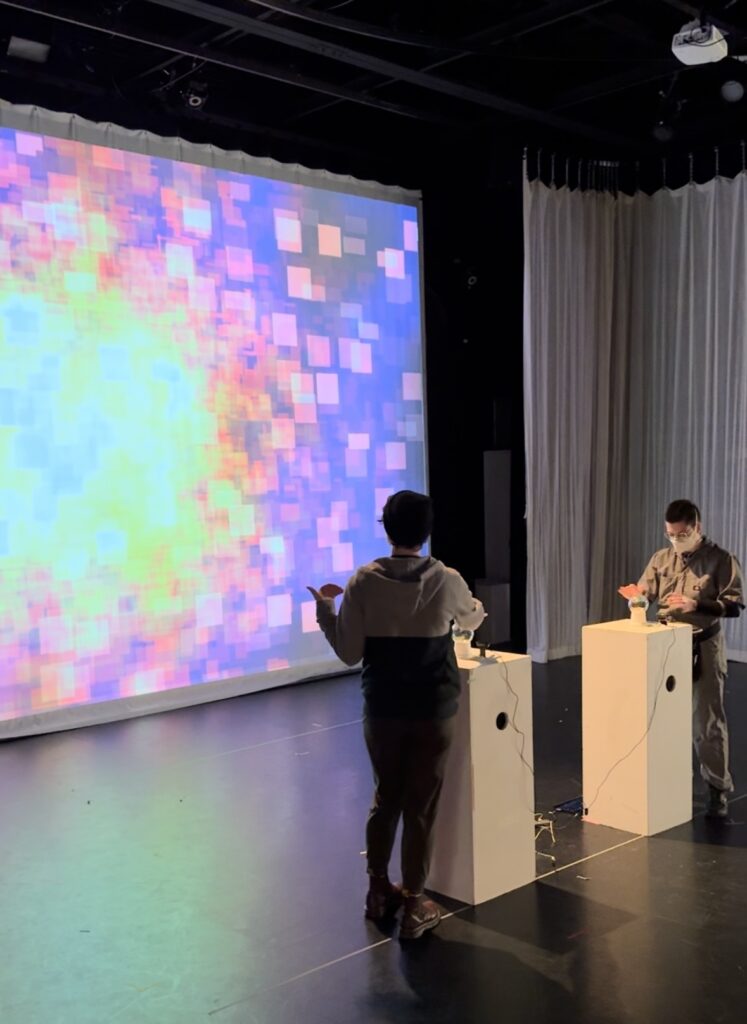

It was important to me to create visuals and music that would be interesting separately, but would also be able to combine well. If both crystal balls are being interacted with at once, both of their audiovisual scenes would play back at once. I wanted this combination to be a meaningful part of the experience.

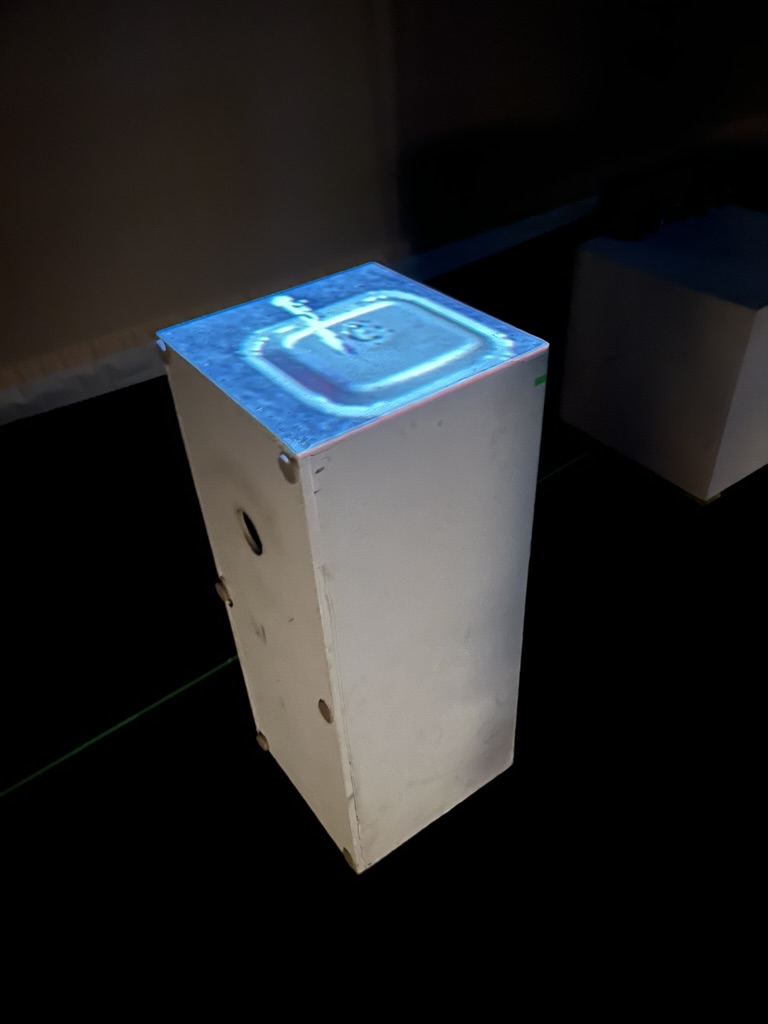

Additionally in this cycle, I added projection mapping onto the tops of the crystal ball stands themselves that triggered when the crystal ball was interacted with. These projections had a similar look and feel to their corresponding larger projections. This led to a whole host of technical problems but created a really cool effect, making it feel like something magical was happening on the stand itself.

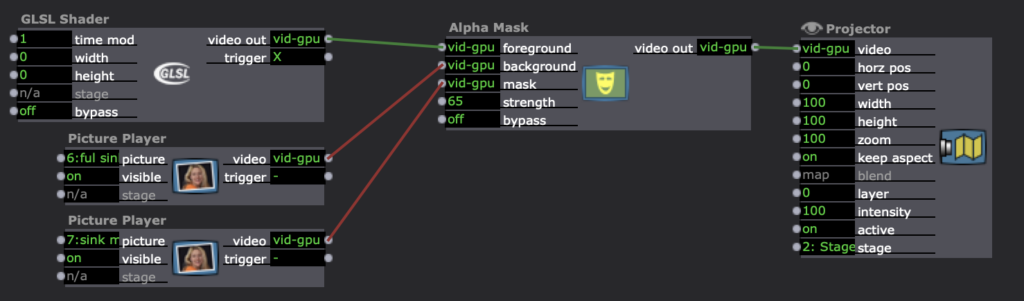

The problems occurred because, of course, the projection mapping consists of light, and the amount of light is what is being detected by the system to decide whether to play the scene or not (duh!……….) So the scene would trigger and would stay triggered because of the light from the projection. I solved this by utilizing masking in Isadora. I created masks to cover up the parts of the projections where the cameras would be.
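As a rough illustration of the masking idea (this is not the actual Isadora patch), the sketch below blacks out a circle of the projected image around the spot where a camera sits, so the projection never shines into the lens. The frame is assumed to be a small grid of 0-255 brightness values, and `mask_projection`, `camera_xy`, and `radius` are hypothetical names.

```python
def mask_projection(frame, camera_xy, radius):
    """Return a copy of the frame with a black disc over the camera position."""
    cx, cy = camera_xy
    masked = []
    for y, row in enumerate(frame):
        masked.append([
            # Pixels inside the disc are forced to black (no projected light).
            0 if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else value
            for x, value in enumerate(row)
        ])
    return masked


# Example: a fully white 5x5 projection with the camera at the center.
white = [[255] * 5 for _ in range(5)]
masked = mask_projection(white, camera_xy=(2, 2), radius=1)
```

With the disc in place, the only light reaching the camera is the ambient light that the trigger logic expects.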

My final addition was subtle, but I think significant. I added a very low drone to the space that played constantly, even when nothing was being triggered. This was included to transition the experiencers into the space and provide an audible contrast between a normal room and the installation space. My feeling was that without some sort of audible cue, the space would feel too “normal” when no sounds or visuals were being triggered. The low drone provided a tapestry on top of which the crystal balls could weave their music.

If I were to proceed forward with this piece, my first goal would be to add more crystal balls! I think the piece could work well and be even more interesting with several of them. They could interact with each other in so many different ways!

I also think I would like to incorporate something more to solidify the emotional themes of the piece. I really enjoy the visuals I created, and they are designed to represent very real and contrasting emotions. I also think it could be interesting to redo the visuals and incorporate something slightly less abstract, like videos of some significant place or event, and processing those in a visually interesting and artistically meaningful way.

Scrying – Cycle 2

Posted: December 15, 2023 Filed under: Uncategorized

For Cycle 2, I moved the project into the Motion Lab!

First, I needed to decide on a few things. How would I present the visuals? How would I present the crystal ball? How did I eventually want different crystal balls to interact with each other?

I ended up projecting the visuals onto the large front screen. The crystal ball I placed on a pedestal near the center of the room.

A challenge that presented itself during this cycle was the lighting conditions in the space. Ideally, the room would be quite dark, but of course as it turns out some amount of light is needed for the camera to detect any change in brightness (duh!….) Also, after being triggered once, the light from the projection could be reflected in the glass and interfere with the brightness detection, keeping it from ever shutting off! To solve these problems, I decided on using a small amount of constant top-down light shining on the crystal ball’s pedestal. The webcam would be positioned such that a clear shadow would be created on it when a person’s hand was near the crystal ball, and this lighting difference proved to be enough to create consistent behavior in spite of the other complicating factors.
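One way to picture why the constant top light helps: with a known lit baseline, a hand's shadow shows up as a clear relative drop in brightness. The sketch below is only an illustration under that assumption. The function name, the `baseline` value, and the 25% `drop` are hypothetical, not settings from the Isadora patch.

```python
def shadow_detected(brightness, baseline, drop=0.25):
    """True when brightness falls at least `drop` (as a fraction) below the lit baseline."""
    return brightness < baseline * (1.0 - drop)


# Example: baseline measured with the top light on and nobody near the ball.
baseline = 0.8
hand_near = shadow_detected(0.5, baseline)   # clear shadow on the webcam
hand_away = shadow_detected(0.7, baseline)   # small flicker, ignored
```

Comparing against a baseline rather than a fixed number means small reflections from the projection are less likely to flip the trigger.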

For Cycle 3, the plan is to add more crystal balls and make more audiovisual scenes for them!

Scrying – Cycle 1

Posted: December 15, 2023 Filed under: Uncategorized

For my project, I decided to create crystal balls that, when interacted with, would result in audiovisual playback that embodied a certain affect. Each ball would represent a different affect, and would be placed in different locations around the room. The act of “scrying” upon these affects using a crystal ball represents a rediscovery of, and a distinct distance from, the raw emotions of childhood. The crystal ball itself represents a suspension of rationality and a mystic lore that often feels so real to children.

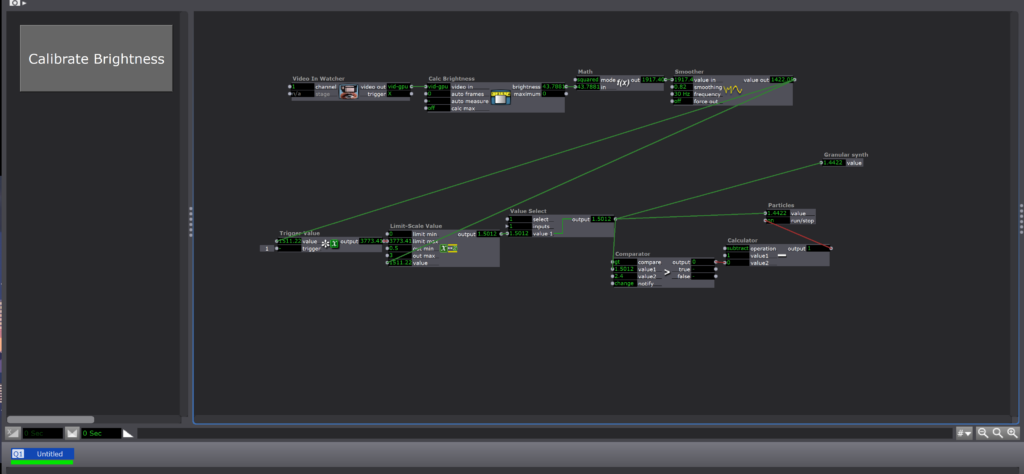

In Cycle 1, I created a prototype crystal ball that could send data to trigger audiovisual playback in Isadora. I ended up recycling the idea I had used in Pressure Projects 2 and 3, using the brightness from a webcam to determine if a person was interacting with the crystal ball. The idea was that the webcam would be installed in the base of the crystal ball and look upward through the glass.

Though the scope of the project is larger, the brightness detection system I used in this project is actually simpler than the one I used in Pressure Projects 2 and 3. Rather than determine how close a person’s hand was to the lens, the webcam is tasked simply with determining if a person’s hand is there or not. If it is, the visuals and music are triggered.
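The yes/no detection described above can be sketched as a single threshold on the average brightness of a frame. This is a minimal sketch, not the Isadora patch itself: the frame is assumed to be a grid of 0-255 grayscale values, and the names and the 0.4 threshold are hypothetical.

```python
def mean_brightness(frame):
    """Average brightness of a grayscale frame, scaled to the range 0.0-1.0."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / (255 * len(pixels))


def hand_present(frame, threshold=0.4):
    """A hand over the lens darkens the image, so low brightness means 'present'."""
    return mean_brightness(frame) < threshold


# Example frames: an uncovered lens and a lens shaded by a hand.
uncovered = [[200, 220], [210, 230]]
covered = [[20, 30], [25, 35]]
```

Here `hand_present(covered)` is the condition that would trigger the visuals and music.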

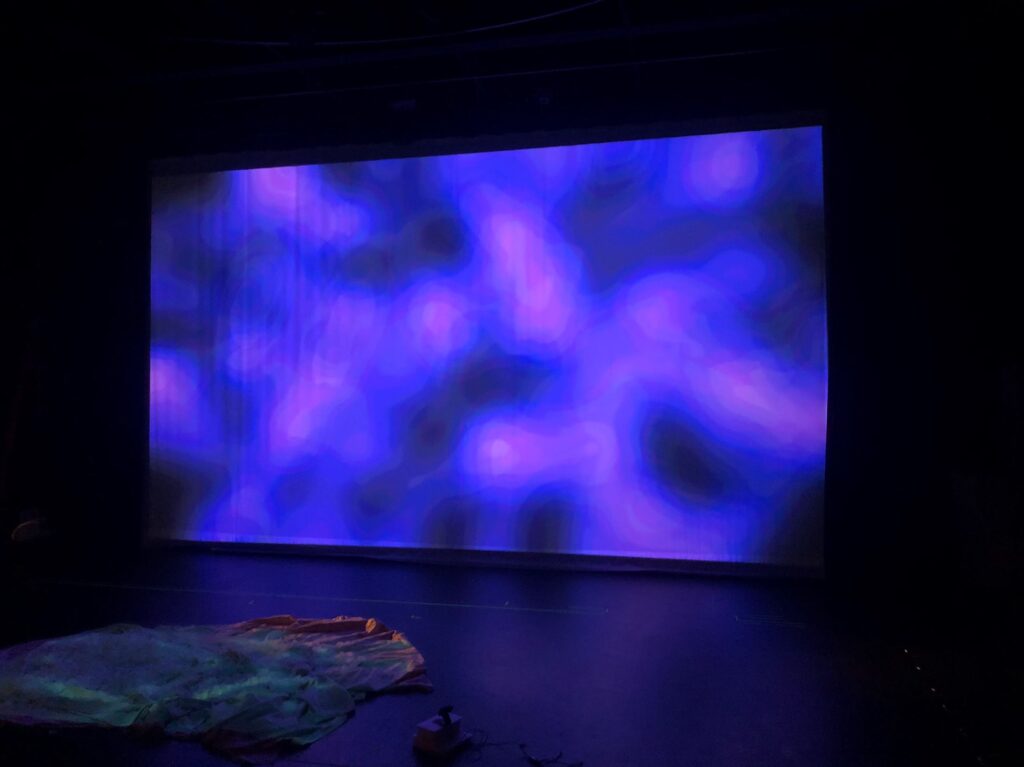

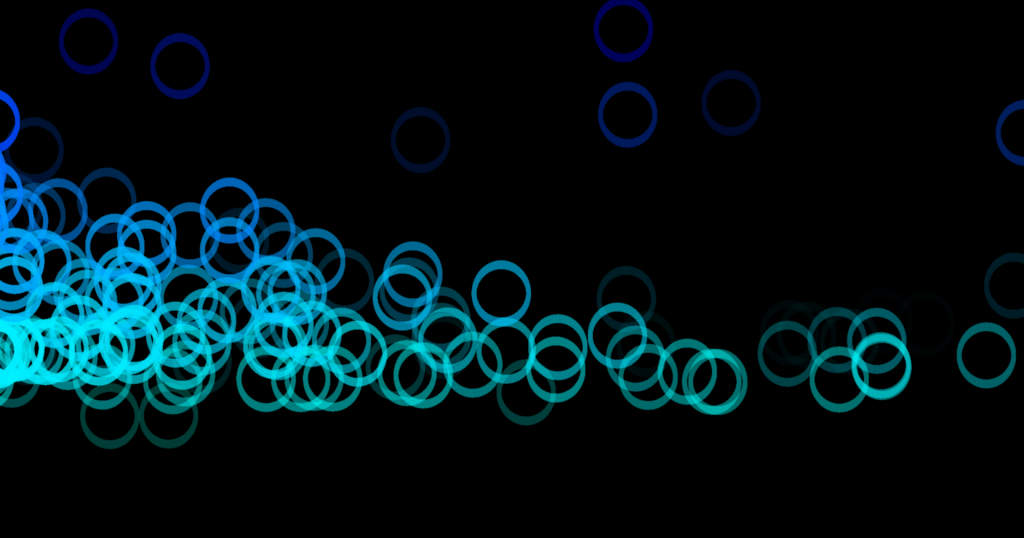

For the visual, I processed a simple blue square through an explode actor. I slowly modulated the parameters of the explode actor with wave generator actors and processed the results through lots of motion blur and Gaussian blur. Color shifts occurred as bits of the same color overlaid on top of each other. The result was extremely textural and a lot more interesting than the list of parts makes it sound:

Pressure Project 3 – Webcam Theremin 2.0

Posted: December 15, 2023 Filed under: Uncategorized

For Pressure Project 3, we were tasked with refining Pressure Project 2 into something that could be shown at ACCAD’s open house honoring Chuck Csuri. In order to create a more meaningful experience for this event, I changed a few things about my piece.

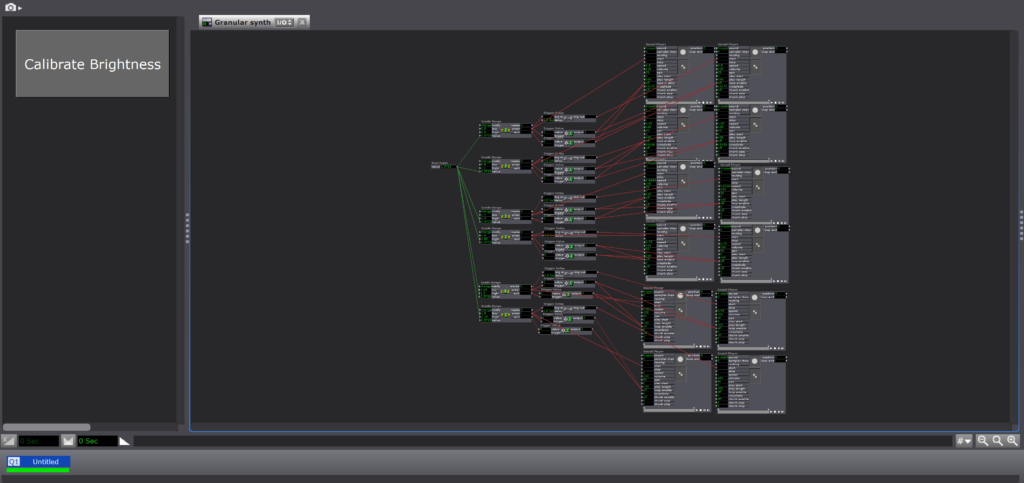

The first change I made was the sound of the “theremin”. Rather than have the sound be simply one voice that increased or decreased in pitch according to the position of a person’s hand, I programmed the sound to be triggered more like a guitar or harp: notes are “plucked” as a person’s hand moves toward and away from the webcam, and they can overlap as they decay to create chords. These “notes” are quantized to a very intentional scale and are treated with a variety of audio effects, such as delay/echo (before being imported into Isadora). A person interacting with the piece is invited to create their own path through the soundworld.
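The plucking behavior can be sketched as follows: the hand position is snapped to the nearest step of a scale, and a note fires only when the hand crosses into a new step. This is a hedged illustration, not the actual patch. The post does not say which scale was used, so the C major pentatonic below is a stand-in, and `SCALE`, `quantize`, and `plucks` are hypothetical names.

```python
# Hypothetical scale (MIDI note numbers); the real piece used its own scale.
SCALE = [60, 62, 64, 67, 69, 72]


def quantize(position):
    """Map a hand position in 0.0-1.0 onto one note of the scale."""
    position = min(max(position, 0.0), 1.0)
    index = min(int(position * len(SCALE)), len(SCALE) - 1)
    return SCALE[index]


def plucks(positions):
    """Emit a note only when the hand moves into a different scale step."""
    notes = []
    last = None
    for position in positions:
        note = quantize(position)
        if note != last:
            notes.append(note)
            last = note
    return notes


# A hand sweeping toward the camera triggers three separate plucks.
sweep = plucks([0.0, 0.05, 0.5, 0.55, 1.0])
```

Because each pluck is a separate event, earlier notes can keep decaying while new ones start, which is what lets chords form.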

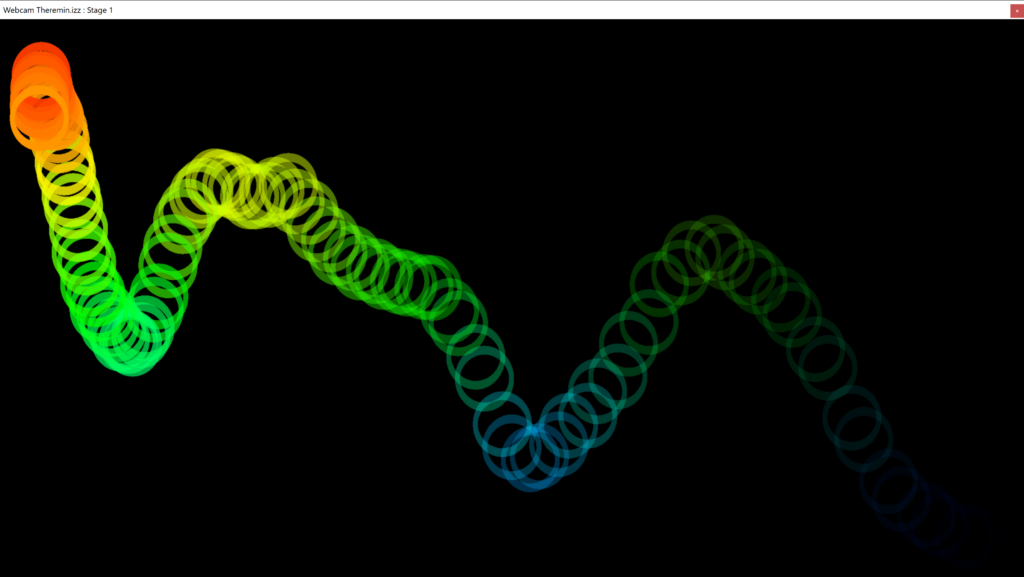

The remaining changes I made to the piece were visual. I altered the color of the generated Os to suit the feeling of the music (“cool” colors), and I slightly randomized the generation position as well as the movement of the Os through the screen space. In my view, this created a much more interesting and organic visual.

Cycle 3: House of Discovery

Posted: December 15, 2023 Filed under: Uncategorized

For cycle 3, my main mission was to put all of my elements together. I had the stove/makey-makey element I had created for cycle 1, as well as the projection mappings I had done for cycle 2, and I needed to pull them together in order to make the experience I had set out to originally.

After a lot of thought about how to connect users to ground when using the stove and sink, I realized that I could easily do it by using separate pieces of tinfoil on either side of each knob. This whole time, I had each knob wrapped in one singular piece, but by breaking that apart and connecting one side to ground and the other to its correct input, I could easily ground each user without making people take shoes off or touching other tinfoil elements (both options I came dangerously close to doing!). Of course, this dawned on me the night before we were presenting our cycle 3s, which ended in me reworking a lot of my makey-makey design from cycle 1. However, I found this experience to be very representative of the RSVP cycle and creative process in general. Especially when you’re working “solo” (all work is collaborative to some extent), the sometimes obvious answer can be staring you in the face, and it just takes a while for you to find it.

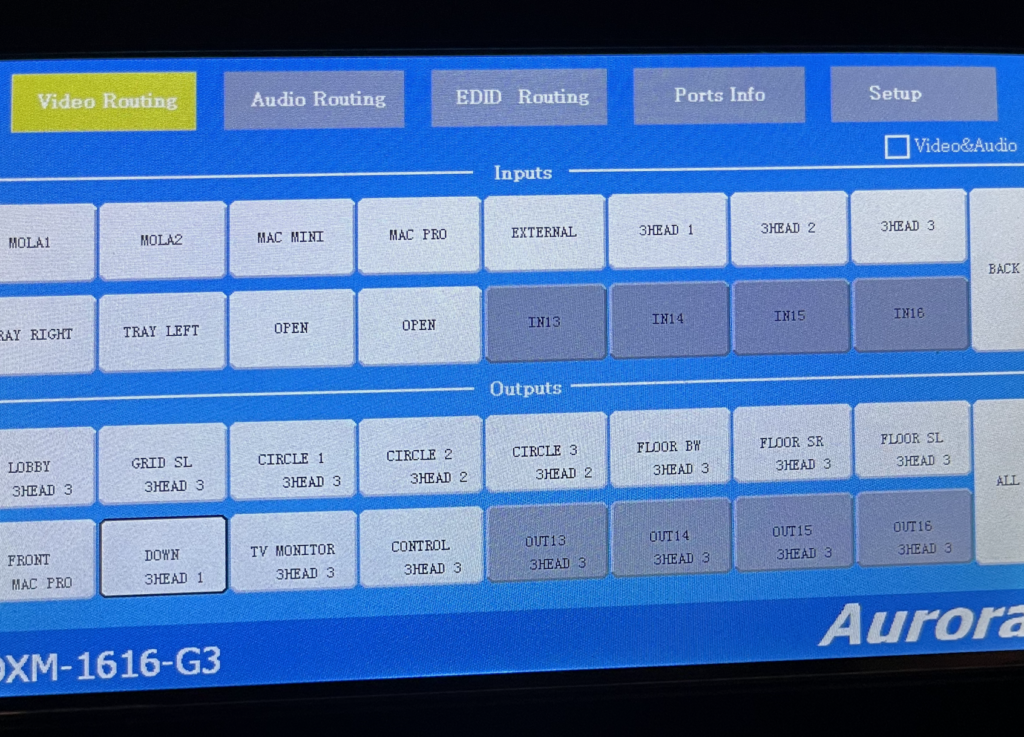

Still, this ground answer was a big breakthrough for me. All that was left was to add some more elements to upgrade my kitchen to an actual house. I decided to add a window to the left of the kitchen scene. In the patch, I set it so that every so often, a man’s faint silhouette would appear in the backyard, giving the experiencer the sense that they were being watched. It was at this point that I also decided to add a living room on another scrim to add dimension to the experience. I was able to set up some chairs in front to set it up as if it could be a place people could gather. I even created a cardboard “vase” with flowers (also made of cardboard and duct tape) to add to the room’s design. Of course, this additional room added complexity with yet another projector (projector count is now 3) and Isadora stage (Isadora stage count also 3). It was a quick picture for me to keep track of my outputs, so the framing isn’t great, but here’s a picture of the matrix switcher with my outputs in it:

And here’s a picture of the living room during cycle 3’s presentation:

Finally, after the presentation, I find that I would definitely like to make some updates for a theoretical cycle 4. I would focus a lot more on making my patch and experience robust. Some of my elements didn’t quite work during the showcase (thanks to a quick change and fault in logic on my part and OneDrive refusing to sync the morning of), so I’d like to focus on user testing and experience framing. There’s a lot to my idea that I don’t think I translated well into my cycle 3. I think the idea itself was big, so it was hard to tackle it all. If I were to go back to cycle 1, I would definitely downsize, making it easier for myself to have a more robust experience by the time we got to cycle 3. However, I could also 100% see myself saying I would want more time even if we had a cycle 4 and even 5. Since the RSVP cycle never ends, I’ll never quite be “happy” with the output. There’s always something to tweak.

However, I’m still quite proud of what I accomplished in the time given, and most of all what I learned. I never would have known how to do any of this–Isadora programming, projection mapping, experience design–before this class, and it’s given me a lot of ideas for the future and ongoing possibilities. Although I might not get a cycle 4 in the same context as these first 3 cycles, I’m excited to see where my new skills take me in the future.

Thank you all for this class–it’s been amazing, and I’ve truly enjoyed it and am endlessly grateful for this experience!

Pressure Project 2 – Webcam Theremin

Posted: December 15, 2023 Filed under: Uncategorized

For Pressure Project 2, I decided to make a theremin out of a webcam!

For the visual component, I was inspired by one of Chuck Csuri’s works, his Structural Fragmentation 2021.

The Isadora patch I created uses the total brightness of the webcam feed to decide whether to play sound, as well as how high/low the pitch should be. The webcam is placed on a table looking up at the ceiling, and a person is encouraged to use their hand to interact with it. The idea was that the video feed from the webcam would be detectably darker when a hand was placed over the lens, and the closer the hand was to the lens, the darker it would be. The fact that the vast majority of webcams have automatic brightness correction complicated this idea, but it still functioned mostly as intended.
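The brightness-to-pitch idea can be sketched in a few lines. This is an illustration under stated assumptions rather than the real patch: brightness is taken as a value from 0.0 to 1.0, darker is assumed to mean a higher pitch (the post does not say which direction was used), and the threshold and frequency range are hypothetical.

```python
def theremin(brightness, threshold=0.6, low_hz=220.0, high_hz=880.0):
    """Return a pitch in Hz, or None when the lens is too bright to count as covered."""
    if brightness >= threshold:
        return None  # no hand detected, so no sound
    # 0.0 just under the threshold, 1.0 when the lens is fully dark.
    closeness = (threshold - brightness) / threshold
    # Interpolate exponentially so equal hand movements give equal musical intervals.
    return low_hz * (high_hz / low_hz) ** closeness


silent = theremin(0.8)    # None: nothing over the lens
middle = theremin(0.3)    # hand halfway in
highest = theremin(0.0)   # lens fully covered
```

Automatic brightness correction in the webcam would shift the input this function sees, which matches the complication noted above.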

As for the sonic element, I recorded myself singing an “oo” vowel so as to continue with the theme from Chuck Csuri’s piece. This sound was pitched up and down according to the distance the “performer” had their hand from the camera. Unfortunately, I think the vowel ended up not sounding identifiably like an “oo” vowel, or like a human voice at all, and instead ended up just sounding like a synthesized tone.

Visually, the Isadora patch starts creating Os on the screen when a hand is detected by the webcam. The Os are created on the left side and slide to the right side as they dissipate over time, leaving a clear trail. The higher the pitch of the theremin, the higher the Os are created on the screen (vertically), and vice versa. The color of the Os is also shifted according to the pitch of the theremin.

Cycle 2: Stove Maps

Posted: December 15, 2023 Filed under: Uncategorized

For my cycle 2, I was mostly focused on how I was physically going to present my outputs in motion lab. Since I had already created a main mechanism (my stove), I wanted to start work on the more visual element of the project.

In addition to the stove, I had decided to add a sink element, as this was another interactive piece of a kitchen/house I felt I could incorporate. I used Shadertoy to find a simple water GLSL shader that I connected to my sink user actor. When activated by pressing the tinfoil of the “faucet,” the GLSL shader would fade in, making the sink look full.

I used an alpha mask to achieve this effect. At this point, all of these “activations,” (like this and the stove burners) were happening by jump++ actors jumping to new scenes. This was a quick and dirty way of activating these elements, but I would soon have to update these to work more seamlessly within the same scene in order to simplify my patch and output.

Once I started working in motion lab, I first had to decide how I would handle the different output streams I had in my Isadora patch. I knew I needed at least two projectors: one that would show the background of the kitchen, and one that would show the stove top and sink.

Above are my sink and stove blocks–I had to be aware of how I could position them so that the top-down projector would still hit them. Now that they had been positioned, it was onto output stream management and mapping. I was using the Mac Pro in motion lab, and it took me a long time to sort out how to manage my outputs using the triple head and matrix switcher so that the correct images would project from the correct places. Not only did the matrix switcher have to be set correctly, but I had to fix my Isadora output so that each projection element was on its own stage. I got a lot of help from Alex on this, which was definitely needed.

Finally, I got it set so I could map. Above is my mapped sink. Even though it was mapping a square onto another square, it took a fair amount of time to find where exactly on the floor of motion lab the small sink was, and how I had to move it in order for it to be placed correctly.

From my presentation, I was finally able to present the idea of the space to the class. I got some valuable feedback in terms of switching the direction my stove and sink faced and decided to implement that going into cycle 3.

Cycle 3 (Amy)

Posted: December 14, 2023 Filed under: Uncategorized

Cycle 3: Ready for interaction – Title: ‘Undoing’

Artist Statement:

The roots of this project stem from a very personal experience. In October, unexpectedly, I was faced with the breakdown of an 8-year relationship. This caused a shattering of self which spun things out of control and messily permeated all areas of my life. It was a hard reset. I stopped eating. The ground disappeared beneath my feet and I could not summon any semblance of rationality. It also erased the need to cling to complex processes and technique which I had previously used to hide behind. It demanded a stripping down to raw honesty in myself and my work that felt both unfamiliar and deeply uncomfortable. I did not want to forget.

Grounded in Pauline Oliveros’s philosophy on deep listening, I explore the dissolving of self as an experience in the form of an interactive audio-visual installation. I believe productivity stems from the human desire for proof of existence which causes us to do, undo, and redo over and over again. To challenge this notion, I invite viewers to let go of the need to do and simply ‘be’ as they are relieved of their burden of self in the tangle of others. Simultaneously overwhelming and gentle, the outcome of the work is indeterminate and depends on the willingness of the viewer to engage and shape their own experience.

As a demonstration of the interdependence and power of interconnectedness, this project exists both as a large-scale collective experience for 10+ participants, where the actions of each person impact the overall outcome, and as a solitary experience for one participant alone with headphones. Further development in Cycle 4 and beyond will include exploration into different spaces both inside and outside, framing the piece in ritual by using tactile materials such as sand, and ornamentation on lightbulbs as well as other sources of light reactivity. As a performance piece, I would like to explore methods of generative improvisation through the use of DAWs, as well as a purely acoustic version where people sing in a room together.

Reflection:

In this iteration, I was mainly interested in the responses of the participant. I devised several versions of this piece for various groups with the intention of creating a different experience for each situation. As the maker, instead of my usual eagerness for validation, I took a step back and observed. I was curious to see how people interacted with the piece out of context. Did they approach with apprehension? Were they able to be playful? How long did they stay for? Did they grasp for control? Did they allow for a collaborative experience? Were they able to take it in?

The more people engaged, the more I realized that the feedback from each participant told me more about what kind of person they were than about the actual piece. This was such a gift! I learned so much about what it means to have an experience and how to cultivate a sense of freedom and agency in a work.

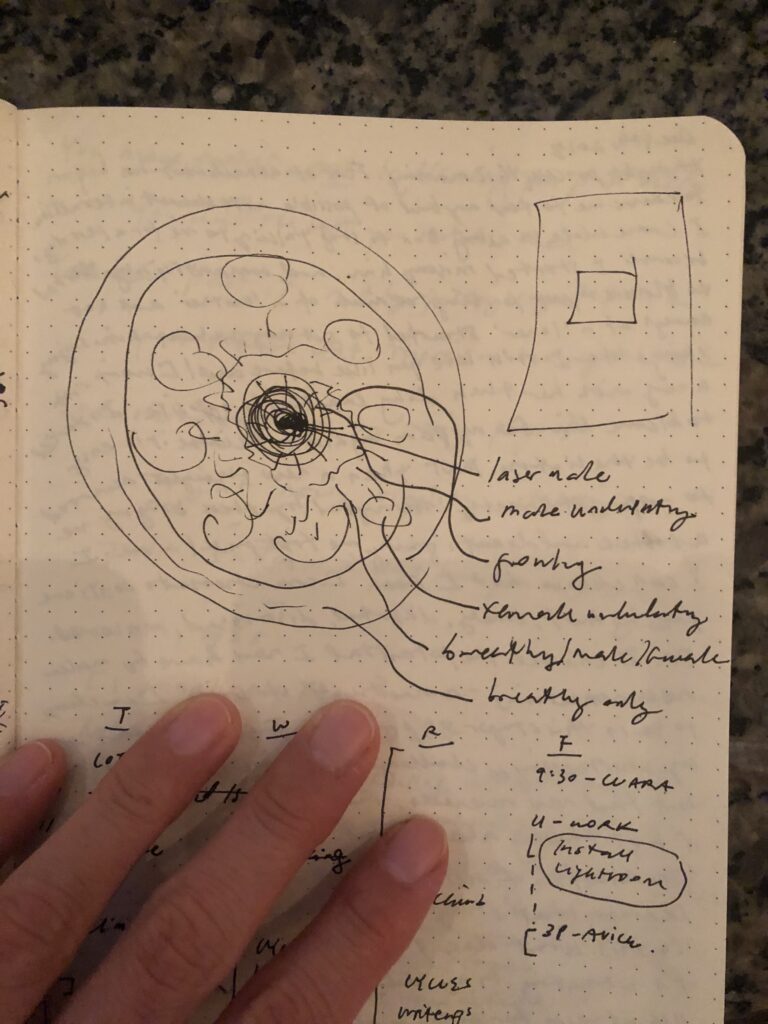

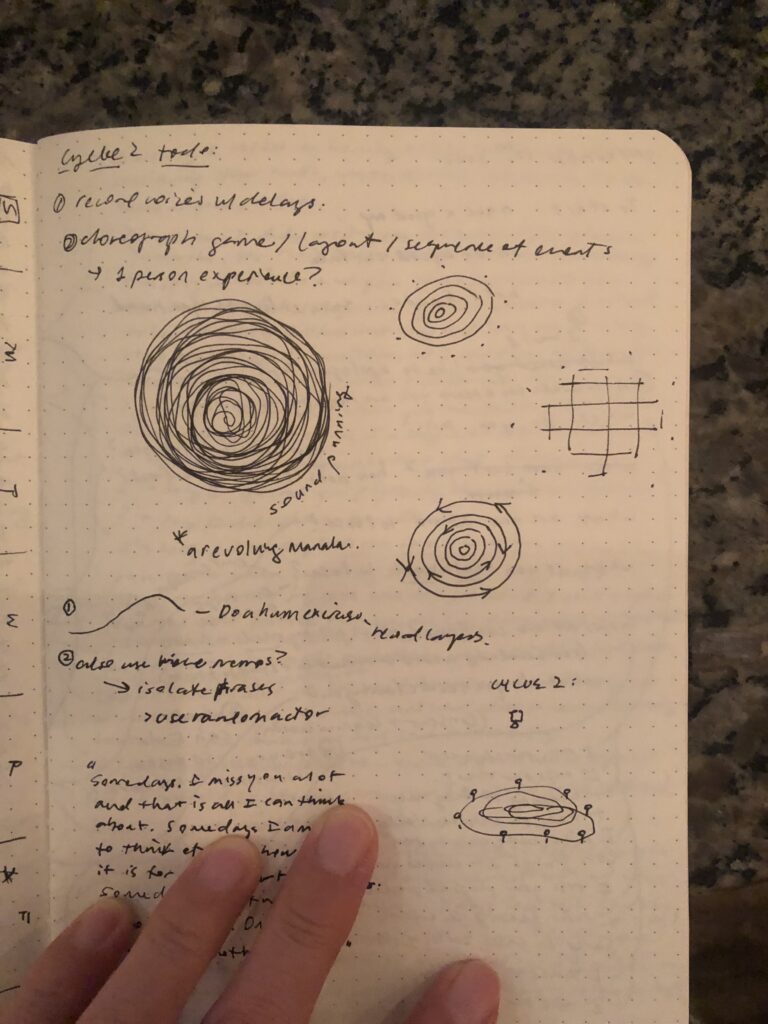

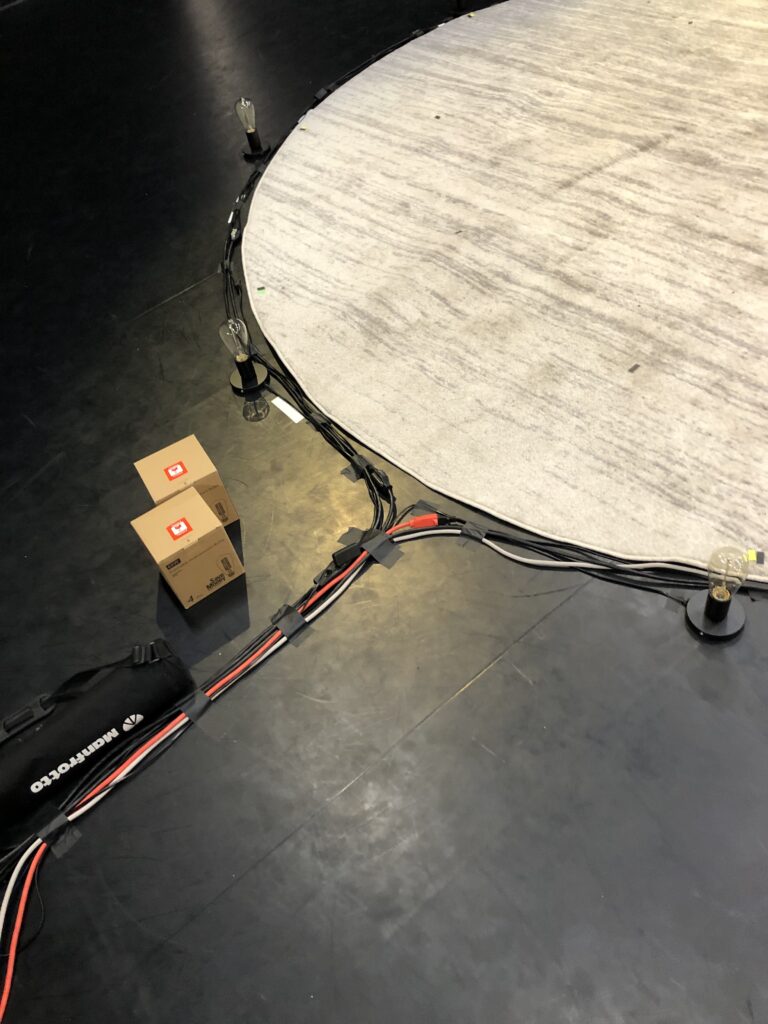

In terms of the piece itself, for Cycle 3 I mainly worked on refining the layering of voices and how they would fit into the shape of a mandala (for the rug!). For the final presentation in the motion lab, the sound formed a 5-layer mandala. I asked a vocalist friend (a true bass!) to record some very low tones for me. I placed these tones in the very center of the mandala and then gradually expanded outward from high vocal intensity to gentle sustaining tones to breathing on the outermost circle. During our final DEMS critique, I was thrilled to see how spontaneous and playful everyone was in their approach to the piece. Pure JOY!
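To make the mandala layout concrete, the sketch below maps a position on the rug to one of five concentric layers, with layer 0 at the center (the bass tones) and layer 4 at the outermost ring (breathing). It is only an illustration: equal-width rings are an assumption, the 5.5 ft radius comes from the 11 ft rug mentioned in Cycle 2, and `mandala_layer` is a hypothetical name.

```python
import math


def mandala_layer(x, y, rug_radius=5.5, layers=5):
    """Return the ring index for a point measured in feet from the rug's center."""
    distance = math.hypot(x, y)
    if distance > rug_radius:
        return None  # off the rug, so no layer is triggered
    # Equal-width rings (an assumption); the outer edge belongs to the last ring.
    return min(int(distance / rug_radius * layers), layers - 1)


center = mandala_layer(0.0, 0.0)   # innermost layer
edge = mandala_layer(5.5, 0.0)     # outermost layer
```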

Untangle – loosen – untie – undo is the mantra I have adopted through the creation of this work. In contrast to my previous need to finish a work and then determine it good or bad, I hope to continue developing iterations of this project embraced by the idea that we too exist in cycles.

Cycle 2 (Amy)

Posted: December 14, 2023 Filed under: Uncategorized

Cycle 2: Implementation and expansion – working title: ‘Annihilation of self’

November 14th goal: Create a piece that allows for a fluid collective experience for the class.

Inspiration: ‘Deep listening takes us below the surface of our consciousness and helps to change or dissolve limiting boundaries.’ – Pauline Oliveros

Implementation: acquisition of resources and programming

- Light
  - 8 dimmable lightbulbs
  - 8 socket bases
  - Chauvet DJ DMX 4-4 channel (lightbulbs > Enttec)
  - Enttec USB Pro (DMX > Computer)
- Motion
  - Orbbec Depth Sensor (installed on ceiling of Motion Lab)
- Sound
  - Solo voice improvisation, recording, layering
  - 2 Portable Speakers

Reflection:

Developing from Cycle 1, mostly a sketch using motion detection, translating to sound and light reactivity, Cycle 2 really fleshed out the piece and set up for a collective experience in preparation for Cycle 3.

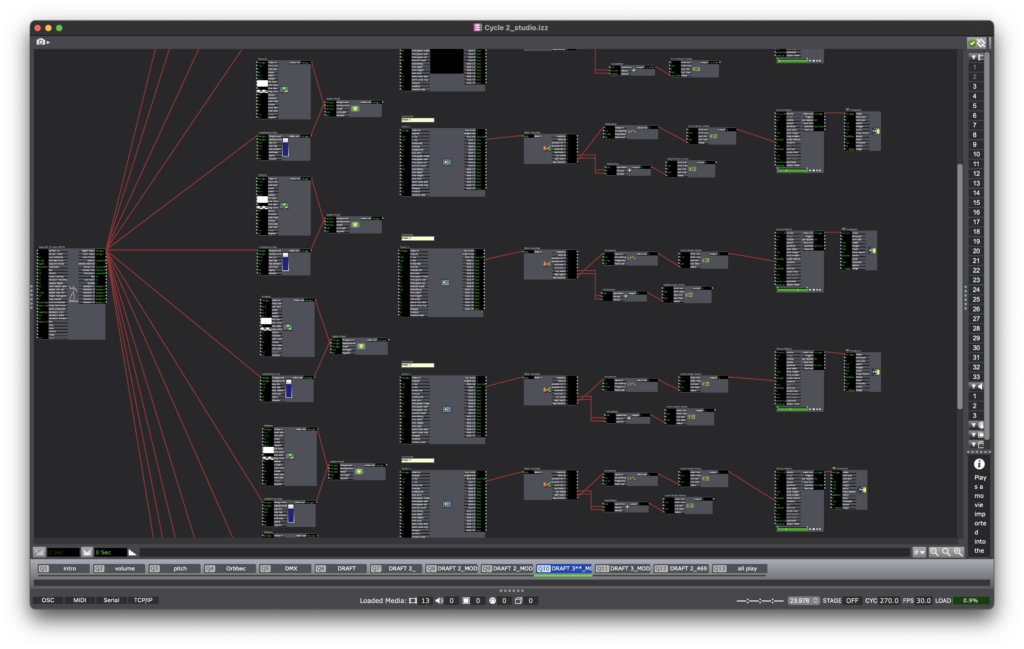

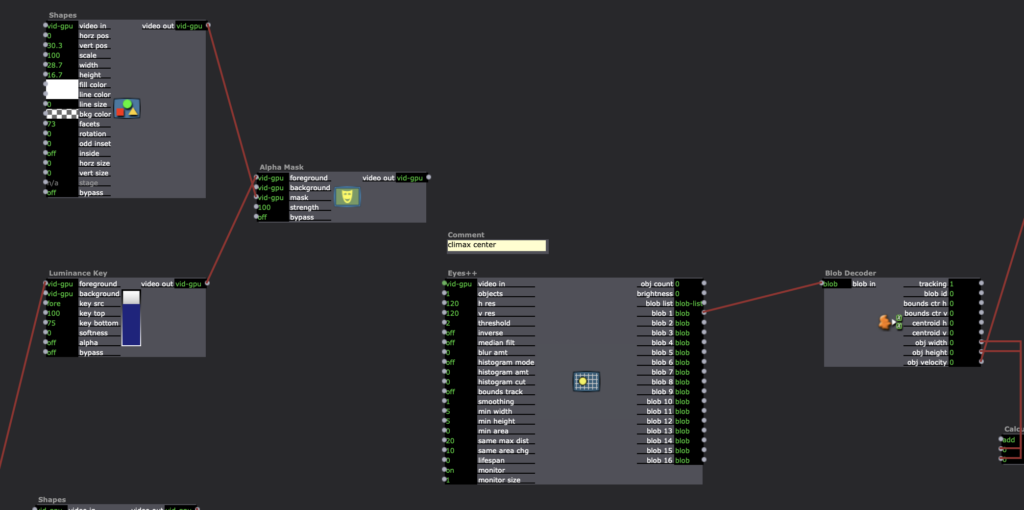

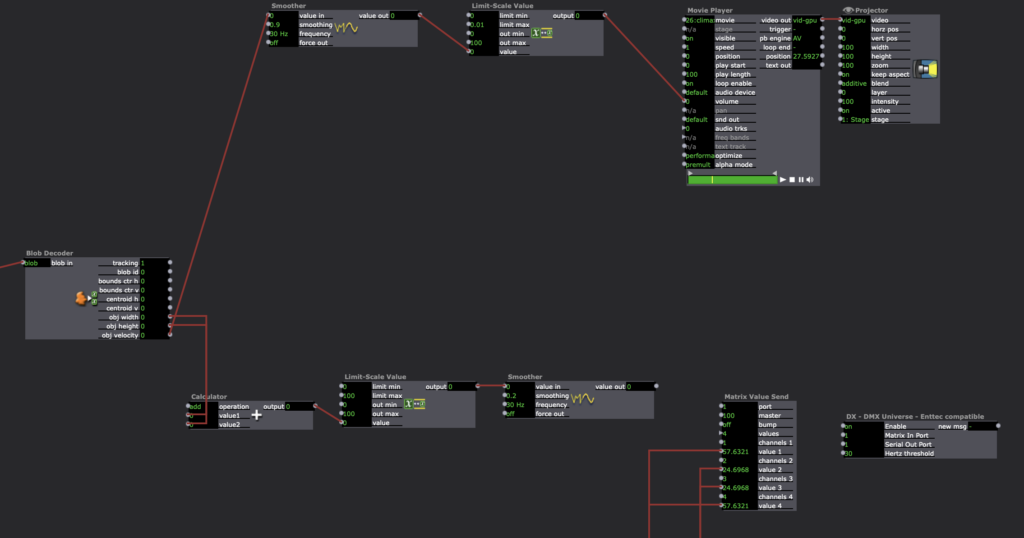

A crucial addition was CG’s fantastic 11ft circular rug! It provided the perfect layout for our group and served as a physical ‘score’ for the piece. To detect motion, we hung an Orbbec from the ceiling facing the floor, which provided a bird’s-eye-view for my reactivity map. As people stepped onto the rug, the Orbbec would trigger sound and light in response to their position and movements. In Isadora, the mechanisms for operation were: OpenNI (Orbbec detection) > Luminance key > Alpha Mask (for circular crop) > Eyes++ > blob detection, which then controlled the volume of sound files on loop and levels of brightness for the DMX output.
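The chain described above (key > circular mask > blob detection > levels) could be approximated outside Isadora roughly as follows. This is only a sketch under assumptions: the frame is a small grid of 0-255 values seen from above, and `track_blob`, `dmx_level`, `key`, and `full_area` are hypothetical names, not Isadora or OpenNI calls.

```python
def track_blob(frame, center, radius, key=128):
    """Key out dim pixels, crop to a circle, and return (x, y, area) of what remains."""
    cx, cy = center
    hits = [
        (x, y)
        for y, row in enumerate(frame)
        for x, value in enumerate(row)
        # Luminance key (value >= key) combined with the circular crop.
        if value >= key and (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2
    ]
    if not hits:
        return None
    area = len(hits)
    return (sum(x for x, _ in hits) / area, sum(y for _, y in hits) / area, area)


def dmx_level(area, full_area):
    """Scale blob area to a DMX channel value, clamped to 0-255."""
    return min(255, round(255 * area / full_area))


# Example: two bright pixels on the rug, one bright pixel outside the circle.
frame = [[0] * 5 for _ in range(5)]
frame[2][2] = 200
frame[2][3] = 200
frame[0][0] = 255
blob = track_blob(frame, center=(2, 2), radius=2)
```

The same area or position value could drive the volume of a looping sound file as well as a lightbulb's brightness.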

The biggest challenge for this iteration was the set up. Because each lightbulb required its own extension cord, the set up and tear down became quite labor intensive and each took about an hour. It was definitely worth the effort. The lightbulbs were entrancing! Seeing the initial concept of light and sound ‘waking up’ to physical presence was utterly magical. Much credit goes to Nico for generously providing mesmerizing dance as a quick demo. Thanks Nico!

Cycle 1 (Amy)

Posted: December 14, 2023 Filed under: Uncategorized

Cycle 1: research and experiments, Initial title – ‘the things you don’t see’

October 11th goal: I would like for someone’s presence, just being, to be sensed. They are reacting to the system reacting to them, it’s reciprocated, ongoing. The feedback loop of interaction.

Research: keywords and quotes from Quantum Listening by Pauline Oliveros

- ‘Listening field…’ – pg 13

- ‘Listening to their [audience] listening.’ – pg 16

- ‘Deep listening takes us below the surface of our consciousness and helps to change or dissolve limiting boundaries.’ – pg 38

- ‘What if you could hear the frequency of colors?’ – pg 40

Reflection:

In this initial stage, my desires were a jumble. I had the vague idea of creating an interactive audio-visual installation that ‘woke up’ to physical presence and responded generatively to subtle bodily movements. I was also very intrigued by the concept of deep listening and the philosophy of Pauline Oliveros during this time, particularly, her work ‘Teach yourself to Fly.’ In this work, a group of people sing together, responding to and diverging from the voices of those around them. The result is an organic sound cloud that is ever shifting, highlighting breath, fluidity, and a state of impermanence.

Alex provided guidance by helping me sift through my thoughts and construct a clear directive. The first was – how do I use the Orbbec to pick up movement and how is that reflected through programming in Isadora? Second – how do I transfer this response onto a lightbulb using a DMX box? Third – how does the motion translate or manipulate sound output?

In Cycle 1, I programmed the bare bones of motion and sound reactivity in Isadora. AND got the lightbulbs to work, yay!