Cycle 3: PALIMPSEST

Posted: December 11, 2024

As I ponder the third iteration of this cyclical project, I am reminded of how valuable a resource time is in the RSVP cycle. I have felt so close to my MFA project, in both choreography and projection design, that there have been moments when I could not see what was actually occurring. A huge moment of learning came after our Thanksgiving break. With the gift of a few days away from OSU, I was able to view my choreography and projection design with fresh eyes and some objectivity. Within a very quick turnaround, I identified several factors that have made a tremendous impact on this cycle.

In this cycle, performance and valuation were deeply important. I was working toward a Dec 5th showing for all faculty in the Dance Department and toward our Cycle 3 presentations on Dec 9th. I wanted to create and show at least 10 minutes of choreography and projection design, accompanied by some music, lighting, and costuming ideas, so that my advisors and peers could get a sense of the world I was trying to communicate and access through this piece.

My initial goals for this cycle were to slow down the projections and to create more negative space on the floor for my dancers to move through, so that they could emulate an interactive relationship with the projection. Through feedback from faculty, peers, and other artists, I realized that the floor projection had the strong, definite edge of a rectangle that visibly shrank the dancing space. Whenever a dancer stepped outside the rectangle, it looked like a mistake in the choreography. To combat this, I first tried using a crescent or circle shape as a mask in Isadora, but the shape still felt far too crisp. Due to time constraints, I was unable to finish a version of the projection that used organic shapes in time for the Dec 5th showing.

For the Dec 5th showing, my cohort decided to use the black marley in the Barnett, which meant that this would be my first time seeing the projections on a black floor. In all honesty, I was quite delighted by it: the black floor offered a sense of depth and texture that the white floor did not. This piece, currently titled PALIMPSEST, references a manuscript on which text is effaced and new text is then written over it. I view each dancer as deeply important to the layering of the piece. I intend PALIMPSEST to communicate a meditation on small, nuanced intercultural experiences that draw from my Taiwanese-American diasporic worldview.

Below is the video of the Dec 5th showing.

Following the Dec 5th showing, I was finally able to take the time to figure out how to use an organic shape as a mask. I wanted to use an image of a banyan tree from Taiwan. This felt like a little Easter egg for myself—I frequently imagine the movement of my dancers as hybrid bird-banyan trees. With support from Annelise, I figured out how to use the banyan tree as a mask in Isadora and was deeply surprised at how the organic shape transformed the possibilities of the projection.
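A mask like the banyan tree uses the brightness of one image to decide how much of another image shows through. The sketch below shows that idea in plain Python. It assumes both images are 8-bit grayscale grids of the same size. `apply_luminance_mask` is a hypothetical helper. The sketch does not claim to reproduce how a particular Isadora mask actor works, for example whether it reads luminance or an alpha channel.

```python
def apply_luminance_mask(frame, mask):
    """Scale each frame pixel by its mask value (0-255 mapped to 0.0-1.0).

    Both arguments are lists of rows of 8-bit values with the same shape.
    White mask pixels (255) let the frame through; black pixels (0) block it;
    grey pixels let part of it through, which softens the edge.
    """
    if len(frame) != len(mask) or any(len(f) != len(m) for f, m in zip(frame, mask)):
        raise ValueError("frame and mask must have the same dimensions")
    return [
        [round(f * m / 255) for f, m in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


# A hard-edged rectangle mask would contain only 0 and 255;
# an organic image like a tree photo also carries the values in between.
frame = [[200, 200, 200], [200, 200, 200]]
mask = [[255, 128, 0], [255, 64, 0]]
masked = apply_luminance_mask(frame, mask)
```

A rectangle or circle mask produces only fully on or fully off pixels, so its edge reads as crisp. A photographic mask carries intermediate values, which is one way to understand why it felt more organic.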

In our Cycle 3 presentation on Dec 9, I showed a new version of the projection that played with more negative space and used the banyan tree mask. I also began adding more blackouts to the projection so that I could imagine what the choreography might look like without any projection. I currently imagine that in my roughly 20-minute piece, projection will be present for no more than half to two-thirds of the running time.

During our Cycle 3 feedback, I was glad to hear that the black floor resonated with my peers and that the banyan tree mask made the projection feel like another dancer. People used words like “desert,” “tension,” “moss,” and “mind of its own” to describe the projection in collaboration with the music. I feel that these words reflect where I want to take this project: I would like to envision the projection as another dancer in the space. Returning to the RSVP cycle, I want to acknowledge that I am at the stage of making where I deeply need some time away before returning to see what I have actually made. As I look toward the next cycles leading up to my MFA project performance at the end of February 2025, I want to return to solidifying the choreography so that I can make more informed choices about when the projections might dance in the space with the other performers.

Cycle 3

Posted: December 11, 2024

Naiya Dawson

For Cycle 3, I presented the patches and videos I created in Isadora in the Motion Lab. I used two scrims and four projectors to project my videos four different ways. Presenting in the Motion Lab allowed me to see which projection was the most interesting, which editing techniques looked good, and which didn't or could change. If I were to do Cycle 4 and beyond, I would want to play with more floor projection and with the videos using the difference actor effect. I would also want to have a dancer in the space and create the live drawings I used so that they match up with the live dancing.
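The difference actor effect presumably relies on something like frame differencing. Frame differencing compares each video frame with the previous one, so that only what changed (for example, a moving dancer) stays visible. The sketch below shows that general technique. It assumes grayscale frames stored as lists of 0-255 values. It is not Isadora's implementation, and `frame_difference` and its `threshold` parameter are hypothetical.

```python
def frame_difference(previous, current, threshold=0):
    """Per-pixel absolute difference between two grayscale frames.

    Differences at or below `threshold` are set to 0, which suppresses
    sensor noise so that only clearly changed areas remain visible.
    """
    out = []
    for prev_row, cur_row in zip(previous, current):
        row = []
        for p, c in zip(prev_row, cur_row):
            d = abs(c - p)
            row.append(d if d > threshold else 0)
        out.append(row)
    return out


# Only the pixel that changed by more than the threshold survives.
changed = frame_difference([[10, 10], [10, 10]], [[10, 60], [15, 10]], threshold=10)
```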

Cycle 3

Posted: December 11, 2024

Resources

Motion Lab

- Projectors and screens

- Visual Light Cameras

- Overhead depth sensor

Castle

Blender

Isadora

Adobe Creative Suite

Poplar Dowel Rod

MaKey MaKey

Arduino Board

Accelerometer/Gyroscope

Bluetooth card

Score

Guests are staged in the entryway of the Motion Lab. They are met by the “magical conductor” who invites them to come in with a wish in their hearts.

As guests file into the completely dark room, which houses only an unlit castle, the song “When You Wish Upon a Star” from the Pinocchio soundtrack begins to play.

Projections onto the castle are delayed by approximately 10 seconds. Projections start slowly, progressively showcasing the full range of mapped projection capabilities (i.e., isolating each element of the castle).

Stars shoot across the front of the castle in time with the first two mentions of “when you wish upon a star.”

The projections steadily increase in intensity.

As the novelty of the intensified projections wears off, the magical conductor enters the scene to demonstrate the capabilities of the magic wand, introducing a new dimension to the experience.

The magical conductor offers the magic wand to guests who can try their hand at interacting with the castle.

The music fades along with the projections.

A Pepper’s ghost emerges from the castle entrance and thanks guests for coming, then fades out.

This score outlines the behavior of my relatively low-tech approach to creating a magic wand. As a physical object, the magic wand required only a poplar dowel rod and a little bit of imagination.

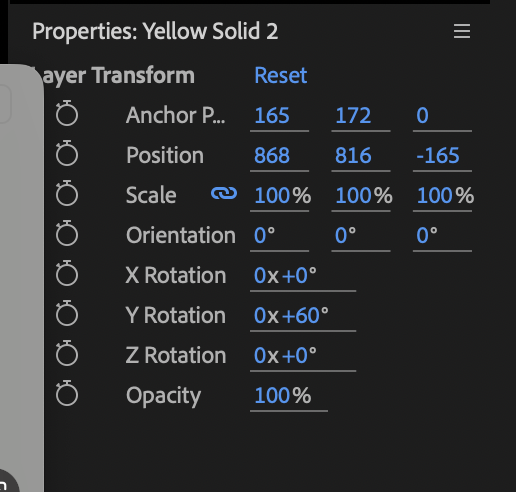

Isadora Score for Cycle 3 iteration. Entering the scene activates the control scene, which controls magic wand functionality and provides the manual star control. Entering the scene also initiates a timer to launch the first star around the first mention of “Wish Upon a Star”. The Sound level watcher ++ creates a connection between the music and the projection’s brightness.
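The link between the music's level and the projection's brightness can be sketched outside Isadora as a clamp, a linear rescale, and some smoothing. This is a minimal sketch that assumes the incoming level is normalized to 0-1. The brightness floor of 20, the ceiling of 100, the smoothing factor, and the name `level_to_brightness` are hypothetical choices. They are not settings taken from the patch.

```python
def level_to_brightness(levels, in_min=0.0, in_max=1.0,
                        out_min=20.0, out_max=100.0, smoothing=0.8):
    """Map a stream of audio levels to projection brightness values.

    Each level is clamped to [in_min, in_max] and rescaled linearly to
    [out_min, out_max]. A one-pole smoother then blends it with the previous
    output, so the projection swells with the music instead of flickering
    on every transient. smoothing=0 disables smoothing.
    """
    brightness = []
    state = out_min  # start at the (hypothetical) floor brightness
    for level in levels:
        clamped = min(max(level, in_min), in_max)
        target = out_min + (clamped - in_min) / (in_max - in_min) * (out_max - out_min)
        state = smoothing * state + (1 - smoothing) * target
        brightness.append(state)
    return brightness
```

Keeping a non-zero floor keeps the castle faintly visible during quiet passages. The smoothing factor trades responsiveness for calm.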

Valuation

Embrace the bleed – I spent hours during Cycles 1 and 2 trying to tighten my projection mapping and reduce bleed around the edges. During our feedback session for Cycle 2, one of my classmates pointed out that the bleed outlining the castle on the main projection screen actually looked pretty cool. I immediately recognized that they were right: the silhouette of the castle was a striking image. Even so, it took time for me to fully embrace this idea.

Simplify to achieve balance – I started to hear Alex’s voice ringing in my ears over the course of this project. This was the most critical lesson for me to take away from this course. I dream big. Probably too big. I always want to create magic in my projects, I have an impulse to improve and expand and

During Cycle 3, I made several conscious simplifications to achieve balance, i.e., to deliver a magical experience within the allotted time.

I removed the stone facade projection from the castle. This was a neat effect, but the lines in the stone made imperfections in the mapping readily apparent. The payoff for the audience was marginal and diminished quickly. Removing this element allowed investment in features that made the experience more immersive and engaging.

I simplified the function of the magic wand (which had already been simplified several times from the original concept of a digital fireworks cannon). Before the final iteration, the concept for the wand was to embed a small Arduino board, an accelerometer, and a battery to capture movements and trajectories. These would send signals to the computer over Bluetooth to trigger events in Isadora. The simplified concept used the Motion Lab’s depth sensor to detect activity within a range above the top of the castle. The active areas were cropped to isolate the effect to specific parts of the castle. An auxiliary effect was added to the Isadora interface: a button that let the operator trigger a shooting star when guests tried to perform magic tricks that were not programmed into the system.
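The cropped-zone idea works by counting how many depth pixels inside a rectangle fall within a distance band above the castle, and firing when the count passes a threshold. The sketch below assumes the overhead sensor delivers a grid of distances in millimetres, with 0 meaning "no reading". The zone names, coordinates, and thresholds are hypothetical. This is not the actual Isadora patch.

```python
def zone_triggered(depth_frame, zone, near_mm, far_mm, min_pixels):
    """True when at least `min_pixels` readings inside `zone` lie in [near_mm, far_mm].

    depth_frame: list of rows of depth readings in millimetres (0 = no reading).
    zone: (x0, y0, x1, y1) crop rectangle in pixels, end-exclusive.
    """
    x0, y0, x1, y1 = zone
    hits = 0
    for row in depth_frame[y0:y1]:
        for d in row[x0:x1]:
            if near_mm <= d <= far_mm:
                hits += 1
    return hits >= min_pixels


def active_zones(depth_frame, zones, near_mm, far_mm, min_pixels):
    """Names of all zones currently triggered, in the order they were defined."""
    return [name for name, zone in zones.items()
            if zone_triggered(depth_frame, zone, near_mm, far_mm, min_pixels)]


# Hypothetical zones over two parts of the castle, in sensor pixel coordinates.
ZONES = {"left_tower": (0, 0, 2, 2), "right_tower": (2, 0, 4, 2)}
frame = [[0, 1500, 3000, 3000],
         [1500, 1500, 3000, 0]]
triggered = active_zones(frame, ZONES, near_mm=1000, far_mm=2000, min_pixels=2)
```

Requiring several pixels, rather than one, keeps a single noisy reading from setting off an effect.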

Early concepts for what would become the magic wand called for a blaster. At various times, the blaster was to house some combination of a Nintendo Wii remote, a phone using Data OSC, or an Arduino board with a gyroscope/accelerometer and a Bluetooth card. Other conceptual drawings outline an operation in which a pull rope connected to a PVC pipe on an elastic band inside the blaster would close a circuit. The circuit would send a signal to the computer to fire a digital firework along the blaster’s trajectory. (More drawings will be uploaded once I retrieve my notebook from the Motion Lab.)

I simplified the castle, removing several intended features including multiple towers, parapets, and roofs. These features would have been visually striking, and several towers were already cut and shaped, but each additional tower increases complexity and time costs. Truthfully, if I had not broken the castle twice (once on the night before final installation in the Motion Lab and again on the way into the building on the morning of final installation), I likely would have added a few more towers. It’s not clear whether this would have substantially improved the final experience.

The most difficult simplification was cancelling plans to include a Pepper’s ghost in the doorway. It was the hardest to part with because the potential payoff to guests was huge. Other features, like windows with waving character silhouettes, were also difficult to cut because they would have added to the magic of the experience.

Manage the magic – I created gigabytes of interesting visualizations, 3D models with reflective glass and metal textures, and digital fireworks for this project and it was very tempting to bring everything in and have the experience run at full intensity throughout, but this approach burns too quickly. People need time to adapt and recover in order for escalations to be effective. Metering out the experience requires a measure of discipline.

This score shows an emotional journey map of the planned experience before I dropped plans for the Pepper’s ghost in the week before the performance. Removing the ghost still allowed for an escalation of emotional intensity and a meaningful journey, whereas diverting time to building it would have put the project’s completion in jeopardy.

Simplify the projection mapping – Prior efforts to simplify my approach to projection mapping had been unsuccessful and at this point multiple projectors could no longer be avoided. I decided to change my approach to do much of the mapping in the media itself to reduce the amount of mapping necessary at installation.

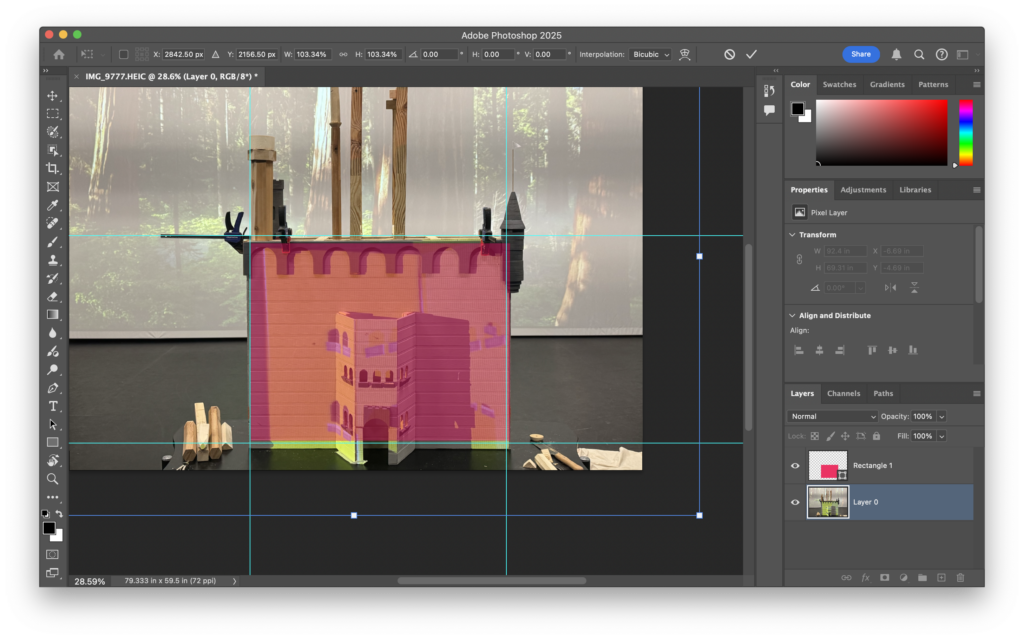

I used multiple approaches to support this effort. I expected that some might fail, but that diversification would give me the greatest chance of success. First, I took photos of the castle from the projectors’ vantage point. Next, I measured the distance, rotation, and orientation of the projectors relative to the castle to model the space in Adobe After Effects. Finally, I built a 3D model of the castle in Blender, hoping to import the OBJ files into Isadora and use my projections as textures. This was a pipe dream that did not work, but I did manage to create something interesting that I couldn’t figure out how to use in time for the final presentation.

A cool effort that turned out to be only marginally useful in the end. Given more time I would love to use this OBJ file to reflect things in the environment like fireworks that could create a really interesting and cohesive experience.

I used images of the castle to help build digital models.

Early attempts to model the castle in Adobe After Effects, with cameras positioned to match the projectors, were time-consuming. They came close to approximating the environment but were ultimately unsuccessful. Using the measurements taken in the Motion Lab helped close in on the actual positioning, but the exact angles and positions proved elusive.
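Matching virtual cameras to measured projectors means treating each projector as a pinhole camera. You move a point into the projector's frame using its position, yaw, and pitch, then divide by depth. The sketch below shows that generic model. It is not After Effects' camera system. The axis conventions, the parameter names, and `project_point` itself are assumptions. The model also ignores lens offset (lens shift), which many projectors have. That is one plausible reason measured positions alone may not line up exactly.

```python
import math


def project_point(point, projector_pos, yaw_deg, pitch_deg, hfov_deg, width, height):
    """Pixel (px, py) where a world point lands in a projector image, or None.

    World axes (assumed): x right, y up, z forward from the projector's
    default facing. Yaw turns the projector about y (positive toward +x);
    pitch tilts it up about its own x axis. Lens offset and distortion
    are ignored.
    """
    # Translate into projector-centred coordinates.
    x = point[0] - projector_pos[0]
    y = point[1] - projector_pos[1]
    z = point[2] - projector_pos[2]
    # Undo yaw (rotation about the vertical axis).
    yaw = math.radians(yaw_deg)
    x, z = x * math.cos(yaw) - z * math.sin(yaw), x * math.sin(yaw) + z * math.cos(yaw)
    # Undo pitch (rotation about the projector's horizontal axis).
    pitch = math.radians(pitch_deg)
    y, z = y * math.cos(pitch) - z * math.sin(pitch), y * math.sin(pitch) + z * math.cos(pitch)
    if z <= 0:
        return None  # the point is behind the lens
    # Focal length in pixels from the horizontal field of view.
    focal = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    px = width / 2 + focal * x / z
    py = height / 2 - focal * y / z  # image rows grow downward
    return px, py
```

Because every measured angle feeds through the trigonometry, a one-degree error in yaw or pitch moves every projected point. This is consistent with how hard exact positioning proved to be.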

The most useful assets proved to be line tracings from the perspective of the Motion Lab projectors. I could pull these into After Effects to create graphics very close to the actual dimensions of the castle. These were much easier to map onto the castle and required only minor distortions to account for imperfections in my photography.

Performance

I think that many of my theories were confirmed during the performance. The flow worked well. Nobody mentioned the removal of the digital stone facade. Classmates enjoyed playing with the magic wand even during the feedback session. The silhouette of the castle on the main projector made for a striking visual and gave an impression of being larger-than-life. The magical conductor role was looked upon favorably. Obviously the Pepper’s ghost was not mentioned because it was a secret closer, but I suspect that if well executed it would have fit well with the overall theming and magic.

One major oversight, and one that would have been embarrassingly simple to fix, was the lack of an audio cue for successful magic wand interactions. I added these cues in post to demonstrate how they might have changed the experience.

What could have been…

Cycle 2

Posted: December 11, 2024

Several key takeaways from Cycle 1 defined my approach to Cycle 2:

- Projection mapping is going to be a lot harder than I expected.

- Find a way out of the complexity. The decision to project onto a structure under active development means that I have no stability in projection mapping. I need to either find a faster way to build the castle and/or a more flexible approach to projection mapping.

- Go all in on immersion. Front-facing projection is going to be inadequate. The contrast between an animated front face and the dead sides destroys the immersion of the experience. At the same time, participants in the experience wanted to interact with it. Providing pathways to interact with the castle could be a big win for immersion.

- The little things are the big things. There is a lot of potential delight in thoughtfully-placed, well-executed micro-animations.

The key to all of this is in my ability to improve my approach to projection mapping. As suggested during the feedback session, I decided to pursue an approach where I could fix my projection mapping once.

There were a number of options available to me. The easiest way to decrease my risk and create a positive experience would be to nix the castle and project onto something rigid, static, and reproducible. However, in terms of the RSVP cycle, the castle was a major part of my valuation and was therefore non-negotiable.

The next option to consider was to expedite development on the castle. This was appealing, but only to a point. Castle construction involved the use of power tools. While there’s an upper limit to how fast it is advisable to move when working with a blade spinning at 3500 RPM, increasing the efficiency of my techniques for building the castle (score) without sacrificing safety was a good idea.

My plans for the castle included several hexagonal and several cylindrical towers. With no lathe available (resources) to create dowels, I relied on a process to build my cylindrical towers that included using a hole saw to cut out sections of the tower that I could later stack. This approach allowed me precise control over the tapering at the top and bottoms of the towers. I directly adapted this approach to create my hexagonal towers, by using the radii of the circle to mark out the vertices of the hexagon, then using a bandsaw to cut down to the edges.
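Using a circle to lay out a hexagon works because the side of a regular hexagon equals the radius of its circumscribed circle. The vertices sit 60 degrees apart, so a compass set to the radius can step them out around the circumference. The sketch below computes those vertices so the equal-sides property can be checked. `hexagon_vertices` is a hypothetical helper, not part of any tool named above.

```python
import math


def hexagon_vertices(cx, cy, r):
    """Vertices of a regular hexagon inscribed in a circle of radius r.

    Consecutive vertices are 60 degrees apart around the centre (cx, cy),
    so each side (chord) has length exactly r.
    """
    return [
        (cx + r * math.cos(math.radians(60 * k)),
         cy + r * math.sin(math.radians(60 * k)))
        for k in range(6)
    ]


# For a tower section cut with a 2-inch-radius hole saw, each face is 2 inches wide.
verts = hexagon_vertices(0.0, 0.0, 2.0)
```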

To make this process more efficient, I can use a table saw with the blade set to 30º to do 2 rip cuts down the length of a board to dramatically decrease the time cost for building a hexagonal tower with a manageable increase to safety risk.
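The 30º setting follows from the hexagon's geometry. The interior angle of a regular hexagon is 120°, so the faces next to a horizontal flat sit 60° from horizontal, which is 30° from vertical. The sketch below computes that angle and the matching stock dimensions. It assumes the board's faces become the hexagon's top and bottom flats, and that the saw's bevel scale reads 0° with the blade vertical, as most table saw scales do. It does not try to reproduce the exact number or order of cuts used for the castle. `hex_stock` is hypothetical.

```python
import math


def hex_stock(side):
    """Stock dimensions and blade tilt for a regular hexagonal prism.

    Assumes the hexagon's top and bottom flats are the board's faces, so the
    board thickness equals the flat-to-flat distance (side * sqrt(3)) and its
    width must be at least the corner-to-corner distance (2 * side).
    """
    interior = (6 - 2) * 180 / 6           # 120 degrees for a hexagon
    face_from_horizontal = 180 - interior  # slanted faces sit 60 degrees from the flats
    bevel_from_vertical = 90 - face_from_horizontal  # 30 degrees of blade tilt
    return {
        "thickness": side * math.sqrt(3),
        "rip_width": 2 * side,
        "bevel_deg": bevel_from_vertical,
    }


# A tower with 1-inch faces needs stock about 1.73 in thick and 2 in wide.
dims = hex_stock(1.0)
```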

Beyond improving my building techniques, I was able to decrease the complexity of my castle design. I reduced the overall number of towers in my plan and altered my approach to building rooftops. This reduced the overall risk to my project, but the castle remained a significant risk. Mistakes in the digital space can be undone with a keystroke; mistakes in woodworking (of the non-digit-removing variety) come with a much larger time cost.

For the digital side of Cycle 2, I devised several new approaches to handle my projection mapping. My initial concept was simple enough. Scene 1 would use Get Stage Image to pull images from virtual stages and feed them through projectors pre-mapped for each specific image.
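The "map once, feed anything through it" idea amounts to storing one warp per surface and reusing it for every piece of content. A common way to express such a warp is a four-corner homography, the math behind a typical corner-pin. The sketch below solves for it from four point pairs. It is a generic technique, not a description of how Isadora's projector or virtual stage actors work internally. `corner_pin`, `warp_point`, and the corner coordinates are hypothetical.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner points")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def corner_pin(src, dst):
    """3x3 homography (with h33 = 1) mapping four src corners onto four dst corners."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), rearranged to be linear in h.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def warp_point(H, x, y):
    """Apply homography H to the point (x, y)."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Calibrate once: unit-square content onto a castle face measured in projector pixels.
FACE_CORNERS = [(100, 50), (300, 60), (290, 250), (110, 240)]  # hypothetical
H = corner_pin([(0, 0), (1, 0), (1, 1), (0, 1)], FACE_CORNERS)
```

Once `H` is computed for a face, any video or image in normalized coordinates can be pushed through `warp_point` without re-mapping. That is the reuse the concept was aiming for.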

The top of this page outlines some of the ideas I had for projecting. The bottom half outlines my concept for simplifying my projection mapping.

The execution went a little off the rails. I chose to build this approach by projecting onto a cube instead of the castle with the expectation that I could build the scaffolding with a simple use case and easily scale up my approach with a more complex target surface. Looking back at my initial concept, it seems manageable, but during the execution I got lost in the weeds and couldn’t fully understand what was going on in Isadora. During cycle 2 I had a basic grasp of how to work the Motion Lab media controls, but was not completely fluent. I also struggled to fully understand how a virtual stage actually works. In the end I built a user actor to accept media assets as inputs and tweak the parameters of the target projection.

Early on, this approach was simpler than my initial concept, but it quickly became more complex and unwieldy as I needed to expand the user actor to adapt to my use cases. I was ultimately able to get it to work, but it did not yield a dramatic improvement in efficiency over my Cycle 1 projection mapping approach.

For the Cycle 2 performance, I elected to drop the background projection and focus on the Castle projections. I kept the forward facing projector for simplicity’s sake, electing to add the necessary complexity after tackling the mapping challenges. I changed the castle texture to a style that more accurately captured my intent. I added some delightful micro-animations like flags that illuminated and a fire that flickered in the entry hallway. I stripped the music to allow my castle to stand on its own (though I had full intention of bringing music back during Cycle 3).

I also created fireworks using the 3D particles actor that I did not display until a classmate specifically recommended it. The fireworks were time-consuming to produce in Isadora and the effect was shabby at best. Still, my ultimate plan was to make them an interactive component of the final presentation, so I persisted. The ultimate effect was underwhelming.

During the feedback session for cycle 2, a classmate pointed out that the light bleed on the main projector screen made for a cool effect. I hadn’t noticed this prior to it being pointed out, but I agreed and took note.

Another piece of feedback centered around the sizing of the stones on the facade. It took a lot of effort to bring them in and map them out, but they were turning out to present more trouble than the value they provided.

Cycle 1

Posted: December 10, 2024

Resources

An open face box made of Southern Yellow Pine and pre-primed wood paneling.

A big white box

A roll of paper

Some trash from the Motion Lab

Several boxes to form makeshift parapets

Giant white sheets

Isadora

Blackhole

Youtube

Score

The audience is staged in the Motion Lab entryway. They are invited in to see a large projection of a winter landscape behind a bunch of junk on a table. The junk transforms into a castle when hit by the projection. I achieved this effect by projection mapping an image of a stone wall across the front of the facade. After a period of acclimation, the second scene is triggered and a freezing sound plays over the Motion Lab speaker system. A distorted white bar rises across the facade of the castle, dragging a blue video with a morphing snowflake along with it. “Let It Go” begins to play over the speakers.

The first scene defines all of my interactions. I would later pull these into a user actor that I could easily trigger within my Castle Project scene.

This scene outlines my basic conditions. Each section corresponds to a different piece of the castle. One section projects onto the roof, one handles the stone on the facade. Another projects the door, and yet another is projecting the windows onto the tower with a comment “Why did I do this?” When I finally got it working I felt like it was a minor detail that no one would even notice.

This is the user actor that defines my freeze behavior. When I hit the trigger the castle freezes over and the Frozen music is played.

I was actually pretty proud of this. I used a Gaussian blur on a white rectangle to make the base of the freezing line. Next, I warped this image using projection mapping to give it a more organic appearance. The effect in action was really cool (but I forgot to record it due to nerves, so it lives on only in memory).
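Blurring a white rectangle softens its hard edge into a gradual ramp, which is what makes the freezing line feel less mechanical. The sketch below shows the effect along a single row of pixels that crosses the rectangle's edge, using a normalized 1-D Gaussian kernel. It illustrates the general technique, not Isadora's blur actor. The sigma value and function names are hypothetical.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Normalized 1-D Gaussian weights; radius defaults to 3 * sigma."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, sigma):
    """Blur one row of pixel values, repeating the edge pixels beyond the ends."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(row)
    return [
        sum(k * row[min(max(i + j - radius, 0), n - 1)] for j, k in enumerate(kernel))
        for i in range(n)
    ]


# A hard black-to-white edge becomes a smooth ramp around the boundary.
edge = [0] * 5 + [255] * 5
soft = blur_row(edge, sigma=1.0)
```

A larger sigma widens the ramp. Warping the blurred image afterward, as described above, then bends that soft edge into an organic shape.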

Valuation

I love the projection shows at Walt Disney World. Harmonious at EPCOT was the first show that I ever saw. The tight integration of audio with projection with fireworks and water displays… the mere fact that they’re projecting movie scenes onto water. The whole experience changed me at some deep level. It changed what I assumed was possible. It changed my perspective on fireworks shows, which I had always found to be rather boring displays that I would sometimes feel social pressure to pretend to enjoy. It solidified my desire to become a Disney Imagineer.

For my cycle projects, I wanted to do something that Disney does better than anyone: blend multi-sensory stimuli to create a meaningful, impactful shared experience.

For cycle 1, my plan was to get into the Motion Lab and see what was available to me. I started off with projection mapping. Our first exposure, mapping onto a tilted white cube was quick and fun. How hard can projection mapping be?

As it turns out… Really. Effing. Hard.

After hours of effort I was able to map textures onto the castle and towers reasonably well. This experience shaped my approach to cycle 2.

Performance

This felt like cheating. The performance was well received and both transitions elicited audible reactions from the audience, but I used a beloved song (“Let It Go” from the movie Frozen). I felt people were largely reacting to their love of the song rather than the experience that was put together. Yes, the song is part of the experience, but it did too much of the lifting for me and I didn’t feel like the experience itself was standing on its own merit.

The feedback was really helpful. Classmates noted that they were immersed in the illusion and felt like they could go inside the doorway to the castle (side note – in hindsight I’m really surprised that the projector was able to output black so well).

I received notes about how I could use minor details to create light ambiance and make the experience more engaging. I could create footprints in the snow as audience members walk about. I could build slight animations into the facade of the castle, maybe vines grow over the castle. I could put candles in the portholes on the tower (yes, they were in fact noticed).

The little castle that could. This is the scene that the class walked into. Allowing the audience to see this first gave the second scene greater effect. This scene is a little chaotic, pretty messy, and almost a little sad. The next scene, however…

Not so shabby… The castle with textures projected onto the face. Note the bleed around the main projector screen. I was certain that I had done away with this before the performance. I didn’t notice these artifacts until they were pointed out to me.

Note here that the bleed artifacts have changed. I did not recognize this until posting. The reason for this – the motion lab floor. The floor gives as people walk across it, and this can cause projections to move slightly in response to foot traffic.
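A small movement is enough to shift the bleed because throw distance magnifies it. A tilt of θ at a throw distance d moves the image by roughly d · tan θ. The sketch below works through that arithmetic. The 8 m throw and 0.1° tilt are hypothetical numbers, not measurements from the Motion Lab. The same reasoning applies whether the projector mount or the table holding the castle is what moves.

```python
import math


def image_shift(throw_distance_m, tilt_deg):
    """Approximate shift (in metres) of a projected image when the projector tilts by tilt_deg."""
    return throw_distance_m * math.tan(math.radians(tilt_deg))


# Hypothetical: a 0.1 degree wobble at an 8 m throw moves the image about 14 mm,
# which is plenty to make a tightly trimmed edge bleed.
shift = image_shift(8.0, 0.1)
```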

This is all that was captured during the initial performance of cycle 1. On the castle you can see the semi-transparent snowflake video projected onto the front of the castle.

Initial sketches of the castle concept and notes from feedback session of Cycle 1

Pressure Project 3

Posted: December 10, 2024

Assignment – Use audio to tell a story that is important to your culture (broadly defined)

I actually did this project twice. I got a request to not share my first project online for privacy reasons, so I chose a new story and re-did the project.

The story posted here is arguably among the most important stories worldwide over the last 30 years. Maybe I’m being provocative. Maybe not.

Resources

Blackhole

Youtube

DTMF generator from venea.net

Logic Pro

QRS Reverb

Channel EQ

Score

The six failed connections with variable wait times between dial and busy signal

The successful connection on the seventh attempt

The agonizing wait

The welcome

The first contact

Spindown
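The dialing and busy-signal parts of this score can also be synthesized rather than sourced. The sketch below is a rough version using only Python's standard library (`wave`, `struct`) and assumes an 8 kHz mono WAV. The DTMF row and column frequencies are the standard keypad values. The busy signal uses the North American 480 Hz + 620 Hz pair, half a second on and half a second off. The phone number, tone lengths, and function names are hypothetical. The sketch does not model the modem handshake, the welcome, or the hard drive.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 8000  # telephone-quality sample rate (assumption for this sketch)

# Standard DTMF (low, high) frequency pairs in Hz.
DTMF = {
    "1": (697, 1209), "2": (697, 1336), "3": (697, 1477),
    "4": (770, 1209), "5": (770, 1336), "6": (770, 1477),
    "7": (852, 1209), "8": (852, 1336), "9": (852, 1477),
    "*": (941, 1209), "0": (941, 1336), "#": (941, 1477),
}
BUSY = (480, 620)  # North American busy signal frequencies


def tone(freqs, seconds, amplitude=0.4):
    """Samples in -1..1 for an equal mix of sine waves at the given frequencies."""
    n = int(SAMPLE_RATE * seconds)
    return [
        amplitude * sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE) for f in freqs) / len(freqs)
        for i in range(n)
    ]


def silence(seconds):
    return [0.0] * int(SAMPLE_RATE * seconds)


def dial(number, tone_s=0.1, gap_s=0.1):
    """DTMF tones for each keypad character in `number`; other characters are skipped."""
    samples = []
    for digit in number:
        if digit in DTMF:
            samples += tone(DTMF[digit], tone_s) + silence(gap_s)
    return samples


def busy(cycles):
    """Busy signal: 0.5 s of tone followed by 0.5 s of silence, repeated."""
    samples = []
    for _ in range(cycles):
        samples += tone(BUSY, 0.5) + silence(0.5)
    return samples


def to_wav_bytes(samples):
    """Encode float samples as a 16-bit mono WAV file in memory."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(SAMPLE_RATE)
        w.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))
    return buf.getvalue()
```

A single failed attempt from the score might be `dial("555-0123") + silence(3.0) + busy(4)`. You would repeat it six times with different silence lengths before the successful connection, then write the result with `to_wav_bytes`.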

Valuation

I want to provide a realistic experience, through sound, of logging on to the Internet in the early days. I captured the sounds that might have been heard in my household as I was connecting on a Friday night.

I wanted to convey the frustration of trying to dial into a crowded phone line and the uncertainty of never knowing whether the next attempt would hit, the ambiance of sitting at a stationary machine where you were at the mercy of the nearest phone line, the excitement of finally establishing a successful connection, and most of all, just how slow it all was (within the confines of my time limit — it was actually much slower to sign onto CompuServe in 1996).

I also included hard drive sounds because 1996 computers were loud.

I think this is an important story (though I hesitate to say whether it’s an important story to tell) because in the modern world we are perpetually connected to the Internet. You probably encounter it within the first few minutes of each day and within the last hour before falling asleep. In the early days there was a delayed gratification. “Going online” was an event. You couldn’t take for granted that your friends would be there at the same time as you. And what you sacrificed to go online was in some ways more clear. Your time. Your phone line (unless you were lucky enough to be a two-line family… we were not). The opportunity to be in other locations doing different things. Today the friction to go “eyes on” is so low that we aren’t even necessarily conscious of it. But we must be certain that the sacrifices are still there.

Performance

This was never performed in public. It is an online exclusive.

cycle 3

Posted: December 10, 2024

For the third iteration of my project, I added two elements: a contact mic tracking my heartbeat and a large-scale projection of my blinking eye. I stood in front of the large eye projection and performed my movements with the smaller face projection trained on my face at all times.

The performance lasted 7 minutes, but in the future I could see it being much longer, potentially 30 to 45 minutes. The score of the piece consists of two kinds of movement. The first is my slow, measured blinks, which sync in and out with the blinking of the image projected onto my face and of the large eye projection. The second is the deliberate, slow turning of my head from left to right. My movements are so slow that it takes 7 minutes to turn from center to the right, back to center, and then to the left.

The addition of the contact mic picking up my heartbeat felt vital to the piece. With the help of Michael at MOLA, I was able to narrow in on the low frequencies of my heartbeat and have them play for the duration of the performance. It felt crucial for this to be live sound, as it was evident how much it changed according to what was happening. For instance, it was quite fast at the beginning and then gradually slowed, or it would speed up if someone moved around in the audience. The feedback I got was that it clearly wasn’t pre-recorded. It felt tied to my physical presence in the room, since audience members could see me breathe.
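Narrowing in on the low frequencies of a heartbeat amounts to low-pass filtering the contact mic signal. The post does not describe the exact processing used at MOLA. The sketch below is a generic one-pole low-pass filter, and the 150 Hz cutoff in the usage lines is a hypothetical starting point. A one-pole filter rolls off gently, at about 6 dB per octave, so a mixing-desk EQ would likely cut higher frequencies more steeply.

```python
import math


def low_pass(samples, cutoff_hz, sample_rate):
    """One-pole low-pass filter: passes slow changes, attenuates fast ones.

    Each output moves a fraction `alpha` of the way toward the new input,
    where alpha is derived from the RC time constant of the cutoff frequency.
    """
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = dt / (rc + dt)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


# Hypothetical usage: keep the thump of the heartbeat, suppress higher-pitched handling noise.
sr = 44100
noise = [math.sin(2 * math.pi * 2000 * i / sr) for i in range(sr)]
filtered = low_pass(noise, cutoff_hz=150, sample_rate=sr)
```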

Besides increasing the duration of the piece, something I would consider changing in a future iteration would be providing subtle clues to the audience that they would be allowed to come closer to my body as I performed. Some clues I would consider would be a sign outside of the performance space that said something like “viewers are invited to look closely at the artist, but to not touch her,” or perhaps include a variety of seating at different viewing distances. Especially in the case of a longer durational piece, seating would become an invitation to stay and look. I was also appreciative of the monitor that was provided to me so I could “mark” my movements, and it made me remember that I had at one point considered having a live feed in the space that showed the performance in a different light; perhaps with a time delay? Alex pointed out that that could be a good idea, especially because there were many layers of mediation happening within the performance already.

I am really proud of this piece. It’s an idea that I had early on in the semester, and I’m really glad I stuck with it and spent the whole 3 cycles dedicated to broadening the performance.

Cycle Three

Posted: December 9, 2024

For the third phase of my project, I refined the Max patch to improve its responsiveness and overall precision. This interactive setup lets the user experiment with hand movements as a means of generating both real-time visual elements and synthesized audio. By integrating MediaPipe-based body tracking, the system captures detailed hand and body motion data, which then drive three independent synthesizer voices and the visual components. The result is an integrated multimedia environment in which subtle gestures directly influence pitch, timbre, rhythmic patterns, colors, and shapes. This allows for a fluid, intuitive exploration of sound and image.

Visual component:

Adaptive Visual Feedback:

A reactive visual system has been incorporated that responds to the performer’s hand movements. Rather than serving as mere decoration, these visuals translate the evolving soundscape into a synchronized visual narrative. The result is an immersive, unified audio-visual experience in which the musical and visual elements reinforce each other.

Sound component:

Left Hand – Harmonic Spectrum Shaping:

The left hand focuses on sculpting the harmonic spectrum. Through manipulation of partials and overtones, it introduces complexity and depth to the aural landscape. This control over the harmonic series allows for evolving textures that bring richness and variation to the overall sound.
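As a rough illustration of this kind of control, the Python sketch below turns a single normalized left-hand value into amplitudes for a stack of harmonic partials. In effect, it is a spectral-tilt control for additive synthesis. This is an assumption, not a description of the actual Max patch. The function names, the tilt range of 3.0 to 0.5, and the choice of eight partials are all hypothetical. MediaPipe reports landmark coordinates normalized to the image, with y growing downward, so a hand height would be passed in as something like `1 - y`.

```python
import math

def partial_amplitudes(hand_height: float, num_partials: int = 8) -> list[float]:
    """Hypothetical mapping: normalized hand height (0.0 low, 1.0 high) -> partial amplitudes."""
    hand_height = min(max(hand_height, 0.0), 1.0)  # clamp tracker jitter into [0, 1]
    # Steep roll-off (dark, fundamental-heavy) when the hand is low,
    # gentle roll-off (bright, overtone-rich) when it is high.
    tilt = 3.0 - 2.5 * hand_height
    amps = [1.0 / (n ** tilt) for n in range(1, num_partials + 1)]
    total = sum(amps)
    return [a / total for a in amps]  # normalize so the summed partials never exceed 1.0

def render_additive(freq: float, amps: list[float], sample_rate: int = 44100,
                    seconds: float = 0.01) -> list[float]:
    """Sum sine partials at integer multiples of freq, skipping any at or above Nyquist."""
    nyquist = sample_rate / 2
    partials = [(k * freq, a) for k, a in enumerate(amps, start=1) if k * freq < nyquist]
    n_samples = int(sample_rate * seconds)
    return [
        sum(a * math.sin(2 * math.pi * f * i / sample_rate) for f, a in partials)
        for i in range(n_samples)
    ]

# Example: the same 220 Hz tone with the hand low (dark) versus high (bright).
dark = partial_amplitudes(0.0)
bright = partial_amplitudes(1.0)
samples = render_additive(220.0, bright)
```

An exponent-based tilt is only one option. A patch could just as well scale individual partials from separate finger landmarks.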

Right Hand – Synthesizer Control:

The right hand controls a dedicated synthesizer module. In this role, it manages a range of real-time sound production parameters, including oscillator waveforms, filter cutoff points, modulation rates, and envelope characteristics. By manipulating these elements on the fly, the performer can craft sound lines and dynamically shape the signal.
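The Python sketch below shows one plausible way to turn right-hand tracking data into synthesizer parameters like these. Again, it is an assumption rather than a description of the actual patch. It maps horizontal position to filter cutoff on an exponential curve, so equal hand movements give roughly even perceived changes in the filter. Hand height drives an LFO modulation rate, and an oscillator waveform is picked from a hypothetical "openness" value. The ranges, the `openness` input, and the one-pole smoother are all hypothetical. The smoother is a common way to steady jittery tracking data and is added here only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

WAVEFORMS = ("sine", "triangle", "saw", "square")  # hypothetical oscillator choices

def exp_map(value: float, lo: float, hi: float) -> float:
    """Map a value in [0, 1] onto [lo, hi] exponentially (lo and hi must be > 0)."""
    value = min(max(value, 0.0), 1.0)
    return lo * (hi / lo) ** value

@dataclass
class OnePoleSmoother:
    """Exponential moving average; coeff closer to 1.0 is smoother but laggier."""
    coeff: float = 0.8
    state: Optional[float] = None

    def step(self, x: float) -> float:
        if self.state is None:
            self.state = x  # first frame: jump straight to the input
        else:
            self.state = self.coeff * self.state + (1.0 - self.coeff) * x
        return self.state

def right_hand_params(x: float, y: float, openness: float,
                      cutoff_s: OnePoleSmoother, lfo_s: OnePoleSmoother) -> dict:
    """x, y: normalized image coordinates (y grows downward); openness: 0.0 fist .. 1.0 open."""
    cutoff_hz = cutoff_s.step(exp_map(x, 200.0, 8000.0))
    lfo_rate_hz = lfo_s.step(exp_map(1.0 - y, 0.1, 12.0))  # raise the hand to speed up modulation
    openness = min(max(openness, 0.0), 1.0)
    index = min(int(openness * len(WAVEFORMS)), len(WAVEFORMS) - 1)
    return {"cutoff_hz": cutoff_hz, "lfo_rate_hz": lfo_rate_hz, "waveform": WAVEFORMS[index]}

cutoff_s, lfo_s = OnePoleSmoother(), OnePoleSmoother()
frame = right_hand_params(0.5, 0.25, 1.0, cutoff_s, lfo_s)
```

Smoothing trades a little latency for stability. Since responsiveness was a goal of this phase, a lower `coeff` would keep gestures feeling more immediate.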

Cycle 3

Posted: December 8, 2024 Filed under: Uncategorized

For cycle 3, I continued my project from cycles 1 and 2 to make a finished piece. At the end of cycle 2, I had most of the models done and compiled into a scene that people could look around. For cycle 3, I finished making the rest of the models, textured everything, animated it, added lighting, and then did some sound design.

For the models, at the end of cycle 2 I had most of the big furniture pieces done, but I still had to make the smaller props that would be animated and tell the story. These props included playing cards, paper clips, a mug, a stuffed bear, a lawn mower, and the tic-tac-toe board. All of them carry significant memories of my grandmother for me and represent some of the things I remember interacting with most in her house. They are fairly generic. Without the context of the house, the background story, or additional elements in the scene, they would seem like ordinary objects without much significance. But that’s part of the magic of memories connected to material things, so I wanted to refrain from adding too much explicit narrative.

When texturing everything in the scene, I tried to balance how the objects looked in real life with a simpler, stylized version built from color and simple patterns. This abstraction was meant to make the space relatable to more people’s memories while staying connected to my own.

The animations were relatively simple. I wanted to add life and movement to the objects in my scene to show them being used, and then have that use fade away at the end: playing a game with the playing cards, drawing tic-tac-toe in the fluffy carpet, getting a drink from my special mug, making paper clip necklaces, and hearing the riding lawn mower outside.

While the objects told the story of the space when used, I really wanted the lights to tell the story of the house over time. Everything starts out in golden hour with warm colors, then fades to blue as my time spent there dwindled, and then fades to gray at the end.

I took a more abstract approach to the sound design as well. I had originally wanted to use some voicemails I have from my grandmother and some other recordings. When I put them in the scene, though, hearing a person’s voice felt too jarring and disrupted the serenity of the scene. I tried a different route, having written words fade in and out of the scene, but I felt the same way about those. Both options added too much direct narration to what was happening, and I wanted to leave it more open. Some of this might have come from how strongly these things live in my memory. However, I wasn’t able to separate my personal feelings toward them from the effect the piece would have on other people, so I thought it best not to include them at all. Instead, I added some ambient sound and the sounds of objects in the room to fill the space and round it out.

Overall, I really enjoyed this creative experience. I liked dividing my work into three cycles and pushing through different phases of the project as I went. The smaller chunks made the work feel more manageable, and I was able to work through different ideas without worrying about the end product all the time. Visually, this project turned out much as I envisioned at the beginning, but conceptually it turned out much more abstract than I had originally intended. One of the things I wanted to explore when I started back in cycle 1 was making projects and telling stories that had personal significance to me. I think the abstraction and lean away from direct narrative came in part from a hesitance to share. It’s one thing to talk about these stories in a classroom setting. It’s another to build a world and put a visualization of your thoughts, feelings, and memories on screen for everyone to look at and dissect. In the end, though, I think this was a great first trial run of telling this type of story, and I definitely feel more comfortable doing so now than I did when I started.

Feedback:

One of the biggest pieces of feedback I got was a desire for more of the original stories I had proposed to be incorporated into the work. There was a desire for a more emotional work and to lean further into those previous ideas. I really agree with this feedback and if I could go back and do a fourth cycle of this, that would probably be what I focused on. I struggled in this project to figure out how to balance my own memories with the meaning I was instilling into the objects and how much of that I wanted to show explicitly.

There was also still a strong desire for the project to be more interactive, with the ability to move around the space (possibly in VR). Putting this space in a more interactive environment is entirely possible and would add a lot of interesting elements. However, I chose this medium because I wanted to explore something I didn’t often see in media. It isn’t as advanced or interactive as VR would be. Even so, the underlying language and storytelling abilities of 360-degree video were something I hadn’t seen much of before and was excited about. It would be interesting to compare the experience I made with the same scene in VR and see how people react to the space.

I was happy to hear that people enjoyed it and watched it multiple times to try to catch all the little things happening in the scene. The intention came through!