Pressure project 3 – sharing a wandering?

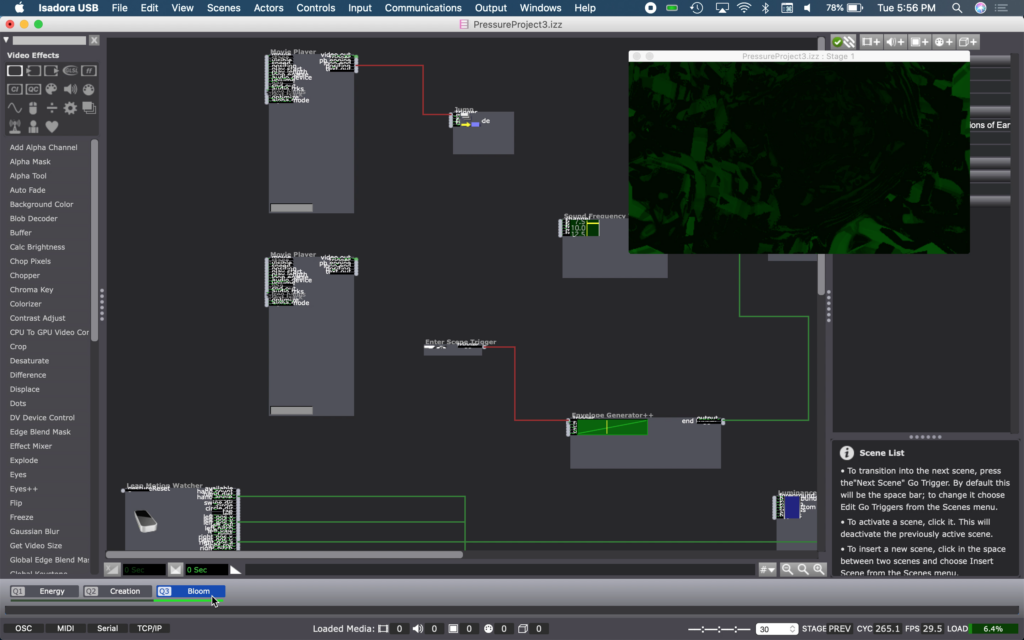

Posted: March 5, 2024 Filed under: Pressure Project 3

When designing this experience in response to the prompt “mystery is revealed”, my thought was that I didn’t want to be the one in control of revealing the mystery; I wanted to send the participants on a journey where they disclose the mystery for themselves.

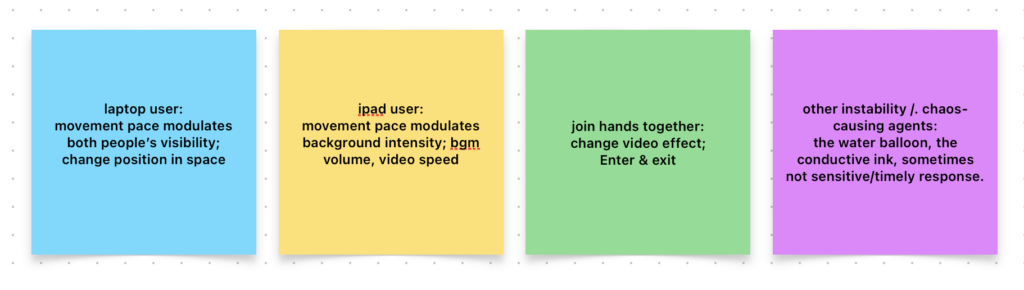

I am interested in what shared agency and reciprocal relationships can do, so I devised this experience where the duet has to work together to decide the journey for themselves. I am also interested in haptic experience, so some triggers require the duet to hold hands. The experience is thus co-created by both participants and some random factors.

I thought of using the green screen feature from Zoom to carve the human figure out and composite it onto the video background. I think I started with the idea of using Zoom quite spontaneously, even without knowing exactly how I wanted to use it, probably because of the past three years of weekly dancing on Zoom…

The videos themselves are composed of a couple of clips that I took with my phone years ago. To me, all these clips share a kind of texture that feels visceral and has the potential to touch the unconscious. That’s why I chose this specific imagery for this project, whose theme is related to mystery.

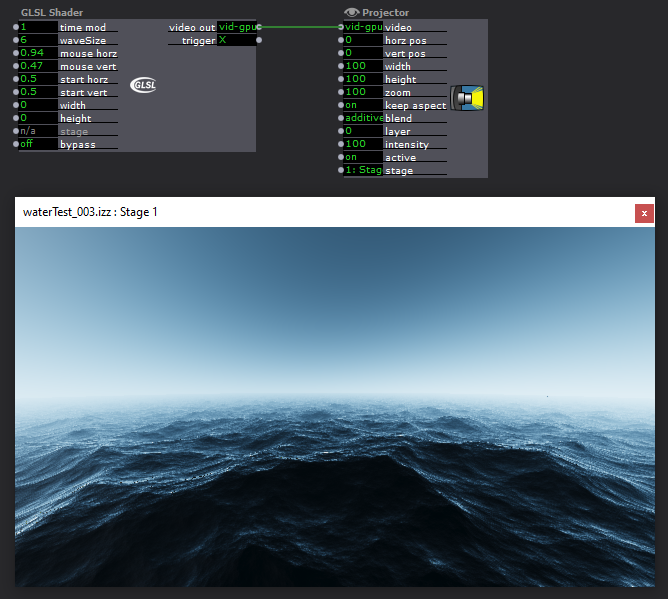

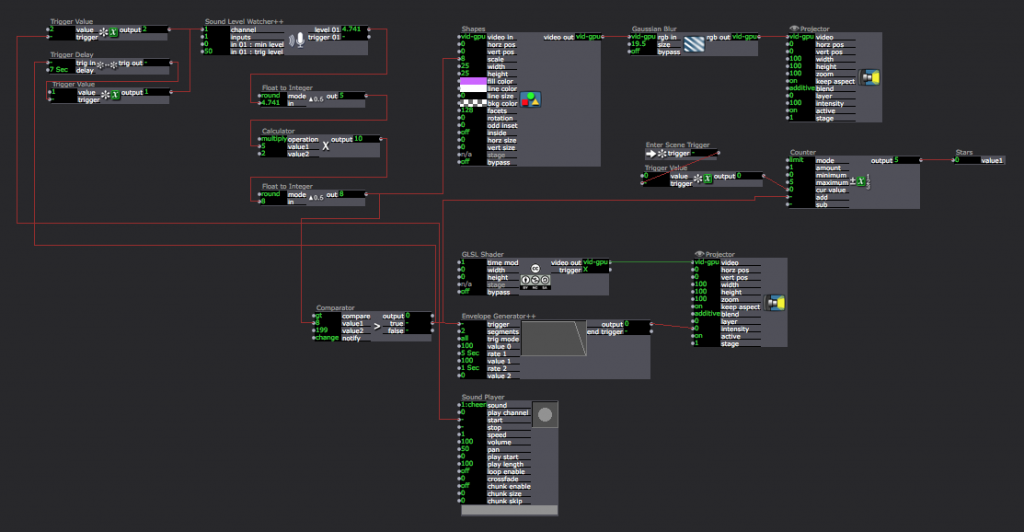

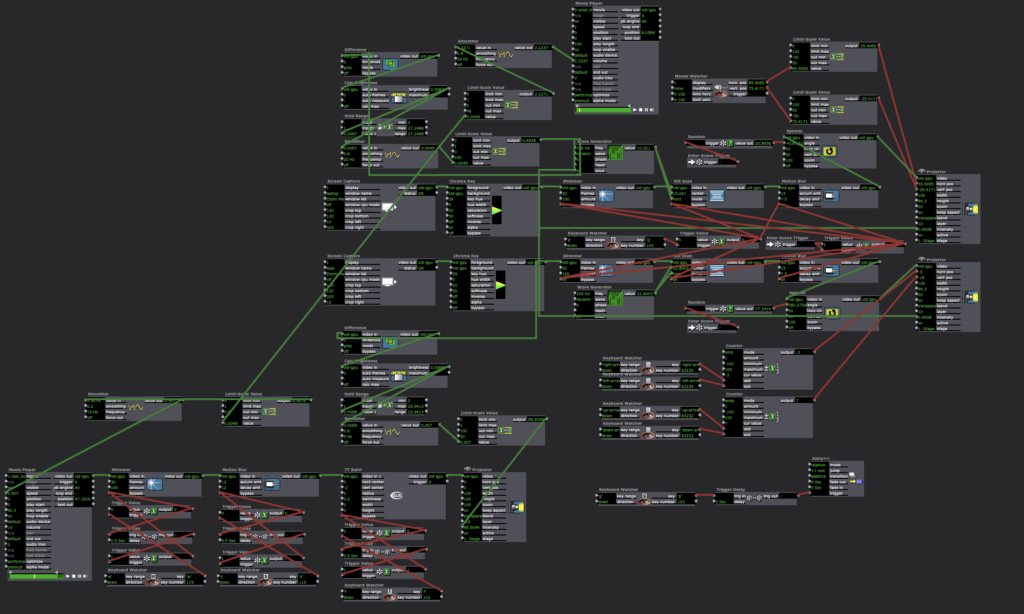

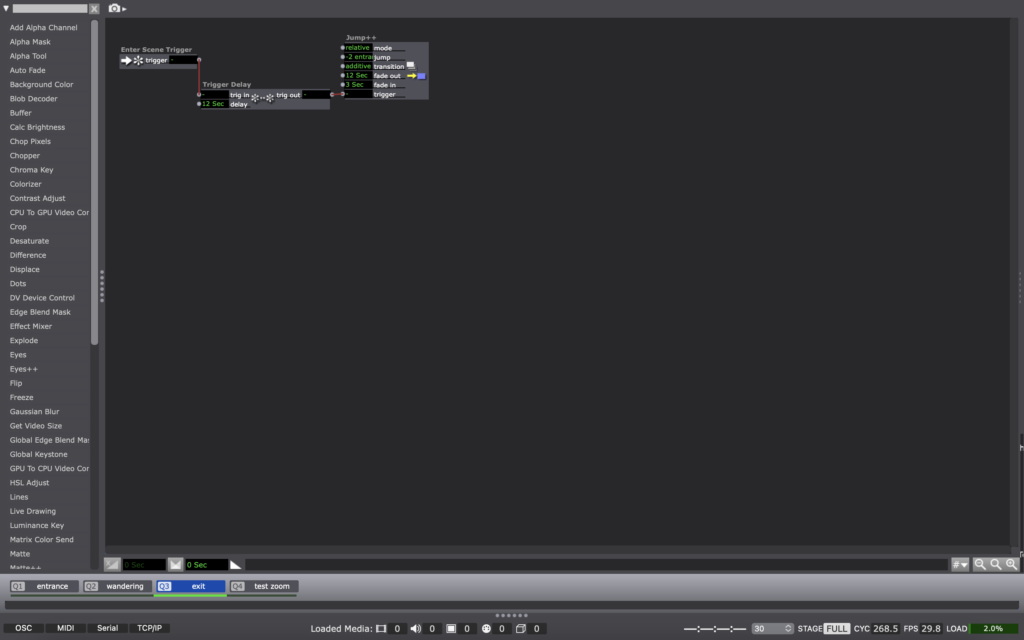

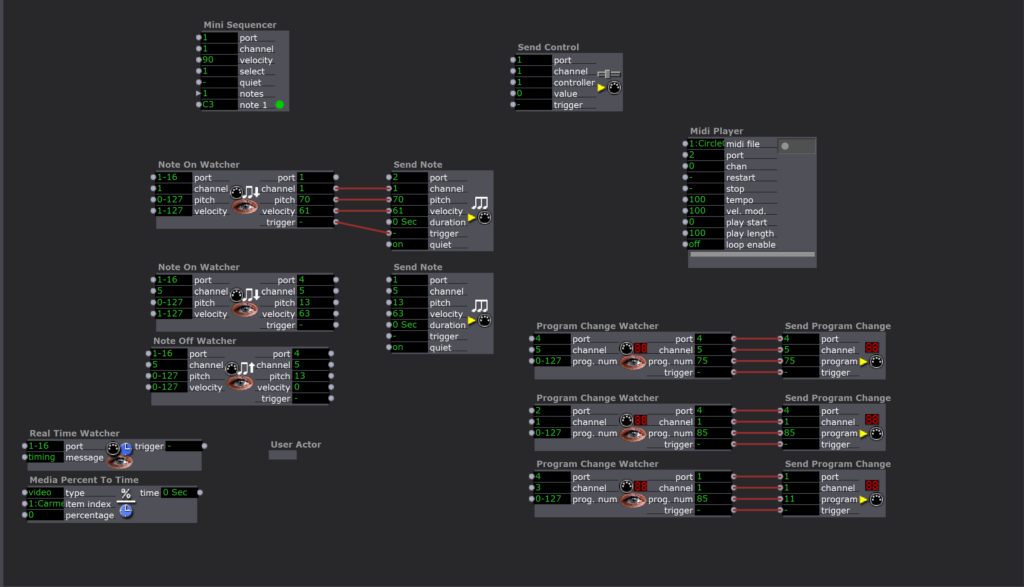

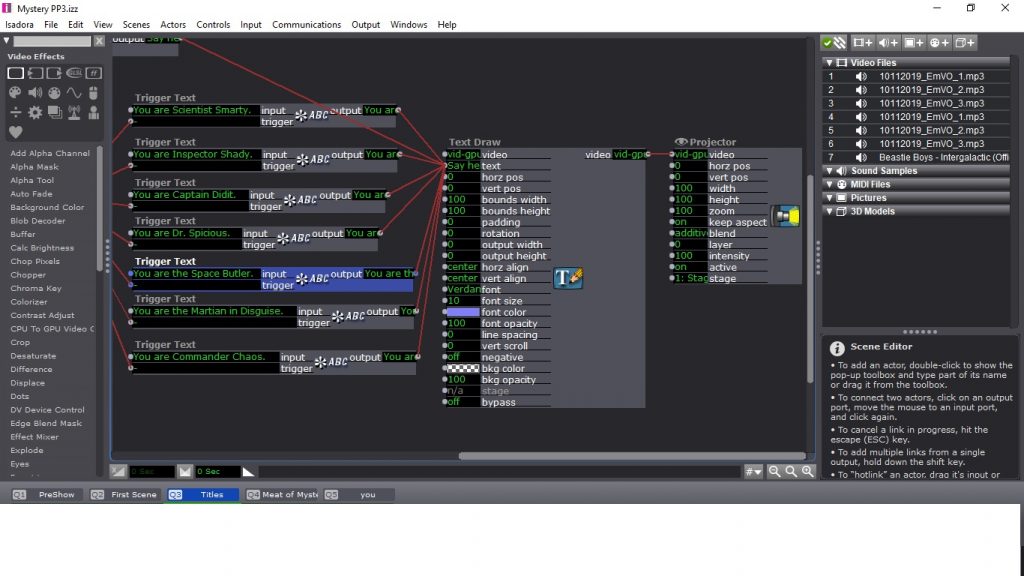

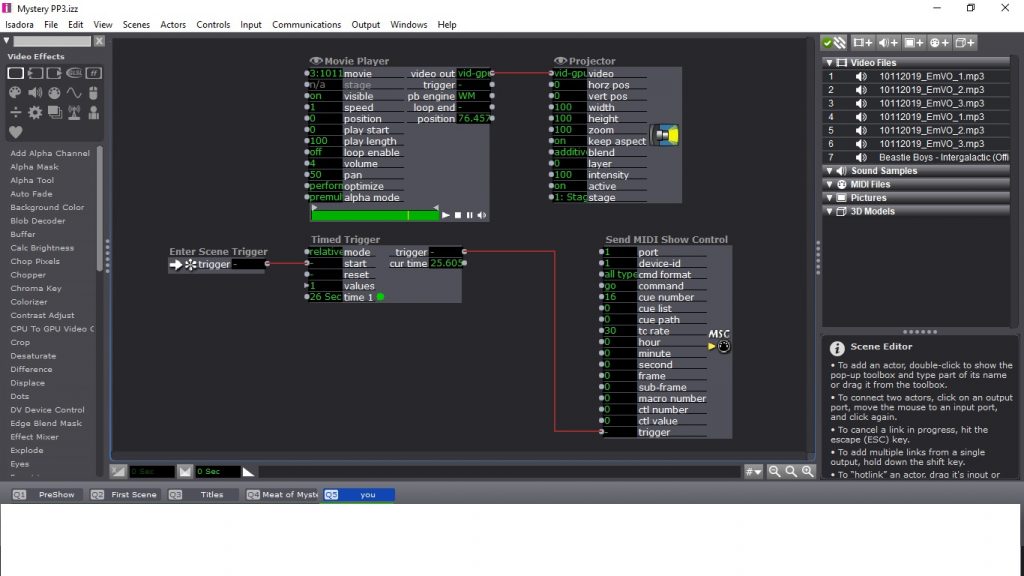

Isadora patch:

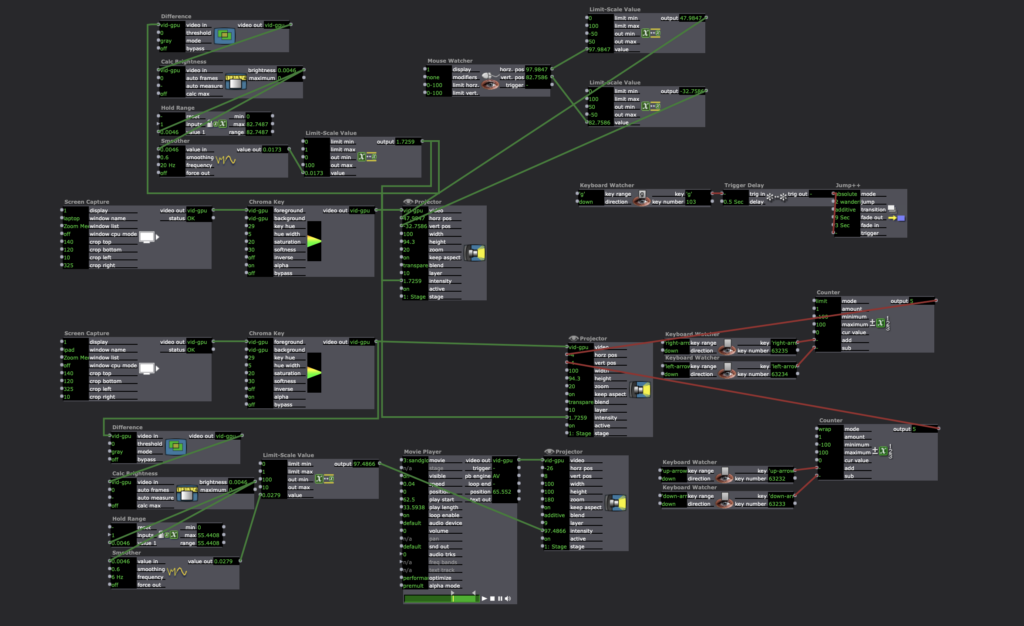

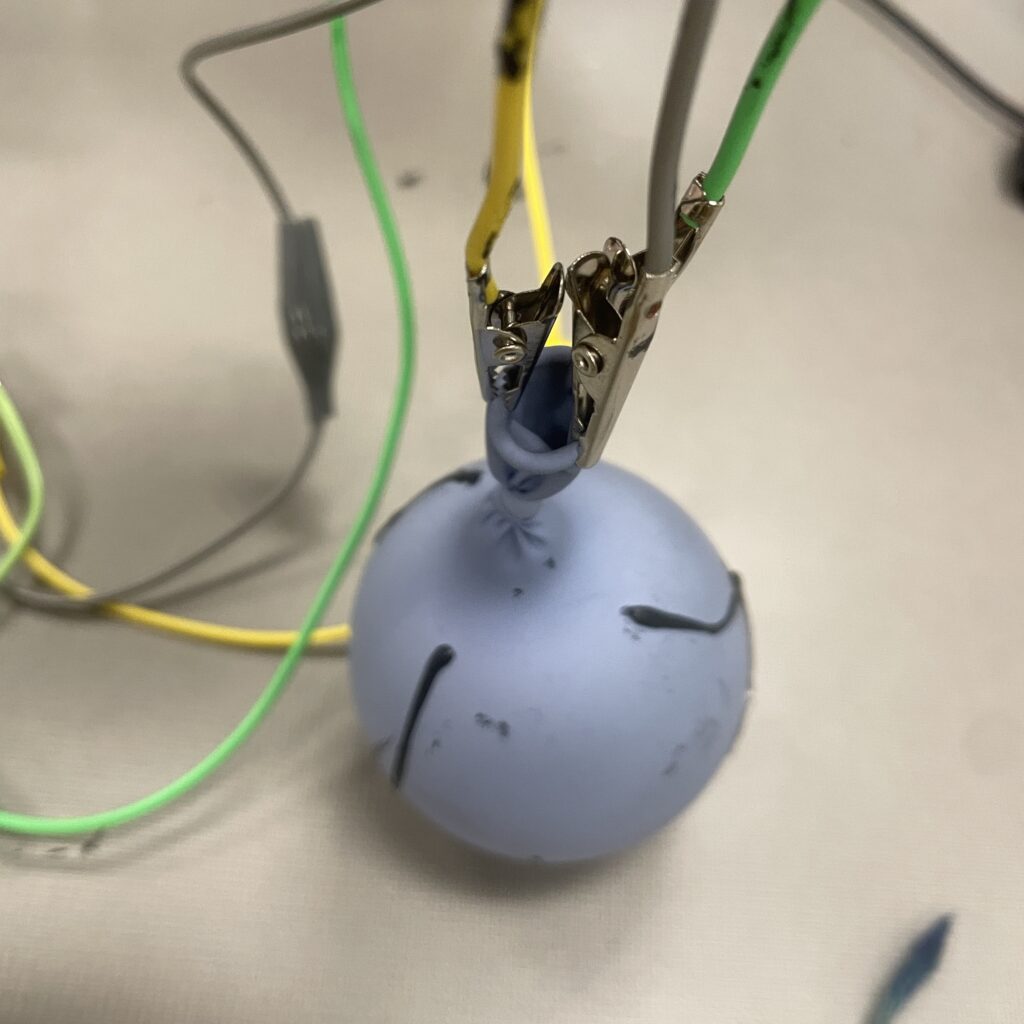

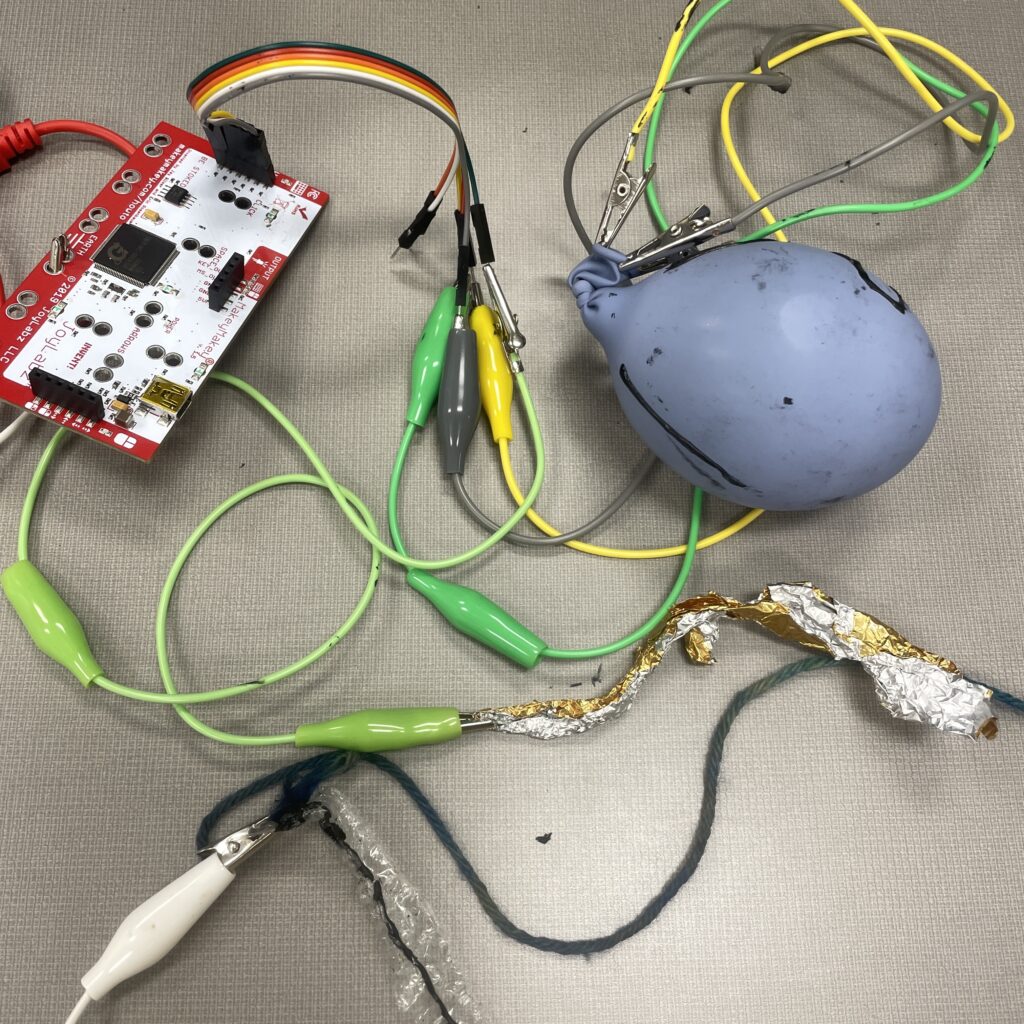

Makey Makey connection:

I kind of appreciate the time constraint of 10 hours; it takes the pressure off me by pushing me to make quicker decisions and to let go when I was wavering between too many ideas.

Several things I bumped into but have not yet figured out, and am interested in exploring further:

– how to elicit and engage with the fragile and unstable side of technology. I found the use of a water-soaked thread as a conductor intriguing: it is uncertain how long the water takes to evaporate, at which point the thread stops being conductive. The same goes for the conductive ink: after I painted it onto the plastic strap, the ink tended to break easily somewhere in between. Also the design of the water balloon part… The fragility and uncertainty here are fascinating to me, but I haven’t figured out how to engage with them more intentionally.

– another desire I had was to have the participants touch the webcam (thinking about the relation between the visual and the haptic), but I dropped the idea because it was too much to incorporate here.

– and how to communicate such interaction to the audience, and how verbal or nonverbal communication have different affects.

PP2/PP3: Musicaltereality

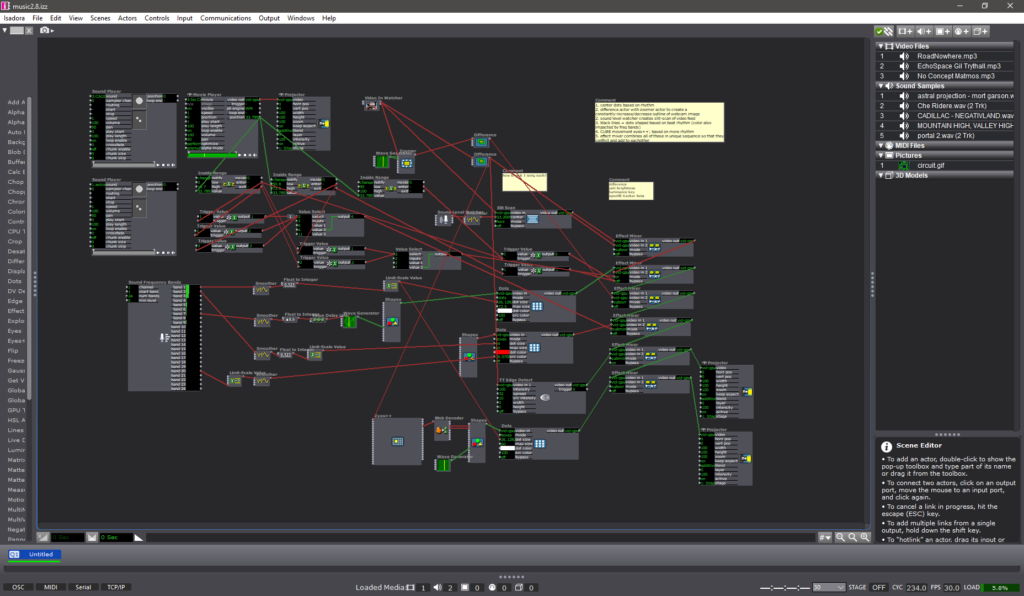

Posted: October 29, 2023 Filed under: Arvcuken Noquisi, Pressure Project 2, Pressure Project 3, Uncategorized

Hello. Welcome to my Isadora patch.

This project is an experiment in conglomeration and human response. I was inspired by Charles Csuri’s piece Swan Lake – I was intrigued by the essentialisation of human form and movement, particularly how it joins with glitchy computer perception.

I used this pressure project to extend the ideas I had built from our in-class sound patch work from last month. I wanted to make a visual entity which seems to respond to and interact with both the musical input and the human input (via camera) that it is given, to create an altered reality that combines the two (hence musicaltereality).

So here’s the patch at work. I chose Matmos’ song No Concept as the music input, because it has very notable rhythms and unique textures which provide a great foundation for the layering I wanted to do with my patch.

Photosensitivity/flashing warning – this video gets flashy toward the end

The center dots are a constantly-rotating pentagon shape connected to a “dots” actor. I connected frequency analysis to dot size, which is how the shape transforms into larger and smaller dots throughout the song.

The giant bars on the screen are a similar setup to the center dots. Frequency analysis is connected to a shapes actor, which is connected to a dots actor (with “boxes” selected instead of “dots”). The frequency changes both the dot size and the “src color” of the dot actor, which is how the output visuals are morphing colors based on audio input.

The motion-tracking rotating square is another shapes-dots setup which changes size based on music input. As you can tell, a lot of this patch is made out of repetitive layers with slight alterations.

There is a slit-scan actor which is impacted by volume. This is what creates the bands of color that waterfall up and down. I liked how this created a glitch effect, and directly responded to human movement and changes in camera input.

There are two difference actors: one of them is constantly zooming in and out, which creates an echo effect that follows the regular outlines. The other difference actor is connected to a TT edge detect actor, which adds thickness to the (non-zooming) outlines. I liked how these add confusion to the reality of the visuals.

All of these different inputs are then put through a ton of “mixer” actors to create the muddied visuals you see on screen. I used a ton of “inside range”, “trigger value”, and “value select” actors connected to these different mixers in order to change the color combinations at different points of the music. Figuring this part out (how to actually control the output and sync it up to the song) was what took the majority of my time for pressure project 3.

I like the chaos of this project, though I wonder what I can do to make it feel more interactive. The motion-tracking square is a little tacked-on, so if I were to make another project similar to this in the future I would want to see if I can do more with motion-based input.

Pressure Project 3

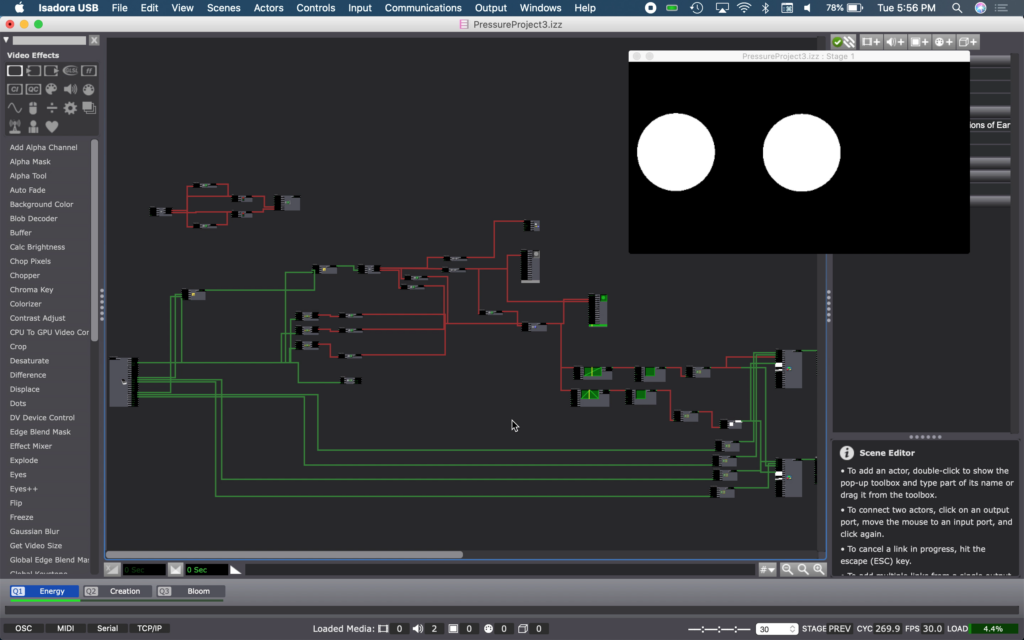

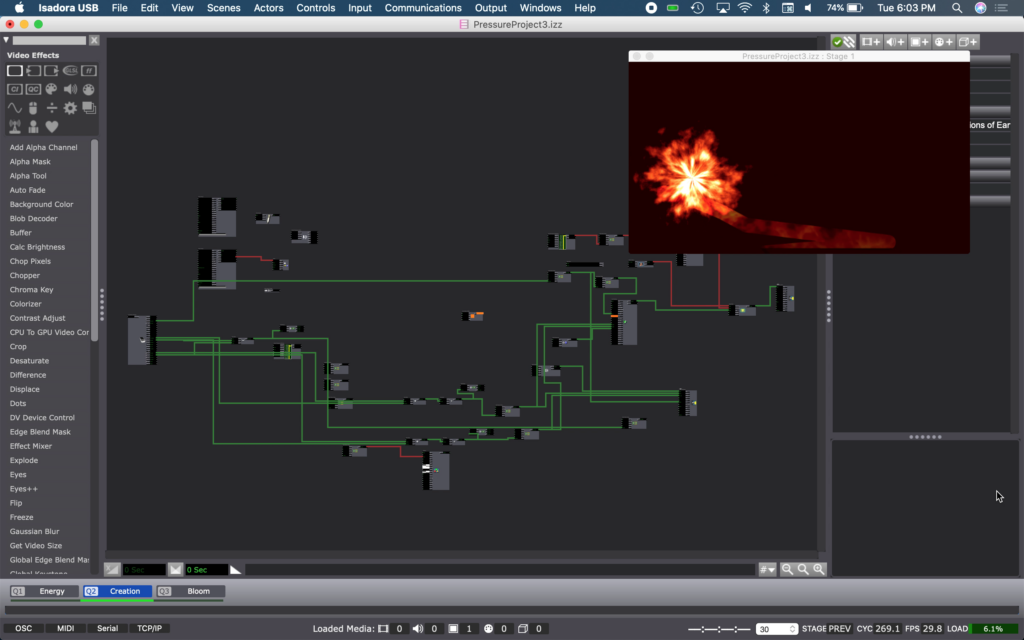

Posted: March 21, 2021 Filed under: Nick Romanowski, Pressure Project 3 | Tags: Nick Romanowski

For Pressure Project 3, I wanted to tell the story of the creation of earth and humanity through instrumental music. One of my favorite pieces of media about the creation of earth and humanity is a show that once ran at EPCOT at Walt Disney World. The nighttime spectacular was called Illuminations: Reflections of Earth and used music, lighting effects, pyrotechnics, and other elements to convey different acts showcasing the creation of the universe and our species.

I took this piece of music into Adobe Audition and split the track up into different pieces of music and sounds that could be manipulated or “visualized” in Isadora. My idea was to allow someone to use their hands to “conduct” or influence each act of the story as it plays out through different scenes in Isadora. The beginning of the original score is a series of crashes that get more and more rapid as time approaches the big bang and kicks off the creation of the universe. Using your hands in Isadora, you become the trigger of that fiery explosion. As you bring your hands closer to one another, the crashes become more and more rapid until you put them fully together; you then hear a loud crash and screech and immediately move into the chaos of the second scene, which is the fires of the creation of the universe.
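The hand-distance mapping described above can be sketched outside Isadora. This is only an illustration of the idea, not the patch itself: the function names, the distance range, and the interval values are all hypothetical, and the distance is assumed to arrive as a single number (for example, millimetres between the two palms).

```python
def crash_interval(distance, max_distance=400.0, slowest=2.0, fastest=0.1):
    """Map hand distance to seconds between crashes (closer hands, faster crashes).

    All numeric defaults are made-up illustration values.
    """
    # Clamp the normalised distance to the range 0..1.
    t = min(max(distance / max_distance, 0.0), 1.0)
    # Linear interpolation: t = 1 gives the slowest rate, t = 0 the fastest.
    return fastest + t * (slowest - fastest)


def hands_together(distance, touch_distance=20.0):
    """True when the hands are close enough to count as 'fully together'."""
    return distance <= touch_distance


if __name__ == "__main__":
    for d in (400, 300, 200, 100, 10):
        action = "final crash, jump to Act 2" if hands_together(d) else "keep crashing"
        print(d, round(crash_interval(d), 2), action)
```

A linear mapping is the simplest choice; a curve that speeds up more sharply near the end might feel closer to the build-up in the score.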

Act 2 tracks a fireball to your hand using the Leap Motion. The flame effect is created using a GLSL shader I found on Shadertoy. A live-drawing actor allows a tail to follow it around. A red hue in the background flashes in sync with the music. This was an annoyingly complicated thing to accomplish. The sound frequency watcher that flashes the opacity of that color can’t listen to music within Isadora. So I had to run my audio through a movie player that outputted to something installed on my machine called Soundflower. I then set my live capture audio input to Soundflower. This little path trick allows my computer to listen to itself as if it were a mic/input.

Act 3 is the calm that follows the conclusion of the flames of creation. This calm brings green to the earth as greenery and foliage take over the planet. The tunnel effect is also a Shadertoy GLSL shader. Moving your hands changes how it’s positioned in frame. The colors also have opacity flashes timed with the same Soundflower effect described in Act 2.

I unfortunately ran out of time to finish the story, but I would’ve likely created a fourth and fifth act. Act 4 would’ve been the dawn of humans. Act 5 our progress as a species to today.

Pressure Project 3 (Maria)

Posted: March 12, 2021 Filed under: Maria B, Pressure Project 3, Uncategorized

I approached this project wanting to tell a fictional, widely recognized narrative that I knew well. So I chose to tell the story of The Lion King. My initial plan for this pressure project ended up being a lot more than I could chew in the allotted 9 hours. I wanted to bring in MIDI files that told the story through sound, and map out different notes to coordinate with the motion and color of visuals on the screen (kind of like what was demonstrated in the Isadora MIDI Madness boot-camp video). After finding MIDI versions of the Lion King songs and playing around with how to get multiple channels/instruments playing at once in Isadora, I realized that trying to map all (or even some) of these notes would be WAY too big of a task for this assignment.

At this point, I didn’t have too much time left, so I decided to take the most simple, straightforward approach. Having figured out the main moments I felt necessary to include to communicate the story, I went on YouTube and grabbed audio clips from each of those scenes. I had a lot of fun doing this because the music in this movie is so beautiful and fills me with so many memories 🙂

- Childhood

  - Circle of Life

  - Just can’t wait to be King

  - “I laugh in the face of danger”

- Stampede

  - Scene score music

  - “Long live the king”

  - “Run, run away and never return”

- Timon and Pumbaa

  - First meet

  - Hakuna Matata

- Coming Back to Pride Rock

  - Nala and Simba Reunite

  - Can You Feel the Love Tonight

  - “simba you have to come back” “sorry no can do”

  - Rafiki/omniscient Mufasa “Remember who you are”

  - Simba decides to go back

- The Battle

  - Score music of sad pride lands/beginning of fight

  - “I killed Mufasa”

  - Scar dies

  - Transition music as Pride Rock becomes sunny again -> Circle of Life

I took this as an opportunity to take advantage of scenes in Isadora and made a separate scene for each audio clip. The interface for choosing the correct starting and ending points in the clip was kind of difficult; it would definitely be easier to do in Audition, but this was still a good learning experience.

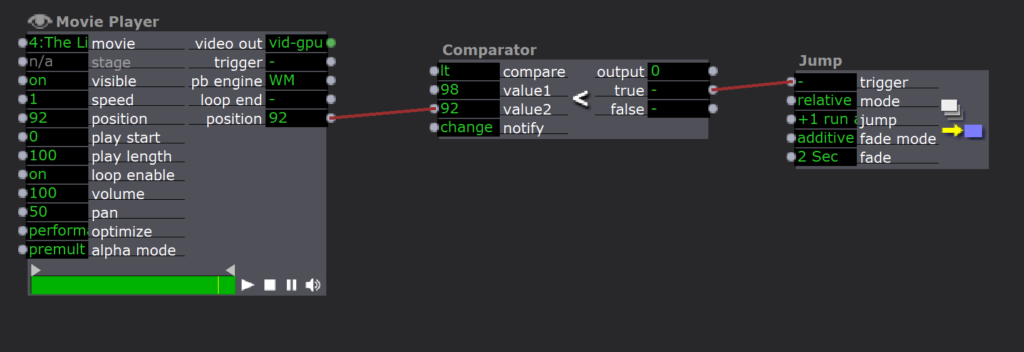

I used MP3 files since they act as movie files, and the movie player actor has outputs while the sound player does not. I determined the correct starting position using the ‘position’ input (and sometimes using a ‘Time to Media Percent Actor’ if I already knew exactly where in the clip I wanted to start). I connected the position output to a comparator that triggered a Jump actor to go to the next scene when the position value (value 2) went above the value I inputted (value 1).
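For readers who don't have Isadora open, the comparator-and-jump logic above can be approximated in a few lines of Python. This is a rough simulation under assumptions, not the actual patch: the `Clip` fields, the step size, and the example percentages are hypothetical, and `time_to_percent` only imitates what a time-to-percent conversion would do.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str             # scene name (hypothetical label)
    start_percent: float  # 'position' input: where playback starts, 0-100
    end_percent: float    # comparator value 1: where the scene should end


def time_to_percent(seconds: float, duration: float) -> float:
    """Convert a time in seconds to a percentage of the clip's duration."""
    return seconds / duration * 100.0


def should_jump(position: float, threshold: float) -> bool:
    """Comparator: fire once the position (value 2) goes above the threshold (value 1)."""
    return position > threshold


def play(clips, step=0.5):
    """Simulate playback and return the scenes in the order they were visited."""
    visited = []
    for clip in clips:
        visited.append(clip.name)
        position = clip.start_percent
        # Advance the playhead until the comparator fires, then 'jump' to the next scene.
        while not should_jump(position, clip.end_percent):
            position += step
    return visited


if __name__ == "__main__":
    scenes = [Clip("Circle of Life", 10.0, 12.0), Clip("Stampede", 40.0, 41.5)]
    print(play(scenes))
```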

Here is the final result:

I wasn’t super proud of this at first because I didn’t feel like I took enough creative liberties with the assignment. However, when I shared it in class, it seemed to evoke an emotional response from almost everyone! It really demonstrated the power music has to bring up memories and emotions, especially when it is something so familiar. Additionally, it showed the power of a short music/audio clip to tell a story without any other context–even the people who weren’t super familiar with the movie were able to gain a general sense of the story arc.

Pressure Project 3 (Sara)

Posted: March 11, 2021 Filed under: Assignments, Pressure Project 3, Sara C

I plucked out the story I wanted to tell and the accompanying audio I wanted to use within half an hour of beginning. If only the remaining eight and a half hours spent on Pressure Project 3 had progressed as fluidly. Oh, well, Lawrence Halprin tells us “one of the gravest dangers we experience is the danger of becoming goal-oriented;” I don’t think anyone would accuse me of forgoing the process in favor of a goal over the course of this meandering rumination on how I’ve grappled with 3/11 in the ten years since the disaster.

First, the parts of the assignment that flowed easily:

Alex told us the deadline was Thursday, March 11. 3/11 marks the tenth anniversary of the Tōhoku earthquake and tsunami that in turn led to the Fukushima Daiichi Nuclear disaster. I was completing an undergraduate study abroad program in Shiga Prefecture at the time. As the earthquake struck and the tsunami was thundering towards the shore, I was shopping for a pencil case at a mall near Minami-Hikone Station. I was utterly oblivious to the devastation until I walked into my host family’s living room and saw a wave sweeping across airport runways.

So, yes, when Alex told us the deadline for this project was March 11, I knew there was only one “story of significant cultural relevance” I could tell.

After my study abroad program was summarily canceled, and we were forced to scramble for airfare back to the States, I spent a good deal of time adrift. Somewhere along the way, I decided I wanted to get in my car and head west. So I did. I drove west for a week, making it as far as the Badlands of South Dakota before turning around. Sometime later, I took a creative nonfiction course and wrote a piece about grappling with memories, grief, and coming unmoored. The writing was selected for my university’s literary journal, and so the audio you hear is my voice from all those years ago.

Now, onto process:

The piece was more than five minutes long, so I dumped the file into Audition and pruned it to meet Alex’s 3.5-minute requirement. Ensuring that the work still told a cohesive story after excising large swaths of it took half an hour.

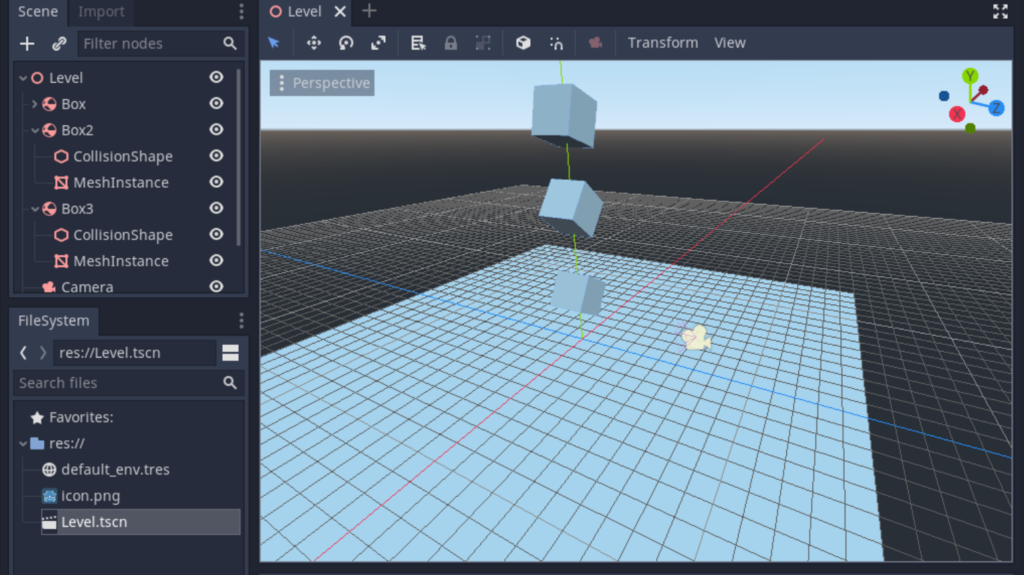

After that is where things became tricky. We were told we didn’t have to use Isadora. I’ve been interested in dabbling with Godot for our upcoming Cycles project, so I was tempted to get a head start and move directly into using the software. I jotted down the following notes:

“User plays with water while listening to audio?”

“3D water?”

Alex graciously explored GLSL shader options in Isadora with me. Unfortunately, I was 1.) stubborn and interested in Godot, and 2.) felt personally removed and emotionally remote from the visual experience. The waves were lovely, but I didn’t mold them myself. I wanted to dig my hands in the electronic clay.

I started watching Intro to Godot videos and quieted any voices in my head wondering if I was falling too far into the weeds. Four hours and fifteen minutes later, I had an amorphous blob of nothing. I wrote the following note:

“Do I press forward with Godot? Do I return to Isadora? Do I crawl back to my roots and make an animatic?”

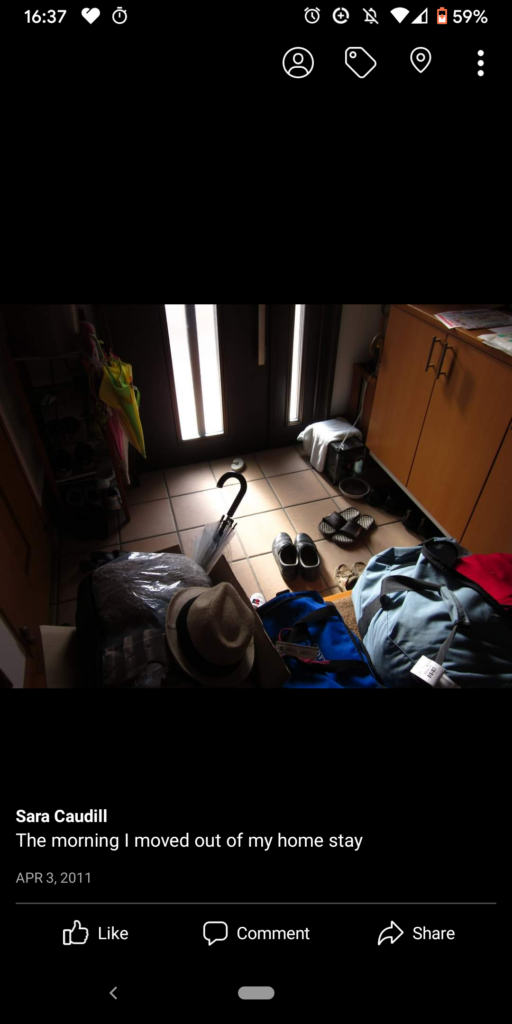

A few months ago, I had dabbled in 3D panoramas in Photoshop using equirectangular projections. I thought that perhaps that might be a way to bring the audience closer to the story without the kitsch of “splash around in fake water with your Leap Motion while listening to young, lost Sara.” I didn’t want to Google “3/11 Triple Disaster” and sift through the wreckage like a disaster tourist, so I instead looked up photos of Lake Biwa. The school where I studied squatted right on its shore. The Google results felt inert, so I then cracked open my defunct Facebook and went back to the albums I haven’t looked at in years. I found a photo of the entryway at my homestay strewn with boxes, luggage, and shoes. The caption read: “The morning I moved out of my homestay.”

Even after all this time, it hit me like a punch to the gut. I immediately started sketching it. I didn’t know what I was actually going to do with it, but drawing was a visceral, physical relief.

After making a messy sketch, I played with palettes. Eventually, I settled on the Japanese national red as the mid-tone and bumped the lights and darks around from there. I got even messier. I wanted the colors to sort of hurt the eyes; I wanted the whole thing to feel smudgy.

All right.

I had an audio clip.

I had an illustration.

Now what?

I found more old photos. I sketched more. I emailed my host family. I cried. Eventually, I found myself with half an hour to go and disparate, disconnected images and sounds. Finally, I listened to the wind outside the trees, thought about how it sounded like waves along the shore, and jumped into Premiere to make an audio-visual composition.

I don’t really feel like I created this work so much as wrenched it loose. Truthfully, I’m grateful for the opportunity.

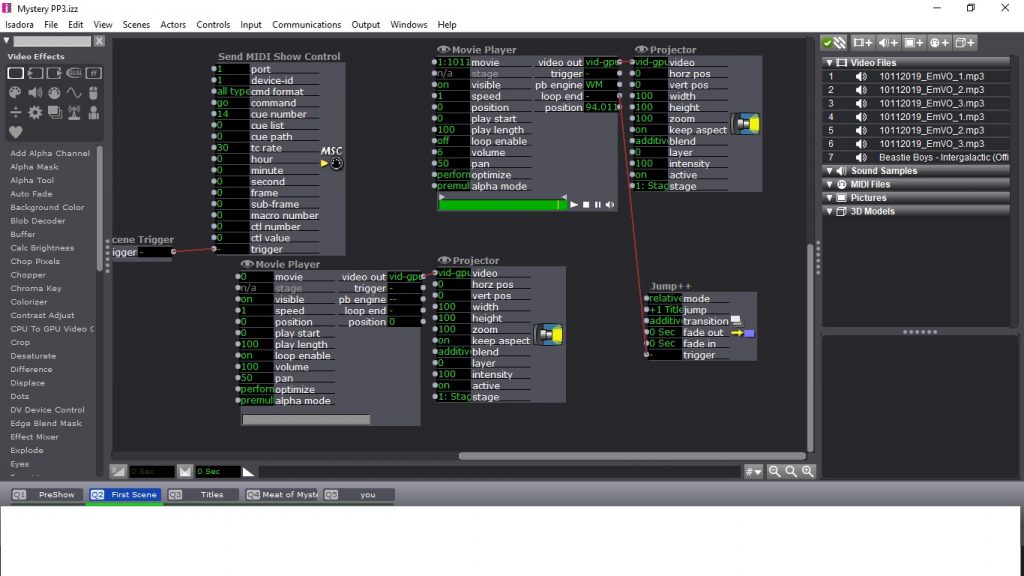

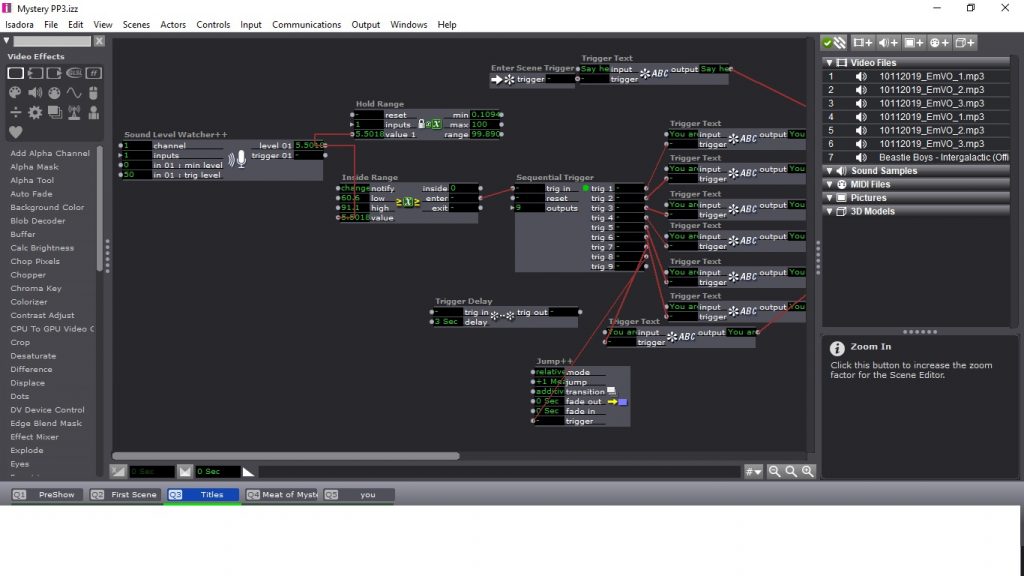

murder on the mission to mars

Posted: October 20, 2019 Filed under: Emily Craver, Pressure Project 3

My immediate thought as to what to create for our third Pressure Project involving mystery was Murder Mystery Party and the Clue movie. Should I randomize three minutes’ worth of clips from the movie as a guessing game? Can I assign people to be the characters and initiate a live-action re-staging of the movie? Then I realized that I might as well create my own mystery: a mystery on a mission to Mars.

Excited to explore lighting elements through a Send Midi Show Controller as well as how to assign characters in real-time via a sound level watcher and inside range actor, I created a four-scene interactive experience in which the audience acts as the first adventurers en route to Mars, interrupted by murder. The three-minute time limit kept me from creating an entire narrative delving into these characters, but it also creates an interesting set-up for the audience. Mars seems to be the focus until the story shifts. I’m interested in creating more of the context around why/who/how, and feedback from my classmates implied that there was some curiosity around details.

PP3 – Puzzle Game

Posted: November 8, 2018 Filed under: Pressure Project 3

For Pressure Project 3, I decided to create a game reminiscent of games like The Talos Principle and The Stanley Parable. These games are unique in that they explore themes of determinism and free will, and the ending you receive is based on how you perform in light of the choices you make in the game. Certain choices made following the instruction of the narrator/narration will lead you to a somewhat lesser ending than one of disobedience and self-determination. The links below highlight the endings of the games where these themes are evident.

Talos Principle: https://www.youtube.com/watch?v=g5FloMq9Lck

https://www.youtube.com/watch?v=-mNa2csiD8E

Stanley Parable: https://www.youtube.com/watch?v=w3UxRa_-9UU

Given the time constraint, I decided to make a game with the puzzle goal of unlocking when the user successfully correlates actions, but only executes their own commands.

User Inputs would include a gamepad controller and a Kinect interface, and users would input a corresponding button on the controller or move in a corresponding way in space.

🡸 🡺 🡹 🡻 A (Jump) B (Crouch)

Participants would move in a grid space made up in Isadora with data from the Kinect to complete the physical inputs. In the first phase, only the gamepad would be used. In the second, only physical movement. In the final phase, both would be used. Thematically, there would be psychology/philosophy quotes about choice between phases, some of which would suggest a means of escape/winning. In the final phase, you would unlock the real ending if you made valid unprompted choices.

The game wasn’t completed because I lost the save at one point when I was trying to get the Kinect stream working. SAVE EARLY, SAVE OFTEN. At the time of performance, the game included the first phase only. It also broke.

Here’s a user actor example on how to make a non-repeating random number string generator. Change length or modulo number for different results. This was used to generate the pattern of inputs that the user had to input.
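Since the user actor itself isn't reproduced here, the following is a hedged Python sketch of the same idea rather than a transcription of it. It assumes “non-repeating” means that the same number never appears twice in a row, and that the modulo number is the count of possible inputs (six here, matching the four arrows plus A and B). The function and variable names are made up for illustration.

```python
import random

# Hypothetical labels for the six inputs listed above.
INPUTS = ["left", "right", "up", "down", "A", "B"]


def non_repeating_sequence(length, modulo, rng=None):
    """Return `length` random integers in [0, modulo) with no two equal neighbours."""
    if modulo < 2 and length > 1:
        raise ValueError("need at least two possible values to avoid repeats")
    rng = rng or random.Random()
    sequence = []
    for _ in range(length):
        value = rng.randrange(modulo)
        if sequence and value == sequence[-1]:
            # Shift by a random non-zero offset so the new value differs
            # from the previous one while staying inside the range.
            value = (value + rng.randrange(1, modulo)) % modulo
        sequence.append(value)
    return sequence


if __name__ == "__main__":
    pattern = non_repeating_sequence(length=8, modulo=len(INPUTS))
    print([INPUTS[i] for i in pattern])
```

Changing `length` gives a longer or shorter pattern, and changing `modulo` changes how many distinct inputs can appear.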

Pressure Project 3

Posted: November 6, 2018 Filed under: Pressure Project 3

For Pressure Project 3, I decided to bring in some of my childhood enjoyments. Knowing that the project could not involve the keyboard yet had to involve some level of technology, I had to figure out what to do. I remember enjoying decoding things and figuring out puzzles. The technology involved was the Makey Makey and a website called Scratch.

In the experience, each person received a piece of paper. On the paper were four pictures with a piece of duct tape on top and three dashes below the pictures. There were two tables. One table had a group of red cups that were turned over. The other table had a computer and a Ritz cracker box with silver items protruding up from the inside of the box.

When someone received their paper, they were to match the color of the duct tape at the top of the paper with the color of the duct tape on the cups, each of which had a letter written on it. Those cups narrowed the choices down to a smaller number of letters. Then, looking at the pictures, they had to figure out the word; the three dashes let them know that it was a three-letter word. Once they figured out the word, they had to work out that turning each cup right side up revealed a number inside the cup.

Then, using the numbers, they were to go to the computer and the Ritz box. On the Ritz box there were ten protruding pieces of silver foil: three rows of three foils, and one foil in the center bottom that had the message “hold me” written around it. The experiencer was to hold the tenth foil and then touch the other ones in the order of the numbers. Once the code was cracked, they heard a cheering noise from the computer. There was also a message for the user to click the green flag when they heard their noise.

It seemed that everyone was enjoying the experience. One person said they enjoyed the tactile experience of this project. It became one big group event, and they even asked for more clues when they finished one. They eventually tried to find out how to break the experience, or whether there were any underlying similarities between the clues. They eventually found out that to make the cheering sound you had to hold the one silver prong and enter two numbers followed by the nine. If I were to do this again, I would work in more triggers, so that there were different sounds for different series of numbers and a different or random sound for each different last digit.

As I was trying to determine how to put together this pressure project I did some research into other ideas. I ran across a video on how to use the Makey Makey and a book about different projects called 20 Makey Makey Projects for the Evil Genius. There are a lot of projects in the book that I would like to make happen yet I needed to narrow down to this specific project. The project I used was the lock box project. I did some tweaking from what the book has to make it my own. Overall, I feel that this was a successful project.

Pressure Project 3

Posted: October 26, 2018 Filed under: Pressure Project 3

For this project, I drew on how much I enjoyed the class’ take on a fortune teller in the last pressure project; a few people’s takes reminded me of the old choose-your-own-adventure books I used to read when I was younger. One thing I remember is being able to skip ahead a lot, and avoiding pages if I saw them while flipping through, so I wanted to make something like that but where you couldn’t cheat. I thought that QR codes would be an interesting way to tell this story, so I wrote out a whole choose-your-own-adventure centered around a murder mystery. Each segment that went on a page became a unique QR code that the user can scan to read that section of the story. I pasted the QR codes onto their respective pages in a book that I had. To generate the QR codes, I just pasted text into (@Laura) this website, which generated the corresponding PNG.

Watching the class interact with my project was so much fun because everyone was very divided on which path to take, and it ultimately led to a democratic vote on which path to take. I think it really helped that the narrator was Alex for this story; his narration really gave life to my adventure. I just wish that my speaker had been working; I wanted to have some background music for ambiance.

If you’d like to go through the story in segments, use the attached zip file to read the story using the QR codes. If you’d like to just read it straight up, use the script.md file attached.

Pressure Project #3: Paper Telephone Superstar!

Posted: December 10, 2017 Filed under: Pressure Project 3

Paper Telephone Superstar! is a simple but interactive riff on the old Telephone game in which the meaning of media is blurred through transmission. My goals for this project were to create a gaming experience that was familiar, required only simple instructions, and included a responsive digital element that enhanced play.

The rules of the game were distributed to players, along with a blank piece of paper:

- Sit in a circle

- Write a short phrase at the top of the paper

- Pass your paper to the person on your right

- Draw a picture of what is written, then fold the paper down to cover the writing

- Pass the paper to the person on your right

- Write a short phrase that describes the picture, then fold the paper down to cover the picture

- Pass the paper to the person on your right

- Repeat until everyone has their original paper back

- Unfold your paper and take turns sharing the hilarious results! Clap loudly for the best or funniest results! Whoever gets the spinny star wins!

I watched the players read the rules and then settle themselves on the floor in a circle. A screen mounted on the wall above the players pulsed slowly with a purple light that grew or shrunk depending on how much noise the players were making.

After the first round, when everyone had got back their original papers, the players took turns unfolding them and laughing at the results. The biggest laughs triggered the Isadora patch to erupt with fireworks and cheering, and a star dropped down on the screen. The person who got the fifth star wins the game with a gigantic spinning star, more fireworks, and louder cheering!

The patch itself is straightforward, drawing audio from the live capture functionality into a Sound Level Watcher actor, and then into a Comparator to determine what actions should happen and when. A counter keeps track of how many stars have fallen and when to kick off the final animation that designates the winner.

The most challenging part of this project was gauging the appropriate level of sound that would trigger a star. This varied by room, distance from the microphone, the number of people, and how loud they were. I did a lot of yelling and clapping in my apartment to find an approximate level, and then surreptitiously did a sound level check of my classmates during a busy time in the Motion Lab. I also spent time finessing the animation of the stars dropping, adding a slight bounce to the end.
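The chain of sound level, comparator, and counter can be sketched in Python as follows. This is a minimal model under assumptions rather than a copy of the patch: the class name, the threshold value, and the choice to count a star only when the level rises past the threshold (so one long laugh yields one star) are all hypothetical.

```python
class StarGame:
    """Counts loud moments and reports when a star drops or the game is won."""

    def __init__(self, threshold, stars_to_win=5):
        self.threshold = threshold        # sound level that counts as a big laugh
        self.stars_to_win = stars_to_win  # the fifth star ends the game
        self.stars = 0
        self._was_above = False           # remembers the previous comparator state

    def feed(self, level):
        """Take one sound-level reading; return 'star', 'winner', or None."""
        is_above = level > self.threshold
        # Only a rising edge counts, so sustained noise drops a single star.
        crossed = is_above and not self._was_above
        self._was_above = is_above
        if not crossed or self.stars >= self.stars_to_win:
            return None
        self.stars += 1
        return "winner" if self.stars == self.stars_to_win else "star"


if __name__ == "__main__":
    game = StarGame(threshold=70)
    for level in [10, 80, 85, 20, 90, 30, 75, 10, 99, 5, 71]:
        event = game.feed(level)
        if event:
            print(level, event)
```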

This game is re-playable, but only once the sound levels are correctly set for a given environment. One way to resolve this would be to include a sound calibration step in the Isadora patch in order to set a high and low level before the game starts. Another option would be to swap sound for movement; for example, players wave their arms in the air to indicate how funny the results of the game are.
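One possible shape for the calibration step is sketched below, with the caveat that it was not part of the original patch. It assumes the players first stay quiet for a few seconds and then laugh or clap as loudly as they can, and that the trigger level is placed a chosen fraction of the way between the two. The function name and the `fraction` parameter are hypothetical.

```python
def calibrate_threshold(quiet_samples, loud_samples, fraction=0.75):
    """Pick a trigger level between the room's quiet and loud sound levels.

    `fraction` sets how far from quiet toward loud the threshold sits;
    0.75 is an arbitrary starting point, not a measured value.
    """
    if not quiet_samples or not loud_samples:
        raise ValueError("both calibration recordings need at least one sample")
    low = max(quiet_samples)   # loudest moment of the quiet recording
    high = max(loud_samples)   # loudest moment of the loud recording
    if high <= low:
        raise ValueError("loud samples must exceed quiet samples")
    return low + fraction * (high - low)


if __name__ == "__main__":
    quiet = [8, 12, 20, 15]
    loud = [60, 85, 100, 90]
    print(calibrate_threshold(quiet, loud))
```

The returned value would replace the hand-tuned comparator level, so the game could be set up in a new room without the yelling-and-clapping trial runs.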