Final Documentation Jonathan Welch

Posted: December 20, 2015 Filed under: Jonathan Welch, Uncategorized

OK, I finally got it working.

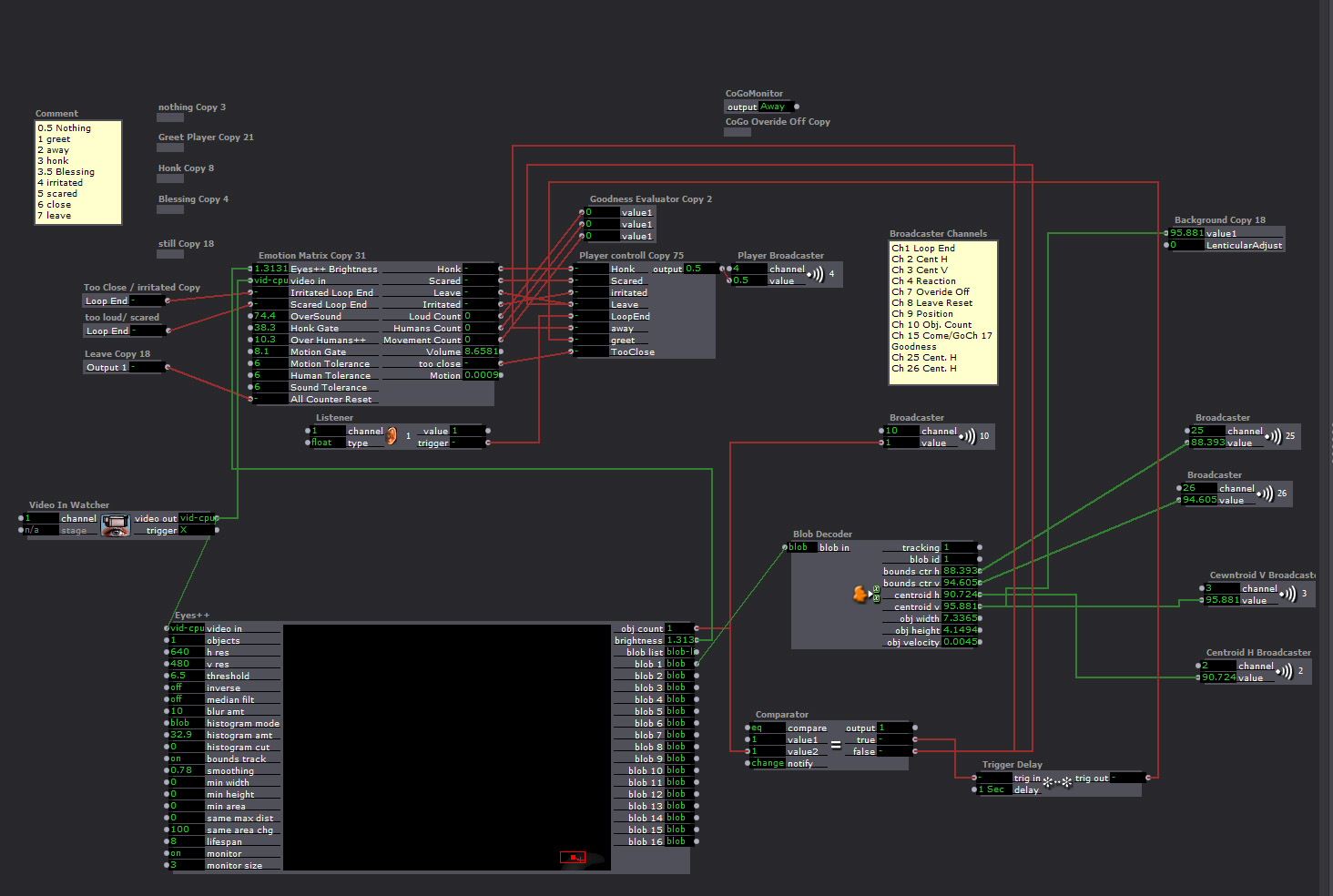

The Master Patch

Eyes++ tracked the viewer and sent data to the “Emotion Matrix”, which sent the character’s response to the “Player Controller”, which triggered the Players. The background interlacing was done in the “Background”.

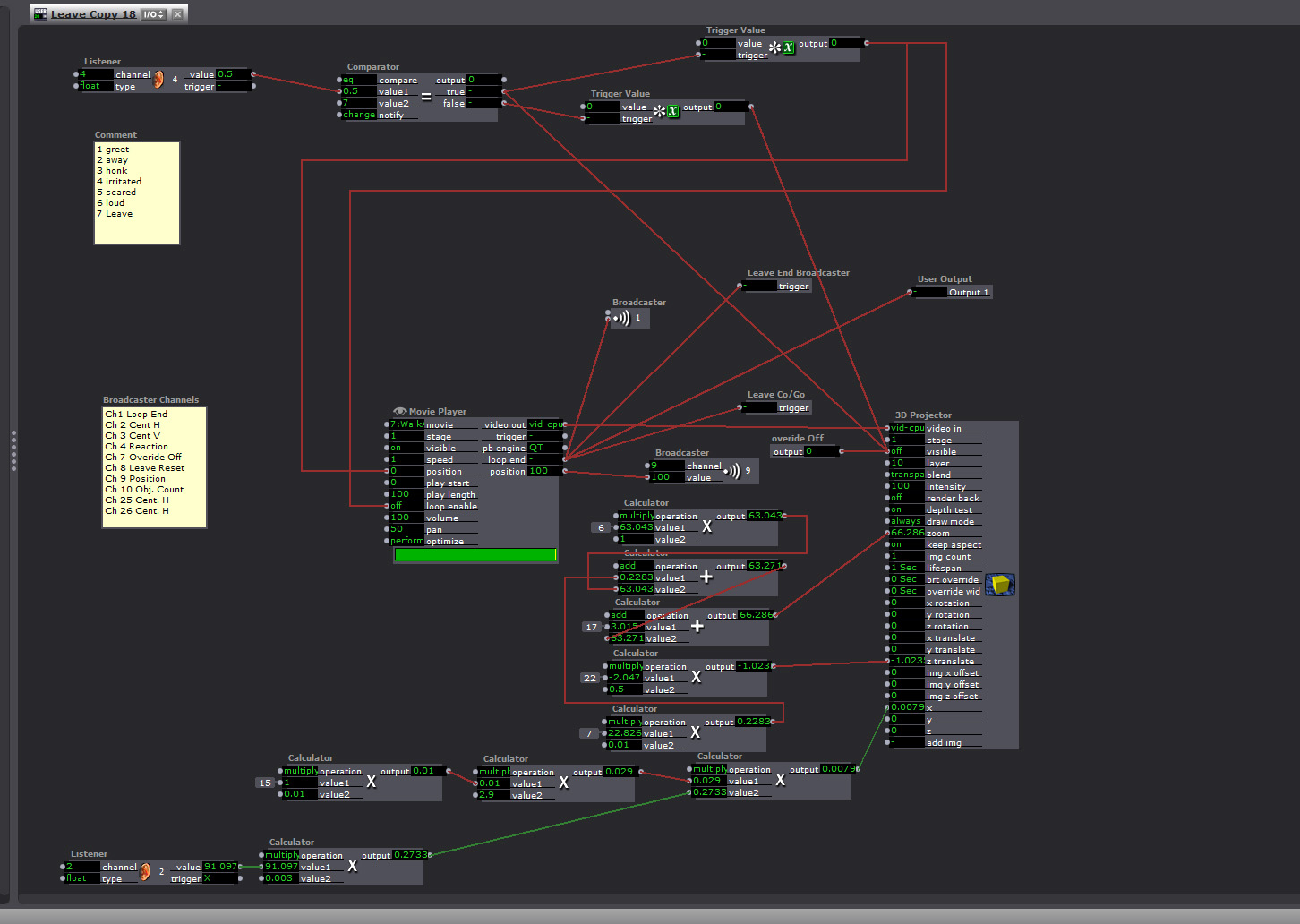

This is the “Response Player”

There were 9 Responses (6 different animations, 2 actions that generated the same response, a still frame, and a blank/away response). The players were very similar, but the “Leave” and “Greet” players had a broadcaster that toggled the character’s Here/Away state, so that noises when no one was around wouldn’t trigger the honk response.

The responses were:

1. Leave (triggered if no one was there, or you pissed him off)

Honks, walks off camera, and the subtitles read “Whatever Mammal”

2. Greet (triggered when someone arrives, the “Blob Counter” was 1)

Walks Up to the camera

3. Too Close

Honks and the subtitles read “You are freaking me out human”

4. Too Loud

Honks and the subtitles read “Are humans always this loud?”

5. Too much motion

Honks and the subtitles read “You are freaking me out human”

6. Too many Humans

Honks and the subtitles read “You are freaking me out human”

7. Honk

Honks and the subtitles read “Hey”

8. Blessing

Honks and the subtitles read “May your down always be greasy and your pond go be dry”

9. Pause or Away (a still frame or a blank frame, depending on whether the state was “Here” or “Away”)

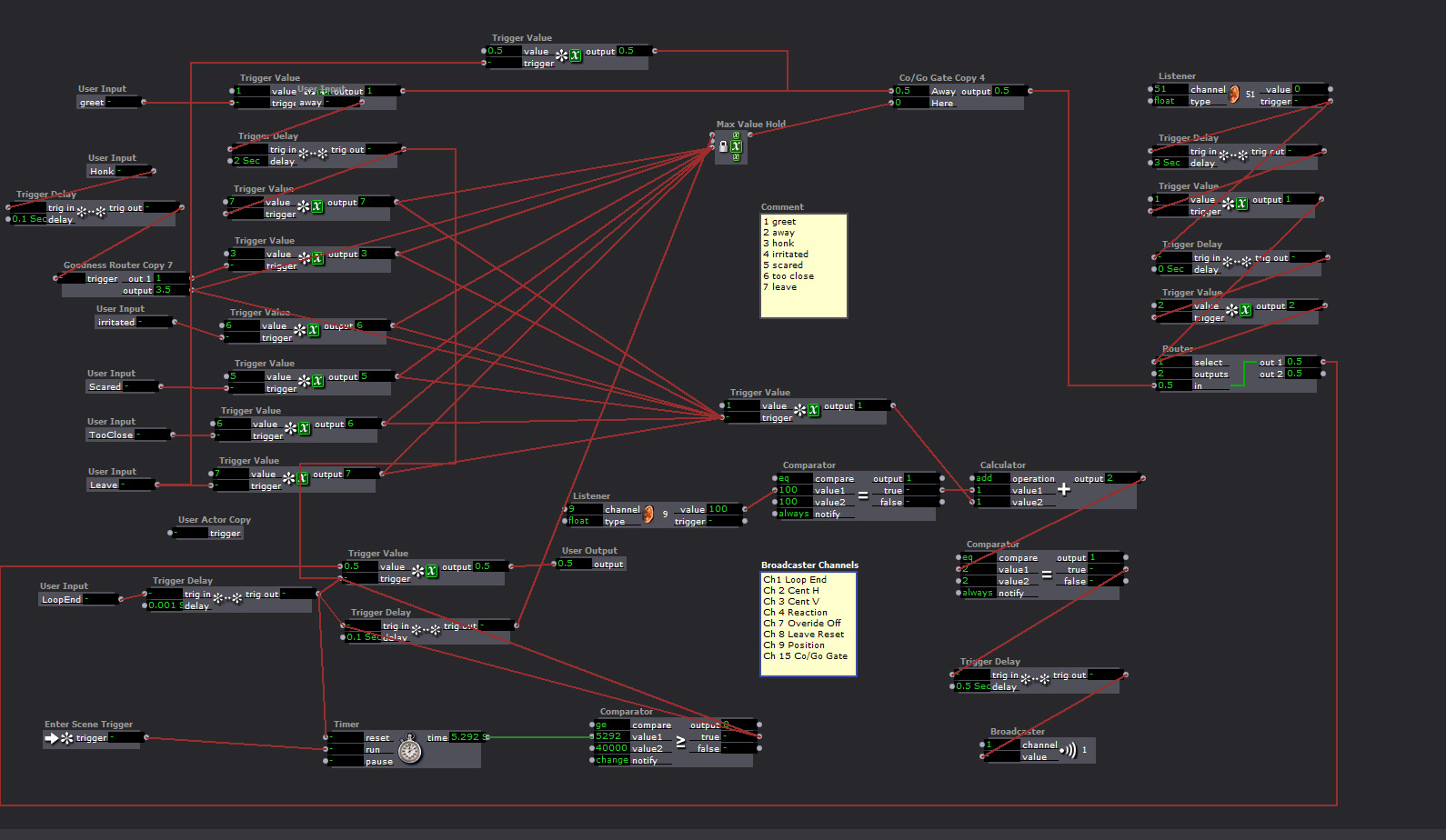

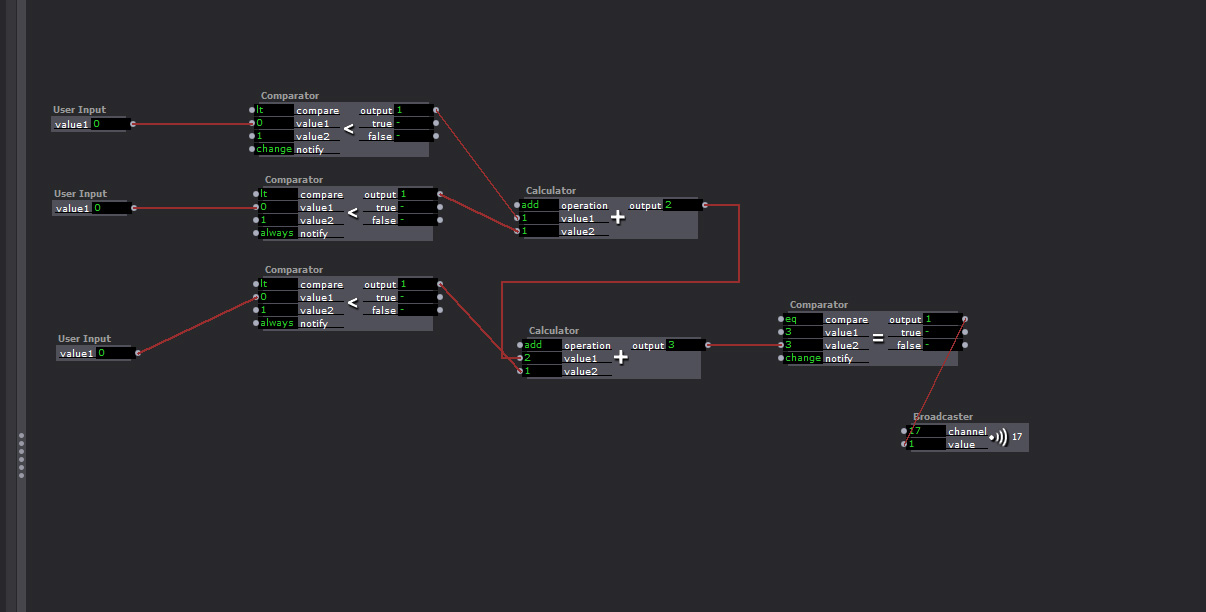

“Playback Controller”

The response is generated in the Emotion Matrix, and the Playback Controller keeps the responses from triggering at the same time.

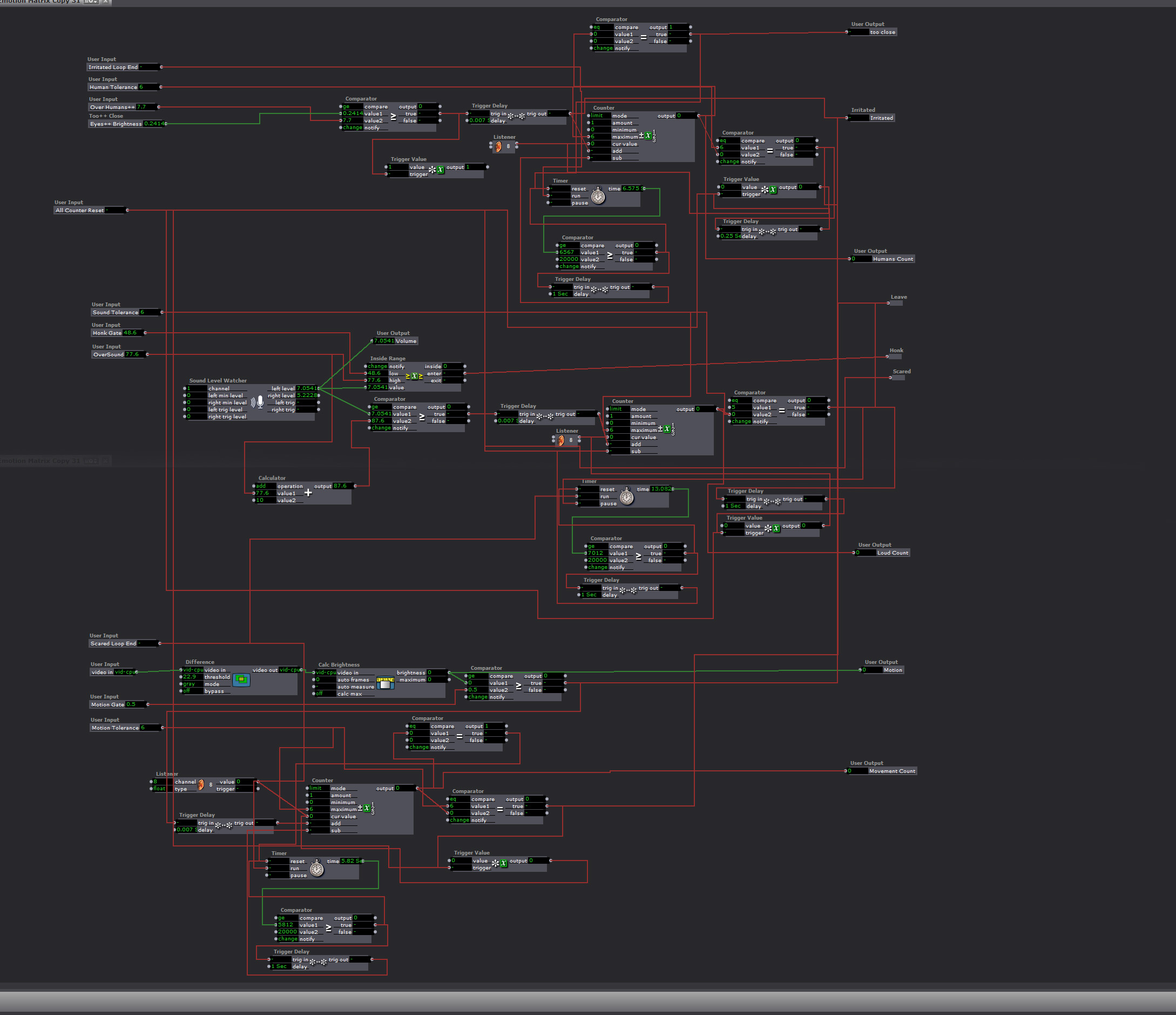

The “Emotion Matrix”

Too much fast motion, getting too close, being too loud, or too many people around would generate a response and add negative points to the goose’s attitude. If the score got too high, he would leave. If you did not have any negative points and you said something at a normal talking volume, the goose would give you a blessing. If you had been loud or done something to irritate him, he would just say “Hey”. The irritants would go down over time, but if you got too many in too short a time, the goose would walk away.

The “Goodness Elevator”

This took input from the negative response counter and routed the “Honk” response to a blessing if the count was 0 on all negative emotions.
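As a rough illustration of the scoring logic described above (not the actual Isadora patch), the Emotion Matrix and the Goodness Elevator can be sketched in a few lines of Python. The class name, the threshold, and the decay rate are all assumptions made up for this example; the real patch used Isadora actors and its own values.

```python
from dataclasses import dataclass, field

# The four irritants named in the post.
IRRITANTS = ("too_close", "too_loud", "too_much_motion", "too_many_humans")


@dataclass
class EmotionMatrix:
    leave_threshold: float = 3.0    # assumed: total points before the goose leaves
    decay_per_second: float = 0.1   # assumed: how fast irritation wears off
    scores: dict = field(default_factory=lambda: {k: 0.0 for k in IRRITANTS})

    def tick(self, dt):
        """Let every irritant decay toward zero as time passes."""
        for kind in self.scores:
            self.scores[kind] = max(0.0, self.scores[kind] - self.decay_per_second * dt)

    def irritate(self, kind):
        """Add a negative point; return the response to play."""
        self.scores[kind] += 1.0
        if sum(self.scores.values()) >= self.leave_threshold:
            return "leave"
        return kind

    def honk(self):
        """Goodness Elevator: a clean record earns a blessing, otherwise just 'hey'."""
        if all(value == 0.0 for value in self.scores.values()):
            return "blessing"
        return "hey"
```

For example, a fresh `EmotionMatrix()` answers `honk()` with `"blessing"`, but after one `irritate("too_loud")` it answers `"hey"` until enough time has been passed to `tick()` for the score to decay back to zero.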

What went wrong…

The background interlacing reduced the refresh rate to under 1 FPS at times. At such a slow rate, several of the triggers that start the next response arrived at the same time. There were redundancies to keep them from all happening at once, and to keep a response from starting when one had already been triggered, but at 1 FPS they were all happening at once.

I had a broadcaster that was sending the player position that was used to ensure the players did not respond at the same time, and to trigger the next response when the last one was over. A “comparator” and a “router” kept the signals from starting while a player was playing, but if too many signals came at once, and the refresh rate was too low, there was no way to keep one response from starting while the other was playing.
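The gating idea can be sketched outside Isadora as follows. This is only an assumed, simplified model (the `PlaybackGate` name and the per-frame `update` call are hypothetical): it accepts at most one trigger per frame and ignores triggers while a response is still playing, so several triggers landing on the same slow frame cannot all start at once.

```python
class PlaybackGate:
    """Allow only one response to play at a time (hypothetical sketch)."""

    def __init__(self):
        self.active = None      # name of the response currently playing
        self.remaining = 0.0    # seconds left in that response

    def update(self, dt, triggers):
        """Advance by dt seconds, then start at most one pending response.

        triggers is a list of (name, duration_seconds) pairs that arrived
        during this frame. Returns the name that was started, or None.
        """
        if self.active is not None:
            self.remaining -= dt
            if self.remaining <= 0:
                self.active = None
        if self.active is None and triggers:
            # Only the first trigger of the frame wins; the rest are dropped.
            name, duration = triggers[0]
            self.active, self.remaining = name, duration
            return name
        return None
```

In sequential code like this the check and the start happen in one step, which is exactly what is hard to guarantee when separate actors evaluate on the same slow frame.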

I finally fixed it by eliminating the live feed and the moving background. I tried just replacing the live feed with a photo, but interlacing it and moving the background image was more than the computer could handle.

Cycle… What is this, 3?… Cycle 3D! Autostereoscopy Lenticular Monitor and Interlacing

Posted: November 24, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

One 23″ glasses-free/3D lenticular monitor. I get up to about 13 images at a spread of about 10 to 15 degrees, and a “sweet spot” from 2 to 5 or 10 feet (depending on the number of images); the background blurs with more images (this is 7). The head tracking and animation are not running for this demo (the interlacing is radically different from what I was doing with 3 images, and I have not written the patch or made the changes to the animation). The poor contrast is an artifact of the terrible camera; the brightness and contrast are normal, but the resolution on the horizontal axis diminishes with additional images. I still have a few bugs, and honestly I hoped the lens would be different; it does not seem to be designed specifically for a monitor with a pixel pitch of .265 mm (with a slight adjustment to the interlacing, it works just as well on the 24 inch with a pixel pitch of .27 mm). But it works, and it will do what I need.

better, stronger, faster, goosier

No you are not being paranoid, that goose with a tuba is watching you…

So far… It does head tracking and adjusts the interlacing to keep the viewer in the “sweet spot” (like a Nintendo 3DS, but it is much harder when the viewer is farther away and the eyes are only 1/2 to 1/10th of a degree apart). The goose recognizes a viewer, greets them, and follows their position… There is also recognition of sound, number of viewers, speed of motion, leaving, and over-volume vs. talking, but I have not written the animations for the reaction to each scenario, so it just looks at you as you move around. The background is from the camera above the monitor. I had 3, so it would be in 3D and have parallax, but it was more than the computer could handle, so I just made a slightly blurry background several feet back from the monitor. But it still has a live feed, so…

Cycle 2 Demo

Posted: November 7, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

The demo in class on Wednesday showed the interface responding to 4 scenarios:

- No audience presence (displayed “away” on the screen)

- Single user detected (the goose went through a rough “greet” animation)

- Too much violent movement (the words “scared goose” on the screen)

- More than a couple audience members (the words “too many humans” on the screen)

The interaction was made in a few days, and honestly, I am surprised it was as accurate and reliable as it was…

The user presence was just a blob output. I used a “Brightness Calculator” with the “Difference” actors to judge the violent movement (the blob velocity was unreliable with my equipment). Detecting “too many humans” was just another “Brightness Calculator”. I tried more complicated actors and patches, but these were the ones that worked in the setting.
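The “Difference” plus “Brightness Calculator” combination amounts to frame differencing: subtract the previous frame from the current one and measure how bright the result is. The sketch below is an assumed plain-Python equivalent, not the Isadora patch itself; frames are small grayscale grids (lists of rows, values 0 to 255), and the function names and threshold are hypothetical.

```python
def mean_brightness(frame):
    """Average pixel value of a grayscale frame given as a list of rows."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def difference(prev, curr):
    """Per-pixel absolute difference between two frames of the same size."""
    return [[abs(a - b) for a, b in zip(row_prev, row_curr)]
            for row_prev, row_curr in zip(prev, curr)]


def motion_level(prev, curr):
    """Brightness of the difference image: 0 when nothing changed."""
    return mean_brightness(difference(prev, curr))


def too_much_motion(prev, curr, threshold=40.0):
    """True when the change between frames exceeds an assumed threshold."""
    return motion_level(prev, curr) > threshold
```

The same `mean_brightness` measure applied to a thresholded camera image is one plausible reading of how a second “Brightness Calculator” could stand in for a head count, but that part is a guess.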

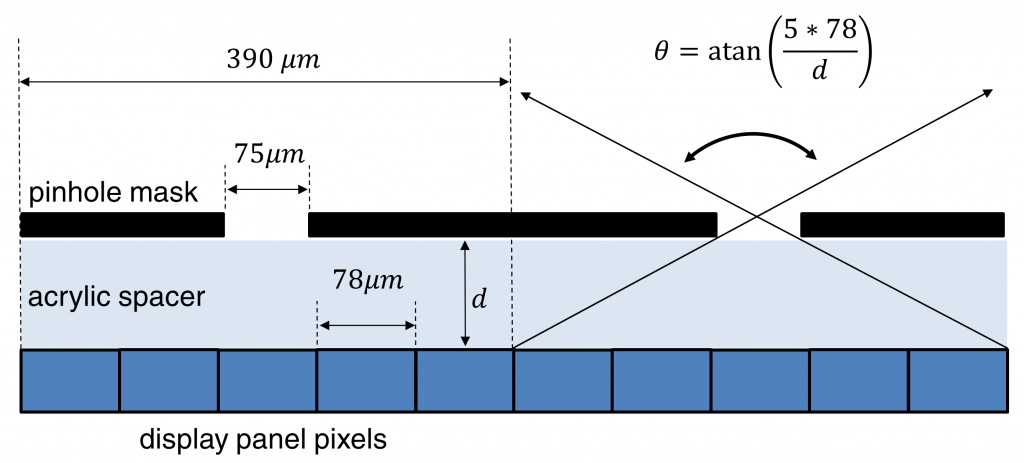

Most of what I have been spending my time solving is an issue with interlacing. I hoped I could build something with the lenses I have, order a custom lens (they are only $12 a foot + the price to cut), or create a parallax barrier. Unfortunately, creating a high quality lens does not seem possible with the materials I have (2 of the 8″ X 10″ sample packs from Microlens), and a parallax barrier blocks more light as the number of viewing angles grows: with N views it blocks (N − 1)/N of the light (2 views block 50%, 3 block about 67%… 10 views block 90%). On Sunday I am going to try a patch that blends interlaced pixels to fix the problem with the lines on the screen not lining up with the lenses (it basically blends interlaces to align a non-integral number of pixels with the lines per inch of the lens).
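Both ideas in the paragraph above can be put in numbers. The sketch below is an illustration under assumptions, not the patch itself: `blocked_fraction` reproduces the light-loss figures quoted for a parallax barrier, and `view_weights` shows one common way to blend two neighboring views for a pixel column when the lens pitch is not a whole number of pixels. The function names and the linear blend are assumptions.

```python
import math


def blocked_fraction(views):
    """Fraction of light a parallax barrier blocks when showing `views` views."""
    return 1.0 - 1.0 / views


def view_weights(x, pixels_per_lens, views):
    """Blend weights for pixel column x under a lens spanning a
    non-integer number of pixels. Assumes views >= 2.

    Returns {view_index: weight}; the two weights sum to 1.
    """
    phase = (x % pixels_per_lens) / pixels_per_lens   # 0..1 across one lens
    position = phase * views                          # fractional view index
    lower = int(math.floor(position)) % views
    upper = (lower + 1) % views
    frac = position - math.floor(position)
    return {lower: 1.0 - frac, upper: frac}
```

With 3 views and an assumed 2.5 pixels per lens, column 1 falls 40% of the way across its lens, so it is drawn mostly from view 1 with a little of view 2 mixed in.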

Worst-case scenario… A ready to go lenticular monitor is $500, the lens designed to work with a 23″ monitor is $200, and a 23 inch monitor with a pixel pitch of .270 mm is about $130… One way or another, this goose is going to meet the public on 12/07/15…

Links I have found useful are…

Calculate the DPI of a monitor to make a parallax barrier.

Specs of one of the common ACCAD 24″ monitors

http://www.pcworld.com/product/1147344/zr2440w-24-inch-led-lcd-monitor.html

MIT student who made a 24″ lenticular 3D monitor.

http://alumni.media.mit.edu/~mhirsch/byo3d/tutorial/lenticular.html

Luma to Chroma Devolves into a Chromadepth Shadow-puppet Show

Posted: October 30, 2015 Filed under: Jonathan Welch | Tags: Jonathan Welch

I was having trouble getting eyes++ to distinguish between a viewer and someone behind the viewer, so I changed the luminance to chroma with the attached actor and used “The Edge” to create a mask to outline each object, so eyes++ would see them as different blobs. Things quickly devolved into making faces at the Kinect.

The raw video is pretty bad. The only resolution I can get is 80 X 60… I tried adjusting the input, and the image in the OpenNI Streamer looks to be about 640X480, and there are only a few adjustable options, and none of them deal with resolution… I think it is a problem with OpenNI streaming.

But the depth was there, and it was lighting independent, so I am working with it.

The first few seconds are the patch I am using (note the outline around the objects), the rest of the video is just playing with the pretty colors that were generated as a byproduct.

Final Project Progress

Posted: October 27, 2015 Filed under: Jonathan Welch, Uncategorized

I don’t have a computer with camera inputs yet, so I have been working on the 3D environment and interlacing. Below is a screenshot with the operator interface and a video testing the system. It is only a test, so the interlacing is not to scale and is oriented laterally. The final project will be on a screen that is mounted in portrait. I hoped to do about 4 interlaced images, but the software is showing a serious lag with 2, so it might not be possible.

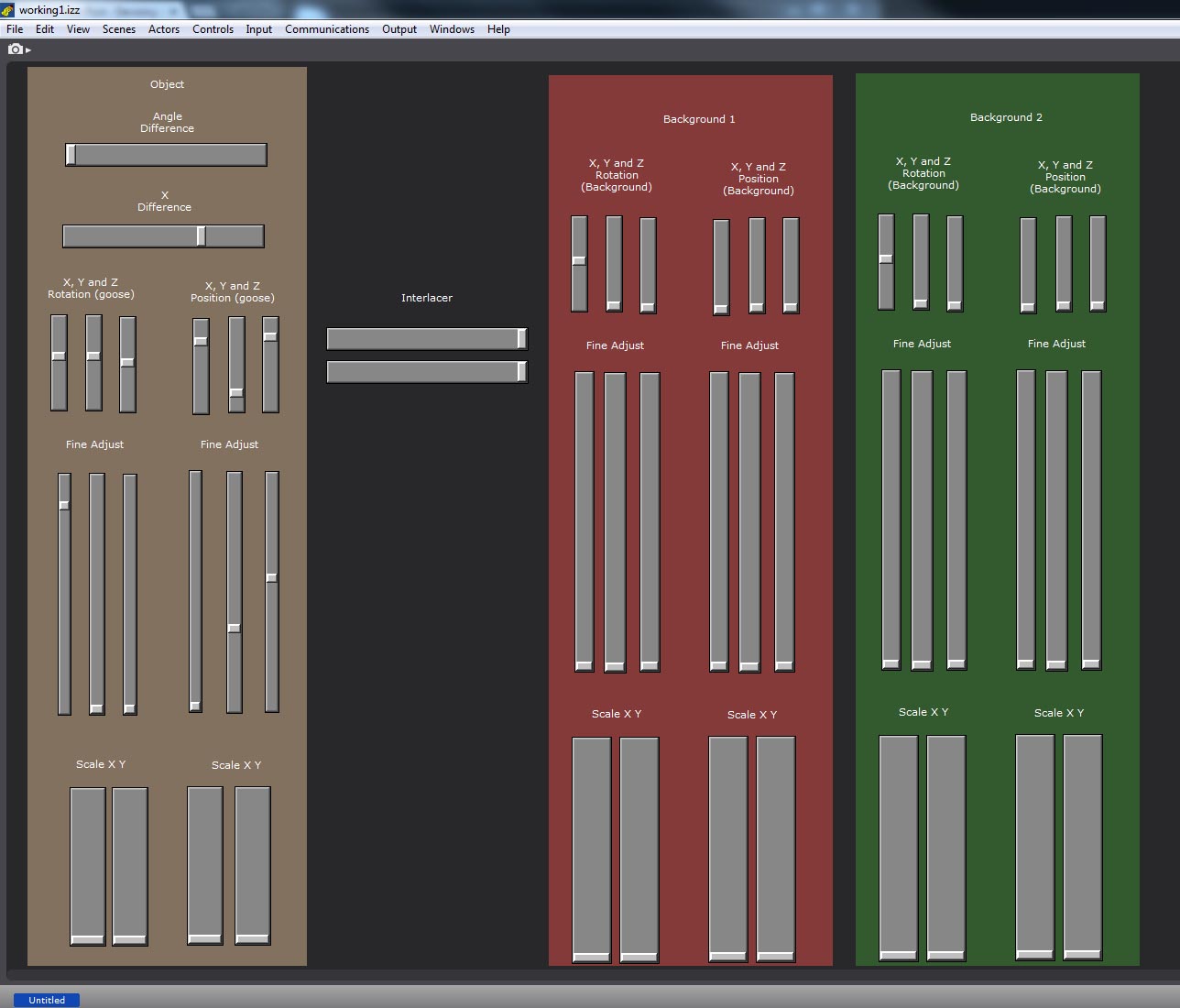

Operator Interface

(used to calibrate the virtual environment with the physical)

The object controls are on the Left (currently 2 views): Angle Difference (the relative rotation of object 1 vs object 2), X difference (how far apart the virtual cameras are), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

The Backdrop controls are on the Right (currently 2 views, I am using mp4 files until I can get a computer with cameras); Angle Difference (the relative rotation of screen 1 vs screen 2), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

In the middle is the interlace control (width of the lines and distance between them; if I can get more than 1 perspective to work, I will change this to number of views and width of the lines).

Video of the Working Patch

0:00 – 0:01 changing the relative angle

0:01 – 0:04 changing the relative x position

0:04 – 0:09 changing the XYZ rotation

0:09 – 0:20 adjusting the width and distance between the interlaced lines

0:20 – 0:30 adjusting the scale and XYZ YPR of backdrop 1

0:30 – 0:50 adjusting the scale and XYZ YPR of backdrop 2

0:50 – 1:00 adjusting the scale and XYZ YPR of the model

I have a problem as the object gets closer and farther from the camera… One of the windows is a 3D projector, and the other is a render on a 3D screen with a mask for the interlacing. I am not sure if replacing the 3D projector with another 3D screen with a render on it would add more lag or not, but I am already approaching the processing limits of the computer, and I have not added the tuba or the other views… I could always just add XYZ scale controls to the 3D models, but there is a difference between scale and zoom, so it might look weird.

The difference between zooming in and dollying (moving the camera closer) is evident in the “Hitchcock Zoom”.
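The reason the two look different comes down to simple pinhole-camera geometry: the width of the scene visible at a given distance depends on both the distance and the field of view. The sketch below assumes an ideal pinhole camera; the function names are made up for this example.

```python
import math


def visible_width(distance, fov_degrees):
    """Width of the scene that fits in frame at `distance` for a given field of view."""
    return 2.0 * distance * math.tan(math.radians(fov_degrees) / 2.0)


def fov_to_hold_width(distance, width):
    """Field of view (degrees) that keeps `width` filling the frame at `distance`."""
    return math.degrees(2.0 * math.atan(width / (2.0 * distance)))
```

Moving the camera back while narrowing the field of view with `fov_to_hold_width` keeps the subject the same size in frame, but everything behind it changes apparent size, which is the Hitchcock effect. Scaling a model only mimics the first half of that, which is why it might look weird.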

A Diagram with the Equations for Creating a Parallax Barrier

The First Tests

The resolution is very low because the only lens I could get to match with a monitor was 10 lines per inch, lowering the horizontal resolution to just 80 pixels on the 8″ x 10″ lens I had. The first is 9 pre-rendered images; the second is only 3 because Isadora started having trouble. I might pre-render the goose and have the responses trigger a loop (like the old Dragon’s Lair game). The drawback: the character would not be able to look at the person interacting. But with only 3 possible views, it might not be apparent he was tracking you.
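The 80-pixel figure is just lines per inch times lens width, and the same arithmetic shows why a lens rarely lines up with whole pixels. A small sketch follows; the function names are assumptions, and pairing the 10 lines-per-inch lens with the .265 mm pixel pitch mentioned in a later post is only for illustration.

```python
MM_PER_INCH = 25.4


def lenticule_count(lines_per_inch, width_inches):
    """Number of lenses across the sheet, which is the horizontal
    resolution each view can have."""
    return lines_per_inch * width_inches


def pixels_per_lenticule(lines_per_inch, pixel_pitch_mm):
    """How many monitor pixels sit under one lens (usually not a whole number)."""
    return MM_PER_INCH / (lines_per_inch * pixel_pitch_mm)
```

`lenticule_count(10, 8)` gives the 80 columns mentioned above, while `pixels_per_lenticule(10, 0.265)` comes out at roughly 9.58 pixels per lens, so the interlaced lines drift against the lenses unless the pattern is adjusted or blended.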

Selecting Scenes

Posted: October 4, 2015 Filed under: Jonathan Welch

https://vimeo.com/141210159

I put together an animatic for my first idea for the scene selection.

The icons are purely arbitrary; we would probably want something more representational of the concept behind the individual scenes.

I am totally flexible on the idea, and it is dependent on being able to get reliable X Y positional data on the performer from the overhead camera…

Dialog with Machines by Peter Krieg

Posted: September 16, 2015 Filed under: Jonathan Welch

Machines and Ideas

Modern computers can’t integrate arguments from different sources into new conclusions. They are unable to create comparatives, metaphors, or analogies, because they are essentially Turing machines (a hypothetical device that manipulates symbols on a strip of tape according to a set of rules, i.e. linear logic). The storage requirement for associative memory in a hierarchical structure increases exponentially as details add up. Data encapsulated in hierarchical structures, like the internet, has no comparative capabilities.

Biological Systems are Knowledge Based Polylogical Learning Systems

A hierarchical deductive inference system, like a computer, has only one way to look at things, but learning systems integrate patterns from external and internal events, and compare experiences to create new knowledge. They then use the knowledge generated to transcend the logical domain and apply the map to a new system. Biological systems create an abstract conceptual map of a solution and apply it to a new context. For example, a toddler combines the experience of objects falling with the experience of applying force to an object, and concludes that when he or she pushes a plate off the table, it will fall onto the floor, creating a mess.

Humans simulate “autopoiesis” (the self-organization a learning system develops through survival) in conversation. We abstract structural similarities between language and the adaptive behavior of survival. Data storage in cognitive systems can be thought of as generative, in the way we create conceptual symbols rather than transcribe every event. For example, I might read a long article, but I will probably only remember the general idea as a sequence of symbolic representations of the data I find relevant… (e.g. if I wasn’t taking notes, I would probably only remember this as a long article about how people are complex, and machines are dumb.)

Deep Blue Cheated, Virtual Reality Adapts, after that Everything gets Fuzzy

When you ask a person to factor 21, they don’t have to try every number until they get it right. A computer’s approach to problem solving is to test every possible solution, and though it can do this with increasing speed, it is an inefficient approach. While current computer technology does not think like we do, there are some similarities to our symbolic memory and the way some virtual reality systems are generating dynamic maps and dialogue. New “Pile Systems” store data as input/output patterns.

On the last two pages Krieg describes Fuzzy Logic (which I cannot differentiate from a Pile System) and predicts the rise of the machines…

What’s Bugging Me About All That

He says “high end computers can handle 14 dimensions” (p.24), which seems to conflict with his premise of the mono-logical nature of computers?

At the top of page 24 Krieg says “knowledge system must be able to analyze data and create new data from it,” but isn’t that what a computer does, compare data with a function that generates an output? It does not create a new idea, just applies an existing formula to a pre-categorized set of variables, but doesn’t it generate new data?

How does quantum computing factor in? As I understand it, the “Q-Bits” these machines are based on use quantum “paradoxes” to be a 1 and a 0 at once, rather than testing every solution as in a linear logic system. Isn’t this essentially a Polylogical system?

He lost me on “Pile Systems” and “Fuzzy Logic.”

Doug Mann’s introduction to Jean Baudrillard

Posted: September 16, 2015 Filed under: Jonathan Welch

Doug Mann’s introduction to Jean Baudrillard portrays a man with a philosophy on contemporary American society’s construction of a cultural reality with subjective tangibles and a history made malleable with philosophical semantics and media representation.

Baudrillard expanded on a Marxist categorization of objects as use vs. exchange, citing objects that have practical and prestigious value, and envisions a utopian future where gifts cease to be consumer objects and are exchanged for love (I wonder what he would have thought of Facebook, a consumer culture of virtual gifts).

The philosophy of simulacra, phases of imagery, and science fiction was strangely analogous to a neoromantic historian’s view of history and myth. Mircea Eliade’s (a historian concerned with myth and culture) philosophy of modern vs. “primitive” man’s connection to time and events is an odd parallel and inversion of Baudrillard’s writings on cultural shifts in the perception of reality. Both make extraordinary statements, and use philosophical semantics to argue their validity through perceptually dependent “truths.” Eliade makes statements about archaic man’s freedom from the progression of time in a way that implies magical abilities to travel through time, and Baudrillard predicted that America would never enter the Gulf War, but when they explain their claims, they describe a culturally constructed paradigm in which “actual” historical events are irrelevant. Conversely, Eliade believed modern man’s attachment to a “non-reversible” chronological history shackles us to an unchangeable reality that archaic man transcended through myth, in contrast with the cultural progression to Baudrillard’s third order of simulacra, where we have created a disconnected virtual reality.

His views on seduction blatantly reinforce ignorant, archaic, stereotypical gender roles, and are not worthy of mention.

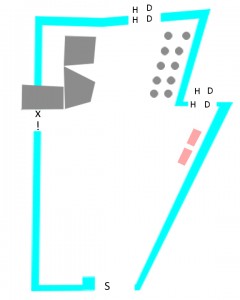

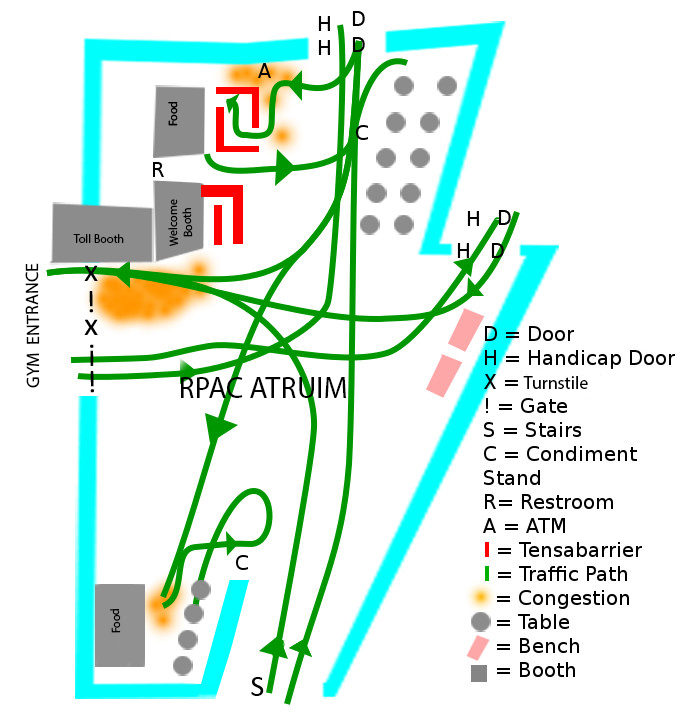

Pressure Project 1

Posted: September 16, 2015 Filed under: Jonathan Welch, Uncategorized

The location and time that I chose to schedule my Pressure Project were less than optimal (the RPAC on Monday 09/07, the day of the Labor Day football game). There was little traffic and no congestion.

RPAC Atrium

Most of the congestion was in front of the one active turnstile, but they relieved it by opening the other.

The congestion near the Tensabarriers in front of the food booth at the top was mainly from people looking at the menu; the food booth at the bottom had no barriers and less congestion.

The vast majority of the traffic came through the doors that did not have handicap access, and no one used the button to activate the doors.

A sadistic, and therefore interesting, social experiment would be to use the turnstiles for outgoing gym traffic instead of incoming, and change the incoming gym traffic to the left side and outgoing to the right.

I suspect this would create a great deal of frustration for both the patrons and the student employees, with outgoing traffic blocking the entrance/exit to argue with the attendant, people trying to come in the turnstiles on the right (as is typical for road traffic), and people trying to exit through the gates.