Final Documentation Jonathan Welch

Posted: December 20, 2015 Filed under: Jonathan Welch, Uncategorized

OK, I finally got it working.

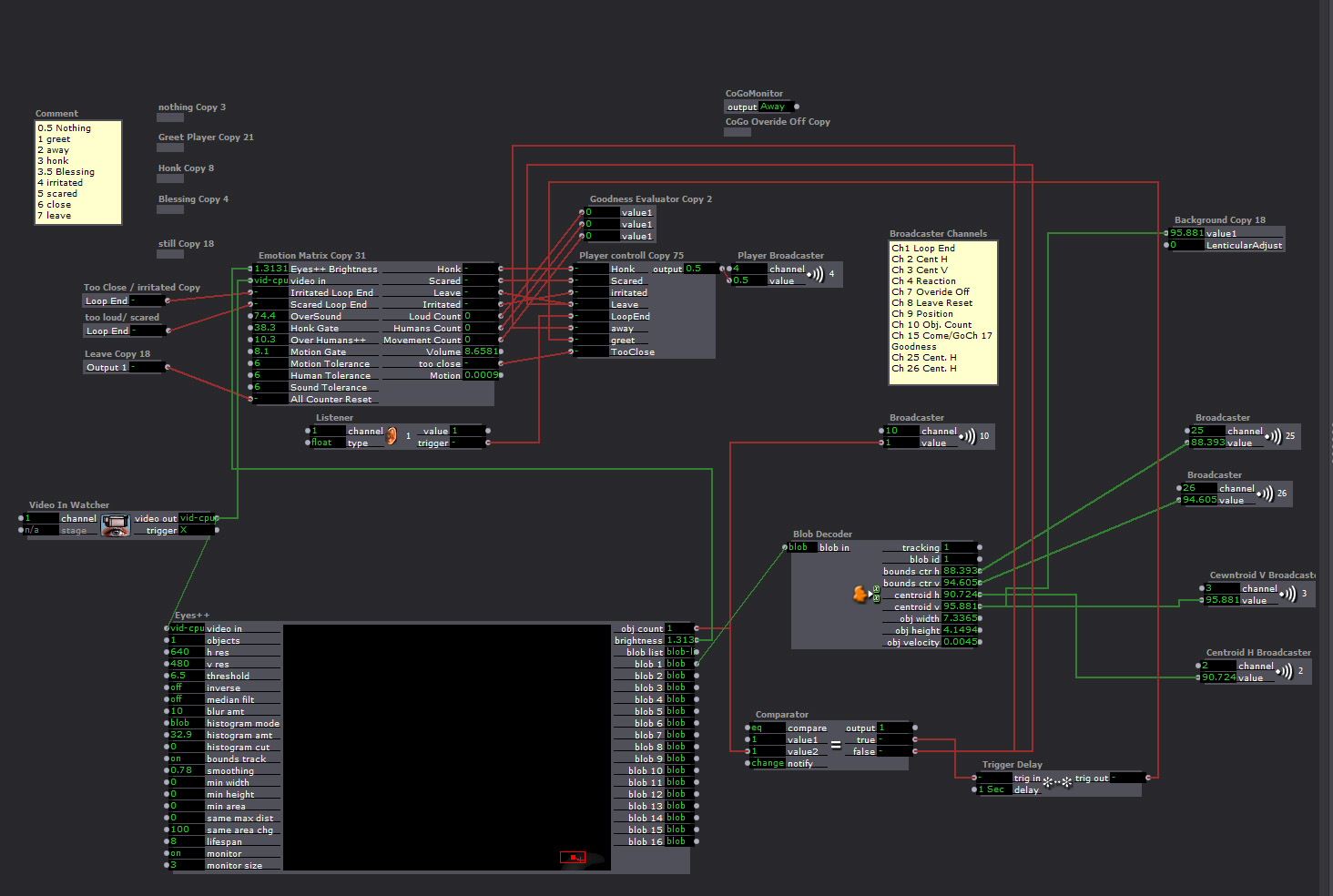

The Master Patch

Eyes++ tracked the viewer and sent data to the “Emotion Matrix”, which sent the character’s response to the “Player Controller”, which in turn triggered the Players. The background interlacing was done in the “Background”.

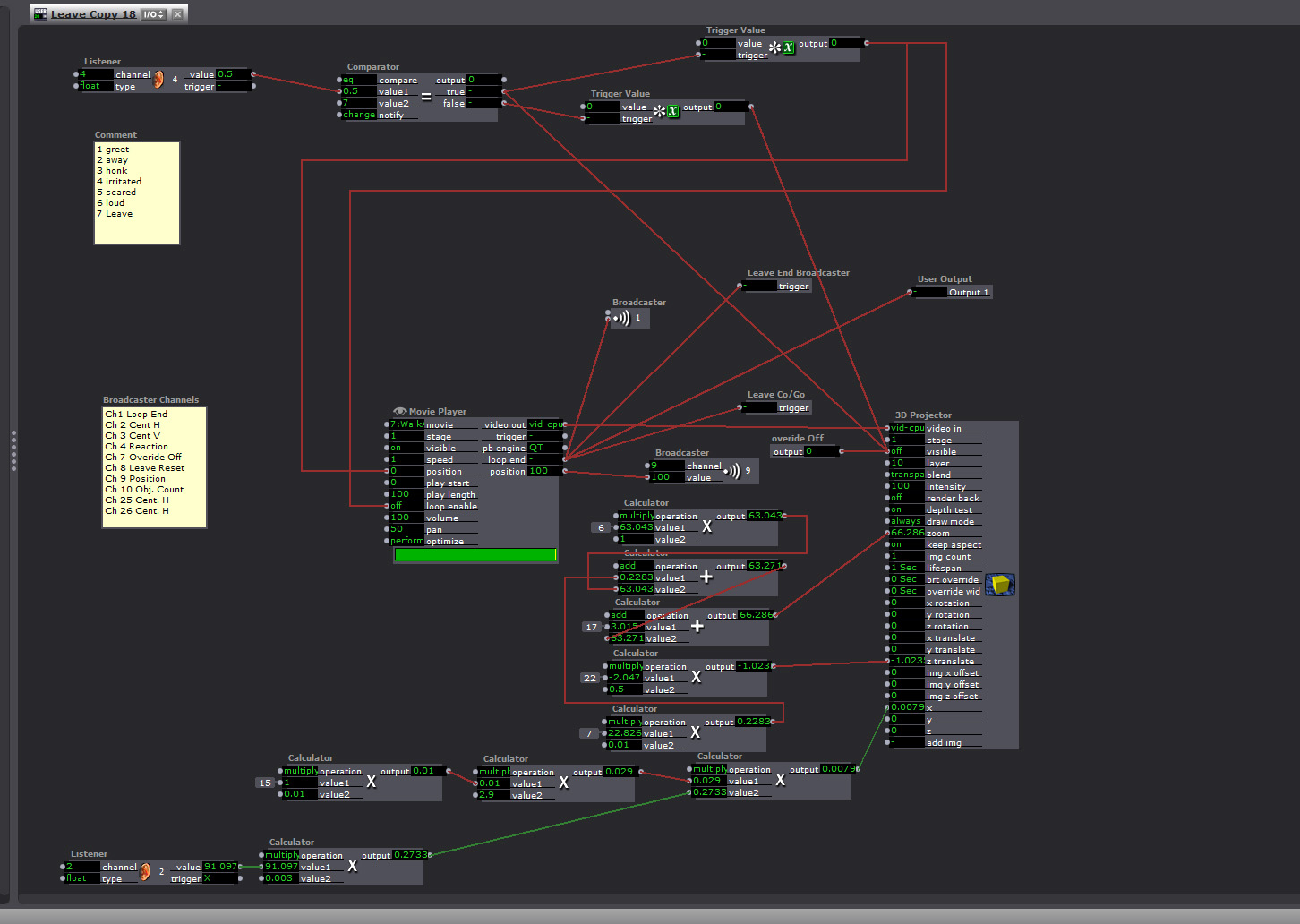

This is the “Response Player”

There were 9 responses (6 different animations, 2 actions that generated the same response, a still frame, and a blank/away response). The players were very similar, but the “Leave” and “Greet” players had a broadcaster that toggled the character’s Here/Away state, so that noises made when no one was around wouldn’t trigger the honk response.

The responses were:

1. Leave (triggered if no one was there, or you pissed him off)

Honks, walks off camera, and the subtitles read “Whatever Mammal”

2. Greet (triggered when someone arrives, i.e. the “Blob Counter” was 1)

Walks up to the camera

3. Too Close

Honks and the subtitles read “You are freaking me out human”

4. Too Loud

Honks and the subtitles read “Are humans always this loud?”

5. Too much motion

Honks and the subtitles read “You are freaking me out human”

6. Too many Humans

Honks and the subtitles read “You are freaking me out human”

7. Honk

Honks and the subtitles read “Hey”

8. Blessing

Honks and the subtitles read “May your down always be greasy and your pond go be dry”

9. Pause or Away (a still frame or a blank frame, depending on whether the state was “Here” or “Away”)

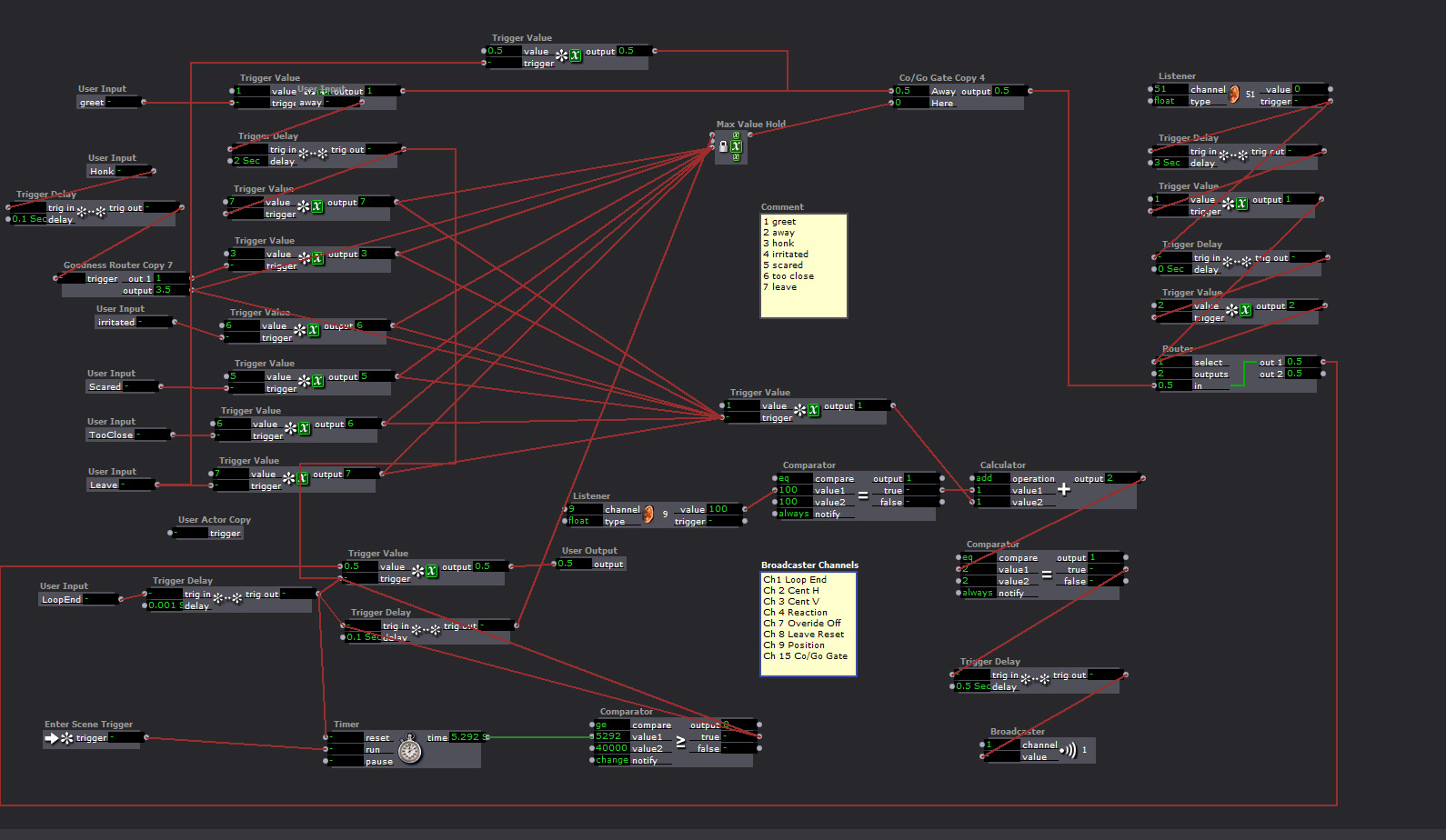

“Playback Controller”

The response is generated in the Emotion Matrix, and the Playback Controller keeps the responses from triggering at the same time.

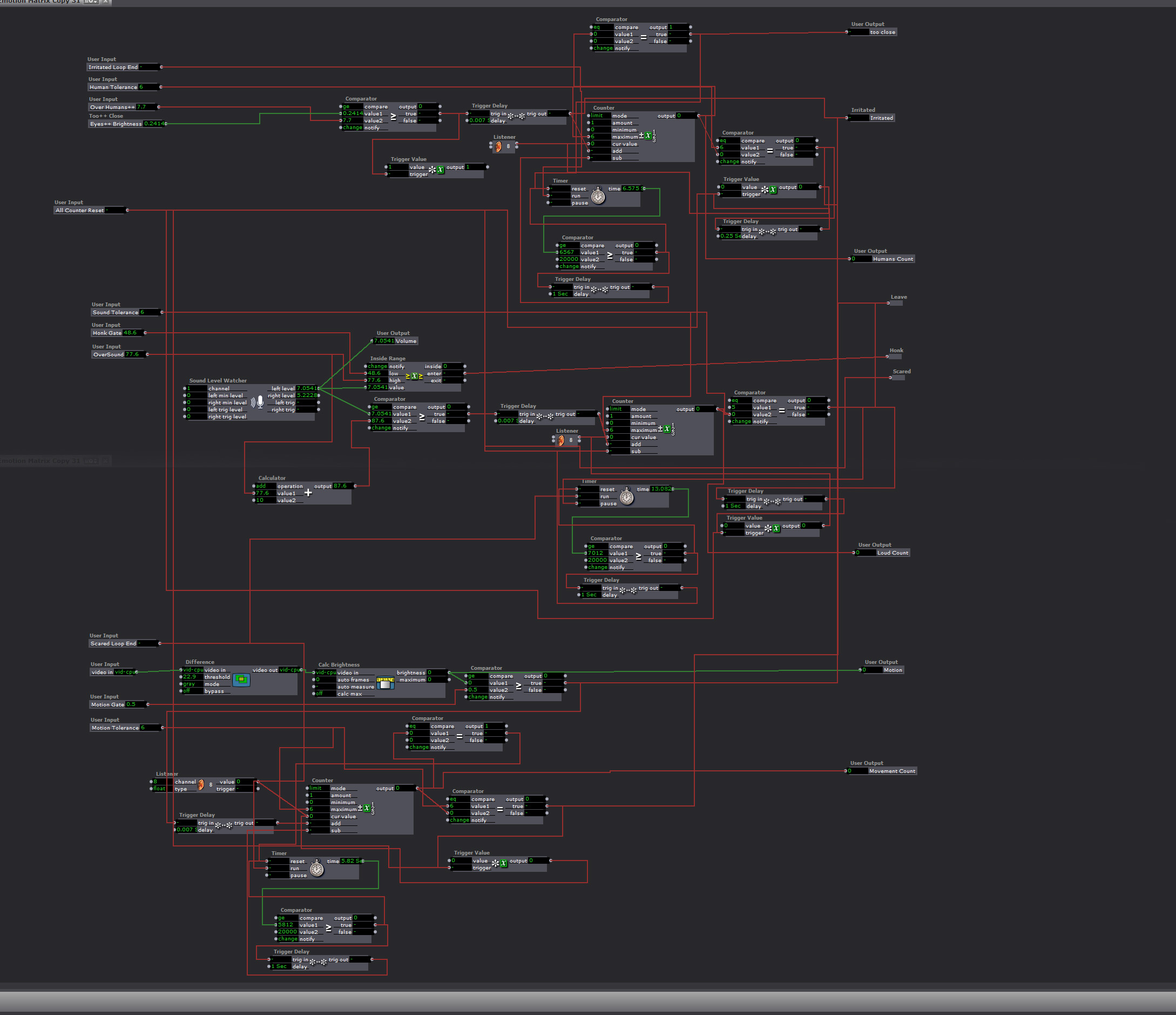

The “Emotion Matrix”

Too much fast motion, getting too close, being too loud, or having too many people around would generate a response and add negative points to the goose’s attitude. If the score got too high, he would leave. If you did not have any negative points and you said something at a normal talking volume, the goose would give you a blessing. If you had been loud or done something to irritate him, he would just say “Hey”. The irritants would go down over time, but if you got too many in too short a time the goose would walk away.
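As a rough illustration of the scoring described above, here is a minimal Python sketch. The actual patch was built from Isadora actors, not code; the class name, the point values, the decay rate, and the leave threshold are all assumptions made up for this example.

```python
class IrritationScore:
    """Hypothetical stand-in for the goose's negative-point counter."""

    def __init__(self, leave_threshold=3.0, decay_per_second=0.1):
        # Both defaults are made-up values, not taken from the patch.
        self.leave_threshold = leave_threshold
        self.decay_per_second = decay_per_second
        self.points = 0.0

    def tick(self, seconds):
        """Let irritation fade over time, never dropping below zero."""
        self.points = max(0.0, self.points - self.decay_per_second * seconds)

    def irritate(self, amount=1.0):
        """Add negative points and report whether the goose should leave."""
        self.points += amount
        return self.points >= self.leave_threshold
```

With these assumed numbers, a few irritants spread out over time fade away, while three in quick succession push the score over the threshold and the goose leaves.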

The “Goodness Elevator”

This took input from the negative response counter and routed the “Honk” response to a blessing if the count was 0 on all negative emotions.
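The routing can be pictured with a small Python sketch. The function name and the dictionary keys are hypothetical labels for the four negative counters; the patch itself did this with Isadora actors.

```python
def route_honk(negative_counts):
    """Return "Blessing" if every negative counter is 0, otherwise "Honk".

    negative_counts maps a (hypothetical) irritant name to its current count.
    """
    if all(count == 0 for count in negative_counts.values()):
        return "Blessing"
    return "Honk"


calm = {"too_close": 0, "too_loud": 0, "too_much_motion": 0, "too_many_humans": 0}
annoyed = dict(calm, too_loud=2)
print(route_honk(calm))     # Blessing
print(route_honk(annoyed))  # Honk
```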

What went wrong…

The background interlacing reduced the refresh rate to under 1 FPS at times. At such a slow rate, several of the triggers that start the next response arrive at the same time. There were redundancies to keep them from all happening at once, and to keep a response from starting when one had already been triggered, but at 1 FPS they were all happening at once anyway.

I had a broadcaster that was sending the player position that was used to ensure the players did not respond at the same time, and to trigger the next response when the last one was over. A “comparator” and a “router” kept the signals from starting while a player was playing, but if too many signals came at once, and the refresh rate was too low, there was no way to keep one response from starting while the other was playing.
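The intended behavior of the comparator and router can be sketched in Python as a simple gate. This is an idealized model with hypothetical names, and it assumes the player position is reported as a percentage from 0 to 100. In code the check and the state change happen in one step, which is exactly what the patch could not guarantee when several signals landed in the same slow frame.

```python
class ResponseGate:
    """Hypothetical gate that lets only one response play at a time."""

    def __init__(self):
        self.current = None   # name of the response now playing, or None
        self.position = 0.0   # assumed player position in percent (0-100)

    def request(self, response):
        """Start a response only if nothing else is playing."""
        if self.current is not None:
            return False      # a player is busy, so the trigger is dropped
        self.current = response
        self.position = 0.0
        return True

    def update_position(self, position):
        """Track the player position and free the gate at the end."""
        self.position = position
        if self.current is not None and position >= 100.0:
            self.current = None


gate = ResponseGate()
print(gate.request("Greet"))   # True
print(gate.request("Honk"))    # False, "Greet" is still playing
gate.update_position(100.0)
print(gate.request("Honk"))    # True
```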

I finally fixed it by eliminating the live feed and the moving background. I tried just replacing the live feed with a photo, but interlacing it and moving the background image was more than the computer could handle.

Cycle… What is this, 3?… Cycle 3D! Autostereoscopy Lenticular Monitor and Interlacing

Posted: November 24, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

One 23″ glasses-free 3D lenticular monitor. I get up to about 13 images at a spread of about 10 to 15 degrees, and a “sweet spot” from 2 to 5 or 10 feet away (depending on the number of images); the background blurs with more images (this is 7). The head tracking and animation are not running for this demo (the interlacing is radically different from what I was doing with 3 images, and I have not written the patch or made the changes to the animation). The poor contrast is an artifact of the terrible camera; the brightness and contrast are normal, but the resolution on the horizontal axis diminishes with additional images. I still have a few bugs. Honestly, I hoped the lens would be different; it does not seem to be designed specifically for a monitor with a pixel pitch of .265 mm (with a slight adjustment to the interlacing, it works just as well on the 24 inch with a pixel pitch of .27 mm). But it works, and it will do what I need.

better, stronger, faster, goosier

No you are not being paranoid, that goose with a tuba is watching you…

So far… It does head tracking and adjusts the interlacing to keep the viewer in the “sweet spot” (like a Nintendo 3DS, but it is much harder when the viewer is farther away and the eyes are only 1/2 to 1/10th of a degree apart). The goose recognizes a viewer, greets them, and follows their position… There is also recognition of sound, number of viewers, speed of motion, leaving, and over-volume vs talking, but I have not written the animations for the reaction to each scenario, so it just looks at you as you move around. The background is from the camera above the monitor. I had 3 cameras, so it would be in 3D and have parallax, but that was more than the computer could handle, so I just made a slightly blurry background several feet back from the monitor. But it still has a live feed, so…

Multi-Viewer 3D Displays (why the 3DS is a handheld device)

Posted: November 12, 2015 Filed under: Uncategorized

I have been trying to make a glasses-free, multi-viewer 3D monitor (like the Nintendo 3DS, only 23 inch and with multiple viewers), and it is much trickier than it looks.

The parallax barrier cuts the brightness sharply as the number of viewers goes up (a 50% light cut for 1 pair of eyes, 75% for 2), so with 2 viewers the screen is dim. But there is more: the resolution is not only cut by 1/4; each pixel is just as narrow, but I am adding a huge gap between them (some test subjects could not even tell what they were looking at)…
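The two figures quoted above (50% for one pair of eyes, 75% for two) fit a simple rule: with N views, each eye sees only 1/N of the pixels, so the barrier blocks 1 − 1/N of the light. A minimal Python sketch, assuming 2 views per viewer and an ideal barrier (the function name is made up for this example):

```python
def blocked_fraction(views):
    """Fraction of light an ideal parallax barrier blocks for `views` views."""
    if views < 1:
        raise ValueError("need at least one view")
    return 1.0 - 1.0 / views


for viewers in (1, 2):
    views = 2 * viewers  # assumption: one view per eye
    print(viewers, "viewer(s):", f"{blocked_fraction(views):.0%} blocked")
```

The loss climbs toward 100% as views are added, which is why a multi-viewer barrier display ends up so dim.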

Lenticular lenses cost about $12 a foot, but none of the ones I got in the sampler pack line up with the pixels on any of the monitors, so I have to sacrifice even more horizontal resolution…

I gave up and started doing head tracking, but isolating individual eyes on that scale is just about impossible, so I wind up wasting 4X the resolution just to have enough margin for a single viewer…

And it still doesn’t isolate the eyes properly!!!

Now I have a 3D display (if you do not move faster than the head tracking can follow, and remain 2 to 3 1/2 feet from the monitor) that tracks your head, but will not respond to more than 1 viewer…

So I decided that when the head tracking detects more than one person, or if you move around too fast, the character (Tuba-Goose) will get irritated and leave…

If I can get it to do that, I would consider it a hell of an achievement. And even watching this quasi-3D interface figure out where you are and adjust the perspective to compensate (with a bit of a lag sometimes) is a little unsettling… Kind of like being eyeballed by a goose…

I can work with this, but I might still have to buy the 3D monitor, or at least the lens that is made for the 23 inch display…

Cycle 2 Demo

Posted: November 7, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

The demo in class on Wednesday showed the interface responding to 4 scenarios:

- No audience presence (displayed “away” on the screen)

- Single user detected (the goose went through a rough “greet” animation)

- Too much violent movement (the words “scared goose” on the screen)

- More than a couple of audience members (the words “too many humans” on the screen)

The interaction was made in a few days, and honestly, I am surprised it was as accurate and reliable as it was…

The user presence was just a blob output. I used a “Brightness Calculator” with the “Difference” actors to judge the violent movement (the blob velocity was unreliable with my equipment). Detecting “too many humans” was just another “Brightness Calculator”. I tried more complicated actors and patches, but these were the ones that worked in the setting.
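The “Difference” plus “Brightness Calculator” idea can be sketched outside Isadora as plain frame differencing. This is a minimal Python illustration, not the actual actors; the function names, the tiny grayscale frames (lists of rows with values 0 to 255), and the threshold are all assumptions.

```python
def difference(frame_a, frame_b):
    """Per-pixel absolute difference between two grayscale frames."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def mean_brightness(frame):
    """Average pixel value of a frame."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def too_much_motion(previous, current, threshold=20.0):
    """Flag violent movement when the difference image is bright overall."""
    return mean_brightness(difference(previous, current)) > threshold


still = [[10, 10], [10, 10]]
moved = [[10, 200], [200, 10]]
print(too_much_motion(still, still))  # False
print(too_much_motion(still, moved))  # True
```

A bright difference image means many pixels changed between frames, which does not depend on tracking any particular blob.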

Most of what I have been spending my time on is an issue with interlacing. I hoped I could build something with the lenses I have, order a custom lens (they are only $12 a foot + the price to cut), or create a parallax barrier. Unfortunately, creating a high quality lens does not seem possible with the materials I have (2 of the 8″ X 10″ sample packs from Microlens), and a parallax barrier blocks more light with every added viewing angle, roughly 1 − 1/N of it for N views (2 views block 50%, 3 block 66%… 10 views block 90%). On Sunday I am going to try a patch that blends interlaced pixels to fix the problem with the lines on the screen not lining up with the lenses (it basically blends the interlaced views to align a non-integral number of pixels with the lines per inch of the lens).
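One plausible way to do that blending, sketched in Python: when a lens line covers a non-integral number of pixels, a pixel column can straddle the boundary between two views, so its value becomes a weighted mix of them. This is only an assumption about how such a blend could work, not the Isadora patch; `pitch_px` (screen pixels per lens line) and the function names are hypothetical.

```python
import math


def view_weights(x, pitch_px, n_views):
    """Fraction of pixel column x that falls under each view's strip."""
    start = x * n_views / pitch_px        # left edge of the column, in strip units
    end = (x + 1) * n_views / pitch_px    # right edge of the column
    weights = [0.0] * n_views
    k = math.floor(start)
    while k < end:
        # Overlap between the column and strip k, which shows view k % n_views.
        overlap = min(end, k + 1) - max(start, k)
        weights[k % n_views] += overlap
        k += 1
    total = end - start
    return [w / total for w in weights]


def blend_column(x, view_values, pitch_px):
    """Mix the per-view values of one pixel according to its strip overlap."""
    weights = view_weights(x, pitch_px, len(view_values))
    return sum(w * value for w, value in zip(weights, view_values))


print(view_weights(1, 2.0, 2))   # integral pitch: the column belongs to one view
print(view_weights(1, 2.5, 2))   # non-integral pitch: the column is shared
```

When the pitch is a whole number of pixels this reduces to ordinary hard interlacing; otherwise the boundary columns are softened instead of drifting out of alignment with the lens.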

Worst-case scenario… A ready-to-go lenticular monitor is $500, the lens designed to work with a 23″ monitor is $200, and a 23 inch monitor with a pixel pitch of .270 mm is about $130… One way or another, this goose is going to meet the public on 12/07/15…

Links I have found useful are…

Calculate the DPI of a monitor to make a parallax barrier.

Specs of one of the common ACCAD 24″ monitors

http://www.pcworld.com/product/1147344/zr2440w-24-inch-led-lcd-monitor.html

MIT student who made a 24″ lenticular 3D monitor.

http://alumni.media.mit.edu/~mhirsch/byo3d/tutorial/lenticular.html
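For the DPI calculation mentioned in the first link, the arithmetic is short enough to sketch in Python. The resolutions used here (1920 x 1080 for a 23″ monitor, 1920 x 1200 for the 24″) are assumptions, and the function names are made up for this example.

```python
import math


def pixel_pitch_mm(diagonal_in, width_px, height_px):
    """Pixel pitch in mm from the diagonal size and the resolution."""
    diagonal_px = math.hypot(width_px, height_px)
    pixels_per_inch = diagonal_px / diagonal_in
    return 25.4 / pixels_per_inch


def pixels_per_lens_line(lines_per_inch, pitch_mm):
    """How many screen pixels sit under one line of a lens or barrier."""
    return 25.4 / (lines_per_inch * pitch_mm)


print(round(pixel_pitch_mm(23, 1920, 1080), 3))  # assumed 23 inch panel
print(round(pixel_pitch_mm(24, 1920, 1200), 3))  # assumed 24 inch panel
print(round(pixels_per_lens_line(10, 0.265), 2)) # a 10 lines-per-inch lens
```

The last number is rarely a whole number of pixels, which is the alignment problem with off-the-shelf lenses.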

Luma to Chroma Devolves into a Chromadepth Shadow-puppet Show

Posted: October 30, 2015 Filed under: Jonathan Welch | Tags: Jonathan Welch

I was having trouble getting eyes++ to distinguish between a viewer and someone behind the viewer, so I converted the luminance to chroma with the attached actor and used “The Edge” to create a mask to outline each object, so eyes++ would see them as different blobs. Things quickly devolved into making faces at the Kinect.

The raw video is pretty bad. The only resolution I can get is 80 X 60… I tried adjusting the input, and the image in the OpenNI Streamer looks to be about 640X480, and there are only a few adjustable options, and none of them deal with resolution… I think it is a problem with OpenNI streaming.

But the depth was there, and it was lighting independent, so I am working with it.

The first few seconds are the patch I am using (note the outline around the objects), the rest of the video is just playing with the pretty colors that were generated as a byproduct.

Final Project Progress

Posted: October 27, 2015 Filed under: Jonathan Welch, Uncategorized

I don’t have a computer with camera inputs yet, so I have been working on the 3D environment and interlacing. Below is a screenshot with the operator interface and a video testing the system. It is only a test, so the interlacing is not to scale and is oriented laterally. The final project will be on a screen that is mounted in portrait. I hoped to do about 4 interlaced images, but the software is showing a serious lag with 2, so it might not be possible.

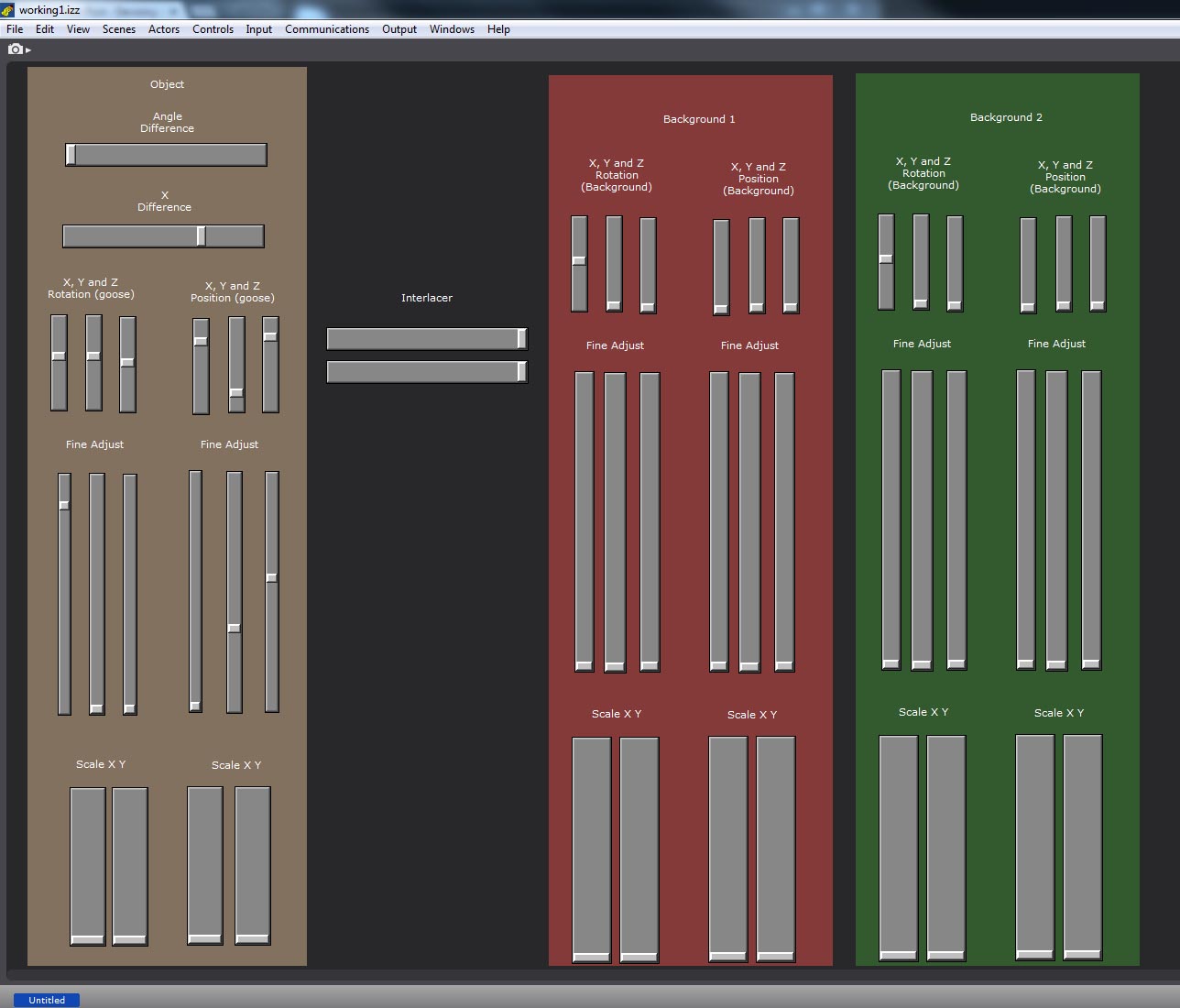

Operator Interface

(used to calibrate the virtual environment with the physical)

The object controls are on the Left (currently 2 views): Angle Difference (the relative rotation of object 1 vs object 2), X difference (how far apart the virtual cameras are), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

The Backdrop controls are on the Right (currently 2 views, I am using mp4 files until I can get a computer with cameras); Angle Difference (the relative rotation of screen 1 vs screen 2), X/Y/Z rotation with a fine adjust, and X/Y/Z translation with a fine adjust.

In the middle is the interlace control (width of the lines and distance between them; if I can get more than 1 perspective to work, I will change this to number of views and width of the lines).

Video of the Working Patch

0:00 – 0:01 changing the relative angle

0:01 – 0:04 changing the relative x position

0:04 – 0:09 changing the XYZ rotation

0:09 – 0:20 adjusting the width and distance between the interlaced lines

0:20 – 0:30 adjusting the scale and XYZ YPR of backdrop 1

0:30 – 0:50 adjusting the scale and XYZ YPR of backdrop 2

0:50 – 1:00 adjusting the scale and XYZ YPR of the model

I have a problem as the object gets closer and farther from the camera… One of the windows is a 3D projector, and the other is a render on a 3D screen with a mask for the interlacing. I am not sure if replacing the 3D projector with another 3D screen with a render on it would add more lag or not, but I am already approaching the processing limits of the computer, and I have not added the tuba or the other views… I could always just add XYZ scale controls to the 3D models, but there is a difference between scale and zoom, so it might look weird.

The difference between zooming in and trucking (getting closer) is evident in the “Hitchcock Zoom”.
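The geometry behind that effect is compact: a camera with horizontal field of view θ at distance d frames a width of 2·d·tan(θ/2). Keeping the subject the same size while moving the camera means changing the field of view to match, which changes the perspective of everything behind it. A small Python sketch (the function name and the sample numbers are only for illustration):

```python
import math


def fov_for_constant_framing(frame_width, distance):
    """Field of view (degrees) that keeps frame_width filling the shot."""
    return math.degrees(2 * math.atan(frame_width / (2 * distance)))


# Same 2-unit-wide subject, camera trucked back from 1 to 4 units away.
for distance in (1, 2, 4):
    print(distance, round(fov_for_constant_framing(2, distance), 1))
```

Scaling a model changes its size without touching the field of view or the distance, so it does not reproduce this change in perspective.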

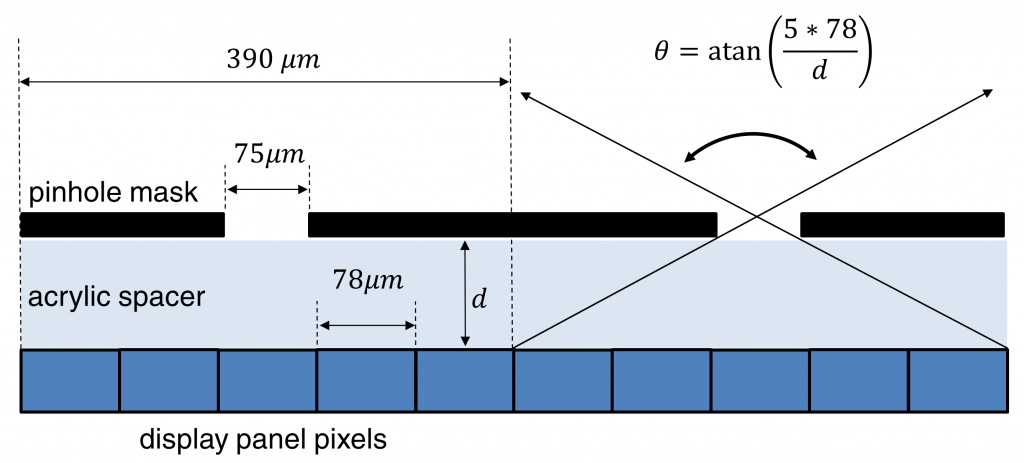

A Diagram with the Equations for Creating a Parallax Barrier

The First Tests

The resolution is very low because the only lens I could get to match a monitor was 10 lines per inch, lowering the horizontal resolution to just 80 pixels on the 8″ x 10″ lens I had. The first is 9 pre-rendered images. The 2nd is only 3 because Isadora started having trouble. I might pre-render the goose and have the responses trigger a loop (like the old Dragon’s Lair game). The drawback: the character would not be able to look at the person interacting. But with only 3 possible views, it might not be apparent that he was tracking you.

Jonathan PP3 Patch and Video

Posted: October 12, 2015 Filed under: Pressure Project 3, Uncategorized | Tags: j, Jonathan Welch

https://youtu.be/HjSSyEbz68Y

CLASS_PP3 CV Patch_151007_1.izz

Coherence???

Posted: October 6, 2015 Filed under: Uncategorized

I was thinking we might want our scenes to be connected… I have a patch that turns on an outside night scene with crickets chirping and animals moving around in the woods… There is also a little parallax as the “performer” moves around in the space (the 33% X 50% piece of the stage before the performer triggers another patch/scene/whatever), but I could adapt it if we have a common vision…

The performer’s position is represented by the moving H (Horizontal) and V (Vertical) readout. The numbers would not be visible in the projection.

The trigger position is in the lower left corner.

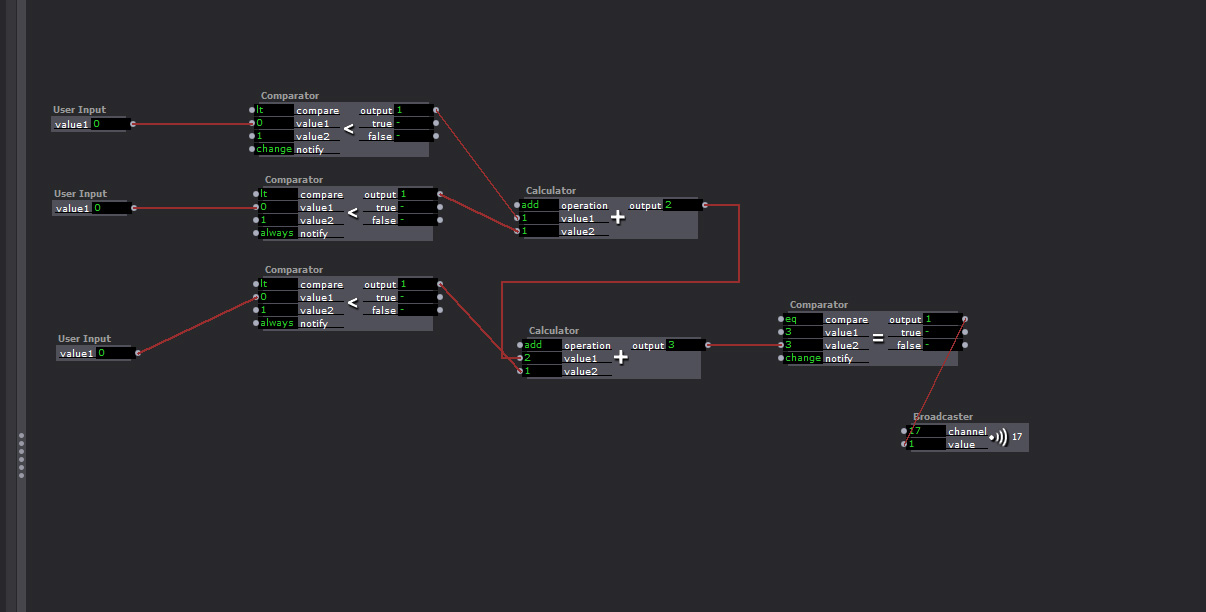

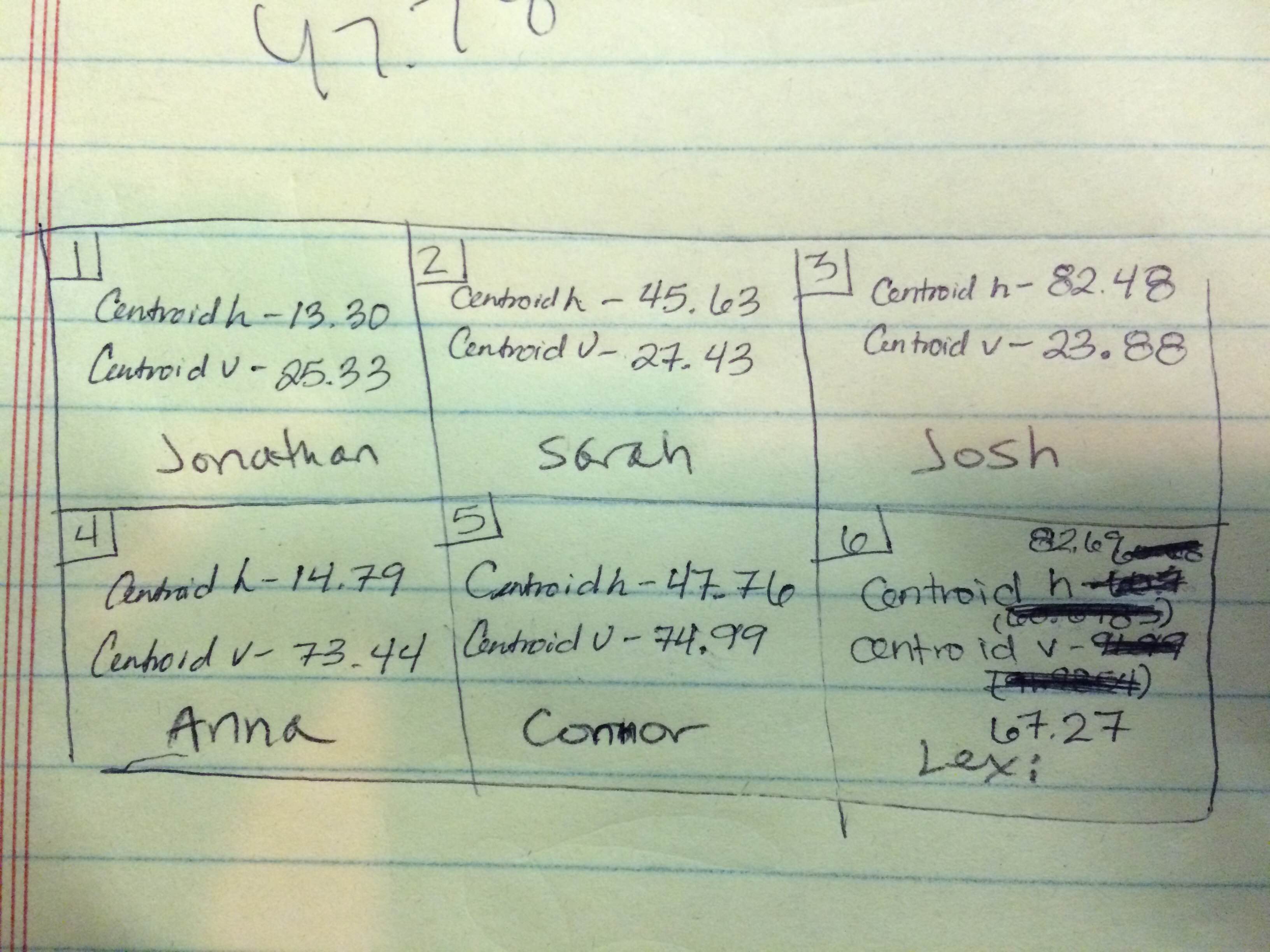

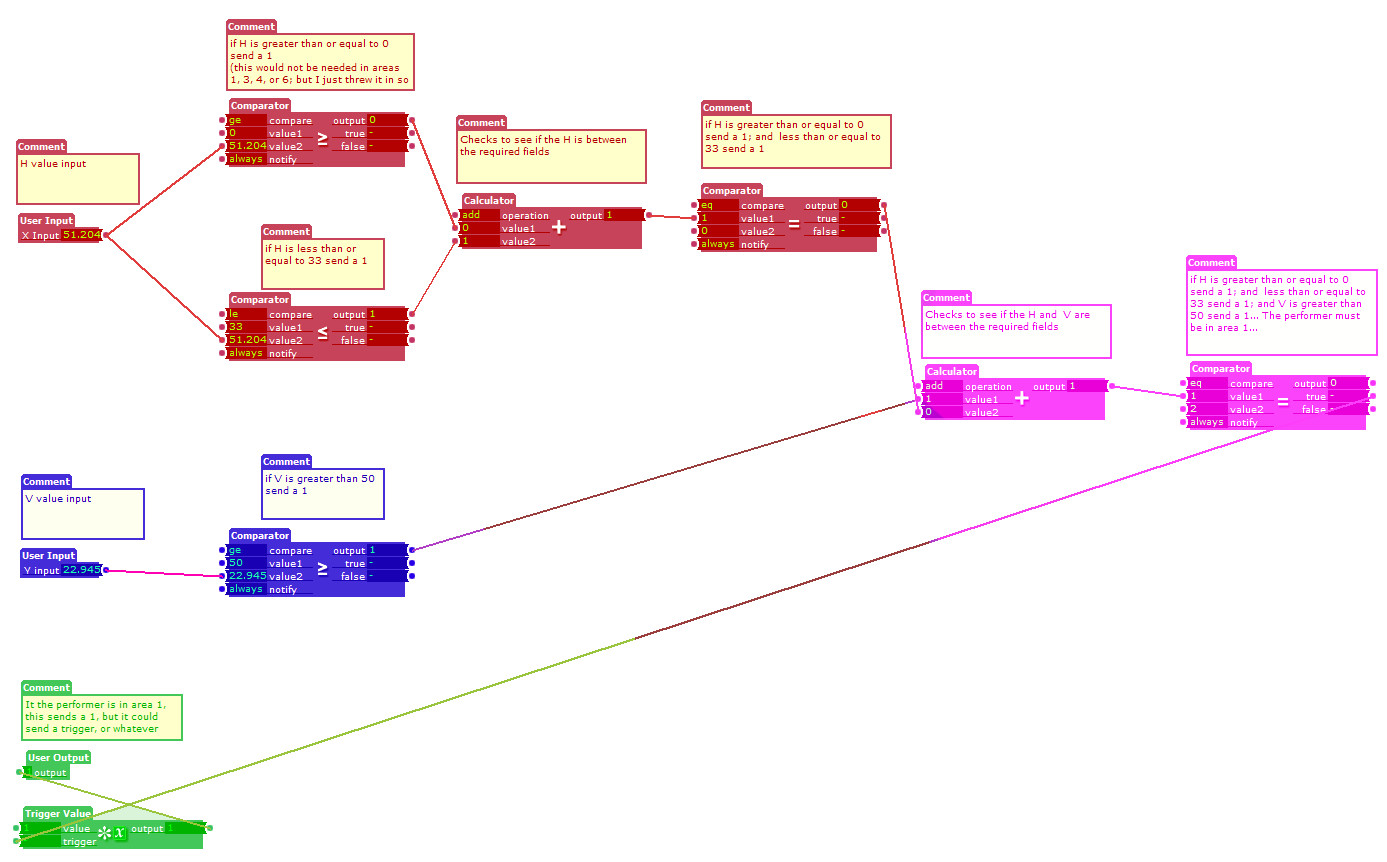

The “Sextant” and a patch that I was using to activate my Scene/Actor when the performer was in area 1

Posted: October 6, 2015 Filed under: Uncategorized

I am guessing the area’s range is 100 X 100 in both directions, so I divided it into 33/33/34 W and 50/50 H, and wrote a user actor to define area 1. This could be used to activate a scene or turn on/off projectors.

The red quantifies the H axis, blue the V, purple is the combined, and green is the output with a value.
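The same test can be written out as a short Python sketch. It assumes positions run from 0 to 100 on each axis and that area 1 is the first third of the width in the first half of the height; the numbering of the other areas and the function names are made up for this example.

```python
def area_of(h, v):
    """Map a position (0-100 on each axis) to one of six areas, 1 to 6."""
    if not (0 <= h <= 100 and 0 <= v <= 100):
        raise ValueError("position outside the assumed 0-100 range")
    column = 0 if h < 33 else 1 if h < 66 else 2   # 33 / 33 / 34 split
    row = 0 if v < 50 else 1                       # 50 / 50 split
    return row * 3 + column + 1


def in_area_1(h, v):
    """True while the performer is inside area 1."""
    return area_of(h, v) == 1


print(in_area_1(10, 10))  # True
print(in_area_1(40, 10))  # False
```

The boolean result is what would activate a scene or switch a projector on or off.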

Selecting Scenes

Posted: October 4, 2015 Filed under: Jonathan Welch

https://vimeo.com/141210159

I put together an animatic for my first idea for the scene selection.

The icons are purely arbitrary; we would probably want something more representational of the concept behind the individual scenes.

I am totally flexible on the idea, and it is dependent on being able to get reliable X Y positional data on the performer from the overhead camera…