PP1: Breathing the text – Tamryn McDermott

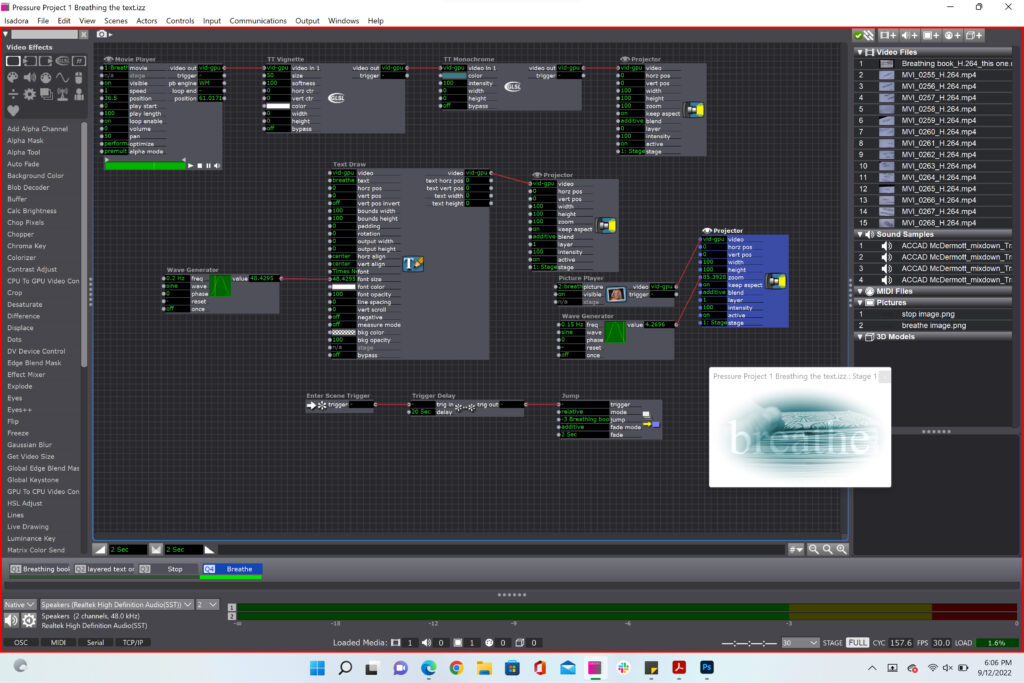

Posted: September 12, 2022 Filed under: Pressure Project I, Tamryn McDermott | Tags: Pressure Project, Pressure Project One

For this project, I decided to use some of the digital assets I created (video and audio) for a project I did in the motion lab last spring. I did not know Isadora at the time and so this provided me the opportunity to set up in Isadora from scratch and play with the assets in a new way without relying on someone else to set it up for me.

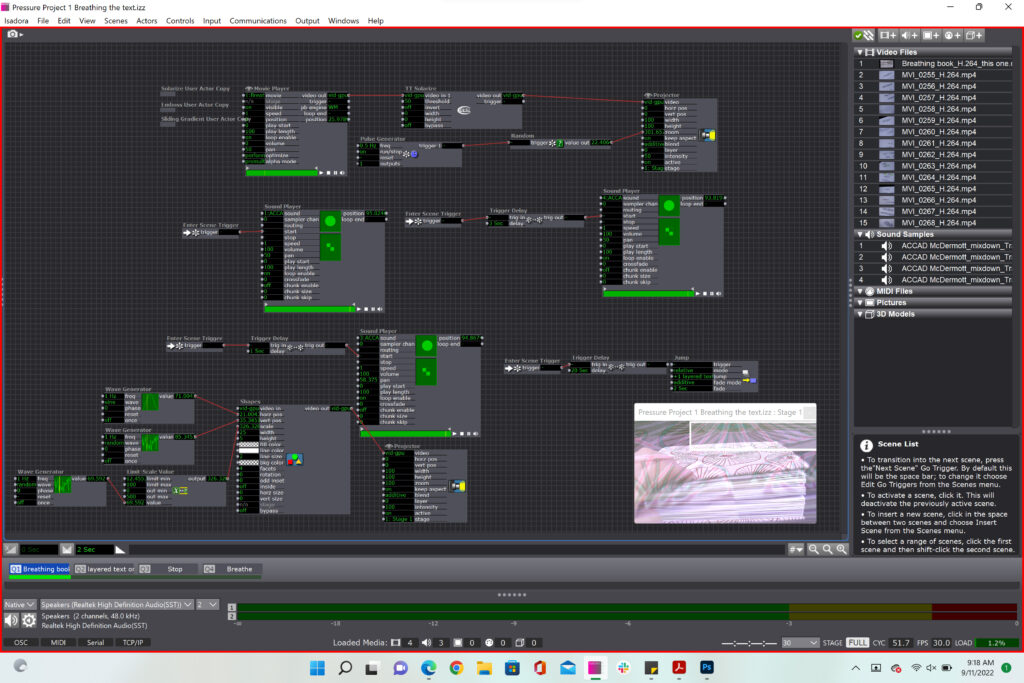

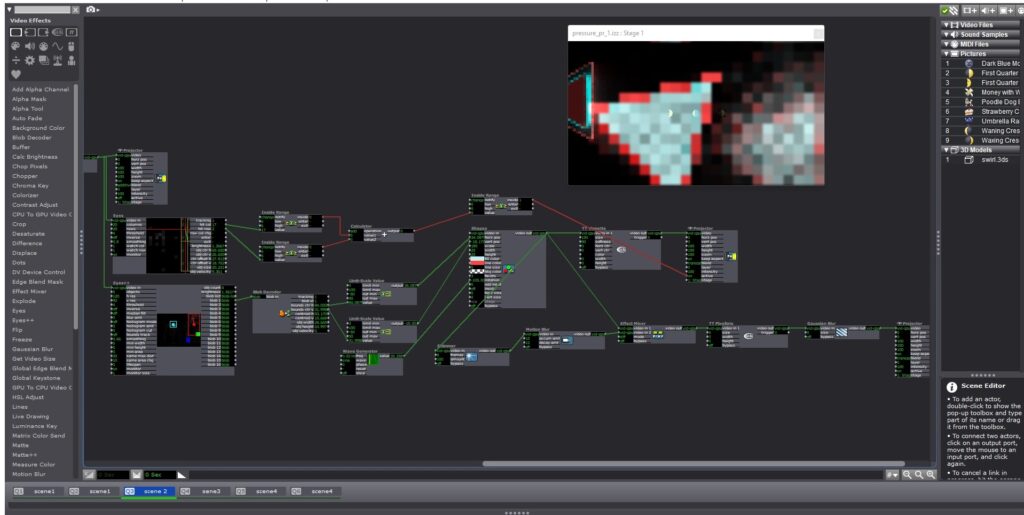

Initial structure and approach: four scenes, use Text Draw actor, play with integrating video, text, and audio, use pacing in an attempt to affect and engage viewer

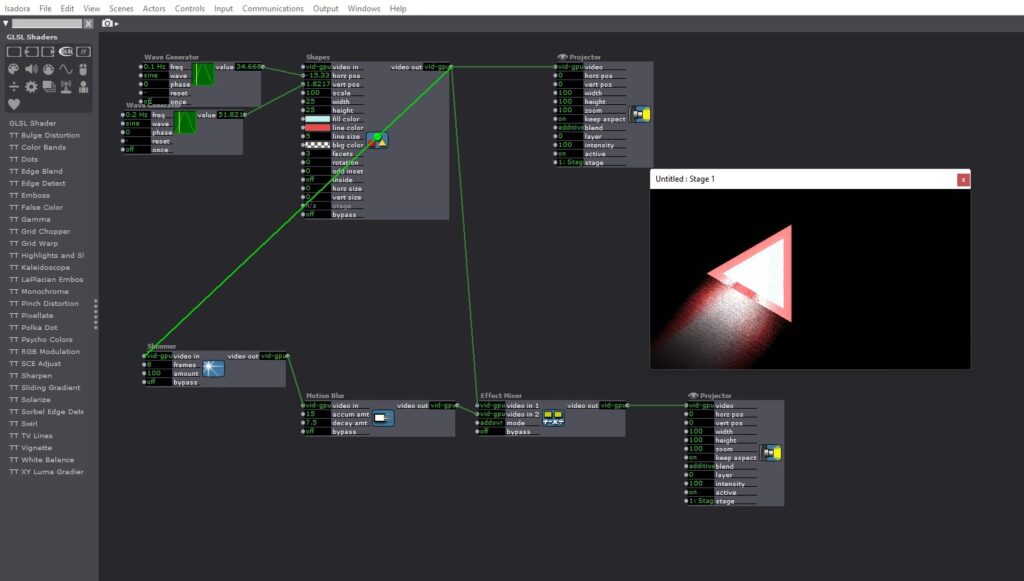

Scene 1: Introduce breathing book structure and layer in shape actors with random movement and scale

In scene one, I had trouble getting the different shape actors to show color variations in the final output, even though I had them set up within different user actors. The color remained white no matter what in the final stage display. The layering and actors worked well here, although I would have liked to include more randomness in the scene and possibly integrate some of the new randomness techniques based on video sensors.

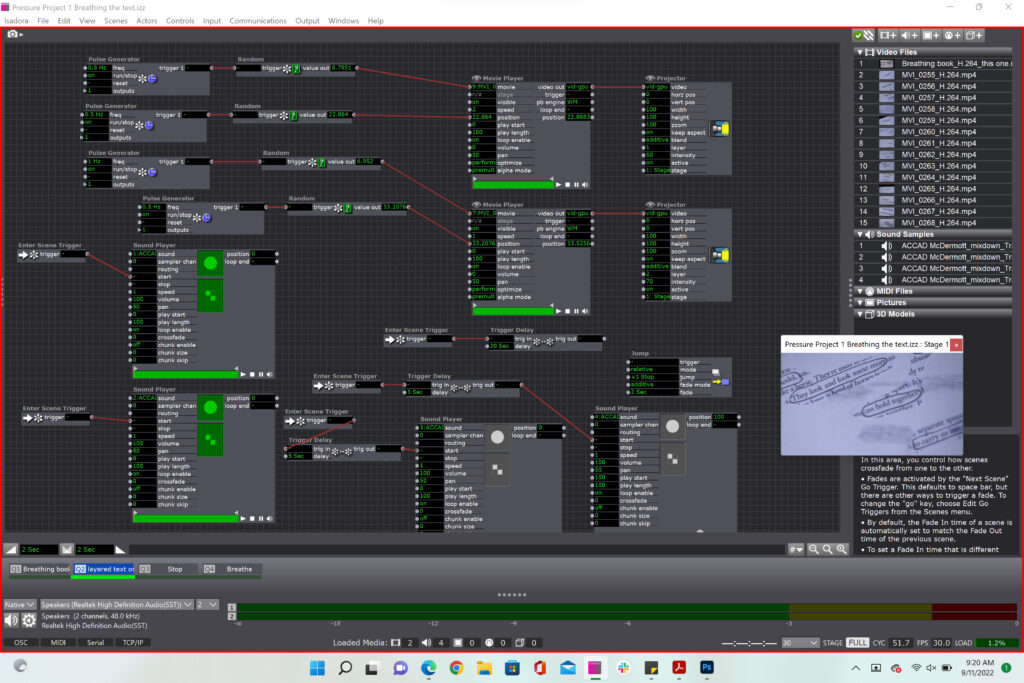

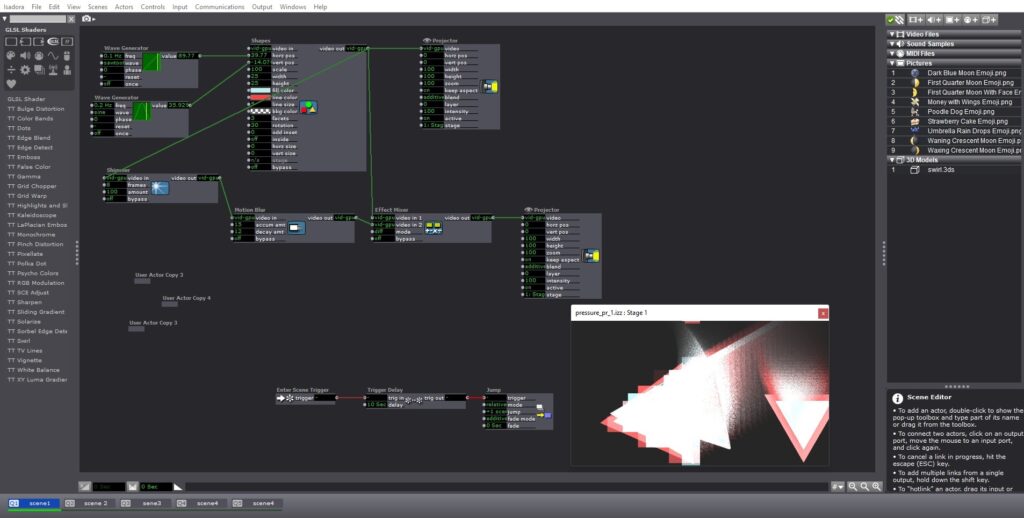

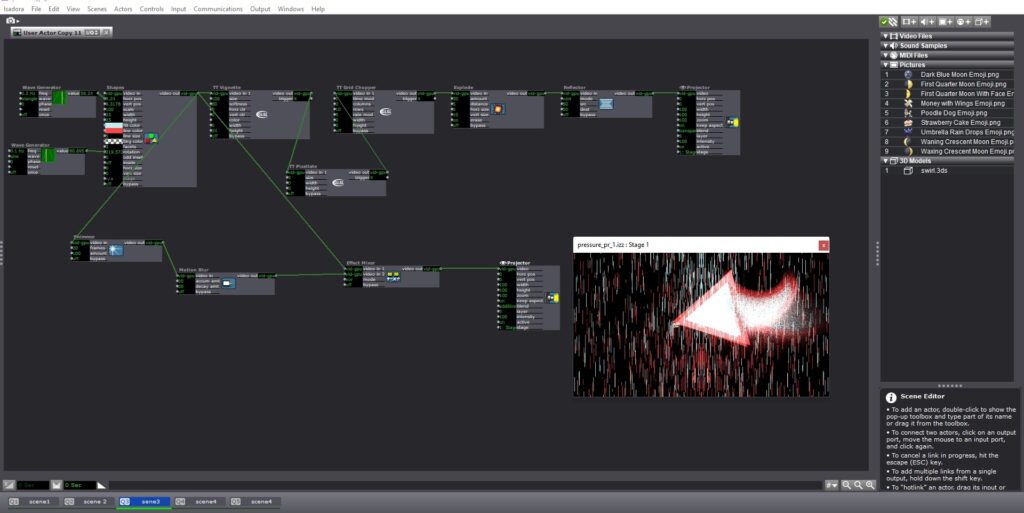

In this scene I used the random actor to select between 14 different videos that would be layered in pairs and change positions. However, the random actor favored the number 15 for the video selection field, so most of the time video 15 layered over itself from varying starting positions. Again, with more time, I would have introduced another method of selecting random numbers between 2 and 15. I think it would have been cool to have the audio files trigger this in some way, but I would have to think about this more to figure out the best approach.
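One way to think about the selection problem described above, outside of Isadora, is sampling without replacement. The following is a minimal Python sketch and not Isadora's Random actor: it assumes the clips are numbered 2 through 15 as in the post, and the function name `pick_video_pair` is hypothetical.

```python
import random

FIRST_VIDEO = 2   # lowest clip number mentioned in the post
LAST_VIDEO = 15   # highest clip number mentioned in the post


def pick_video_pair(rng=random):
    """Return two different clip numbers in [FIRST_VIDEO, LAST_VIDEO]."""
    # sample() draws without replacement, so the two picks always differ
    return tuple(rng.sample(range(FIRST_VIDEO, LAST_VIDEO + 1), 2))


if __name__ == "__main__":
    for _ in range(5):
        print(pick_video_pair())
```

Because the two numbers are drawn together, a clip can never be layered over itself, which is the behavior the scene was aiming for.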

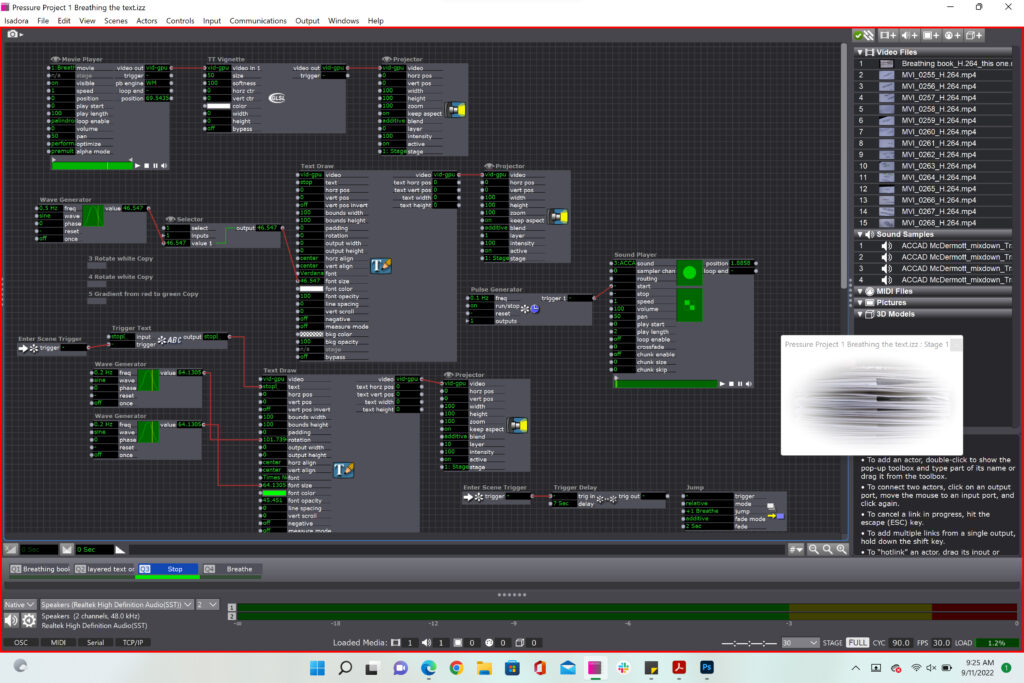

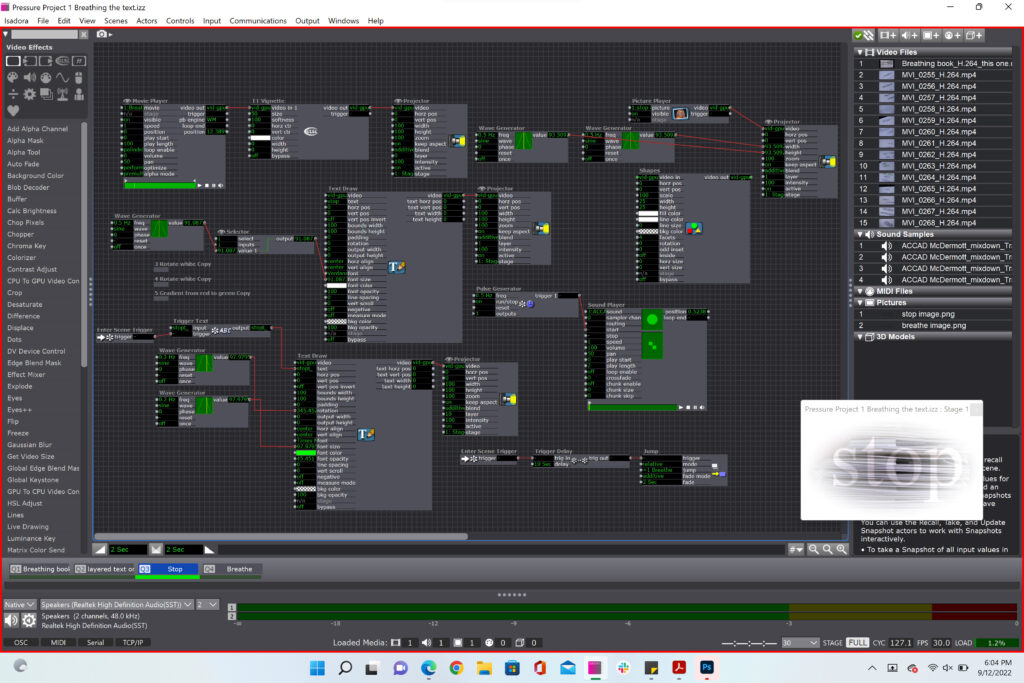

In the screenshot below the text draw actors are not working. I had to create workarounds since none of these actors would show up when I exited the scene and returned. There was a bug that prevented it from working on both my laptop and the ACCAD lab computers. Only the video would display on the stage. As a workaround, I generated a .png of the text “stop” and then used that as a shape actor and animated it to correspond with the repeated “stop” audio file. Below are the screenshots from the first and second patches, the second one being the working one.

Below is the working scene with the substituted image of the word “stop” I wanted to animate.

Finally, I wanted to end on a calming note after the stop scene. Here is the final scene, again with the text inserted as an animated image since the text draw actor did not work for me.

I learned a lot from this project and hope to be able to get a response from the Isadora team on the text draw actor bug I ran into. I also want to experiment with sensors and introduce more randomness into my work.

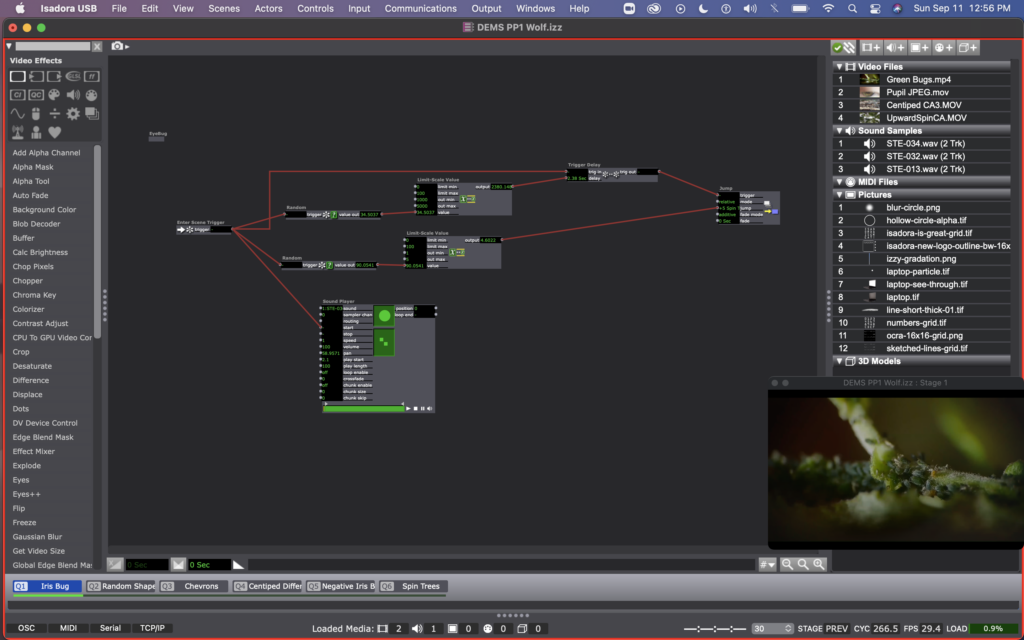

PP1 Playing with Randomness – Mollie Wolf

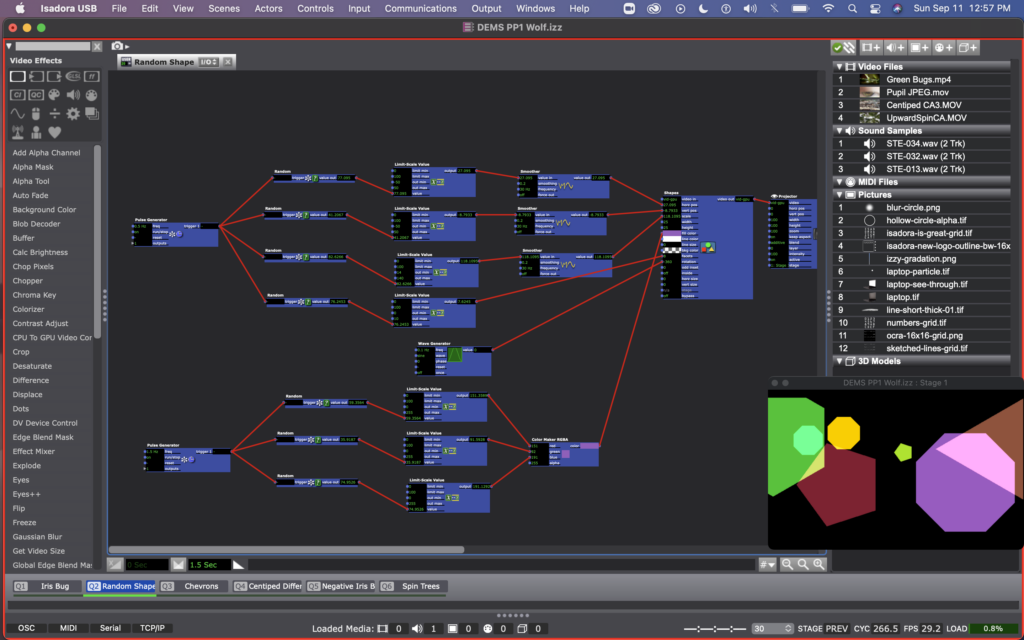

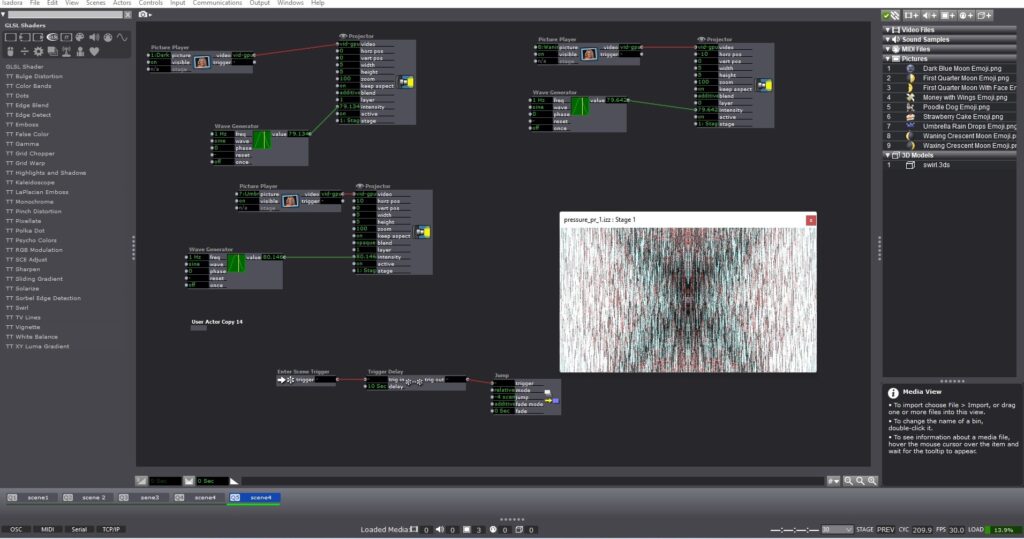

Posted: September 11, 2022 Filed under: Uncategorized

For this pressure project, I decided to continue using the random shapes demo we worked on together as a class, layering onto it and making it more complex.

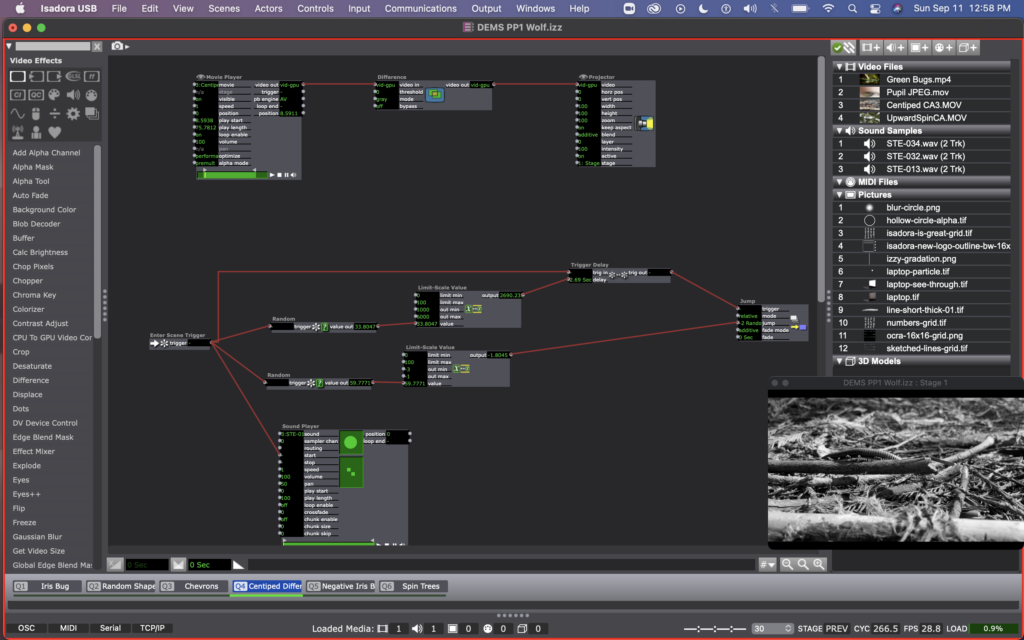

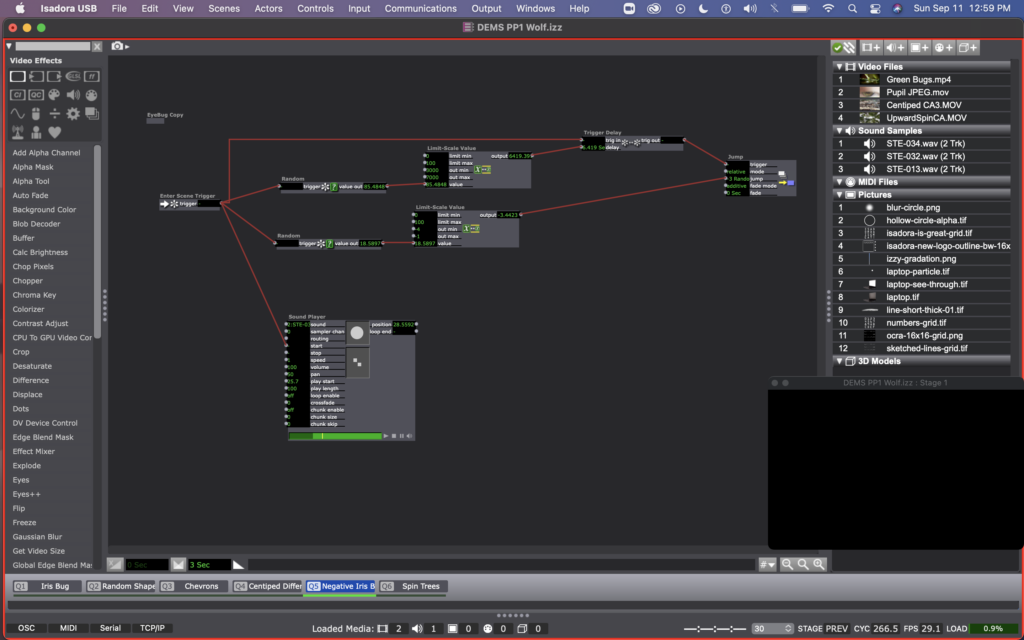

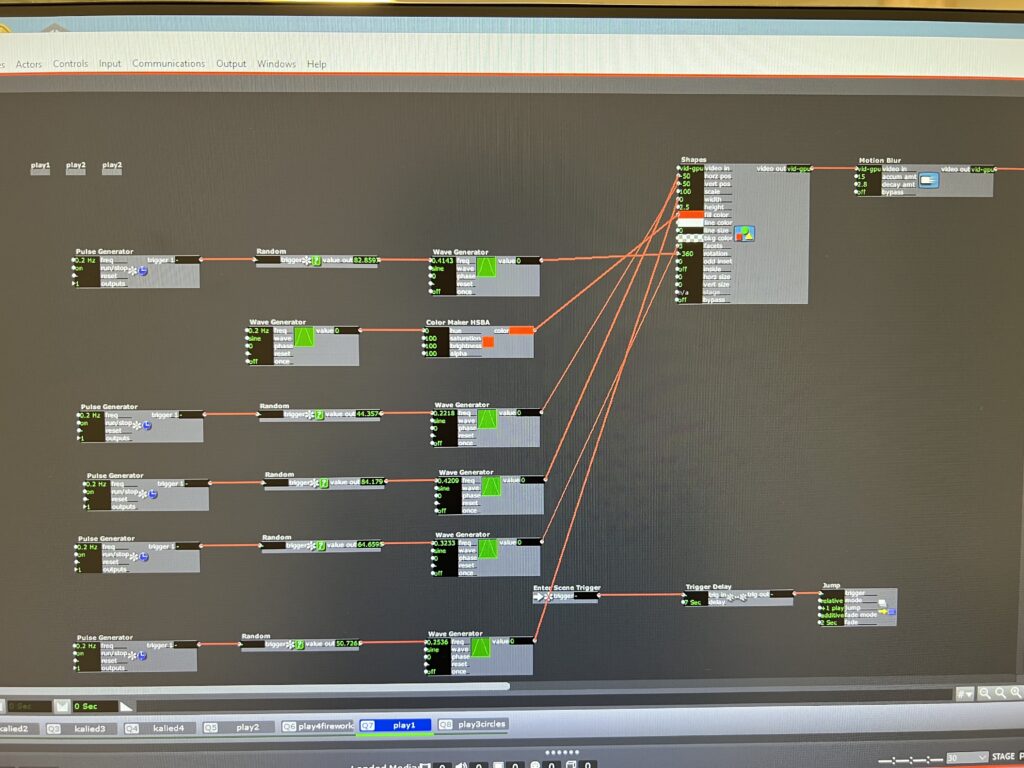

I leaned into the randomness prompt quite a bit, and decided to use random actors to decide how long the patch would stay in each scene, as well as which scene it would jump to next. You can see this clearly in this photo of Scene 1, where one random actor is determining the delay time on a trigger delay actor, and another random actor is hooked up to the jump location on the jump actor. I repeated this process for every scene in order to make it a self generating patch that cycles through the scenes endlessly in randomized order.
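The self-generating loop described above can be sketched in Python to show the logic. This is an illustration under stated assumptions, not how Isadora implements its actors: the delay range of 5 to 20 seconds is hypothetical, the scene count of 6 comes from the scenes described in this post, and excluding the current scene from the jump choices is an assumption (the post does not say whether a scene can jump to itself).

```python
import random


def next_step(current_scene, scene_count, min_delay, max_delay, rng=random):
    """Pick how long to stay in the current scene and which scene comes next."""
    # the "random actor -> trigger delay" step
    delay = rng.uniform(min_delay, max_delay)
    # the "random actor -> jump" step; skip the current scene so the patch moves on
    choices = [s for s in range(1, scene_count + 1) if s != current_scene]
    return delay, rng.choice(choices)


def simulate(steps, scene_count=6, min_delay=5.0, max_delay=20.0, seed=None):
    """Return a list of (scene, seconds_in_scene, next_scene) tuples."""
    rng = random.Random(seed)
    scene = 1
    schedule = []
    for _ in range(steps):
        delay, nxt = next_step(scene, scene_count, min_delay, max_delay, rng)
        schedule.append((scene, round(delay, 1), nxt))
        scene = nxt
    return schedule


if __name__ == "__main__":
    for row in simulate(8):
        print(row)
```

Each scene only needs to know its own delay and its own jump target, which is why repeating the same two-actor pattern in every scene is enough to keep the whole patch cycling.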

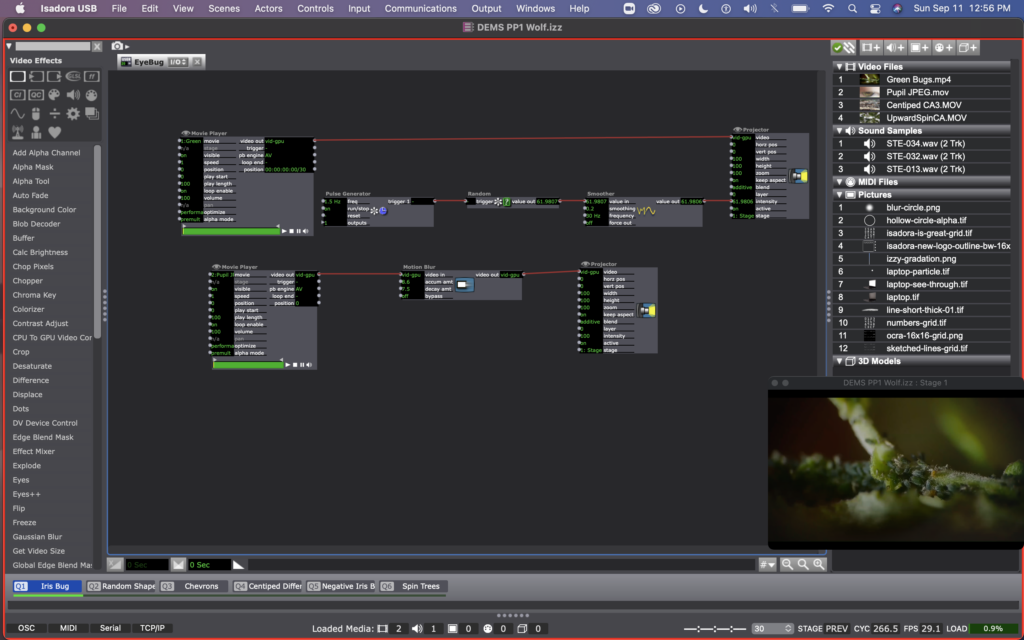

In scene 1, I was playing with the videos that Mark included in his guru sessions. I used a pulse generator, random actor, and smoother to have the intensity of the bugs video fade in and out. Then I used a motion blur actor on the pupil video to exaggerate the trippy vibe that was happening every time they blinked or refocused their eye.
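The pulse generator, random actor, and smoother chain can be approximated in a few lines of Python. This is a sketch only: the exact algorithm of Isadora's Smoother actor is not described in this post, so simple exponential smoothing is assumed here, and the 0 to 100 intensity range, the tick counts, and the name `smoothed_random_levels` are all hypothetical.

```python
import random


def smoothed_random_levels(ticks, pulse_every, smoothing=0.1, seed=None):
    """Return one intensity value (0-100) per tick.

    Every `pulse_every` ticks a new random target is chosen; on every tick
    the output moves a fraction `smoothing` of the way toward the target.
    """
    rng = random.Random(seed)
    target = 0.0
    level = 0.0
    levels = []
    for tick in range(ticks):
        if tick % pulse_every == 0:
            target = rng.uniform(0.0, 100.0)   # the "random actor" step
        level += (target - level) * smoothing  # the "smoother" step (assumed)
        levels.append(level)
    return levels


if __name__ == "__main__":
    for value in smoothed_random_levels(60, pulse_every=20, seed=1)[::5]:
        print(round(value, 1))
```

Without the smoothing step the intensity would snap to each new random value; with it, the video fades toward each target instead.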

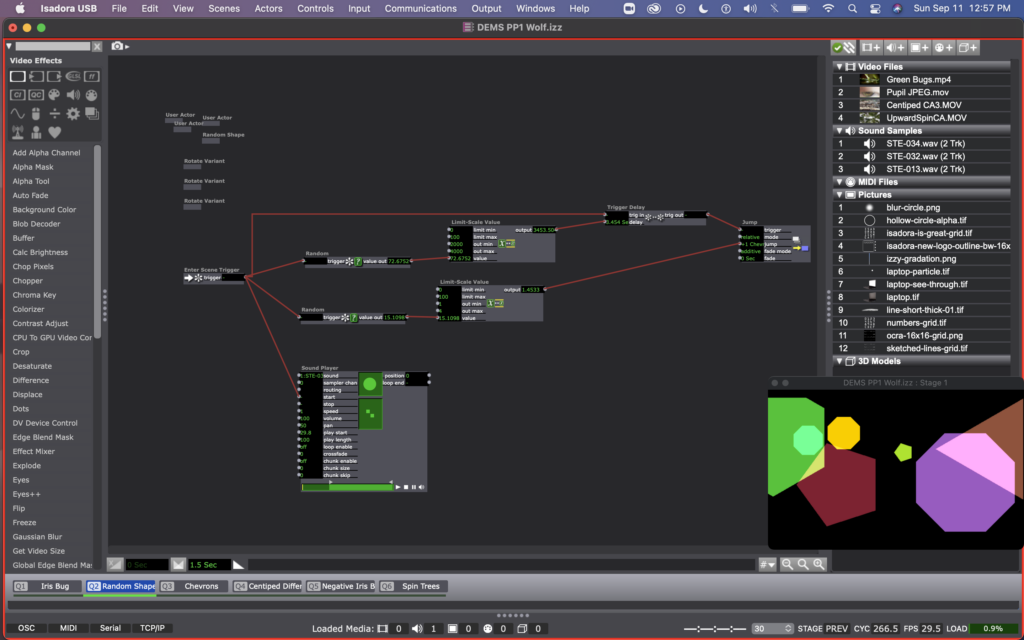

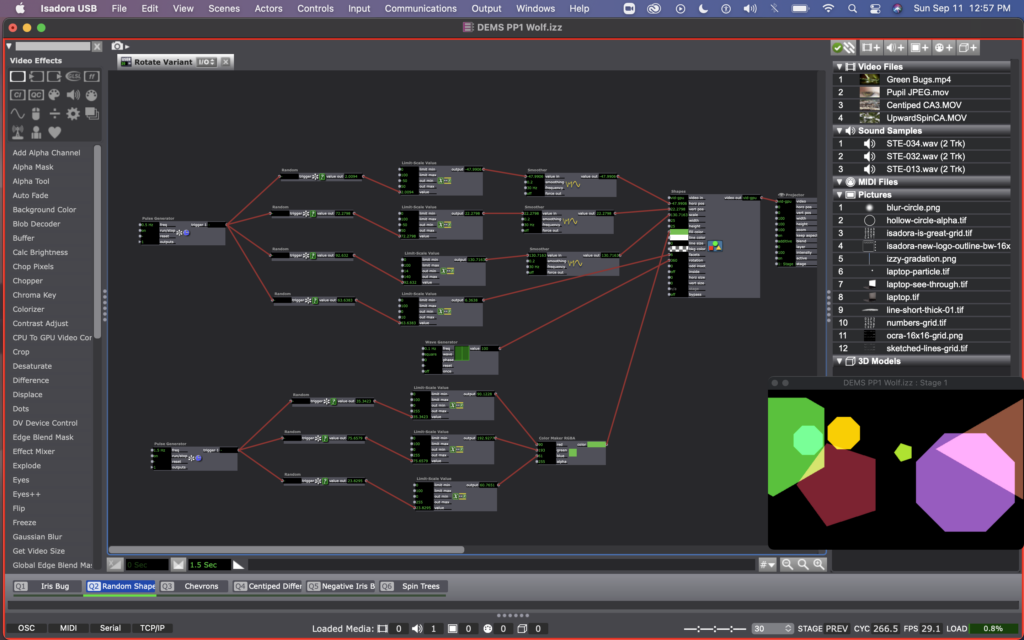

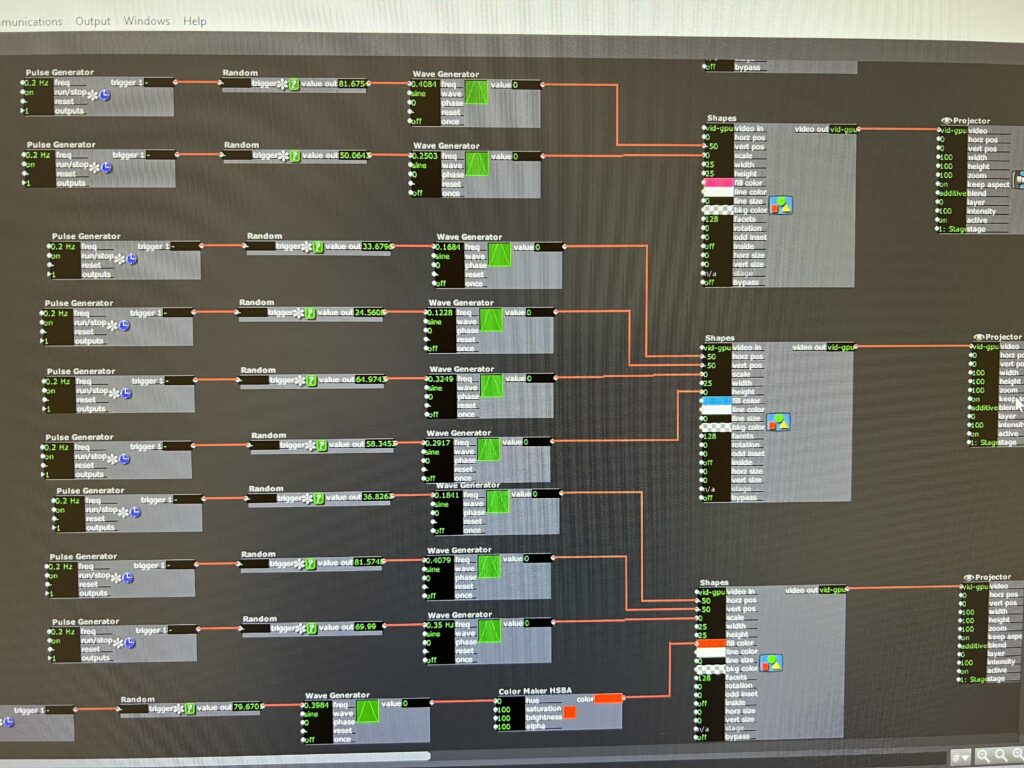

I kept the shape user actors I was playing with in class last week in two of my scenes (Q2 & Q3). With these shapes I was experimenting with a variety of things, all still determined by random actors. In the shapes created by the user actor called “Random Shape,” random actors determine the facet number, color, and position of the shapes, and a wave generator determines their rotation. I like the effect of the smoother here, because the shapes look like they are moving rather than jumping from place to place. At one point I noticed that they were all rotating together (since they all had the same wave generator affecting them), so I created a variant of this user actor, called “Rotation Variant,” with a different wave generator (square rather than sine).

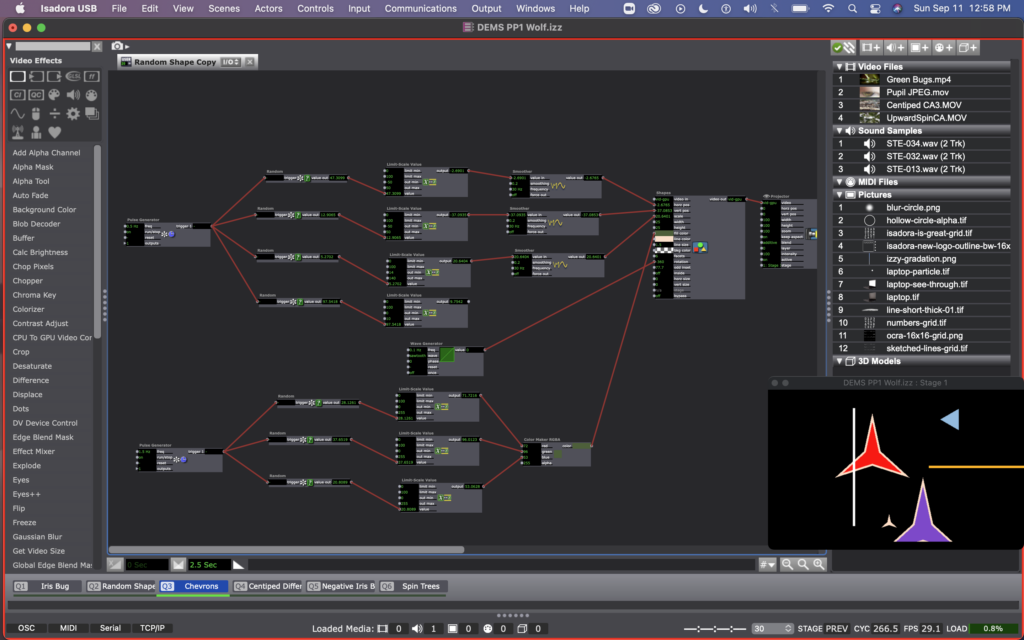

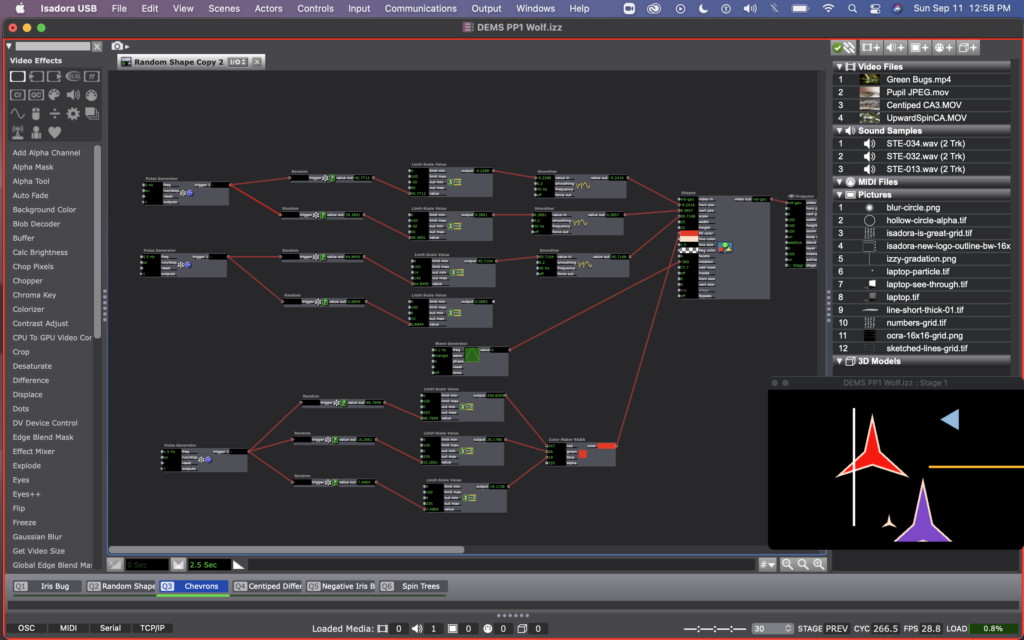

In scene 3, I edited these user actors further. I made a variation of this user actor, called “Random Shape Copy,” in which all the shapes have 6 facets (making a chevron-esque shape) and use the sawtooth wave generator for rotation. So that not all the shapes in this scene were rotating in unison, I created a copy of this user actor, called “Random Shape Copy 2,” that used a triangle wave generator for rotation instead. I also added a couple of static lines with shape actors in this scene so that the shapes would look like they were crossing thresholds as they moved across the stage.
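To see why swapping the wave type changes how the rotation feels, the four shapes mentioned in this post (sine, square, sawtooth, triangle) can be written as simple functions. This is a hedged Python sketch, not Isadora's Wave Generator: the normalized 0 to 1 output, the 4-second period, and the names `wave` and `rotation_degrees` are assumptions made for illustration.

```python
import math


def wave(shape, phase):
    """Return a value in [0, 1] for a phase measured in cycles."""
    p = phase % 1.0
    if shape == "sine":
        return 0.5 + 0.5 * math.sin(2 * math.pi * p)
    if shape == "square":
        return 1.0 if p < 0.5 else 0.0          # snaps between two values
    if shape == "triangle":
        return 2 * p if p < 0.5 else 2 * (1 - p)  # ramps up, then back down
    if shape == "sawtooth":
        return p                                 # ramps up, then resets
    raise ValueError(f"unknown wave shape: {shape}")


def rotation_degrees(shape, seconds, period=4.0):
    """Map a wave onto a 0-360 degree rotation (period is hypothetical)."""
    return 360.0 * wave(shape, seconds / period)


if __name__ == "__main__":
    for shape in ("sine", "square", "triangle", "sawtooth"):
        print(shape, [round(rotation_degrees(shape, t), 1) for t in range(5)])
```

Shapes driven by different waves reach different angles at the same moment, which is what keeps them from rotating in unison.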

Once I figured out how to jump between scenes, I decided to experiment with some footage I have for my thesis. You can see me starting this in scene 4, where I used a difference actor to create an X-ray-esque version of a video of a centipede crawling. I also started to add sound to the scenes at this point (even going back and adding sound to my previous 3 scenes as well). The sound is triggered by the enter scene trigger and has a starting point that is individual for each scene (determined by the arrows on the sound player actor).

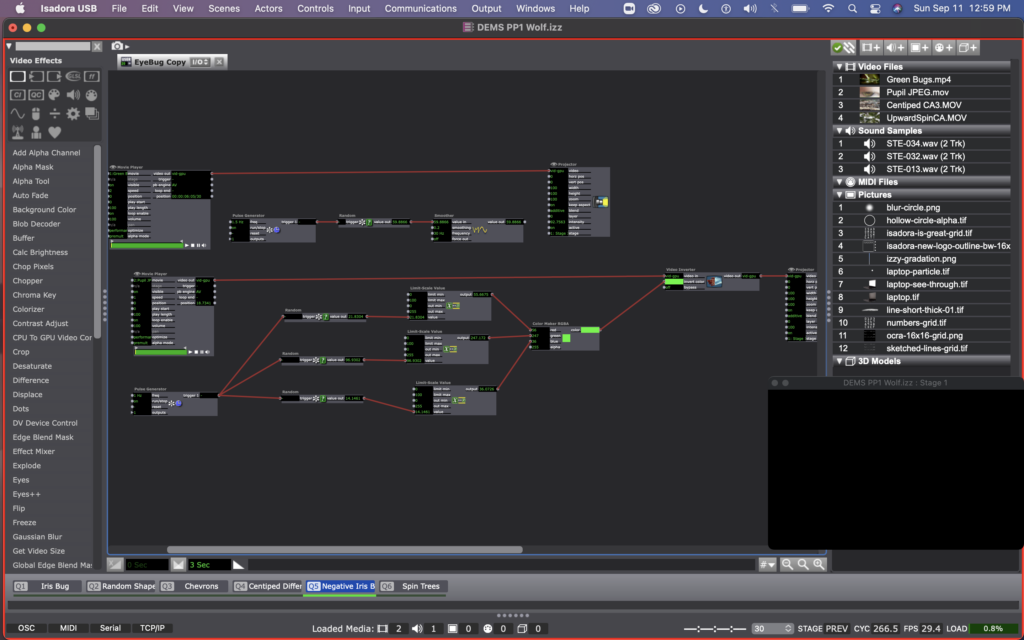

For scene 5, I copied the user actor I created in scene 1, calling the copy “EyeBug Copy.” Here you can see that I inserted a video inverter between the movie player and projector for the iris video. Then, I used random actors and a pulse generator to determine and regularly change the invert color. I added a sound file here – the same that I used in other scenes, but set the start location for a sound bit that mentions looking into the eyes of a shrieking macaque, which I thought was an appropriate pairing for a colorized video of an eye.

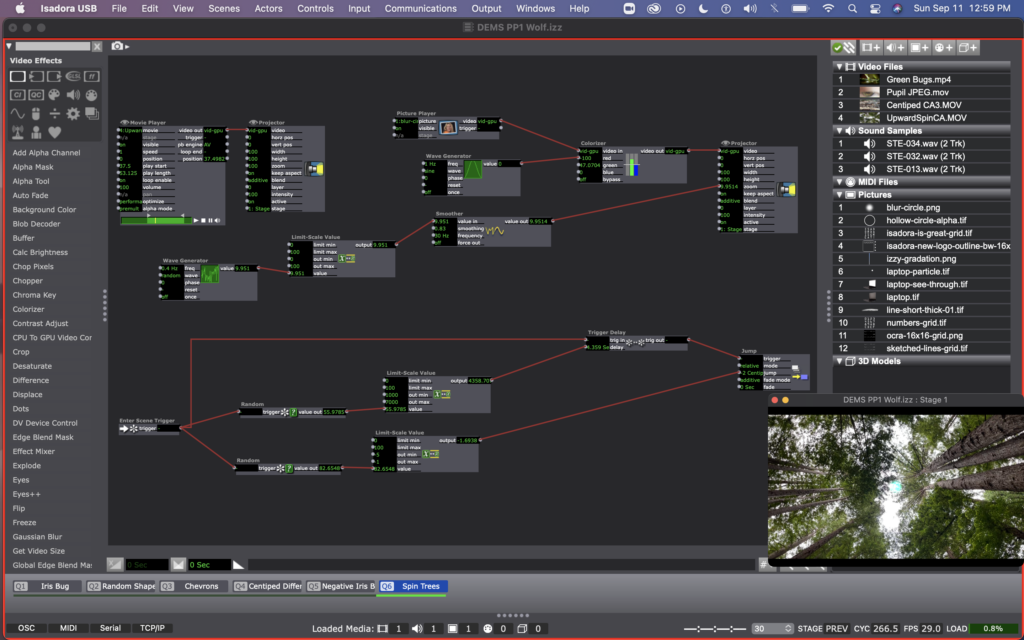

For scene 6, I have two video layers happening. One is simply a movie player and a projector, looping a video I took while spinning and looking up at the sky in the middle of the redwoods this summer. I wanted it to feel a little more disorienting, or even to make it feel like there was some outside force here, so I added a picture player and used the blurry circle that Mark included in the guru sessions. I used a random wave generator, limit-scale value, and smoother on the projector zoom input to make the blurry circle grow and shrink. Then I used a wave generator and a colorizer to have the circle change between yellows and blues.
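The limit-scale step in the chain above amounts to clamping a value and remapping it linearly to a new range. The following Python sketch shows that arithmetic under stated assumptions: the function name `limit_scale` and the example zoom range of 80 to 120 are hypothetical, and this is an illustration of the idea rather than the actor's actual implementation.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then map it linearly to the output range."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


if __name__ == "__main__":
    # a wave value of 0-100 remapped to a (hypothetical) zoom range of 80-120
    for wave_value in (0, 25, 50, 100, 130):
        print(wave_value, "->", limit_scale(wave_value, 0, 100, 80, 120))
```

Restricting the output range this way keeps the circle growing and shrinking within a chosen band instead of collapsing to nothing or filling the whole stage.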

Here’s a video of what it ended up looking like once complete:

I enjoyed sharing this with the class. It was mostly just an experiment, or play with randomness, but I think that a lot of meaning could be drawn out of this material, though that wasn’t necessarily my intention.

Of course everyone laughed at the sound bit that started with “Oh shit…” – it’s funny that curse words will always have that effect on audiences. It feels like a bit of a cheap trick to use, but it definitely got people’s attention.

Alex said that it felt like a poetic generator – the random order and random time in each scene, especially matched with the voices in the sound files, made for a poetry-esque scene that makes meaning as the words and images follow one another in different orders. I hadn’t necessarily intended this, but I agree, and it’s something I’d like to return to or incorporate into another project in the future.

PP1 documentation + reflection

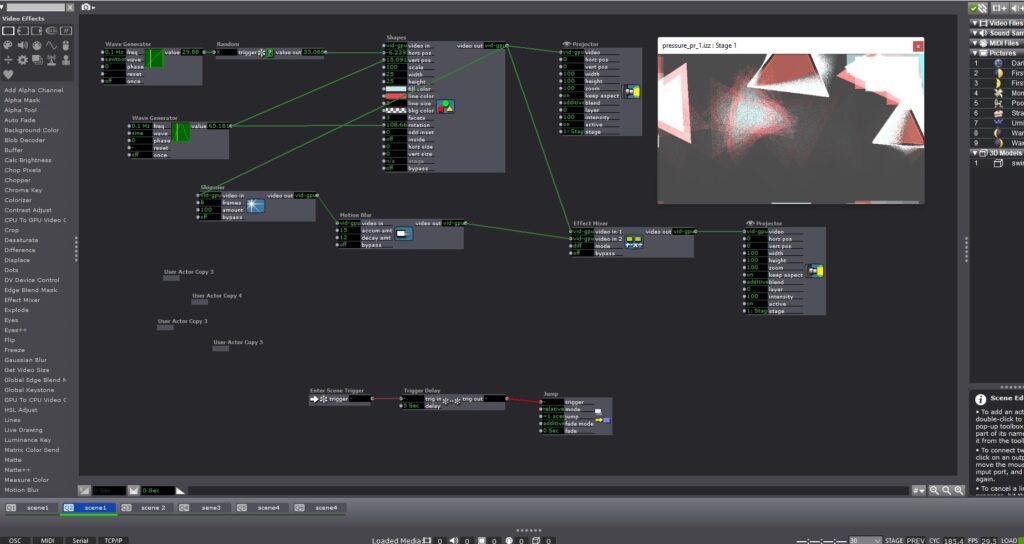

Posted: September 10, 2022 Filed under: Uncategorized

I took this project as an opportunity to experiment with what I have learned about the program so far and to scratch the surface of what else can be done in Isadora, in order to inspire future projects. I used shapes, blend modes, and various actors to alter their states. My starting point and visual goal was to create a sequence of events that would make the shapes gradually become more chaotic.

I liked that I got to play with using Isadora’s features to create a sense of texture from flat 2D shapes. Initially, I was going to start with a 3D model, but I had trouble getting it to appear in Isadora, so one of my next steps will be to watch the guru session on 3D. I didn’t have a strong conceptual idea in mind when I started working on this project, as I mainly wanted to experiment with the program while trying to create a surprise and laughter factor. When I liked where the first scene was headed, I built on it for the later scenes, where I focused on creating surprises in the intensity of the visuals and on inserting live capture interactions in one of the scenes after learning about that in class.

It is hard for me not to focus on the visual aspect even though I knew that art wouldn’t be judged, so it was very good to hear that some viewers found it mesmerizing to look at.

I wanted to take a funny twist on the randomness of the patch so I decided to throw in what I thought were pretty random emojis and make them small (I wish I made them even smaller) so they motivate the people to come closer and start a discourse on their role, and also hopefully laugh. I was glad that both of those happened in critique! It was interesting to listen to what everyone thought was the meaning behind them and also make their own meanings of them, although the emojis were just random.

During the critique I was disappointed that people didn’t realize they can control the movement of one of the shapes but it totally made sense why, and I think very good feedback is to put the scene with small emojis before that one so that viewers are already drawn to be closer to the screen and then after that it’s easier to realize they can interact with the content in the following scene.

My presentation was also a good learning opportunity because while making this project I considered the first scene the beginning of the experience and the last scene the end, and I was expecting everyone to judge what they experienced from ‘beginning’ to ‘end’. But for the sake of critique, I put it on loop, and that altered how everyone experienced it because a repeating pattern could be recognized. That was beneficial for me to observe, since that one simple decision made an impact on how everyone experienced the project even though I had my own expectations of a ‘beginning’ and ‘end’ to it.

Overall I am really satisfied with the experimentation in Isadora and I feel more confident trying out new things with it and I’m excited about what I could do with future projects.

Stars Kaleid – PP1

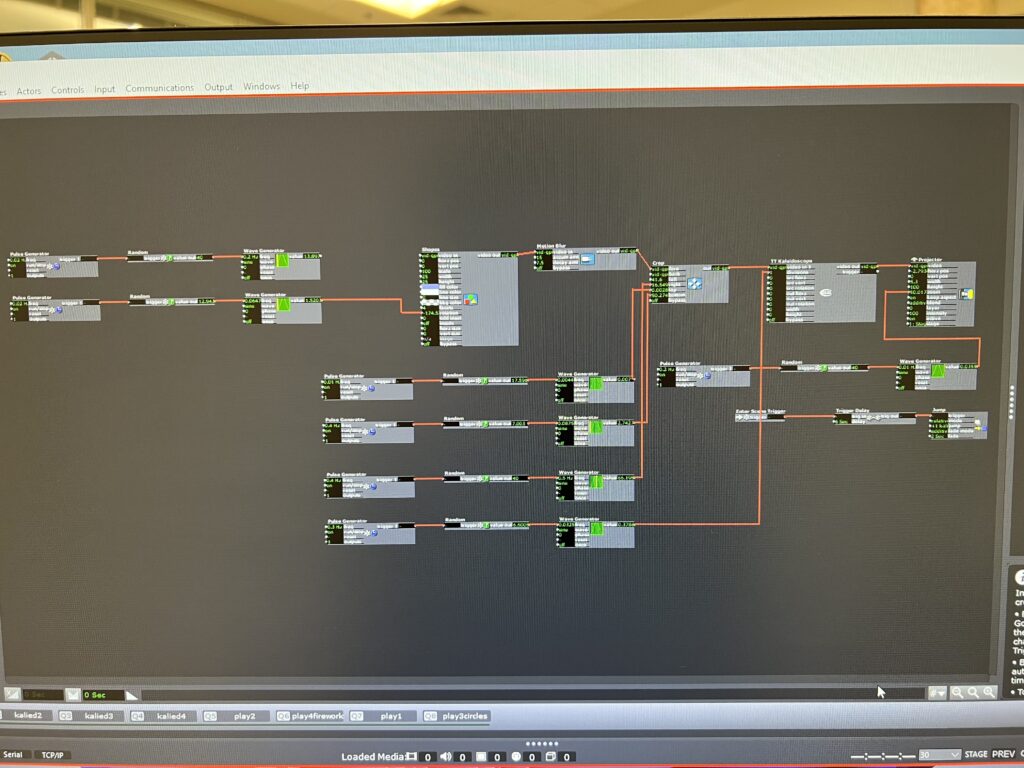

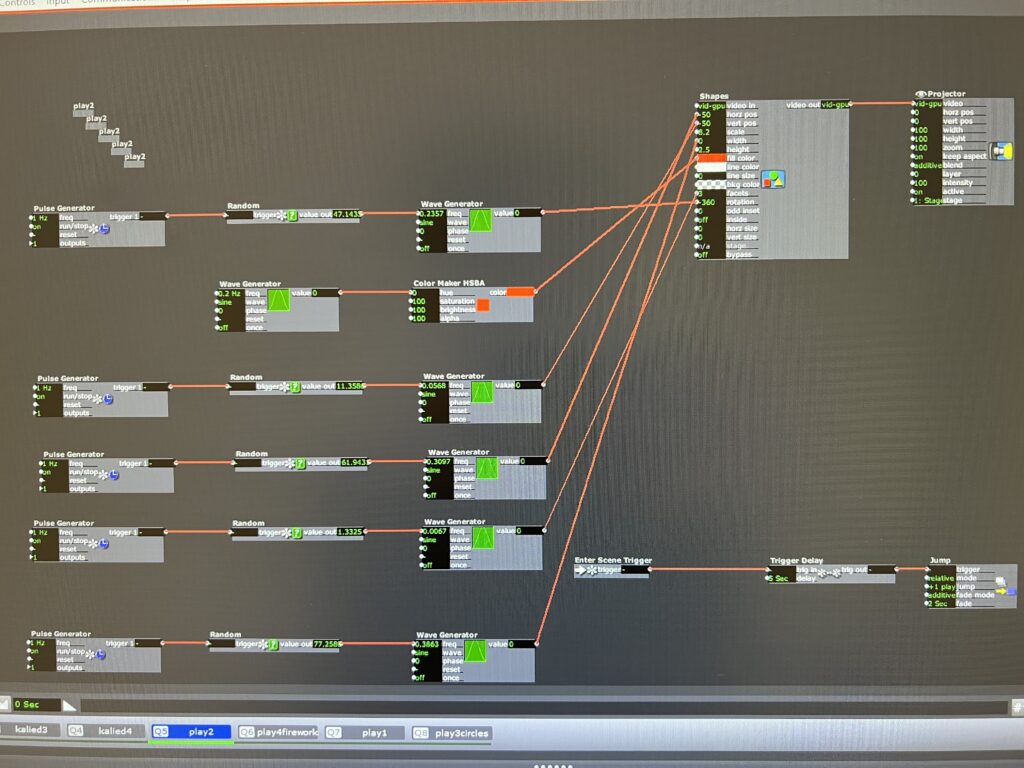

Posted: September 9, 2022 Filed under: Uncategorized

For this pressure project, I wanted to take the time to play with the actor kaleidoscope++, practice triggering randomness and scene jumping, work on how to grow and expand an image smoothly and add videos into the patch.

Unfortunately, about 2 of the hours I spent working with my personal video I didn’t end up using because Isadora didn’t like the codec and I didn’t realize it in time. Isadora eventually just started freezing and not registering the video, and occasionally crashing.

After I gave up on trying to keep working on the video issues, I returned to the scenes where I had played with different shapes to create textures and images. Because I liked how the video gave the kaleidoscope a lot of movement, I added wave generators to the shape and crop actors to give the same kind of effect.

Even though there were hair pulling moments and I had to ditch some of my work, I still enjoyed the process of just connecting different actors and trying different number patterns to see how it affected the image. I ended up playing so much with the star that when I was asked how I did it, I couldn’t remember.

Right now, Isadora feels endless and limitless and intimidating. I’m grateful for this project just to give me time with the software in a low stakes way so I can experiment and begin to feel more confident. Keeping a clean interface seems to help my brain out as I’m working, so my goal is to not get into the habit of connecting actors every which way.

As I was building this patch, I was dreaming of how the body could be incorporated. I was imagining sketches and snapshots of hands, eyes, ribs showing up in the kaleidoscope as it transitioned randomly. For the next time, I will probably not use as much randomness. I liked it, but I craved a little more structure towards the end. Working with the kaleidoscope also got me imagining infinity loops, so I’m hoping to play with something like that in the future.

PP1: The Backrooms Teaser

Posted: September 8, 2022 Filed under: Uncategorized

Inspiration and Background

Those of us who enjoy horror and creepy stories all know the name Creepypasta. For those of you who may be unfamiliar with the term, Creepypasta is used as a catch-all term for horror stories and legends that are posted to the internet. These stories can often be found on many forms of social media, and some have been adapted into short films or even video games.

The Backrooms is a popular Creepypasta story that discusses and explains the existence of liminal spaces hidden in our world. These spaces, often referred to as “levels”, are seemingly infinite non-Euclidean environments with twisting hallways and never-ending tunnels. The lore itself is expansive, and allows for people to write their own levels into existence (as of writing this there are 2,641 entries on the Backrooms Wiki). But a sinister and unexplainable evil resides in this dark and haunted place. It’s said that every level of the Backrooms houses a unique entity that is almost always out to get you; this concept and story mechanic has been used to create short films and media related to the original Backrooms Creepypasta. And so the infamous Backrooms: Found Footage movie was born, a short film by Kane Pixels that tells the story of a man who fell into the Backrooms and has to escape and survive the horrors within. This short film has almost 39 million views on YouTube, and is said to have launched the worldwide trend of Backrooms content.

My Experience

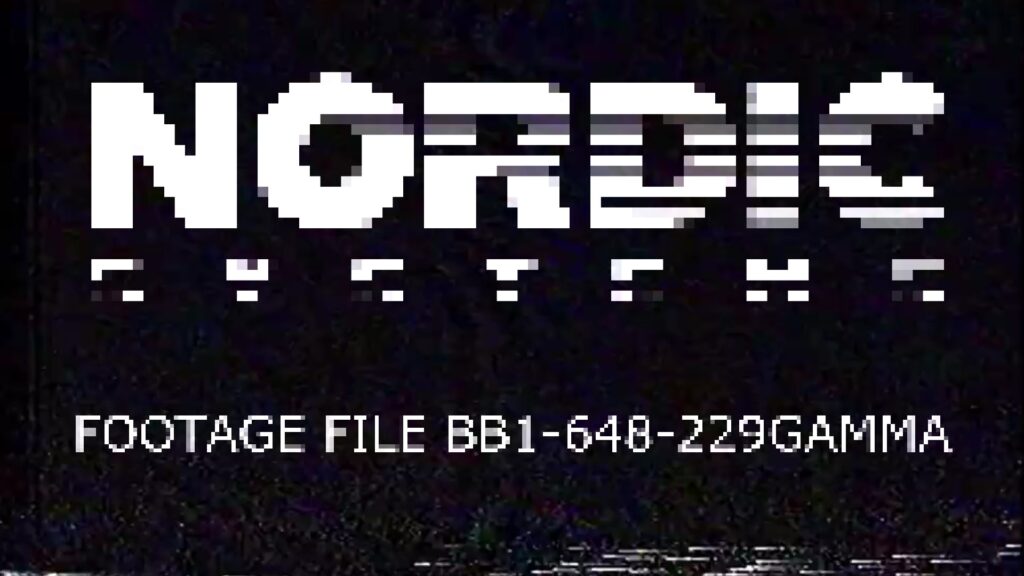

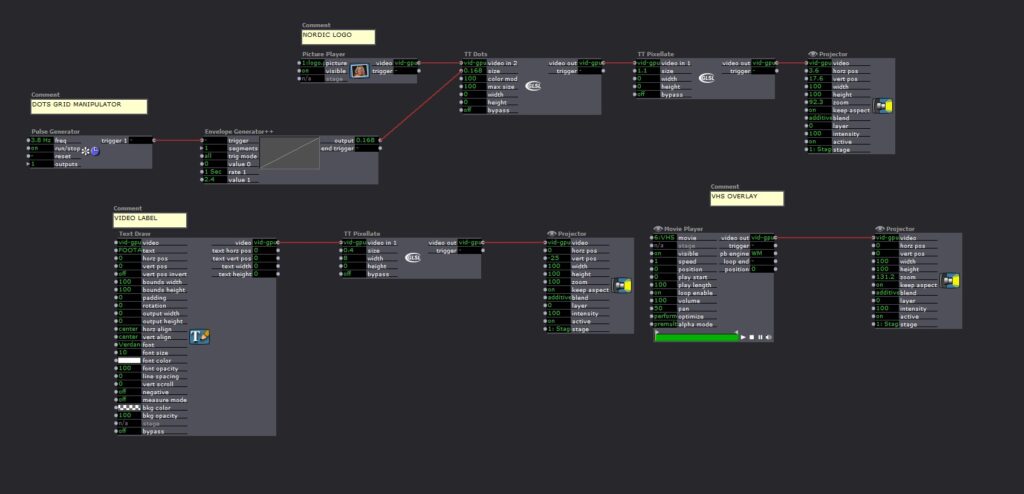

Now that I’ve bored you with my inspiration behind the project, I’ll talk a little about my process for the first pressure project and truly my first encounter with Isadora. I started out with the goal of making something with vintage/retro vibes. There’s something about the sound of old computers and VHS players that fascinates me, and I decided to make a little experience that uses that style.

I started by imagining my little corner of Creepypasta lore; I pictured this experience running on a very old computer in a small unsuspecting corner of a museum. A dimly lit room with a single chair would allow for guests to be immersed in their surroundings. The computer at the center would be playing my start screen (pictured above), waiting for someone to walk by and take a seat. Once the experience starts, they would be shown clips of found footage from the first adventurer into the Backrooms (clips taken from the original short film Backrooms: Found Footage by Kane Pixels). The clips would end abruptly when the footage shows our main character pushed into oblivion by a large and haunting entity; the computer cuts to black, and you’re faced with a blue start-up sequence and boot noises:

Sidenote:

I cut the following keyboard input section from the experience I showed in class due to difficulties with the text draw actor (more on that later). Because the goal was to create a self-generating patch, I ended up making it jump to the next scene after the boot-up video played out. If I had required the viewer to click the escape button it would no longer be a self-generating patch.

The input screen hums in front of you as you hear a repetitive analog beeping, waiting for you to press the escape key.

Do you do it?

You hesitate for a moment… and then *click*.

The next thing you know, this horrifying clip is playing in front of you…

Gotcha! Yes, unfortunately, since we only had 5 hours and were trying to keep the element of surprise for as long as possible, my goal was to build up this creepy lore and finally troll you with Never Gonna Give You Up. The video had the reaction I was going for; audience members were puzzled at the ending, wondering where the heck this video came from.

In addition to their comments on the final part of the experience, my classmates also had comments on how the story was intriguing but hard to piece together. While I was aiming for disorienting and confusing, I was also aiming for fear, and I expected at least a jump or two from one of the scarier parts of the found footage clips. No one jumped, but they did mention it was slightly unsettling!

Note to self: Make the next one scarier

What I Would’ve Done with a Little More Time

If this had been a full-length project, I would’ve spent more time onboarding the viewer into the world of the Backrooms and thinking about the physical manifestation of the project. The ideal scenario for the experience would’ve been some sort of wall-projected environment combined with a creepy old computer. And after you pressed the escape key, I would’ve transported you to the Backrooms, and done my best to scare the hell out of you with a more immersive experience featuring camera tracking and interactive content.

Process and Obstacles

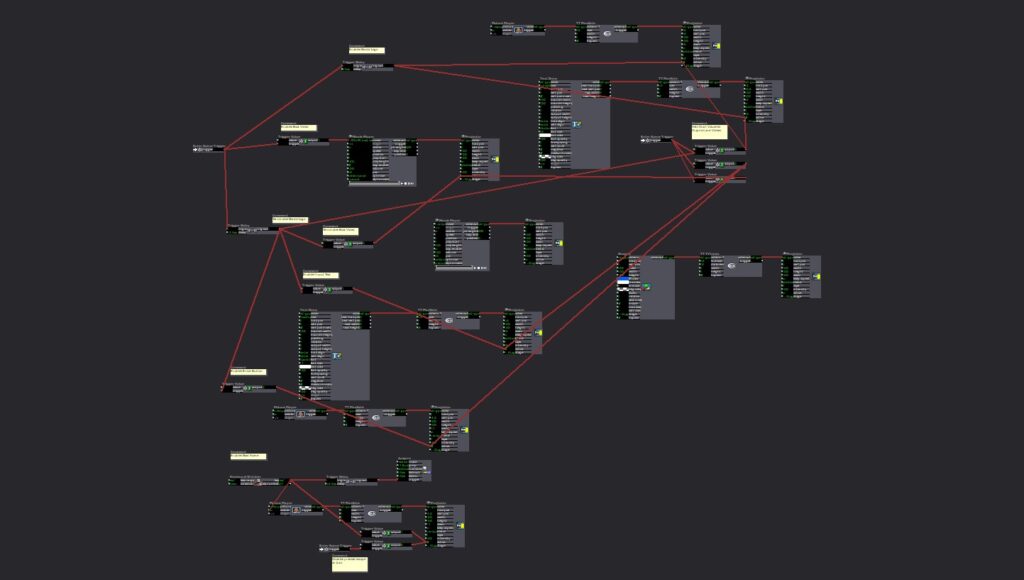

This was the first creation I made with Isadora, and it was certainly a challenge since I’m a 3D artist by trade that’s used to a viewport and polygons. However, since I was familiar with node-based systems, I was able to pick up Isadora fairly quickly and start experimenting with my own ideas.

One of my biggest obstacles was triggering videos and transitions. I came up with a system that used trigger delay and trigger value actors to create a faux-sequencer that took care of video layering and playback. Then Alex told me the method I created was great, but that there was a way easier way of doing it!

Another obstacle that I encountered in the minutes before presentation was when one of my text draw actors mysteriously stopped working. The actors were there, and the connections were correct because I had tested the experience at home and it looked great. But for some reason, when I loaded my project on the PC at ACCAD, this one specific text actor was just not showing up. We later found that others had been running into this issue, and that it might be a hidden bug with this specific text actor.

My favorite part of the project was figuring out ways to layer distortion and aging to make the experience look as old and vintage as possible. By using the TT Dots actor in tandem with TT Pixelate I was able to get some really cool effects.

Conclusion

My heart lies in the style of vintage pixelated VHS tapes and the found footage genre. I absolutely loved getting to play with creating a dated interface on a modern computer, and finding out how to mess with our expectation of fresh and high-res images. I look forward to continuing my exploration of Isadora and hopefully getting to make more cool retro-style experiences.

BUMP: Looking Across, Moving Inside

Posted: September 6, 2022 Filed under: Uncategorized

I picked Benny Simon’s final project because I was drawn to the concept of adding depth to pre-recorded content and through doing that examining different ways we can experience a performance. The installation seemed very engaging as people seemed to enjoy how their movements were translated onto a screen together with other pre-recorded dancers.

I am interested in exploring similar methods for my project since I would like to experiment with ways to inhabit virtual environments by using projection mapping and motion tracking. I think this was a very interesting way to make participants feel like they were a part of this virtual scene while physically moving around and having fun in the process.

I wonder what role the on-screen form of a person dancing plays in engaging with the pre-recorded video content as one. Is the lack of representation in the white silhouette beneficial for that, and how would that change if the projection mirrored participants as they are?

BUMP: Maria’s Leap Motion Puzzle

Posted: September 5, 2022 Filed under: Uncategorized

I chose Maria’s Leap Motion puzzle project because it reminds me of the “Impossible Test” games that were featured on gaming sites I visited as a kid. And the idea of using the Leap Motion as a faux button is really cool!

BUMP: Seasons – Min Liu

Posted: September 4, 2022 Filed under: Uncategorized

I’m bumping Min Liu’s cycle 3 from last semester. I was drawn to this one because it seems as though this project gave the audience an experience of interacting with a digital reconstruction of a natural environment. I’m not sure I would do it in the same way, but this is the type of experience I’m hoping to create for my project. Having leaves fall in response to the participants’ movements (sensed by the Kinect) made for a responsive environment – and I think it was a nice way to make the digital environment responsive without the aesthetic becoming too digital/non-natural. I’m hoping to brainstorm some ideas like this one – how can I use programming systems to manipulate imagery of the natural environment so it seems as though the environment is responding as opposed to a digital/technological system responding?

BUMP: Willsplosion PP1 by Emily Craver

Posted: September 3, 2022 Filed under: Emily Craver, Pressure Project I, Tamryn McDermott | Tags: Pressure Project, Pressure Project One

I wanted to find a pressure project 1 to bump and take a closer look at it to inspire and give me some ideas about how to approach mine as I start contemplating ideas and directions. I was drawn to Emily’s project because she integrated both sound and an element of humor using text into the final experience. I want to use one of the text actor features in mine too. I also found some actors in her patch that I want to experiment with such as the “Explode” actor. In addition, I appreciated her organization within each scene and want to be mindful in my own work to develop an organizational system that works and keeps things clear so I don’t get lost.

Emily’s post left me wanting to see/hear the outcome. This means that when I post mine, I want to share a video of the final outcome much like Tara’s that Katie bumped. What is the best way to set up to do this? How can we export and save the content from a projector?

Willsplosion: PP 1 | Devising EMS (ohio-state.edu)

Bump: Tara Burns – The Pressure is On PP1

Posted: September 1, 2022 Filed under: Uncategorized

This pressure project showed both an intrigue with certain actors that wanted to be explored, and a knack for abstract storytelling. As I was watching it, it felt like Tara was playing and enjoying what the program can do. Although I’m sure it wasn’t all kicks and giggles, there was an ease about how the design traveled through its timeline. I liked the use of color and liveness, and I found a few new actors that I want to learn about.

I appreciated what she said about desired randomness… or randomness in a way you want it. How do you make specific randomness?