Cycle 3 documentation – Dynamic Cloth

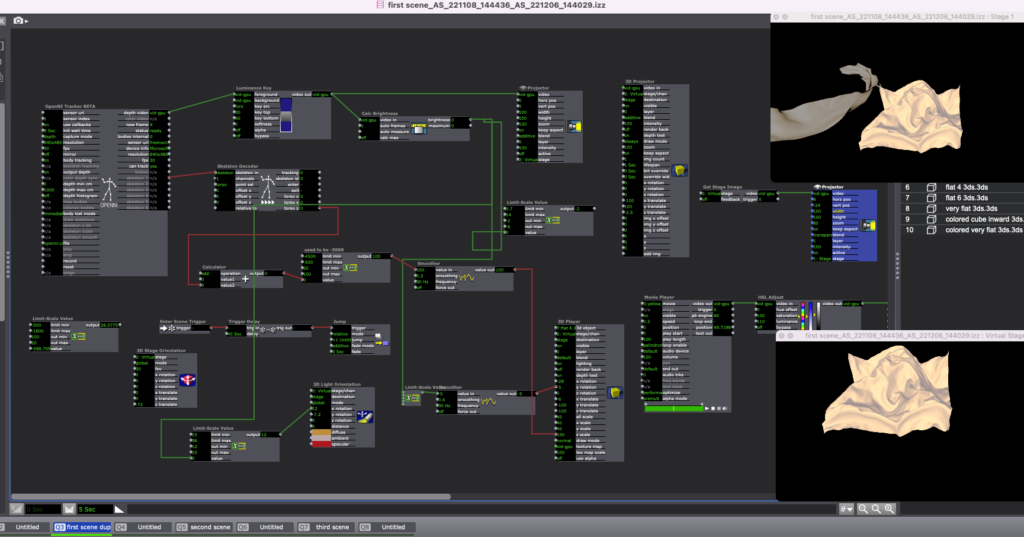

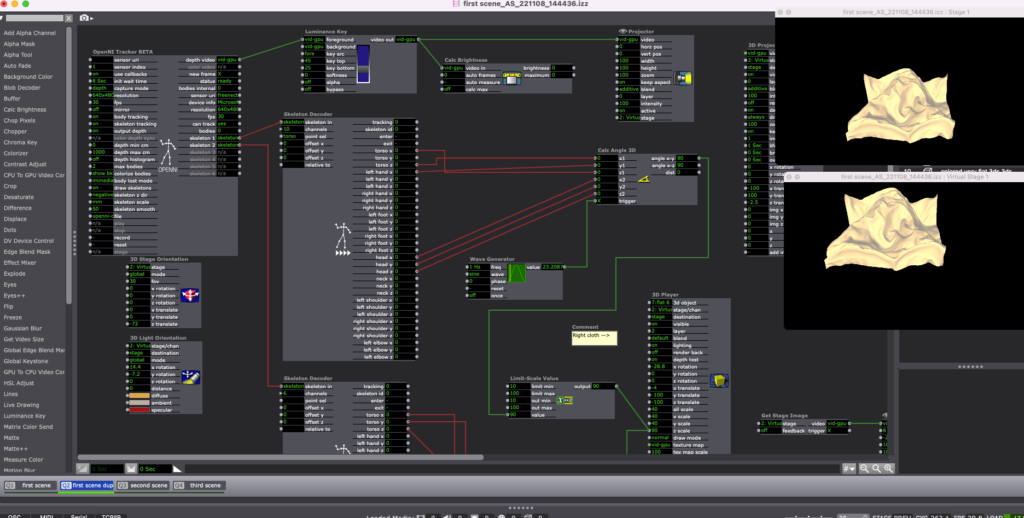

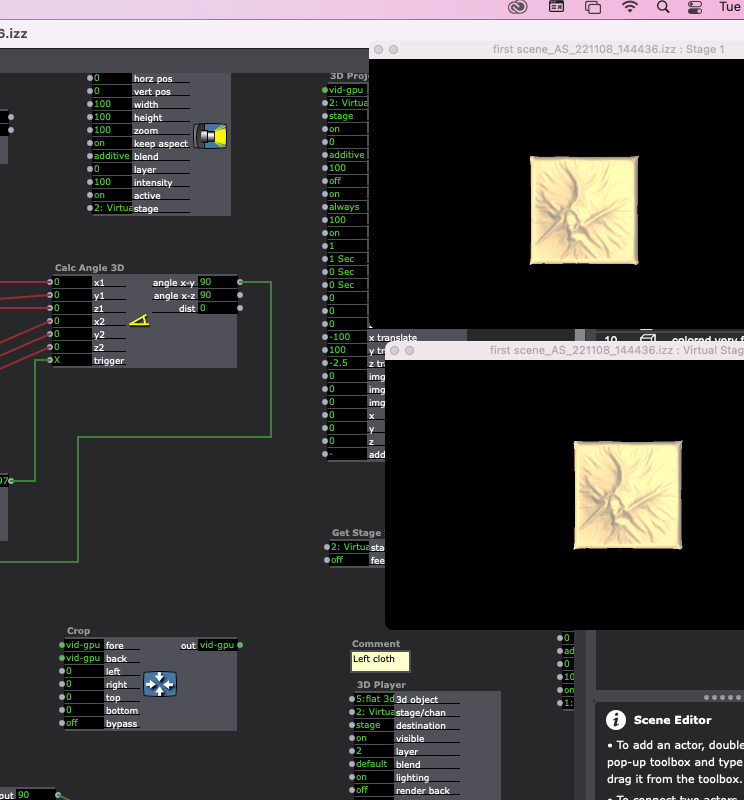

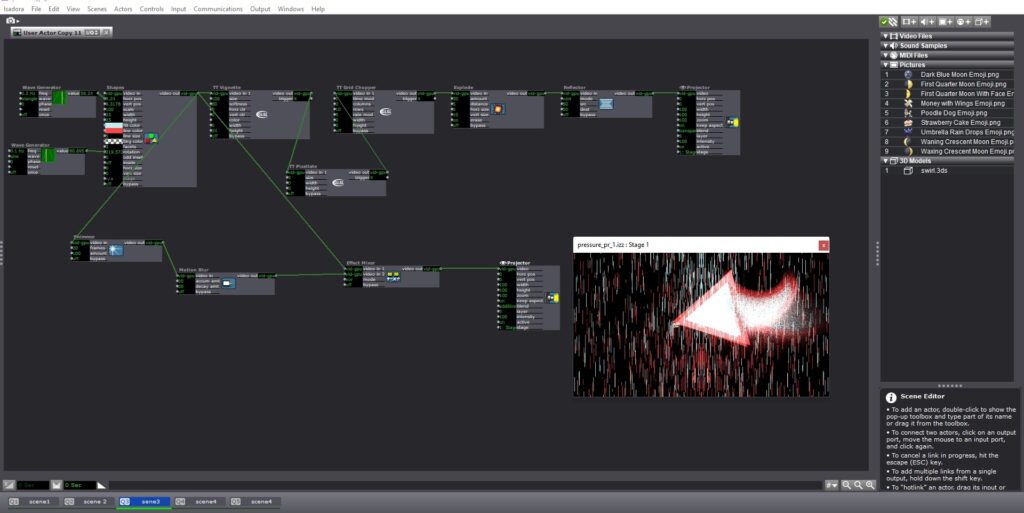

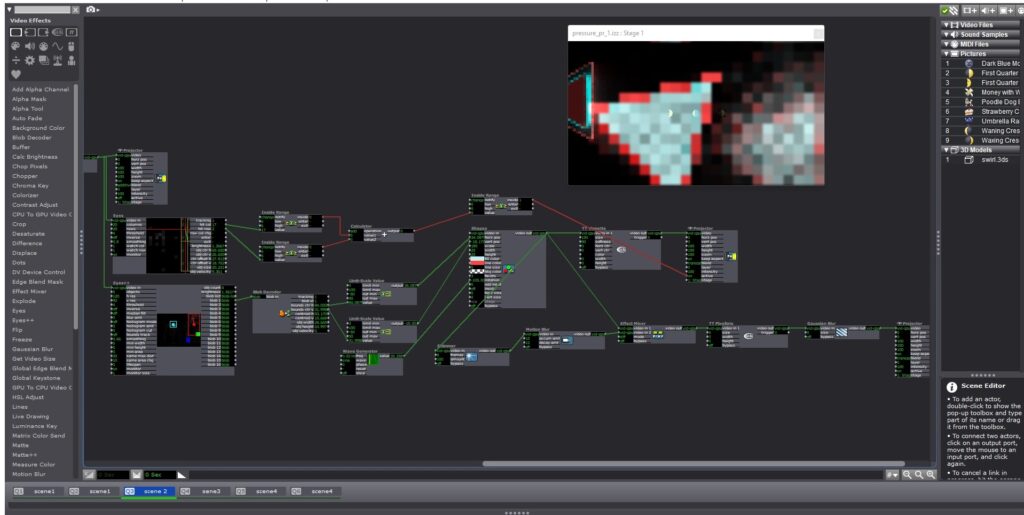

Posted: December 10, 2022

After Cycle 2, the main thing I worked on was keeping the Kinect input numbers within the ranges that worked best for the type of movement I wanted, and polishing the interactions based on that. I made the shapes ‘more sensitive’ along the Z-axis, closer to how they were in Cycle 1, but using the skeleton data instead of just brightness inputs, although I still used brightness for subtle horizontal and vertical movement. I also experimented with placing some pre-recorded Cinema 4D animations in the background, made the transitions between scenes smoother, and made the materials in 3ds Max less shiny.
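Keeping the Kinect numbers in a useful range is essentially a clamp-and-rescale step. The Python sketch below only illustrates that idea and is not the actual Isadora patch: the depth range (1.0 to 3.5 m), the scale range (40 to 160 percent), and the helper names `scale_value` and `depth_to_z_scale` are all hypothetical.

```python
def scale_value(value, in_min, in_max, out_min, out_max):
    """Clamp value to the input range, then map it linearly to the output range.

    Also works when the output range is inverted (out_min > out_max).
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    low, high = min(in_min, in_max), max(in_min, in_max)
    clamped = max(min(value, high), low)
    t = (clamped - in_min) / (in_max - in_min)  # 0.0 at in_min, 1.0 at in_max
    return out_min + t * (out_max - out_min)


# Hypothetical ranges: a participant's depth from the sensor (metres)
# drives the cloth's Z-axis scale (percent).
DEPTH_NEAR_M, DEPTH_FAR_M = 1.0, 3.5
SCALE_MIN, SCALE_MAX = 40.0, 160.0


def depth_to_z_scale(depth_m):
    # Closer to the sensor -> flatter cloth; further away -> fuller cloth.
    # Readings outside the range are clamped, so the shape never overshoots.
    return scale_value(depth_m, DEPTH_NEAR_M, DEPTH_FAR_M, SCALE_MIN, SCALE_MAX)
```

Narrowing the input range (for example, 1.5 to 2.5 m) makes the same step toward the sensor produce a larger change in scale, which is one way to make the shapes feel more sensitive along the Z-axis.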

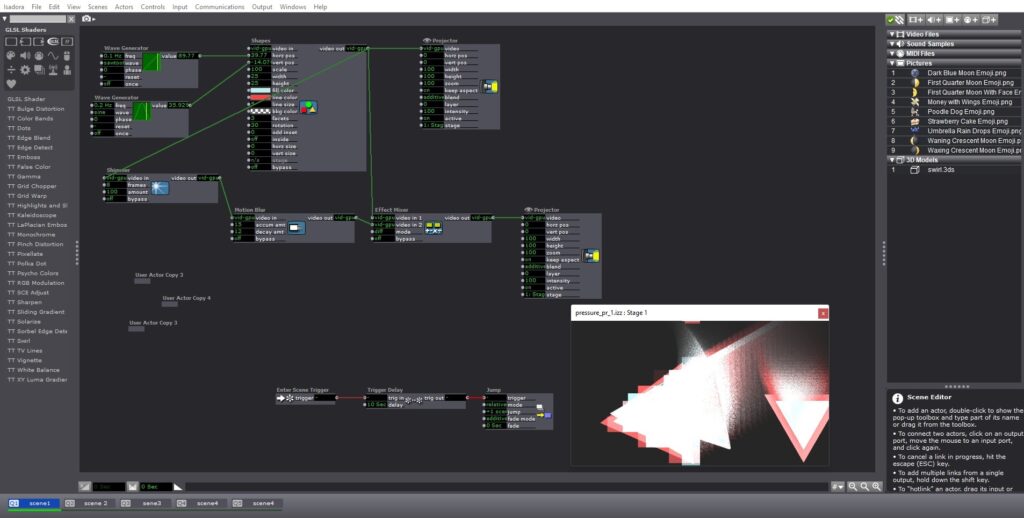

The transitions required a workaround that Alex helped me with. Initially, I tried to just set a 3-second delay in the Jump actor, but I was getting strange glitches during that delay, such as the next scene briefly showing up in the wrong position. So I ended up putting empty scenes between the interactive scenes and setting a delay, so that it looks like a smooth transition is happening between each scene.
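The empty-scene workaround can be pictured as a timeline in which a blank scene sits between every pair of interactive scenes. The sketch below models that timeline in Python purely for illustration; in the patch itself this is done with scenes and Jump actors. The scene names and the 60-second scene length are hypothetical, and the 3-second buffer mirrors the delay described above.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    duration_s: float
    interactive: bool


def with_buffer_scenes(scenes, buffer_s=3.0):
    """Return a new sequence with an empty (black) scene before every scene except the first."""
    sequence = []
    for i, scene in enumerate(scenes):
        if i > 0:
            sequence.append(Scene(f"blank-{i}", buffer_s, interactive=False))
        sequence.append(scene)
    return sequence


def start_times(sequence):
    """List (scene name, start time in seconds) for a sequence played back to back."""
    t = 0.0
    times = []
    for scene in sequence:
        times.append((scene.name, t))
        t += scene.duration_s
    return times


if __name__ == "__main__":
    # Hypothetical three-scene piece, one minute per interactive scene.
    cloth_scenes = [Scene(f"cloth-{n}", 60.0, interactive=True) for n in (1, 2, 3)]
    for name, start in start_times(with_buffer_scenes(cloth_scenes)):
        print(f"{start:6.1f}s  {name}")
```

Because each blank scene is a separate, empty scene, the next interactive scene only loads after the black buffer has played, rather than appearing partway through a delay.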

I’m happy with how the Cycle 3 presentation went (other than Isadora crashing twice), and I got a lot of interesting and useful feedback. It was also very enjoyable to see everyone engage with the projections. The feedback included experimenting with adding texture to the materials to see how that affects the experience and perception of the models; introducing a physical element, such as touching a real piece of fabric connected to something like Makey-Makey to trigger transitions between the scenes; and tracking more types of user movement instead of focusing mainly on depth in one direction. Another comment was that the animations felt a bit different from the main interactive models in the foreground, but that they faded into the background, which I definitely agreed with. In the next iteration, I would make the animations interactive too, get better at making materials in 3ds Max, and experiment with more texture, because I liked that suggestion. I would apply all of these suggestions except the physical cloth, since my main goal with this project was to experiment with movement and body tracking as one method of interaction I could explore in virtual environments. With that said, I am very happy with my takeaways from this class and the whole Cycle 1-3 process, including learning Isadora, getting more comfortable using the Motion Lab, and trying out a new type of project and experience.

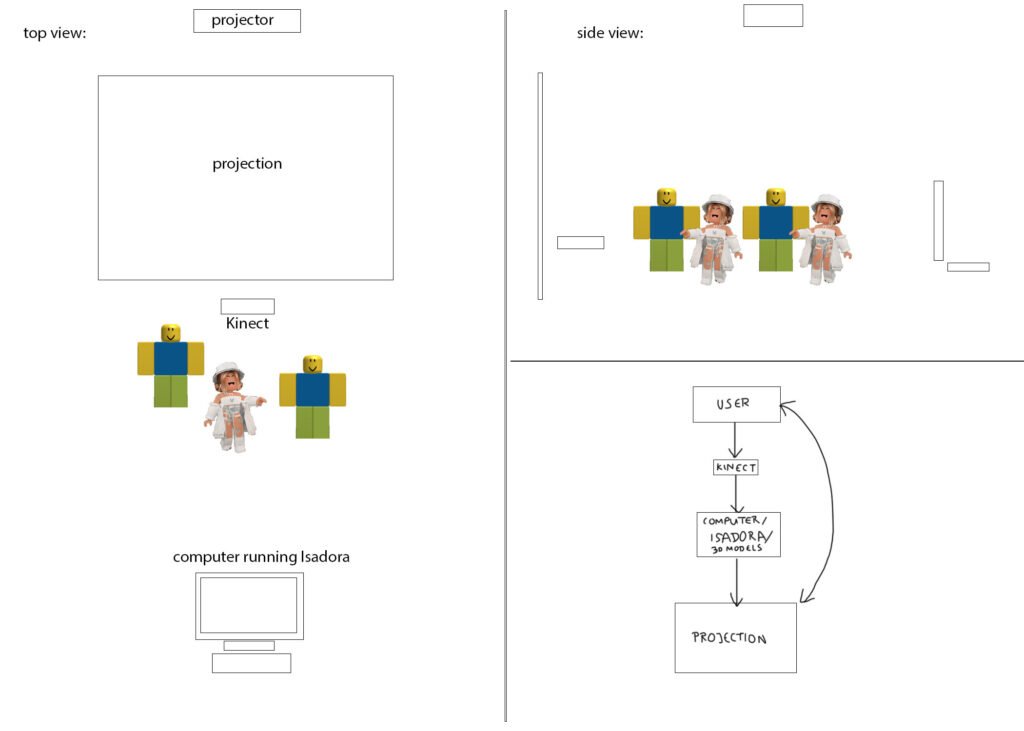

I also learned a lot about designing in and around a physical space, since that is something I usually don’t think about much. I learned about important considerations when working with physical space and more free-form movement, especially when Alex was running backward and collided with the Kinect. That also prompted me to think about placing the Kinect in front of the projection in the next iteration, as I initially had in mind, and moving the projections higher so that the sensor is not right in front of them.

Video compilation of everyone’s experience from Alex’s 360 videos:

Another useful piece of feedback was that participants expected, and wished, to use their hands more, since that was their first instinct when they saw cloth on the screen; many participants wished they could manipulate it with their hands, the way they would in real life. I think this would also be very interesting to explore in the next iteration, by tracking the distance between the hands and hand movements to influence the number ranges.
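Tracking the distance between the hands could feed into the number ranges the same way the depth values do. A minimal Python sketch, assuming each hand joint arrives as an (x, y, z) position in metres; the 0.1 to 1.2 m range and the function names are hypothetical.

```python
import math


def hand_distance(left, right):
    """Euclidean distance between two (x, y, z) joint positions."""
    return math.dist(left, right)  # requires Python 3.8+


def spread_amount(left, right, min_d=0.1, max_d=1.2):
    """Map hand distance to 0..1: hands together -> 0.0, arms spread wide -> 1.0."""
    d = hand_distance(left, right)
    t = (d - min_d) / (max_d - min_d)
    return max(0.0, min(1.0, t))  # clamp so tracking glitches stay in range
```

The 0..1 value could then be rescaled to whatever the cloth needs, for example stretching the model wider as the hands move apart.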

As I mentioned during the discussion, I have been experimenting with this in a different project using the Leap Motion that we checked out. I created a virtual environment in the Unity game engine and applied physics properties to interactive materials to make them respond to Leap Motion hand tracking, which allows participants to push, pull, and move cloth and various other objects in the virtual space. I also wanted to share a short screen recording of that here:

Cycle 2 documentation

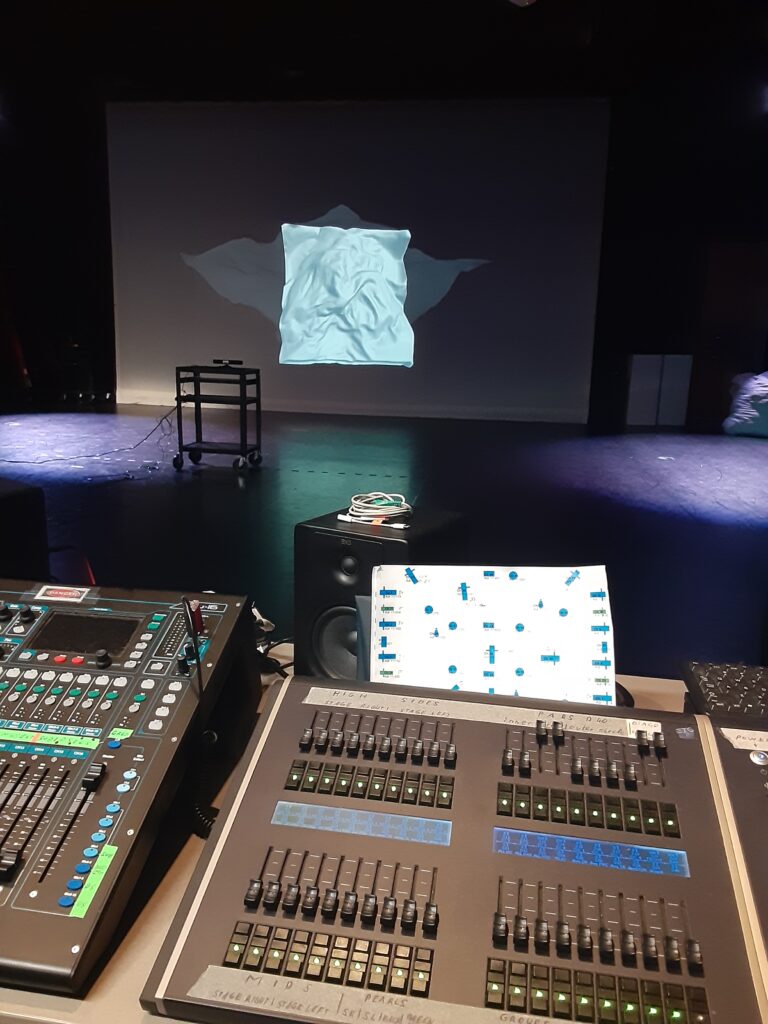

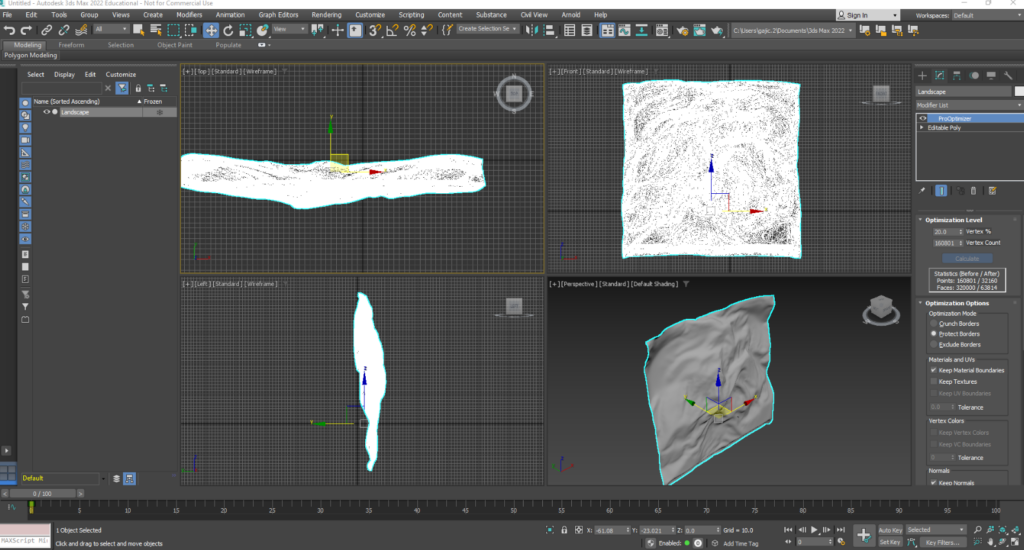

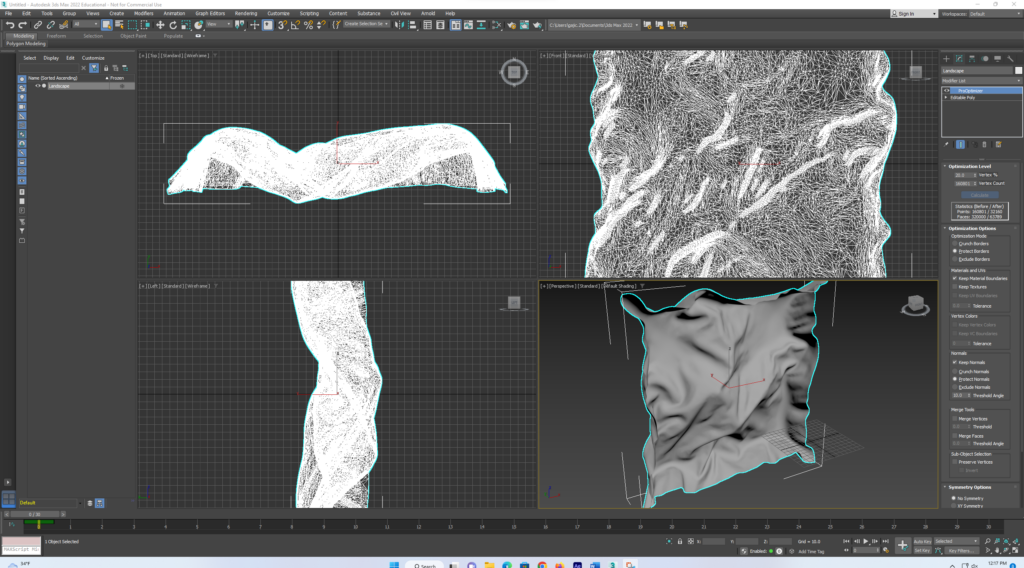

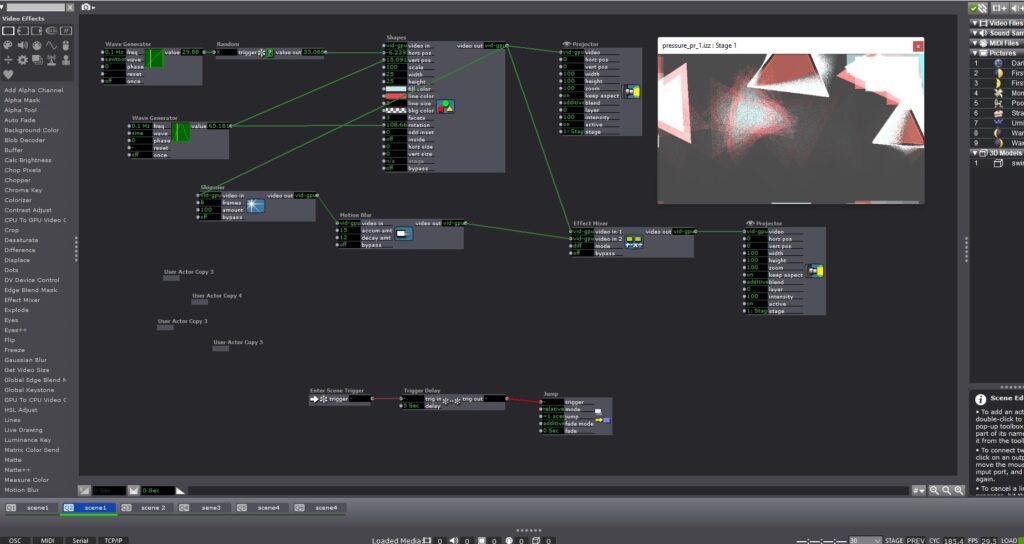

Posted: November 22, 2022

Since Cycle 1, I have used Cinema 4D to create the final 3D cloth models for the installation, set up the Kinect and Isadora in the Motion Lab, experimented with projection spots, learned how to project in the first place, and modified the Isadora patch based on the Motion Lab environment. One of the main changes I made was to have 4 separate scenes, switching at least once every minute. A big part of this process was optimizing the models in 3ds Max, since the program has a maximum number of polygon faces that can be exported and my original models were much bigger than that:

At the time of the Cycle 2 presentation, my visuals were still in progress, since I am learning how to make materials in 3ds Max, which is the program I have to use because its format is the only one Isadora supports. But my vision for all the materials is for them to be non-shiny, like the first two scenes

…which was also the feedback I got from the critique: scene 2 was the most visually pleasing one, and this week I have to figure out how to edit the shiny materials on the objects in scenes 3 and 4.

During Cycle 2, I decided I want the projection to be on the main curtain at the front of the Motion Lab, and I liked the scale of the projected models, but I need to remove the colored Kinect skeletons from the background and make the background plain black.

The feedback from the critique also included experimenting further with introducing more forms of movement to the cloth. I had already tried this, but it was laggy and patchy, so once I learn how to control the skeleton numbers and output better, I could use them to expand the ways the models move. Then I’ll experiment with having the models move a little horizontally and vertically on the projection instead of just scaling along the Z-axis.

Next steps:

My main next step is to keep modifying the Isadora patch, since it is really confusing to figure out which numbers are best to use from the skeleton tracking outputs. I’m thinking I might switch back to brightness/darkness inputs for some scenes, since I liked how much more sensitive the cloth models were when I was using them, but I will first experiment with using the skeleton data more efficiently. I am also going to polish the layout and materials of the 3rd and 4th scenes; I’m happy with how the first and second scenes look, and they just need some interaction refinement. On Tuesday, I am also going to work on setting up the Kinect much further from the computer, in front of the participants.

I am also going to render some animations I have of these same cloth models and try importing them into the Isadora patch alongside the interactive models to see how that combination looks in the projections.

Cycle 1 documentation – Dynamic Cloth – prototype

Posted: October 27, 2022

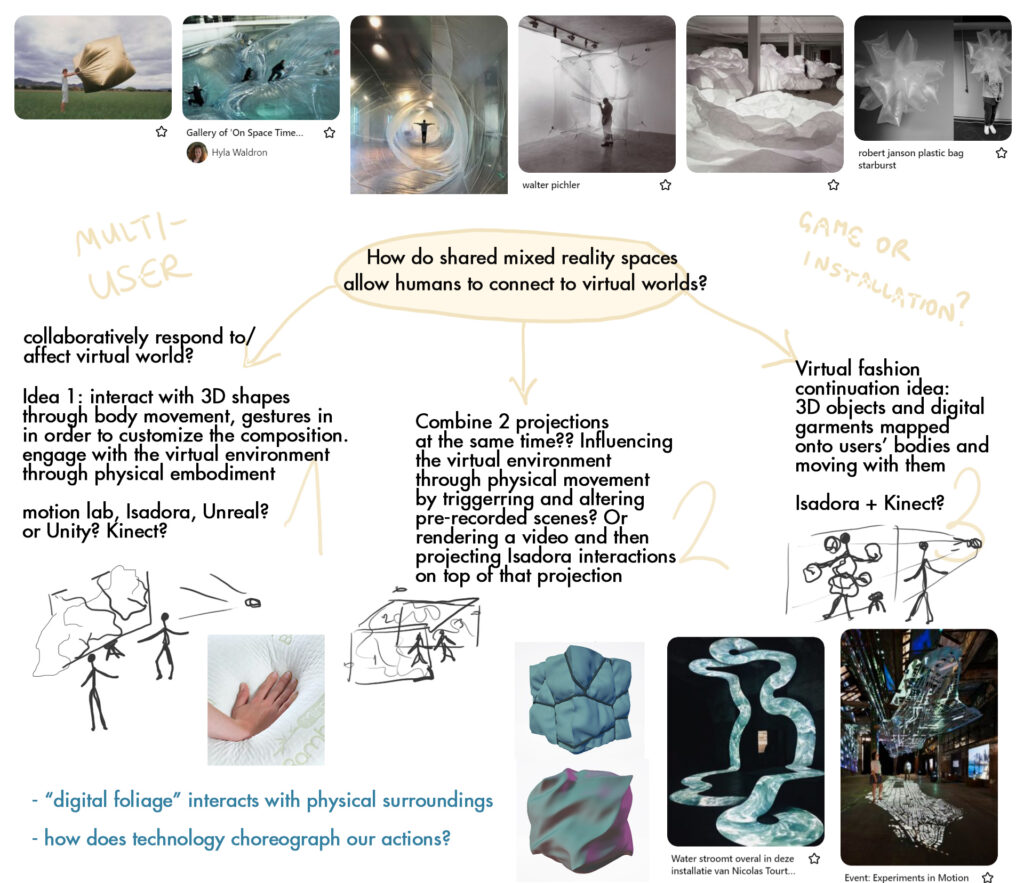

Since my research is about how shared mixed reality experiences help us relate to virtual worlds, I wanted to use this project to create an experience where users can collaboratively affect and respond to digital cloth shapes through body movement tracking in the Motion Lab. I love creating experiences and environments that blend physical and virtual worlds, so I thought this would be a good way to explore how physical surroundings and inputs affect virtual objects. I also thought this project direction would be an interesting way to explore how interactive technology creates performative spaces.

Since at the time (and still now) I was a beginner in Isadora, I didn’t really know how to go about this, and I didn’t know whether Isadora was even the right software to use or whether I should just use the Kinect in a game engine. My goal is for users to affect the virtual cloth in real time, but not knowing how to do this, at the beginning I thought one option could be to pre-render the cloth simulations and then use the Kinect inputs to trigger different animations to create this dynamic effect. However, after learning how to import 3D models into Isadora and affect their lighting, I realized that I can trigger real-time changes to 3D shapes without using pre-made animations. I might still use animations if I think they can benefit the experience, but the progress I’ve made so far has given me a sense of how to make this work in real time.

After deciding I needed the Kinect and Isadora for this experience, I needed some help from Felicity to install the Kinect drivers on the classroom computer so I could begin working on an early prototype. Once that was set up, I first learned how to import 3D models into Isadora, because I hadn’t figured that out during PP1. I was able to import a placeholder cloth model I made a few months ago and use it to start figuring out how to cause dynamic changes to it with Kinect input. Initially, I hooked the Kinect tracking input to the 3D light orientation, and I was already happy with where it was going, since it felt like I was casting a shadow on a virtual object through my movement, but this was just a simple starting point:

After this, I wanted to test changing the depth of the shape through position and motion, so I thought a good initial approach would be to plug the moving inputs into the shape’s size along the y-axis, to make it seem like the object is shrinking and expanding:

I applied this approach to the previous file, and currently the Kinect input affects the lighting, orientation, and y-axis size of the placeholder cloth shapes. In the gif below, I plugged the movement inputs into the brightness calculator: when I’m further away from the Kinect and more light is let in, the shapes expand along the y-axis, but when I get closer and it gets darker, the shapes flatten, which feels like putting pressure on them through movement:
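The brightness-to-height mapping can be sketched as two steps: average the pixel brightness of the camera frame, then rescale it to a y-axis size. This Python version assumes an 8-bit grayscale frame given as nested lists and a 0 to 100 brightness scale; it illustrates the idea only and is not how Isadora’s brightness calculator is implemented. The 20 to 100 size range is hypothetical.

```python
def mean_brightness(frame):
    """Average of 8-bit grayscale pixels (rows of ints 0..255), expressed as 0..100."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return 100.0 * total / (255 * count)


def brightness_to_y_scale(brightness, flat=20.0, full=100.0):
    """Brighter frame (participant further away) -> taller cloth; darker -> flatter."""
    b = max(0.0, min(100.0, brightness))  # clamp to the assumed 0..100 scale
    return flat + (full - flat) * b / 100.0
```

A participant stepping close to the sensor blocks more light, so the mean brightness drops and the cloth flattens, which matches the ‘pressure’ feeling described above.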

I’m happy that I’m figuring out how to do what I want, but I want this to be a shared experience with multiple users’ data influencing the shapes simultaneously, so the next step is to move from the computer where I made the prototype to the Motion Lab, where I want the experience to be. Currently, Isadora on the Motion Lab computers needs to be updated to the version we are using in class, so I will remind Felicity about that. Once both Isadora and the Kinect are set up again, I will keep working in the Motion Lab and modify the patch based on that environment, since the two are going to be interdependent. I also finally managed to renew my Cinema 4D license yesterday, so this upcoming week I also want to make the final models (and animations?) for this project and replace the current placeholders.

Feedback from the presentation:

I appreciated the feedback from the class, and my current patch seemed to produce positive reactions. The comments included that it was intriguing to see how responsive and sensitive the 3D cloth model was. It was nice to see that many people wanted to interact with it in different ways. I realized I need to think more about the scale of the projection, since it can affect how people perceive and engage with it.

PP3 Sound project

Posted: October 25, 2022

For this project, my first idea was to work with the story I always remember first when I think of books or stories that stuck with me when I was younger. The story I chose to represent through sound is from the book Palle Alone in the World by the Danish writer Jens Sigsgaard. Since I never read this book in English, I know it as “Pale sam na svijetu”, and I always remembered the look of its cover because I liked the book’s illustration style:

Since my perception and understanding of the book had always been based on the visuals, I thought it would be interesting to imagine what the events in the book would sound like. I always associated the book with happy memories, but just thinking about recreating it through sound, I could tell it was probably going to sound somewhat daunting and portray an overall stressful experience. In the book, the boy Palle discovers he is completely alone in the world, so he does whatever he wants without any restrictions: he tries driving cars and even crashes one, he steals money from the bank, he eats all the food he can in the grocery store… My approach in the project was to depict the sounds of his actions and experiences in the order they occurred, condensed into 3 minutes, and I also overlaid some slow piano music to create a dreamlike mood, since at the end of the book we find out that it was all just a dream.

I used the Sound Level Watcher in Isadora to listen to the sound of the piece and use it to distort the picture of the book cover. I did this because, as I worked on this project and listened to what happens in the book, my perception of the book and of how it would feel to be Palle started to change.
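A rough stand-in for the sound-to-distortion link: compute a level for each block of audio samples and map louder passages to stronger distortion. This sketch uses RMS as the level measure, which is an assumption (the Sound Level Watcher’s own calculation may differ), and the threshold, the maximum distortion amount, and the function names are hypothetical.

```python
import math


def rms_level(samples):
    """Root-mean-square of float samples in -1.0..1.0, returned on a 0..100 scale."""
    if not samples:
        return 0.0
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 100.0 * min(rms, 1.0)


def distortion_amount(level, threshold=5.0, max_amount=50.0):
    """Ignore quiet passages at or below the threshold; louder sound -> stronger distortion."""
    if level <= threshold:
        return 0.0
    return max_amount * (min(level, 100.0) - threshold) / (100.0 - threshold)
```

The threshold keeps the cover image still during near-silent moments, so the distortion reacts to events like the car crash rather than to background hiss.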

Just the visual:

On the day we showed the projects, I couldn’t use my Isadora file on the Motion Lab computers because it used some effects that I had installed as plugins on the classroom computer I had been using, so I didn’t end up showing the visual portion during the experience. I wish I could have, but it was still very interesting to hear people’s reactions even without the visual. The visual was quite abstract if you didn’t know it was a book, but I think the picture does provide some “children’s book” context, and it also adds flow since it is constantly moving. Maybe I thought this partly because I personally prefer having something to look at, but I realized that’s not a universal preference. Based on the comments I got, a lot of people understood the moods and the narrative I was trying to convey, which was good to hear. The comments included observations that the events are linear, occurring in a specific sequence one right after another in a way you wouldn’t normally expect while still feeling continuous; interpreting the sound from a child’s perspective; a feeling of uneasiness; and getting more invested in some sounds than others, like eating, walking on grass, or unwrapping a chocolate bar. Another interesting aspect of the experience was being able to add and manipulate the lighting while the audience listened, which I hadn’t thought of before because I initially wasn’t planning to show the piece in the Motion Lab, but I decided to after hearing how immersive the other sound pieces were in there.

I also remember sometimes thinking of this book when Covid first started, when I had a very bad experience being stuck in a house with toxic and insane roommates and not being able to see my friends, so for me this book also relates to that time period.

PP1 documentation + reflection

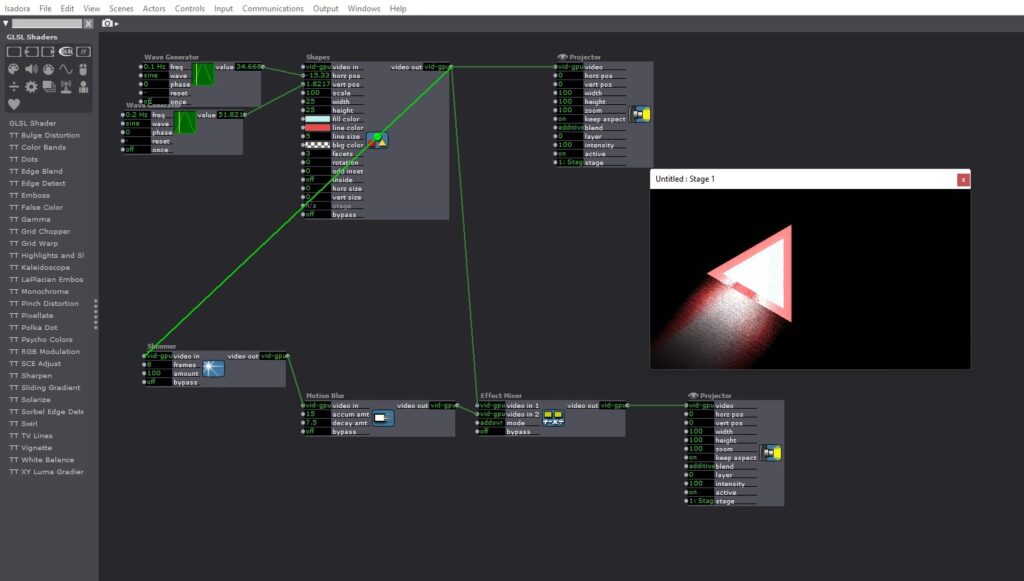

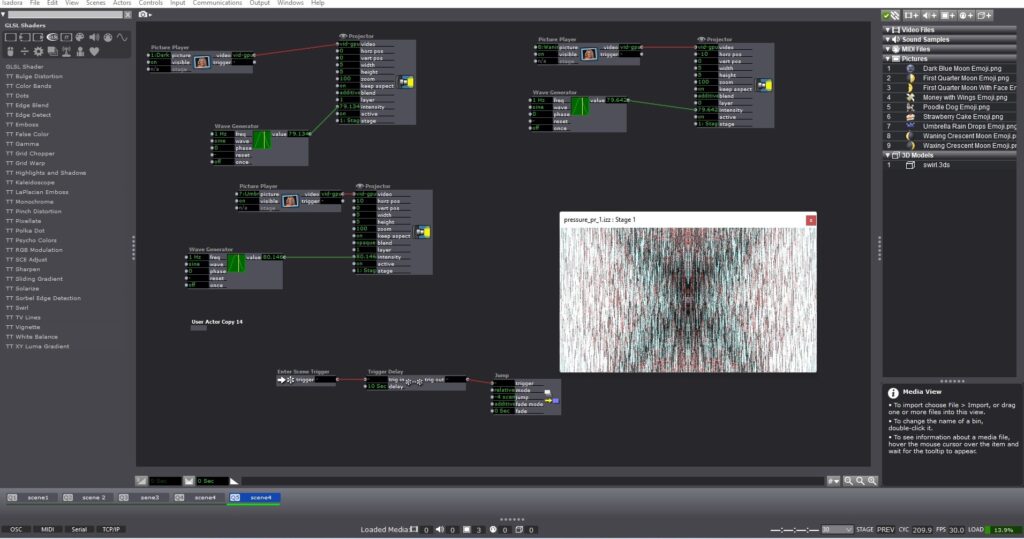

Posted: September 10, 2022

I took this project as an opportunity to experiment with what I had learned about the program so far and to explore more of what can be done in Isadora, to inspire future projects. I used shapes, blend modes, and various actors to alter their states, and my starting point and visual goal was to create a sequence of events that would make the shapes gradually more chaotic.

I liked that I got to play with Isadora’s features to create a sense of texture from flat 2D shapes. Initially, I was going to start with a 3D model, but I had trouble getting it to appear in Isadora, so one of my next steps will be to watch the guru session on 3D. I didn’t have a strong conceptual idea in mind when I started working on this project, as I mainly wanted to experiment with the program while creating a surprise and laughter factor. So when I liked where the first scene was headed, I built on it for the later scenes, where I focused on creating surprises in the intensity of the visuals and also added live capture interactions to one of the scenes after learning about them in class.

It is hard for me not to focus on the visual aspect, even though I knew the art wouldn’t be judged, so it was very good to hear that some viewers found it mesmerizing to look at.

I wanted to put a funny twist on the randomness of the patch, so I decided to throw in what I thought were pretty random emojis and make them small (I wish I had made them even smaller) so they would motivate people to come closer, start a discussion about their role, and hopefully laugh. I was glad that both of those happened in the critique! It was interesting to hear what everyone thought the meaning behind them was and to see people make their own meanings of them, even though the emojis were just random.

During the critique, I was disappointed that people didn’t realize they could control the movement of one of the shapes, but it totally made sense why. One very good piece of feedback was to put the scene with the small emojis before that one, so that viewers are already drawn closer to the screen and it is then easier for them to realize they can interact with the content in the following scene.

My presentation was also a good learning opportunity, because while making this project I considered the first scene the beginning of the experience and the last scene the end, and I expected everyone to judge what they experienced from ‘beginning’ to ‘end’. But for the sake of the critique, I put it on a loop, and that changed how everyone experienced it, because a repeating pattern could be recognized. That was beneficial for me to observe, since one simple decision had an impact on how everyone experienced the project, even though I had my own expectations of its ‘beginning’ and ‘end’.

Overall, I am really satisfied with my experimentation in Isadora; I feel more confident trying out new things with it, and I’m excited about what I could do in future projects.

BUMP: Looking Across, Moving Inside

Posted: September 6, 2022

I picked Benny Simon’s final project because I was drawn to the concept of adding depth to pre-recorded content and, through that, examining different ways we can experience a performance. The installation seemed very engaging, as people appeared to enjoy seeing their movements translated onto a screen together with pre-recorded dancers.

I am interested in exploring similar methods for my project, since I would like to experiment with ways to inhabit virtual environments using projection mapping and motion tracking. I think this was a very interesting way to make participants feel like part of the virtual scene while physically moving around and having fun in the process.

I wonder what role the on-screen form of a dancing person plays in getting participants to engage with the pre-recorded video content as one. Is the lack of representation in the white silhouette beneficial for that, and how would that change if the projection mirrored participants as they are?