Cycle 1: A Touch of Magic

Posted: March 31, 2025

For this project, my primary strategy was to try to reverse engineer a piece of media I experienced in a queue at Disney World. I was pretty sure I understood how it worked, but I was curious whether I could reconstruct some portion of it on my own. After thinking about the patterns, I concluded that the media sequence was a series of scenes that were variations on one another, with either an interaction count or a time marker triggering the move into the next scene. I also wanted to use this as an opportunity to explore a depth sensor, as a way to experiment with other interactive media I’ve seen used in various capacities. Combining the two was challenging, and I think I could have created a more polished finished product if I had focused on one or the other. I think this is a byproduct of my originally imagining a much bigger, flashier end product at the end of the three cycles, which I have since scaled back significantly. Ultimately, these cycles will serve more as vehicles for me to keep learning and exploring, so I can better recognize how these tools and technologies work out in the wild, which will make me a better partner to the people who actually execute the media side of things.

Figure 1: No Time for Making Things Neat

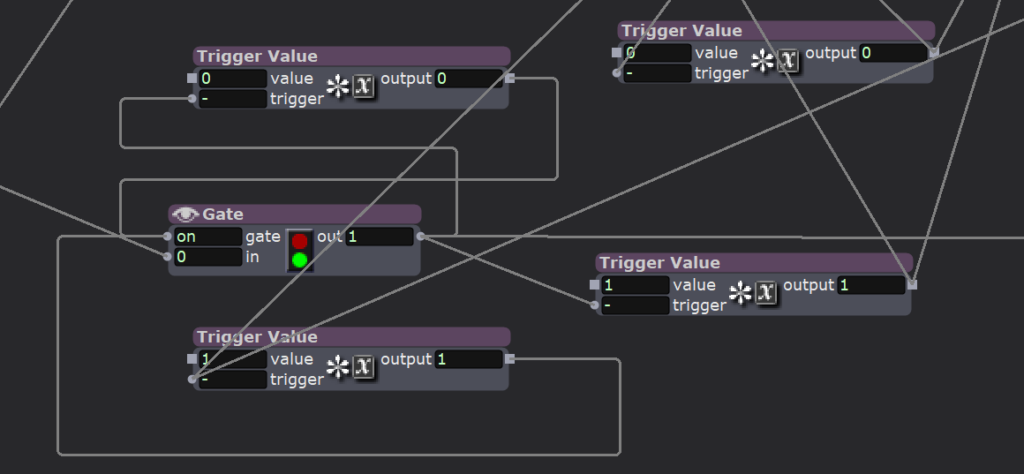

The tools I used for this were a depth sensor, Isadora, and TouchDesigner, the last mainly because Isadora couldn’t recognize the depth sensor without it. In Isadora, I used a Virtual Stage for the first time in one of my projects. It let me see the areas I was isolating with the depth sensor while keeping them separate from the area where the interactive pieces were being triggered. I also used the Luminance Key, NDI Watcher, and Calc Brightness actors for the first time in a project, and I continued to enjoy the possibilities of triggers, gates, and sequential triggers. I find that I am always thinking about how the story progresses and what happens next, and about building in ways for what I’m creating to naturally bring up the next scene once it has reached a relatively low threshold of interactions or time.

Figure 2: An Abundance of Gates and Triggers

Some challenges were that I didn’t quite author to the technology, which caused some issues, and I didn’t fully understand the obstacles I might encounter in moving from one space to another. The very controlled environment of a ride queue is perfect for these kinds of tools, and I now understand much better how this could work in a more controlled and defined space. I also think that focusing on the challenges of a depth sensor alone, and perhaps using it to capture motion more carefully rather than a range of depth, could have made this more sensitive and, therefore, more successful. I certainly spent more time than I would have liked trying to make the trigger less sensitive while keeping it sensitive enough to still fire, and I never quite figured it out.
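One common way to tame a trigger that is either too jumpy or too dull is hysteresis: fire when a value crosses a high threshold, then refuse to fire again until it drops below a lower one, optionally requiring the value to stay high for a few frames first. The Python sketch below is not the Isadora patch from this project; it is a hypothetical illustration that assumes brightness readings (like those a Calc Brightness actor reports) arrive once per frame on a 0-100 scale. The class name and the threshold values are made up for the example.

```python
class HysteresisTrigger:
    """Edge-triggered threshold with hysteresis and a short hold time (hypothetical).

    Fires once when the input stays at or above `on_level` for `hold_frames`
    consecutive frames, then stays silent until the input drops to or below
    `off_level`, which re-arms it.
    """

    def __init__(self, on_level: float, off_level: float, hold_frames: int = 1):
        if off_level >= on_level:
            raise ValueError("off_level must be lower than on_level")
        self.on_level = on_level
        self.off_level = off_level
        self.hold_frames = hold_frames
        self._frames_above = 0
        self._armed = True

    def update(self, brightness: float) -> bool:
        """Feed one reading per frame; returns True only on the frame the trigger fires."""
        if self._armed:
            if brightness >= self.on_level:
                self._frames_above += 1
                if self._frames_above >= self.hold_frames:
                    self._armed = False  # ignore further highs until the value drops
                    self._frames_above = 0
                    return True
            else:
                self._frames_above = 0  # a single noisy spike does not count
        elif brightness <= self.off_level:
            self._armed = True  # value fell clearly back down; allow the next trigger
        return False


# Hypothetical readings: a one-frame spike (65), a sustained rise,
# jitter while still high, a clear drop, then another sustained rise
trigger = HysteresisTrigger(on_level=60, off_level=30, hold_frames=2)
readings = [10, 65, 40, 62, 64, 58, 63, 20, 70, 71]
print([trigger.update(r) for r in readings])
# [False, False, False, False, True, False, False, False, False, True]
```

The gap between the two levels is what keeps jitter near the threshold from re-firing, while `hold_frames` filters out single-frame flickers; both would need tuning against real sensor data.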

I think the decision to trigger both a visual and an auditory reaction when specific areas were interacted with was a good one. The sound, especially, seemed like a success when my classmates interacted with it, and the simplicity of a little bit of magic on a black screen also worked well. Classmates noted that the visual and sound combination made those playing with it want to make more magical motions and sparked joy, so I think those were good choices.

Figure 3: A Touch of Magic

I can’t help wishing the presentation had been more visually polished, but I don’t really have the skills to make a more finished video product. Adobe Stock leaves something to be desired, but it’s mostly what I’m working with right now, along with whatever sounds I can find in a relatively short amount of time that kind of “do the job.” I think this is where my scenic design abilities need the complementary media design side to fully execute the ideas in my head. As I said, though, I am primarily using these cycles to continue exploring and learning how things work.

In the end, I think the project elicited the responses I was hoping for, mainly that people realized a motion in a specific space would trigger magic. I could also see how a little magic incited the desire to seek out more, and I think a more comprehensive version of this could absolutely be extrapolated into the queue entertainment I saw at Disney.* I do think this project was successful, in that I learned what I set out to learn and it incited play, and, depending on how the coming cycles develop and what I learn, some of this may show up again in later cycles.

Figure 4: The To Be Continued Scene Transition

*In the queue, guests interacted with shadows of butterflies, bells, or Tinkerbell. After a certain amount of time or a certain number of interactions, the scene would kind of “explode” into a flurry of butterflies, the bells ringing madly, or Tinkerbell fluttering all around, and then move into the next scene. Guests’ shadows were visible in some scenes and instances and not in others; sometimes a hat would appear on a shadow’s head. I think I mostly figured out what was going on in the queue sequence, and for that, I’m very proud of myself.
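The count-or-time pattern described in the footnote can be sketched in a few lines. This is a hypothetical Python illustration, not Disney’s system and not an Isadora patch: the scene names come from the footnote, but the thresholds (3 interactions, 30 seconds) and all class and method names are assumptions chosen for the example.

```python
import time
from typing import Callable, Sequence


class SceneSequencer:
    """Advance to the next scene after N interactions OR T seconds, whichever comes first.

    Scene names and thresholds are hypothetical placeholders, not values from the queue.
    """

    def __init__(self, scenes: Sequence[str], max_interactions: int,
                 max_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.scenes = list(scenes)
        self.max_interactions = max_interactions
        self.max_seconds = max_seconds
        self.clock = clock  # injectable so the timing can be tested without waiting
        self.index = 0
        self._reset()

    def _reset(self) -> None:
        # Each new scene starts with a fresh interaction count and timer.
        self.count = 0
        self.started = self.clock()

    @property
    def current(self) -> str:
        return self.scenes[self.index]

    def interact(self) -> bool:
        """Call when a guest triggers something in the current scene."""
        self.count += 1
        return self._check()

    def tick(self) -> bool:
        """Call periodically (e.g. every frame) so the time limit can fire with no interaction."""
        return self._check()

    def _check(self) -> bool:
        elapsed = self.clock() - self.started
        if self.count >= self.max_interactions or elapsed >= self.max_seconds:
            # The "explode" moment would play here before moving on; the sequence loops.
            self.index = (self.index + 1) % len(self.scenes)
            self._reset()
            return True
        return False


seq = SceneSequencer(["butterflies", "bells", "tinkerbell"], max_interactions=3, max_seconds=30.0)
for _ in range(3):
    seq.interact()
print(seq.current)  # bells
```

Checking both conditions in one place means a busy queue moves on quickly through interactions, while an idle one still cycles on the timer.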

Pressure Project 2

Posted: March 5, 2025

For pressure project two, I took my main inspiration from “A Brief Rant on the Future of Interaction Design” by Bret Victor. I was drawn to its call for tactile user interfaces and its lament about the lack of sensation when we use our hands and fingers on touch screens, which it calls “Pictures Under Glass.” I wanted to create something that not only avoided the traditional user interface but also avoided screens altogether. For this reason, I chose to focus on sound as the main medium and built the experience around auditory sensation.

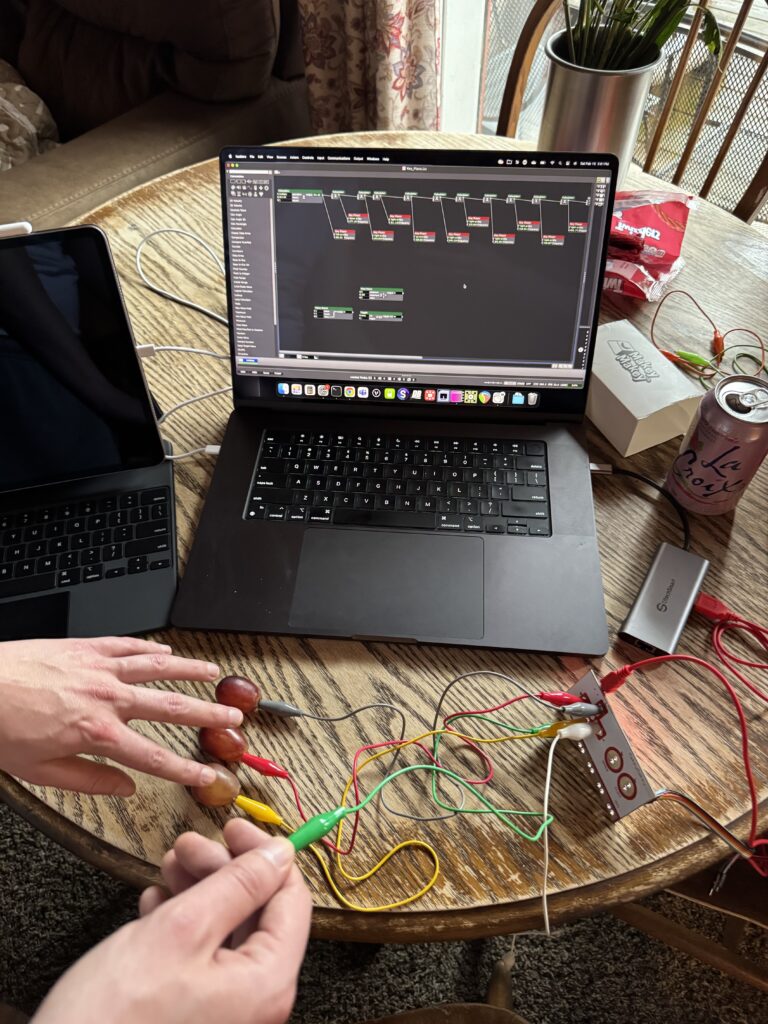

Having seen the Makey Makeys around the Motion Lab for over a year, I was excited to finally use one and see what all the fuss was about. I was immediately inspired by the many options that became available for interacting with the computer: anything even slightly conductive could be used to trigger events on the computer.

Knowing that I wanted to focus on sound, I started this exploration by recreating the popular Makey Makey banana keyboard example. Since I didn’t want to ruin multiple bananas for an experiment, I used grapes instead. I was able to map the grapes to the tone generator actor in Isadora, and then it was just a matter of figuring out the frequency of each note, which turned out to be quite specific and required hard-coding each note as a separate actor.
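Rather than looking up each note’s frequency by hand, the values can be computed from the standard twelve-tone equal temperament formula, f = 440 × 2^((n − 69)/12), where n is the MIDI note number and A4 (MIDI note 69) is tuned to 440 Hz. A minimal Python sketch, assuming the tone generator takes a frequency in hertz; the function and dictionary names are hypothetical:

```python
A4_HZ = 440.0   # concert pitch reference
A4_MIDI = 69    # MIDI note number of A4

# Semitone offset of each natural note from C within an octave
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_frequency(name: str, octave: int) -> float:
    """Frequency in Hz of a natural note in 12-tone equal temperament (A4 = 440 Hz)."""
    midi = 12 * (octave + 1) + NOTE_OFFSETS[name]  # e.g. C4 -> 60
    return A4_HZ * 2 ** ((midi - A4_MIDI) / 12)


# The three notes of "Hot Cross Buns" in one common key: E4, D4, C4
for note in ("E", "D", "C"):
    print(f"{note}4 = {note_frequency(note, 4):.2f} Hz")
```

Run as-is, this prints E4 = 329.63 Hz, D4 = 293.66 Hz, and C4 = 261.63 Hz, values that could then be entered into one tone generator per note.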

Since I considered this a simple proof of concept, I didn’t bother to create the whole keyboard and was satisfied with being able to play “Hot Cross Buns” using the three notes I had programmed. Once the patch was working well, I quickly realized that the tone generator in Isadora was very one-dimensional. It produced a pleasant sine wave, but the result sounded very robotic and computational. This was one component I knew would need further refinement. While I enjoyed the grapes as an instrument (and a snack), I wanted something a bit more meaningful. I had considered using braille to spell out the notes, but I ultimately decided to deviate further from the “keyboard” example and trigger sounds in a more abstract way.

Having completed the basic outline of a patch, I took a few days to think about ways I could modify and expand the concept so that it no longer resembled a piano. I was encouraged to explore the “AU” actors to expand Isadora’s audio handling. I found these actors useful but also a bit mysterious, since I could not find any readily accessible help documentation. Through trial and error, I found the actors that worked for my purposes and gained the control I needed to complete the patch.

For the tactile inputs, I was reminded of some basic three-dimensional shapes that have been lying around the Motion Lab. I don’t know their exact origin, but I liked the size and overall appearance of the simple sphere, cube, cone, and cylinder, so I decided to use them as the human-facing element of the project. The shapes’ simple forms reminded me of ancient truths, as if they were the basic elements of the universe: when these building blocks are combined, the mystery is revealed. At last, I had found a way to fulfill the overarching requirement of pressure project 2.

Since I chose to make this a sound-centric project, deciding which sound effects would play was one of the most important aspects. I wanted something both soothing and mysterious and was reminded of the souvenir “Tibetan singing bowls” I had seen in the Christmas markets of Europe. When the rim of the bowl is rubbed with a stick, the bowl starts to resonate like a bell and reverberates loudly in a very calming tone.

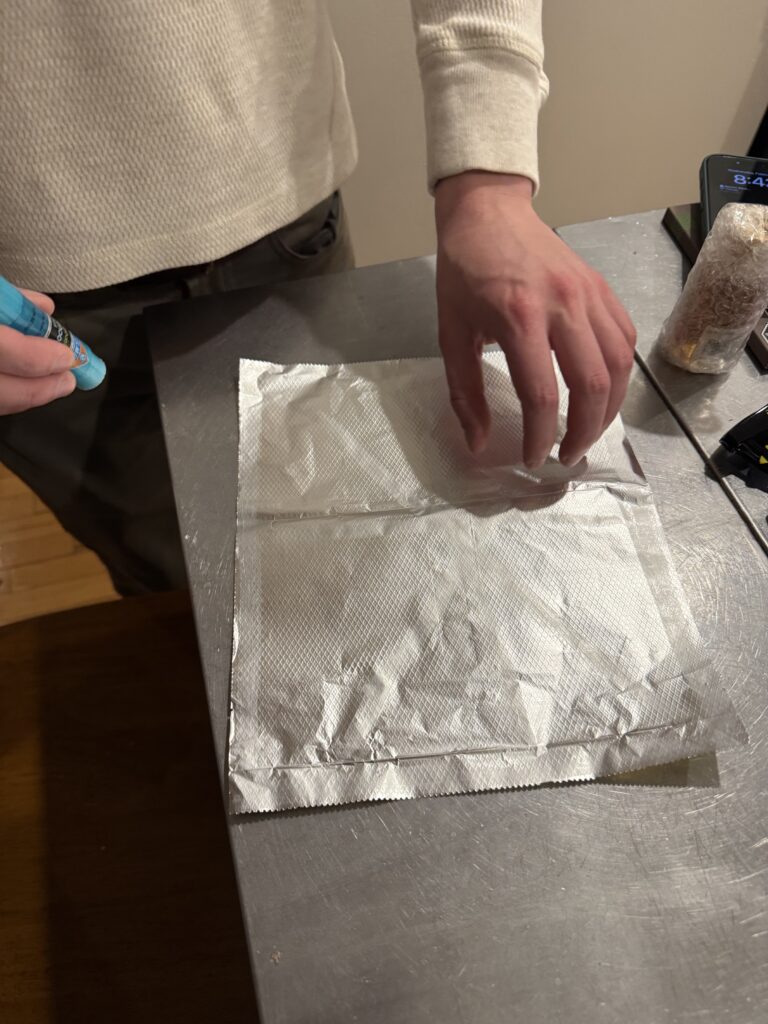

I downloaded some pre-recorded singing bowls from freesound.org and, after a bit of research, decided to cover the “singing shapes” in gold foil so that they resembled the metal bowls and would also conduct electricity for the Makey Makey. Wiring everything up was a breeze, and the basic patch I had made before only needed a few tweaks. The most difficult part was revealing the final “mystery”: when all four shapes were activated at the same time, a fifth sound, a choir singing, played.
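The “all four at once” reveal is essentially an AND over four held inputs. Because a Makey Makey presents itself to the computer as a keyboard, each foil-covered shape can be treated as a key that is either held or released. A hypothetical Python sketch of that logic (the shape and sound names are placeholders, not the actual patch), in which press order does not matter and the choir fires once each time the full set is completed:

```python
SHAPES = frozenset({"sphere", "cube", "cone", "cylinder"})


class SingingShapes:
    """Each shape plays its own bowl sound; holding all four at once adds a choir."""

    def __init__(self) -> None:
        self.held: set[str] = set()
        self.choir_playing = False

    def press(self, shape: str) -> list[str]:
        """Key-down from the Makey Makey; returns the sounds to start."""
        if shape not in SHAPES:
            raise ValueError(f"unknown shape: {shape}")
        self.held.add(shape)
        sounds = [f"bowl_{shape}"]
        # Order never matters: only whether every shape is currently held
        if self.held == SHAPES and not self.choir_playing:
            self.choir_playing = True
            sounds.append("choir")
        return sounds

    def release(self, shape: str) -> None:
        """Key-up; letting go of any shape re-arms the choir for next time."""
        self.held.discard(shape)
        if self.held != SHAPES:
            self.choir_playing = False


shapes = SingingShapes()
for s in ("cone", "sphere", "cylinder"):
    shapes.press(s)
print(shapes.press("cube"))  # ['bowl_cube', 'choir']
```

Tracking held state rather than counting presses is what lets the choir depend only on all four being touched together, which matches the observation that order made no difference.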

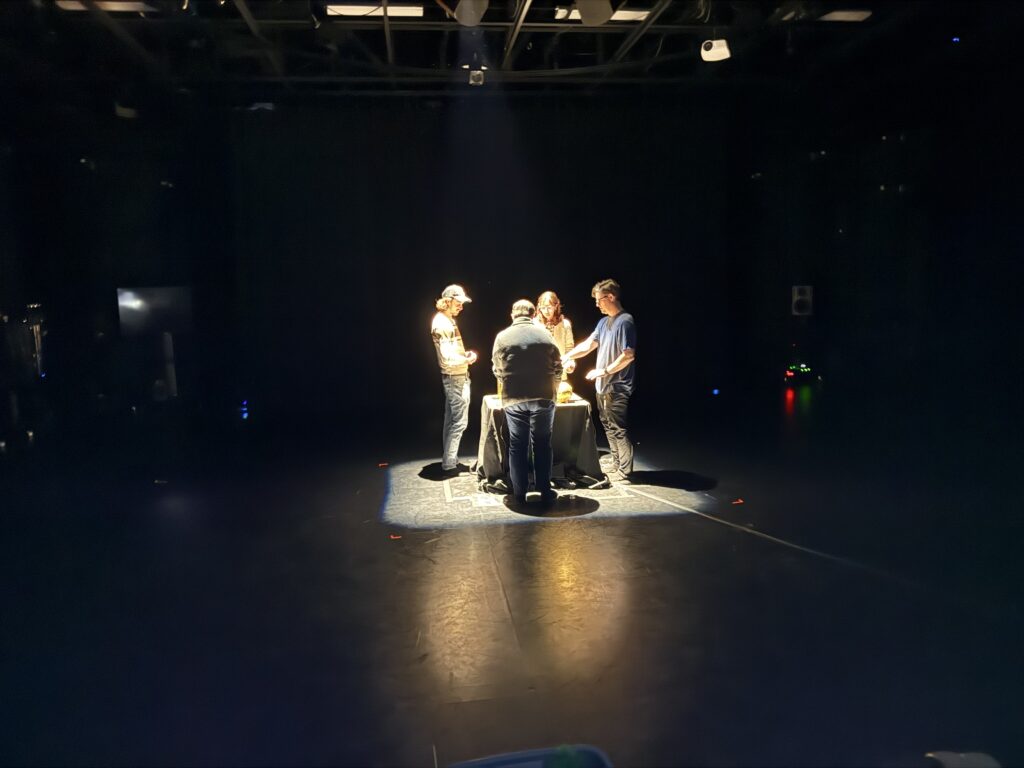

Finally, for the actual project presentation, I found myself with an extra hour to spare and an empty Motion Lab, so I decided to take the project to the next level and present a polished version to the class. I had this larger vision ahead of time but hadn’t planned to implement it, since I didn’t think I would have the opportunity; I’m glad I did. A few mirrors I found in the same box as the shapes, along with a fortuitously focused lighting special, added the finishing touch.

I was happy to watch the class interact with the singing shapes, and it quickly became clear they were putting a lot more thought into the “mystery” than I had. There were a few theories about which shape served which function and whether the order you pressed them in mattered; in reality, each shape played the exact same sound every time, and the order made no difference. Eventually all four were activated at the same time and the choir began to sing. I don’t think it was obvious enough: it was noticed, but there was no “aha” moment, and I think people were expecting a bit more of a payoff. Either way, I had fun making it, and I think others had fun experiencing it, so overall I think it turned out well.

Pressure Project 2: Unveil the Mystery

Posted: March 3, 2025

For this pressure project, we had to unveil a mystery. Going into it, I had a general idea of what I wanted to create, but I faced many struggles during execution. I spent a good portion of my 7 hours researching the different actors I would need and how to use them. I think I got so focused on the technical aspects that I pushed the storytelling to the side and didn’t flesh out the story as much as I wanted. Overall, though, my project was successful and I learned a lot.

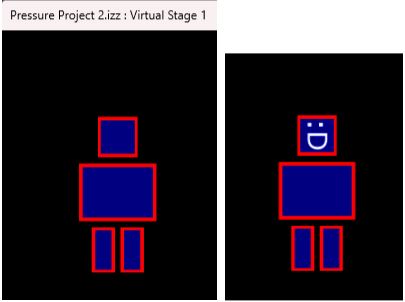

I wanted to hide little mini mysteries throughout my project, so I built three scenes for the user to explore before getting to the end. Each scene had a base set of actors that I built on to customize it. I created a little avatar that moved around the stage via a Mouse Watcher actor and added a sound effect (unique to each scene) that played when the avatar hit the edge of the stage. The avatar also performed a different action in each scene when the user hit a button on the Makey Makey interface I made.
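Playing a sound only when the avatar first reaches the edge, rather than on every frame it sits there, calls for a small edge-triggered check. A hypothetical Python sketch of that idea, not the actual Isadora patch; it assumes mouse coordinates are mapped to a stage range of −50 to 50 on each axis, which is an assumption for this example rather than a documented Isadora value:

```python
from dataclasses import dataclass

STAGE_MIN, STAGE_MAX = -50.0, 50.0  # assumed stage coordinate range on both axes


@dataclass
class Avatar:
    x: float = 0.0
    y: float = 0.0
    at_edge: bool = False


def move_avatar(avatar: Avatar, mouse_x: float, mouse_y: float) -> bool:
    """Move the avatar to the mouse position, clamped to the stage.

    Returns True only on the frame the avatar first reaches an edge, so the
    scene's edge sound plays once instead of on every frame it stays there.
    """
    x = min(max(mouse_x, STAGE_MIN), STAGE_MAX)
    y = min(max(mouse_y, STAGE_MIN), STAGE_MAX)
    touching = x in (STAGE_MIN, STAGE_MAX) or y in (STAGE_MIN, STAGE_MAX)
    hit = touching and not avatar.at_edge
    avatar.x, avatar.y, avatar.at_edge = x, y, touching
    return hit


avatar = Avatar()
print(move_avatar(avatar, 60, 0), avatar.x)  # True 50.0
```

Clamping keeps the avatar visible when the mouse overshoots the stage, and remembering `at_edge` is what turns a continuous “is touching” state into a one-shot sound cue.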

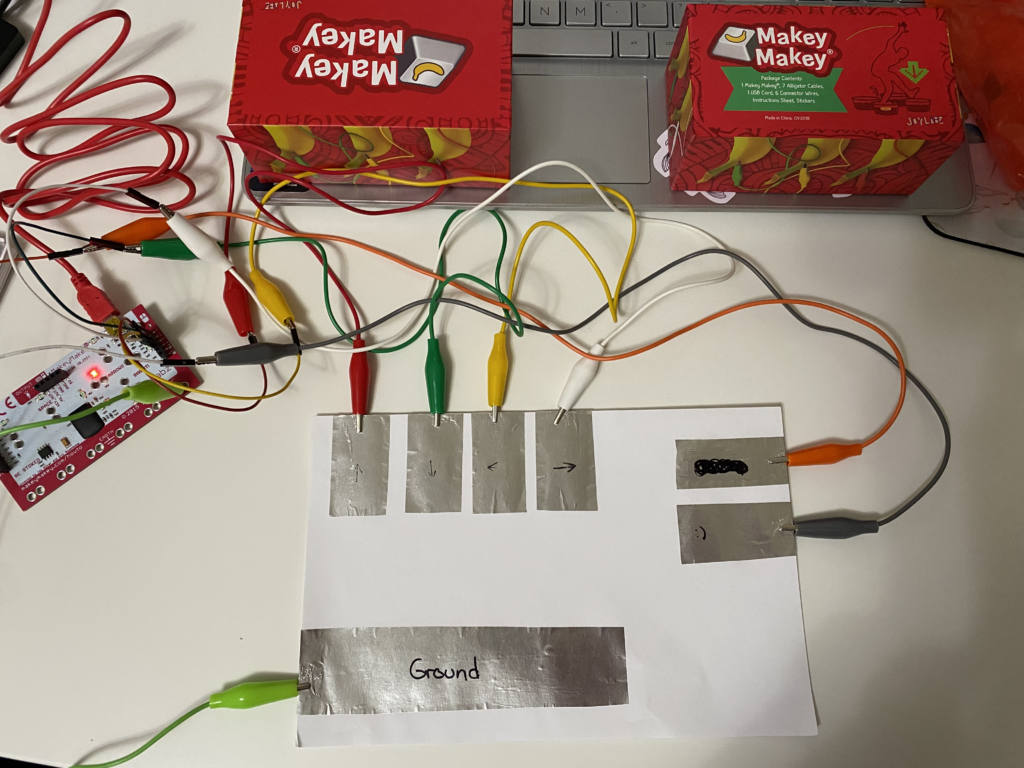

This is the Makey Makey interface I made for myself, which let me operate it with one hand. I planned to make another one that was more like a video game controller but ran out of time.

In the intro scene, a digital city, the avatar jumped when the key was hit, and after 7 jumps it inflated to take up a large portion of the scene, then quickly deflated. In the ocean scene, the key triggered fish to “swim” across the scene so the avatar could swim with them. In the space scene, the avatar teleported (an explosion actor was triggered to scatter the avatar’s pixels and re-form them). Every scene had another key plugged into a counter actor, and after that key was pushed a set number of times, the patch jumped to the next scene. This project was designed to make users curious enough to push all the buttons to figure out the mystery. A great suggestion I received was to put a question mark on the action button, which my peers agreed would be more effective than leaving it unlabeled.
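The two counters in this paragraph behave differently: the jump counter triggers the inflate effect at a multiple of seven, while the scene-advance counter fires once when it reaches its limit and then resets for the next scene. A hypothetical Python sketch of both, assuming the inflate repeats on every seventh jump; the seven-jump threshold comes from the text, but the five-press advance threshold and all names are assumptions, since the actual count isn’t stated:

```python
class SceneCounters:
    """Two independent counters for one scene (hypothetical names and defaults)."""

    def __init__(self, jumps_to_inflate: int = 7, presses_to_advance: int = 5):
        self.jumps_to_inflate = jumps_to_inflate
        self.presses_to_advance = presses_to_advance
        self.jumps = 0
        self.advance_presses = 0

    def action_key(self) -> str:
        """Most presses return "jump"; every Nth press returns "inflate" instead."""
        self.jumps += 1
        if self.jumps % self.jumps_to_inflate == 0:
            return "inflate"  # the inflate-then-deflate effect would play here
        return "jump"

    def advance_key(self) -> bool:
        """Returns True when the press count reaches the limit, then resets for the next scene."""
        self.advance_presses += 1
        if self.advance_presses >= self.presses_to_advance:
            self.advance_presses = 0
            return True
        return False


counters = SceneCounters()
print([counters.action_key() for _ in range(7)][-1])  # inflate
```

Using a modulo for the jump counter lets the surprise recur without resetting anything, whereas the advance counter resets so each new scene starts its own count.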

I received a lot of positive feedback on my project, both verbally and through reactions. There were several moments where my classmates were surprised by something that happened (aka a feature they unlocked), most notably when the avatar first exploded in the space scene. I was surprised by this because I didn’t think it would be a big moment, but everyone enjoyed it. They went in with an unlock-the-mystery mentality and were searching for things (often things that weren’t there), so they were happy to find the little features I put in before reaching the final mystery, which was that they had won a vacation sweepstakes. They said the scenes felt immersive and alive because I used video for the backgrounds. Again, the space scene was a big hit, because the stars moving toward the user were more noticeable than the subtler movement of the ocean scene. There were multiple moments of laughter and surprise during the presentation, so I am very pleased with my project.

The main critique was that the mystery was a bit confusing without context, and I agree. One suggestion was to add something pointing to a contest or raffle to give the final mystery context, and another was to progress from the ocean to land to the beach to reach the vacation resort, or something along those lines. My original idea was to have portals between scenes and end with a peaceful beach vacation after traveling so far, but I ran out of time and hadn’t dedicated enough of it to telling the story, so I ended up throwing something in at the end. They did say the final scene provided closure, because the avatar had a smile (unique to this scene) and was jumping up and down in celebration.

I hit a creative rut during this project, so the majority of it was improvised, which contributed to the storytelling problem. I started by just making the avatar in the virtual stage because making a lil guy seemed manageable, and it sparked some ideas. I decided on a video game-esque project where the avatar would move around the scene. As I brainstormed and started researching, I built a list of ideas for what could happen as the user navigated through my little world. I spent about 20-25 minutes on each idea on the list to figure out what worked. This involved research and (attempted) application. Some things didn’t work, and I moved on to try other things.

I broke this project up into several short sessions to make it more manageable, especially given my creative struggles. This gave me time to sit and process and reflect on what I had already done, attempted, and wanted to do. I was able to figure out a few mechanisms I had struggled with and go back later to make them work, which helped move the project along. One time-based challenge I did not consider was how long it would take to find images, videos, and sound effects. The idea to add these sonic and visual elements came much later in the process, so I did not have much time left to reallocate. I do think I would have been better off creating a storyboard before diving right in, to better prepare myself for the project as a whole.