Cycle Three: The Forgotten World of Juliette Warner

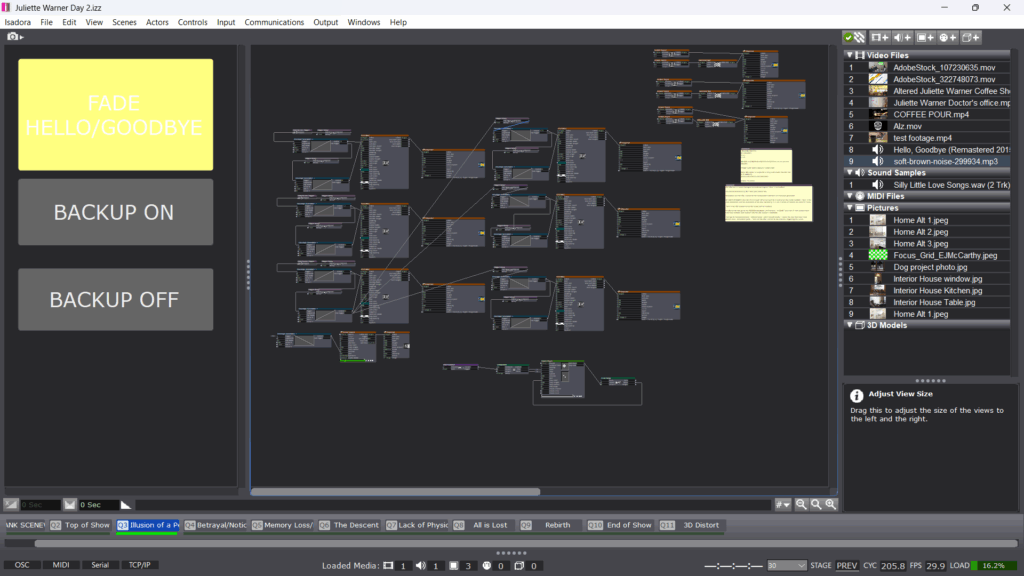

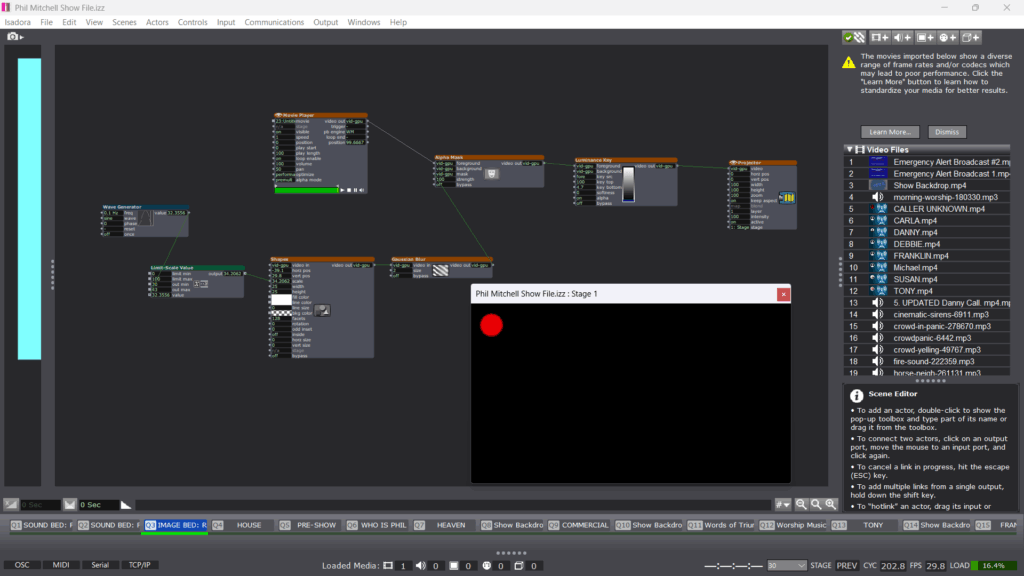

Posted: April 30, 2025 | Filed under: Uncategorized

For my third cycle, I wanted to revisit my cycle one project based on the feedback I had received, which centered mostly on the audience’s relationship with the projections. One sticking point from that earlier cycle was that all three projectors featured the same projected surface. This choice was originally made as a preventative measure to keep Isadora’s load low. That being said, the first point of focus for my cycle three project was determining whether three separate projected video streams would be sustainable. Once I confirmed that they were, I began sourcing media for the entire script.

After gathering my media, I moved on to an element I’ve wanted to incorporate since cycle one but hadn’t felt fully ready to tackle: depth sensors. I used the Orbbec sensor placed in the center of the room, facing down (bird’s-eye view), and defined a threshold that actors could enter or exit, which would then trigger cues. I accessed the depth sensor through a pre-built patch (thank you, Alex and Michael) in TouchDesigner, which I connected to Isadora via an OSC Listener actor. This setup allowed Isadora to receive values from TouchDesigner and use them to trigger events. With this in mind, I focused heavily on developing the first scene to ensure the sensor threshold was robust. I marked the boundary on the floor with spike tape so the performers had a clear spatial reference.
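
The enter/exit threshold idea can be sketched in a few lines of Python. This is only an illustration of the logic, not the TouchDesigner patch or the Isadora setup described above: the class name, the millimetre values, and the use of two thresholds (a small gap so a performer hovering at the boundary does not retrigger the cue) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ZoneTrigger:
    """Emits 'enter' / 'exit' when a depth reading crosses a threshold.

    Hypothetical values: a downward-facing sensor reads a smaller
    distance when someone steps underneath it.
    """
    enter_below_mm: float = 1800.0  # closer than this counts as "inside"
    exit_above_mm: float = 2000.0   # must rise past this to count as "outside"
    occupied: bool = False

    def update(self, depth_mm: float):
        """Process one depth sample and return 'enter', 'exit', or None."""
        if not self.occupied and depth_mm < self.enter_below_mm:
            self.occupied = True
            return "enter"
        if self.occupied and depth_mm > self.exit_above_mm:
            self.occupied = False
            return "exit"
        return None


if __name__ == "__main__":
    trigger = ZoneTrigger()
    for sample in [2500, 2100, 1750, 1900, 1850, 2050, 2400]:
        event = trigger.update(sample)
        if event:
            print(f"{sample} mm -> {event}")
```

In a setup like the one described, each "enter" or "exit" would be the moment a value is sent on to the cueing software; the gap between the two thresholds is one possible way to make the boundary feel robust.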

Outside of patch-building, I devoted significant time to rehearsing with three wonderful actors. We rehearsed for about three hours total. Because class projects sometimes fall through due to cancellations, as happened with my first cycle, I wanted to avoid that issue this time around. It was important to me that the actors understood how the technology would support their performances. To that end, I began our first rehearsal by sharing videos that illustrated how the tech would function in real time. After that, we read through the script and discussed it in our actor-y ways, and I explained how I envisioned the design unfolding across the show.

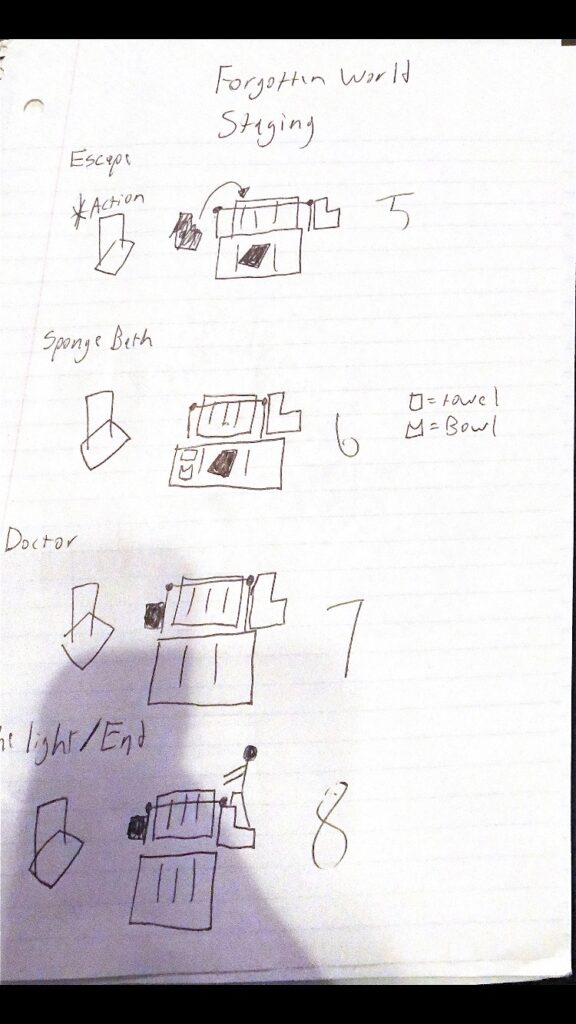

In our second rehearsal, we got on our feet. I defined entrances and exits based on where the curtains would part and placed mats to indicate where the bench would be in the Motion Lab. These choices helped define the shared “in-the-round” experience for both audience and performers.

Over the weekend, I worked extensively on my patch. The full script juxtaposes the Heroine’s Journey with the stages of Alzheimer’s, so I wanted the audience to know which stage they were in as the performance unfolded. Using a combination of enter-scene triggers, trigger delays, envelopes, and text-draw actors, I created a massive patch for each scene that displayed the corresponding stage. This was by far my biggest time investment.

When I was able to get into the lab space with my actors for a mini tech run, I realized that these title cards, which I had spent so much time on, were not serving the immersive experience and, to be honest, were ugly. The entire concept of the piece was to place the audience alongside Juliette on her journey, sharing her perspective via media. Having text over that media disrupted the illusion, so I cut the text patches, and Isadora’s performance load improved as a result.

I spent another two hours between tech and performance fine-tuning the timing of scene transitions, something I had neglected earlier. This led to the addition of a few trigger delays for sound to improve flow.

When it came time to present the piece to a modest audience, I was surprisingly calm. I had come to terms with the idea that each person’s experience would be unique, shaped by their own memories and perspectives. I had my own experience watching from outside the curtains, observing it all unfold. There’s a moment in the show where Juliette’s partner Avery gives her a sponge bath, and we used the depth sensor to trigger sponge noises during this scene. I got emotional there for two reasons. First, the performer took his time, and it came across as such a selfless act of care, exactly as intended. Second, I was struck by the realization that this was my final project of grad school, an evolution of a script I wrote my first year at OSU. It made me appreciate just how much I’ve grown as an artist, not just in this class, but over the past three years.

The feedback I received was supportive and affirming. It was noted that the media was consistent and that a media language had been established. While not every detail was noticed, most of the media supported the immersive experience. I didn’t receive the same critiques I had heard during cycle one, which signaled a clear sense of improvement. One comment that stuck with me was that everyone had just experienced theatre. I hadn’t considered how important that framing was. It made me reflect on how presenting something as theatre creates a kind of social contract: it’s generally not interactive or responsive until the end. That’s something I’m continuing to think about moving forward.

Once the upload is finished, I will include it below:

Cycle 3: Grand Finale

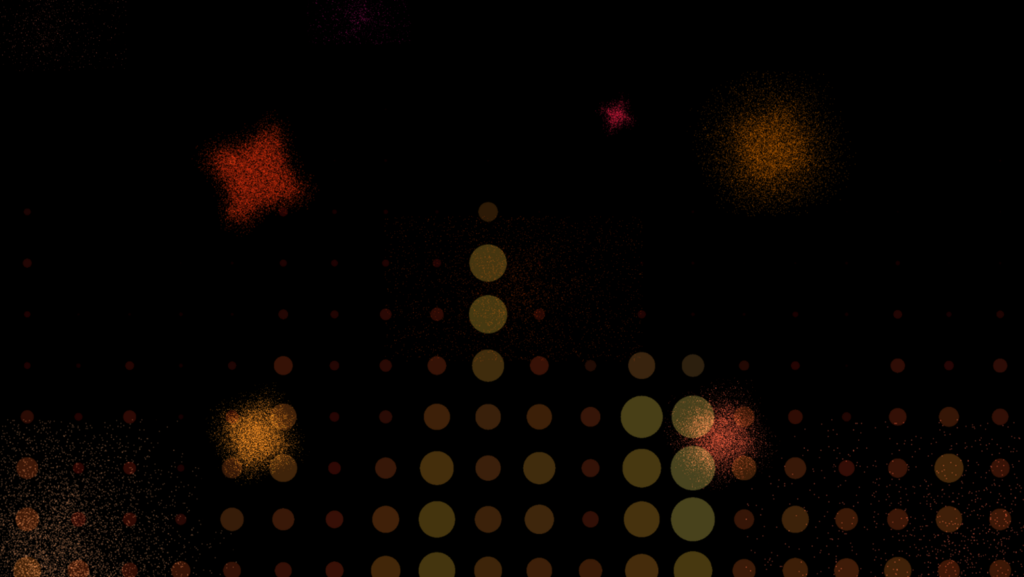

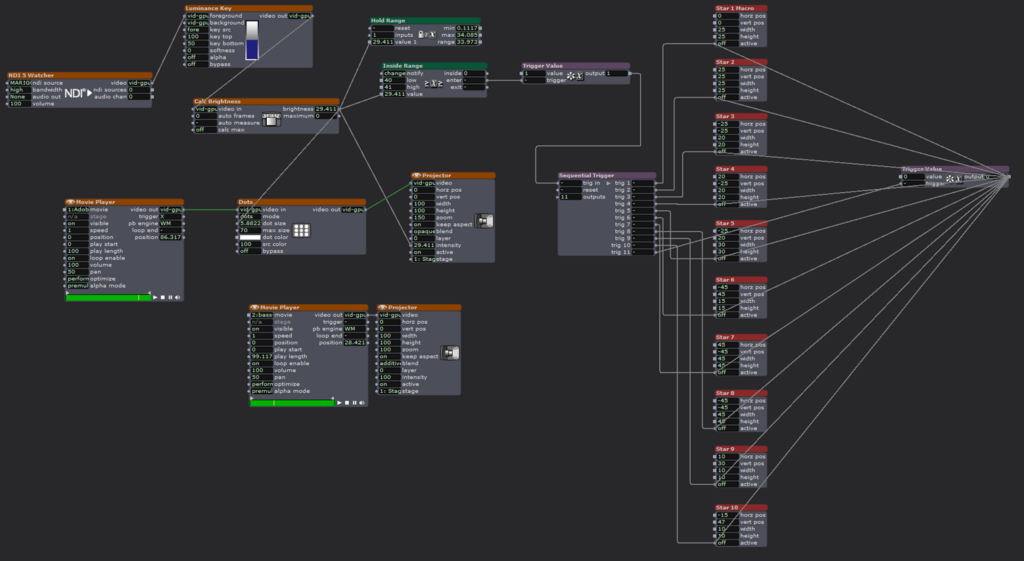

Posted: April 29, 2025 | Filed under: Uncategorized

For the final project, I decided to do some polishing on my Cycle 2 project and then try to add to it to create a more complete experience. During feedback for Cycle 2, a few people felt the project could be part of a longer set, so I decided to try to figure out where that set might go. This allowed me to keep playing with some of the actors I had been using before, but also to try to see how I could alter the experience in various ways to keep it interesting. I, again, spent less time than I did in the beginning of the semester looking for assets. I think I knew what I was looking for more quickly than I did in the beginning, and I knew the vibe I wanted to maintain from the last cycle. While I, for the second cycle in a row, neglected to actually track my time, a decent portion of it was spent trying to explore some of the effects and actors I hadn’t used before that could alter the visuals of what I was doing in smaller ways, by swapping out one actor for another. As always, a great deal of time was spent adjusting and fine-tuning various aspects of the experience. Unlike in the past, far, far too much time was spent trying to recover from system crashes, waiting for my computer to unfreeze, and generally crossing my fingers that my computer will make it through a single run of the project without freezing up. Chances seem slim.

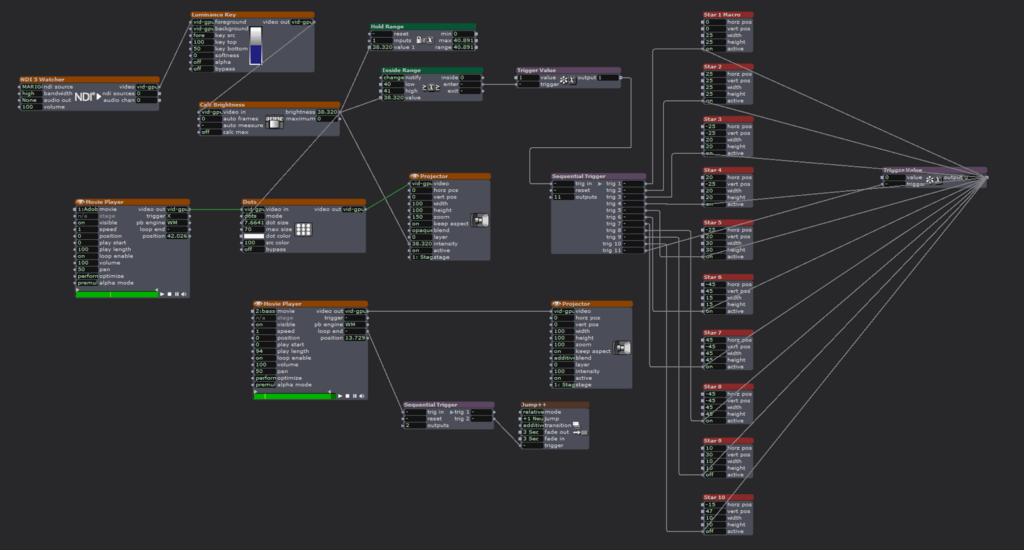

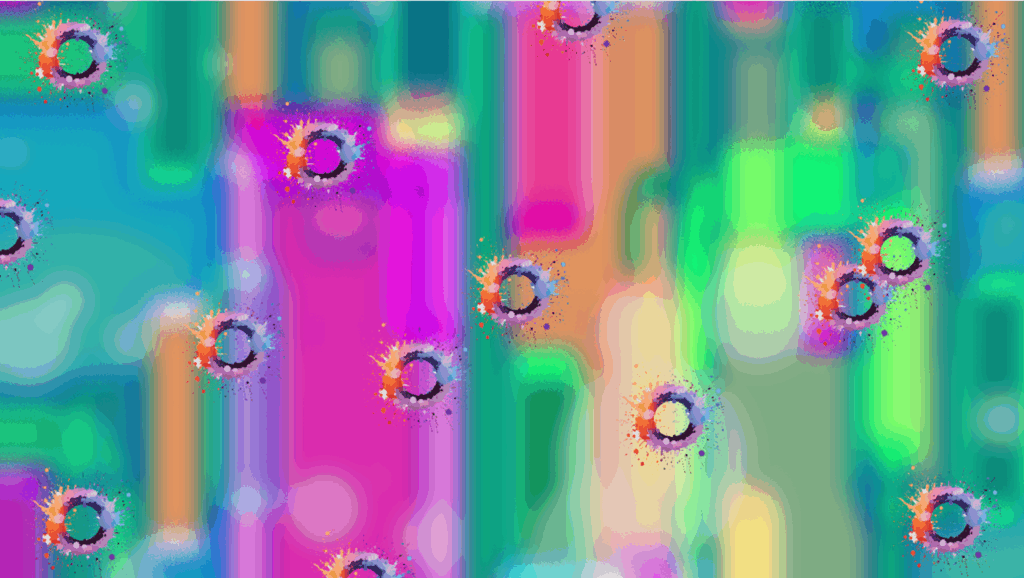

Cleaned Up and Refined Embers

Flame Set-up

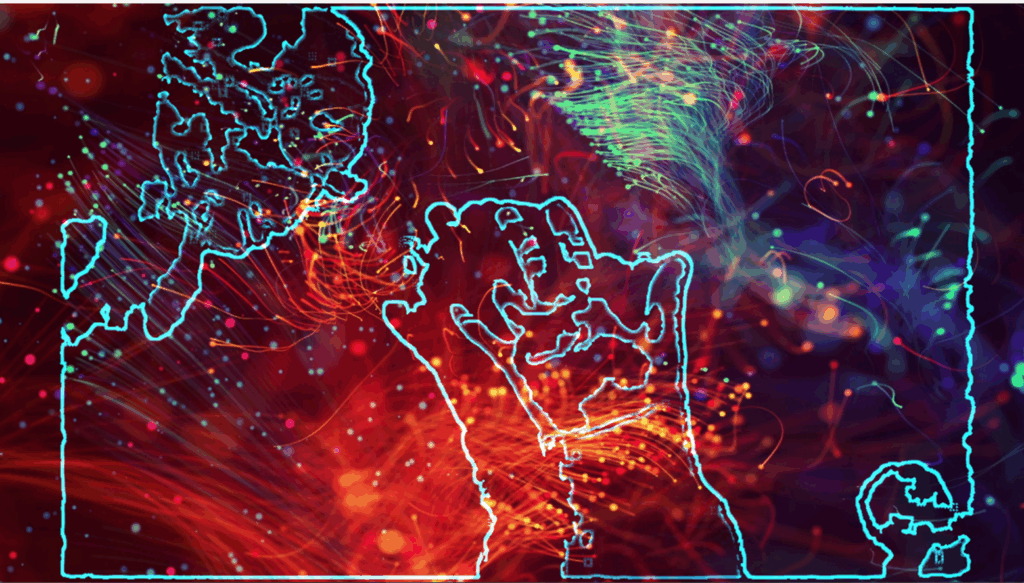

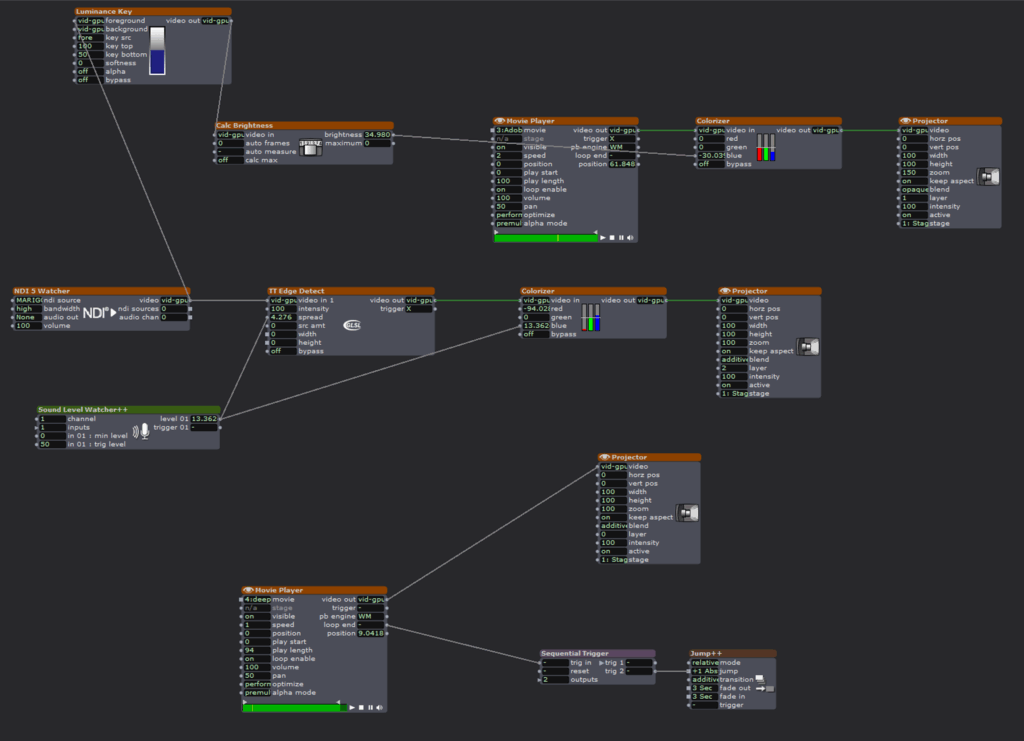

I again worked with the Luminance Key, Calc Brightness, Inside Range, and Sequential Triggers actors. Sequential Triggers, in particular, are a favorite because I like tying “cues” together and then being able to reset the experience without anyone needing to touch a button. Throughout the class, I’ve been interested in making experiences that cycle and don’t have an end, things that can be entered at any time, really, and can be abandoned at any point. To me, this most closely mimics the way I’ve encountered experiences in the wild. Think of any museum video, for instance. They run on a never-ending loop, and it’s up to the viewer to decide to wait until the beginning or just watch through until they return to where they entered the film. For me, I want something that people can come back to and that isn’t strictly tied to a start and stop. I want people to be able to play at will, wait for a sequence they particularly enjoyed to come back around, leave after a few cycles, etc. I am still using TouchDesigner and a depth sensor to explore these ideas. This time around, I also added the TT Edge Effect, Colorizer, and Sound Level Watcher ++ actors to the mix. I was conservative with the Sound Level Watcher because my system was already getting overloaded at that point, and while I wanted to incorporate it more, I wanted to err on the side of actually being able to run my show.
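
The looping, no-reset behavior described above can be pictured with a tiny Python sketch. It is not how Isadora's Sequential Triggers actor is implemented; the class and the cue names are hypothetical, and the only point is that each incoming trigger fires the next cue and the sequence wraps around on its own.

```python
class SequentialCues:
    """Fires cues one after another and wraps back to the first.

    An illustrative stand-in for a chain of cues that resets itself,
    so nobody has to press a button to restart the experience.
    """

    def __init__(self, cues):
        if not cues:
            raise ValueError("at least one cue is required")
        self._cues = list(cues)
        self._index = 0

    def fire(self):
        """Return the current cue name and advance to the next one."""
        cue = self._cues[self._index]
        self._index = (self._index + 1) % len(self._cues)
        return cue


if __name__ == "__main__":
    # Hypothetical cue names, chosen only for the example.
    sequence = SequentialCues(["embers", "flames", "neurons"])
    for _ in range(5):
        print(sequence.fire())
```

Because the index wraps with a modulo, a visitor can arrive at any point in the cycle and simply wait for a favorite cue to come back around.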

“Neuron” Visuals

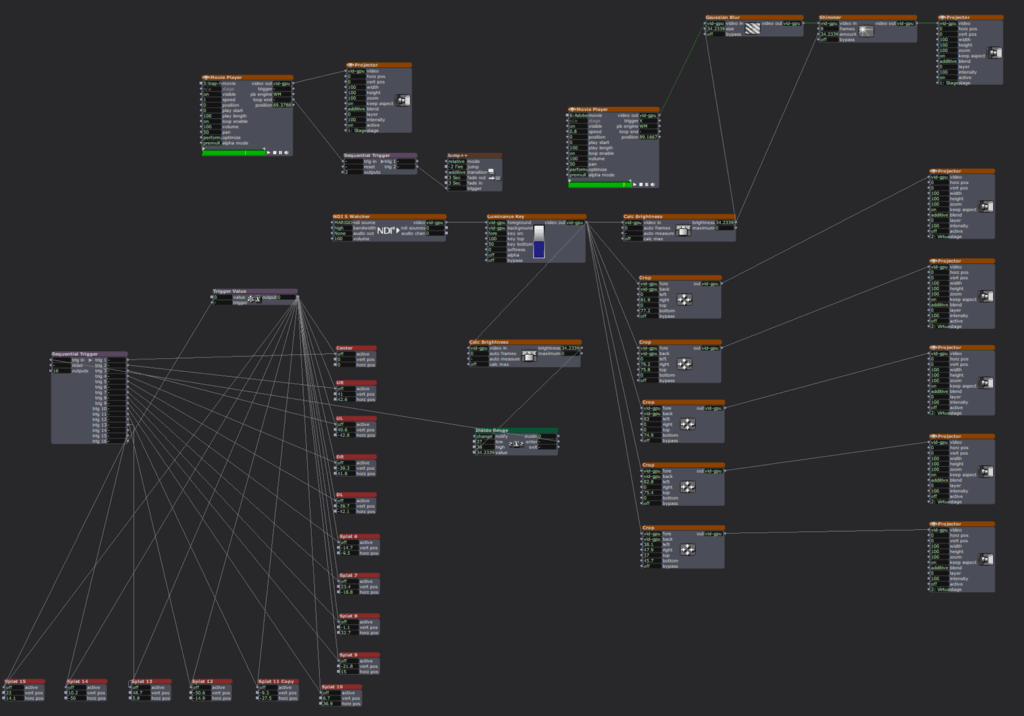

“Neuron” Stage Set-up

The main challenge I faced this time was that my computer is starting to protest, greatly, and Isadora decided she had had enough. I ran into errors a number of times, and while I didn’t lose a significant amount of work, I did lose some, and the time I lost to the program crashing, restarting my computer in hopes it would help, and various other troubleshooting attempts was not insignificant. In retrospect, I should have found ways to scale back my project so my computer could run it smoothly, but everything was going fine until it very much wasn’t. I removed a few visual touches and pieces to try to get the last scene, in particular, to work, and I’m hoping that will be enough.

Initial Set-up of Final Scene

Final Scene Visuals before Edits

For this project, I mostly was focusing on expanding on my Cycle 2 project to make a longer experience. I don’t know that the additional scenes ended up being as developed as the first, but I think they still added to the experience as a whole. I worked hard to incorporate music to make a more holistic experience, as well. As stated above, I couldn’t do so to the level I would have liked, but I hope the impulse toward that is recognized and appreciated, such as it is. I also wanted to address some of the visual notes I received in the last cycle, which I was able to do with relative ease. I tried to find new ways for people to interact with the project in the added scenes, but I feel they are a little lacking in the interactive department. Ultimately, it was a combination of struggling with Isadora logic, still, and a lack of ideas. I still feel my creativity in these projects is somewhat stymied by my beginner status with media. I can’t quite get to the really creative exploration, still, because I’m working on figuring out how to make things work and look somewhat finished. I’m satisfied with what I’ve been able to put together, I just wish I were able to create at a higher level (it’s an unrealistic wish, but a wish, nonetheless).

If I were to continue to work on this project, I would work to increase the interactivity in each scene and make things more sound reactive. I would continue to look for different ways people could play with the scenes. I think there’s a world where something like this is maybe 5 or 6 scenes long, and it runs 20-30 minutes. I think this one runs maybe 15 minutes with three scenes, which maybe would work well with more interactivity woven in. Regardless, I’m happy with what I managed to put together and all I managed to cram into my noggin in one semester.

ADDENDUM: I ended up cutting the third scene of the experience before I presented it. By the time I removed enough of the effects to get it to run smoothly, it felt too derivative, and I decided to stick with the two scenes that were working and “complete.” If I had more time, I would work on getting the third scene to a simpler patch that was still engaging.

Cycle 2 | Mysterious Melodies

Posted: April 20, 2025 | Filed under: Uncategorized

For my cycle 2 project, I wanted to expand upon my original idea and add a few resources based on the evaluation I received from the initial performance. I wanted to lean away from the piano-ness of my original design and instead abstract the experience into a soundscape that was a bit more mysterious. I also wanted to create an environment that was less visually overwhelming and played more with the sense of light in space. I have long been an admirer of the work of James Turrell, an installation artist who uses light, color, and space as his main medium. Since my background is primarily in lighting and lighting design, I decided to remove all of the video and projection elements and focus only on light and sound.

ACCAD and the Motion Lab recently acquired a case of Astera Titan Tubes. They are battery-powered LED tubes that resemble the classic fluorescent tube, but have full color capabilities and the ability to be separated into 16 “pixels” each. They also have the ability to receive wireless data from a lighting console and be controlled and manipulated in real time. I started by trying to figure out an arrangement that made sense with the eight tubes that I had available to me. I thought about making a box with the tubes at the corners, and I also thought about arranging them in a circular formation. I decided against the circular arrangement because, arranged end to end, the 8 tubes would have made an octagon, and spread out, they were a bit linear and created more of a “border”. Instead, I arranged them into 8 “rays”: lines that all fanned out from a central point. This arrangement felt a bit more inviting; however, it did create distinct “zones” in between the various sections. The tubes also have stands that allow them to be placed vertically. I considered this as well, but I ended up just setting them flat on the ground.

In order to program the lights, I opted to go directly from the lighting console. This was the most straightforward approach, since they were already patched and working with the ETC Ion in the Motion Lab. I started by putting them into a solid color that would be the “walk in” state. I wanted to animate the pixels inside the tubes and started by experimenting with the lighting console’s built-in effects engine. I have used this console many times before, but I have found the effects to be a bit lacking in usability and struggled to manipulate the parameters to get the look I was going for. I was able to get a rainbow chase and a pixel “static” look. This was okay, but I knew I wanted something a bit more robust. I decided to revisit the pixel mapping and virtual media server functions that are built into the console. These capabilities allow the programmer to create looks that are similar to traditional effects, but also create looks that would otherwise be incredibly time consuming, if not completely impossible, using the traditional methods. It took me a bit to remember how these functions worked since I have not experimented with them since my time working on “The Curious Incident of the Dog in the Night-Time”, programming the lighting on the set that had pixel tape integrated into a scenic grid. I finally got the pixel mapping to work, but ran out of time before I could fully implement the link to triggers already being used in TouchDesigner. I manually operated the lighting for this cycle, and intend to focus more on this in the next cycle.

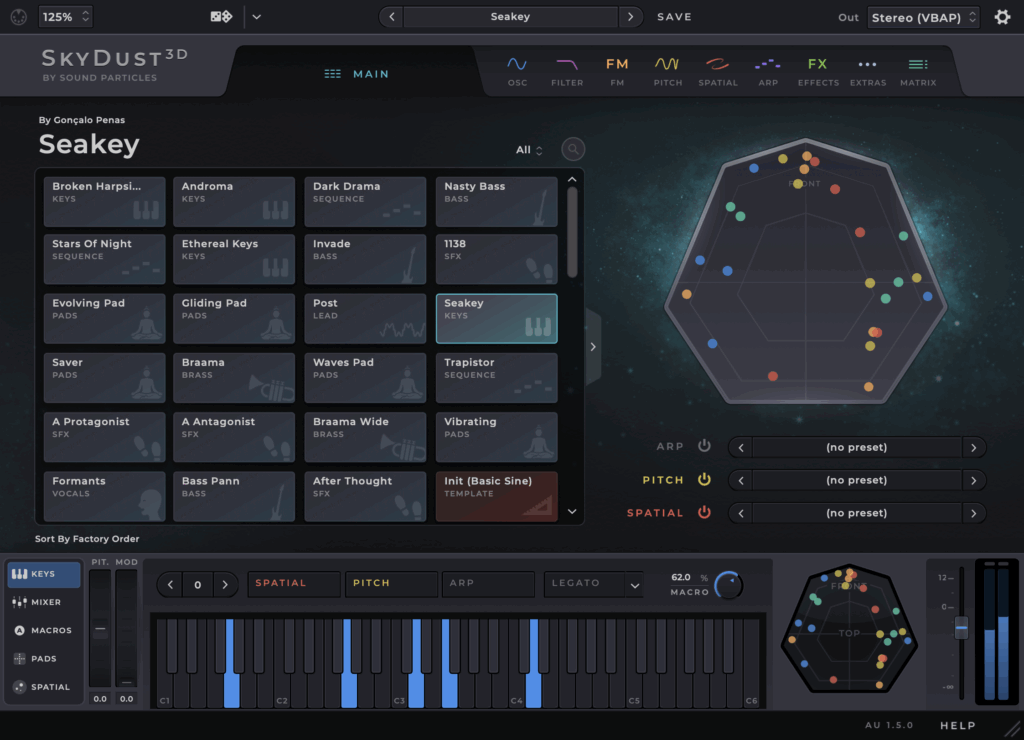

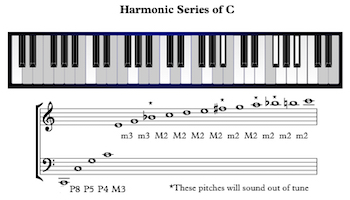

For the audio portion, I decided to use “Skydust”, a spatial synthesizer that Professor Jean-Yves Munch recommended. This allowed me to use the same basic MIDI integration as the last cycle, but expand the various notes into spatial sound without the need for an extra program to take care of the panning. Similar to the last cycle, I spent a lot of time listening to the various presets and the wide variety of sounds and experiences they produced, everything from soft and soothing to harsh and scary. I ended up going with the softer side and finding a preset called “waves pad”, which produced something a bit ethereal but not too “far out”. I also decided to change up the notes a bit. Instead of using the basic C chord, I decided to use the harmonic series. I had a brief discussion with Professor Marc Ainger, and this was recommended as a possible arrangement that went beyond the traditional chord structure.
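
For readers unfamiliar with the harmonic series, here is a small Python sketch of how its notes relate to MIDI note numbers. The fundamental (MIDI note 36, a low C) and the choice of eight harmonics are assumptions made for the example; the post does not say which fundamental or how many partials were used. Harmonic n sits at n times the fundamental frequency, and the nearest MIDI note follows from the standard 440 Hz tuning formula.

```python
import math


def midi_to_hz(note: float) -> float:
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def hz_to_midi(freq: float) -> float:
    """Convert a frequency in Hz to a (possibly fractional) MIDI note number."""
    return 69 + 12 * math.log2(freq / 440.0)


def harmonic_series(fundamental_note: int, count: int):
    """Return (harmonic number, frequency, nearest MIDI note, cents offset).

    The cents offset shows how far the true harmonic lies from the
    equal-tempered note a MIDI instrument would actually play.
    """
    f0 = midi_to_hz(fundamental_note)
    rows = []
    for n in range(1, count + 1):
        freq = n * f0
        exact = hz_to_midi(freq)
        nearest = round(exact)
        rows.append((n, freq, nearest, (exact - nearest) * 100))
    return rows


if __name__ == "__main__":
    # Assumed fundamental: MIDI 36 (a low C), first eight harmonics.
    for n, freq, note, cents in harmonic_series(36, 8):
        print(f"harmonic {n}: {freq:7.2f} Hz  MIDI {note}  ({cents:+.0f} cents)")
```

With a C fundamental the first six harmonics land close to the notes of a C major chord spread across several octaves, while the seventh falls noticeably flat of the nearest equal-tempered note, which is part of what makes the series sound different from a conventional chord.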

For the patch, I kept the same overall structure but moved the trigger boxes around into a more circular shape to fill the space. Additionally, I discovered that I could replace the box with other shapes. Since the overall range of the depth sensor is a circle, I decided to try out the “tube” or cylinder shape in TouchDesigner. When adding boxes and shapes into the detector, I also noticed that there is a limit of 8 shapes per detector. This helped a bit to keep things simplified, and I ended up with 2 detectors: one that had boxes in a circular formation, and another with tubes of various sizes. Of the tubes, one was low and covered the entire area, another was only activated in the center, and a third was only triggered if you reached up in the center of the space.
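
The three "tube" zones can be illustrated with a simple point-in-cylinder test in Python. This is a sketch of the geometry only, not the TouchDesigner detector itself: the zone names, the dimensions in metres, and the coordinate convention (x and y on the floor, z as height) are all hypothetical.

```python
import math
from dataclasses import dataclass

MAX_SHAPES_PER_DETECTOR = 8  # limit mentioned in the post


@dataclass(frozen=True)
class Cylinder:
    """An upright cylinder trigger zone centred at (cx, cy) on the floor."""
    name: str
    cx: float
    cy: float
    radius: float
    z_min: float
    z_max: float

    def contains(self, x: float, y: float, z: float) -> bool:
        """True if the point lies within the radius and height range."""
        within_radius = math.hypot(x - self.cx, y - self.cy) <= self.radius
        within_height = self.z_min <= z <= self.z_max
        return within_radius and within_height


# Hypothetical dimensions: a wide low zone, a narrower centre zone,
# and a small zone that is only reachable with a raised hand.
ZONES = [
    Cylinder("floor", 0.0, 0.0, radius=3.0, z_min=0.0, z_max=1.0),
    Cylinder("center", 0.0, 0.0, radius=0.75, z_min=0.0, z_max=1.9),
    Cylinder("reach", 0.0, 0.0, radius=0.5, z_min=2.0, z_max=2.6),
]
assert len(ZONES) <= MAX_SHAPES_PER_DETECTOR


def active_zones(x: float, y: float, z: float):
    """Return the names of every zone containing the tracked point."""
    return [zone.name for zone in ZONES if zone.contains(x, y, z)]


if __name__ == "__main__":
    print(active_zones(2.0, 0.0, 0.5))   # somewhere near the edge
    print(active_zones(0.2, 0.1, 0.5))   # standing in the middle
    print(active_zones(0.0, 0.0, 2.3))   # hand raised in the middle
```

Overlapping zones are allowed here on purpose, so one position can trigger more than one sound, while the raised-hand zone stays silent until someone reaches into it.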

The showing of this project was very informative since only one other person had experienced the installation previously. I decided to pull the black scrim in front of the white screen in the Motion Lab to darken the light reflections, while also allowing the spatial sound to pass through. I also pulled a few black curtains in front of the control booth to hide the screens and lights inside. I was pleasantly surprised by how long people wanted to explore the installation. There was a definite sense of people wanting to solve or decode a mystery or riddle, even though there was no real secret. The upper center trigger was the one thing I hoped people would discover, and they eventually found it (possibly because I had shown some people before). For my next cycle I plan to continue with this basic idea but flesh out the lighting and sonic components a bit more. I would like to trigger the lighting directly from TouchDesigner. I am still searching for the appropriate soundscape and am debating between using pre-recorded samples to form a song or continuing with the MIDI notes and instruments. Maybe a combination of both?

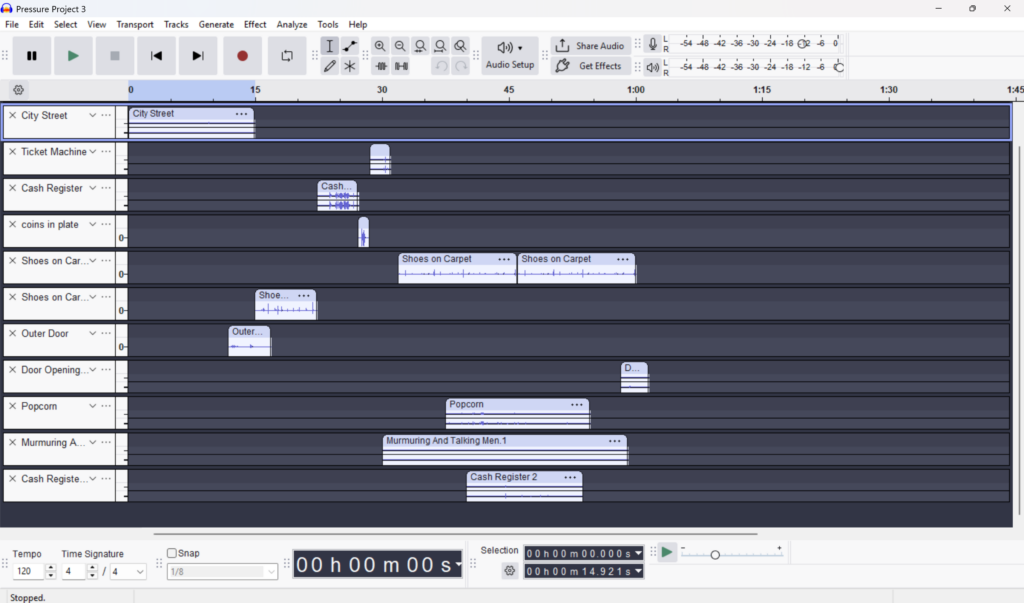

Pressure Project 3 | Highland Soundscapes

Posted: April 20, 2025 | Filed under: Uncategorized | Tags: Pressure Project 3

Our prompt for pressure project 3 was to create an audio soundscape that reflects a cultural narrative that is meaningful to you. Since I am 75 percent Scottish, I chose to use the general narrative of Braveheart as an inspiration. My biggest challenge for this project was my motivation. Since I am concurrently taking Introduction to Immersive Audio, I have already done a project similar to this one just a few weeks ago. I struggled a bit with that project, so this one seemed a bit daunting and unexciting. I must admit, the biggest hurdle was just getting started.

I wanted to establish the setting of the Scottish countryside near the sea. I imagined the Cliffs of Moher, which are actually in Ireland, but the visual helped me to search and evaluate the many different wave sounds available. I used freesound.org for all of my various sound samples. In order to establish the “countryside” of the soundscape, I found various animal noises. I primarily focused on “highland cow” and sheep. I found many different samples for these, and eventually I got tired of listening to the many moos and trying to decide which sounded more Scottish. I still don’t know how to differentiate.

To put the various sounds together I used Reaper. This is the main program we have been using for my other class, so it seemed like the logical choice. I was able to lay a bunch of different tracks in easily and then shorten or lengthen them based on the narrative. Reaper is easy to use after you get the hang of it, and it is free to use when “evaluating”, which apparently most people do their entire lives. I enjoyed the ease of editing and fading in and out, which is super simple in Reaper. Additionally, the ability to automate panning and volume levels allowed me to craft my sonic experience easily.

For the narrative portion, I began by playing the seaside cliffs with waves crashing. The sound of the wind and crashing waves set it apart from a tranquil beach. I gradually began fading in the sound of hooves and sheep, as if you were walking down a path in the highlands and a herd of cattle or sheep was passing by. The occasional “moo” helped to establish the pasture atmosphere I wanted. The climax of the movie Braveheart is a large battle where the rival factions charge one another. For this, I found some sword battle sounds and groups of people yelling. After the loud, intense battle, I gradually faded out the sounds of clashing swords and commotion to signify the end of the battle. To provide a resolution, I brought back in the sounds of the cattle and seaside; this was to signify that things more or less went “back to normal”, or that life goes on.

For the presentation portion, I decided to take an extension since my procrastination only led me to have the samples roughly arranged in the session, and the narrative portion wasn’t totally fleshed out. This was valuable not only because I was not fully prepared to present on day 1, but also because I was able to hear some of the other soundscapes, which helped me to better prepare and understand the assignment. While I am not proud that I needed an extension, I am glad I didn’t decide to present something that I didn’t give the proper amount of effort to. While I don’t think it was my best work, I do think it was a valuable exercise in using time as a resource and in how stories can sometimes take on a life of their own when a listener has limited visual and lingual information and must rely totally on the sounds to establish the scene and story. I felt the pressure of project 3 and I am glad I got the experience, but I’m also glad it’s over 🙂

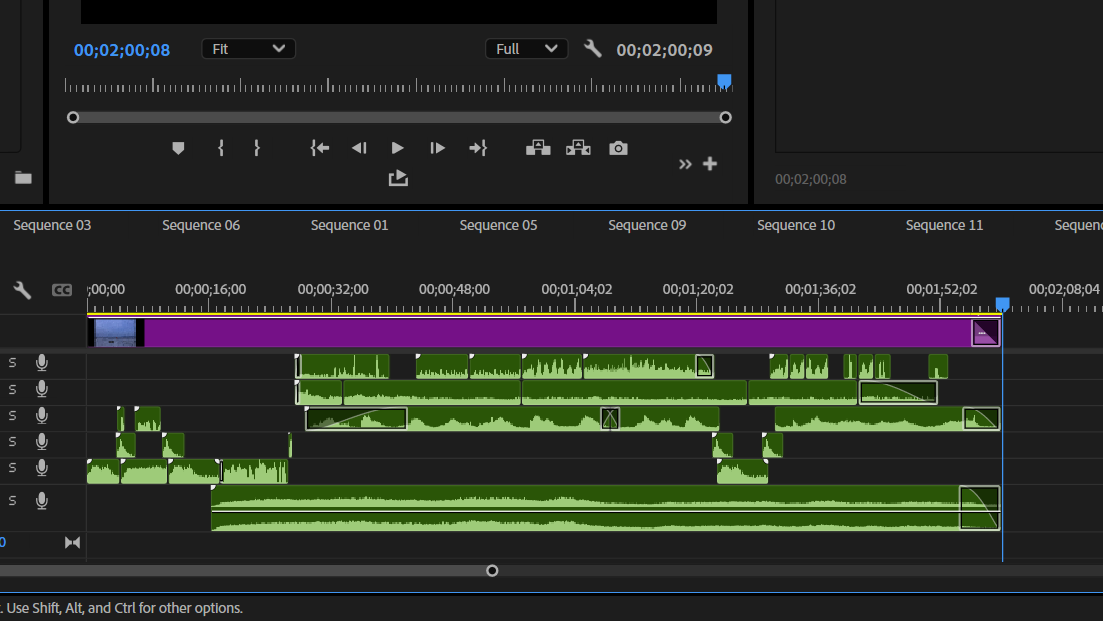

Cycle 2: The Rapture

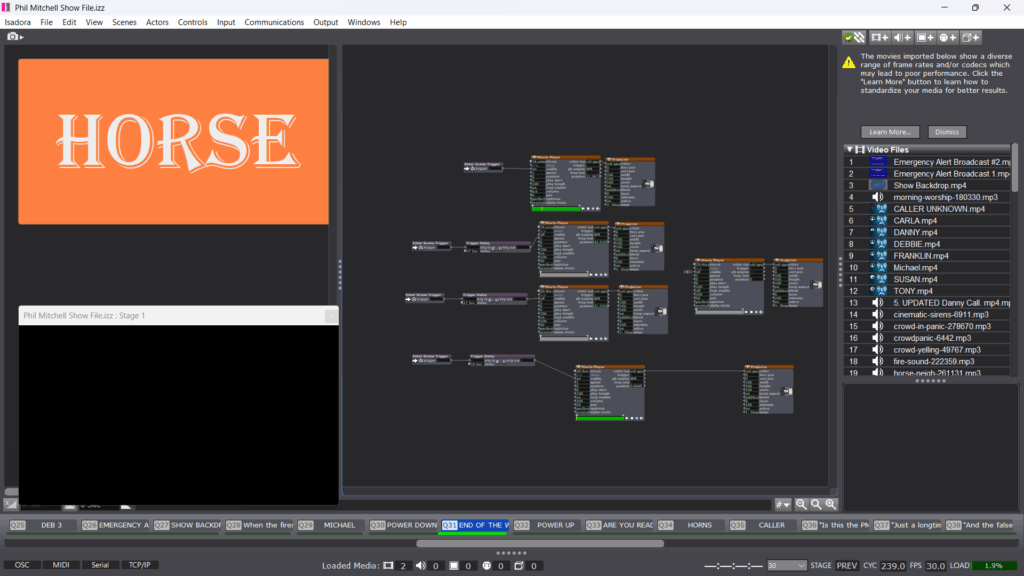

Posted: April 17, 2025 | Filed under: Uncategorized

For Cycle Two, I wanted to build off the kernel of my first cycle, Isadora in a theatrical context, and put my money where my mouth is by using it in a real production. My first project was exploratory; this one was functional. I wanted to program a show I could tour anywhere that had a screen. I had just revised The Phil Mitchell Radio Hour, a solo show booked for performances April 11th and 12th at UpFront Performance Space in Columbus, and at the Atlanta Fringe Festival May 27th–June 8th. Both venues had access to a projector, so I decided to build a visual component to the show using Isadora.

This meant I needed to create a projection design and keep it user friendly, since I wouldn’t be running the projections myself. It was important to me that the visual media didn’t just exist as a cool bonus, but felt like a performer alongside me, heightening the story, rather than distracting from it. I’d say the project was a major success. I want to tweak a few small moments before Atlanta, but the tweaks are minor and the list is short.

My Score:

Resources:

- Isadora, Adobe Premiere Pro, Adobe Express

- Projector, HDMI cable, laptop

- UpFront Performance Space

- Projector screen

Steps:

- Create the “scenes” of the show in Isadora

- Remaster sound design and integrate into scenes using enter triggers

- Design projections

- Add projections to scenes and build new scenes as needed

- Run the full show and make sure it all holds together

For context: Here is the official show synopsis followed by an image from the original production:

“The Phil Mitchell Radio Hour follows a self-assured televangelist delivering a live sermon on divine pruning—only to realize mid-broadcast that the rapture is happening, and he didn’t make the cut. As bizarre callers flood the airwaves and chaos erupts, Phil scrambles to save face, his faith, and maybe even his soul—all while on live on air.”

I’m a big image guy, so I sort of skipped step one and jumped straight to step three. I began by designing what would become the “background” projection during moments of neutrality, places in the show where I wanted the projection to exist but not call too much attention to itself. I made a version of this in Adobe Express and pretty quickly realized that the stillness of the image made the show feel static. I exported a few versions and used Premiere to create an MP4 that would keep the energy alive while maintaining that “neutral” feeling. The result is a slow-moving gradient that shifts gently as the show goes on.

My light design for the original production was based around a red recording light and a blue tone meant to feel calming; those became my two dominant projection colors. Everything else built off that color story. I also created a recurring visual gag for the phrase “The Words of Triumph,” which was a highlight for audiences.

Once the media was built, I moved into Isadora. This was where the real work of shaping began. While importing the MP4s, I also began remastering the sound design. Some of it had to be rebuilt entirely, and some was carried over from previous drafts as placeholders. The show had 58 cues. I won’t list them all here, but one challenge I faced was how to get the little red dot to appear throughout the show. I had initially imagined it as an MP4 that I could chroma key over a blank scene then recall with activate/deactivate scene actors. Alex helped me realize that masking was the best solution, and once I got the hang of that, it opened up a new level of design.

All in all, I spent about two weeks on the project. The visual media and updated sound design took about 8–10 hours. Working in Isadora took another 3. By then, I felt really confident navigating the software. It was more about refining what I already understood. I initialized nearly every scene with specific values so it would be consistent regardless of who was operating. I also built some buttons for the stage manager in key moments. For example, when Phil yells, “Did I just see a horse?! Do horses go to Heaven?!” the stage manager presses a button that triggers a horse neighing sound.

When it came time to present a sample, I struggled with which moment to show. I chose a segment just before the first emergency alert of the Rapture, a phone call exchange. I thought it was the strongest standalone choice. It features three different projections (caller, emergency alert, and blank screen), has layered sound design, and doesn’t require much setup to understand.

Most of the feedback I received was about the performance itself, which surprised me. I was trying to showcase the Isadora work, but because I didn’t have a stage manager, I had to operate the cues myself, which created some awkward blocking. One suggestion I appreciated was to use a presenter’s remote in the future, so I could trigger cues without disrupting the flow of the scene. There were also some great questions about Phil’s awareness of the projections: should he see them? Should he acknowledge them? That question has stuck with me and influenced how I’ve shaped the rest of the show. Now, rather than pointing to the projections, Phil reacts as though they are happening in real time, letting the audience do the noticing.

Overall, I’m extremely proud of this work. Audience members who had seen earlier drafts of the show commented on how much the projections and sound enhanced the piece. This clip represents the performance version of what was shown in class. Enjoy 🙂

Cycle 2: Up in Flames

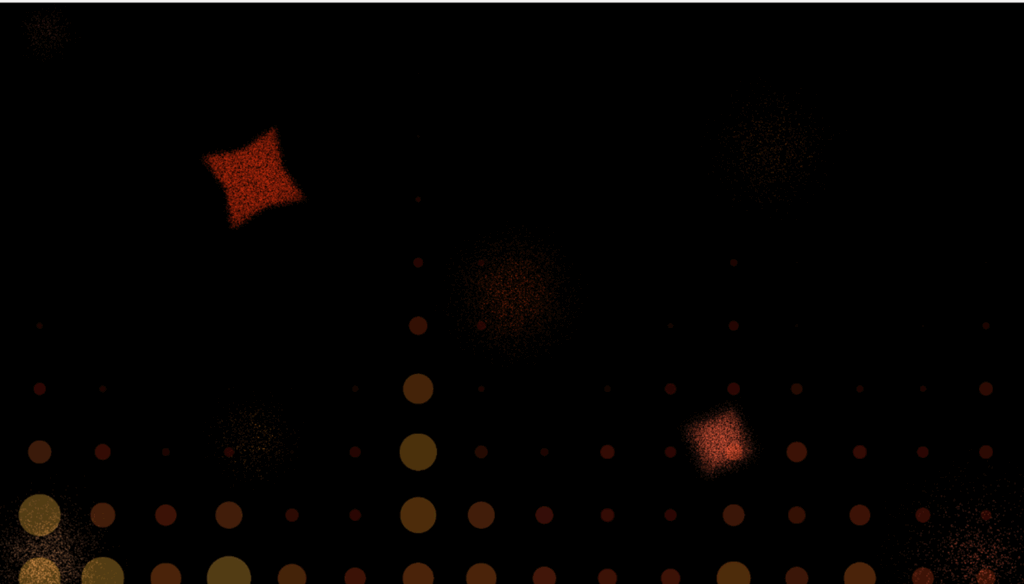

Posted: April 15, 2025 | Filed under: Uncategorized

For this project, I wanted to keep stepping up the interactivity and visual polish. I knew I wouldn’t be able to get all the way with either, but my aim was to continue to step up those two aspects. In these cycles, my overall aim isn’t to create one, final project, but to use them as opportunities to continue to play and create as much as I can before the semester ends, and I have to return all the toys to the Lab. I didn’t do a great job of keeping track of how I was spending time on this project. I spent less time than I have in the past looking for assets, partially because I’m learning how to use my resources better and more quickly. I found the music I used more quickly than I expected, which also cut down on this aspect significantly. I spent most of my time increasing my understanding of actors I’d used before and trying to use them in new ways. Once I’d locked in the main visual, I figured out what to add to increase the interest and then invested some time in designing the iterations of the “embers.”

Exploding Embers

I explored the Luminance Key, Calc Brightness, Inside Range, and Sequential Triggers actors, mostly, seeing what I could figure out with them. I used them in the last cycle, but I wanted to see if I could use them in a different way that would require less precision from the depth sensor, which was also at play in this project. In the last cycle, I struggled with the depth sensor not being robust enough to tune into the very exact way I wanted to use it; this time, I figured I would use it more to capture motion than precision. I still have to use TouchDesigner to get the sensor to work with Isadora. Because it’s free, I can see myself continuing to explore TouchDesigner after this class to see if I can at least replicate the kinds of things I’ve been exploring in these cycles. For the purposes of class, though, I’m trying to fit as much Isadora in as I can before I have to give the fob back!

Actor Setup

I think the main challenge continues to be that I want the sensor to be more robust than it is. I think, in many ways, I’m running into hardware issues all around. My computer struggles to keep up with the things I’m creating. But I am working with my resources to create meaningful experiences as best I can.

With this project, I wanted to add enough to it that it wasn’t immediately discernable exactly how everything was working. In that, I feel I succeeded. While it was eventually all discovered, it did take a few rounds of the song that was playing, and, even then, there were some uncertainties. Sound continues to be a bit of an afterthought for me and is something I want to more consciously tie into my last cycle, if I can leave myself enough time to do so. The thing I didn’t include that I had an impulse toward was to connect the sound to the sensor, once I actually added it. This was an option that was pointed out during the presentation, so it seems that that was definitely an opportunity for enhancement. I also think there was a desire to be able to control more of the experience in some way, to be able to affect it more. I think this would have been somewhat mitigated if it was, indeed, tied to the music in some way.

The presentation itself did immediately inspire movement and interaction, which was my main hope for it. While I did set up that getting close to it would be better, I also think that the class felt driven to do that somewhat on their own. I think choice of color, music, and movement helped a lot with that. The class was able to pick up on the fire aspect of it, as well as the fact that some part of it was reacting to their movement. They also were able to pick up on the fact that the dots were changing size beyond the pulsing they do when in stasis. I think the one aspect that wasn’t brought up or mentioned was that the intensity of the dots was also tied into the sensor. It was brighter when left alone to draw people to it and got less intense when things were closer to the sensor. A kind of inverse relationship. In some ways, the reverse would have encouraged interaction, but I was also interested in how people might be drawn to come interact with this if it weren’t in a presentation and landed on this solution.
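
The inverse relationship between proximity and brightness can be written as a simple linear mapping. The Python below is only an illustration of that idea: the function name, the distance range in metres, and the brightness limits are all assumed values, not the settings used in the actual patch.

```python
def ember_intensity(distance_m: float,
                    near_m: float = 0.5,
                    far_m: float = 4.0,
                    dim: float = 0.2,
                    bright: float = 1.0) -> float:
    """Map sensor distance to brightness: far away is bright, close is dim.

    Distances outside [near_m, far_m] are clamped so the output always
    stays between `dim` and `bright`. All defaults are hypothetical.
    """
    clamped = min(max(distance_m, near_m), far_m)
    t = (clamped - near_m) / (far_m - near_m)  # 0.0 when close, 1.0 when far
    return dim + t * (bright - dim)


if __name__ == "__main__":
    for d in (0.3, 0.5, 2.25, 4.0, 6.0):
        print(f"{d:4.2f} m -> intensity {ember_intensity(d):.2f}")
```

Swapping `dim` and `bright` would give the reverse behavior mentioned above, where the embers reward people for coming closer instead of luring them in from a distance.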

If I were to take this further, I definitely like the idea of tying the embers to different pieces of the sound/song. There was also an interest in increasing the scale of the presentation, which would also be something to explore. I think my resources limit my ability to do the sound aspect, at least, but it would be the step I would take if I were going to go further with this, somehow. I also liked the idea of different scenes, and it is definitely something I was thinking about and would have been interested in adding with more time. I can absolutely see this as a thirty-minute, rotating scene interactive thing. I think the final note was that the boxes around the exploding embers could be smoothed out. It’s an issue I’ve run into with other projects and something I would definitely like to address in future projects.

Video of Stage

Song Used for Presentation

Pressure Project 3: Going to the Movies

Posted: April 13, 2025 Filed under: Uncategorized

For pressure project 3, I set out to tell a clear story using only sound. I’d done a similar project once before a handful of years ago and was in the middle of teaching sound design to my Introduction to Production Design class, so I felt fortunate to have a little experience and to be in the correct mindset. I wanted to make sure there was a clear narrative flow and that the narrative was fleshed out completely with details. I opted for a realistic soundscape, a journey, because it felt, to me, the most logical way to organize the sounds in the way I wanted to.

In terms of what it would be, I started broadly thinking about what cultural thing I might create a story for. As I mentioned in class, I did originally consider doing a kind of ancestral origin story. I first considered doing a story about/for my great grandparents who came to the United States from Croatia. The initial thought was to follow them from a small town there, across the ocean, to Ellis Island, where they landed, and then perhaps onto the train and to Milwaukee (even though that skips a portion of their story). Ultimately, part of why I abandoned this idea was because it felt too sweeping for two minutes, and I started to realize that a lot of what I would want for that story would have to be recorded, rather than found.

In the end, I decided to think much smaller, about a cultural experience specific to me. Because I still had this idea of a “journey” in my head, I came to the idea of walking to the movies. Being able to walk to an independent movie house, preferably an old one, is something I look for when we’re moving somewhere new, so it felt like the right way to go. I decided to age up the movie theatre in my head a bit to play up the soundscape; quiet chairs and doors didn’t seem as interesting as someplace that needs a healthy round of WD-40. The theatres for the last three places I’ve lived are pictured below.

Neighborhood Theatres

With a story in mind, I set out to figure out how to actually make the sound story. With a decent amount of digging around, I finally remembered that the program I’d used in the past was Audacity, so I at least knew it would be free and relatively accessible. From there, I searched out sounds on the sound effects websites I’d given my students to use (YouTube Sound Library, Freesound, Free Music Archive, etc.). I used Audacity to get the sound file from the movie I wanted to use at the end of the piece, which is from The Women (1939). The main challenge I faced was that I’ve personally only worked with sound once before, maybe four years ago, when I took a class very much like the one I’m teaching now. The other is that it’s always harder than you want it to be to find the right sound you’re looking for. I was mindful of the relatively limited time I had to work on the project and tried to work as quickly as I could to make a list of sounds I felt I needed and track them down. I also challenged myself to make the sound locational in the piece, to work with levels and placement so that it felt as if it was actually happening to the listener. In some ways, this was the easiest part of the project to do. For me, it was easy to pick out when a sound wasn’t where I wanted it to be or something was too loud or quiet to my mind’s ear.

I didn’t keep careful track of how my time worked out or was split up. I did the project, more or less, straight through, moving between tasks as it made sense. I designed it mostly from start to finish, rather than jumping around, finding a sound, placing it, and editing it. I shifted lengths and things like that as I went, and I spent maybe half an hour at the end fine tuning things and making sure the timing made sense.

A Smattering of the Sounds

During the presentation, the class understood that a journey was happening and, eventually, that we were at the movies. They experienced this realization at slightly different points, though it universally seemed to happen once we had gone through the theatre doors and into the theatre itself. They reported that the levels and sound placement worked, that they knew they were inside of an enclosed space. I think the variety of information was also pleasing, in terms of the inclusion of social interactions, environmental information, the transitions between places, etc. I think the inclusion of a visual would have made the sound story come into focus much more quickly. If I had included a picture of a movie theatre, or, better, the painting I had in my head (below), I think the whole journey would have been extremely clear. As it was, once I explained it, I think all the choices made sense and told the story I was trying to tell.

Overall, I think the project was successful, though perhaps in need of visual accompaniment, particularly if this kind of movie going isn’t your usual thing. I also think one of the effects, the popcorn machine in the lobby, needed adjusting; I was worried about it being too loud and overcorrected.

New York Movie (Edward Hopper, 1939)

Cycle 2: It Takes 2 Magic Mirror

Posted: April 12, 2025 Filed under: Uncategorized | Tags: Cycle 2, Interactive Media, Isadora

My Cycle 2 project was a continuation of my first cycle, which I will finish in Cycle 3. In Cycle 1, I built the base mechanisms for the project to function. My focus in this cycle was to start turning the project into a fuller experience by adding more details and presenting with larger projected images instead of my computer screen.

Overall, there was a great deal of joy. My peers mentioned feeling nostalgic in one of the scenes (pink pastel), like they were in an old Apple iTunes commercial. Noah pointed out that the recurrence of the water scene across multiple experiences (Pressure Project 2 and Cycle 1) has an impact, creating a sense of evolution. Essentially, the water background stays the same but the experience changes each time, starting with just a little guy journeying through space to interacting with your own enlarged shadows.

Alex asked “how do we recreate that with someone we only get one time to create one experience with?” How do we create a sense of evolution and familiarity when people only experience our work once? I think there is certainly something to coming into a new experience that involves something familiar. I think it helps people feel more comfortable and open to the experience, allowing them the freedom to start exploring and discovering. That familiarity could come from a shared experience or shared place, or even an emotion, possibly prompted by color or soundscape. Being as interested in creating experiences as I am, I have greatly enjoyed chewing on this question and its ramifications.

I got a lot of really great feedback on my project, and tons of great suggestions about how to better the experience. Alex mentioned really enjoying being told there was one detail left to discover and then finding it, and suggested building that into the project, such as through little riddles to prompt certain movements. There was also a suggestion to move the sensor farther back to encourage people to go deeper into the space, especially to encourage play with the projected shadows up close to the screen. The other major suggestion I got was to use a different sound for each action while keeping the same overall quality of sound (vibe). Alex said there is a degree of satisfaction in hearing a different sound because it holds the attention longer and better indicates that a new discovery has been made.

I plan to implement all of this feedback into my Cycle 3. Since I do not think I will be creating the inactive state I initially planned, I want a way to help encourage users to get the most out of their experience and discoveries. Riddles are my main idea but I am playing with the idea of a countdown; I am just unsure of how well that would read. Michael said it was possible to put the depth sensor on a tripod and move the computer away so it is just the sensor, which I will do as this will allow people to fully utilize the space and get up close and personal with the sensor itself. Lastly, I will play with different sounds I find or create, and add fade ins and outs to smooth the transition from no sound to sound and back.

As I mentioned earlier, the base for this project was already built. Thus, the challenges were in the details. The biggest hurdle was gate logic. I have struggled with understanding gates, so I sat down with the example from what we walked through in my Pressure Project 2 presentation, and wrote out how it worked. I copied the series of actors into a new Isadora file so I could play around with it on its own. I just followed the flow and wrote out each step, which helped me wrap my brain around it. Then I went through the steps and made sure I understood their purpose and why certain values were what they were. I figured out what I was confused about from previous attempts at gates and made notes so I wouldn’t forget and get confused again.
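For readers who also find gates hard to picture, here is a minimal Python analogy of the general idea: a gate either passes a value through or blocks it, depending on whether it is currently open. This is a sketch of the concept only and is not Isadora's Gate actor or its API; the class names `Gate` and `OneShotGate` and the `"play_sound"` value are made up for the example.

```python
from typing import Any, Optional


class Gate:
    """Pass values through only while the gate is open (conceptual sketch)."""

    def __init__(self, is_open: bool = False) -> None:
        self.is_open = is_open

    def set_open(self, is_open: bool) -> None:
        # Open or close the gate, e.g. when a sensor value crosses a threshold.
        self.is_open = is_open

    def send(self, value: Any) -> Optional[Any]:
        # A closed gate swallows the value; an open gate forwards it unchanged.
        return value if self.is_open else None


class OneShotGate(Gate):
    """Start open, let one value through, then stay closed until reset."""

    def __init__(self) -> None:
        super().__init__(is_open=True)

    def send(self, value: Any) -> Optional[Any]:
        if not self.is_open:
            return None
        self.is_open = False  # Close behind the first value to avoid retriggering.
        return value

    def reset(self) -> None:
        self.is_open = True


if __name__ == "__main__":
    gate = OneShotGate()
    print(gate.send("play_sound"))  # passes through once
    print(gate.send("play_sound"))  # blocked until reset() is called
```

The one-shot variant is one common reason to reach for a gate in an interactive patch: it keeps a sound or cue from retriggering on every frame while someone stays in the trigger zone.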

After the presentation day, Alex sent out a link (below) with more information about how gates work with computers, and a video with a physical example in an electric plug, which was neat to watch. I think these resources will be valuable as I continue to work with gates in my project.

Because I spent so much time playing with the gate and the staging, I did not get as far as I wanted with the other aspects. I still need to fix the transparency issue with the shadow and the background, and I realized that my videos and images for them are not all the right size. Aside from fixing the transparency issue, I will probably make my own backgrounds in Photoshop so I can fully ensure contrast between the shadow and background. The main mission for Cycle 3 will be adding in discoverable elements and a way to guide users towards them without giving away how.

The galaxy background is very clearly visible through the shadow, a problem I was not able to fix by simply changing the blend mode. I will likely have to do some research about why this happens and how to fix it.

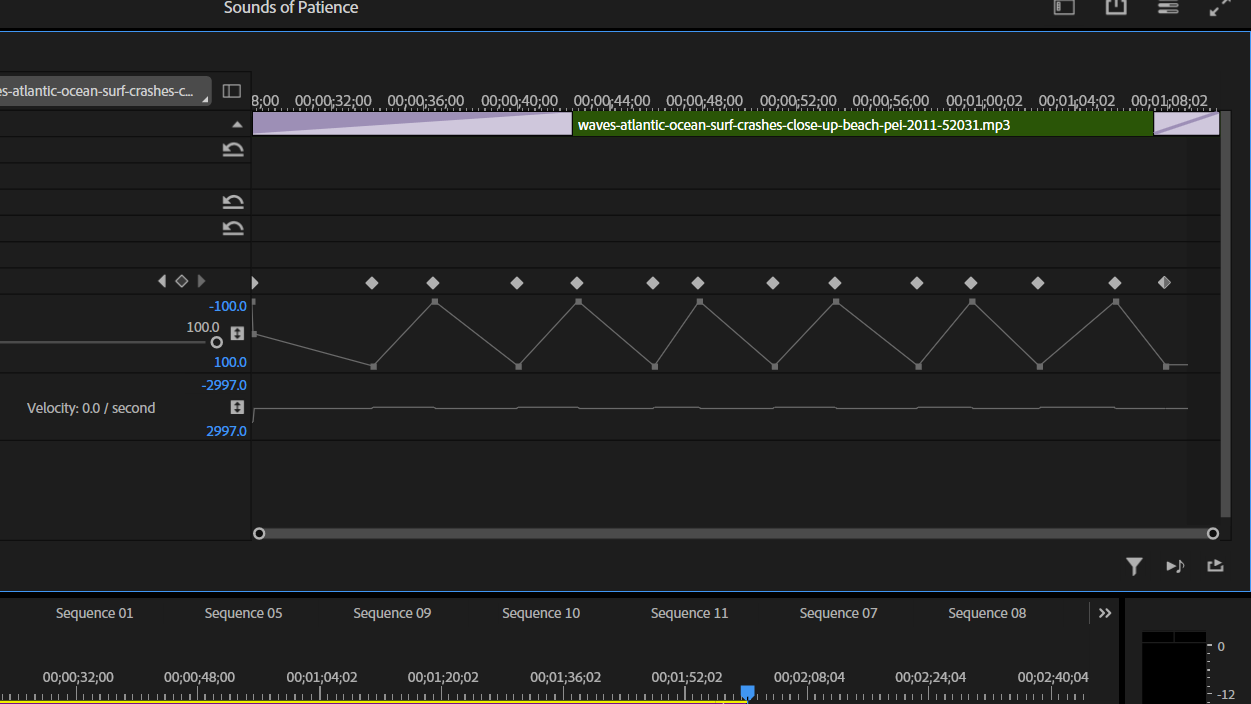

Pressure Project 3: Surfing Soundwaves

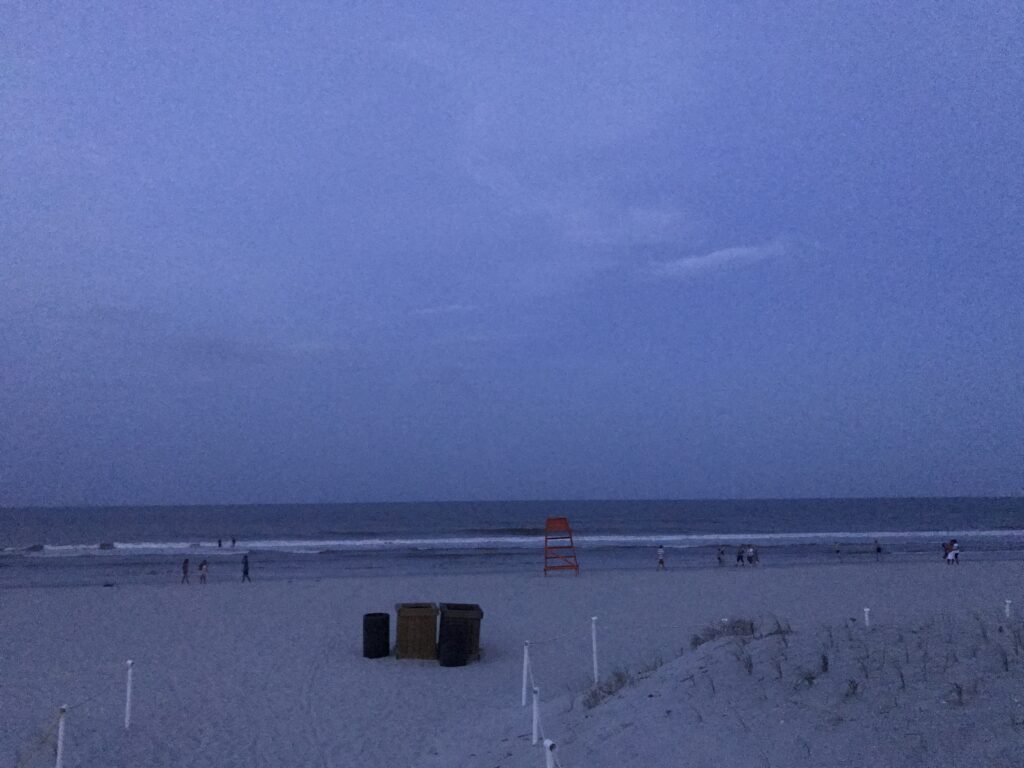

Posted: April 11, 2025 Filed under: Uncategorized

When starting this project, it was a bit daunting and overwhelming to decide which part of my cultural heritage to explore. Theatre initially came to mind, but that felt too easy; I wanted to choose something that would allow me to share a new side of myself with my peers. With graduation on the horizon, I’ve been thinking a lot about home. I grew up near the water, and there’s this unbreakable need in me to be close to it. That’s where I began: sourcing sounds of the ocean on Pixabay. I went so specific as to only use sounds from the Atlantic Ocean when possible, even finding one clip from Florida. But I realized that the ocean itself didn’t fully capture my heritage; it was a setting, not a story. I had to go deeper.

I began thinking about my dad, who was a professional surfer. I’m sure he had aspirations for me to follow a similar path. I felt a lot of pressure to surf as a kid, and it was honestly terrifying. But over time, and on my own terms, I grew to love it. Now it’s how my dad and I connect. We sit out on the water together, talk, and when the waves come, we each ride one in, knowing we’ll meet back up. The more I thought about that ritual, the more homesick I felt. Even though the assignment was meant to be sound-based, I found myself needing a visual anchor. I dug through my camera roll and found a photo that captured the calmness and rhythm of beach life, something soft and personal that helped ground my process.

Once I had that anchor, I started refining the design itself. Since this was primarily a sonic piece, I spent about thirty minutes watching first-person surfing videos. It was a surprisingly helpful strategy for identifying key sounds I usually overlook. From that research, I pinpointed four essential moments: walking on sand, stepping into water, submerging, and the subtle fade of the beach as you move away from it. While wave sounds and paddling were expected, those smaller, transitional sounds became crucial to conveying movement and environment.

I then spent another chunk of time combing through Pixabay and ended up selecting sixteen different sounds to track the journey from the car to the ocean. But as I started laying them out, it became clear that I wasn’t telling a story yet; I was just building an immersive environment.

Avoiding that realization for a while, I focused instead on the technical side. I mapped out a soundscape where the waves panned from right to left, putting the listener in the middle of the ocean. I also created a longform panning effect for the beach crowd, which built up as you walk from the parking lot but slowly faded once you were in the water. I was proud of how the spatial relationships evolved, but I knew something was still missing.
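The post doesn't say which tool or pan law was used, so the following is only a sketch of one common way to compute a right-to-left sweep like the one described for the waves: a constant-power pan, where the left and right gains trade off along a quarter circle so the overall loudness stays roughly even. The function names `pan_gains` and `sweep_right_to_left` and the pan range of -1.0 (hard left) to 1.0 (hard right) are assumptions for the example.

```python
import math
from typing import List, Tuple


def pan_gains(pan: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position.

    pan: -1.0 is hard left, 0.0 is center, 1.0 is hard right.
    Uses a constant-power law, so left**2 + right**2 stays at 1.
    """
    pan = max(-1.0, min(1.0, pan))
    angle = (pan + 1.0) * math.pi / 4.0  # 0 (left) to pi/2 (right)
    return math.cos(angle), math.sin(angle)


def sweep_right_to_left(steps: int) -> List[Tuple[float, float]]:
    """Gain pairs for a sound that travels from hard right to hard left."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [pan_gains(1.0 - 2.0 * i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    for left, right in sweep_right_to_left(5):
        print(f"left={left:.3f} right={right:.3f}")
```

Multiplying each gain pair by a slowly decreasing level would approximate the long fade described for the beach crowd as the listener moves into the water.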

Late in the process, I returned to the idea of my dad. I created an opening sequence with a sound of submerging followed by a child gasping for air. I used a kid’s breath to imply inexperience, maybe fear. To mark a shift in time, I recorded a voicemail similar to the kinds of messages my dad and I send each other, with the general sentiment “I’ll see you in the water.” It was meant to signal growth, like the child in the beginning was now the adult. I had hoped to use my actual dad’s voicemail, but when I called him, I found his box wasn’t set up.

I decided to end the piece with the paddling slowing down and the waves settling as I/the character reached dad. I repeated “dad” a couple of times—not in panic, just to get his attention, to make it clear he was present and alive. I used very little dialogue overall, letting the sound do most of the storytelling, but I tried to be economical with the few words I did include.

During the presentation, I felt confident in the immersive quality of the work, but I wasn’t prepared for how the tone might be read. The feedback I received was incredibly insightful. While the environment came through clearly, the emotional tone felt ambiguous. Several people thought the piece might be about someone who had passed away, either the child or the father. I had added a soft, ethereal track underneath the ocean to evoke memory, but that layer created a blurred, melancholic vibe. One person brought up the odd urgency in the paddling sounds, which I completely agreed with. I had tried to make my own paddling sounds in my kitchen sink, but they didn’t sound believable, so I settled on a track from Pixabay.

Looking back, I know I missed the mark slightly with this piece. It’s hard to convey the feeling of home when you’re far from it, and I got caught up in the sonic texture at the expense of a clearer narrative. That said, I still stand by the story. It’s true to me. When I shared it with my wife, she immediately recognized the voicemail moment and said, “I’ve heard those exact messages before, so that makes sense.”

If I had more time, I would revisit the paddling and find a better way to include my dad’s voice, either through an actual message or a recorded conversation. That addition alone might have clarified the tone and ensured that people knew this was a story about connection, not loss.

Pressure Project 3: Soundscape of Band

Posted: April 9, 2025 Filed under: Uncategorized | Tags: Pressure Project 3

For this pressure project, we were tasked with creating a two-minute soundscape that represents part of our culture or heritage. I chose to center my project around my experience in band. My original plan had been to take recordings during rehearsals to create the soundscape. None of my recordings saved properly, so I had to get creative with where I sourced my sound.

Upon realizing I did not have any recordings from rehearsal, I had a moment of panic. Fortunately, some of our runs from rehearsal are recorded and uploaded to Carmen Canvas so we can practice the music for the upcoming Spring Game show. I also managed to scrounge up some old rehearsal recordings from high school and a recorded playing test for University Band. I had also done a similar project for my Soundscapes of Ohio class last year, and still had many of my sound samples from that (the first and likely last time my inability to delete old files comes in handy).

Then I had the idea to use videos from the Band Dance, meaning I had to scour my camera roll and Instagram so I could screen record posts and convert them to mp3 files. This process worked quite well, but it was no small task sorting through Instagram without getting distracted. It was also a challenge to not panic about having no recordings, but once I found this new solution, I had a lot of fun putting it together.

I spent about the first hour finding and converting video files into audio files and putting them into GarageBand. I made sure everything was labeled in a way that I would be able to immediately understand. I then made a list of all the audio files I had at my disposal.

The next 45 minutes were used to plan out the project, since I knew going straight to GarageBand would get frustrating quickly. I listened to each recording and wrote out what I heard, which was helpful when it came to putting pieces together. I also started to clip longer files into just the parts I needed. I made an outline so I knew which recordings I wanted to use. It was a struggle to limit it to just what would fit into two minutes, but it was helpful going in with a plan, even if I had to cut it down.

During the next 45-minute block, I started creating the soundscape. I already had most of the clips I needed, so this time was spent cutting down to the exact moment I needed everything to start and stop, and figuring out how to layer sounds to create the desired effect. About halfway through this process, I listened to what I had and was close to the two-minute mark. I did not have everything I wanted, so I cut down the first clip and cut another. I did a quick revision on my outline now that I had a better understanding of my timing.

The last half hour was dedicated to automation! I added fade ins and outs in the places I felt were most necessary for transition moments. I wanted to prioritize the most necessary edits first to ensure I had a good, effective, and cohesive soundscape. I had plenty of time left, so I went through to do some fine tuning, particularly to emphasize parts of the sound clips that were quieter but needed to have more impact.

I wanted my soundscape to reflect my experience. As such, I started with a recording from high school of my trio rehearsing before our small ensemble competition. This moment was about creating beautiful music. It fades into the sound from my recorded playing test for University Band last year, where I was also focused on producing a good sound.

There is an abrupt transition from this into Buckeye Swag, which I chose to represent my first moment in the Athletic Band, a vastly different experience from any previous band experience I had. The rest of the piece features sounds from sports events, rehearsals, and the band dance, and it includes music we have made, inside jokes and bits, and our accomplishments.

I wanted to include audio from the announcers at the Stadium Series game because that was a big moment for me as a performer. I marched Script on Ice in front of more than 94,000 people in the ‘Shoe, performing for the same crowd as twenty one pilots (insane!). My intent with that portion was to make it feel overwhelming. I cut the announcement into clips with the quotes I wanted and layered them together to overlap slightly, with the crowd cheering in the background. I wanted it to feel convoluted and jumbled, like a fever dream almost.

In creating this project, I came to realize what being in band really means to me. It is about community, almost more so than making music. Alex pointed this out during the discussion based on how the piece progresses, and I was hoping it would read like that. At first, it was about learning to read and make music. When I got to high school, I realized that no matter where I went, if I was in band, I had my people. So over time, band became about having a community, and it became a big part of who I am, so I wanted to explore that through this project.

Aside from personal reflection, I wanted to share the band experience. My piece represents the duality of being in concert and pep band, both of which I love. There is a very clear change from concert music to hype music, and the change is loud and stark to imitate how I felt going to my first rehearsal and first sporting events with the band. By the end, we are playing a high energy song while yelling “STICK!” at a hockey game, completely in our element.