Cycle 3: It Takes 3

Posted: May 1, 2025 Filed under: Uncategorized | Tags: Cycle 3, Interactive Media, Interactive Shadow, Isadora, magic mirror

This project was the final iteration of my cycles project, and it has changed quite a bit over the course of three cycles. The base concept stayed the same but the details and functions changed as I received feedback from my peers and changed my priorities with the project. I even made it so three people could interact with it.

I wanted to focus a bit more on the sonic elements as I worked on this cycle. I started having a lot of ideas on how to incorporate more sonic elements, including adding soundscapes to each scene. Unfortunately I ran out of time to fully flesh out this particular idea and didn’t want to incorporate a half baked idea and end up with an unpleasant cacophony of sound. But I did add sonic elements to all of my mechanisms. I kept the chime when the scene became saturated, as well as the first time someone raised their arms to change a scene background. I did add a gate so this only happened the first time, to control the sound.

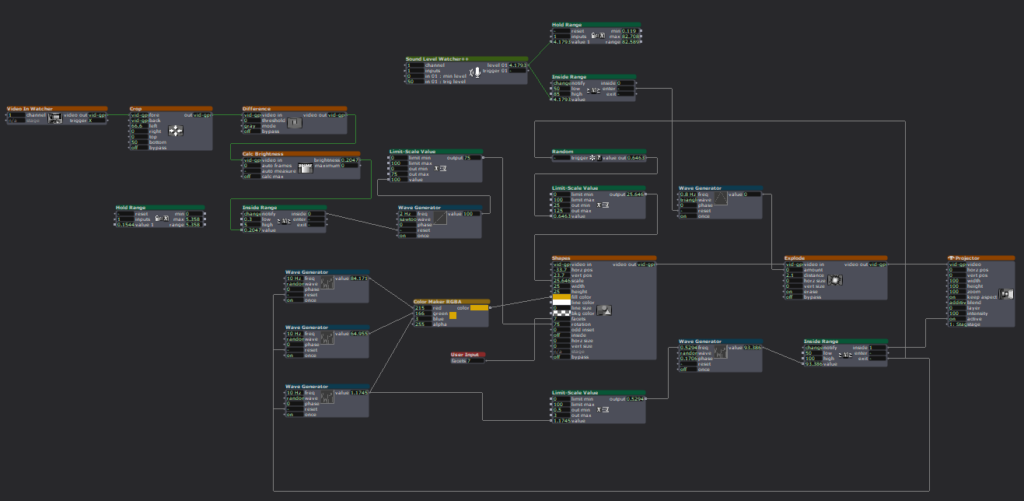

A new element I added was a Velocity actor that caused the image inside the silhouettes to explode, and when it did, it triggered a Sound Player with a POP! sound. This pop was important because it drew attention to the explosion, indicating that something had happened and that something the users did had caused it. The Velocity actor was also plugged into an Inside Range actor that was set to trigger a riddle at a velocity just below the range that triggers the explosion.

The other new mechanism I added was based on the proximity to the sensor of one of the users. The z-coordinate data for Body 2 was plugged into a Limit-Scale Value actor to translate the coordinate data into numbers I could plug into the volume input to make the sound louder as the user gets closer. I really needed to spend time in the space with people so I could fine-tune the numbers to the space, which I ended up doing during the presentation when it wasn’t cooperating. I also ran into the issue of needing that Sound Player to not always be on, otherwise that would have been overwhelming. I decided to have the other users have their hands raised to turn it on (it was actually only reading the left hand of Body 3 but for ease of use and riddle-writing, I just said both other people had to have them up).
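The proximity-to-volume mapping can be sketched outside Isadora in a few lines of Python. This is only an illustration of the clamp-then-scale idea behind a Limit-Scale Value step: the function names, the distances (1 m to 4 m), and the 0 to 100 volume range are assumptions for the example, not values from the patch.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then map it linearly to [out_min, out_max]."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


# Hypothetical calibration: 1 m from the sensor is full volume, 4 m is silent.
NEAR_M, FAR_M = 1.0, 4.0


def volume_for_depth(z_meters):
    """Return a 0-100 volume; the output range is reversed so closer means louder."""
    return limit_scale(z_meters, NEAR_M, FAR_M, 100.0, 0.0)
```

Tuning the patch to a room then amounts to changing the two distance constants until the loudest and quietest spots match where people actually stand.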

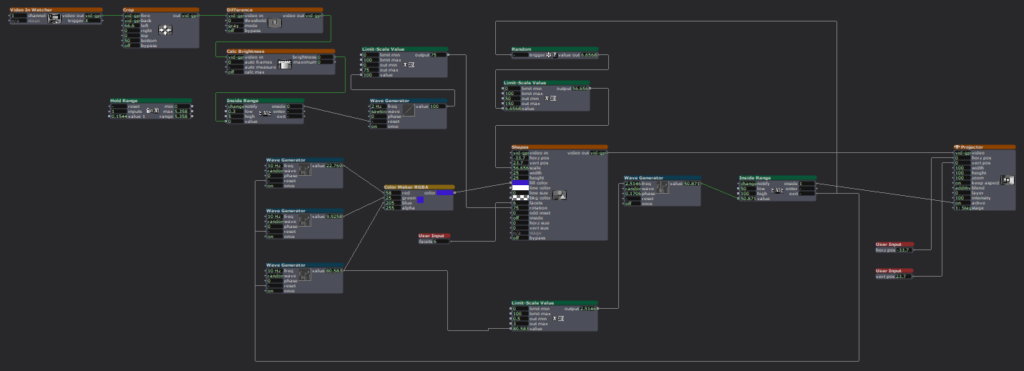

I have continued adjusting the patch for the background change mechanism (raising the right hand of Body 1 changes the silhouette background and raising the left hand changes the background). My main focus here was making the gates work so it only changes one time while the hand is raised (gate doesn’t reopen until hand goes down), so I moved the gate to be in front of the Random actor in this patch. As I reflect on this, I think I know why it didn’t work; I didn’t program it to turn the gate on based on hand position, it only holds the trigger until the first one is complete, which is pretty much immediately. I think I would need an Inside Range actor to tell the gate to turn on when the hand is below a certain position, or something to that effect.
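The behavior being aimed for here (fire once when the hand goes up, then stay closed until the hand comes back down) can be written as a small state machine. The Python below is a sketch of that logic only, not of the Isadora patch; the class name and the threshold value are hypothetical.

```python
class OncePerRaiseGate:
    """Passes one trigger per hand raise and re-arms when the hand drops."""

    def __init__(self, raise_threshold):
        self.raise_threshold = raise_threshold
        self.armed = True  # gate is open: the next raise may pass through

    def update(self, hand_y):
        """Return True exactly once each time hand_y rises to the threshold."""
        if hand_y >= self.raise_threshold:
            if self.armed:
                self.armed = False  # close the gate until the hand goes down
                return True
            return False
        # Hand is below the threshold: reopen the gate for the next raise.
        self.armed = True
        return False
```

The key point is that the gate's state is driven by hand position rather than by the trigger itself, which is the role an Inside Range check on the hand's height would play.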

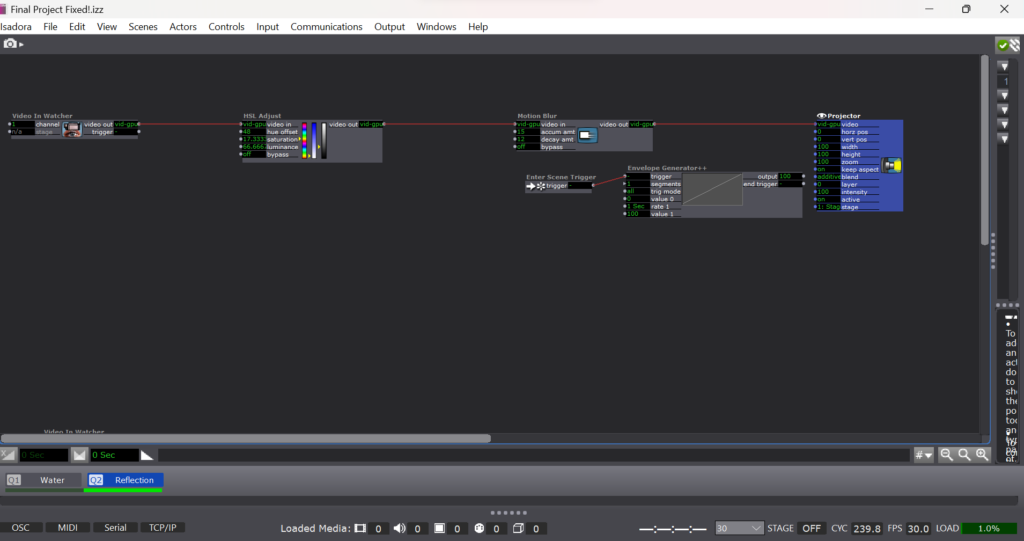

I sat down with Alex to work out some issues I had been having, such as my transparency issue. This was happening because the sensor was set to colorize the bodies, so Isadora was seeing red and green silhouettes. This was problematic because the Alpha Mask looks for white, so the color was not allowing a fully opaque mask. We fixed this with the addition of an HSL Adjust actor between the OpenNI Tracker and the Alpha Mask, with the saturation fully down and the luminance fully up.
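To see why a colored silhouette gives a partly transparent mask, here is a rough Python sketch using the standard `colorsys` module. It assumes the mask's opacity follows a pixel's lightness and that the luminance control acts as a gain; both are simplifications for illustration, and Isadora's actual math may differ.

```python
import colorsys


def mask_opacity(rgb):
    """Assumed mask rule: opacity equals the pixel's HLS lightness (white = 1.0)."""
    _, lightness, _ = colorsys.rgb_to_hls(*rgb)
    return lightness


def whiten(rgb, lum_gain=2.0):
    """Drop saturation to zero and scale lightness, clamped at 1.0."""
    hue, lightness, _ = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(hue, min(1.0, lightness * lum_gain), 0.0)
```

Under these assumptions a pure red or green body pixel is only half opaque, while the desaturated and brightened version is fully white and therefore fully opaque; black background pixels stay black.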

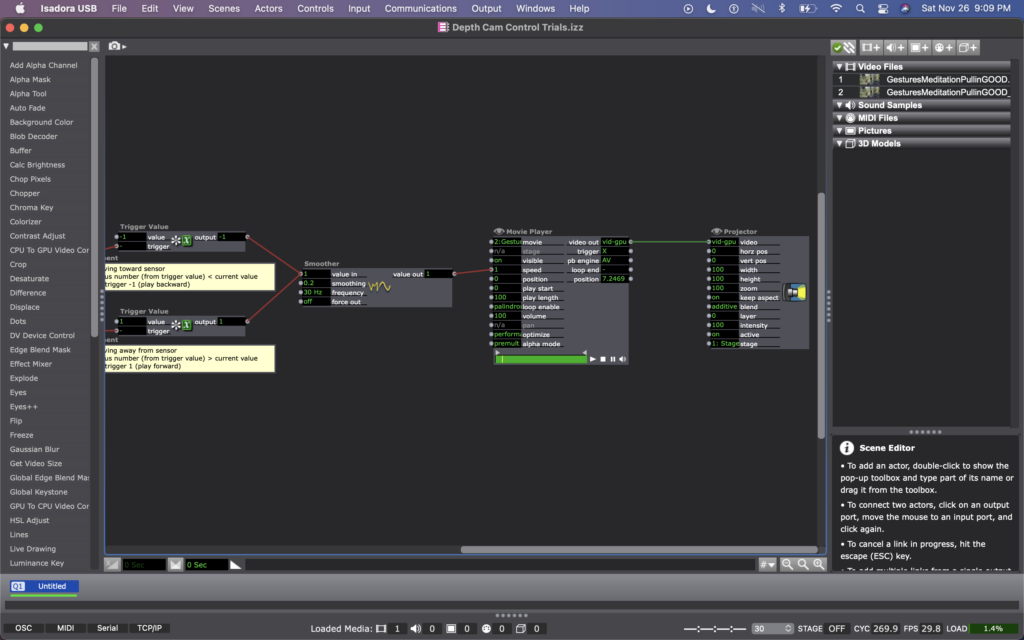

The other issue Alex helped me fix was the desaturation mechanism. We replaced the Envelope Generators with Trigger Value actors plugged into a Smoother actor. This made for smooth transitions between changes because it allowed Isadora to make changes from where it’s already at, rather than from a set value.
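The difference between ramping from a set value and easing from the current value can be shown with a simple exponential smoother. This Python sketch is an assumption about the general technique, not a description of how Isadora's Smoother actor is implemented; the class and parameter names are made up for the example.

```python
class Smoother:
    """Eases toward whatever target it is given, starting from its current value."""

    def __init__(self, start, smoothing=0.8):
        self.value = start
        self.smoothing = smoothing  # 0 = jump immediately, close to 1 = very slow

    def step(self, target):
        """Move a fraction of the way from the current value toward target."""
        self.value = self.smoothing * self.value + (1 - self.smoothing) * target
        return self.value
```

If the target flips halfway through a fade, the next step continues from wherever the value currently is, so there is no visible jump back to a fixed starting point.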

The last big change I made to my patch was the backgrounds. Because I was struggling to find decent quality images of the right size for the shadow silhouettes, I took the information of one image that looked nice and created six simple backgrounds in Procreate. I wanted them to have bold colors and sharp lines so they would stand out against the moving backgrounds and have enough contrast both saturated and not. I also decided to use recognizable location-based backdrops since the water and space backdrops seemed to elicit the most emotional responses. In addition to the water and space scenes, I added a forest, mountains, a city, and clouds rolling across the sky.

These images worked really well against the realistic backgrounds. It was also fun to watch the group react, especially to the pink scene. They got really excited if they got a sparkle full and clear on their shadow. There was also a moment where they thought the white dots in the rainbow and purple scenes were a puzzle, which could be a cool idea to explore. I did have an idea to create a little bubble-popping game in a scene with a zoomed-in bubble as the main background.

The reactions I got were overwhelmingly positive and joyful. There was a lot of laughter and teamwork during the presentation, and they spent a lot of time playing with it. If we had more time, they likely would have kept playing and figuring it out, and probably would have loved a fourth iteration (I would have loved making one for them). Michael specifically wanted to learn it enough to manipulate it, especially to match up certain backgrounds (I would have had them go in a set order because accomplishing this at random would be difficult, though not impossible). Words like “puzzle” and “escape room” were thrown around during the post-experience discussion, which is what I was going for with the addition of the riddles I added to help guide users.

The most interesting feedback I got was from Alex who said he had started to experience himself ‘in third person’. What he means by this is that he referred to the shadow as himself while still recognizing it as a separate entity. If someone crossed in front of the other, the sensor stopped being able to see the back person and ‘erased’ them from the screen until it re-found them. This prompted that person to often go “oh look I’ve been erased”, which is what Alex was referring to with his comment.

I’ve decided to include my Cycle 3 score here as well, because it has a lot of things I didn’t get to explain here, and was functionally my brain for this project. I think I might go back to this later and give some of the ideas in there a whirl. I think I’ve learned enough Isadora that I can figure out a lot of it, particularly those pesky gates. It took a long time, but I think I’m starting to understand gate logic.

The presentation was recorded in the MOLA so I will add that when I have it :). In the meantime, here’s the test video for the velocity-explode mechanism, where I subbed in a Mouse Watcher to make my life easier.

Cycle 2: It Takes 2 Magic Mirror

Posted: April 12, 2025 Filed under: Uncategorized | Tags: Cycle 2, Interactive Media, Isadora

My Cycle 2 project was a continuation of my first cycle, which I will finish in Cycle 3. In Cycle 1, I built the base mechanisms for the project to function. My focus in this cycle was to start turning the project into a fuller experience by adding more details and presenting with larger projected images instead of my computer screen.

Overall, there was a great deal of joy. My peers mentioned feeling nostalgic in one of the scenes (pink pastel), like they were in an old Apple iTunes commercial. Noah pointed out that the recurrence of the water scene across multiple experiences (Pressure Project 2 and Cycle 1) has an impact, creating a sense of evolution. Essentially, the water background stays the same but the experience changes each time, starting with just a little guy journeying through space to interacting with your own enlarged shadows.

Alex asked “how do we recreate that with someone we only get one time to create one experience with?” How do we create a sense of evolution and familiarity when people only experience our work once? I think there is certainly something to coming into a new experience that involves something familiar. I think it helps people feel more comfortable and open to the experience, allowing them the freedom to start exploring and discovering. That familiarity could come from a shared experience or shared place, or even an emotion, possibly prompted by color or soundscape. Being as interested in creating experiences as I am, I have greatly enjoyed chewing on this question and its ramifications.

I got a lot of really great feedback on my project, and tons of great suggestions about how to better the experience. Alex mentioned he really enjoyed being told there was one detail left to discover and then finding it, so they suggested adding that into the project, such as through little riddles to prompt certain movements. There was also a suggestion to move the sensor farther back to encourage people to go deeper into the space, especially to encourage play with the projected shadows up close to the screen. The other major suggestion I got was to use different sounds of the same quality of sound (vibe) for each action. Alex said there is a degree of satisfaction in hearing a different sound because it holds the attention longer and better indicates that a new discovery has been made.

I plan to implement all of this feedback into my Cycle 3. Since I do not think I will be creating the inactive state I initially planned, I want a way to help encourage users to get the most out of their experience and discoveries. Riddles are my main idea but I am playing with the idea of a countdown; I am just unsure of how well that would read. Michael said it was possible to put the depth sensor on a tripod and move the computer away so it is just the sensor, which I will do as this will allow people to fully utilize the space and get up close and personal with the sensor itself. Lastly, I will play with different sounds I find or create, and add fade ins and outs to smooth the transition from no sound to sound and back.

As I mentioned earlier, the base for this project was already built. Thus, the challenges were in the details. The biggest hurdle was gate logic. I have struggled with understanding gates, so I sat down with the example from what we walked through in my Pressure Project 2 presentation, and wrote out how it worked. I copied the series of actors into a new Isadora file so I could play around with it on its own. I just followed the flow and wrote out each step, which helped me wrap my brain around it. Then I went through the steps and made sure I understood their purpose and why certain values were what they were. I figured out what I was confused about from previous attempts at gates and made notes so I wouldn’t forget and get confused again.

After the presentation day, Alex sent out a link (below) with more information about how gates work with computers, and a video with a physical example in an electric plug, which was neat to watch. I think these resources will be valuable as I continue to work with gates in my project.

Because I spent so much time playing with the gate and the staging, I did not get as far as I wanted with the other aspects. I still need to fix the transparency issue with the shadow and the background, and I realized that my videos and images for them are not all the right size. Aside from fixing the transparency issue, I will probably make my own backgrounds in Photoshop so I can fully ensure contrast between the shadow and background. The main mission for Cycle 3 will be adding in discoverable elements and a way to guide users towards them without giving away how.

The galaxy background is very clearly visible through the shadow, a problem I was not able to fix by simply changing the blend mode. I will likely have to do some research about why this happens and how to fix it.

Pressure Project 1 – Interactive Exploration

Posted: February 4, 2025 Filed under: Uncategorized | Tags: Interactive Media, Isadora, Pressure Project

For this project, I wanted to prioritize joy through exploration. I wanted to create an experience that allowed people to try different movements and actions to see if they could “unlock” my project, so to say. To do this, I built motion and sound sensors into my project that would trigger the shapes to do certain actions.

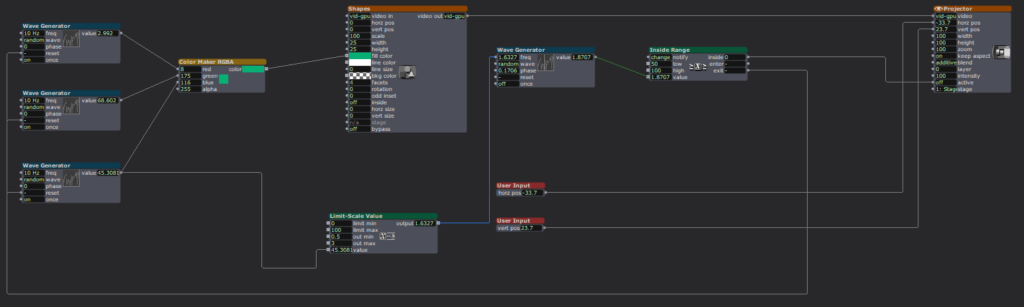

Starting this project was difficult because I didn’t know what direction I wanted to take it, but I knew I wanted it to have some level of interactivity. I started off small by adding a User Input actor to adjust the number of facets on each shape, then a Random actor (with a Limit-Scale Value actor) to simply change the size of the shapes each time they appeared on screen. Now it was on.

I started building my motion sensor, which involved a pretty heavy learning curve because I could not open the file from class that would have told me which actors to put where. I did a lot of trial-and-error and some research to jog my memory and eventually got the pieces I needed, and we were off to the races!

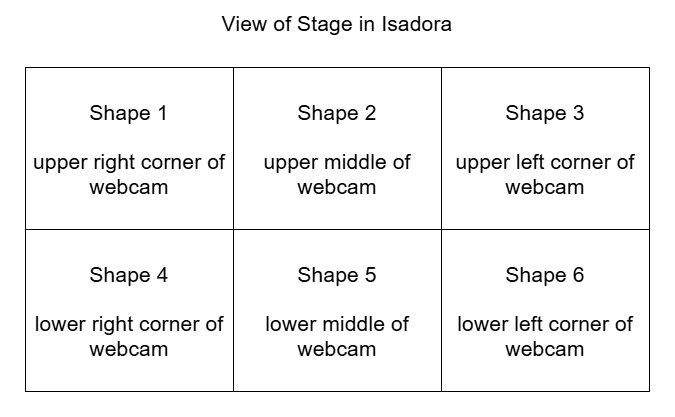

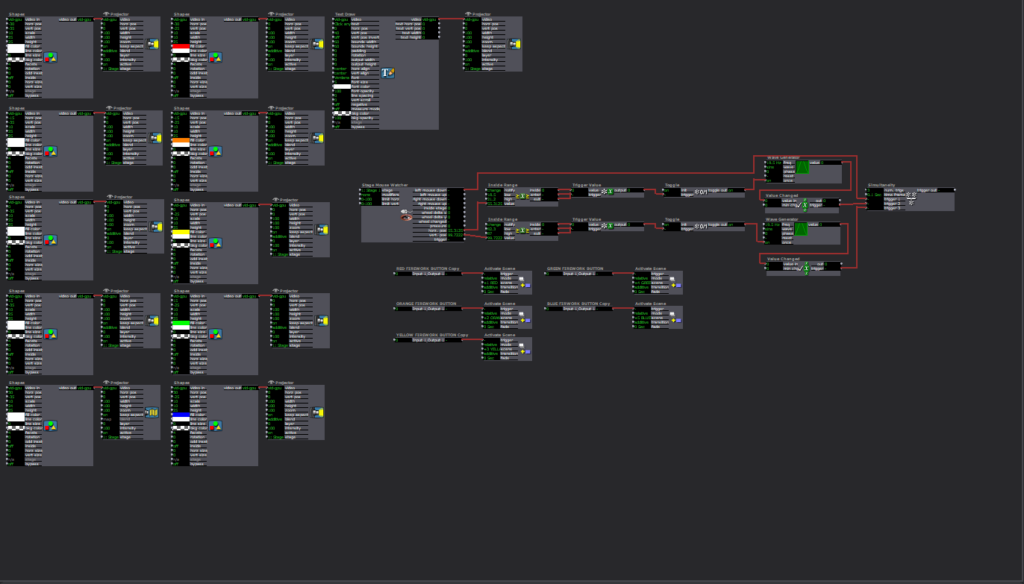

A mockup diagram of which section of the motion sensor is attached to each shape. The webcam is mirrored so it is actually backwards, which made it difficult to keep track of which portion of the sensor was attached to which shape.

Figuring out the motion sensor from scratch was just the tip of the iceberg; I still needed to figure out how to implement it. I decided to divide the picture into six sections, so each section triggered the corresponding shape to rotate. Figuring out how to make the rotation last the right amount of time was tricky, because the shapes were only on-screen for a short, inconsistent amount of time and I wanted the shapes to have time to stop rotating before fading. I plugged different numbers into different outputs of a Wave Generator and Limit-Scale Value actor to get this right.

Then it was time to repeat this process five more times. Because each shape needed a different section for the motion detector, I had to crop each one individually (making my project file large and woefully inefficient). I learned the hard way how each box interacts and that not everything can be copied to each box as I had previously thought, causing me to have to go back a few times to fix/customize each shape. (I certainly understand the importance of planning out projects now!)

I had some time left after the motion sensor was done and functional, so I revisited an idea from earlier. I had originally wanted the motion sensor to trigger the shapes to explode, but realized that would likely be overwhelming, and my brain was melting trying to get the Explode actor plugged in right to make it work. Thus, I decided on an audio sensor instead. Finding the sweet spot to set the value at to trigger the explosion was difficult, as clapping and talking loudly were very close in value, so it is not a terribly robust sensor, but it worked well enough, and I was able to figure out where the Explode actor went.

I spent a lot of time punching in random values and plugging actors into different inputs to figure out what they did and how they worked in relation to each other. Exploration was not just my desired end result; it was a part of the creative process. For some functions, I could look up how to make them work, such as which actors to use and where to plug them in. But other times, I just had to find the magic values to achieve my desired result.

This meant utilizing virtual stages as a way to previsualize what I was trying to do, separate from my project to make sure it worked right. I also put together smaller pieces to the side (projected to a virtual stage), so I could get that component working before plugging it into the rest of the project. Working in smaller chunks like this helped me keep my brain clear and my project unjumbled.

I worked in small chunks and took quick breaks after completing a piece of the puzzle, establishing a modified Pomodoro Technique workflow. I would work for 10-20 minutes, then take a few minutes to check notifications on my phone or refill my water bottle, because I knew trying to get it done in one sitting would be exhausting and block my creative flow. Not holding myself to a strict regimen to complete the project allowed me the freedom to have fun with it and prioritize discovery over completion, as there was no specific end goal. I think this creative freedom and flexibility gave me the chance to learn about design and creating media in a way I could not have with a set end result to achieve because it gave me options to do different things.

If something wasn’t working for me, I had the option to choose a new direction (rotating the shapes with the motion sensor instead of exploding them). After spending a few hours with Isadora, I gained enough confidence in my knowledge base and skill set to return to ideas I had abandoned and try them again in a new way (triggering explosions with a sound sensor).

I wasn’t completely without an end goal. I wanted to create a fun interactive media system that allowed for the discovery of joy through exploration. I wanted my audience to feel the same way playing with my project as I did making it. It was incredibly fulfilling watching a group of adults giggle and gasp as they figured out how to trigger the shapes in different ways, and I was fascinated watching the ways in which they went about it. They had to move their bodies in different ways to trigger the motion sensors and make different sounds to figure out which one triggered the explosions.

Link to YouTube video: https://youtu.be/EjI6DlFUof0

Pressure Project 1 – Fireworks

Posted: January 30, 2024 Filed under: Uncategorized | Tags: Interactive Media, Isadora, Pressure Project, Pressure Project One

When given the prompt “Retain someone’s attention for as long as possible,” I began thinking about all of the experiences that have held my attention for a long time. Some would be a bit hard to replicate, such as a conversation or a full-length movie. Other experiences would be easier: interacting with something could retain attention and be simpler to implement. Now what does that something do so people would want to repeat the experience again and again and again? Some sort of grand spectacle that is really shiny and eye-catching. A fireworks display!

The Program

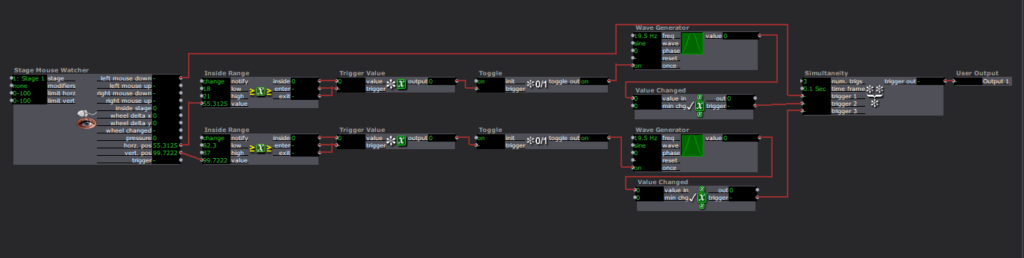

The first scene sets up the image that the user always sees: the firework “barrels” and the buttons to launch the fireworks.

The buttons were made as a custom user input function. I did not know there was a control panel that already has preset buttons programmed. If I had known that, I could have saved myself 2 hours of experimenting. Each button works like this: the Stage Mouse Watcher checks the location of the mouse in the stage and whether the mouse clicks. Then two Inside Range actors check where the mouse is on the x and y axes. If the mouse is in the preset range, it triggers a Trigger Value actor that goes to a Toggle actor. The Toggle actor then turns a Wave Generator on and off. The Wave Generator then sends its value to a Value Changed actor. If the x-bounds, y-bounds, and mouse-click triggers all activate at once, the scene jumps to launch a firework.
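Stripped of the actors, each button is a hit test: the click only counts when the mouse is inside both the x range and the y range. The Python below is a simplified sketch of that check; the `Button` fields, coordinates, and scene names are hypothetical, and it skips the Toggle and Wave Generator steps described above.

```python
from dataclasses import dataclass


@dataclass
class Button:
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    scene: str  # hypothetical name of the firework scene to jump to


def inside_range(value, low, high):
    """True when value lies within [low, high], like an Inside Range check."""
    return low <= value <= high


def scene_for_click(buttons, mouse_x, mouse_y, clicked):
    """Return the scene for the button under the mouse, or None if nothing fires."""
    if not clicked:
        return None
    for button in buttons:
        in_x = inside_range(mouse_x, button.x_min, button.x_max)
        in_y = inside_range(mouse_y, button.y_min, button.y_max)
        if in_x and in_y:
            return button.scene
    return None
```

All three conditions (x in bounds, y in bounds, mouse clicked) have to hold together, which mirrors the requirement that the three triggers activate at once.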

The scenes that the buttons jump to are each set up as a unique firework pattern. The box launches a firework to a set location, and after a timer the sparkle aftereffect shows. When the effect finishes, the scene ends.

Upon reflection, one part that could have helped retain attention even longer would have been to randomize the fireworks explosion pattern. This could have been done with the random number generator and the value scalar actors to change the location of where the sparkle explosion effect ends up and with how long they last in the air.

Lawson: Cycle 2

Posted: November 30, 2023 Filed under: Nico Lawson | Tags: Cycle 2, dance, Digital Performance, Interactive Media, Isadora

The poem shared in the videos:

I’m sorry that I can’t be more clear.

I’m still waking up.

This body is still waking up.

What a strange sensation,

To feel a part of you dying while you’re still alive.

What a strange sensation for part of you to feel like someone else.

Maybe she was someone else.

I can’t explain the relief that I feel to let her go.

I can’t explain the peace that I feel,

To give myself back to the dust,

On my own terms this time.

That’s just it.

My past life, Wisteria’s life, is dust.

That life caught fire and returned to the dust from which it came…

But the rain came just as it always does

Cleansing tears and eternal life cycle.

It reminds me that this body is seventy percent water

Intimately tied to planet just the same

That will always come to claim its own.

Wash me away and birth me again.

When I still prayed to a god they taught me about baptism.

How the water washes away your sin.

How you die when they lay you down.

How you are reborn when they raise you up.

While Wisteria turns to dust,

I return myself to the water, still on my own terms.

I watch my life in the sunlight that dances on the surface

Let the current take her remains as my tears and the Earth’s flow by.

Grieving…

Lost time

Self-loathing

The beautiful possibilities choked off before they could take root

The parts of myself that I sacrificed in the name of redemption.

And the water whispers love.

I am not sin.

I am holy.

I am sacred.

I am made of the stuff of the Earth and the universe.

No forgiveness, no redemption is necessary.

Only the washing away of the remains of the beautiful mask I wore.

Only the washing away of self-destruction and prayers for mercy.

And when I emerge I hope the water in my veins will whisper love to me

Until I can believe it in every cell…

Technical Elements

Unfortunately I do not have images of my Isadora patch for Cycle 2. I will share more extensive images in my Cycle 3 post. The changes applied to the patch are as follows:

- Projection mapping onto a square the size of the kiddie pool that I will eventually be using.

- Rotating the projection map of the “reflection” to match the perspective of the viewer.

- Adding an “Inside Range” actor to calculate the brightness of the reflection.

- Colorizer and HSL adjust actors to modify the reflection.

For Cycle 2, I also projected onto the silk rose petals that will form the bulk of the future projection surface and set the side lighting to be optimal for not blinding the camera. Before the final showing on December 8, I need to spray the rose petals with starch to prevent them from sticking to each other and participants’ clothing.

For Cycle 3, I know that I will need to remap the projections once the pool is in place. One of the things that I observed from my video is that the water animation and the reflection do not overlap well. Once I have the kiddie pool in place, it will be easier to make sure that the projections fall in the correct place.

I also want to experiment with doubling and layering the projection to play into the other-worldliness that the digital “water” already has. I may also play with the colorization of the reflections. The reflection image is already distorted; however, it is incredibly subtle and, as noted by one of the viewers at my showing, potentially easy to miss. Since there is no way to make the reflection behave like water, I see no reason not to further abstract this component of the project to make it more easily observable and more impactful on the viewer.

Reflections and Questions

One of my main questions about this part of the project was how to encourage people to eventually get into the pool to have their own experience in the water. For my showing, I verbally encouraged people to get in and play with the flower petals while they listened to me read the poem. However, when this project is installed in exhibition for my MFA project, I will not be present to explain to viewers how to participate. So I am curious about how to docent my project so that viewers want to engage with it.

What I observed during my showing and learned from post-showing feedback is that hearing me read the poem while they were in the pool created an embodied experience. Hearing my perspective on the spiritual nature of my project directed people into a meditative or trance-like experience of my project. What I want to try for Cycle 3 is creating a loop of sections of my poem with prompts and invitations for physical reflections in the pool. My hope is that hearing these invitations will encourage people to engage with the installation. I will also provide written instructions alongside the pool to make it clear that they are invited to physically engage with the installation.

Lawson: Cycle 1

Posted: November 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: cycle 1, dance, Interactive Media, Isadora

My final project is yet untitled. This project will also be a part of my master’s thesis, “Grieving Landscapes,” which I will present in January. The intention is for this project to be a part of the exhibit installation that audience members can interact with and that I will also dance in/with during the performances. My goal is to create a digital interpretation of “water” that is projected into a pool of silk flower petals and can then be interacted with, including casting shadows and reflecting the person who enters the pool.

In my research into the performance of grief, water and washing have come up often. Water holds significant symbolism as a spirit world, a passage into the spirit world, the passing of time, change and transition, and cleansing. Water and washing also hold significance in my personal life. I was raised as an Evangelical Christian, so baptism was a significant part of my emotional and spiritual formation. In thinking about how I grieve my own experiences, baptism has reemerged as a means of taking control back over my life and how I engage with the changes I have experienced over the last several years.

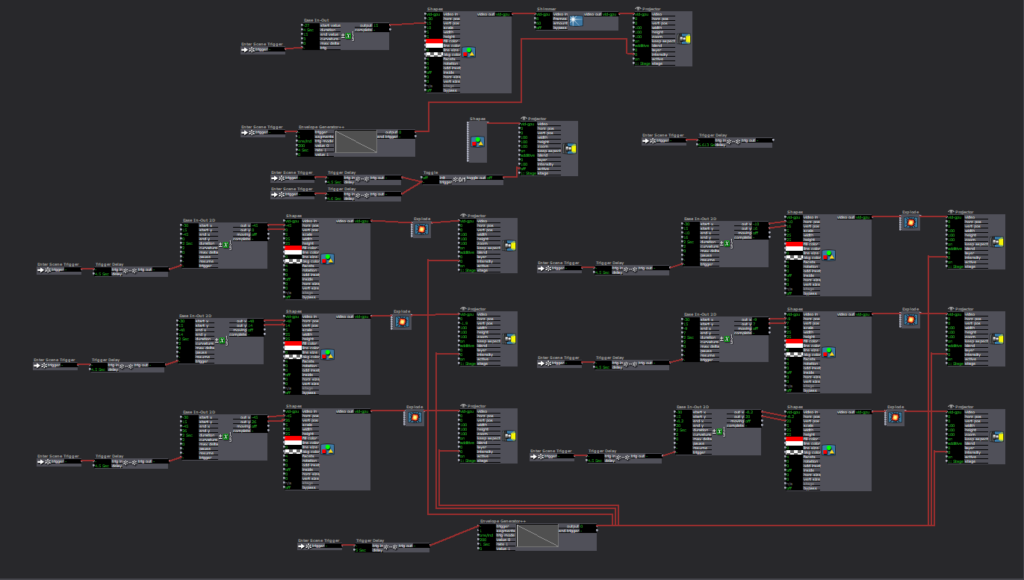

For cycle 1, I created the Isadora patch that will act as my “water.” Rather than attempting to create an exact replica of physical water, I want to emphasize the spiritual quality of water: unpredictable and mysterious.

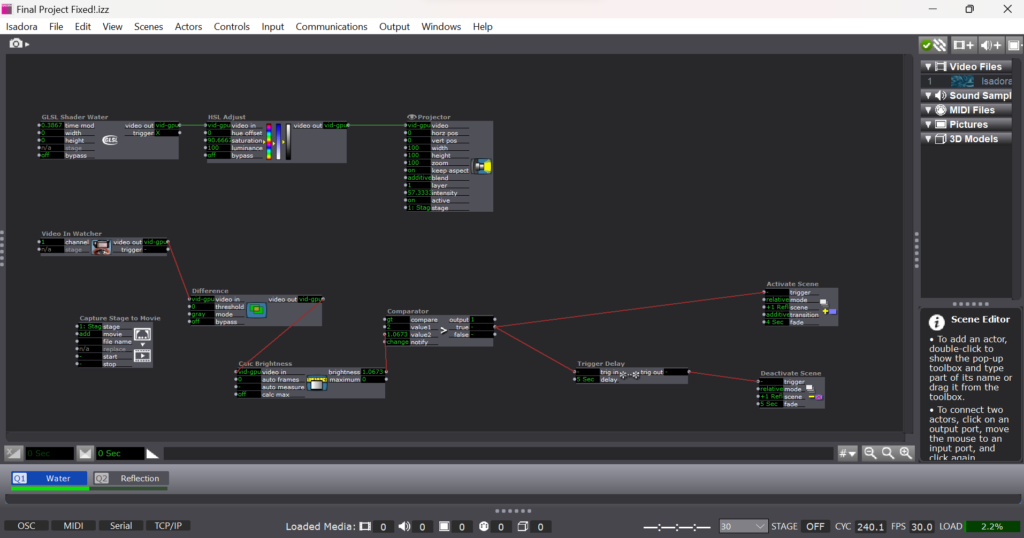

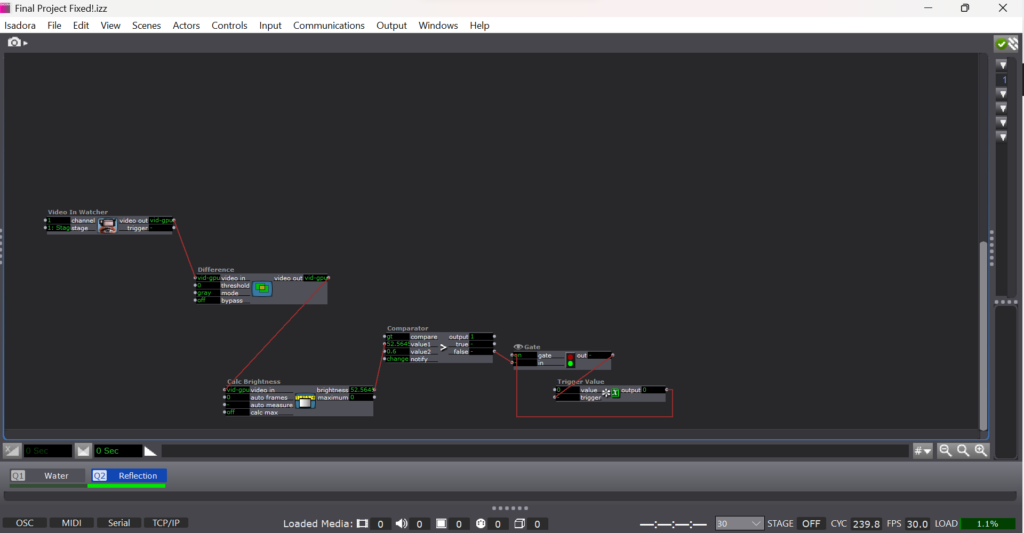

To create the shiny, flowing surface of water, I found a water GLSL shader online and adjusted its color until it felt suitably blue: ghostly and bright, but not so bright as to outshine the reflection generated by the web cam. To emphasize the spiritual quality of the digital emanation, I decided that I did not want the patch to be constantly projecting the web cam’s image. The GLSL shader became the “passive” state of the patch. I used Difference, Calculate Brightness, and Comparator actors with Activate Scene and Deactivate Scene actors to form a motion sensor that would detect movement in front of the camera. When movement is detected, the scene with the web cam projection is activated, projecting the participant’s image over the GLSL shader.
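The motion sensor chain (difference between frames, overall brightness of that difference, comparison against a threshold) can be sketched in plain Python. Frames here are small lists of grayscale values from 0 to 255, and the threshold of 10 is an arbitrary placeholder; this illustrates the idea rather than reproducing the actors.

```python
def frame_difference(prev, curr):
    """Per-pixel absolute difference between two equally sized grayscale frames."""
    return [[abs(c - p) for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)]


def mean_brightness(frame):
    """Average pixel value of a frame."""
    pixels = [value for row in frame for value in row]
    return sum(pixels) / len(pixels)


def motion_detected(prev, curr, threshold=10.0):
    """Compare the brightness of the difference image against a threshold."""
    return mean_brightness(frame_difference(prev, curr)) > threshold
```

A still scene produces a nearly black difference image and stays under the threshold; someone stepping in front of the camera brightens it and would activate the reflection scene. In a darker room the differences shrink, so the threshold would need to drop accordingly.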

To imitate the instability of reflections in water I applied a motion blur to the reflection video. I also wanted to imitate the ghostliness of reflections in water, so I desaturated the image from the camera as well.

To emphasize the mysterious quality of my digital water, I used an additional motion sensor to deactivate the reflection scene. If the participant stops moving or moves out of the range of the camera, the reflection image fades away like the closing of a portal.

The patch itself is very simple. It’s two layers of projection and a simple motion detector. What matters to me is the way that this patch will eventually interact with the materials and how the materials will influence the way that the participant then engages with the patch.

For cycle 2, I will projection map the patch to the size of the pool, calibrating it for an uneven surface. I will determine what type of lighting I will need to support the web camera and appropriate placement of the web camera for a recognizable reflection. I will also need to recalibrate the Comparator for a darker environment to keep the motion sensor functioning.

Lawson: PP3 “Melting Point”

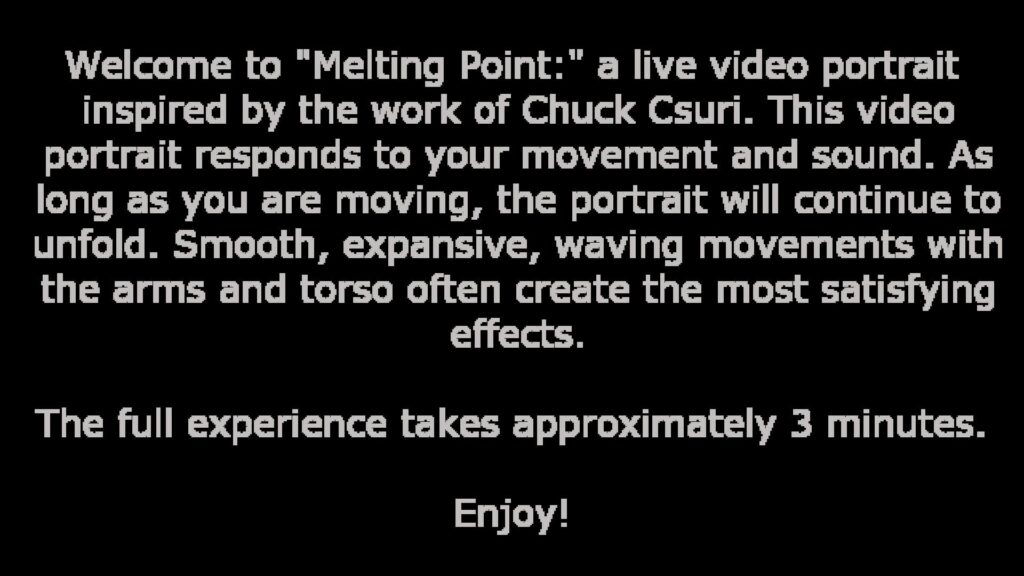

Posted: November 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: Interactive Media, Isadora, Pressure Project

For Pressure Project 3, we were tasked to improve upon our previous project inspired by the work of Chuck Csuri to make the project suitable to be exhibited in a “gallery setting” for the ACCAD Open House on November 3, 2023. I was really happy with the way that my first iteration played with the melting and whimsical qualities of Csuri’s work, so I wanted to turn my attention to the way that my patch could also act as its own “docent” to encourage viewer engagement with the patch.

First, rather than wait until the end of my patch to feature the two works that inspired my project, I decided to make my inspiration photos the “passive” state of the patch. Before approaching the web camera and triggering the start of the patch, my hope was that the audience would be curious and approach the screen. I improved the sensitivity of the motion sensor aspect of the patch so that as soon as a person began moving in front of the camera, the patch would begin running.

When the patch begins running, the first scene that the audience sees is this explanation. Because I am a dancer and the creator of the patch, I am intimately familiar with the types of actions that make the patch more interesting. However, audience members, especially those without movement experience, might not know how to move with the patch with only the effects on the screen. My hope was that including instructions for the type of movement that best interacted with the patch would increase the likelihood that a viewer would stay and engage with the patch for its full duration. For this reason, I also told the audience about the length of the patch so audience members would know what to expect. I also shortened the length of the scenes to keep viewers from getting bored.

Update upon further reflection:

I wish that I had removed or altered the final scene in which the facets of the Kaleidoscope actor were controlled by the Sound Level Watcher. After observing visitors to the open house and using the patch at home where I had control over my own sound levels, I found that it was difficult to get the volume high enough for the facets to change frequently. As a result, the actor did not attract audience members’ attention or let them intuit that their volume affected what they saw on screen. People would leave my project before the loop was complete, seeming to be confused or bored. For simplicity, I could have removed the scene. I also could have used an Inside Range actor to lower the threshold for the facets to be increased and spark audience attention.

Cycle 3: Dancing with Cody Again – Mollie Wolf

Posted: December 15, 2022 Filed under: Uncategorized | Tags: dance, Interactive Media, Isadora, kinect, skeleton tracking

For Cycle 3, I did a second iteration of the digital ecosystem that uses an Xbox Kinect to manipulate footage of Cody dancing in the mountain forest.

Ideally, I want this part of the installation to feel like a more private experience, but I found out during Cycle 2 that the large scale of the image was important. This presents a conflict, because an image that large requires a large area of wall space. My next idea was to station this in a narrow area or hallway, and to use two projectors to have images on either side of or surrounding the person. Cycle 3 was my attempt at adding another clip of footage and another mode of tracking in order to make the digital ecosystem more immersive.

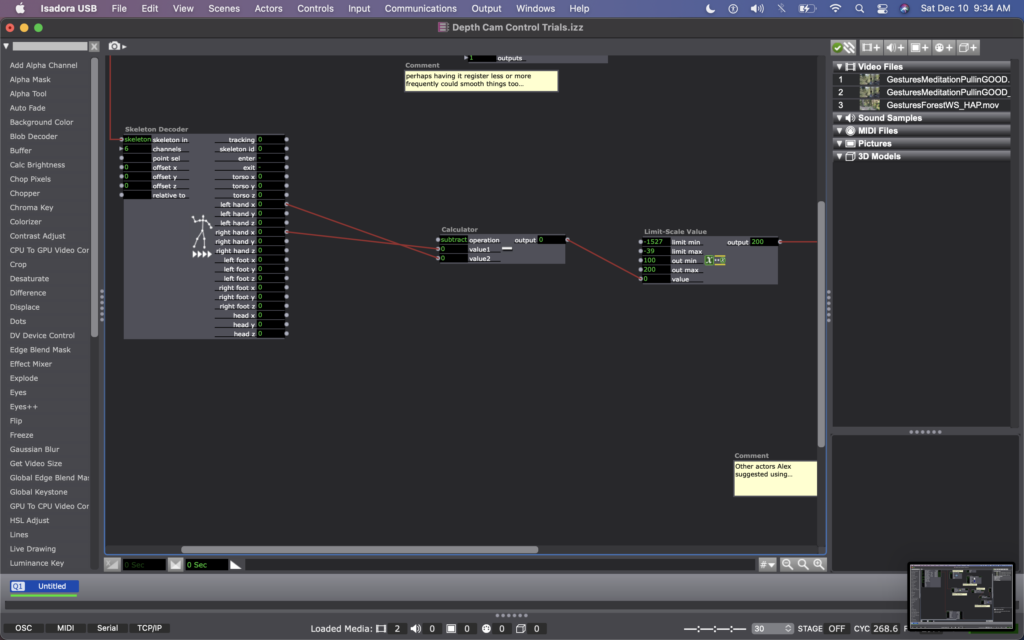

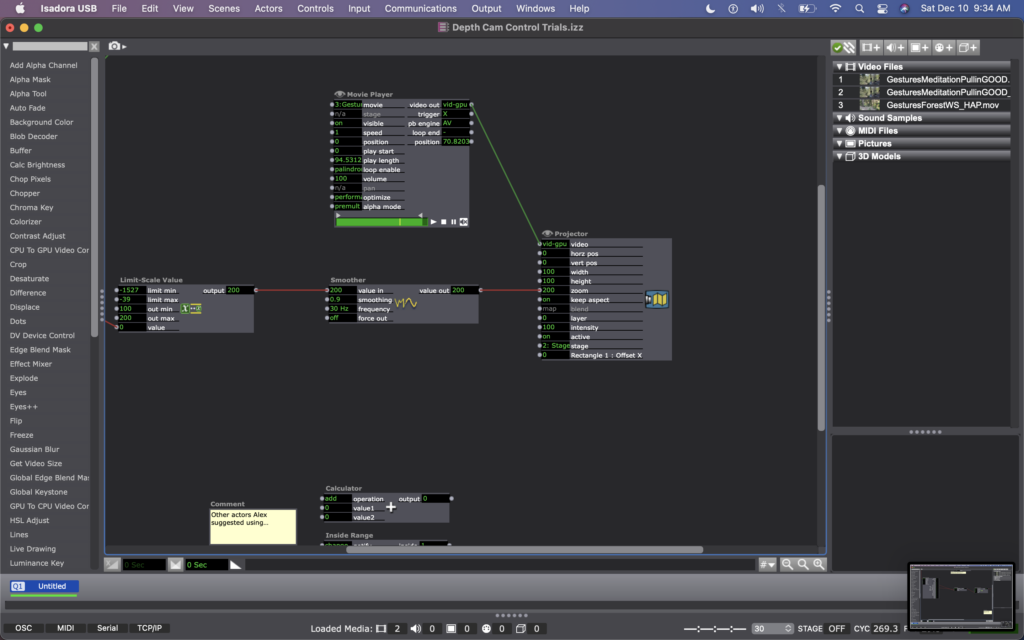

For this, I found some footage of Cody dancing far away, and thought it could be interesting to have the footage zoom in/out when people widen or narrow their arms. In my Isadora patch, this meant changing the settings on the OpenNI Tracker to track body and skeleton (which I hadn’t been asking the actor to do previously). Next, I added a Skeleton Decoder, and had it track the x position of the left and right hand. A Calculator actor then calculates the difference between these two numbers, and a Limit-Scale Value actor translates this number into a percentage of zoom on the Projector. See the images below to track these changes.
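As a rough illustration of that chain (hand positions, then a difference, then a scaled zoom value), here is a small Python sketch. It is only an approximation of the logic: the function names are hypothetical, the input and output ranges are made-up numbers rather than the ones in my patch, and taking the absolute value of the difference is an assumption so that it does not matter which hand is on which side.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then rescale it linearly
    to [out_min, out_max], similar in spirit to Limit-Scale Value."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def zoom_from_hands(left_x, right_x,
                    min_span=0.1, max_span=1.5,
                    min_zoom=100.0, max_zoom=200.0):
    """Turn the distance between the hands into a zoom percentage.

    The span and zoom ranges are assumed values for illustration.
    """
    span = abs(right_x - left_x)  # the Calculator step: difference of x positions
    return limit_scale(span, min_span, max_span, min_zoom, max_zoom)


arms_together = zoom_from_hands(0.0, 0.0)   # smallest zoom
arms_wide = zoom_from_hands(-1.0, 1.0)      # clamped to the largest zoom
```

The clamping matters in practice: without it, a tracking glitch that reports a hand far outside the expected range would send the zoom to an extreme value.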

My sharing for Cycle 3 was the first time that I got to see the system in action, so I immediately had a lot of notes/thoughts for myself (in addition to the feedback from my peers). My first concern is that the skeleton tracking is finicky. It sometimes had a hard time identifying a body – sometimes trying to map a skeleton onto other objects in the space (the mobile projection screen, for example). And periodically the system would glitch and stop tracking the skeleton altogether. This is a problem for me because while I don’t want the relationship between cause and effect to be obvious, I also want it to be consistent so that people can start to learn how they are affecting the system over time. If it glitches and doesn’t always work, people will be less likely to stay interested. In discussing this with my class, Alex offered the idea that instead of using skeleton tracking, I could use the Eyes++ actor to track the outline of a moving blob (the person moving), and base the zoom on the width or area that the moving blob takes up. This way, I could turn off skeleton tracking, which I think is part of why the system was glitching. I’m planning to try this when I install the system in Urban Arts Space.

Another observation that came up when the class was experimenting with the system was that people were less inclined to move their arms initially. This is interesting because during Cycle 2, people had the impulse to use their arms a lot, even though at the time the system was not tracking their arms. I don’t fully know why people didn’t this time. Perhaps because they were remembering that in Cycle 2 it was tracking depth only, so they automatically started experimenting with depth rather than arm placement? Also, Katie mentioned that having two images made the experience more immersive, which made her slow down in her body. She said that she found herself in a calm state, wanting to sit down and take it in, rather than actively interact. This is an interesting point – that when you are engulfed/surrounded by something, you slow down and want to receive/experience it; whereas when there is only one focal point, you feel more of an impulse to interact. This is something for me to consider with this setup – is leaning toward more immersive experiences discouraging interactivity?

This question led me to challenge the idea that more interactivity is better…why can’t someone see this ecosystem, and follow their impulse to sit down and just be? Is that not considered interactivity? Is more physical movement the goal? Not necessarily. However, I would like people to notice that their embodied movement takes effect on their surroundings.

We discussed that the prompting or instructions that people are given could invite them to move, so that people try movement first rather than sitting first. I just need to think through the language that feels appropriate for the context of the larger installation.

Another notable observation from Tamryn was that the Astroturf was useful because it creates a sensory boundary of where you can move, without having to take your eyes off the images in front of you – you can feel when your foot reaches the edge of the turf and you naturally know to stop. At one point Katie said something like this: “I could tell that I’m here [behind Cody on the log] in this image, and over there [where Cody is, far away in the image] at the same time.” This pleased me, because when Cody and I were filming this footage, we were talking about the echoes in the space – sometimes I would accidentally step on a branch, causing a snapping noise, and seconds later I would hear the sound I made bouncing back from miles away, on the other side of the mountain valley. I ended up writing in my journal after our weekend of filming: “Am I here, or am I over there?” I loved the synchronicity of Katie’s observation here and it made me wonder if I wanted to include some poetry that I was working on for this film…

Please enjoy below, some of my peers interacting with the system.

Mollie Wolf Cycle 2: The WILDS – Dancing w/ Cody

Posted: November 27, 2022 Filed under: Uncategorized | Tags: depth camera, Interactive Media, Isadora, kinect, mollie wolf

For Cycle 2, I began experimenting with another digital ecosystem for my thesis installation project. I began with a shot I have of one of my collaborators, Cody Brunelle-Potter, dancing, gesturing, casting spells on the edge of a log overlooking a mountainside. As they do so, I (holding the camera) am slowly walking toward them along the log. I was rewatching this footage recently with the idea of using a depth camera to play the footage forward or backward as you walk – allowing your body to mimic the perspective of the camera – moving toward Cody or away from them.

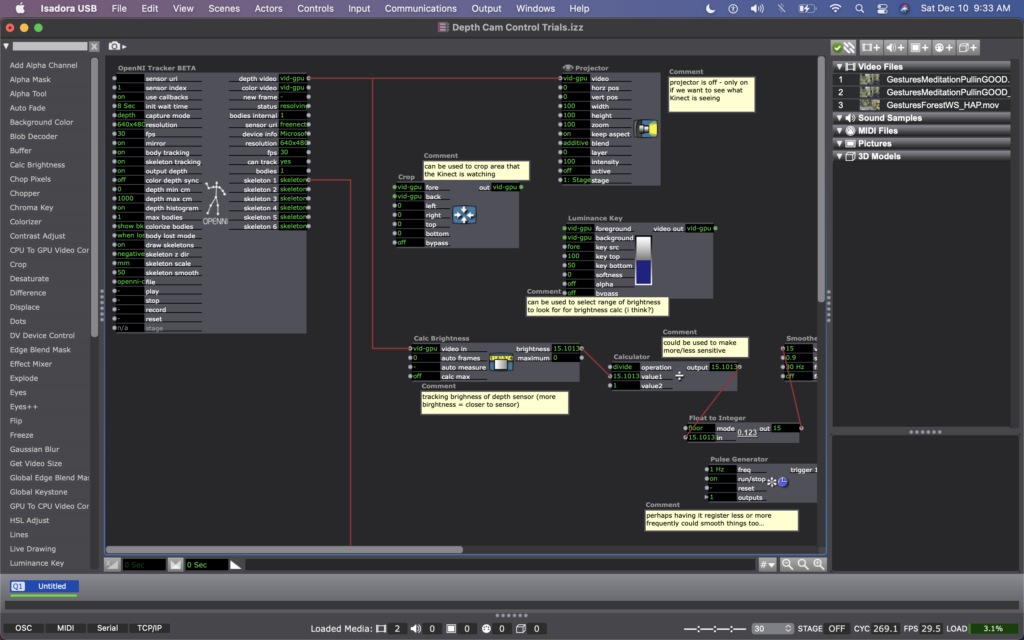

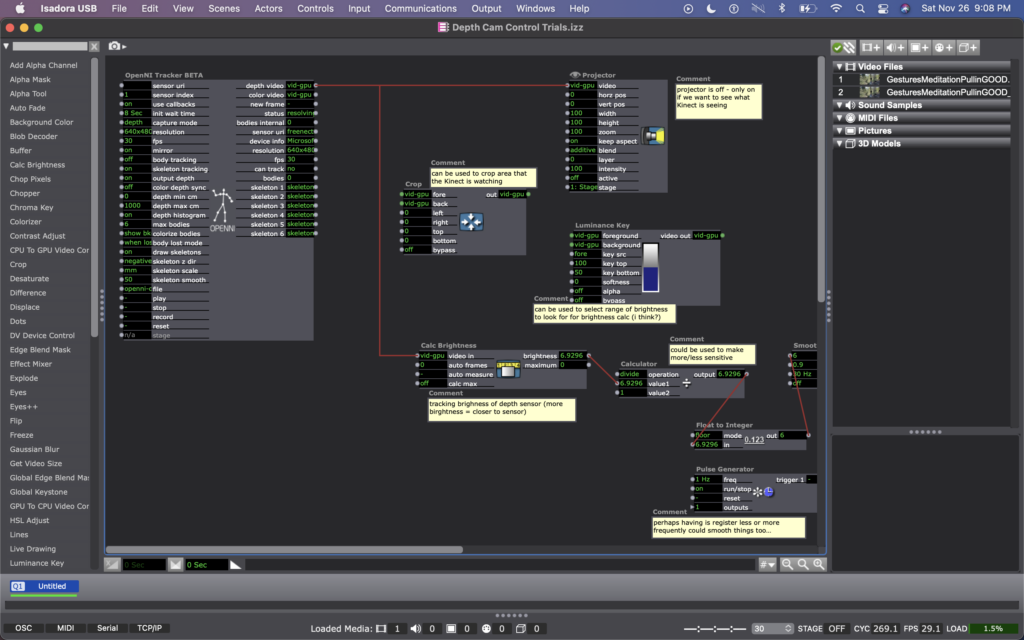

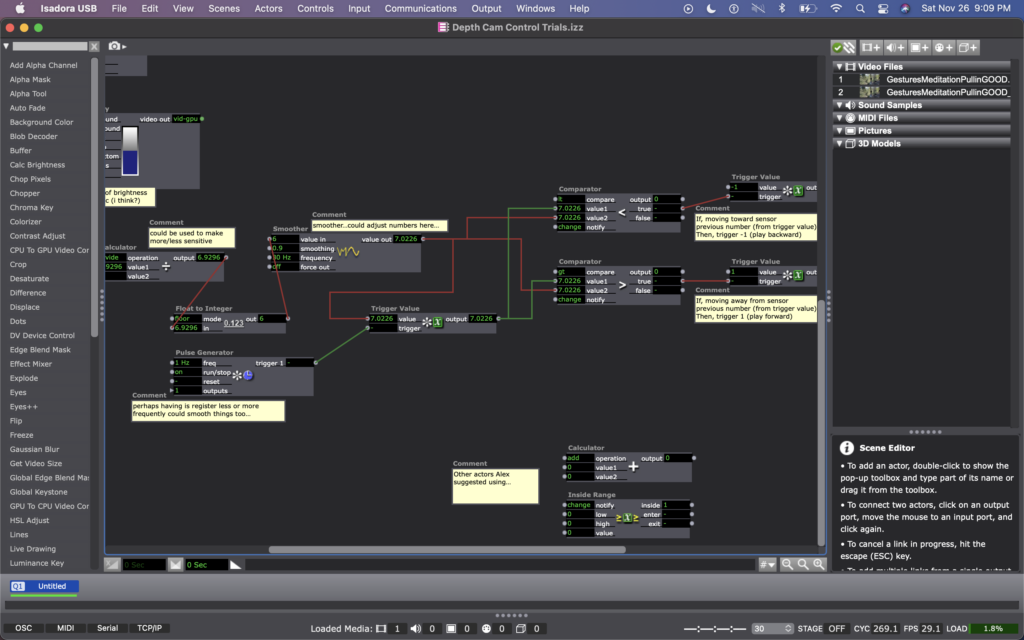

I wasn’t exactly sure how to make this happen, but the first idea I came up with was to make an Isadora patch that recorded how far someone was from an Xbox Kinect at regular intervals, and was always comparing their current location to where they were a moment ago. Then, whether the difference between those two numbers was positive or negative would tell the video whether to play forward or backward.

I explained this idea to Alex; he agreed it was a decent one and helped me figure out which actors to use to do such a thing. We began with the OpenNI Tracker, which has many potential ways to track data using the Kinect. We turned many of the trackers off, because I wasn’t interested in creating any rules in regards to what the people were doing, just where they were in space. The Kinect sends data by bouncing a laser off objects; how bright the light is when it bounces back tells the camera whether the object is close (bright) or far (dim). So the video data that comes from the Kinect is greyscale, based on this brightness (closer is toward white, farther is toward black). To get a number from this data, we used a Calc Brightness actor, which tracks a steadily changing value corresponding to the brightness of the video. Then we used Pulse Generator and Trigger Value actors to frequently record this number. Finally, we used two Comparator actors: one that checked if the number from the Pulse Generator was less than the current brightness from the Calc Brightness actor, and one that did the opposite, checking if it was greater. These Comparators each triggered Trigger Value actors that would set the speed of the Movie Player playing the footage of Cody to -1 or 1 (meaning that it would play backward at normal speed or forward at normal speed).
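The compare-with-a-moment-ago logic can be sketched in a few lines of Python. This is a simplification under stated assumptions, not a transcription of the patch: the function names are hypothetical, every reading is treated as one pulse of the Pulse Generator, and I have assumed that getting brighter (moving closer) means playing forward.

```python
def playback_speed(previous_sample, current_brightness, current_speed=0.0):
    """Pick a movie speed by comparing the current brightness with the
    value recorded a moment ago (the two Comparator actors)."""
    if current_brightness > previous_sample:
        return 1.0    # assumed: brighter means closer, so play forward
    if current_brightness < previous_sample:
        return -1.0   # assumed: dimmer means farther, so play backward
    return current_speed  # no change: neither Comparator fires


def run(brightness_readings):
    """Step through a list of brightness readings, one per pulse."""
    speeds = []
    speed = 0.0
    previous = brightness_readings[0]
    for current in brightness_readings[1:]:
        speed = playback_speed(previous, current, speed)
        speeds.append(speed)
        previous = current  # the Trigger Value step: remember this sample
    return speeds


# Walking closer, pausing, then stepping back.
speeds = run([10, 12, 12, 9])
```

In this run the video plays forward, keeps playing forward during the pause (because nothing tells it to change), and then reverses when the person steps back.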

Once this basic structure was set up, quite a bit of fine tuning was needed. Many of the other actors you see in these photos were used to experiment with fine tuning. Some of them are connected and some of them are not. Some of them are even connected but not currently doing anything to manipulate the data (the Calculator, for example). At the moment, I am using the Float to Integer actor to make whole numbers out of the brightness number (as opposed to one with 4 decimal places). This makes the system less sensitive (which was a goal because initially the video would jump between forward and backward when a person was just standing still, breathing). Additionally, I am using a Smoother in two locations, one before the data reaches the Trigger Value and Comparator actors, and one before the data reaches the Movie Player. In both cases, the Smoother creates a gradual increase or decrease of value between numbers rather than jumping between them. The first helps the sensed brightness data change steadily (or smoothly, if you will); and the second helps the video slow to a stop and then speed up to a reverse, rather than jumping to reverse, which felt glitchy originally. As I move this into Urban Arts Space, where I will ultimately be presenting this installation, I will need to fine tune quite a bit more, which is why I have left the other actors around as additional things to try.
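Here is a small Python sketch of those two fine-tuning steps. It rests on assumptions: I don’t know exactly how the Float to Integer or Smoother actors compute their output, so rounding to the nearest whole number and a simple weighted average stand in for them, and the function names and the `amount` setting are hypothetical.

```python
def quantize(brightness):
    """Stand-in for Float to Integer: drop the decimals so tiny
    changes (like breathing) produce the same number."""
    return int(round(brightness))


def smooth(previous, target, amount=0.5):
    """Stand-in for the Smoother: move part of the way from the
    previous value toward the target instead of jumping."""
    return previous * amount + target * (1.0 - amount)


def ease_speed(start, target, steps, amount=0.5):
    """Apply the smoother repeatedly, as happens frame after frame."""
    values = []
    speed = start
    for _ in range(steps):
        speed = smooth(speed, target, amount)
        values.append(speed)
    return values


# Someone standing still: the raw readings jitter, the whole numbers do not.
still_readings = [quantize(b) for b in [50.1, 50.3, 49.9]]

# Reversing direction: the speed passes through a stop before going backward.
reverse = ease_speed(1.0, -1.0, 3)
```

With these assumed settings the speed goes from 1 to 0, then to -0.5, then to -0.75, which is the slow-to-a-stop-then-reverse behavior described above.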

Once things were fine tuned and functioning relatively well, I had some play time with it. I noticed that I almost instantly had the impulse to dance with Cody, mimicking their movements. I also knew that depth was what the camera was registering, so I played a lot with moving forward and backward at varying times and speeds. After reflecting over my physical experimentation, I realized I was learning how to interact with the system. I noticed that I intuitively changed my speed and length of step to be one that the system more readily registered, so that I could more fluidly feel a responsiveness between myself and the footage. I wondered whether my experience would be common, or if I as a dancer have particular practice noticing how other bodies are responding to my movement and subtly adapting what I’m doing in response to them…

When I shared the system with my classmates, I rolled out a rectangular piece of astro turf in the center of the Kinect’s focus (almost like a carpet runway pointing toward the projected footage of Cody). I asked them to remove their shoes and to take turns, one at a time. I noticed that collectively over time they also began to learn/adapt to the system. For them, it wasn’t just their individual learning, but their collective learning because they were watching each other. Some of them tried to game-ify it, almost as though it was a puzzle with an objective (often thinking it was more complicated than it was). Others (mostly the dancers) had the inclination to dance with Cody, as I had. Even though I watched their bodies learn the system, none of them ever quite felt like they ‘figured it out.’ Some seemed unsettled by this and others not so much. My goal is for people to experience a sense of play and responsiveness between them and their surroundings, rather than a game with rules to figure out.

Almost everyone said that they enjoyed standing on the astro turf—that the sensation brought them into their bodies, and that there was some pleasure in the feeling of stepping/walking on the surface. Along these lines, Katie suggested a diffuser with pine oil to further extend the embodied experience (something I am planning to do in several of the digital ecosystems throughout the installation). I’m hoping that prompting people into their sensorial experience will help them enter the space with a sense of play, rather than needing to ‘figure it out.’

I am picturing this specific digital ecosystem happening in a small hallway or corner in Urban Arts Space, because I would rather this feel like an intimate experience with the digital ecosystem as opposed to a public performance with others watching. To test this hallway idea, I experimented with the zoom of the projector, making the image smaller or larger as my classmates played with the system. Right away, my classmates and I noticed that we much preferred the full size of the projection (which is MUCH wider than a hallway). So now I have my next predicament – how to have the image large enough to feel immersive in a narrow hallway (meaning it will need to wrap onto multiple walls).

Cycle 3–Allison Smith

Posted: April 28, 2022 Filed under: Uncategorized | Tags: dance, Interactive Media, Isadora

I had trouble determining what I wanted to do for my Cycle 3 project, as I was overwhelmed with the possibilities. Alex was helpful in guiding me to focus on one of my previous cycles and leaning into one of those elements. I chose to follow up with my Cycle 1 project that had live drawing involved through motion capture of the participant. This was a very glitchy system, though, so I decided to take a new approach.

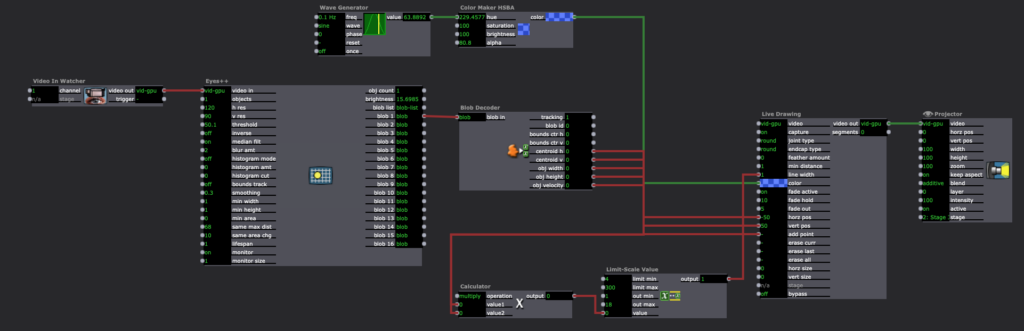

In my previous approach, I used the Skeleton Decoder to track the positions of the participants’ hands. These numbers were then fed into the Live Drawing actor. The biggest problem with that, though, was that the skeleton would not track well and the lines didn’t correspond to the person’s movement. In this new iteration, I chose to use a camera, Eyes++, and the Blob Decoder to track a light that the participant would be holding. I found this to be a much more robust approach, and while it wasn’t what I had originally envisioned in Cycle 1, I am very happy with the results.

I had some extra time and spontaneously decided to add another layer to this cycle, where the participant’s full body would be tracked with a colorful motion blur. With this, they would be drawing but we would also see the movement the body was creating. I felt like this addition leaned into my research in this class of how focusing on one type of interactive system can encourage people to move and dance. With the outline of the body, we were able to then see the movement and dancing that the participants probably weren’t aware they were doing. I then wanted to put the drawing on a see-through scrim so that the participant would be able to see both visuals being displayed.

A few surprises came when demonstrating this cycle with people. I instructed that viewers could walk through the space and observe however they wanted, but I didn’t consider how their bodies would also be tracked. This brought out an element of play from the “viewers” (aka the people not drawing with the light) that I found most exciting about this project. They would play with different ways their body was tracked and would get closer and farther from the tracker to play with depth. They also played with shadows when they were on the other side of the scrim. My original intention with setting the projections up the way that they were–on the floor in the middle of the room–was so that the projections wouldn’t mix onto the other scrims. I never considered how this would allow space for shadows to join in the play both in the drawing and in the bodily outlines. I’ve attached a video that illustrates all of the play that happened during the experience:

Something that I found interesting after watching the video was that people were hesitant to join in at first. They would walk around a bit, and they eventually saw their outlines in the screen. It took a few minutes, though, for people to want to draw and for people to start playing. After that shift happened, there is such a beautiful display of curiosity, innocence, discovery, and joy. Even I found myself discovering much more than I thought I could, and I’m the one who created this experience.

The coding behind this experience is fairly simple, but it took a long time for me to get here. I had one stage for the drawing and one stage for the body outlines. For the drawing, like I mentioned above, I used a Video In Watcher to feed into Eyes++ and the Blob Decoder. The camera I used was one of Alex’s cameras, as it had manual exposure, which we found out was necessary to keep the “blob” from changing sizes when the light moved. The Blob Decoder finds bright points in the video, and depending on the settings of the decoder, it will only track one bright light. Its position and size were then fed into a Live Drawing actor, with a constant change in the colors.
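To show the general idea of tracking one bright light, here is a toy Python sketch that finds the bright pixels in a tiny greyscale frame and reports their center and size. This is not how Eyes++ or the Blob Decoder work internally (I don’t know their algorithms); the function name, the brightness threshold, and the treatment of all bright pixels as a single blob are assumptions for illustration.

```python
def brightest_blob(frame, threshold=200):
    """Return (center_x, center_y, size) of the bright pixels in a
    greyscale frame given as a list of rows, or None if none are bright.

    Assumes there is only one light in view, so every pixel at or above
    the threshold is counted as part of the same blob.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    center_x = sum(xs) / len(xs)
    center_y = sum(ys) / len(ys)
    return center_x, center_y, len(xs)


# A 3x3 frame with a two-pixel light in the middle row.
frame = [
    [10, 12, 11],
    [9, 250, 255],
    [8, 10, 12],
]
blob = brightest_blob(frame)
```

The sketch also hints at why manual exposure mattered: if the camera brightens or darkens the whole picture on its own, a different number of pixels crosses the threshold and the blob’s size changes even when the light itself has not.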

For the body outline, I used an Orbbec Astra tracker feeding into a luminance key and an alpha mask. The foreground and mask came from the body with no color, and the background was a colorful version of the body with a motion tracker. This created the effect of having a white colored silhouette with a colorful blur. I used the same technique for color in the motion blur as I did with the live drawing.

I’m really thankful for how this cycle turned out. I was able to find some answers to my research questions without intentionally thinking about that, and I was also able to discover a lot of new things within the experience and in reflecting upon it. The biggest takeaway I have is that if I want to encourage people to move, it is beneficial to give everyone an active role in exploration rather than having just one person by themselves. I was focused too much on the tool in my previous cycles (drawing, creating music) rather than the importance of community when it comes to losing movement inhibition and leaning into a sense of play. If I were to continue to work on this project, I might add a layer of sound to it using MIDI. I did enjoy the silence of this iteration, though, and am concerned that adding sound would be too much. Again, I am happy with the results of the cycle, and will allow this to influence my projects in the future.