Pressure Project 1 – Interactive Exploration

Posted: February 4, 2025 | Filed under: Uncategorized | Tags: Interactive Media, Isadora, Pressure Project

For this project, I wanted to prioritize joy through exploration. I wanted to create an experience that allowed people to try different movements and actions to see if they could “unlock” my project, so to speak. To do this, I built motion and sound sensors into my project that would trigger the shapes to perform certain actions.

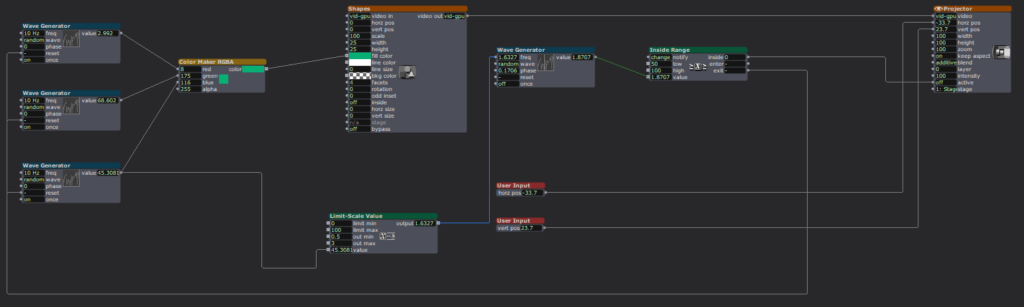

Starting this project was difficult because I didn’t know what direction I wanted to take it, but I knew I wanted it to have some level of interactivity. I started off small by adding a User Input actor to adjust the number of facets on each shape, then a Random actor (with a Limit-Scale Value actor) to simply change the size of the shapes each time they appeared on screen. Now it was on.
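For readers who think more easily in code than in patch cords, the Random plus Limit-Scale Value pairing can be approximated in a few lines of Python. This is only a sketch of the idea, not the actual patch: the function names `limit_scale` and `random_size`, the 0-100 input range, and the 10-40 output range are all assumptions made for illustration.

```python
import random


def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then map it linearly onto [out_min, out_max]."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def random_size(rng, out_min=10.0, out_max=40.0):
    """Pick a random raw value (assumed 0-100) and scale it into a usable shape size."""
    raw = rng.uniform(0.0, 100.0)
    return limit_scale(raw, 0.0, 100.0, out_min, out_max)


if __name__ == "__main__":
    rng = random.Random()
    # Each call stands in for a shape appearing on screen with a new size.
    print([round(random_size(rng), 1) for _ in range(6)])
```

The scaling step is what keeps the random sizes inside a range that looks good on screen, rather than letting a shape shrink to nothing or fill the whole stage.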

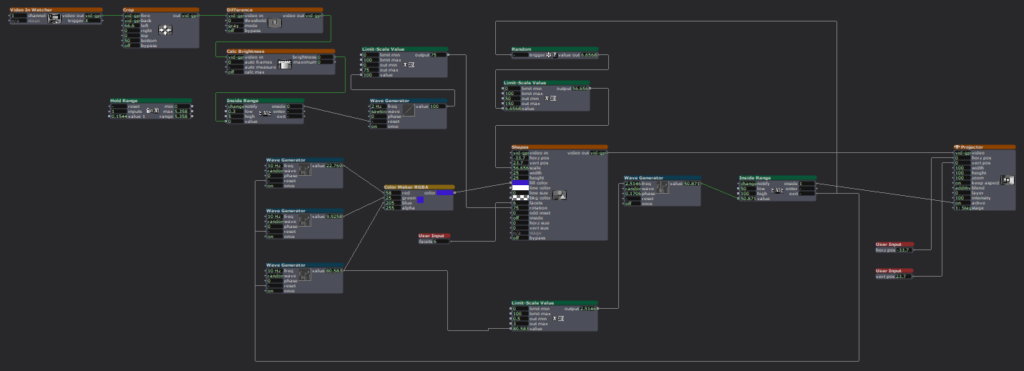

I started building my motion sensor, which involved a pretty heavy learning curve because I could not open the file from class that would have told me which actors to put where. I did a lot of trial-and-error and some research to jog my memory and eventually got the pieces I needed, and we were off to the races!

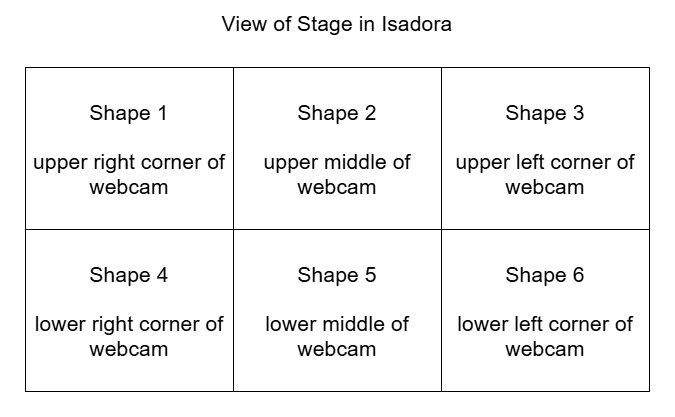

A mockup diagram showing which section of the motion sensor is attached to each shape. The webcam image is mirrored, so the layout is actually backwards, which made it difficult to keep track of which portion of the sensor was attached to which shape.

Figuring out the motion sensor from scratch was just the tip of the iceberg; I still needed to figure out how to implement it. I decided to divide the picture into six sections, so each section triggered the corresponding shape to rotate. Figuring out how to make the rotation last the right amount of time was tricky, because the shapes were only on-screen for a short, inconsistent amount of time and I wanted the shapes to have time to stop rotating before fading. I plugged different numbers into different outputs of a Wave Generator and Limit-Scale Value actor to get this right.
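The idea of splitting the camera picture into six sections, each tied to one shape, can be sketched outside of Isadora as a simple frame-difference grid. The Python below is a minimal illustration under several assumptions: frames are plain lists of grayscale rows, the six sections are laid out as 2 rows by 3 columns, the threshold of 20 is arbitrary, and the names `region_motion` and `triggered_shapes` are hypothetical. The `mirrored` flag flips the column so a mirrored webcam image still maps to the shape on the expected side.

```python
def region_motion(prev, curr, rows=2, cols=3):
    """Mean absolute brightness change in each grid cell between two grayscale frames."""
    height, width = len(curr), len(curr[0])
    totals = [0.0] * (rows * cols)
    counts = [0] * (rows * cols)
    for y in range(height):
        r = y * rows // height  # which grid row this pixel falls in
        for x in range(width):
            c = x * cols // width  # which grid column this pixel falls in
            idx = r * cols + c
            totals[idx] += abs(curr[y][x] - prev[y][x])
            counts[idx] += 1
    return [t / n if n else 0.0 for t, n in zip(totals, counts)]


def triggered_shapes(prev, curr, threshold=20.0, rows=2, cols=3, mirrored=True):
    """Return the indices of shapes whose section saw motion above the threshold."""
    hits = []
    for idx, amount in enumerate(region_motion(prev, curr, rows, cols)):
        if amount > threshold:
            r, c = divmod(idx, cols)
            if mirrored:
                c = cols - 1 - c  # undo the left-right flip of a mirrored webcam
            hits.append(r * cols + c)
    return sorted(hits)


if __name__ == "__main__":
    prev = [[0] * 6 for _ in range(4)]
    curr = [row[:] for row in prev]
    for y in range(2):
        for x in range(2):
            curr[y][x] = 100  # movement in the top-left section of the image
    print(triggered_shapes(prev, curr))
```

Computing every section from one pass over the frame is also one way to avoid cropping the video separately for each shape.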

Then it was time to repeat this process five more times. Because each shape needed a different section for the motion detector, I had to crop each one individually (making my project file large and woefully inefficient). I learned the hard way how each box interacts and that not everything can be copied to each box as I had previously thought, causing me to have to go back a few times to fix/customize each shape. (I certainly understand the importance of planning out projects now!)

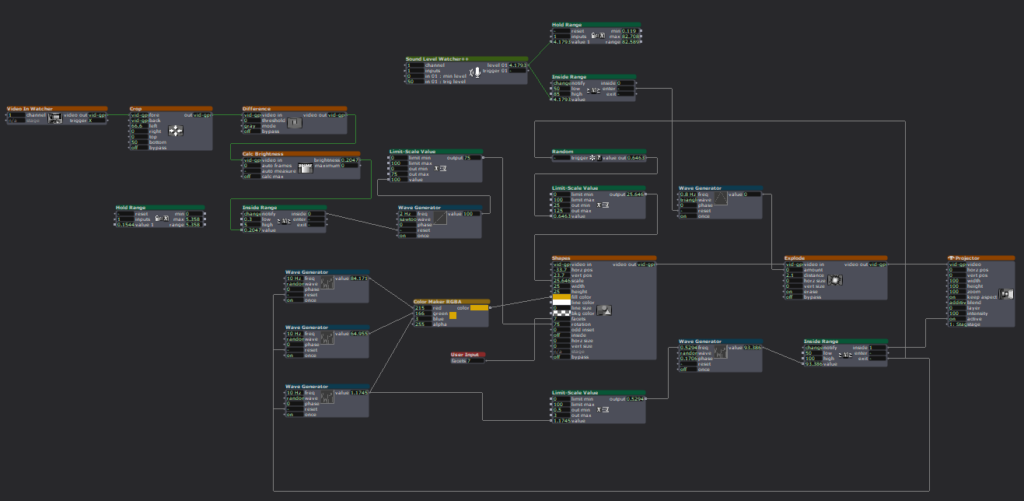

I had some time left after the motion sensor was done and functional, so I revisited an idea from earlier. I had originally wanted the motion sensor to trigger the shapes to explode, but realized that would likely be overwhelming, and my brain was melting trying to get the Explode actor plugged in right to make it work. Thus, I decided on an audio sensor instead. Finding the right threshold value to trigger the explosion was difficult, as clapping and talking loudly registered very similar values. It is not a terribly robust sensor, but it worked well enough, and I was able to figure out where the Explode actor went.
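The threshold problem described above is easy to see in a small Python sketch. It is an illustration only, not how the Isadora patch is wired: the function name `explosion_triggers`, the 0-to-1 level scale, and the sample values are all made up. The function fires once each time the level rises above the threshold, so a sustained loud sound produces a single trigger rather than a stream of them.

```python
def explosion_triggers(levels, threshold=0.8):
    """Return the indices where the sound level rises from at-or-below to above the threshold."""
    triggers = []
    was_above = False
    for i, level in enumerate(levels):
        is_above = level > threshold
        if is_above and not was_above:
            triggers.append(i)  # fire only on the rising edge
        was_above = is_above
    return triggers


if __name__ == "__main__":
    # Hypothetical readings: quiet, loud talking, quiet, a clap held for two samples, quiet, a clap.
    levels = [0.1, 0.75, 0.2, 0.9, 0.95, 0.3, 0.85]
    print(explosion_triggers(levels))
```

With loud talking at 0.75 and a clap at 0.85, a threshold of 0.8 separates them, but only barely, which is why a sensor like this is sensitive to the room and the people in it.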

I spent a lot of time punching in random values and plugging actors into different inputs to figure out what they did and how they worked in relation to each other. Exploration was not just my desired end result; it was a part of the creative process. For some functions, I could look up how to make them work, such as which actors to use and where to plug them in. But other times, I just had to find the magic values to achieve my desired result.

This meant utilizing virtual stages as a way to previsualize what I was trying to do, separate from my project to make sure it worked right. I also put together smaller pieces to the side (projected to a virtual stage), so I could get that component working before plugging it into the rest of the project. Working in smaller chunks like this helped me keep my brain clear and my project unjumbled.

I worked in small chunks and took quick breaks after completing a piece of the puzzle, establishing a modified Pomodoro Technique workflow. I would work for 10-20 minutes, then take a few minutes to check notifications on my phone or refill my water bottle, because I knew trying to get it done in one sitting would be exhausting and block my creative flow. Not holding myself to a strict regimen to complete the project allowed me the freedom to have fun with it and prioritize discovery over completion, as there was no specific end goal. I think this creative freedom and flexibility gave me the chance to learn about design and creating media in a way I could not have with a set end result to achieve because it gave me options to do different things.

If something wasn’t working for me, I had the option to choose a new direction (rotating the shapes with the motion sensor instead of exploding them). After spending a few hours with Isadora, I gained enough confidence in my knowledge and skills to return to previously abandoned ideas and try them again in a new way (triggering explosions with a sound sensor).

I wasn’t completely without an end goal. I wanted to create a fun interactive media system that allowed for the discovery of joy through exploration. I wanted my audience to feel the same way playing with my project as I did making it. It was incredibly fulfilling watching a group of adults giggle and gasp as they figured out how to trigger the shapes in different ways, and I was fascinated watching the ways in which they went about it. They had to move their bodies in different ways to trigger the motion sensors and make different sounds to figure out which one triggered the explosions.

Link to YouTube video: https://youtu.be/EjI6DlFUof0