Cycle 3: Sensory Soul Soil

Posted: April 30, 2022 | Filed under: Uncategorized

For my cycle 3 project I shifted the soundscape a bit and changed two interfaces and the title. I homed in on two of the growers’ interviews. I wanted to illustrate their connection to what they’ve grown through an organic interface rather than the former plastic tree. For instance, grower Sophia Buggs started out growing zucchini in kiddie pools because her grandmother used to make zucchini bread. To highlight this history, I wanted the participant to feel the electricity Sophia and her grandmother felt with hands in the soil and on fresh zucchini. I worked with my classmate Patrick to clean up the sound of Sophia’s interview, because I interviewed her in a noisy restaurant. He worked with me to level the crowd in the background and bring Sophia’s voice to the foreground. I used two tomatoes to illustrate grower Julialynn’s expression, “It was just me and two tomatoes!” This is her origin story for growing her church’s community garden. Sensory Soul Soil is an experiment in listening with your hands, feeling your body, and seeing with your eyes and ears.

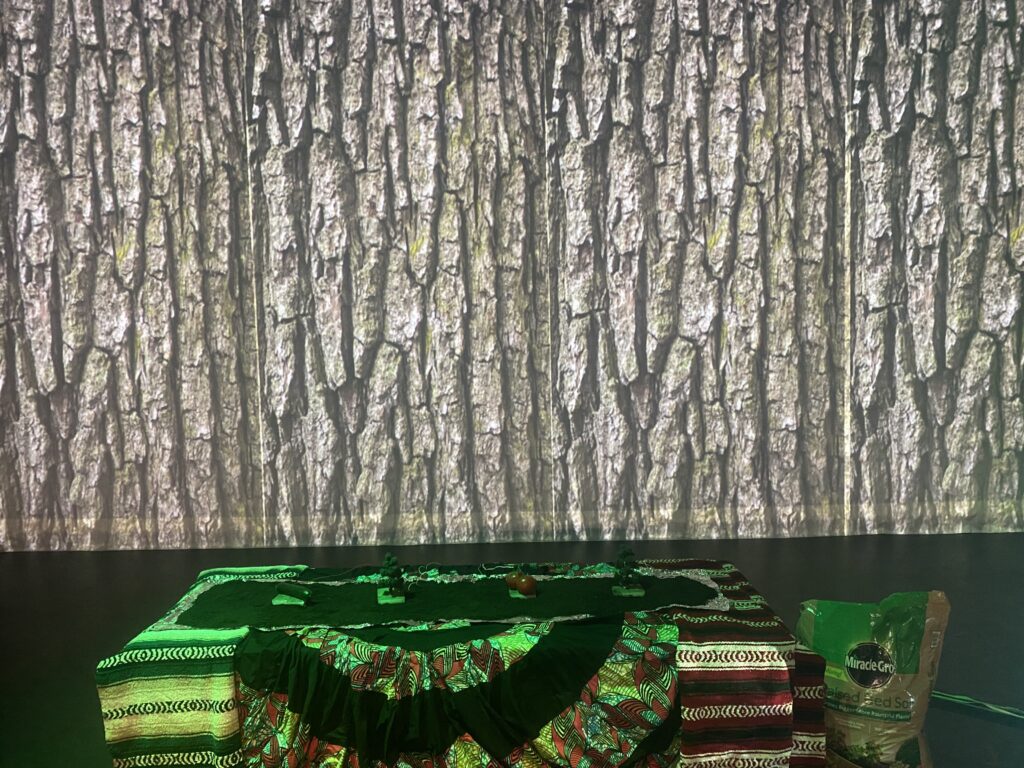

As for the setup, I decided to start in front of the sensory soil box. I made this change so that I could better guide the participant through the experience. This go-round I wanted to bring some color to the originally white box. I used Afro-Latinidad fabric to build in a sense of cultural identity through patterns and colors, also representing those who have labored in our U.S. soil.

In the last iteration I didn’t really know how to bring the space to a close. In this cycle, I closed the space with a charge to join the agricultural movement by getting involved any way they can, because the earth and their bodies will appreciate it. This call to action is an invitation to take this work beyond the performative, experiential space and into the world from which this inspiration came.

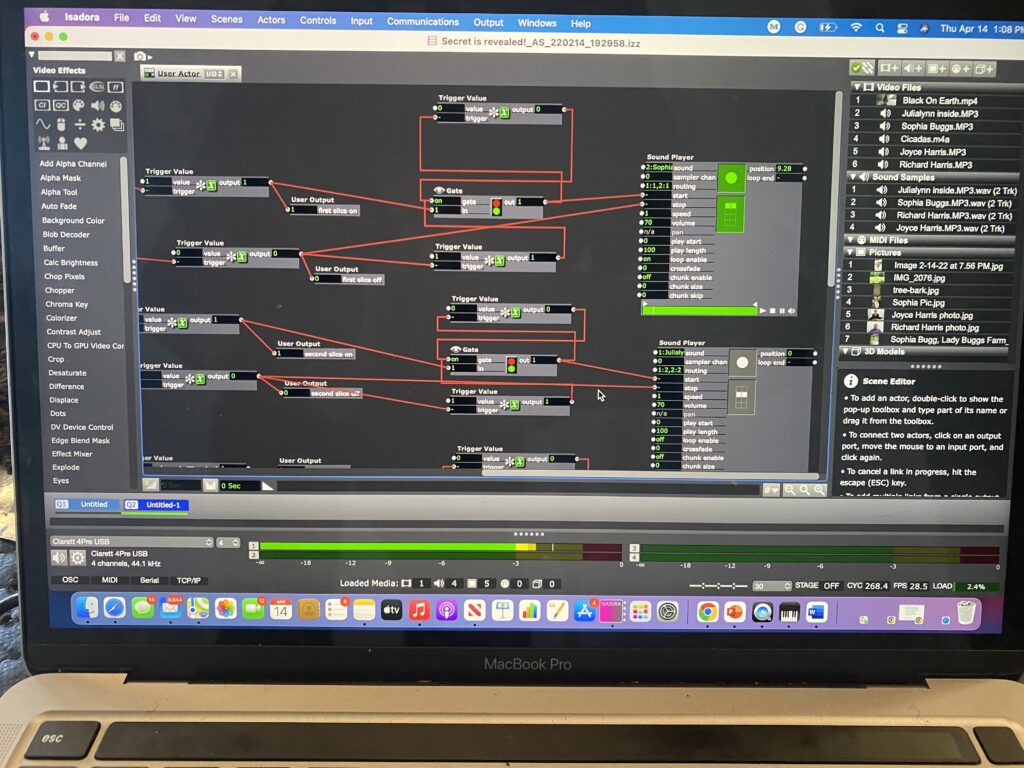

To get my sound to work the way I intended, I designed the trigger values and gates to start the audio when the interface is touched and cut it off when you are not connected. I found that WAV files work better in the sound player. Just an FYI: my MP3 files kept showing up in the movie player instead.
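The gate behavior described above can be sketched outside Isadora. This is a minimal Python illustration, not the actual patch: the `TouchGate` class, the `threshold` value, and the sample sensor readings are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class TouchGate:
    """Starts audio on touch and stops it on release (hypothetical helper)."""

    threshold: float = 0.5  # assumed trigger value; tune to the real sensor
    playing: bool = False

    def update(self, sensor_value: float) -> str:
        """Return 'start', 'stop', or 'hold' for one sensor reading."""
        touched = sensor_value >= self.threshold
        if touched and not self.playing:
            self.playing = True
            return "start"
        if not touched and self.playing:
            self.playing = False
            return "stop"
        return "hold"


gate = TouchGate()
# Made-up readings: no touch, touch, still touching, release, no touch.
events = [gate.update(v) for v in (0.0, 0.9, 0.8, 0.1, 0.0)]
print(events)
```

The gate only reports a change when the touch state flips, so a participant who keeps their hands in place does not restart the interview on every reading.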

Below you can see one of the Sensory Soul Soil experiences with Julialynn and two tomatoes.

In the video below you will witness how I curated the space to be extremely immersive. My goal was to engage in the true labor of a grower not only by hearing their stories but also by embodying their movement. I changed the title because not all materials in this iteration are trees as before. I figured that since I come from a culture of soul food, and food has the nutrients to feed the soul, which come from the soil, why not call it what it is: a Sensory Soul Soil experience.

Cycle 3–Allison Smith

Posted: April 28, 2022 | Filed under: Uncategorized | Tags: dance, Interactive Media, Isadora

I had trouble determining what I wanted to do for my Cycle 3 project, as I was overwhelmed with the possibilities. Alex was helpful in guiding me to focus on one of my previous cycles and lean into one of its elements. I chose to follow up on my Cycle 1 project, which involved live drawing through motion capture of the participant. This was a very glitchy system, though, so I decided to take a new approach.

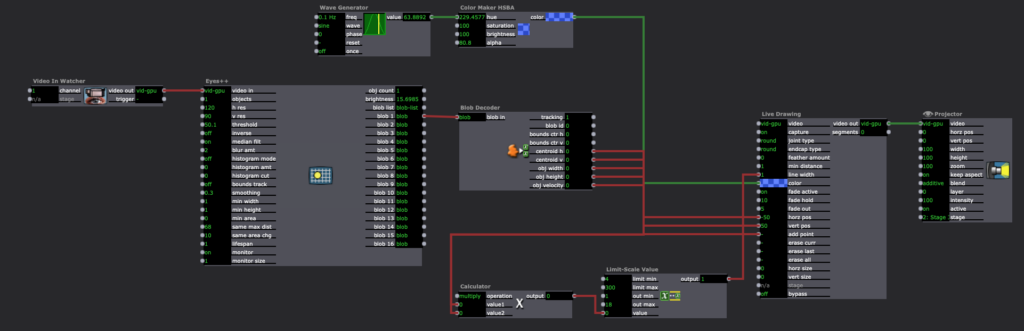

In my previous approach, I used the skeleton decoder to track the position values of the participant’s hands. These numbers were then fed into the live drawing actor. The biggest problem with that, though, was that the skeleton would not track well and the lines didn’t correspond to the person’s movement. In this new iteration, I chose to use a camera, Eyes++, and the blob decoder to track a light that the participant would be holding. I found this to be a much more robust approach, and while it wasn’t what I had originally envisioned in Cycle 1, I am very happy with the results.

I had some extra time and spontaneously decided to add another layer to this cycle, where the participant’s full body would be tracked with a colorful motion blur. With this, they would be drawing but we would also see the movement the body was creating. I felt like this addition leaned into my research in this class on how focusing on one type of interactive system can encourage people to move and dance. With the outline of the body, we were able to see the movement and dancing that the participants probably weren’t aware they were doing. I then wanted to put the drawing on a see-through scrim so that the participant would be able to see both visuals being displayed.

A few surprises came when demonstrating this cycle with people. I told viewers that they could walk through the space and observe however they wanted, but I didn’t consider how their bodies would also be tracked. This brought out an element of play from the “viewers” (aka the people not drawing with the light) that I found most exciting about this project. They would play with different ways their bodies were tracked and would get closer to and farther from the tracker to play with depth. They also played with shadows when they were on the other side of the scrim. My original intention in setting the projections up the way that they were (on the floor in the middle of the room) was to keep the projections from mixing onto the other scrims. I never considered how this would allow space for shadows to join in the play, both in the drawing and in the bodily outlines. I’ve attached a video that illustrates all of the play that happened during the experience:

Something that I found interesting after watching the video was that people were hesitant to join in at first. They would walk around a bit, and they eventually saw their outlines on the screen. It took a few minutes, though, for people to want to draw and to start playing. After that shift happened, there was such a beautiful display of curiosity, innocence, discovery, and joy. Even I found myself discovering much more than I thought I could, and I’m the one who created this experience.

The coding behind this experience is fairly simple, but it took a long time for me to get here. I had one stage for the drawing and one stage for the body outlines. For the drawing, as I mentioned above, I used a Video In Watcher to feed into Eyes++ and the blob decoder. The camera I used was one of Alex’s cameras, as it had a manual exposure setting, which we found out was necessary to keep the “blob” from changing size when the light moved. The blob decoder finds bright points in the video, and depending on the settings of the decoder, it will only track one bright light. Its position and size then fed into a live drawing actor, with a constant change in the colors.
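To illustrate the idea of tracking a single bright light, here is a rough Python sketch. It is not how Eyes++ or the blob decoder is implemented: it simply treats every pixel at or above an assumed brightness threshold as one blob and reports its centroid and size. The function name, the threshold, and the tiny test frame are all made up for the example.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale rows, each pixel 0-255


def brightest_blob(
    frame: Frame, threshold: int = 200
) -> Optional[Tuple[float, float, int]]:
    """Return (x, y, size) for the pixels at or above threshold, or None.

    Simplification: all bright pixels are treated as a single blob.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no light visible in this frame
    return (sum_x / count, sum_y / count, count)


# A made-up 4x4 frame with a 2x2 bright spot in the middle.
frame = [
    [0, 0, 0, 0],
    [0, 250, 255, 0],
    [0, 240, 230, 0],
    [0, 0, 0, 0],
]
print(brightest_blob(frame))
```

The sketch also hints at why manual exposure mattered: if the camera re-exposes as the light moves, more or fewer pixels cross the threshold and the reported size changes even though the light itself has not.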

For the body outline, I used an Orbbec Astra tracker feeding into a luminance key and an alpha mask. The foreground and mask came from the body with no color, and the background was a colorful version of the body with a motion blur. This created the effect of a white silhouette with a colorful blur. I used the same technique for color in the motion blur as I did with the live drawing.

I’m really thankful for how this cycle turned out. I was able to find some answers to my research questions without intentionally thinking about them, and I was also able to discover a lot of new things within the experience and in reflecting upon it. The biggest takeaway I have is that if I want to encourage people to move, it is beneficial to give everyone an active role in exploration rather than having just one person by themselves. I was focused too much on the tool in my previous cycles (drawing, creating music) rather than the importance of community when it comes to losing movement inhibition and leaning into a sense of play. If I were to continue to work on this project, I might add a layer of sound to it using MIDI. I did enjoy the silence of this iteration, though, and am concerned that adding sound would be too much. Again, I am happy with the results of the cycle, and will allow this to influence my projects in the future.

Cycle 3 – Ashley Browne

Posted: April 28, 2022 | Filed under: Uncategorized

For cycle 3, I wanted to finalize the hardware for my video synthesizer and continue to work on the game portion so that the experience would be better suited for both players. This resulted in me making an audio file that was split between two sets of headphones, so that Player 1 had a separate set of instructions from Player 2. Player 1, using the video synthesizer, was instructed to turn the signals on and off and mix the channels to match the tempo of the music playing through the headphones. Player 2 used a Makey Makey as a game controller, with the goal of collecting as many items as possible using the flower character within the 2-minute timer. Since they both played at the same time, Player 1 could choose to hinder Player 2’s game or not. It was fun to watch how people interacted together.

Also, for one of the input signals, I used an Isadora patch with a live webcam feed that watched the players as they worked together.

I received a lot of feedback and reactions to the aesthetics of the experience. Lots of people enjoyed the nostalgic feeling of seeing the CRT TV and being able to interact with it in a new way. People also enjoyed the free stickers I gave out!

Overall, I’m really happy with how the project turned out. In another iteration or cycle, I’d love to further develop the Makey Makey controller so that it is similar to the video synthesizer, for example by using the same hardware casing and tactile buttons.

Cycle 3 Final Presentation, Gabe Carpenter, 4/27/2022

Posted: April 28, 2022 | Filed under: Uncategorized

In cycle 3 I wanted to bring everything together. In cycle 1 I provided the proof of concept of using physical controls to interact with a virtual environment, and showed that I could create these physical tools out of almost anything. In cycle 2, I demonstrated how these tools could be used to control a game environment; however, the environment was very limited and lacked sound. For cycle 3, I really wanted to turn up the heat. My game is based on a popular series called I’m on Observation Duty and shares many of the same concepts. My cycle 3 presentation includes a small story, 3 rooms to play in, and 9 different anomalies to find.

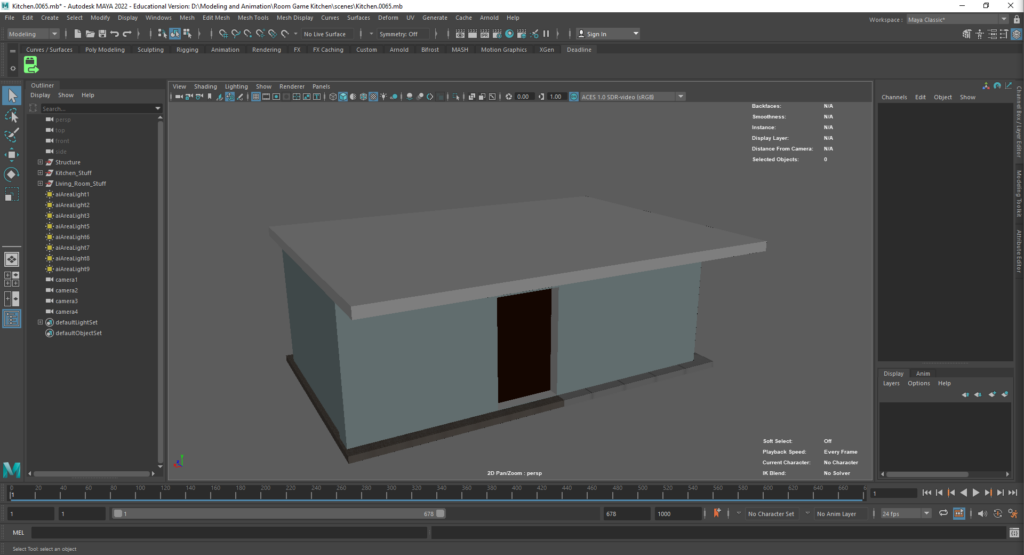

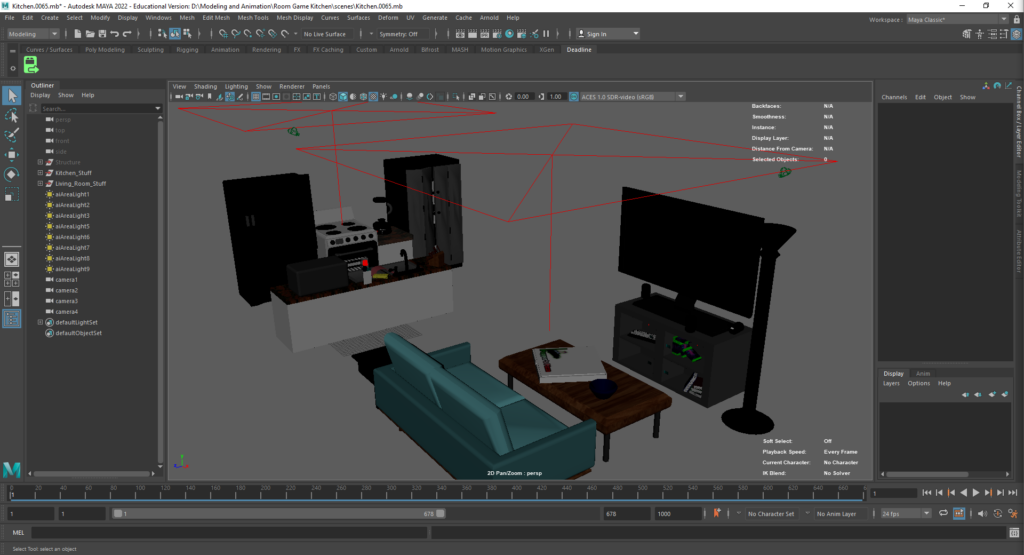

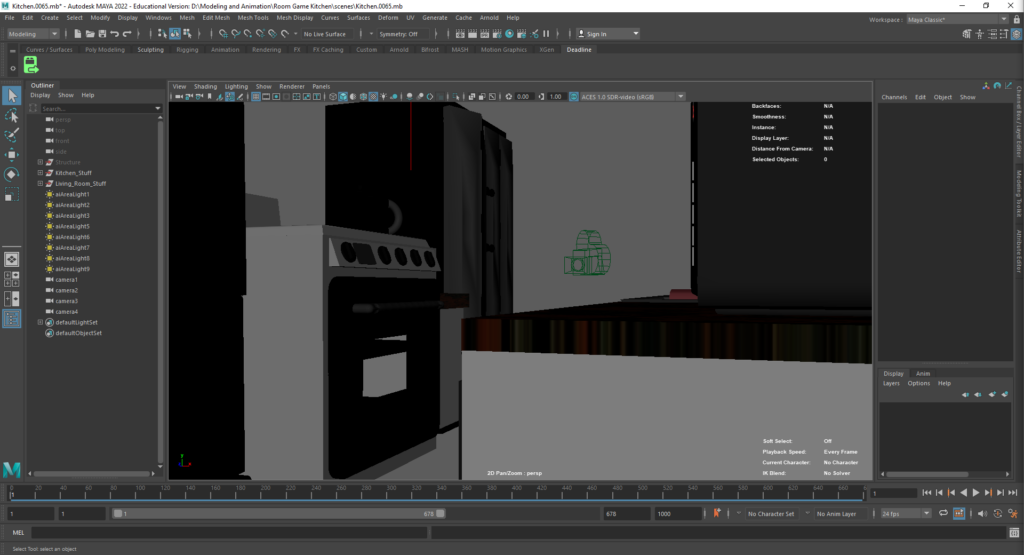

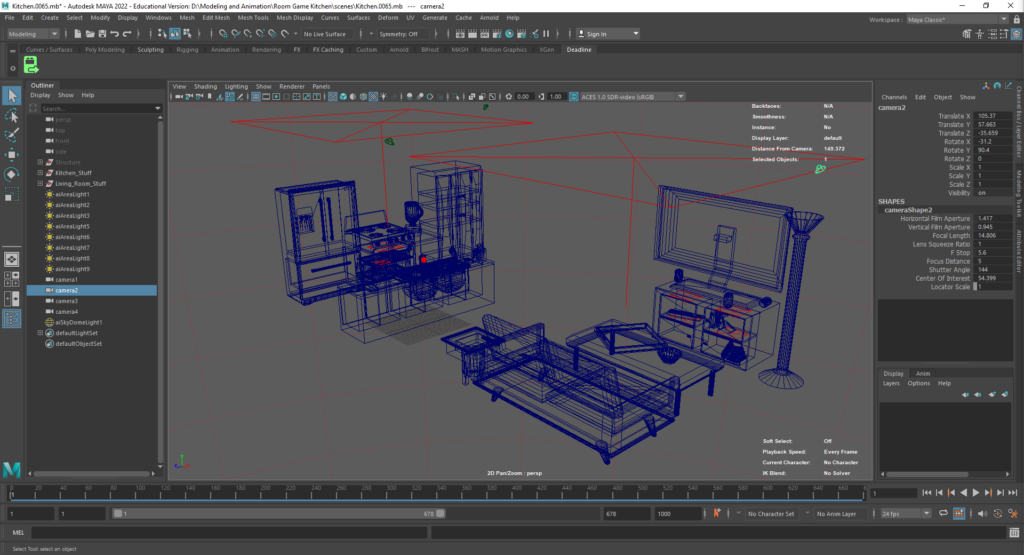

The first step, and arguably the most difficult step, was the animation and modeling itself. I used Maya 2022, as with previous cycles. Each asset in each of the three scenes was modeled from scratch, and no third party assistance was used in the creation of the game world. This meant that a majority of my time was spent modeling and rendering.

The three images above show a raw view of the newly modeled environments. For a look at the absent third room, check out my cycle 2 post where I go more in depth on that room specifically. The last step of the animation process was to choose the placement of the cameras, one of which you can see in the third image above. These were a bit challenging, as I wanted to give the player enough vision to complete the task of the game without giving too much to focus on at once. Texturing and lighting also took a good deal of my time, as I wanted to make things look realistically lit for a night time setting.

The next step was to get the sound and video assets for the final patch. The process I used involved taking multiple renders of each anomaly from each of the camera locations and stitching them together as one large MP4. In the end, each camera feed consisted of a 4-minute video. The Isadora patch would then allow the user to simply switch between which video they were viewing. I then needed to include sound. The music for the game was obtained through a royalty-free music sharing platform, and the voice acting was done by my good friend Justin Green. His work is awesome!

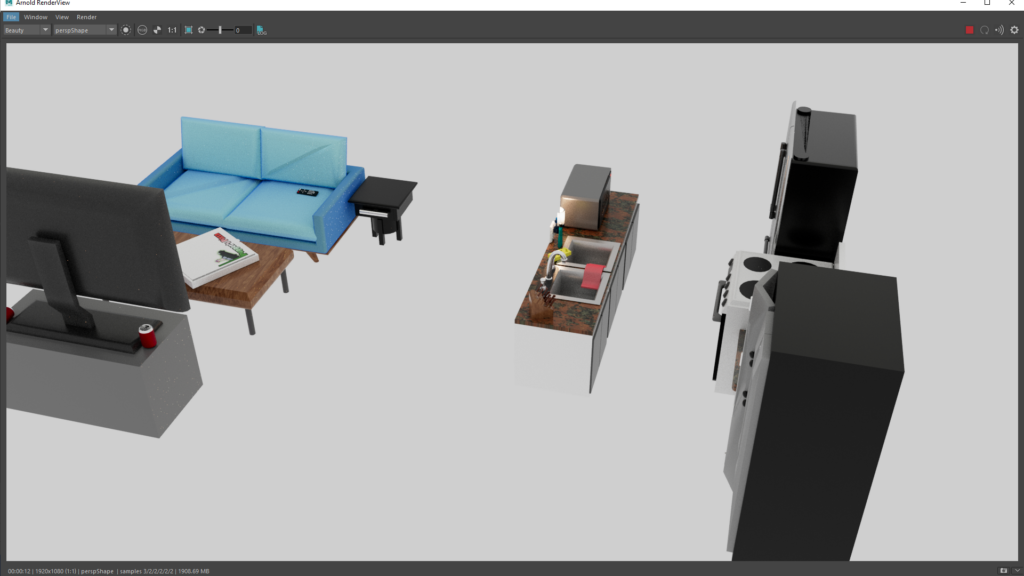

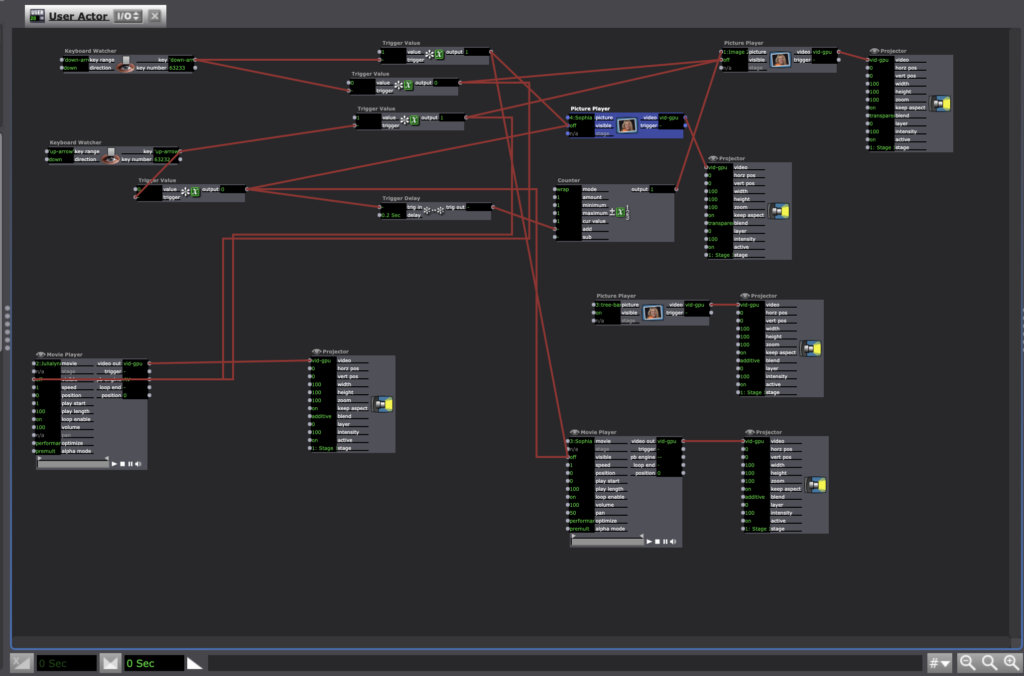

Finally, I needed to write the Isadora patch.

The full patch will be linked here. The patch is split up into 4 sections. The bottom left is the control scheme, composed of keyboard watchers and global value receivers. The right side is all of the video and sound assets, as well as the timers and triggers that control how they are seen. The upper left holds each of the anomalies, plus triggers that let the win condition know whether the player reported correctly or not. The very top of the patch is the win condition, which is made of two parts. The first condition is that the player must correctly identify at least 7 of the 9 anomalies. The second is that the player may not submit more than 11 reports total, to prevent spam reporting. Overall, the actual patch took around 7 hours to write, but this was mainly due to some confusion among all of the broadcasters I ended up using.
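The two-part win condition can be written out as a short check. This is a Python sketch of the rule as stated above, not the Isadora actors themselves; the function and parameter names are made up for the example.

```python
def player_wins(
    correct_reports: int,
    total_reports: int,
    required_correct: int = 7,  # at least 7 of the 9 anomalies
    max_reports: int = 11,      # cap on total reports to discourage spamming
) -> bool:
    """Return True only when both parts of the win condition hold."""
    enough_found = correct_reports >= required_correct
    not_spamming = total_reports <= max_reports
    return enough_found and not_spamming


print(player_wins(correct_reports=7, total_reports=11))  # both conditions met
print(player_wins(correct_reports=9, total_reports=12))  # too many reports
print(player_wins(correct_reports=6, total_reports=6))   # too few anomalies
```

With a cap of 11 reports and a requirement of 7 correct, a winning player can afford at most 4 wrong reports.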

In conclusion, I believe that my project was very successful. I did what I set out to do at the beginning of cycle 1, and had an Isadora patch that ran without issue.

Cycle 2 “If trees could talk, What would they say?”

Posted: April 21, 2022 | Filed under: Uncategorized

In cycle two I wanted to take the experience to a larger scale. I added two more trees to the Makey Makey and worked with my classmate Patrick and instructors Oded and Alex on the sound aspect, which I was most concerned with during this cycle. We configured the four interviews to come from four individual speakers in the Motion Lab by creating four channels of sound in Isadora.

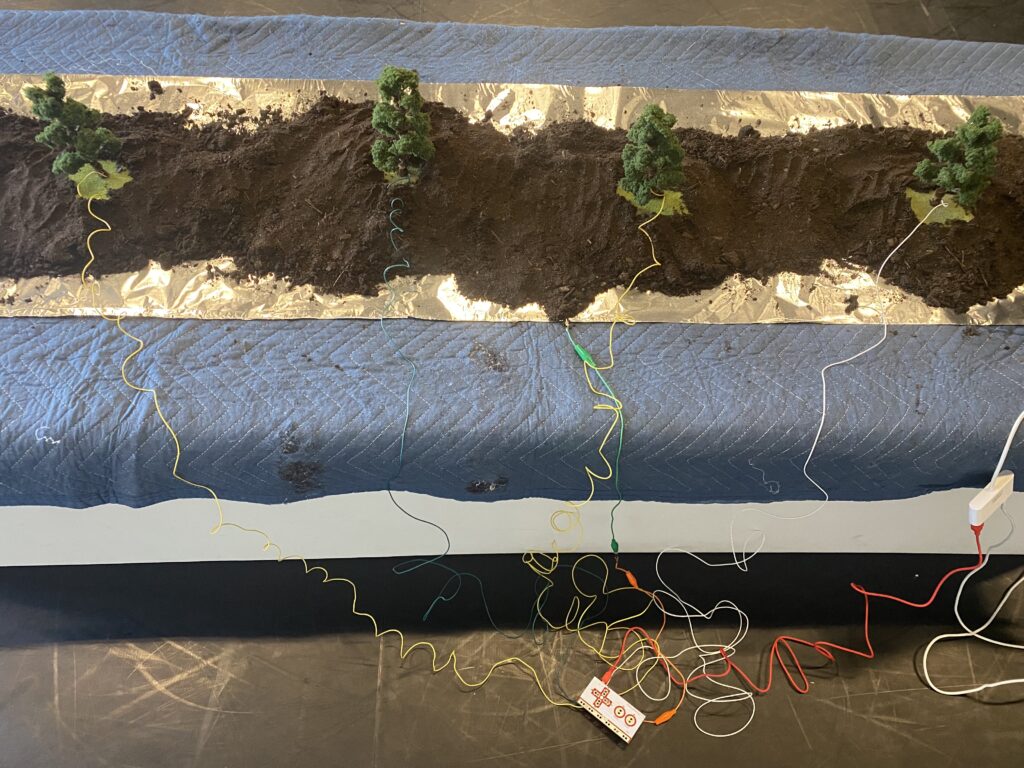

Below is an image of what I imagine to be a “raised bed” and row of electric soil of connectivity.

I used aluminum foil to create a more conductive grounding for the soil.

I learned how to build a gate to trigger each of the sound players so that when participants are not connected to the tree and the soil, neither the audio nor the image of the growers will appear.

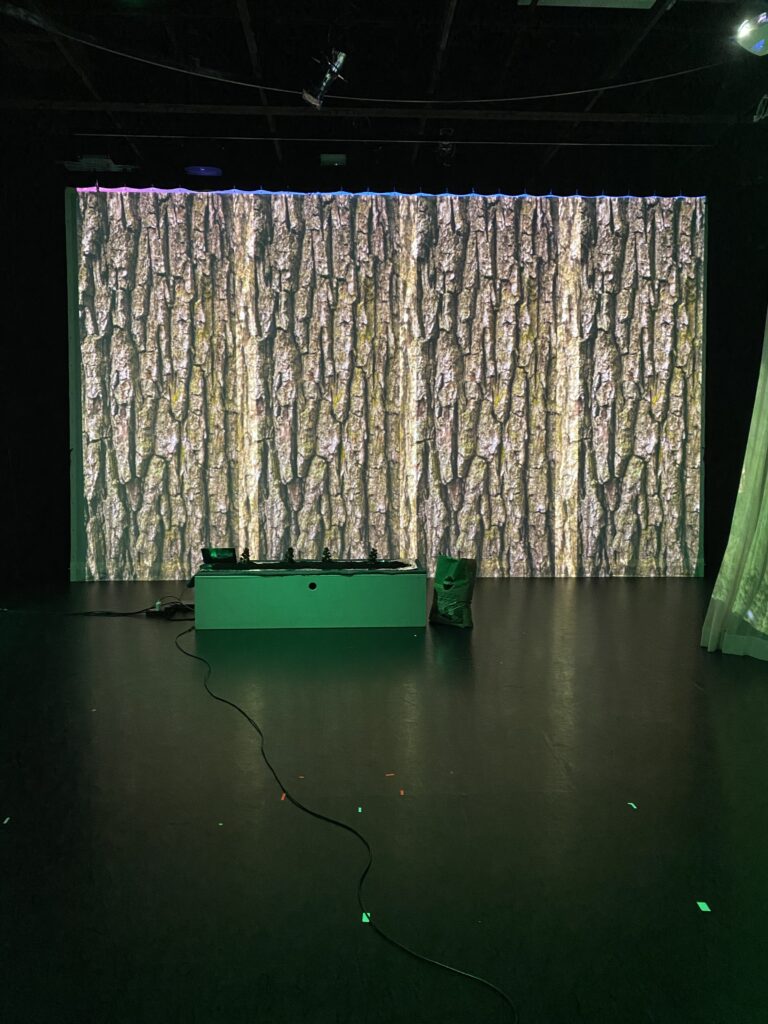

I chose to keep the raised bed box closer to the screen so that participants could have the feeling of looking up at the trees. My goal was to honor the wisdom coming from Black growers by bringing awareness to trees and to how their communication systems, though complex, work together underneath the soil.

I curated the green lighting, with two identical images of an urban garden on scrims that made a half circle in the space to give participants the feeling that they were truly in the thick of a green space. For African diasporic folx greenery has been a mode of protection and healing through imperial, capitalist empires that often destroy these types of environments for their own social gain.

In this iteration, I wanted to get people to move with me. Folx living in urban areas barely make time for cooking, let alone for working in a garden. I want the participants to embody what it feels like to take on some of the labor of growing your own food. Kneeling at the raised bed, pulling weeds, and even harvesting vegetables from the garden are often tough on the back and knees. This kind of labor is seen as servitude in this country. I invite the participants to join me as I do the work. In the 21st century, Black bodies are reclaiming our right to acquire land and provide quality food for our communities.

During this cycle I realized that participants needed a little more incentive to be invited into the space. As a performer I understand that a non-traditional performance asks participants to negotiate how to engage the space. During the introduction, I gave clear instructions on how to engage with the space. However, once I started to move, it was almost as if the participants didn’t want to move because they wanted to pay attention to me.

I figure for cycle 3 I will change my position in the room. It was suggested that I start in front of the raised bed to orient where the main engagement is, do my introduction, and then dance from in front of the screen providing a gesture of invitation into the experience.

Pressure Project #3 Audio

Posted: April 20, 2022 | Filed under: Uncategorized

Unfortunately, I was too into the experience to record it; however, I created an audio ecomemory of corn. Ecomemory is a term coined by Melanie L. Harris in her book Ecowomanism. I wanted to illustrate through sound my African American experience with corn. In this audio project, I wanted to integrate movement, agriculture, and technology. I love to layer sounds to create stories. To begin, I placed Aretha Franklin’s “Spirit in the Dark” as the base sound to provide soul and evoke spirit. I used my voice to give a brief history of Henry Blair, the inventor of the corn planter. I also layered in a recording of Michael Twitty, an African-American Jewish writer, culinary historian, and educator. He is the author of The Cooking Gene.

He sings a song called the “Whistling walk.” This was a song sung by enslaved folks as they walked down a walkway connected to the big house to serve food, to indicate that they were not eating the food. This was layered with the sound of barking dogs with me saying, “hush puppy hush, hush puppy hush.” Hush puppies are made from corn meal and got their name because enslaved folks who liberated themselves would use these corn meal fried delights to keep dogs quiet and off their trail. I then added my grandmother’s voice telling a story of her father and how they grew corn on his land. To close, I ended with Michael Twitty’s reconstructed version of the “Whistling walk,” in which he sang, “no more whistling walk for me no more, no more. No more whistling walk for me many thousands gone.” This reconstructed song reclaims African American food labor for future generations who grow and work with food to heal, while honoring those who had to suffer under chattel slavery.

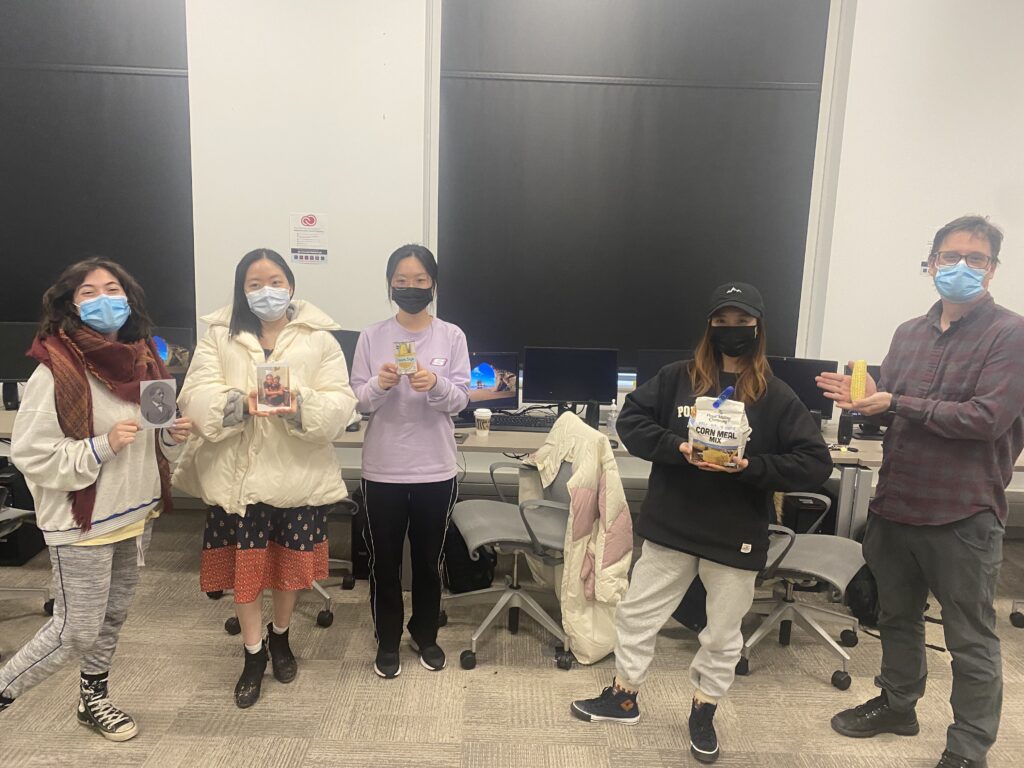

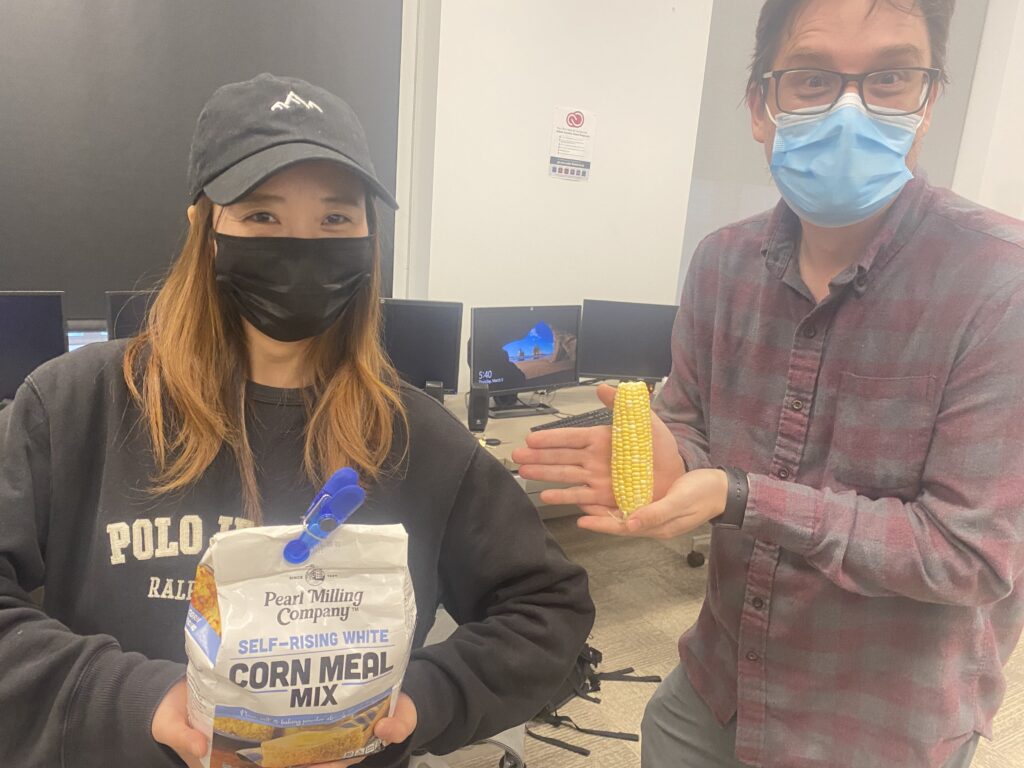

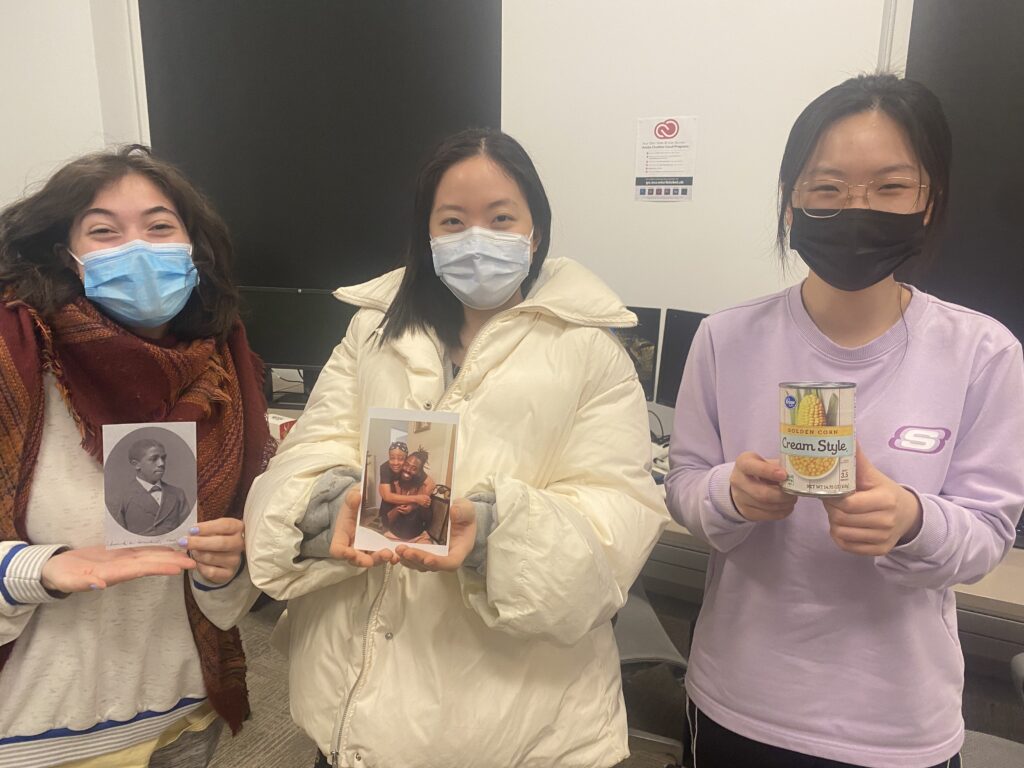

As the audio played, for the movement aspect I passed around a picture of Henry Blair, a photo of my grandmother hugging me, a can of creamed corn, corn meal mix, and a whole ear of corn. These physical objects served as tools of embodiment. I wanted the participants to actively listen, literally, by passing the objects around. This not only put my personal memory in motion but also created new memories while evoking past ones. Sankofa is a Ghanaian proverb meaning looking back to go forward, go back and fetch it.

Below are a few photos of my classmates and Alex (the instructor of the course) holding the memory technology.

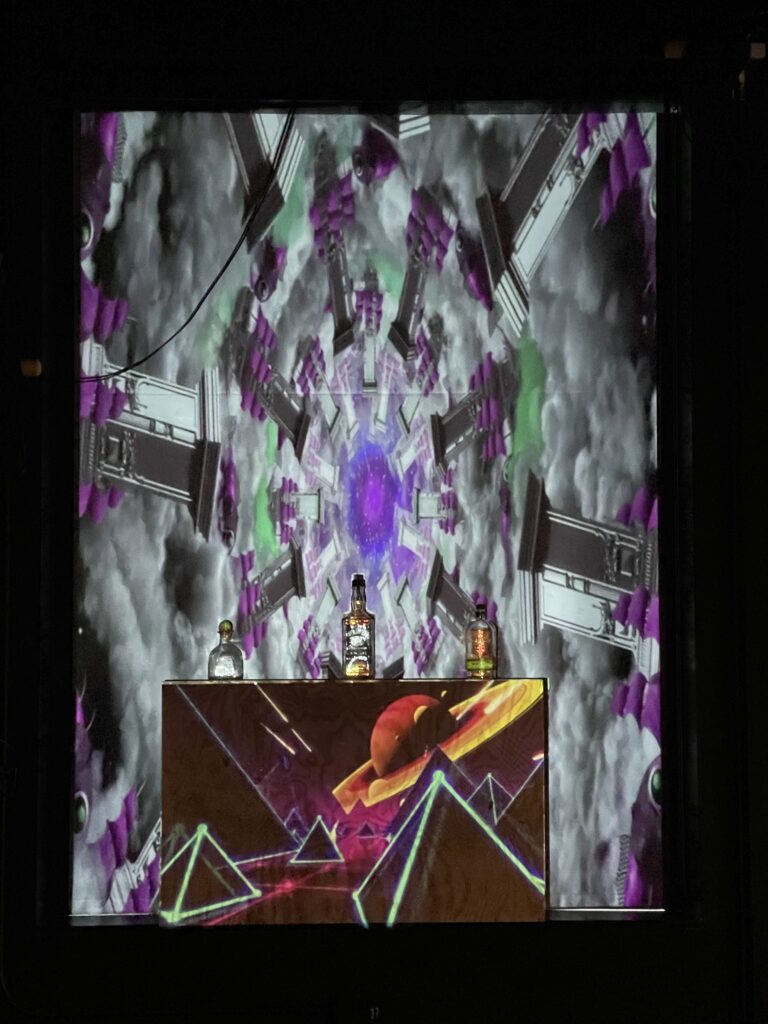

Cycle II – Bar: Mapped by Alec Reynolds

Posted: April 12, 2022 | Filed under: Uncategorized

This iteration of the project was the realization of the first cycle, with physical assets, the framework of the Izzy patch, and actual mapped graphics. More specifically, a section of the ACCAD Motion Lab was selected for its large backdrop (which happens to be a blackout curtain) and versatile lighting opportunities.

There are multiple pieces of this larger projection mapping project:

- Physical Assets: These are the physical pieces that require a mapping and will have a digital projection placed on them uniquely (bottles, screen, box)

- Mapping: The manipulation of content on the software side so that it assimilates the shape of the physical asset

- Communication: Using the effective TouchOSC software, I was able to create a communicating menu that could interact with Isadora and the project patch

- Reaction: The spectacle that occurs to emulate a specialty themed cocktail being ordered.

The projection actor in Isadora is a powerful projection mapping tool and lets you create quick and simple maps for a performance. Bar: Mapped features upwards of 7 maps with unique content going to each different layered asset.

The menu is the device by which each reaction is triggered. TouchOSC channels are enumerated in my Isadora patch, through which OSC listening actors attend to any input from my menu. When a drink is “ordered” it triggers the specific reaction.
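The menu-to-reaction routing can be pictured as a lookup from channel number to reaction. The Python sketch below is only an illustration of that idea and does not use TouchOSC or Isadora: the channel numbers, the drink names, and the assumption that a button sends 1.0 on press and 0.0 on release are all hypothetical.

```python
from typing import Callable, Dict, List

# Record of triggered reactions, standing in for video and sound playback.
triggered: List[str] = []


def make_reaction(name: str) -> Callable[[], None]:
    """Build a handler that logs the reaction instead of playing media."""
    def reaction() -> None:
        triggered.append(name)
    return reaction


# Hypothetical channel-to-drink mapping; the real menu items are not listed.
LISTENERS: Dict[int, Callable[[], None]] = {
    1: make_reaction("drink A"),
    2: make_reaction("drink B"),
}


def on_osc_message(channel: int, value: float) -> bool:
    """Fire the reaction for a channel when its button is pressed."""
    if value < 1.0:      # assumed release message; ignore it
        return False
    handler = LISTENERS.get(channel)
    if handler is None:  # unmapped channel: nothing to trigger
        return False
    handler()
    return True


on_osc_message(1, 1.0)  # press "drink A"
on_osc_message(1, 0.0)  # release, ignored
on_osc_message(7, 1.0)  # unmapped channel, ignored
print(triggered)
```

Ignoring the release message keeps one tap on the menu from firing the same reaction twice.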

The purpose of the project is to create the atmosphere of a nightclub, with music in the background and attention drawn to the bar. During the cycle II performance, critical information and feedback were gathered. The volume of the background music is important so as not to drown out the effects of the reactions. It will also be necessary to change the location of the background music so that it creates more of an atmospheric effect rather than grabbing a user’s attention in a distracting way.

Moving into next cycle the following changes will take place:

- Decrease the length of a reaction (~20 sec for Cycle III)

- Paint physical assets white

- Have a louder audio source for reactions

- Modify position of background music

- Add club-like lighting

- Other secret additions…

The cycle III documentation will host a full breakdown of how the Isadora patch works, along with design choice insights.

Cycle 2 – Ashley Browne

Posted: April 10, 2022 | Filed under: Uncategorized

For cycle 2, I wanted to start thinking about how the experience could be multiplayer and how both players would have to work together toward an end goal. In this current stage, one player uses the video synthesizer to pass a signal to the screen while player 2 directly alters the image using the Makey Makey as a controller. The Makey Makey uses the arrow keys to increase the scale of each sphere or color on screen. The video synthesizer can affect the clarity of the image and its hue and contrast.

Overall, I think this was a great step towards the next iteration of the project. Some of the feedback I received was about introducing sound and how that could connect to both players having specific goals in the experience. Also, I need to continue stress testing the Unity file because after a while it seemed to have bugs.

For cycle 3, I want to finalize what the audio may be like and figure out what the 2nd video input will be for the synthesizer. I also will be finalizing the hardware for the synthesizer, using parts that are sturdy enough for multiple uses, to prepare for the open house.

Cycle 1 Project

Posted: April 10, 2022 | Filed under: Uncategorized

For my cycle 1 project I wanted to keep with the element of reveal. My interests live in earth wisdom and agricultural knowledge rooted in the unseen. Within this iteration of the project I wanted to reveal some of the gems the Black growers in my community are graciously sharing through interviews with me. My graduate studies are based in bridging dance, agriculture, and technology (D.A.T). I am working from a quote from Nikki Giovanni: “If trees could talk, what would they say?”

I am continuously inspired by the soil and its ability to generate life, transmute energy, and produce healing for humans. The soil in this work is central to the grounding of the entire experience. My goal is to connect and ground the participant in the moment to be present with the images and the knowledge provided by the growers.

My setup consists of the Makey Makey, soil, and two tree models for the participants to touch, indicating the wisdom held in trees/people in my community. I wrapped brown pipe cleaners around the interior of the plastic “tree bark” to conduct an electrical current and set off the digital engagement with the Makey Makey. As participants touch the bark of the tree model, their other hand must be grounded, touching the soil. This connection reveals an image of the growers and activates the interviews.

I realized in this cycle that I needed to cut the interviews down to about 30 seconds each, because I imagine this as a gallery installation. I watched the bodies in the room shift and kind of zone out as they tried to listen to the stories being told. Audio seems to be key for the experience to translate how I intend. I would like the participant to know when the experience begins and ends. I got feedback that there should be a more engaging component. This comment came from a dancer, and I agree. In the future I plan to work with the kinetic sensor to provide a movement-based engagement for the participants to embody agricultural knowledge and evoke ecomemories. For my second cycle I plan to project four growers and their stories. I would like to add two more trees and create an environment where each grower can be heard and engaged with simultaneously in a green space.

Here are the inner workings of my Isadora patch.

Cycle 2 – Seasons – Min Liu

Posted: April 9, 2022 | Filed under: Uncategorized

For this cycle, I wanted to create interactive virtual environments that contribute to embodied learning. I chose Seasons as the theme of this project. Participants can use their bodies to interact with natural elements of specific seasons: for example, spring and flowers, summer and rain, autumn and falling leaves, winter and snow.

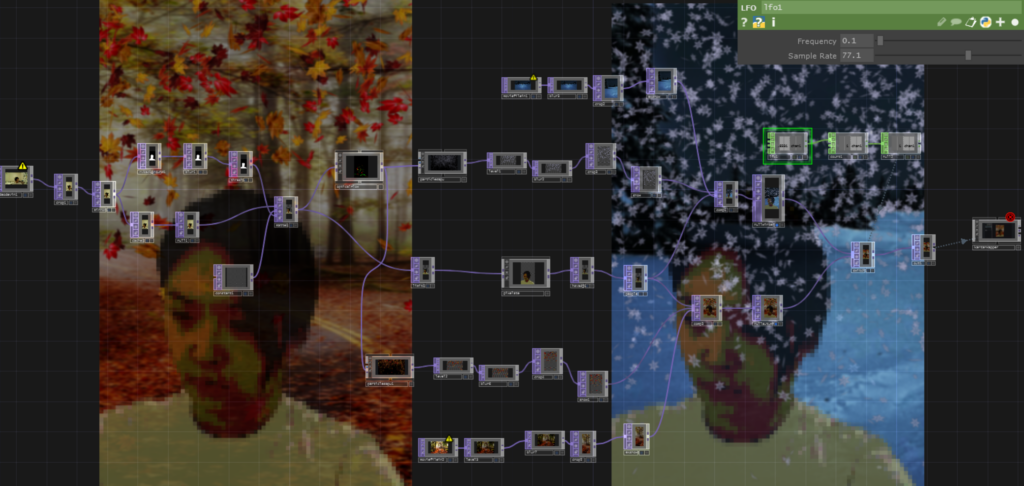

I worked with TouchDesigner this time since Isadora was not friendly to what I wanted to do. I used a webcam as the input device. In TouchDesigner, the NVbackground actor can remove the background and the Optical flow actor can track body movements. With particlesGpu, different kinds of interactive particles can be created. Since I was not familiar with TouchDesigner, I spent a long time figuring out how to transition from one scene to another in time order, which is very easy to do in Isadora. In this cycle, I only created the autumn and winter scenes. I am still learning about other interactive visual effects using different body language, and how to trigger sound by movement in TouchDesigner. This is the TouchDesigner patch:

I was excited to install it in the Motion Lab. To avoid shadows, Oded told me that I could project the image onto the back of the screen and have people stand in front of the screen to interact with the virtual environments. It was magical to me to see both digital figures and human shadows on the curtain, from people standing in front of and behind it.

Participants were happy to interact with snow and leaves. But due to the limitations of the webcam, they had to stand in a specific area or they would be out of the frame. I will try a Kinect next time to see if I can solve the problem. I also learned that lighting is important because it determines the quality of the real-time input video.

Shadrick gave me some nice advice to further develop this learning system. First, I can add some fun filters to the digital figures, like putting hats on them in winter. Second, different countries have different climate characteristics, so I can build environments based on different regions. For the next cycle, I will also combine media-enhanced objects and environments.

Here is the recording of the experience: