Cycle 3: It Takes 3

Posted: May 1, 2025 Filed under: Uncategorized | Tags: Cycle 3, Interactive Media, Interactive Shadow, Isadora, magic mirror

This project was the final iteration of my cycles project, and it has changed quite a bit over the course of three cycles. The base concept stayed the same, but the details and functions changed as I received feedback from my peers and changed my priorities with the project. I even made it so three people could interact with it.

I wanted to focus a bit more on the sonic elements as I worked on this cycle. I started having a lot of ideas on how to incorporate more sonic elements, including adding soundscapes to each scene. Unfortunately, I ran out of time to fully flesh out this particular idea and didn’t want to incorporate a half-baked idea and end up with an unpleasant cacophony of sound. But I did add sonic elements to all of my mechanisms. I kept the chime when the scene became saturated, as well as the first time someone raised their arms to change a scene background. I did add a gate so the chime only played the first time, to keep the sound under control.

A new element I added was a Velocity actor that caused the image inside the silhouettes to explode, and when it did, it triggered a Sound Player with a POP! sound. This pop was important because it drew attention to the explosion, indicating that something happened and that something they did caused it. This actor was also plugged into an Inside Range actor that was set to trigger a riddle at a velocity just below the threshold that triggers the explosion.
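For readers who think in code, the velocity mechanism can be sketched roughly as below. This is not the Isadora patch itself, only a Python approximation of the logic described above. The function name and the threshold numbers are hypothetical, since the actual values used in the patch are not given here.

```python
def velocity_events(velocity, explode_at=50.0, riddle_band=(35.0, 50.0)):
    """Return the events a single velocity reading would fire.

    explode_at and riddle_band are made-up numbers for illustration.
    The riddle band sits just below the explosion threshold.
    """
    events = []
    low, high = riddle_band
    # Inside Range stand-in: fast enough to hint, not fast enough to explode.
    if low <= velocity < high:
        events.append("riddle")
    # At or past the threshold: explode the image and play the pop.
    if velocity >= explode_at:
        events.extend(["explode", "pop"])
    return events


for reading in (10.0, 40.0, 60.0):
    print(reading, velocity_events(reading))
```

Keeping the riddle band directly under the explosion threshold means a user who is moving almost fast enough gets a hint before they get the payoff.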

The other new mechanism I added was based on the proximity to the sensor of one of the users. The z-coordinate data for Body 2 was plugged into a Limit-Scale Value actor to translate the coordinate data into numbers I could plug into the volume input to make the sound louder as the user gets closer. I really needed to spend time in the space with people so I could fine-tune the numbers to the space, which I ended up doing during the presentation when it wasn’t cooperating. I also ran into the issue of needing that Sound Player to not always be on, otherwise that would have been overwhelming. I decided to have the other users have their hands raised to turn it on (it was actually only reading the left hand of Body 3 but for ease of use and riddle-writing, I just said both other people had to have them up).
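The proximity-to-volume mapping can be approximated in a few lines of Python. This is a sketch under assumptions: `limit_scale` imitates what a Limit-Scale Value actor is described as doing here (clamp an input range, then rescale it), and the `near` and `far` distances are hypothetical placeholders for the numbers that had to be tuned in the space.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then map it linearly to the output range."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def proximity_volume(z, hand_raised, near=1.0, far=4.0):
    """Volume (0-100) for Body 2 at depth z; silent unless the hand gate is open.

    near and far are hypothetical distances, in whatever units the sensor reports.
    """
    if not hand_raised:
        return 0.0
    # Reversed output range: a smaller z (closer to the sensor) gives a louder sound.
    return limit_scale(z, near, far, 100.0, 0.0)


print(proximity_volume(2.5, hand_raised=True))   # halfway between near and far
print(proximity_volume(2.5, hand_raised=False))  # gate closed, so silent
```

Fine-tuning to a room then comes down to adjusting `near` and `far` until the quietest and loudest spots land where people actually stand.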

I have continued adjusting the patch for the background change mechanism (raising the right hand of Body 1 changes the silhouette background and raising the left hand changes the background). My main focus here was making the gates work so it only changes one time while the hand is raised (gate doesn’t reopen until hand goes down), so I moved the gate to be in front of the Random actor in this patch. As I reflect on this, I think I know why it didn’t work; I didn’t program it to turn the gate on based on hand position, it only holds the trigger until the first one is complete, which is pretty much immediately. I think I would need an Inside Range actor to tell the gate to turn on when the hand is below a certain position, or something to that effect.
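The gate behavior described above (fire once when the hand goes up, then stay closed until the hand comes back down) is essentially a latch with two thresholds. Here is a minimal Python sketch of that idea. The class name and the threshold values are hypothetical, and the real patch would build this from Isadora actors rather than code.

```python
class OneShotGate:
    """Fires once per hand raise; re-arms only after the hand drops again."""

    def __init__(self, raise_above=0.6, rearm_below=0.4):
        # Hypothetical hand-height thresholds. Using two separate values
        # keeps the gate from flickering when the hand hovers near one line.
        self.raise_above = raise_above
        self.rearm_below = rearm_below
        self.armed = True

    def update(self, hand_y):
        """Return True only on the reading where the raise is first detected."""
        if self.armed and hand_y > self.raise_above:
            self.armed = False  # close the gate until the hand comes down
            return True
        if not self.armed and hand_y < self.rearm_below:
            self.armed = True   # hand is down, so the next raise can fire
        return False


gate = OneShotGate()
readings = [0.2, 0.7, 0.8, 0.9, 0.3, 0.7]
print([gate.update(y) for y in readings])
```

The key difference from a gate that only waits for the previous trigger to finish is that the re-arm condition depends on hand position, not on time.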

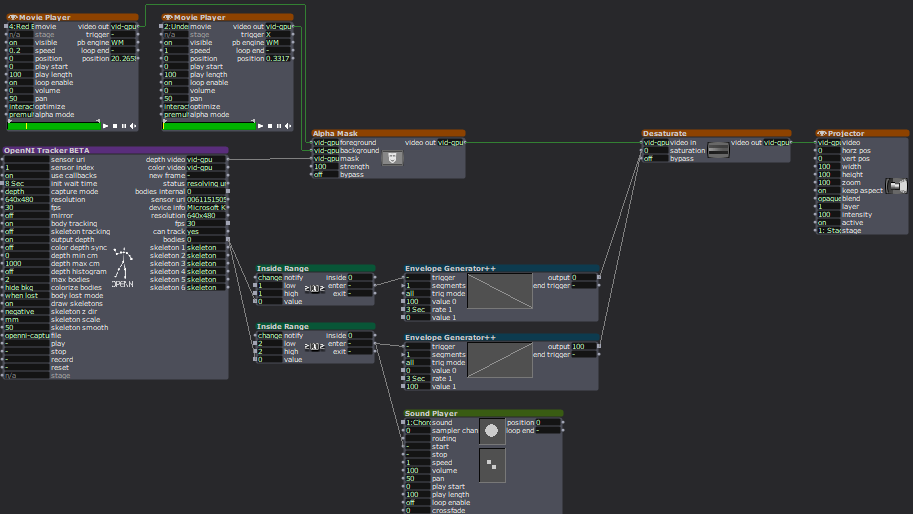

I sat down with Alex to work out some issues I had been having, such as my transparency issue. This was happening because the sensor was set to colorize the bodies, so Isadora was seeing red and green silhouettes. This was problematic because the Alpha Mask looks for white, so the color was not allowing a fully opaque mask. We fixed this with the addition of an HCL Adjust actor between the OpenNI Tracker and the Alpha Mask, with the saturation fully down and the luminance fully up.
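To see why a colored silhouette produces a partly transparent mask, it can help to look at the numbers. The sketch below is an illustration under assumptions: it treats mask opacity as pixel brightness (a common Rec. 601 luma weighting is used as a stand-in), and it uses Python's `colorsys` HLS conversion in place of the HCL Adjust actor, which may work differently internally.

```python
import colorsys


def luma(rgb):
    """Approximate brightness of an RGB pixel (components in 0.0-1.0)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def whiten(rgb):
    """Saturation fully down, lightness fully up: any color becomes white."""
    hue, _lightness, _saturation = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(hue, 1.0, 0.0)


red_body = (1.0, 0.0, 0.0)
print(luma(red_body))          # well below 1.0, so the mask is only partly opaque
print(whiten(red_body))        # pure white
print(luma(whiten(red_body)))  # full brightness, so the mask is fully opaque
```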

The other issue Alex helped me fix was the desaturation mechanism. We replaced the Envelope Generators with Trigger Value actors plugged into a Smoother actor. This made for smooth transitions between changes because it allowed Isadora to make changes from where it’s already at, rather than from a set value.
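The difference between the two approaches can be sketched in Python. This is a simplified model, not how Isadora implements either actor: the envelope is modeled as a fixed ramp that always begins at its own start value, and the smoother as a simple easing step that moves a fraction of the remaining distance each frame. The function names and the `amount` parameter are hypothetical.

```python
def run_envelope(start, end, steps):
    """Fixed ramp: always begins at `start`, whatever the current value is."""
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def run_smoother(current, target, steps, amount=0.2):
    """Ease toward `target` from wherever the value currently is."""
    values = []
    for _ in range(steps):
        current = current + (target - current) * amount
        values.append(current)
    return values


# Suppose saturation is at 40, partway through a fade, when a desaturate fires.
print(run_envelope(100, 0, 5))  # begins at 100: the image jumps up before fading
print(run_smoother(40, 0, 5))   # begins just under 40 and keeps falling
```

In this model the envelope's jump back to its start value is the kind of brief resaturation that an interrupted fade produces, while the smoother never moves away from its target.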

The last big change I made to my patch was the backgrounds. Because I was struggling to find decent quality images of the right size for the shadow silhouettes, I took the information of one image that looked nice and created six simple backgrounds in Procreate. I wanted them to have bold colors and sharp lines so they would stand out against the moving backgrounds and have enough contrast both saturated and not. I also decided to use recognizable location-based backdrops since the water and space backdrops seemed to elicit the most emotional responses. In addition to the water and space scenes, I added a forest, mountains, a city, and clouds rolling across the sky.

These images worked really well against the realistic backgrounds. It was also fun to watch the group react, especially to the pink scene. They got really excited if they got a sparkle full and clear on their shadow. There was also a moment where they thought the white dots in the rainbow and purple scenes were a puzzle, which could be a cool idea to explore. I did have an idea to create a little bubble-popping game in a scene with a zoomed-in bubble as the main background.

The reactions I got were overwhelmingly positive and joyful. There was a lot of laughter and teamwork during the presentation, and they spent a lot of time playing with it. If we had more time, they likely would have kept playing and figuring it out, and probably would have loved a fourth iteration (I would have loved making one for them). Michael specifically wanted to learn it well enough to manipulate it, especially to match up certain backgrounds (I would have had them go in a set order because accomplishing this at random would be difficult, though not impossible). Words like “puzzle” and “escape room” were thrown around during the post-experience discussion, which is what I was going for with the riddles I added to help guide users.

The most interesting feedback I got was from Alex who said he had started to experience himself ‘in third person’. What he means by this is that he referred to the shadow as himself while still recognizing it as a separate entity. If someone crossed in front of the other, the sensor stopped being able to see the back person and ‘erased’ them from the screen until it re-found them. This prompted that person to often go “oh look I’ve been erased”, which is what Alex was referring to with his comment.

I’ve decided to include my Cycle 3 score here as well, because it has a lot of things I didn’t get to explain here, and was functionally my brain for this project. I think I might go back to this later and give some of the ideas in there a whirl. I think I’ve learned enough Isadora that I can figure out a lot of it, particularly those pesky gates. It took a long time, but I think I’m starting to understand gate logic.

The presentation was recorded in the MOLA so I will add that when I have it :). In the meantime, here’s the test video for the velocity-explode mechanism, where I subbed in a Mouse Watcher to make my life easier.

Cycle 1: It Takes Two Magic Mirror

Posted: April 1, 2025 Filed under: Uncategorized | Tags: cycle 1, Interactive Media, Isadora, magic mirror

My project is a magic mirror of sorts that allows for interaction via an Xbox One Kinect depth sensor. The project is called “It Takes Two” because it takes two people to activate. In its single-user state, the background and user’s shadow are desaturated with a desaturation actor linked to the “Bodies” output of the OpenNI Tracker BETA actor. When the sensor detects only one body (via an Inside Range actor), it sets the saturation value to 0. When a second body is detected, it sets the saturation value to 100. I have used envelope generators to ensure a smooth fade in and fade out of saturation.
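The core rule of the patch is small enough to write out. The Python below is only an illustration of the logic described above. The function name is hypothetical, and treating zero detected bodies the same as one body is an assumption, since that case is not described here.

```python
def saturation_target(body_count, bodies_needed=2):
    """Saturation the scene should fade toward for a given body count.

    Assumption: an empty scene (0 bodies) stays desaturated, like 1 body.
    """
    return 100 if body_count >= bodies_needed else 0


for count in (0, 1, 2, 3):
    print(count, saturation_target(count))
```

Note that the rule only sees the count, so anything the sensor mistakes for a body will saturate the scene just as a real second person would.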

The above patch was added onto the shadow mechanism I created. I did some research on how to achieve this, and experimented with a few different actors before concluding that I needed an Alpha Mask. The LumaKey actor was one I played with briefly but it did not do what I needed. I found a tutorial by Mark Coniglio, which is how I ended up in alpha-land, and it worked beautifully. I still had to tinker with the specific settings within the OpenNI Tracker (and there is still more to be fine-tuned), but I had a functional shadow.

My goal with Cycle 1 was to establish the base for the rest of my project so I could continue building off it. I sectioned off my time to take full advantage of lab time to get the majority of my work done. I stuck to this schedule well and ended Cycle 1 in a good position, ready to take on Cycle 2. I gave myself a lot of time for troubleshooting and fine-tuning, which allowed me to work at a steady, low-stress pace.

I did not anticipate having so much difficulty finding colorscape videos that would provide texture and contrast without being overwhelming or distracting. I spent about 45 minutes of my time looking for videos and found a few. I also ended up reusing some video from Pressure Project 2 that worked nicely as a placeholder and resulted in some creative insight from a peer during presentations. I will have to continue searching for videos, and I am also considering creating colored backdrops and experimenting with background noise. I spent about 20 minutes of my time searching for a sound effect to play during the saturation of the media. I wanted a sound to draw the users’ attention to the changes that are happening.

Overall, the reactions from my peers were joyful. They were very curious to discover how my project worked (there was admittedly not much to discover at this point as I only have the base mechanisms done). They seemed excited to see the next iteration and had some helpful ideas for me. One idea was to lean into the ocean video I borrowed from PP2, which they recognized, causing them to expect a certain interaction to occur. I could have different background themes that have corresponding effects, such as a ripple effect on the ocean background. This would be a fun idea to play with for Cycle 2 or 3.

The other suggestions matched closely to my plans for the next cycles. I did not present on a projector because my project is so small at the moment, but they suggested a bigger display would better the experience (I agree). My goal is to devise a setup that would fit my project. In doing so, I need to keep in mind the robustness of my sensor. I needed a very plain background, as it liked to read parts of a busy background as a body, and occasionally refused to see a body. Currently, I think the white cyc in the MOLA would be my best bet because it is plain and flat.

The other major suggestion was to add more things to interact with. This is also part of my plan and I have a few ideas that I want to implement. These ‘easter eggs’, we’ll call them, will also be attached to a sound (likely the same magical shimmer). Part of the feedback I received is that the sound was a nice addition to the experience. Adding a sonic element helped extend the experience beyond my computer screen and immerse the user into the experience.

This is a screen recording I took, and it does a great job demonstrating some of the above issues. I included the outputs of the OpenNI Tracker actor specifically to show the body counter (the lowest number output). I am the only person in the sensor’s view, but it is reading something behind me as a body. Because it saw the object behind me as a body, Isadora responded as such and saturated the image, so I adjusted the sensor to get rid of the false reading and demonstrate the desaturation. The video also shows how the image resaturates briefly before desaturating when I step out and step back in, which is a result of the envelope generator. (The sound was not recording properly; please see above for a sound sample.)

My score was my best friend during this project. I had it open any time I was working on this project. I ended up adding to it regularly throughout the process. It became a place where I collected my research via saved links and tracked my progress with screenshots of my Isadora stage. It helped me know where I was at with my progress so I knew what to work on next time I picked it up and how to pace myself across this cycle by itself and all three cycles together. I even used it to store ideas I had for this or a future cycle. I will continue to build on this document in future cycles, as it was incredibly helpful in keeping my work organized.