Cycle 3 – Interactive Immersive Radio

Posted: May 1, 2025 Filed under: Uncategorized | Tags: Cycle 3

I started my cycle three process by reflecting on the performance and value action of the last cycle. I identified some key resources that I wanted to use and continue to explore. I also decided to focus a bit more on the scoring of the entire piece, since many of my previous projects were very loose and open-ended. I was drawn to two specific elements based on the feedback I had received previously. One was the desire to “play” the installation more like a traditional instrument. This was something that I had deliberately been trying to avoid in past cycles, so I decided maybe it was about time to give it a try and make something a little more playable. The other was the desire to discover hidden capabilities and “solve” the installation like a puzzle. Using these two guiding principles, I began to create a rough score for the experience.

In addition to using the basic MIDI instruments, I also wanted to experiment with some backing tracks from a specific song, in this case, Radio by Sylvan Esso. In a previous project for the Introduction to Immersive Audio class, I used a program called SpectraLayers to “un-mix” a pre-recorded song. This process takes any song and attempts to separate the various instruments into their isolated tracks, to varying degrees of success. It usually takes a few tries, experimenting with various settings and controls, to get a good sounding track. Luckily, the program allows you to easily unmix and separate track components and re-combine elements to get something that is fairly close to the original. For this song I was able to break it down into four basic tracks: Vocals, Bass, Drums and Synth. The end result is not perfect by any means, but it was good enough to get the general essence of the song when played together.

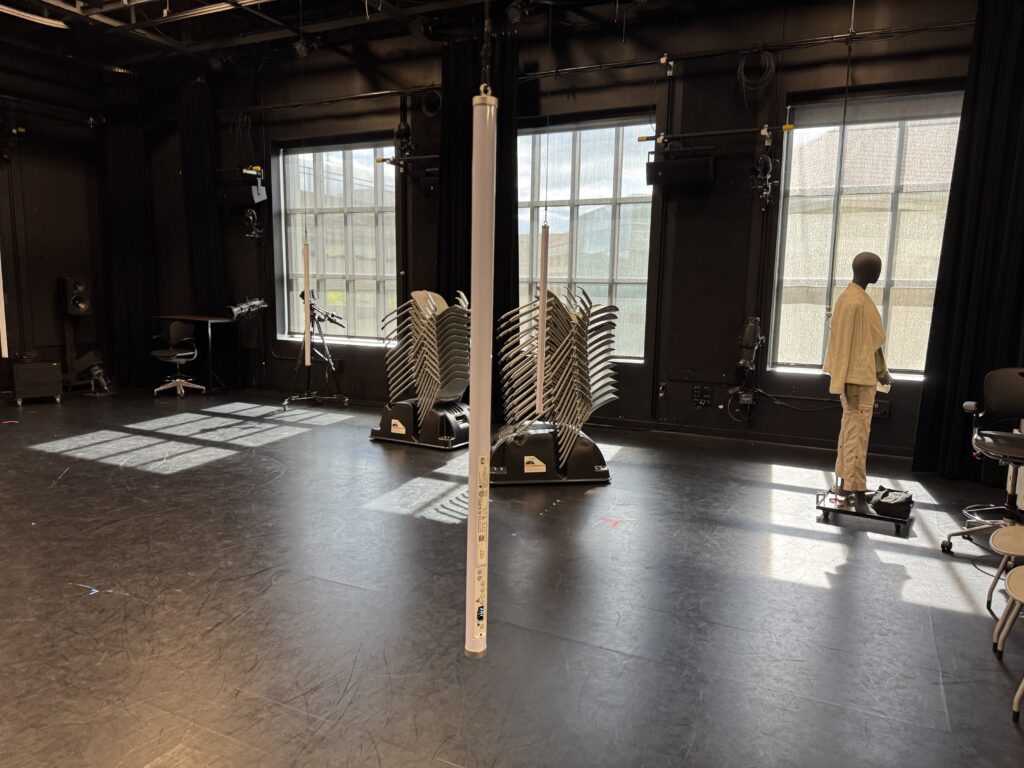

Another key element I wanted to focus on was the lighting and general layout and aesthetic of the space. I really enjoyed the Astera Titan Tubes that I used in the last cycle and wanted to try a more integrated approach to triggering the lighting console from Touch Designer. I received some feedback that people were looking forward to a new experience from previous cycles, so that motivated me to push myself a little harder and come up with a different layout. The light tubes have various options for mounting them and I decided to hang them from the curtain track to provide some flexibility in placement. Thankfully, we had the resources already in the Motion Lab to make this happen easily. I used spare track rollers and some tie-line and clips left over from a previous project to hang the lights on a height adjustable string that ended up working really well. This took a few hours to put together, but I think this resource will definitely get used in the future by people in the Motion Lab.

In order to make the experience “playable” I decided to break out the bass line into its component notes and link the trigger boxes in Touch Designer to correspond to the musical score. This turned out to be the most difficult part of the process. For starters, I needed to quantify the number of notes and the cycles they repeat in. Essentially, this broke down into 4 notes, each played 5 times sequentially. Then I also needed to map the boxes that would trigger the notes into the space. Since the coordinates are Cartesian x-y and I wanted the boxes arranged in a circle, I had to figure out a way to extract the location data. I didn’t want to do the math, so I decided to use my experience in Vectorworks as a resource to map out the note score. This ended up working out pretty well and the resulting diagram has an interesting design aesthetic itself. My first real-life attempt in the Motion Lab worked as planned, but actually playing the trigger boxes in time was virtually impossible. I experimented with various sizes and shapes, but nothing worked perfectly. I settled on some large columns that a body would easily trigger.
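
For anyone who would rather compute the circle than draw it, the layout can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the note names, the 3 m radius, the centre point and the reading of the score as "note 1 five times, then note 2 five times, and so on" are all hypothetical, and the function is not part of the actual Touch Designer or Vectorworks files.

```python
import math

def circle_positions(count, radius, center=(0.0, 0.0), start_deg=0.0):
    """Return `count` (x, y) points evenly spaced around a circle."""
    cx, cy = center
    points = []
    for i in range(count):
        angle = math.radians(start_deg + i * 360.0 / count)
        points.append((cx + radius * math.cos(angle),
                       cy + radius * math.sin(angle)))
    return points

# Hypothetical score: 4 notes, each played 5 times in a row = 20 trigger boxes.
NOTES = ["N1", "N2", "N3", "N4"]
REPEATS = 5
sequence = [note for note in NOTES for _ in range(REPEATS)]

# Pair every step of the score with an x-y position (radius is an assumption).
layout = list(zip(sequence, circle_positions(len(sequence), radius=3.0)))

for note, (x, y) in layout:
    print(f"{note}: x={x:.2f}, y={y:.2f}")
```

Each printed x-y pair could then be typed into a trigger box as its centre position.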

The last piece was to link the lighting playback with the Touch Designer triggers. I had some experience with this previously and more recently have been exploring the OSC functionality more closely. It took a few tries, but I eventually sent the correct commands and got the results I was looking for. Essentially, I programmed all the various lighting looks I wanted to use on “submaster” faders and then sent the commands to move the faders. This allowed me to use variable “fade times” by using the “Lag” CHOP in Touch Designer to control the on and off rate of each trigger. I took another deep dive into the ETC Eos virtual media server and pixel mapping capabilities, which was sometimes fun and sometimes frustrating. It’s nice to have multiple ways to achieve the same effect, but it was sometimes difficult to find the right method based on how I wanted to layer everything. I also maxed out the “speed” parameter, which was unfortunate because I could not match the BPM of the song, even though the speed was set to 800%.
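
The fade idea can be sketched outside of Touch Designer as well. The Python below is a simplified stand-in, not the Lag CHOP's actual algorithm: it eases a 0 to 1 trigger toward its target with separate up and down times, then pairs each level with an OSC address. The `/eos/sub/<number>` address with a 0.0 to 1.0 level is my assumption about the Eos OSC syntax and should be checked against the console documentation; the submaster number and timings are hypothetical.

```python
def lagged_levels(targets, lag_up, lag_down, dt):
    """Ease toward each target value, using separate up/down lag times (seconds)."""
    level = 0.0
    out = []
    for target in targets:
        lag = lag_up if target > level else lag_down
        # Fraction of the remaining distance covered this step; lag <= 0 jumps.
        alpha = 1.0 if lag <= 0 else min(1.0, dt / lag)
        level += (target - level) * alpha
        out.append(level)
    return out

def submaster_message(sub, level):
    """Build an (address, value) pair; the address format is an assumption."""
    return (f"/eos/sub/{sub}", max(0.0, min(1.0, level)))

# Hypothetical trigger: on for 5 frames, off for 5 frames, at 10 frames per second.
trigger = [1.0] * 5 + [0.0] * 5
for level in lagged_levels(trigger, lag_up=0.5, lag_down=1.0, dt=0.1):
    print(submaster_message(3, level))
```

A longer `lag_down` than `lag_up` gives a look that snaps on quickly and fades out slowly.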

I was excited for the performance and really enjoyed the immersive nature of the suspended tubes. Since I was the last person to go, we were already running way over on time and I was a bit rushed to get everything set up. I had decided earlier that I wanted to completely enclose the inner circle with black drape. This involved moving all 12 curtains in the lab onto different tracks, something that I knew would take some time, and I considered cutting it since we were running behind schedule. I’m glad I stuck to my original plan and took the extra 10 minutes to move things around because the black void behind the light tubes really increased the immersive qualities of the space. I enjoyed watching everyone explore and try to figure out how to activate the tracks. Eventually, everyone gathered around the center and the entire song played. Some people did run around in the circle and activate the “bass line” notes, but the connection was never officially made. I also hid a rainbow light cue in the top center that was difficult to activate. If I had a bit more time to refine, I would have liked to make more “easter eggs” hidden around the space. Overall, I was satisfied with how the experience was received and look forward to possible future cycles and experimentation.

Cycle 3: It Takes 3

Posted: May 1, 2025 Filed under: Uncategorized | Tags: Cycle 3, Interactive Media, Interactive Shadow, Isadora, magic mirror

This project was the final iteration of my cycles project, and it has changed quite a bit over the course of three cycles. The base concept stayed the same but the details and functions changed as I received feedback from my peers and changed my priorities with the project. I even made it so three people could interact with it.

I wanted to focus a bit more on the sonic elements as I worked on this cycle. I started having a lot of ideas on how to incorporate more sonic elements, including adding soundscapes to each scene. Unfortunately I ran out of time to fully flesh out this particular idea and didn’t want to incorporate a half baked idea and end up with an unpleasant cacophony of sound. But I did add sonic elements to all of my mechanisms. I kept the chime when the scene became saturated, as well as the first time someone raised their arms to change a scene background. I did add a gate so this only happened the first time, to control the sound.

A new element I added was a Velocity actor that caused the image inside the silhouettes to explode, and when it did, it triggered a Sound Player with a POP! sound. This pop was important because it drew attention to the explosion to indicate that something happened and something they did caused it. This actor was also plugged into an Inside Range actor that was set to trigger a riddle at a certain velocity just below the range to trigger the explosion.

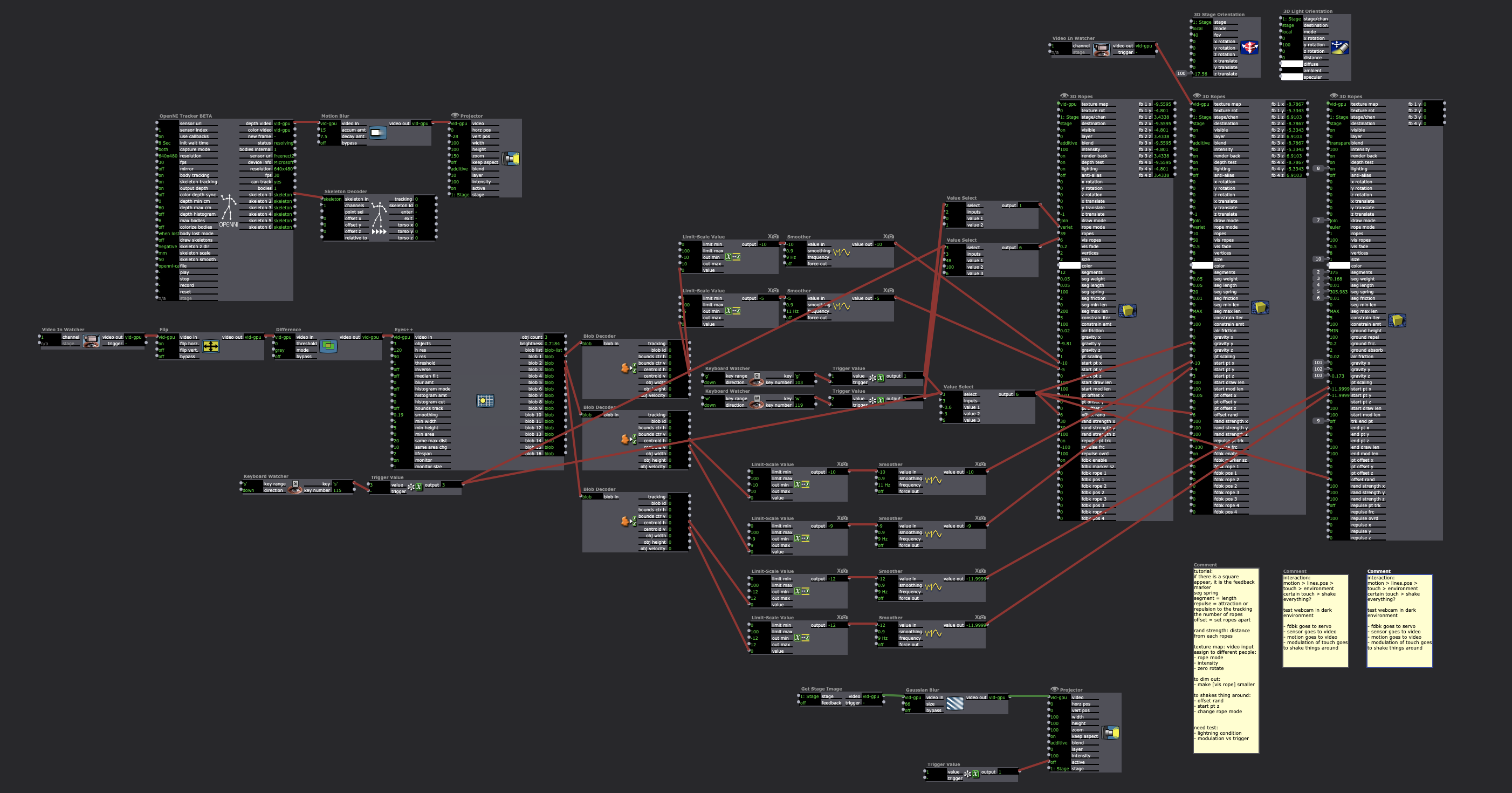

The other new mechanism I added was based on the proximity to the sensor of one of the users. The z-coordinate data for Body 2 was plugged into a Limit-Scale Value actor to translate the coordinate data into numbers I could plug into the volume input to make the sound louder as the user gets closer. I really needed to spend time in the space with people so I could fine-tune the numbers to the space, which I ended up doing during the presentation when it wasn’t cooperating. I also ran into the issue of needing that Sound Player to not always be on, otherwise that would have been overwhelming. I decided to have the other users have their hands raised to turn it on (it was actually only reading the left hand of Body 3 but for ease of use and riddle-writing, I just said both other people had to have them up).
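
The proximity-to-volume mapping boils down to a clamp followed by a linear rescale. Here is a rough Python sketch of that idea, written as an illustration rather than a copy of the Isadora actor; the 1 m to 4 m depth range and the 0 to 100 volume range are hypothetical numbers that would need tuning in the actual space.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then map it linearly onto [out_min, out_max]."""
    clamped = max(in_min, min(in_max, value))
    t = (clamped - in_min) / (in_max - in_min)
    return out_min + t * (out_max - out_min)

def volume_for_depth(z_metres):
    """Closer to the sensor means louder, so the output range runs from 100 down to 0."""
    return limit_scale(z_metres, 1.0, 4.0, 100.0, 0.0)

for z in (0.5, 1.0, 2.5, 4.0, 6.0):
    print(z, volume_for_depth(z))
```

Swapping the output minimum and maximum is what inverts the relationship, so no separate "invert" step is needed.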

I have continued adjusting the patch for the background change mechanism (raising the right hand of Body 1 changes the silhouette background and raising the left hand changes the background). My main focus here was making the gates work so it only changes one time while the hand is raised (gate doesn’t reopen until hand goes down), so I moved the gate to be in front of the Random actor in this patch. As I reflect on this, I think I know why it didn’t work; I didn’t program it to turn the gate on based on hand position, it only holds the trigger until the first one is complete, which is pretty much immediately. I think I would need an Inside Range actor to tell the gate to turn on when the hand is below a certain position, or something to that effect.
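
The gate behaviour described above (fire once when the hand goes up, stay closed until the hand comes back down) can be written out as a small state machine. This Python sketch is one possible reading of that logic, not the Isadora patch itself; the two height thresholds are hypothetical and would depend on the tracker's coordinate range.

```python
class OneShotGate:
    """Fires once when the hand rises above a threshold; re-arms when it drops."""

    def __init__(self, raise_above, rearm_below):
        self.raise_above = raise_above
        self.rearm_below = rearm_below
        self.armed = True

    def update(self, hand_height):
        """Return True only on the frame where a new raise is detected."""
        if self.armed and hand_height > self.raise_above:
            self.armed = False  # close the gate until the hand comes down
            return True
        if not self.armed and hand_height < self.rearm_below:
            self.armed = True   # hand is down again, reopen the gate
        return False

gate = OneShotGate(raise_above=0.7, rearm_below=0.4)
for height in (0.2, 0.8, 0.9, 0.8, 0.3, 0.8):
    if gate.update(height):
        print("change background at height", height)
```

Using two thresholds instead of one leaves a dead zone between them, so a hand hovering near the trigger height does not fire repeatedly.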

I sat down with Alex to work out some issues I had been having, such as my transparency issue. This was happening because the sensor was set to colorize the bodies, so Isadora was seeing red and green silhouettes. This was problematic because the Alpha Mask looks for white, so the color was not allowing a fully opaque mask. We fixed this with the addition of an HCL Adjust actor between the OpenNI Tracker and the Alpha Mask, with the saturation fully down and the luminance fully up.

The other issue Alex helped me fix was the desaturation mechanism. We replaced the Envelope Generators with Trigger Value actors plugged into a Smoother actor. This made for smooth transitions between changes because it allowed Isadora to make changes from where it’s already at, rather than from a set value.

The last big change I made to my patch was the backgrounds. Because I was struggling to find decent quality images of the right size for the shadow silhouettes, I took the information of one image that looked nice and created six simple backgrounds in Procreate. I wanted them to have bold colors and sharp lines so they would stand out against the moving backgrounds and have enough contrast both saturated and not. I also decided to use recognizable location-based backdrops since the water and space backdrops seemed to elicit the most emotional responses. In addition to the water and space scenes, I added a forest, mountains, a city, and clouds rolling across the sky.

These images worked really well against the realistic backgrounds. It was also fun to watch the group react, especially to the pink scene. They got really excited if they got a sparkle full and clear on their shadow. There was also a moment where they thought the white dots in the rainbow and purple scenes were a puzzle, which could be a cool idea to explore. I did have an idea to create a little bubble-popping game in a scene with a zoomed-in bubble as the main background.

The reactions I got were overwhelmingly positive and joyful. There was a lot of laughter and teamwork during the presentation, and they spent a lot of time playing with it. If we had more time, they likely would have kept playing and figuring it out, and probably would have loved a fourth iteration (I would have loved making one for them). Michael specifically wanted to learn it enough to manipulate it, especially to match up certain backgrounds (I would have had them go in a set order because accomplishing this at random would be difficult, though not impossible). Words like “puzzle” and “escape room” were thrown around during the post-experience discussion, which is what I was going for with the addition of the riddles I added to help guide users.

The most interesting feedback I got was from Alex who said he had started to experience himself ‘in third person’. What he means by this is that he referred to the shadow as himself while still recognizing it as a separate entity. If someone crossed in front of the other, the sensor stopped being able to see the back person and ‘erased’ them from the screen until it re-found them. This prompted that person to often go “oh look I’ve been erased”, which is what Alex was referring to with his comment.

I’ve decided to include my Cycle 3 score here as well, because it has a lot of things I didn’t get to explain here, and was functionally my brain for this project. I think I might go back to this later and give some of the ideas in there a whirl. I think I’ve learned enough Isadora that I can figure out a lot of it, particularly those pesky gates. It took a long time, but I think I’m starting to understand gate logic.

The presentation was recorded in the MOLA so I will add that when I have it :). In the meantime, here’s the test video for the velocity-explode mechanism, where I subbed in a Mouse Watcher to make my life easier.

Video-Bop: Cycle 3

Posted: May 2, 2024 Filed under: Uncategorized | Tags: Cycle 3

The shortcomings and learning lessons from cycle 2 provided strong direction for cycle 3.

The audience found the rules section of cycle 2 confusing. Although I intentionally labelled these essentials of spontaneous prose as rules, that was more of a framing mechanism to expose the audience to this style of creativity. Given this feedback, I opted to structure the experience so that they witnessed me performing video-bop prior to trying it themselves. Further, I tried to create a video-bop performance with media content which outlined some of the influences motivating this art.

I first included a clip from a 1959 interview with Carl Jung in which he is asked:

‘As the world becomes more technically efficient, it seems increasingly necessary for people to behave communally and collectively. Now, do you think it possible that the highest development of man may be to submerge his own individuality in a kind of collective consciousness?’.

To which Jung responds:

‘That’s hardly possible, I think there will be a reaction. A reaction will set in against this communal dissociation.. You know man doesn’t stand forever.. His nullification. Once there will be a reaction and I see it setting in.. you know when I think of my patients, they all seek their own existence and to assure their existence against that complete atomization into nothingness or into meaninglessness. Man cannot stand a meaningless life’

Mind, that this is from the year 1959. A quick google search can hint at the importance of that year for Jazz:

Further, I see there to be another overlapping timeline. That of the beat poets:

I want to point out a few events:

29 June, 1949 – Allen Ginsberg enters Columbia Psychiatric Institute, where he meets Carl Solomon

April, 1951 – Jack Kerouac writes a draft of On the Road on a scroll of paper

25 October, 1951 – Jack Kerouac invents “spontaneous prose”

August, 1955 – Allen Ginsberg writes much of “Howl Part I” in San Francisco

1 November, 1956 – Howl and Other Poems is published by City Lights

8 August, 1957 – Howl and Other Poems goes on trial

1 October, 1959 – William S. Burroughs begins his cut-up experiments

While Jung’s awareness is not directly tied to American culture or jazz and adjacent artforms, I think he speaks broadly to post-WW2 society. I see Jung as an important and positive actor in advancing the domain of psychoanalysis; he started working at a psychiatric hospital in Zürich in 1900. Nonetheless, critique of the institutions of power and knowledge surrounding mental illness emerged in the 1960s, most notably with Michel Foucault’s 1961 Madness and Civilization and Ken Kesey’s (a friend of the beat poets) 1962 One Flew Over the Cuckoo’s Nest. It’s unfortunate that the psychoanalytic method and theory developed by Jung, which emphasizes dedication to the patient’s process of individuation, is absent in the treatment of patients in psychiatric hospitals throughout the 1900s. Clearly, Ginsberg’s experiences in a psychiatric institute deeply influenced his writings in Howl. I can’t help but connect Jung’s statement about seeing a reaction setting in to the abstract expressionism in jazz music and the beat movement occurring at this time.

For this reason I included a clip from the movie Kill Your Darlings (2013), which captures a fictional yet realistic conversation between the beat founders Allen Ginsberg, Jack Kerouac, and Lucien Carr upon meeting at Columbia University.

JACK

A “new vision?”

ALLEN

Yeah.

JACK

Sounds phony. Movements are cooked up by people who can’t write about the people who can.

LUCIEN

Lu, I don’t think he gets what we’re trying to do.

JACK

Listen to me, this whole town’s full of finks on the 30th floor, writing pure chintz. Writers, real writers, gotta be in the beds. In the trenches. In all the broken places. What’re your trenches, Al?

ALLEN

Allen.

JACK

Right.

LUCIEN

First thought, best thought.

ALLEN

Fuck you. What does that even mean?!

JACK

Good. That’s one. What else?

ALLEN

Fuck your one million words.

JACK

Even better.

ALLEN

You don’t know me.

JACK

You’re right. Who is you?

Lucien loves this, raises an eyebrow. Allen pulls out his poem from his pocket.

I think this dialogue captures well the reaction setting in for these writers and how they pushed each other in their craft and position within society. Interesting is Kerouac’s refusal to be associated with a movement, a stance he continued to hold into later life when asked about his role in influencing the American countercultural movements of the 60s (see his 1968 interview with William Buckley). Further, this dialogue shows how Kerouac and Carr incited an artistic development in Ginsberg, giving him the courage to break poetic rules and to be uncomfortably vulnerable in his life and work.

For me video-bop shares intellectual curiosities with that of the beats and a performative improvised artistic style with that of Jazz. So I thought performing a video-bop tune of sorts for the audience prior to them trying it would be a better way to convey the idea rather than having them read from Kerouac’s rules of spontaneous prose. See a rendition of this performance here:

With this long-winded explanation in mind, there were many technical developments driving improvements for cycle 3 of video-bop. Most important was the realization that interactivity and aesthetic reception is fostered better from play as opposed to rigid-timebound playback. The first 2 iterations of video-bop utilized audio-to-text timestamping technologies which were great at enabling programmed multimedia events to occur in time with a pre-recorded audio file. However, after attending a poetry night at Columbus’s Kafe Kerouac, the environment where poets read their own material and performed it live inspired me to remove the timebound nature of the media system and force the audience to write their own haikus as opposed to existing ones.

Most of the technical coding effort was spent on the smart-device web-app controller to make it more robust and ensure no user actions could break the system. I included better feedback mechanisms to let the users know that they completed steps correctly to submit media for their video-bop. Further, I made use of google-images-download to allow users to pull media from Google Images as opposed to just YouTube, which was an audience suggestion from cycle 2.

video-bop-cyc3 (link to web-app)

One challenge that I have yet to tackle is the movement of media files, as downloaded by a Python script on my PC, into the Isadora media software environment. During the performance, this was a manual step: I ran the script, dragged the files into Isadora, and reformatted the haiku text file path. See the video-bop process here:
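
One half of that manual step, gathering the freshly downloaded files into a single known folder, could be scripted. The Python below is a minimal sketch under loud assumptions: the folder names and the list of file extensions are hypothetical, and it does not solve the harder half, which is getting Isadora to pick the files up without dragging them in.

```python
from pathlib import Path
import shutil

# Hypothetical set of media types the download script might produce.
MEDIA_SUFFIXES = {".jpg", ".jpeg", ".png", ".mp4", ".mov"}

def collect_media(download_dir, target_dir):
    """Move media files from download_dir into target_dir; return the new paths."""
    download_dir, target_dir = Path(download_dir), Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for path in sorted(download_dir.iterdir()):
        if path.is_file() and path.suffix.lower() in MEDIA_SUFFIXES:
            destination = target_dir / path.name
            shutil.move(str(path), str(destination))
            moved.append(destination)
    return moved
```

Calling something like `collect_media("downloads", "isadora_media")` at the end of the download script would at least leave every new file in one predictable place.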

cycle three: something that tingles ~

Posted: May 1, 2024 Filed under: Final Project | Tags: Cycle 3

In this iteration, I begin with an intention to:

– scale up what I have in cycle 2 (eg: the number of sensors/motors, and imagery?)

– check out the depth camera (will it be more satisfying than webcam tracking?)

– another score for audience participation based on the feedback from cycle 2

– some touches on space design with more bubble wraps..

Here is how those went…

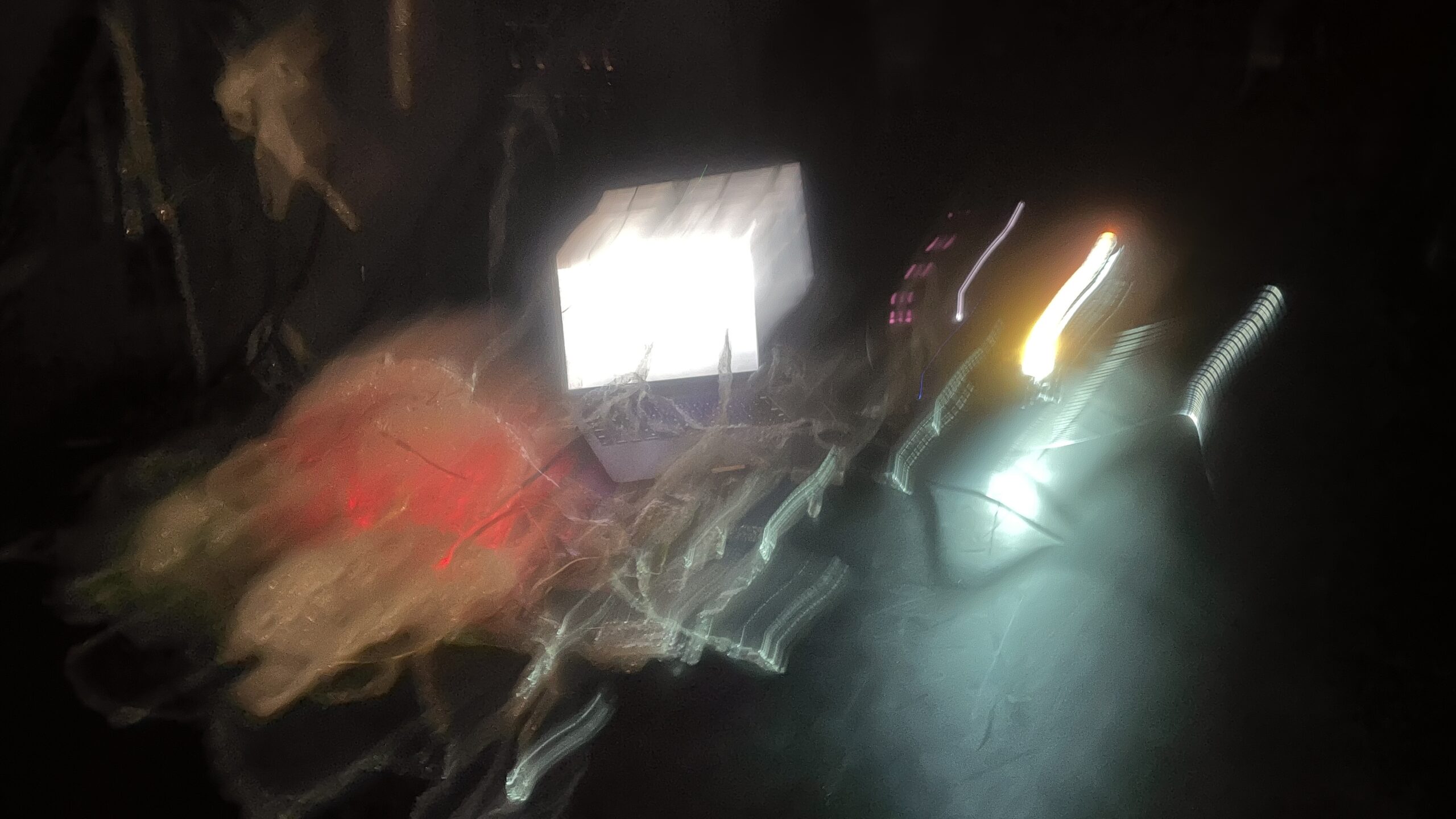

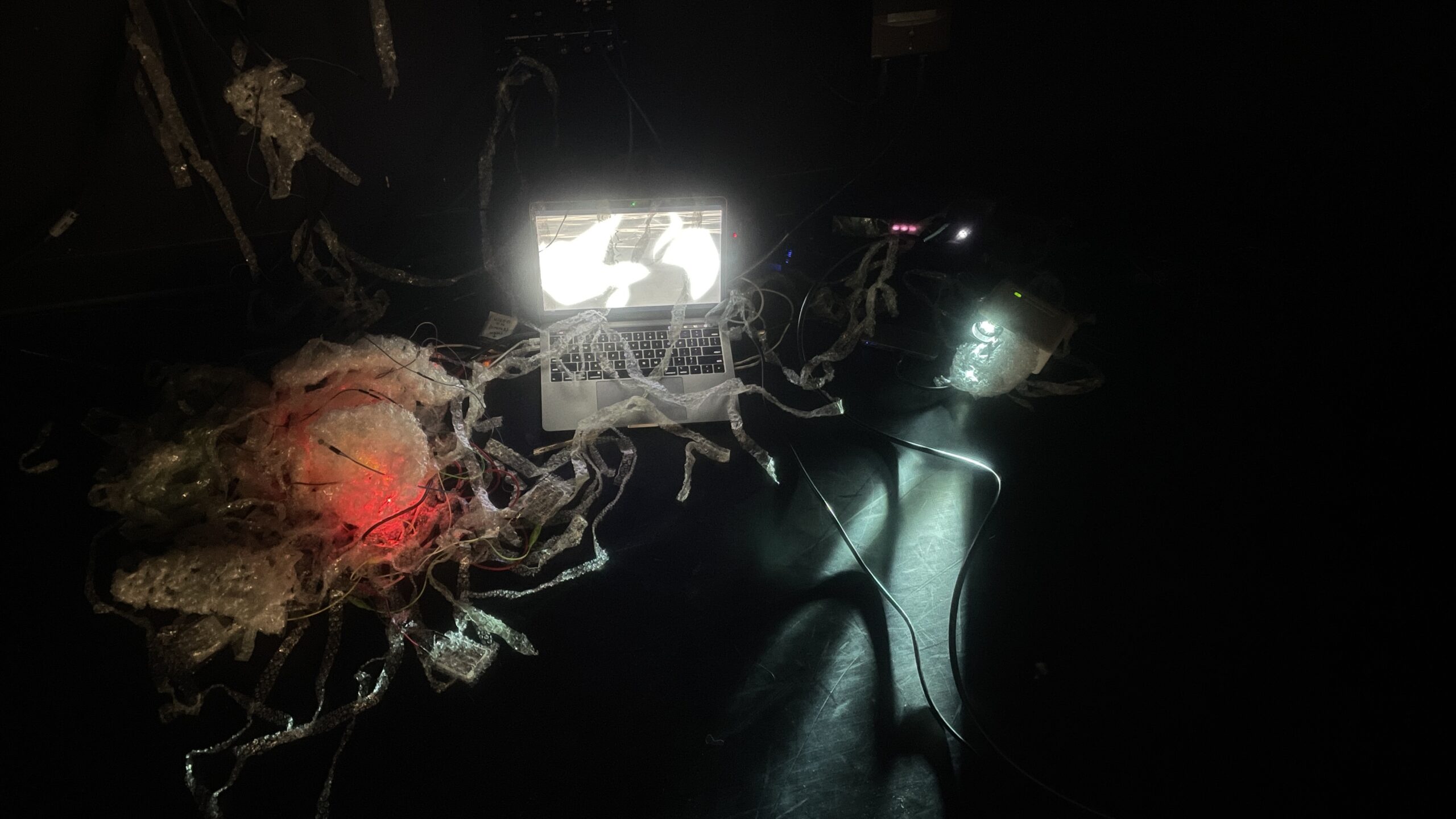

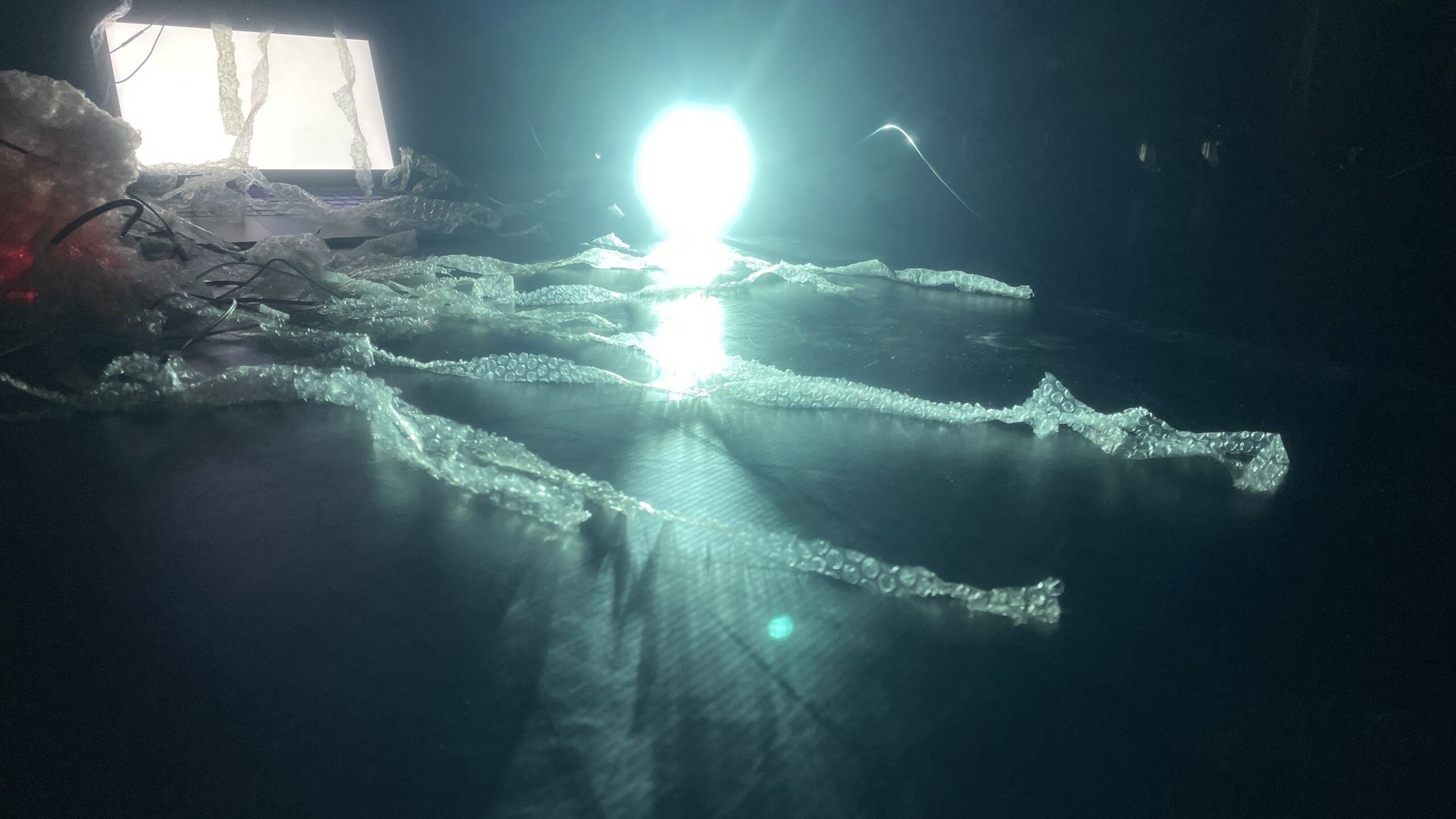

/scale up/

I added in more servo motors. This went pretty smoothly, and the effects are instant: the number of servos wiggling gives it more of a sense of a little creature.

I also attempted to add more flex/force sensors, but the data communication became very stuck. At times, Arduino was telling me that my board was disconnected, and the data did not go into Isadora smoothly at all. What I decided is: keep the sensors, and accept that the function is not going to be stable; at least they serve as tactile tentacles for touching, whether or not they activate the visual.

I also tried to add a couple more images to have multiple scenes other than the oceanic scene I have been working with since the first cycle. I did make another 3 different images, but I felt that it became too much information packed in there, and I could not decide their sequence and relationship, so I decided to leave them out for now and stick with my oceanic scene for the final cycle.

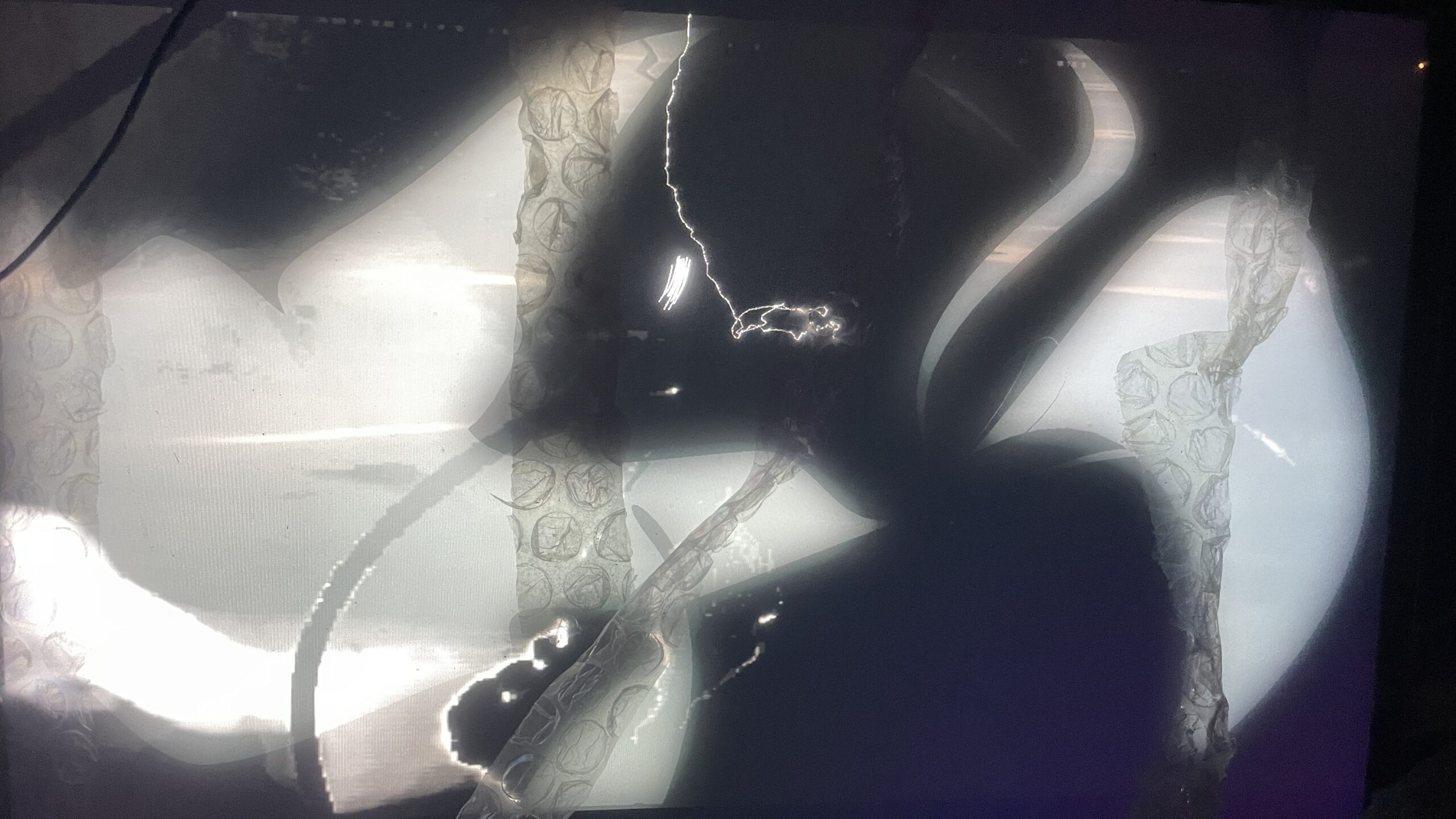

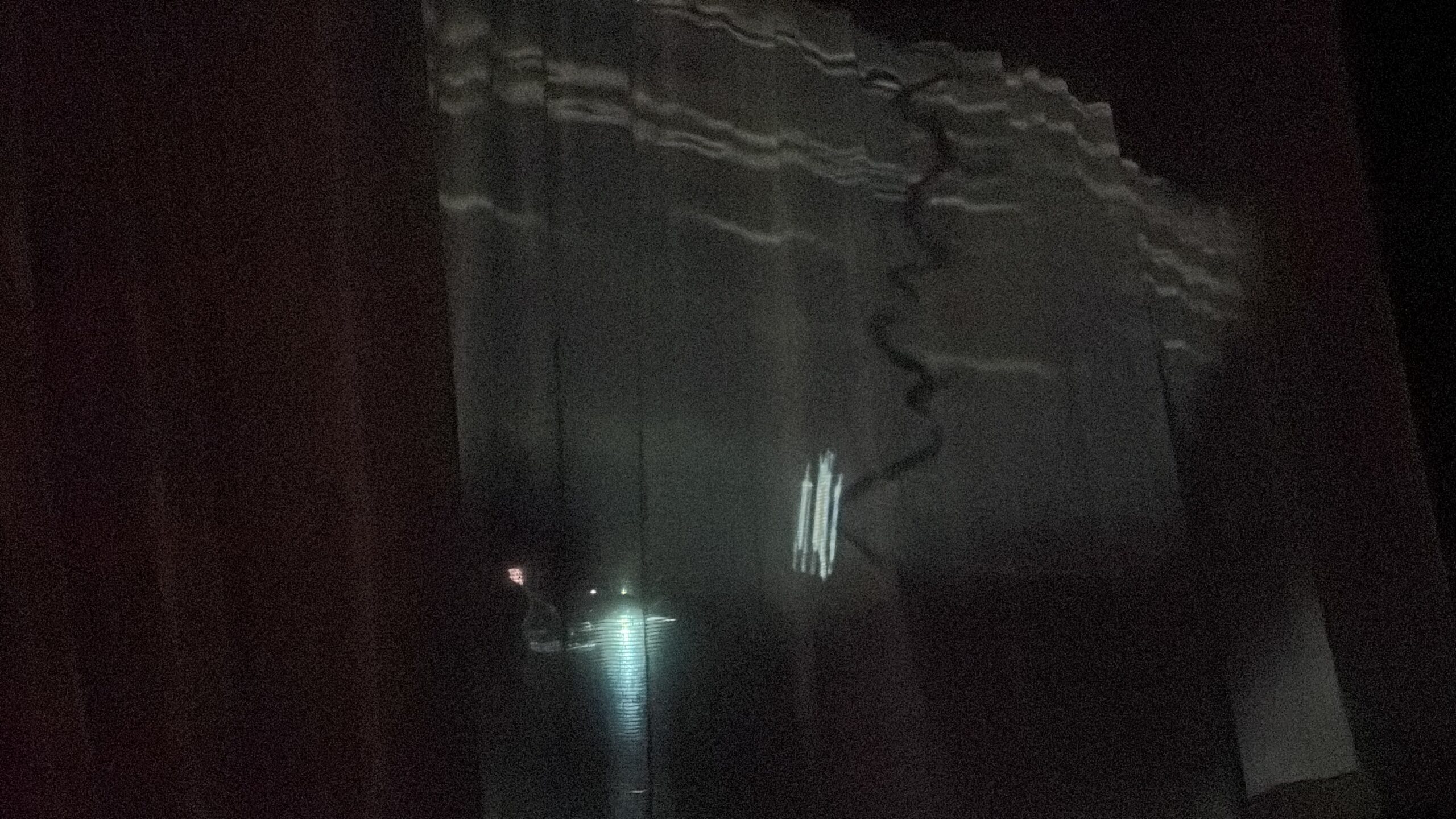

/depth cam?/

What I noticed with the depth cam at first is that it keeps crashing Isadora, which is a bit frustrating and propels me to work with it “lightly”. My initial intention in working with it was to see if it might serve better than the webcam for body position tracking to animate the rope in my scene. But I also note that accurate tracking does not seem to matter too much in this work, so I just wanna see what the potential of the depth cam is. I think it does give more accurate tracking, but the downside is that you have to be at a certain distance, with your feet in the frame, before the cam will start tracking your skeleton position, so in this case it is less flexible than the Eyes++ actor. What I find interesting with the depth camera is the white, ghosty body imagery it gives, so I ended up layering that on the video. It works especially well in the dark environment.

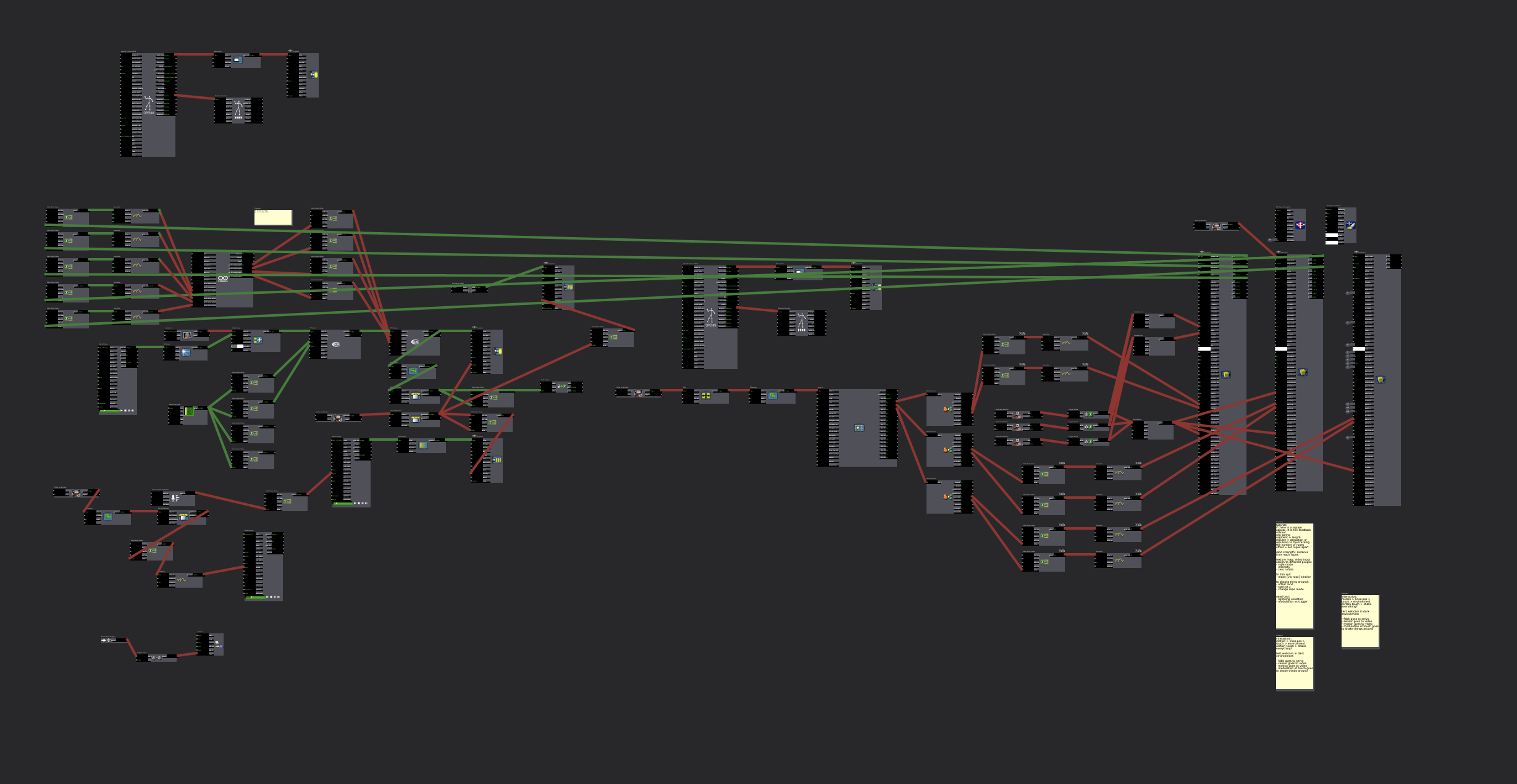

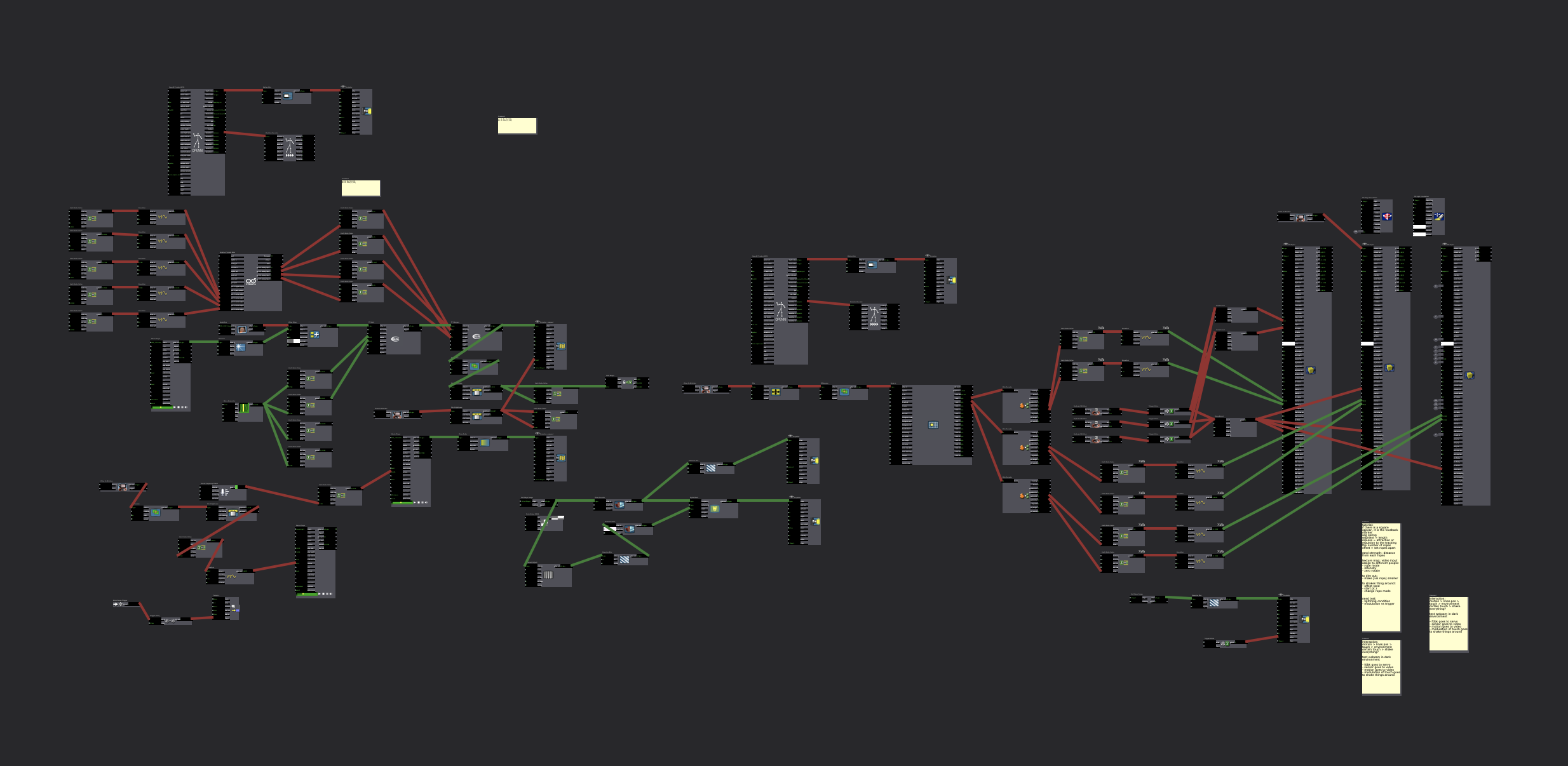

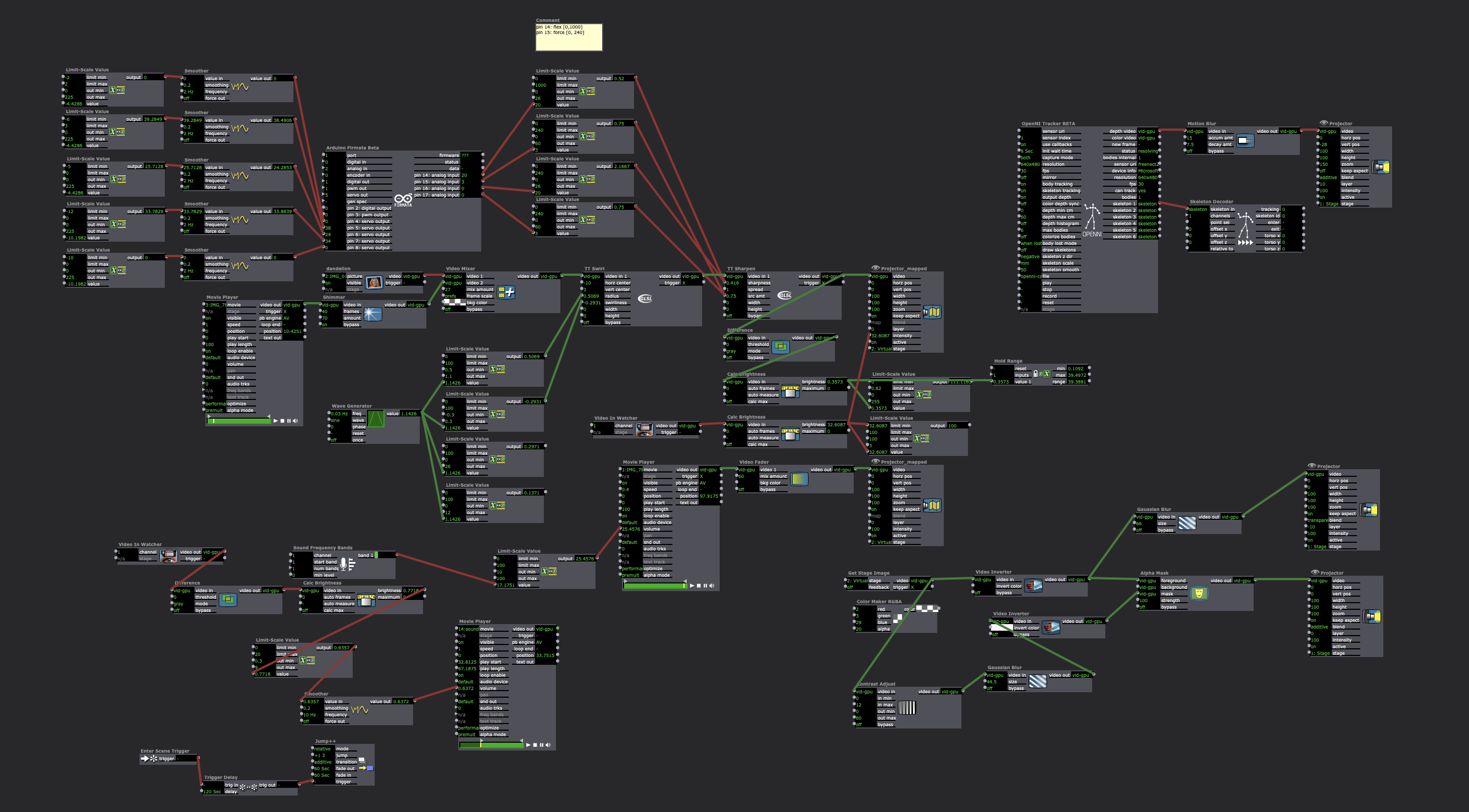

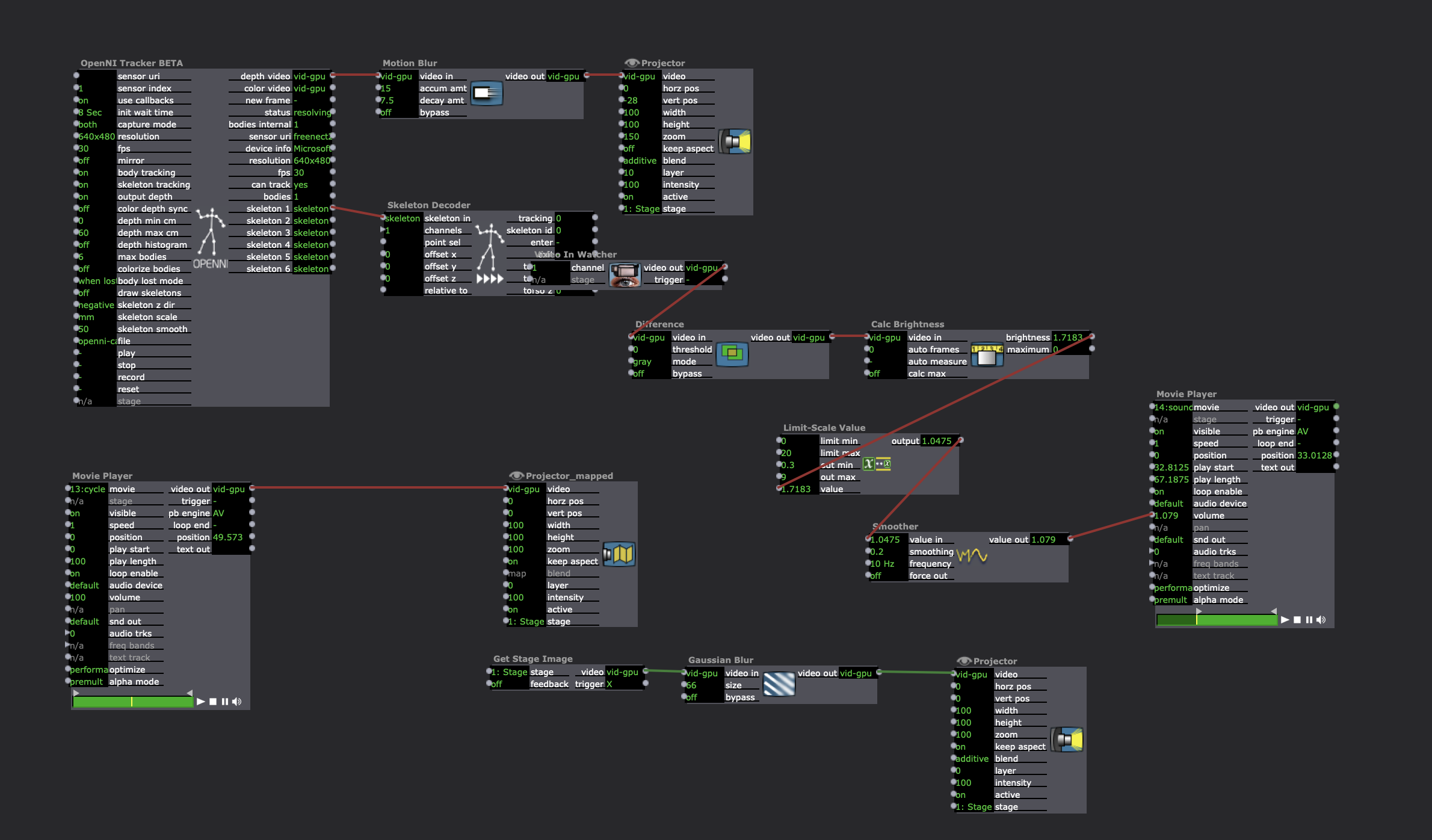

Here are the final Isadora patches:

/audience participation/

This time the score I decided to play with is: two people at a time explore it. The rest are observers who can give verbal cues to the people who are exploring: “pause” and “reverse”. Everyone can move around, in proximity or at a distance, at any time.

/space design/

I wrapped and crocheted more bubble wrap creatures in the space, tangling them through the wire, wall, charger, whatever happened to be in that corner that day. It’s like a mycelium growing on whatever environment there is, leaking out of the constructed space.

Feedback from folks and future iterations?

I really appreciate everyone’s engagement with this work and the discussions. Several people touched on the feeling of “jellyfish”, “little creature”, “fragile”, “desire to touch with care”, “a bit creepy?”. I am interested in all those visceral responses. At the beginning of cycle one, I was really interested in this modulation of touch, especially at a subtle scale, which I then found hard to incite with certain technological mechanisms, but it is so delightful to hear that the way the materials are composed actually evokes the kind of touch I am looking for. I am also interested in what Alex mentioned about it being like a “visual ASMR”, which I am gonna look into further. How to make the visual/audio tactile is something that really intrigues me. Also, I think I mentioned earlier that an idea I am working with in my MFA research is “feral fringe”, which is more of a sensation-imagery that comes to me, and making works around this idea is actually helping me get closer to what “feral fringe” refers to for me. I noticed that a lot of the choices I made in this work are very intuitive (more “feel so” than “think so”) – eg: the corner, the position of the curtain, the layered imagery, the tilted projector, etc. Hearing people point those out helps me delve further into: what is a palpable sense of “feral fringe” ~

Lawson: Cycle 3 “Wash Me Away and Birth Me Again”

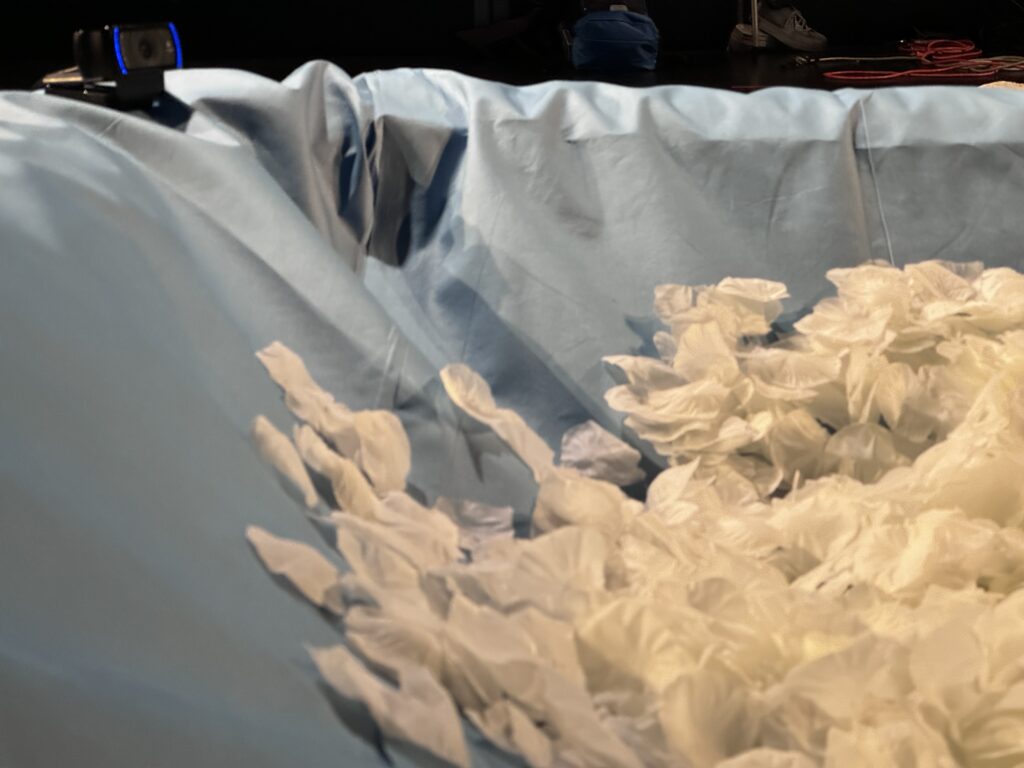

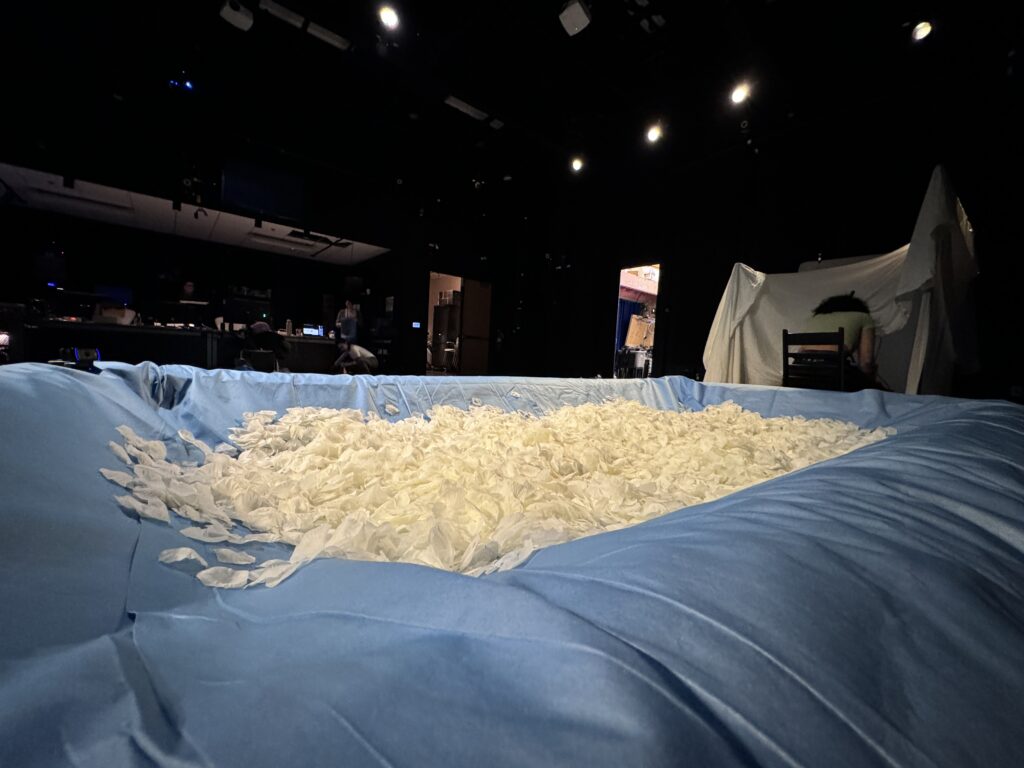

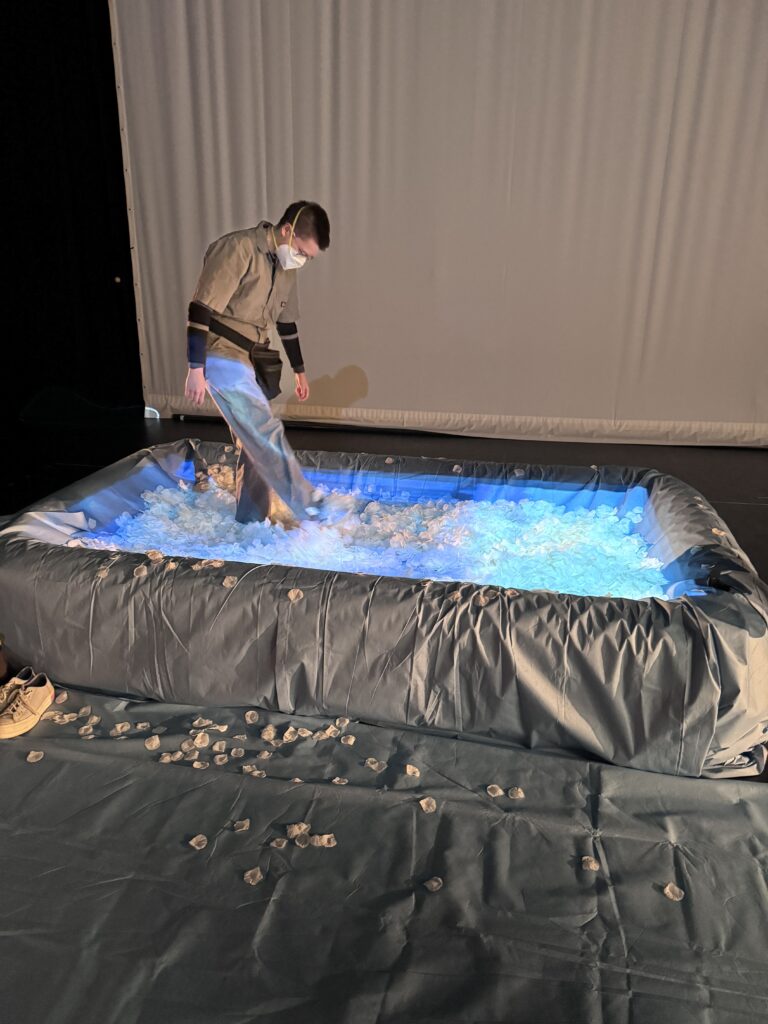

Posted: December 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: Au23, Cycle 3, dance, Digital Performance, Isadora

Changes to the Physical Set Up

For cycle 3, knowing that I wanted to encourage people to physically engage with my installation, I replaced the bunched up canvas drop cloths with a 6 ft x 10 ft inflatable pool. I built up the bottom of the pool with two folded wrestling mats. Building up the bottom made the pool more stable and reduced the volume of silk rose petals that I would need to fill it. Additionally, I wrapped the pool with a layer of blue drop cloths. This reduced the kitschy or flimsy look of the pool, increased the contrast of the rose petals, and allowed the blue of the projection to “feather” at the edges to make the water projection appear more realistic. To further encourage the audience to physically engage with the pool, I placed an extra strip of drop cloth on one side of the pool and set my own shoes on the mat as a visual indicator of how people should engage: take your shoes off and get in. This also served as a location to brush the rose petals off of your clothes if they stuck to you.

In addition to the pool, I also made slight adjustments to the lighting of the installation. I tilted and shutter cut three mid, incandescent lights. One light bounced off of the petals. Because the petals were asymmetrically mounded, this light gave the petals a wave like appearance as the animation moved over top of them. The other two shins were shutter cut just above the pool to light the participant’s body from stage left and stage right.

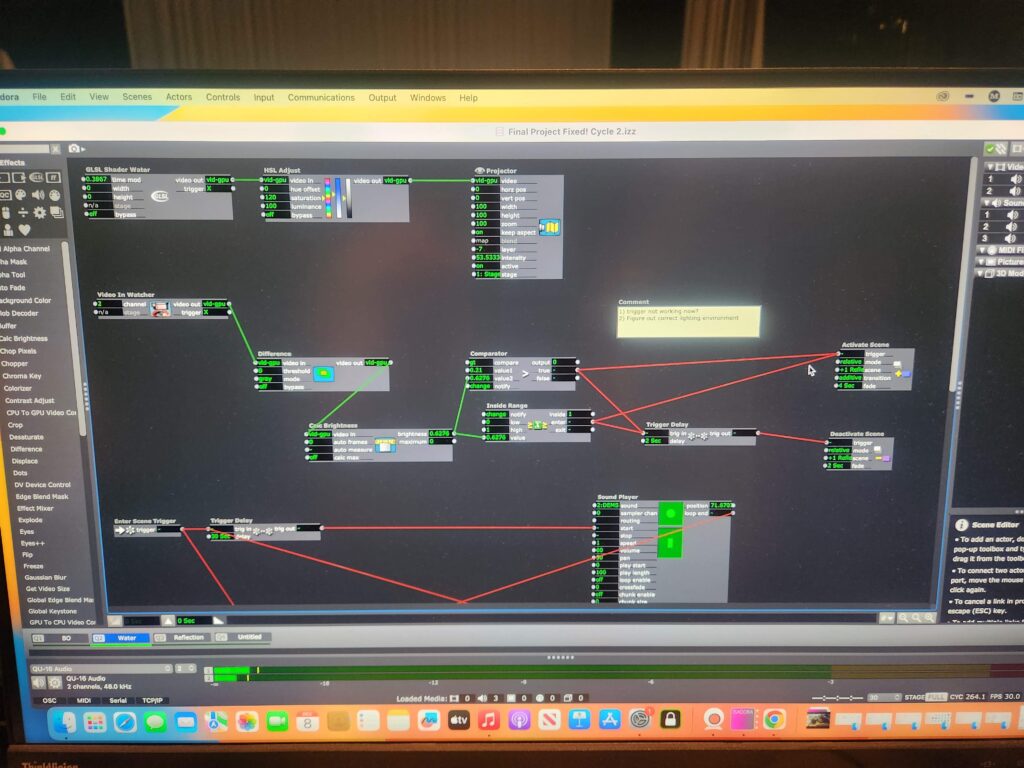

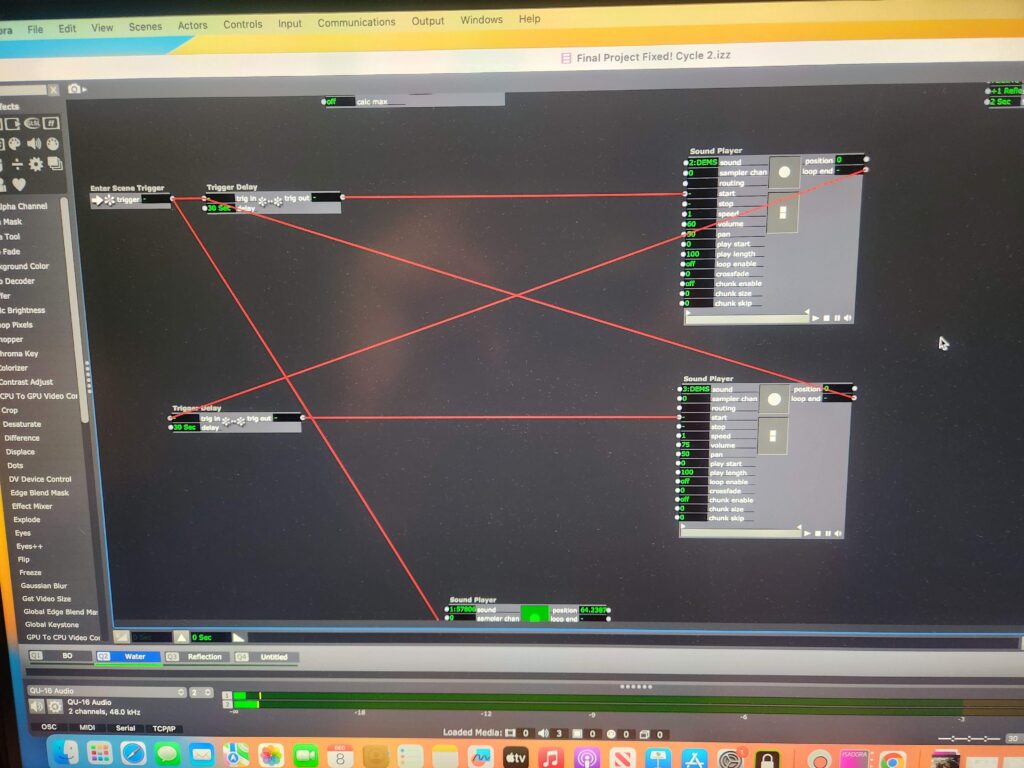

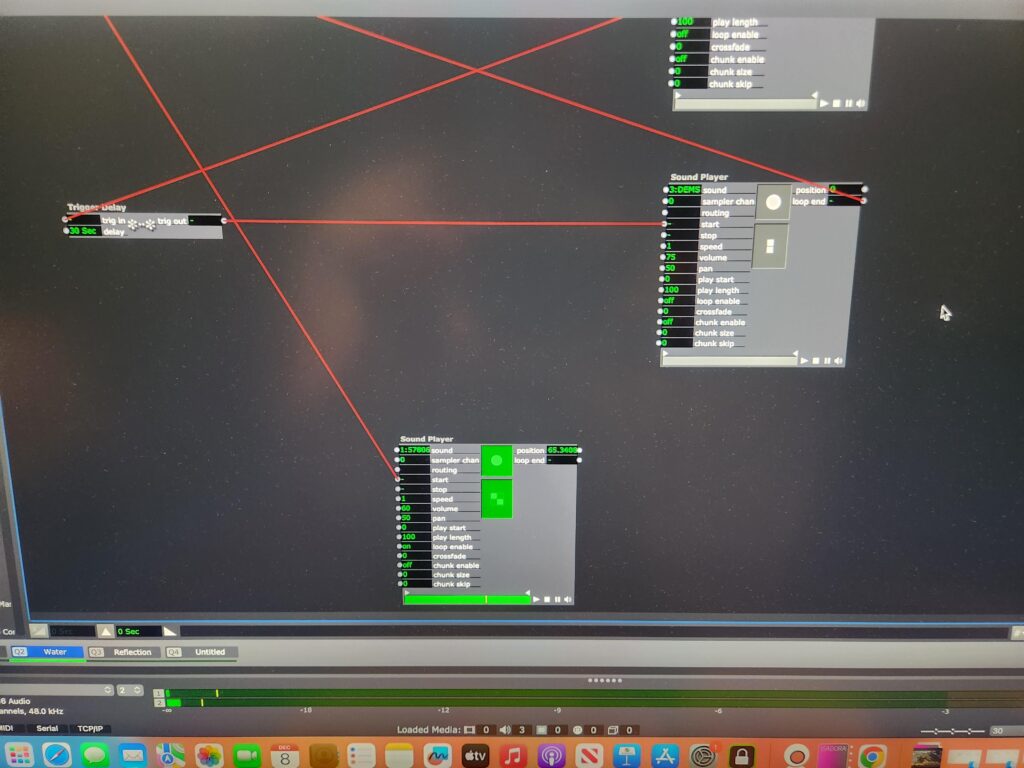

Changes to the Isadora Patch

During cycle 2, it was suggested that I add auditory elements to my project to support participant engagement with the installation. For this cycle, I added 3 elements to my project: a recording of running water, a recording of the poem that I read live during cycle 2, and a recording of an invitation to the audience.

The words of the poem can be found in my cycle 2 post.

The invitation:

“Welcome in. Take a rest. What can you release? What can the water carry away?”

I set the water recording to play upon opening the patch and to continue to run as long as the patch was open. I set the recordings of the poem and the invitation to alternate continuously with a 30 second pause between each loop.

Additionally, I made changes to the reflection scene of the patch. First, I re-designed the reflection. Rather than using the rotation feature of the projection to rotate the projected image from the webcam, I used the spinner actor and then zoomed in the projection map so it would fit into the pool. Rather than try to make the image hyper-realistic, I decided to amplify the distortion of the reflection by desaturating it and then using a colorizer actor to give the edges of the moving image a purple hue. I also made minor adjustments to the motion blur to play up the ghostliness of the emanation.

Second, I sped up the trigger delay to 3 seconds and the deactivate scene trigger to 2 seconds. I made this change as a result of feedback from a peer who assisted me with my adjustments to the projection mapping. She stated that because the reflection scene took so long to fade up and down, and the reflection itself was so subtle, it was difficult to determine how her presence in the pool was triggering any change. I found the ghostliness of the final reflection to be incredibly satisfying.

Impact of Motion Lab Set Up

On the day of our class showing, I found that the presence of my installation in the context of other tactile and movement driven exhibits in the Motion Lab helped the handful of context-less visitors figure out how to engage with my space. When people entered the Motion Lab, they first encountered Natasha’s “Xylophone Hero” followed by Amy’s “seance” of voices and lightbulbs. I found that moving through these exhibits established an expectation that people could touch and manipulate my project and encouraged them to engage more fully with it.

I also observed that the presence of the pool itself and the mat in front of it encouraged full-body engagement with the project. I watched people “swim” and dance in the petals and describe a desire to lie down or to make snow angels in the petals. The presence of the petals in a physical object that visitors recognized appeared to frame and suggest the possibilities for interacting with the exhibit by making it clear that it was something that they could enter that would support their weight and movement. I also observed that hearing the water sounds in conjunction with my poem suggested how the participants could interact with my work. Natasha observed that the descriptions of my movement in my poem helped her to create her own dance in the pool, sprinkling the rose petals and spinning around with them as she would in a pool.

The main hiccup that I observed was that viewers often would not stay very long in the pool once they realized that the petals were clinging to their clothes because of static electricity. This is something that I think I can overcome through the use of static guard or another measure to prevent static electricity from building up on the surface of the petals.

A note about sound…

My intention for this project is for it to serve as a space of quiet meditation through a pleasant sensory experience. However, as a person on the autism spectrum that is easily overwhelmed by a lot of light and noise, I found that I was overwhelmed by my auditory components in conjunction with the auditory components of the three other projects. For the purpose of a group showing, I wish that I had only added the water sound to my project and let viewers take in the sounds from Amy and CG’s works from my exhibit. I ended up severely overstimulated as the day went on and I wonder if this was the impact on other people with similar sensory disorders. This is something that I am taking into consideration as I think about my installation in January.

What would a cycle 4 look like?

I feel incredibly fortunate that this project will get a “cycle 4” as part of my MFA graduation project.

Two of my main considerations for the analog set up at Urban Arts Space are disguising and securing the web camera and creating lighting that will support the project using the gallery’s track system. My plan for hiding the web camera is to tape it to the side of the pool and then wrap it in the drop cloth. This will not make the camera completely invisible to the audience, but it will minimize it’s presence and make it less likely that the web cam could be knocked off or into the pool. As for the lighting, I intend make the back room dim and possibly use amber gels to create a warmer lighting environment to at least get the warmth of theatrical lighting. I may need to obtain floor lamps to get more side light without over brightening the space.

Arvcuken posed the question to me as to how I will communicate how to interact with the exhibit to visitors while I am not present in the gallery. For this, I am going to turn to my experience as a neurodivergent person and my experience as an educator of neurodivergent students. I am going to explicitly state that visitors can touch and get into the pool and provide some suggested meditation practices that they can do while in the pool in placards on the walls. Common sense isn’t common – sometimes it is better for everyone if you just say what you mean and want. I will be placing placards like this throughout the entire gallery for this reason, to assure visitors – who are generally socialized not to touch anything in a gallery – that they are indeed permitted to physically interact with the space.

To address the overstimulation that I experienced in Motion Lab, I am also going to reduce the auditory components of my installation. I will definitely keep the water sound and play it through a sound shower, as I found that to be soothing. However, I think that I will provide a QR code link to recordings of the poems so that people can choose whether or not they want to listen and have more agency over their sensory experience.

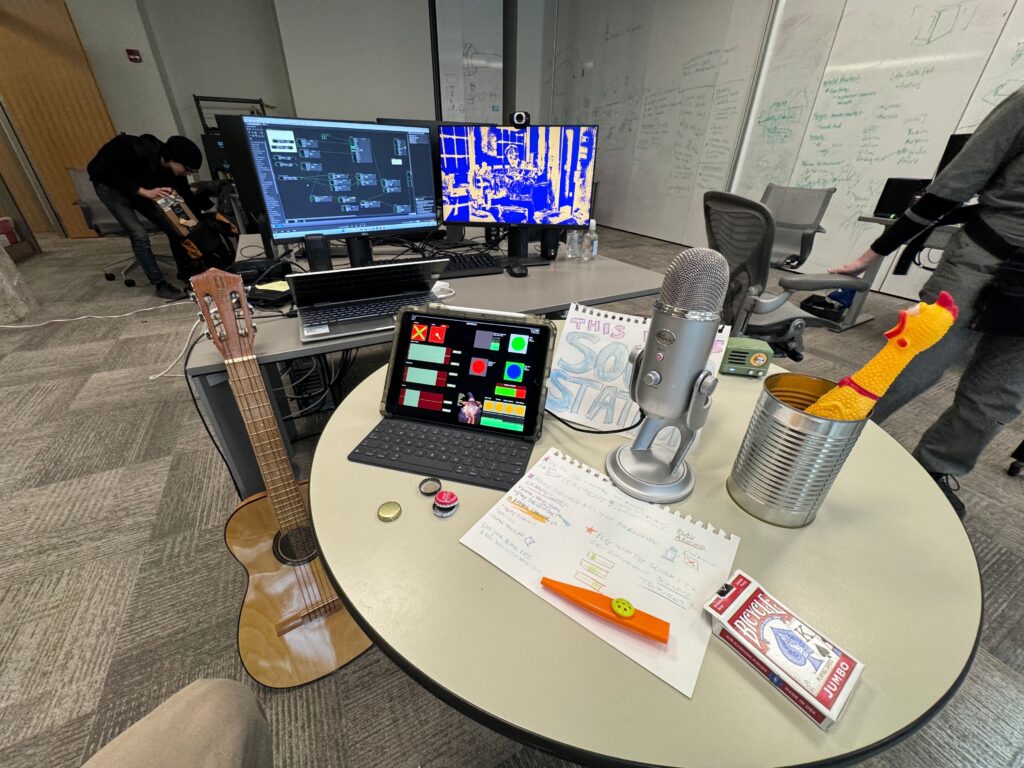

Cycle 3: The Sound Station

Posted: December 11, 2023 Filed under: Arvcuken Noquisi, Final Project, Isadora | Tags: Au23, Cycle 3

Hello again. My work culminates in cycle 3 as The Sound Station:

The MaxMSP granular synthesis patch runs on my laptop, while the Isadora video response runs on the ACCAD desktop – the MaxMSP patch sends OSC over to Isadora via Alex’s router (it took some finagling to get around the ACCAD desktop’s firewall, with some help from IT folks).
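For readers who have not worked with OSC, here is a minimal sketch of what one of those messages looks like on the wire. It is not the MaxMSP patch itself (which would normally send OSC with its own network objects); it is a plain Python illustration of encoding a single float and sending it over UDP. The host address, the port 1234, and the `/isadora/1` address are assumptions for illustration and would need to match whatever the receiving machine is actually set up to listen for.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: float) -> bytes:
    """Encode one OSC message carrying a single 32-bit float argument."""
    type_tags = b",f"  # one float argument
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags) + struct.pack(">f", value)


def send_level(level: float, host: str = "192.168.1.50", port: int = 1234) -> None:
    """Send a loudness value to the receiving machine over UDP.

    host, port, and the OSC address are hypothetical placeholders.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/isadora/1", level), (host, port))
```

Calling `send_level(0.5)` would send one packet. Because OSC usually travels over UDP, a firewall on the receiving desktop can silently drop these packets, which is consistent with the kind of trouble described above.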

I used the Mira app on my iPad to create an interface to interact with the MaxMSP patch. This meant that I had the chance to make the digital aspect of my work seem more inviting and encourage more experimentation. I faced a bit of a challenge, though, because some important MaxMSP objects do not actually appear on the Mira app on the iPad. I spent a lot of time rearranging and rewording parts of the Mira interface to avoid confusing the user. Additionally, I wrote out a little guide page to set on the table, in case people needed additional information to understand the interface and what they were “allowed” to do with it.

Video 1:

The Isadora video is responsive to both the microphone input and the granular synthesis output. The microphone input alters the colors of the stylized webcam feed to parallel the loudness of the sound, going from red to green to blue with especially loud sounds. This helps the audience mentally connect the video feed to the sounds they are making. The granular synthesis output appears as the floating line in the middle of the screen: it elongates into a circle/oval with the loudness of the granular synthesis output, creating a dancing inversion of the webcam colors. I also threw a little slider in the iPad interface to change the color of the non-mic-responsive half of the video, to direct audience focus toward the computer screen so that they recognize the relationship between the screen and the sounds they are making.
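The loudness-to-color idea can be sketched in a few lines of Python. This is only an illustration of the mapping, not the Isadora patch (which is built from actors rather than code). It assumes that audio samples are floats between -1 and 1, that loudness is measured as RMS, and that quiet sounds sit at red while the loudest sounds reach blue; the function names are hypothetical.

```python
import colorsys
import math


def rms(samples):
    """Root-mean-square level of a block of samples in the range -1..1."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudness_to_rgb(loudness):
    """Map loudness 0..1 onto a hue sweep from red through green to blue.

    Values outside 0..1 are clamped. Returns an (r, g, b) tuple of 0..255 ints.
    """
    level = min(max(loudness, 0.0), 1.0)
    hue = level * (2.0 / 3.0)  # hue 0 is red, 1/3 is green, 2/3 is blue
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))
```

For each incoming block of microphone samples, `loudness_to_rgb(rms(block))` would give the tint to apply to the webcam feed. In practice the raw RMS value would likely need scaling and smoothing so the color does not flicker.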

The video aspect of this project does personally feel a little arbitrary – I would definitely focus more on it for a potential cycle 4. I would need to make the video feed larger (on a bigger screen) and more responsive for it to actually have any impact on the audience. I feel like the audience focuses so much on the instruments, microphone, and iPad interface that the video feed is not really necessary, but I wanted to keep it as an aspect of my project just to illustrate the capacity MaxMSP and Isadora have to work together on separate devices.

Video 2:

Overall, I wanted my project to incite playfulness and experimentation in its audience. I brought my flat guitar (“skinned” guitar), a kazoo, a can full of bottlecaps, and a deck of cards, and I miraculously found a rubber chicken in the classroom to contribute to the array of instruments I offered at The Sound Station. The curiosity and novelty of the objects serve the playfulness of the space.

Before our group critique we had one visitor go around for essentially one-on-one project presentations. I took a hands-off approach with this individual, partially because I didn’t want to be watching over their shoulder and telling them how to use my project correctly. While they found some entertainment engaging with my work, I felt like they were missing essential context that would have enabled more interaction with the granular synthesis and the instruments. In stark contrast, I tried to be very active in presenting my project to the larger group. I led them to The Sound Station and showed them how to use the flat guitar, and joined in making sounds and moving the iPad controls with the whole group. This was a fascinating exploration of how group dynamics and human presence within a media system can enable greater activity. I served as an example for the audience to mirror; my actions and presence served as permission for everyone else to become more involved with the project. This definitely made me think more about what direction I would take this project in future cycles, if it were for group use versus personal use (since I plan on using the MaxMSP patch for a solo musical performance). I wonder how I would have started this project differently if I did not think of it as a personal tool and instead as directly intended for group/cooperative play. I probably would have taken much more time to work on the user interface and removed the video feed entirely!

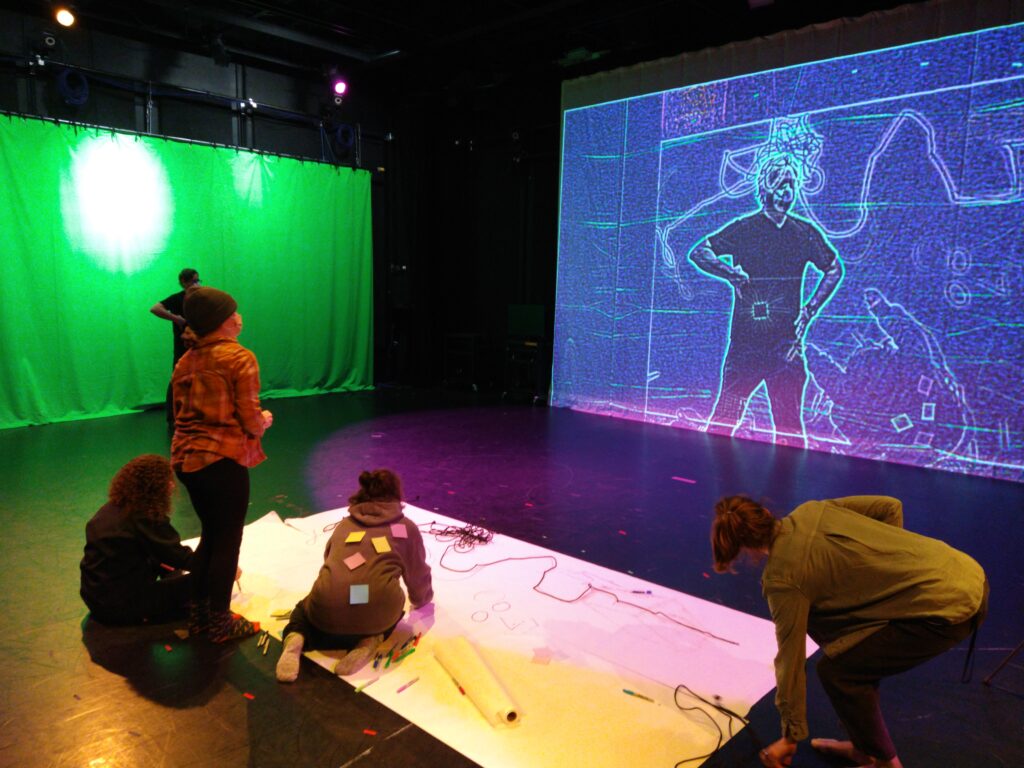

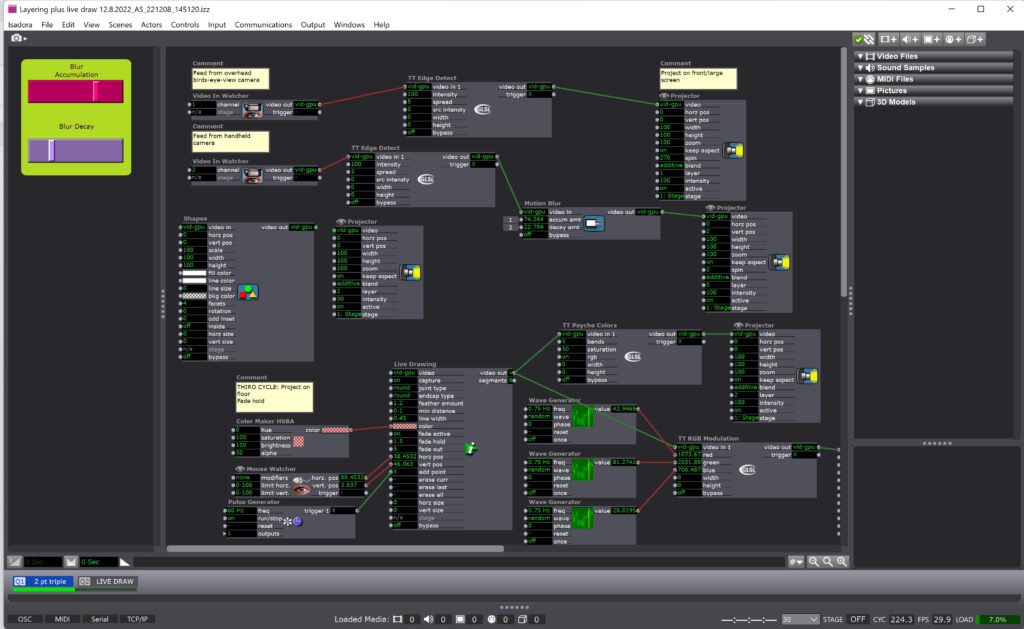

Cycle 3: Layering and Gesture: Collective Play

Posted: December 13, 2022 Filed under: Tamryn McDermott, Uncategorized | Tags: Cycle 3, Isadora

For this third iteration, I decided to set up three digital layers that provided space for play, collaboration, and digital/analog spaces to mingle. My initial idea was to consider how I could introduce the body/model into the space and suggest an opportunity for gestural drawing and experimentation both on physical paper and digitally. As you can see in the image below, participants were actively engaged in working on the paper, viewing what was happening on the projection screen, and interacting with one another across these platforms and planes in space. A third layer not visible in the image below is a Live Drawing actor in Isadora that comes into play in some of the videos below. I stuck with the TT Edge Detect actor in Isadora and played with a Motion Blur actor on the second layer so that the gestural movements would be emphasized.

Note the post-its on Alison’s back below. These were a great surprise as they were translated into digital space and were activated by her drawing and movement. They became a playful, unexpected surprise!

I really appreciated the feedback from this experience and want to share some of the useful comments I received as a record:

- Alison: I loved that Alison shared it was “confusing in a good way” and that she felt like it was a space where she could play for a long time. She identified that this experience was a social one and that it mattered that they were exploring together rather than a solo experience.

- Katie: Katie was curious about what would show up and explored in a playful and experimental way. She felt some disorientation with the screens and acknowledged that when Alex was using the live draw tool in the third layer, she didn’t realize that he was following her with the line. I loved that this was a surprise and realized that I didn’t share this as an option verbally well enough so she didn’t know what was drawing the line.

- Alex: Alex was one of the group that used the live draw tool and others commented that it felt separated from the group/collaborative experience of the other two layers. Alex used the tool to follow Katie’s movement and traced her gestures playfully. He commented that this was one of his favorite moments in the experience. He mentioned it was delightful to be drawn, when he was posing as a superhero and participants were layering attributes onto his body. There was also a moment when I said, “that’s suggestive” that was brought up and we discussed that play in this kind of space could bring in inappropriate imagery regardless of whether it was intended or not. What does it mean that this is possible in such a space? Consider this more. Think about the artifact on the paper after play: how could this be an opportunity for artifact creation/nostalgia/document?

- Mila: With each iteration, people discovered new things they can do. Drawing was only one of the tools, not the focus, drawing as a tool for something bigger. Love the jump rope action!

- Molly: How did we negotiate working together? This creates a space for emergent collaboration. What do we learn from emergent collaboration? How can we set up opportunities for this to happen? The live draw was sort of sneaky and she wondered if there was a way to bring this more into the space where other interactions were happening.

This feedback will help me work towards designing another iteration as a workshop for pre-service art teachers that I am working with in the spring semester. I am considering if I could stage this workshop in another space or if using the motion lab would be more impactful. If I set it up similarly in the lab, I would integrate the feedback to include some sort of floor anchors that are possibilities or weights connected to the ropes. I think I would also keep things open for play, but mention perspective, tools available, and gesture drawing to these students/participants who will be familiar with teaching these techniques to students in a K – 12 setting.

I have been exploring the possibility of using a cell phone mounted on the ceiling as the bird’s-eye-view camera and using NDI and a router to send the feed to Isadora. I’ll work on this more in the spring semester as I move towards designing a mini-version for a gallery experience in Hopkins Hall Gallery as part of a research collective exhibition and also the workshop with the pre-service students. If I can get permission to host the workshop in the motion lab, I would love to bring these students into this space, as my students this semester really appreciated the opportunity to learn about the motion lab and explore some of the possibilities in this unique space.