Cycle 1 documentation – Dynamic Cloth – prototype

Posted: October 27, 2022 Filed under: Uncategorized

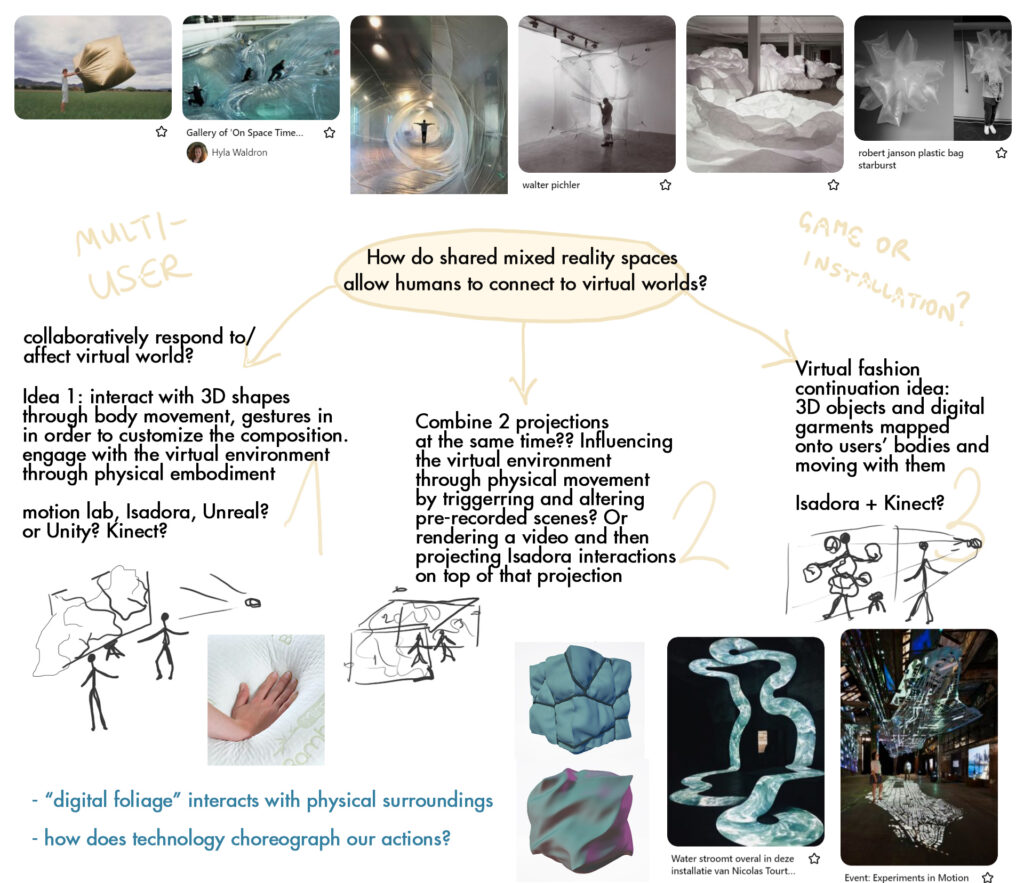

My research looks at how shared mixed reality experiences help us relate to virtual worlds, so I wanted to use this project to create an experience where users can collaboratively affect and respond to digital cloth shapes through body movement tracking in the Motion Lab. I love creating experiences and environments that blend physical and virtual worlds, so this seemed like a good way to explore how physical surroundings and inputs impact virtual objects, and how interactive technology creates performative spaces.

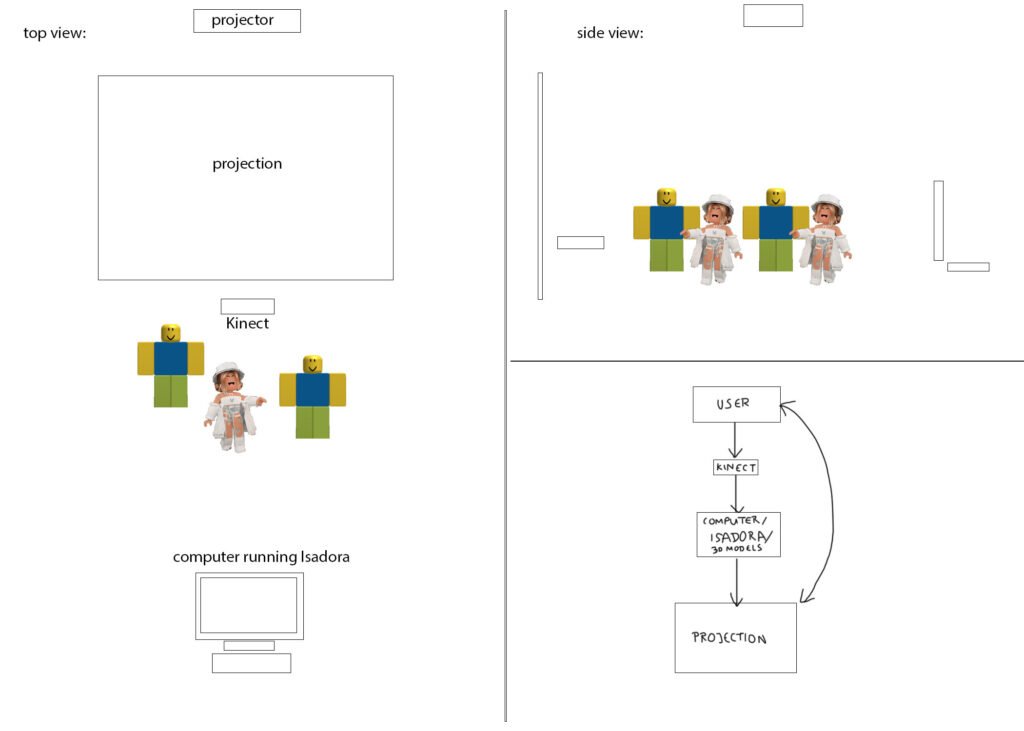

Since I was (and still am) a beginner in Isadora, I didn’t really know how to go about this, or whether Isadora was even the right software compared with using the Kinect in a game engine. My goal is to have users affect the virtual cloth in real time. Not knowing how to do this at first, I considered pre-rendering the cloth simulations and using the Kinect inputs to trigger different animations to create the dynamic effect. However, after learning how to import 3D models into Isadora and affect lighting, I realized that I can trigger real-time changes to 3D shapes without pre-made animations. I might still use animations if they benefit the experience, but with the progress I’ve made so far I have a sense of how to make this work in real time.

After deciding on Kinect and Isadora for this experience, I needed help from Felicity to install the Kinect drivers on the classroom computer so I could begin working on an early prototype. Once that was set up, I first learned how to import 3D models into Isadora, since I hadn’t figured that out during PP1. I imported a placeholder cloth model I made a few months ago and used it to begin figuring out how to cause dynamic changes with Kinect input. Initially, I hooked the Kinect tracking input to the 3D light orientation. I was already happy with where it was going, since it felt like I was casting a shadow on a virtual object through my movement, but this was just a simple starting point.
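To make the idea of hooking a tracked value to a light angle more concrete, here is a minimal Python sketch of the underlying math. It is not the Isadora patch itself: the function names, the 0 to 100 input range, and the -45 to 45 degree output range are all assumptions chosen for illustration.

```python
def scale_value(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max].

    Inputs outside the input range are clamped first, so a tracking
    glitch cannot push the output past its limits.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    clamped = max(in_min, min(in_max, value))
    t = (clamped - in_min) / (in_max - in_min)
    return out_min + t * (out_max - out_min)


def light_rotation_from_position(x_percent):
    """Turn a tracked horizontal position into a light rotation in degrees.

    Assumption: the tracker reports position as 0..100, and the light
    should swing between -45 and 45 degrees. Both ranges are hypothetical.
    """
    return scale_value(x_percent, 0.0, 100.0, -45.0, 45.0)


if __name__ == "__main__":
    # Sweep a few sample positions to see how the light would turn.
    for x in (0, 25, 50, 75, 100):
        print(x, light_rotation_from_position(x))
```

Standing in the middle of the tracked area leaves the light pointing straight ahead, and moving to either side swings it the same way, which is roughly what produces the feeling of casting a shadow.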

Next, I wanted to test changing the depth of the shape through position and motion. A good initial approach seemed to be plugging the movement inputs into the shape’s size along the y axis, so the object appears to shrink and expand.

I brought this approach into the previous file, and currently the Kinect input affects the lighting, orientation, and y-axis size of the placeholder cloth shapes. In the gif for this version, I plugged the movement inputs into the brightness calculator: when I’m further from the Kinect and more light is let in, the shapes expand along the y axis, and when I get closer and it gets darker, the shapes flatten, which feels like putting pressure on them through movement.
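The brightness-to-size relationship described here can be sketched in a few lines of Python. This is only an illustration of the mapping, not what the patch does internally: the 0 to 100 brightness range, the scale limits, and the smoothing step are assumptions, and every name below is hypothetical.

```python
def y_scale_from_brightness(brightness, min_scale=0.2, max_scale=1.0):
    """Map a brightness reading (assumed 0..100) to a y-axis scale factor.

    Brighter input (standing further from the sensor) gives a taller shape;
    darker input (standing closer) flattens it toward min_scale.
    """
    b = max(0.0, min(100.0, brightness))
    return min_scale + (b / 100.0) * (max_scale - min_scale)


class Smoother:
    """Exponential smoothing so the shape eases toward its target size
    instead of jumping on every noisy sensor frame (an added assumption)."""

    def __init__(self, factor=0.2):
        self.factor = factor
        self.value = None

    def update(self, target):
        if self.value is None:
            # First reading: start at the target rather than at zero.
            self.value = target
        else:
            self.value += self.factor * (target - self.value)
        return self.value


if __name__ == "__main__":
    smoother = Smoother(factor=0.5)
    # Simulated readings: walking toward the sensor, so it gets darker.
    for reading in (100, 80, 40, 10, 0):
        print(reading, round(smoother.update(y_scale_from_brightness(reading)), 3))
```

The smoothing factor trades responsiveness for stability: a value near 1 follows the input almost immediately, while a smaller value makes the cloth settle more slowly.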

I’m happy that I’m figuring out how to do what I want, but I want this to be a shared experience with multiple users’ data influencing the shapes simultaneously. The next step is to move from the computer where I made the prototype into the Motion Lab, where I want the experience to take place. Isadora on the Motion Lab computers still needs to be updated to the version we are using in class, so I will remind Felicity about that. Once both Isadora and the Kinect are set up there, I will keep working in the Motion Lab and modify the patch for that environment, since the patch and the space are going to be interdependent. I also finally managed to renew my Cinema 4D license yesterday, so this upcoming week I want to make the final models (and animations?) for this project and replace the current placeholders.

Feedback from the presentation:

I appreciated the feedback from the class, and the current patch seemed to get positive reactions. Comments included that it was intriguing to see how responsive and sensitive the 3D cloth model was. It was nice to see that a lot of people wanted to interact with it in different ways. I also realized I need to think more about the scale of the projection, since it can affect how people perceive and engage with it.