‘You make me feel like…’ Taylor – Cycle 3, Claire Broke it!

Posted: December 14, 2016 Filed under: Final Project, Isadora, Taylor, towards Interactive Installation

The name of my patch: ‘You make me feel like…’

Okay, I have determined how/why Claire broke it and what I could have done to fix this problem. And I am kidding when I say she broke it. I think she and Peter had a lot of fun, which is the point!

So, all in all, I think everyone who participated in my system had a good time with another person, moved around a bit, then watched themselves, and most got even more excited by that! I would say that in these ways the performances of my patch were very successful, but I still see areas of the system that I could fine-tune to make it more robust.

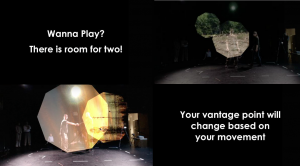

My system: Two nine-sided shapes tracked two participants through space, showing off the images/videos that are alpha masked onto the shapes as the participants move. It gives the appearance that you are peering through a frame or scope, and you can explore your vantage point through your movement. Once participants are moving around, they are instructed to connect to see more of the images and to come closer to see a new perspective. This last cue uses a brightness trigger to switch to projectors showing live feed and video-delayed footage, which plays back the performers’ movement choices and lets them watch themselves watching, or watch themselves dancing with their previous selves.
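The video delay itself lives inside Isadora, so the following is only a rough Python sketch of the idea behind it, not my actual patch. It assumes a delay can be modeled as a fixed-length buffer of frames; `FrameDelay` and `delay_frames` are hypothetical names made up for illustration.

```python
from collections import deque


class FrameDelay:
    """Hypothetical helper: hands back the frame pushed `delay_frames` calls ago."""

    def __init__(self, delay_frames):
        if delay_frames < 1:
            raise ValueError("delay_frames must be at least 1")
        # A bounded deque drops the oldest frame automatically once it is full.
        self._buffer = deque(maxlen=delay_frames)

    def push(self, frame):
        # Until the buffer has filled, there is no delayed frame to show yet.
        full = len(self._buffer) == self._buffer.maxlen
        delayed = self._buffer[0] if full else None
        self._buffer.append(frame)
        return delayed


if __name__ == "__main__":
    delay = FrameDelay(delay_frames=3)
    for live_frame in range(6):
        # Integers stand in for real video frames here.
        print(live_frame, delay.push(live_frame))
```

Showing the live frame next to the delayed one is what lets performers dance with their previous selves; a longer buffer simply pushes the "previous self" further back in time.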

The day before we presented, with Alex’s guidance, I decided to switch to the Kinect in the grid and do my tracking from the top down for an interaction that was more 1:1. Unfortunately, this Kinect is not hung center/center of the space, but I set everything else center/center of the space and used Luminance Keying and Crop to match the space to what the Kinect saw. However, I based the switch from shapes following you to live feed on brightness. When the depth was tracked at the side furthest from the Kinect, the color of the participant was darker (a video inverter was used), and the brightness window that triggered the switch was already so narrow that participants on that side could fall outside it. To fix this, I think shifting the position of the Kinect or changing how the space was laid out could have helped. Also, adding a third participant to the mix could have made it even more fun, and could have made the brightness window greater and increased the trigger range, so that the far side is no longer a problem.
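To make the narrow-window problem concrete, here is a minimal Python sketch of a brightness window trigger. This is an illustration only, not how the Isadora patch is built: `WindowTrigger` is a hypothetical name, and the brightness readings and window bounds are invented numbers on an arbitrary 0 to 100 scale.

```python
class WindowTrigger:
    """Hypothetical trigger: fires once each time a value enters [low, high]."""

    def __init__(self, low, high):
        self.low = low
        self.high = high
        self._inside = False  # whether the previous reading was in the window

    def update(self, brightness):
        inside = self.low <= brightness <= self.high
        # Fire only on the transition from outside to inside the window.
        fired = inside and not self._inside
        self._inside = inside
        return fired


if __name__ == "__main__":
    near_side = 75  # invented reading close to the Kinect
    far_side = 62   # invented, darker reading on the far side

    narrow = WindowTrigger(low=70, high=80)
    print(narrow.update(near_side))  # inside the narrow window
    print(narrow.update(far_side))   # darker reading misses the window

    wide = WindowTrigger(low=55, high=80)
    print(wide.update(far_side))     # a wider window catches the far side
```

The same darker reading that misses the narrow window lands inside the wider one, which is the effect I would hope to get from moving the Kinect, changing the layout, or otherwise increasing the trigger range.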

I wonder if I should have left the text running for longer bouts of time, but coming in quicker succession? I kept cutting the time back, thinking people would read it straight away, but it took people longer to pay attention to everything. I think this is because they are put on display a bit as performers while they are trying to read and decipher/remember instructions.

The bout that ended up working the best, or going in order all the way through as planned, was the third go-round. I’ll post a video of this one on my blog, which I can link to once I can get things loaded. This tells me my system needs to accommodate more movement, because there was a wide range of movement between the performers (maybe with more space, or more clearly defined space). It also needs to account for the time taken exploring that I mentioned above.