Cycle 2_Taylor

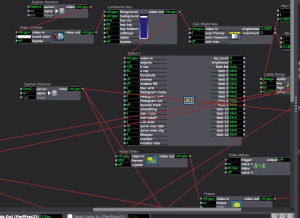

Posted: December 3, 2016. Filed under: Assignments, Isadora, Taylor.

This patch was created for use in my rehearsal, to give the dancers a look at themselves while they experimented with improvisation to create movement. During this phase of the process we were exploring habits in relation to limitations and anxiety. I asked the dancers to think about how their anxieties manifest physically, calling up the feelings of anxiety and using the patch to view themselves in real time, delayed time, and various freeze frames. From this exploration, the dancers were asked to create solos.

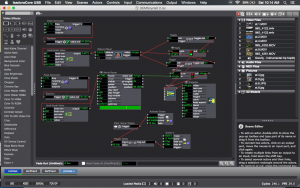

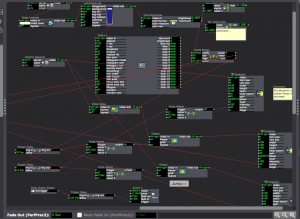

For cycle two, I worked out the bugs from the first cycle.

The main difference in this cycle was working with the Kinect. I used its depth camera to track the space and trigger the shift from the scenic footage to the live video feed, instead of using the live feed and tracking brightness levels.
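Isadora patches are wired visually rather than written as code, so the following is only a rough Python sketch of the kind of logic a depth-based switch could follow, not the patch itself. The function names, the depth range in millimetres, and the 10% threshold are all assumptions made for illustration.

```python
def presence_fraction(depth_mm, near_mm=500, far_mm=2500):
    """Fraction of depth pixels that fall inside the tracked zone.

    depth_mm is a 2D list of distances in millimetres (hypothetical input;
    the near/far limits are illustrative, not values from the patch).
    """
    total = 0
    inside = 0
    for row in depth_mm:
        for distance in row:
            total += 1
            if near_mm <= distance <= far_mm:
                inside += 1
    return inside / total if total else 0.0


def choose_source(depth_mm, threshold=0.1):
    """Return 'live' when enough of the frame is occupied, else 'scenic'."""
    return "live" if presence_fraction(depth_mm) >= threshold else "scenic"


# Tiny made-up frames: an empty room, then a dancer stepping into range.
empty_room = [[4000, 4000], [4000, 4000]]
dancer_enters = [[4000, 1200], [4000, 1300]]
print(choose_source(empty_room))
print(choose_source(dancer_enters))
```

Unlike a brightness threshold on the live feed, a depth range like this does not change with the projected light in the room, which is one plausible reason to prefer it.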

I also connected toggles and gates to a multimix to switch between pictures and video for the first scene, using pulse generators to randomize the images being played. For rehearsal purposes, these images are content drawn from the dancers’ lives, a technological representation of their memories. I am glad I was able to fix the glitch with the images and videos; before, they were not alternating. I was also able to use this in my previous patch, along with the Kinect depth camera, to switch the images based on movement instead of pulse generators.
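As a loose illustration of the switching idea, here is a Python sketch of one way to pick a new image or video on every pulse so that the same one never plays twice in a row. This is an assumption about the desired behavior, not a description of how the toggles and gates are actually wired; `next_channel` and the channel count are hypothetical.

```python
import random


def next_channel(current, channel_count, rng=random):
    """Pick a random channel index that differs from the current one."""
    if channel_count < 2:
        return current  # nothing to alternate with
    # Draw from one fewer slot, then skip over the current index.
    choice = rng.randrange(channel_count - 1)
    return choice if choice < current else choice + 1


# Simulate ten pulses across four hypothetical media channels.
channel = 0
for _ in range(10):
    channel = next_channel(channel, 4)
    print("now showing channel", channel)
```

The same function could be called from a movement trigger instead of a timed pulse, which mirrors the swap from pulse generators to the Kinect described above.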

Cycle 2 responses:

The participants liked not knowing that the system was candidly recording them. This was nice to hear because I had changed the amount of video delay so that the past self would come as more of a surprise. I felt that if the participants were unaware of the delayed playback, their interaction with the images and videos at the beginning would be more natural and less conscious of being watched (even though our class is also watching the interaction).

Participants (classmates) also thought that more surprises would be interesting, such as adding filters like dots or exploding to the live video feed, but I don’t know how this would fit into my original premise for creating the patch.

Another comment that I wrote down, but am still a little unsure about, dealt with the placement of performers and asked whether multiple screens might be effective. I did use the patch projecting on multiple screens in my rehearsal. It was interesting how the performers were very concerned with the stages being produced, letting that drive their movement, but were also able to stay connected with the group in the real space because they could see the stages from multiple angles. This allowed them to be present in both the virtual and the real space during their performance.

I was also excited about the movement that the participants generated. I am becoming more and more interested in getting people to move in ways they would not normally, and I think that with more development this system could help to achieve that.

link to cycle 2 and rehearsal patches: https://osu.box.com/s/5qv9tixqv3pcuma67u2w95jr115k5p0o (also has unedited rehearsal footage from showing)