Magic Window

Posted: December 14, 2018 Filed under: Uncategorized

This interactive window came on the heels of State of Swing, a video installation that I made for the Hopkins Hall Gallery. Making this, I considered reality’s complexity, and the filtering and framing we do to make meaning for ourselves.

In State of Swing, two videos play simultaneously, and filters hang on a pedestal for viewers to pick up and look through. Each filter blocks out one video and lets the other pass through.

While I find the simplicity of State of Swing captivating, I wanted to see what could be possible with Isadora while holding onto a similar concept: the audience controls what they see and becomes aware of how much happens that they don’t perceive.

As I thought about framing, reflecting, refracting, and obscuring, a window came to mind. I was excited by the idea that a window would reflect myself back at me when I was on its light side. I’m pocketing this idea because, while it didn’t become a part of this version of the installation, I hope it comes back!

I went to Columbus Architectural Salvage, found a dusty window, and tried to project onto it. The dust allowed me to see a faint projection on the window, but the Kinect could only sense the glass when it was perfectly perpendicular to its waves.

Meanwhile, I did some research about projecting onto glass and learned that there is a really expensive film that adheres to glass, making it a projectable AND TRANSPARENT surface. COOL! But the cheaper option was to paint buttermilk onto the glass. (No, it doesn’t smell at all once it dries.)

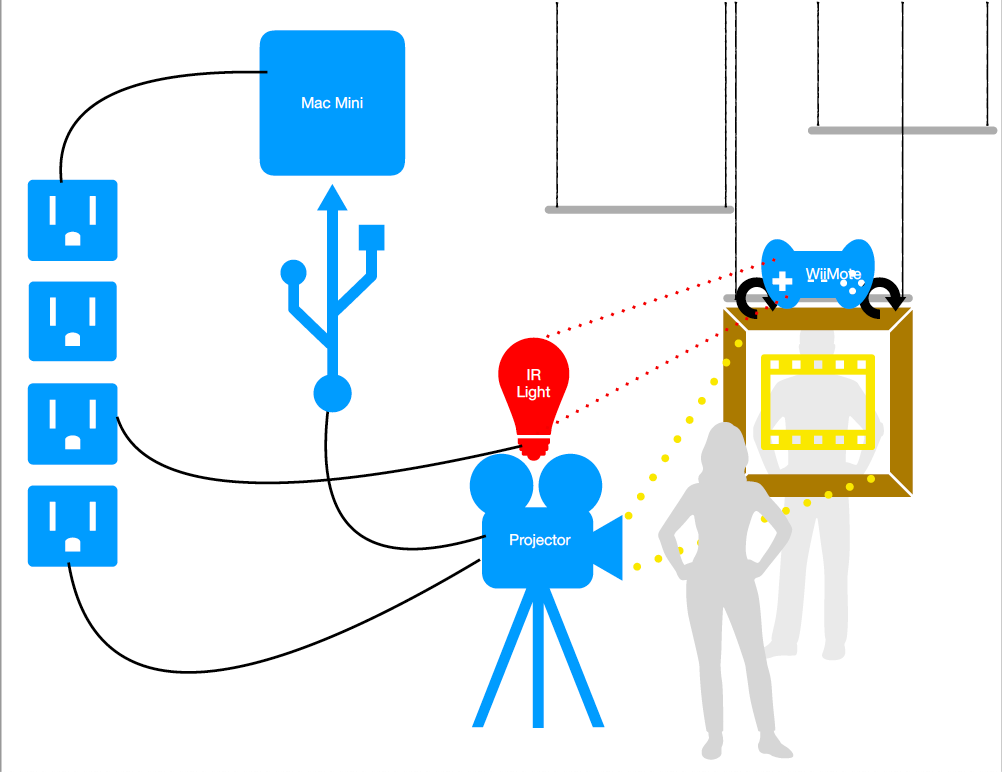

Even so, the surface must still have been too flat and reflective for the Kinect. So, Alex helped me figure out a WiiMote, and Malu helped me solder together a couple of small IR lights and connect them to the window. I’m glad I learned how to do this, but the lights weren’t bright enough and were too directional to get a consistent reading from the WiiMote. Luckily, the MoLa had a couple of IR lights, and we turned the system around.

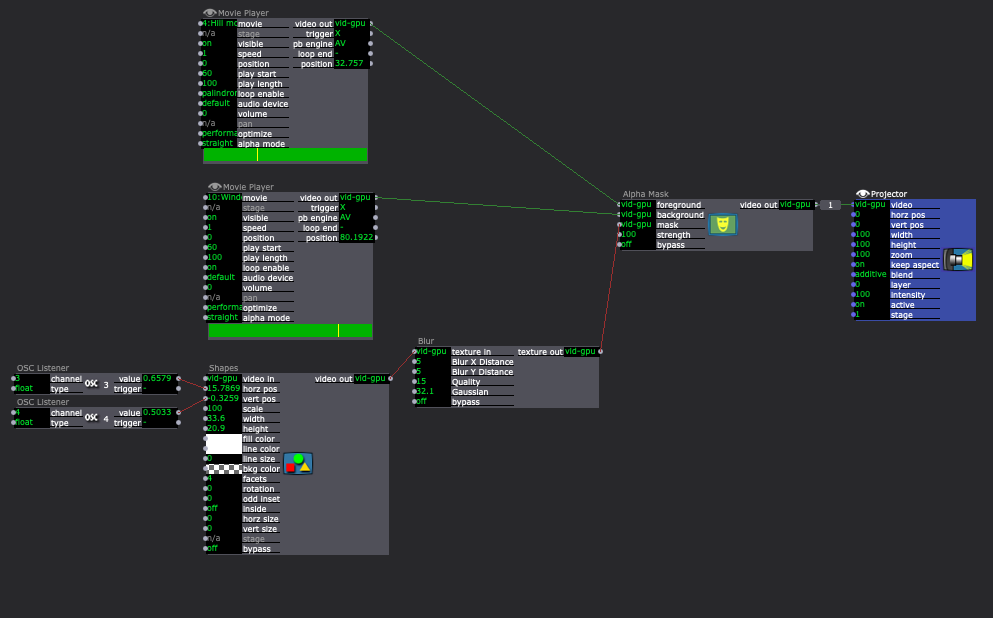

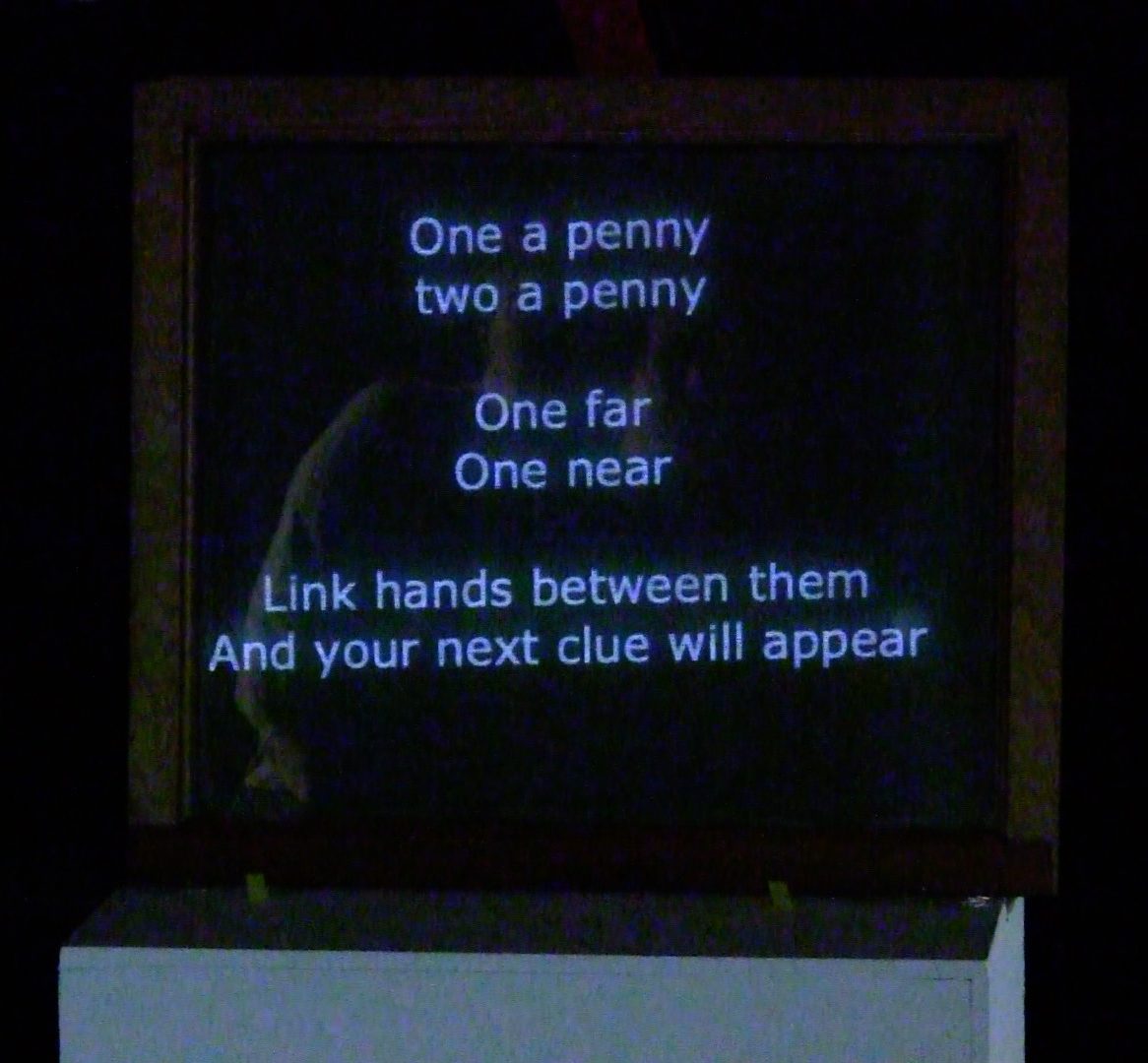

Now, the IR light sits on the projector and the WiiMote is attached to the window. The WiiMote sends its x and y coordinates to the computer via Bluetooth, and these coordinates connect to the Shapes actor in Isadora. Each scene has two videos playing. For example, one is a hill in the woods, and the other shows this same hill with people dancing. With the Shapes actor and an Alpha Mask actor, a user can aim the window (with the WiiMote attached) towards the IR light in order to see the dancers on the hill. Additionally, while holding the window, the user can move throughout the projection to see different parts of the image.
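To make the idea concrete, here is a minimal Python sketch of that mapping. It is not the Isadora patch itself: the function names are hypothetical, the 1024 by 768 grid is my assumption about what the WiiMote's IR camera reports, and the circular mask stands in for whatever shape the Shapes actor draws. The tracked position decides where the "hidden" video (the dancers) shows through the "base" video (the empty hill).

```python
# Hypothetical sketch: the real piece was patched in Isadora, not written in Python.
IR_WIDTH, IR_HEIGHT = 1024, 768  # assumed WiiMote IR camera grid


def ir_to_normalized(x, y):
    """Convert raw IR coordinates to 0.0-1.0 positions within the projection."""
    nx = min(max(x / (IR_WIDTH - 1), 0.0), 1.0)
    ny = min(max(y / (IR_HEIGHT - 1), 0.0), 1.0)
    return nx, ny


def composite(base, hidden, center, radius):
    """Show `hidden` inside a circular mask around `center`, `base` elsewhere.

    `base` and `hidden` are equally sized 2D lists of pixel values;
    `center` is a normalized (x, y) pair and `radius` is in pixels.
    """
    rows, cols = len(base), len(base[0])
    cx, cy = center[0] * (cols - 1), center[1] * (rows - 1)
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            inside = (c - cx) ** 2 + (r - cy) ** 2 <= radius ** 2
            row.append(hidden[r][c] if inside else base[r][c])
        out.append(row)
    return out
```

Because the camera rides on the window rather than sitting still, one or both axes may need to be flipped before the mask lines up with where the window actually is.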

Then, I created four places where the window would hang. Using a Makey Makey, each hanging place changed the video projection by jumping to another scene. I mapped the projection to land only on the window while the Makey Makey circuit was closed. Then, once the window is lifted off of that hanging place, the image expands to fill the space and the user can move around to reveal new parts of the image. If they set the window down in a different place, the scene will change and the process begins again.

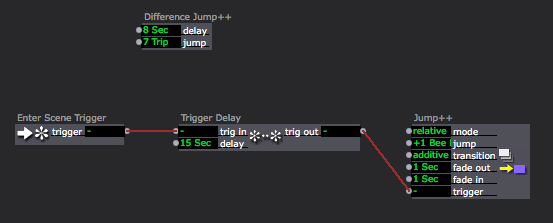

The Makey Makey works like an external keyboard. Closing the circuit functions like typing a letter. Turning the Projector Actors on and off with up and down key strokes (closing and opening the Makey Makey Circuits) was a little tricky. The closed circuit repeatedly sent the “down” signal. The image below shows a solution to this problem.
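One common way to handle a repeating key signal is to react only when the state changes, and to ignore every repeat in between. The Python sketch below shows that idea in general terms. It is an illustration with a hypothetical class name, and it is not necessarily the same approach as the Isadora patch I used.

```python
class EdgeTrigger:
    """Turn a repeating key-down stream into one 'pressed' and one 'released' event."""

    def __init__(self):
        self.is_down = False  # last known state of the circuit

    def update(self, key_is_down):
        """Return 'pressed', 'released', or None for each incoming key state."""
        if key_is_down and not self.is_down:
            self.is_down = True
            return "pressed"
        if not key_is_down and self.is_down:
            self.is_down = False
            return "released"
        return None  # a repeat of the current state, so nothing happens
```

With this in place, a window resting on its hanger would produce a single "pressed" event no matter how many times the closed circuit repeats the key, and a single "released" event when the window is lifted.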

See it for yourself! This video contains some user interaction, and more of me explaining how to use the system and how it works.

Line Describing a Cone

Posted: December 3, 2018 Filed under: Uncategorized

I’ve been meaning to post this for a while. This is Line Describing a Cone by Anthony McCall. It is a 30-minute 16mm film that is projected with a fog machine, making the light appear solid.

PP3: A Hidden Mystery

Posted: November 13, 2018 Filed under: Uncategorized

For Pressure Project 3 we were to reveal a hidden mystery in 3 minutes without a keyboard or mouse. Bonus levels were to make it unique to each user, have users move in a larger environment, and for users to express delight in the search and reveal.

Rather than making it unique to each user, I wanted to make an experience in which users must work together to reveal the mystery, and decided to make a treasure hunt.

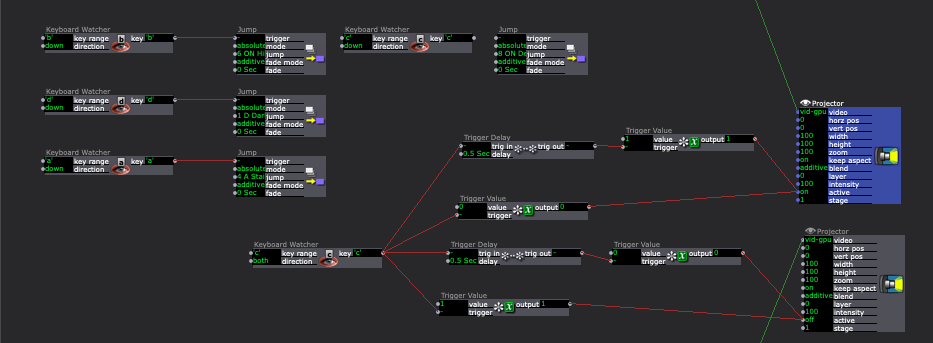

I chose to start with putting a penny into a jar to signify the investment. The Makey Makey is attached to the penny and the tin foil that lines the jar. When the penny enters the jar, it triggers the next scene. In this scene, a video is projected onto a white box, and my voice (which has been slowed down) explains the next clue. They are to find the window and set it on top of the white box. The window has a strip of tin foil on the bottom, so when they set it on the box, the tin foil closes the circuit and triggers the next scene. (If you look at the image to the right, I have wires set up on either side of where they set the frame. These wires connect to the Makey Makey.)

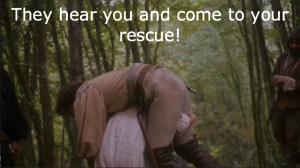

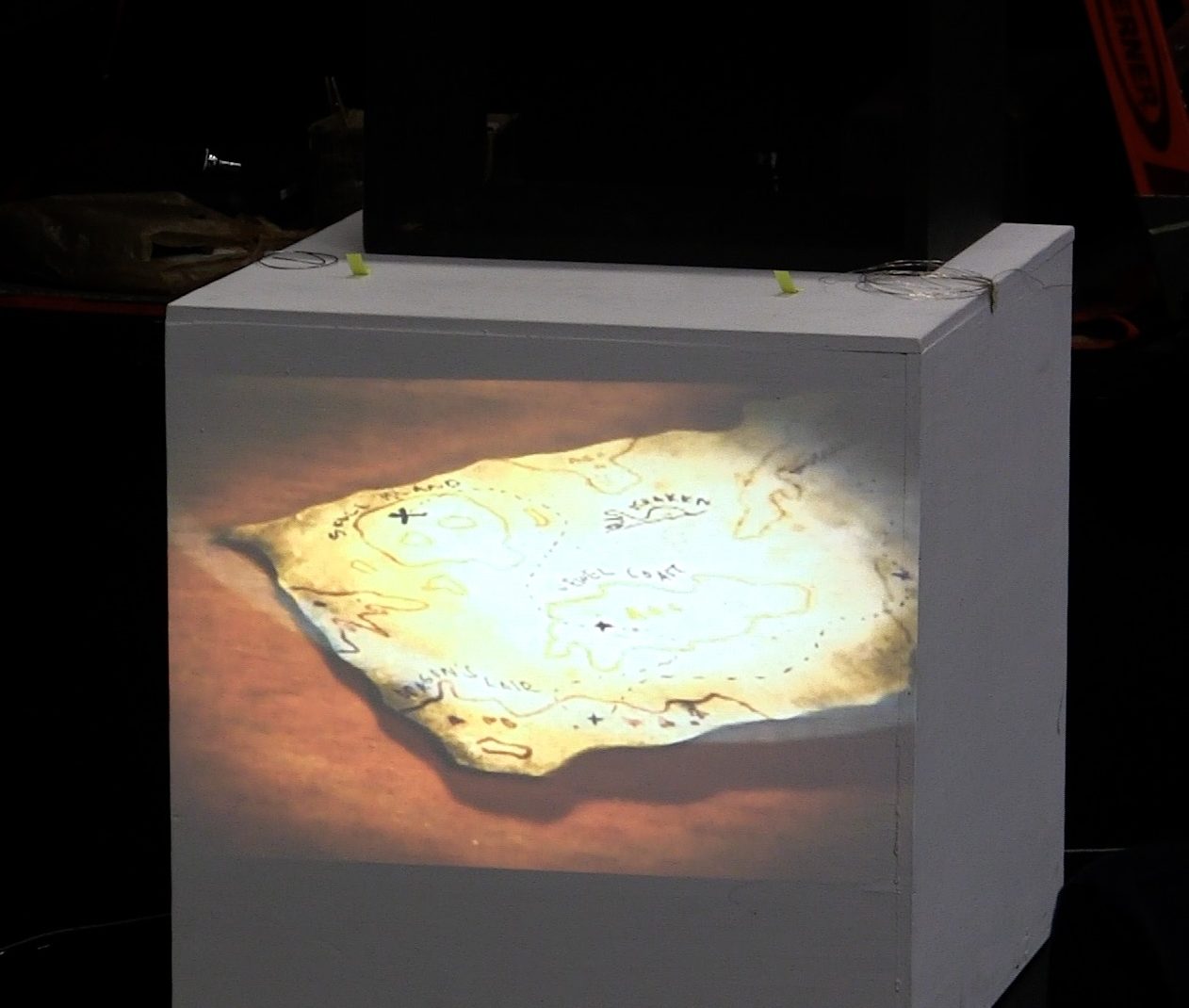

In the next scene, the voice gives some vague directions that become more clear when the projection appears on the window. The users figure out that they need to link hands in order to close the Makey Makey circuit this time. This is the moment when the whole group needs to work together.

In this instance it would have been helpful to use something besides a penny, because the penny used in the first scene got mixed in with the pennies used in this scene.

Then, the next scene provides another riddle. It is pictured on the right.

They figured out that they needed to go back to the jar where they began. Taking the penny out of the jar triggered the next scene. I crossed my fingers that they would get the “Dig” message, but it wasn’t clear enough. In hindsight, this scene might have had the voice tell them to keep digging. While we did it, however, I told them to think about the clue, and they figured out that they needed to go into the jar to find the treasure!

I definitely think that they expressed delight as they uncovered the treasure! Inside the jar, but obscured by the tin foil, I had hidden a bunch of chocolate coins.

To watch the experience, check out this video!

Schematic and Schedule

Posted: November 8, 2018 Filed under: Uncategorized

| Date | Class session | Tasks |
| --- | --- | --- |
| 11/1 | Laboratory for Cycle 2 | Set up other computer. Intro to WiiMote. Try regular (not short throw) projector. Weekend: Make patching with WiiMote. Buy and prepare materials for hanging the window. |
| 11/6 | Presentation of ideation and current state of Prototypes | Try patches with window. Hang window bars. Map projections. |
| 11/8 | Laboratory for Cycle 2 | Figure out dimensions in final cut for projections to land on window locations. Continue figuring out WiiMote/mapping projections as necessary. Weekend: Edit video (maybe shoot more?) and set them up in patches. |
| 11/13 | Last second problem solving | Continue figuring out WiiMote/mapping projection as necessary. |
| 11/15 | Cycle 2 Performance | |
| 11/20 | Critique | |
| 11/22 | TURKEY TIME | |
| 11/27 | Laboratory for Final Cycle | Figure out how the computers communicate (if necessary for Makey Makey signals to reach patches). |
| 11/29 | Rehearsal of public performance | Set up Makey Makey with wires for window hangers. Program triggers with Makey Makey. |
| 12/4 | Last second problem solving | |
| 12/6th or 7th | Student Choice: Public Performance at Class Time or Final Time | |
| 12/? | Official final time: Friday Dec 7 4:00pm-5:45pm | |

Pressure Project 2 (A Walk in the Woods)

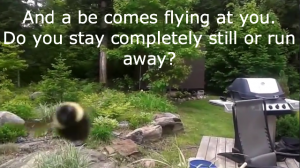

Posted: October 4, 2018 Filed under: Uncategorized

I wanted to get away from the keyboard for this assignment, and make something that asked people to move and make noise. I considered making some sort of mock fight that got people to dodge or gesture to certain sides of their body, but I didn’t want to make anything violent. Serendipitously, I was at an outdoor gathering while I pondered what to make, and this party was highly attended by bees. So, it began: a walk in the woods. I thought I would tell people that things (like bees) were coming at them from a certain angle and then ask them to either dodge or gesture it away. I thought I would use the Crop actor to crop portions of what my camera was seeing, and read where the movement was to trigger the next scene.

Less serendipitously (in some ways), I was at this party because my partner’s sister’s wedding was the day before, and I was in the wedding party. This meant that I had 2 travel days, a rehearsal dinner, an entire day of getting ready for a wedding (who knew it could take so long?), and the day-after party. WHEN WAS I SUPPOSED TO DO MY WORK?!

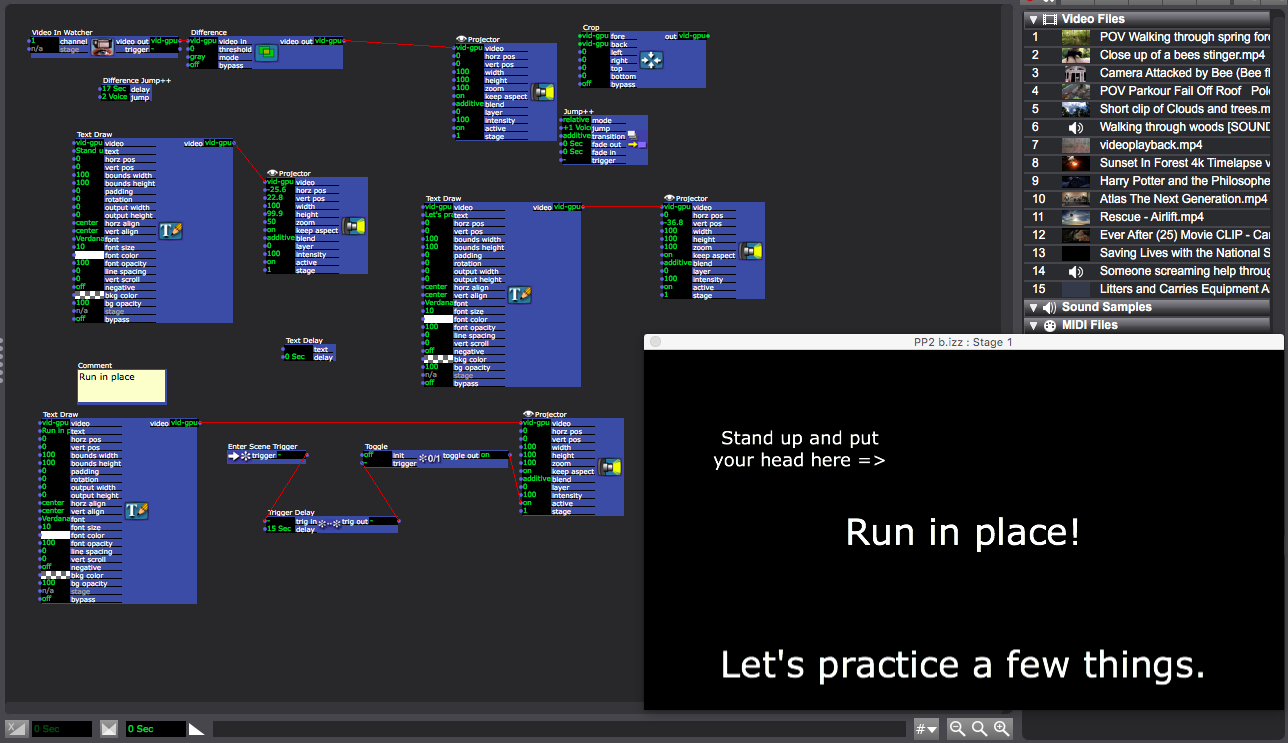

So, to simplify, I decided to not worry about cropping, and to trigger through movement, lack of movement, sound, or lack of sound. Then, the fun began! Making it work.

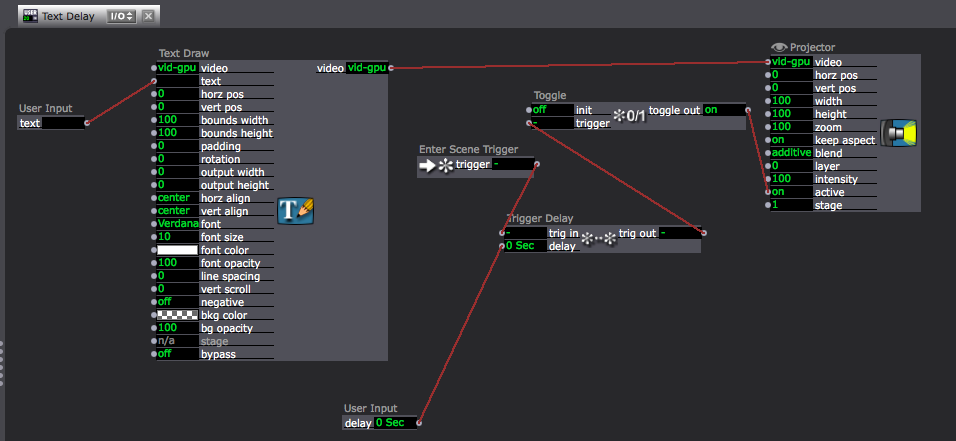

I decided to start with a training that would give the user hints/instructions/practice for how to continue. This also gave me a chance to figure out the patches without worrying about the content of the story. Using Text Draw, I instructed the user to stand up, and told them that they would practice a few things. Then, more words came in to tell the user to run. I created a user actor that would delay text, because I planned to use this in multiple scenes. The user actor has an Enter Scene Trigger connected to a Trigger Delay, which connects to a Toggle actor that toggles the projector’s activity between on and off. Since I set the projector to initialize “off” and the Enter Scene Trigger only sends one trigger, the projector’s activity toggles from “off” to “on.” Then, I connected User Input actors to “text” in the Text Draw and “delay” in the Trigger Delay actor. This allows me to change the text and the amount of time before it appears every time I use this user actor.
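For readers who think in code rather than patch cords, the same chain can be sketched in a few lines of Python. This is only an analogy for the user actor, with a hypothetical class name: the two constructor arguments play the role of the two user inputs, and the single toggle mirrors the one trigger sent on entering the scene.

```python
class DelayedText:
    """Keep a line of text hidden until `delay` seconds after the scene starts."""

    def __init__(self, text, delay):
        self.text = text      # plays the role of the "text" user input
        self.delay = delay    # plays the role of the "delay" user input
        self.active = False   # the projector initializes "off"
        self.fired = False    # the enter-scene trigger only fires once

    def update(self, seconds_since_scene_start):
        """Toggle the projector on once the delay has elapsed; return the visible text."""
        if not self.fired and seconds_since_scene_start >= self.delay:
            self.active = not self.active  # a single toggle: off -> on
            self.fired = True
        return self.text if self.active else ""
```

Because the text and delay are parameters, the same piece can be reused in every scene with different values, which is the point of wrapping it in a user actor.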

I also made user actors that trigger with sounds and with movement. I’ll walk through each of these below.

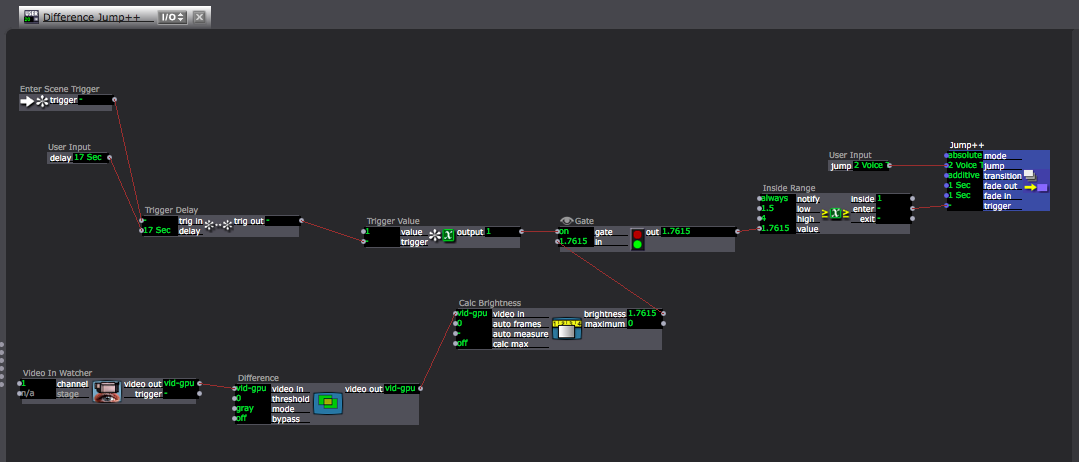

Any story that prompted them to run or stay still used a user actor that I called “Difference Jump++.” Here, I used the Difference actor and the Calc Brightness actor to measure how much movement takes place. (Note: the light in the space really mattered, and this may have been part of what made this not function as planned. However, because this was in a user actor, I could go into the space before showing it and change the values in the Inside Range actor, and it would change these values in EVERY SCENE! I was pretty proud of this, but it still wasn’t working quite right when I showed it.)
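The logic of that chain can be approximated in Python as follows. This is a sketch of the general technique (frame differencing, then average brightness, then a range test), not a copy of the Isadora actors: the function names are hypothetical, the 0 to 100 brightness scale is an assumption, and the threshold values are made up for illustration.

```python
def frame_difference(previous, current):
    """Per-pixel absolute difference between two grayscale frames (values 0-255)."""
    return [
        [abs(c - p) for p, c in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


def brightness_percent(frame):
    """Average pixel value of a frame, scaled to 0-100."""
    pixels = [value for row in frame for value in row]
    return 100.0 * sum(pixels) / (255 * len(pixels))


def inside_range(value, low, high):
    """True when `value` falls within the inclusive range [low, high]."""
    return low <= value <= high


# Hypothetical thresholds; in practice they depend on the lighting in the room.
MOVEMENT_LOW, MOVEMENT_HIGH = 5.0, 100.0


def movement_detected(previous, current):
    """Report whether the change between two frames counts as movement."""
    amount = brightness_percent(frame_difference(previous, current))
    return inside_range(amount, MOVEMENT_LOW, MOVEMENT_HIGH)
```

Keeping the thresholds in one place is the code equivalent of editing the Inside Range values once inside the user actor and having every scene pick up the change.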

I used the Gate actor because I wanted to use movement to trigger this scene, but the scene starts by asking the user to stand up, so I didn’t want movement to trigger the Jump++ actor until they were set up. So, I set this up similarly to the Text Delay user actor, and used the Gate actor to block the connection between Calc Brightness and Inside Range until 17 seconds into the scene. (17 seconds was too long, and something on the screen that showed a countdown would have helped the user know what was going on.)
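In code terms, the gate is just a time check placed in front of the range test. The Python sketch below uses a hypothetical class name and also includes the countdown value that I wished I had shown on screen; it illustrates the idea rather than reproducing the Isadora patch.

```python
class TimedGate:
    """Block values until `open_after` seconds into the scene, then pass them through."""

    def __init__(self, open_after):
        self.open_after = open_after

    def filter(self, seconds_since_scene_start, value):
        """Return `value` once the gate is open, otherwise None."""
        if seconds_since_scene_start >= self.open_after:
            return value
        return None

    def remaining(self, seconds_since_scene_start):
        """Seconds left before the gate opens, for an on-screen countdown."""
        return max(0.0, self.open_after - seconds_since_scene_start)
```

A shorter `open_after` value plus a visible `remaining` readout would address both problems noted above.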

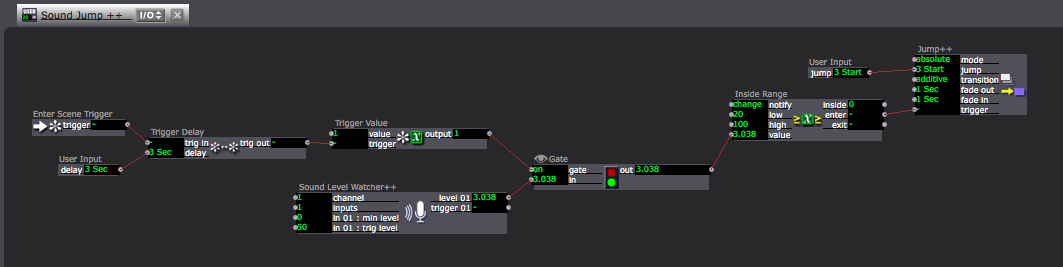

So, with this, the first scene was built (showing the dancer that their running action can trigger changes). The next scene trained the user that their voice can also trigger changes. For this, I built a user actor that I called “Sound Jump++.” This functions pretty similarly to the Difference Jump++ user actor.

So, the trigger for most of the scenes is either movement, stillness, sound, or silence. I’ve explained how movement and sound trigger the next scene, but stillness and silence are the absence of movement and sound. So, in addition to including a Difference Jump++ user actor and/or a Sound Jump++ user actor, I had an Enter Scene Trigger connected to a Trigger Delay connected to a Jump++ actor. If the user had not triggered a scene change by the time the Trigger Delay triggered the next scene, it was assumed that the user had chosen stillness and/or silence.

Then, which scene we jumped to depended on how the scene change was triggered.
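The branching can be summarized as "first trigger wins, and no trigger by the deadline means stillness or silence." Here is a small Python sketch of that rule. The function name, the event format, and the scene names in the usage note are all hypothetical and only loosely echo the story.

```python
def choose_next_scene(events, timeout, on_movement, on_sound, on_timeout):
    """Pick the next scene from the first trigger that arrives before `timeout`.

    `events` is a list of (seconds, kind) pairs, with kind "movement" or "sound".
    If nothing arrives in time, stillness/silence is assumed.
    """
    for seconds, kind in sorted(events):
        if seconds >= timeout:
            break  # the delayed jump has already fired
        if kind == "movement":
            return on_movement
        if kind == "sound":
            return on_sound
    return on_timeout
```

For example, `choose_next_scene([(4.0, "sound"), (2.5, "movement")], 10.0, "bear", "bees", "rescue")` picks the movement branch, because movement arrived first. Writing the rule out this way also makes it easier to check each scene's jump targets one at a time.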

Next, I came up with a story line involving bears, bees, robots, mythical creatures, airlift rescues, search and rescue units, and I downloaded videos and sounds to represent these events. Unfortunately, I either had things jumping to the wrong scenes, or Inside Range values were set incorrectly, because the performance of this “choose your own adventure” story didn’t function, and we kept getting the same storyline when we tried it in class. I learned a lot in the process of building this though!

PP1

Posted: September 23, 2018 Filed under: Brianna Rae Johnson, Pressure Project I

Ok, so a story of cultural significance with sound…

I think I fixated on a story of cultural significance for me (in my lifetime). I started researching by watching hours of news footage from the morning of September 11, and processed the events as if for the first time. I remember feeling shocked, but it all felt surreal. There were so many pieces that I couldn’t understand as a 14-year-old.

I watched stories about people who were in the towers and their families. I listened to peoples’ screams as they watched the second plane hit. I learned about another plane that went down in a field. I listened to voicemails from people who were on the planes.

I remember Mr. McKegg’s (the principal’s) voice coming over the intercom to announce that there had been a terrorist attack. Two years after the Columbine shooting, I was among many who initially thought that our school had been attacked. I probably felt relieved to learn that the attack was so far away! I remember watching the second plane hit from the health classroom at my middle school. I remember TVs being on all day at school. I remember the World Trade Center happening to be pictured in our math books on the page that we happened to be covering that day. I remember feeling somewhat pleasantly surprised by the coincidence. I remember getting off the bus and my mom, exasperated, asking if my brother and I were ok. I remember feeling like I should be more emotionally affected.

Now, in 2018, I think I understand the exasperation that I didn’t feel at 14, and if I could be this affected by listening to these voices, screams, and reports, I think others might be too. Is it ethical to put the unsuspecting DEMS group through this without them knowing what they will hear? Should I pick something else? I hadn’t come up with a different concept, and didn’t feel like I had time, so I leaned in and crossed my fingers that it would be ok. I tried to dampen the impact of the sound by locating it within my school day. I hoped to tell the story from my 14-year-old perspective, but didn’t want to make light of it either. However, we are not middle schoolers anymore, and we all have our own memories of the event.

On YouTube, I found the sounds of electric school bells, the Pledge of Allegiance, gym class, and kids’ voices to start it off, and began playing with how much of each clip to listen to and at what volume. Then, I went into the sounds of the event. Trying not to make light of it, I think I went heavier than necessary. I wanted the sound of the plane hitting because I remember the visual, but that part is pretty intense. Then, silence, the sounds of TV static, the sound of an old TV clicking off, and more silence. Finally, it drops into math class saying, “To simplify, we can only combine what we call our like terms.” Not only was this math class, but it seems like a nod towards the political climate that grew out of 9/11 and heightened perceptions of sameness and difference.

But what about showing this to a bunch of people who don’t know what they are in for? I debated giving a trigger warning, but also knew that it would impact their experience. Then, in the moment, I pushed play and suddenly realized that I hadn’t said anything. I crossed my fingers harder.

For the most part, people heard what I hoped they would hear, but they heard their own experience of it too. I told my story, and they understood, but they seemed to feel their own story. People have their own associations with familiar sounds.

So, here it is. Listen if you’d like.