Cycle 1

Posted: December 13, 2019 Filed under: Uncategorized

I changed my project idea many times leading up to Cycle 1. So, for Cycle 1, I pitched to the class the project I had finally decided to work on.

I chose to do a Naruto-inspired “game” that tracks the position of a user’s hands and then does something when the correct sequence of hand signals is “recognized”.

I wanted to use Google’s hand tracking library, but at the suggestion of the class I opted to use the much better Leap Motion controller to do the hand tracking. The Leap Motion controller has a frame rate of 60 frames per second, which makes it ideal for my use case.

Secondly, I had the idea of using OSC to send messages to the lightboard when a jutsu was successfully completed.
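One way the OSC idea could look in code is sketched below. It hand-encodes a single-integer OSC message and sends it over UDP using only Python's standard library. The address `/jutsu/completed`, the host, and the port are hypothetical placeholders, since the lightboard's real OSC addresses are not given here. This is an illustration, not the project's code.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: int) -> bytes:
    """Build an OSC message carrying one 32-bit big-endian integer argument."""
    return _osc_string(address) + _osc_string(",i") + struct.pack(">i", value)


def send_osc(host: str, port: int, address: str, value: int) -> None:
    """Send the message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, value), (host, port))


# Hypothetical usage: tell the lightboard that jutsu number 1 completed.
# send_osc("192.168.0.10", 8000, "/jutsu/completed", 1)
```

The send call is left commented out because the target host and port are assumptions.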

Short presentation below:

Cycle 2 – Hand tracking algo

Posted: December 13, 2019 Filed under: Uncategorized

I spent the majority of the time leading up to this cycle devising a hand-tracking algorithm that could efficiently and robustly track the position of the fingers on one hand. I tried three approaches:

Approach 1: Get the position of the palm of the hand by polling the Leap Motion controller. Then subtract the palm position from each finger position to get the finger's position relative to the palm.

Pros: Consistent, handles varying distance from the Leap Motion controller well

Cons: Gives bad data at certain orientations
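A minimal sketch of Approach 1, assuming the palm and fingertip positions arrive as plain `(x, y, z)` tuples. The function name and data layout are hypothetical, and no Leap Motion calls are shown.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def relative_to_palm(palm: Vec3, fingertips: Dict[str, Vec3]) -> Dict[str, Vec3]:
    """Return each fingertip position with the palm position subtracted."""
    return {
        name: (tip[0] - palm[0], tip[1] - palm[1], tip[2] - palm[2])
        for name, tip in fingertips.items()
    }


# Moving the whole hand shifts the palm and the fingertip by the same amount,
# so the palm-relative position stays the same.
near = relative_to_palm((0.0, 200.0, 0.0), {"index": (10.0, 250.0, -40.0)})
far = relative_to_palm((30.0, 150.0, 20.0), {"index": (40.0, 200.0, -20.0)})
```

The subtraction removes translation only. Rotating the hand still changes the relative vectors, which may be related to the bad data seen at certain orientations.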

Approach 2: Compute the differences between the finger positions. These deltas give the fingers' positions relative to each other.

Pros: Works well at most hand orientations

Cons: Not as good when the fingers are close together
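Approach 2 could be sketched as follows, again assuming fingertip positions are plain `(x, y, z)` tuples keyed by hypothetical finger names.

```python
from itertools import combinations
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def pairwise_deltas(fingertips: Dict[str, Vec3]) -> Dict[Tuple[str, str], Vec3]:
    """Return the difference vector (b - a) for every unordered pair of fingers."""
    return {
        (a, b): tuple(fingertips[b][i] - fingertips[a][i] for i in range(3))
        for a, b in combinations(fingertips, 2)
    }


hand = {
    "thumb": (-40.0, 180.0, 10.0),
    "index": (-10.0, 240.0, -30.0),
    "middle": (5.0, 250.0, -35.0),
    "ring": (20.0, 240.0, -30.0),
    "pinky": (35.0, 220.0, -15.0),
}
deltas = pairwise_deltas(hand)  # five fingers give ten pairs
```

When two fingertips sit close together their delta is near zero, so small tracking noise can dominate it. That fits the weakness listed above.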

Approach 3: Check whether each finger is extended and generate a unique code for each frame from which fingers are extended. This is the approach I went with. It is much easier to program because it abstracts away a lot of the calculations involved. It also ended up being arguably the most robust of the three because it relies only on Leap Motion’s API.

Pros: Very robust, easy to program, works well at most orientations

Cons: Will have to make hand signals simpler to effectively use this approach.
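One simple way to build such a per-frame code is a bitmask with one bit per finger. The sketch below assumes the extended/not-extended flags have already been read from the controller and are passed in as a dictionary. The bit order and names are assumptions, not necessarily the encoding used in the project.

```python
from typing import Dict

# Bit 0 is the thumb, bit 4 is the pinky (an arbitrary, assumed ordering).
FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def finger_code(extended: Dict[str, bool]) -> int:
    """Pack the five extended flags into an integer from 0 to 31."""
    code = 0
    for bit, name in enumerate(FINGERS):
        if extended.get(name, False):
            code |= 1 << bit
    return code


all_out = finger_code({name: True for name in FINGERS})
index_only = finger_code({"index": True})
```

Each of the 32 possible codes corresponds to exactly one combination of extended fingers, so comparing a frame against a known hand signal is a single integer comparison.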

Cycle 3 – One-Handed Ninjutsu

Posted: December 12, 2019 Filed under: Uncategorized

This project was inspired by an animated TV show that I used to watch in middle school: Naruto. In Naruto, the protagonists have special abilities that they can activate by making certain hand signals in quick succession. Example:

Almost all of the abilities in the show require two hands. Unfortunately, the Leap Motion controller that I used for this project did not perform well when two hands were in view, and it would have been extremely difficult for it to distinguish between two-handed signals. However, I feel that the Leap Motion was still the best tool for hand tracking because its 60 frames per second tracking was quite robust with one hand in view.

Some more inspirations:

I managed to program 6 different hand signals for the project:

Star, Fist, Stag, Trident, Crescent, and Uno

Star – All five fingers extended

Fist – No fingers extended (like a fist)

Uno – Index finger extended

Trident – Index, Middle, Ring extended

Crescent – Thumb and pinky extended

Stag – Index and pinky extended
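The six signals above can be written as a lookup table keyed by the set of extended fingers. This is a sketch with hypothetical names, and the project may represent the signals differently.

```python
from typing import Iterable, Optional

SIGNALS = {
    frozenset({"thumb", "index", "middle", "ring", "pinky"}): "star",
    frozenset(): "fist",
    frozenset({"index"}): "uno",
    frozenset({"index", "middle", "ring"}): "trident",
    frozenset({"thumb", "pinky"}): "crescent",
    frozenset({"index", "pinky"}): "stag",
}


def classify(extended_fingers: Iterable[str]) -> Optional[str]:
    """Return the signal name for a set of extended fingers, or None if unknown."""
    return SIGNALS.get(frozenset(extended_fingers))
```

Any finger combination outside the table, such as only the middle finger extended, returns `None` and can simply be ignored.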

The Jutsu that I programmed are as follows:

Fireball Jutsu – star, fist, trident, fist

Ice Storm Jutsu – uno, stag, fist, trident

Lightning Jutsu – stag, crescent, fist, star

Dark Jutsu – trident, fist, stag, uno

Poison Jutsu – uno, trident, crescent, star
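A hedged sketch of how these sequences might be recognized from a per-frame stream of signals follows. It assumes each frame yields a signal name or `None`, collapses a signal held over many frames into one entry, and clears its history after a match. The class and method names are hypothetical, and the project's actual matching logic may differ.

```python
from collections import deque
from typing import Optional

JUTSU = {
    ("star", "fist", "trident", "fist"): "Fireball Jutsu",
    ("uno", "stag", "fist", "trident"): "Ice Storm Jutsu",
    ("stag", "crescent", "fist", "star"): "Lightning Jutsu",
    ("trident", "fist", "stag", "uno"): "Dark Jutsu",
    ("uno", "trident", "crescent", "star"): "Poison Jutsu",
}


class JutsuRecognizer:
    def __init__(self, length: int = 4) -> None:
        # Only the most recent `length` distinct signals are kept.
        self.history = deque(maxlen=length)

    def feed(self, signal: Optional[str]) -> Optional[str]:
        """Feed one frame's signal; return a jutsu name when a sequence completes."""
        if signal is None:
            return None  # unrecognized hand shape, ignore the frame
        if self.history and self.history[-1] == signal:
            return None  # same signal still being held
        self.history.append(signal)
        jutsu = JUTSU.get(tuple(self.history))
        if jutsu is not None:
            self.history.clear()  # start fresh for the next jutsu
        return jutsu


recognizer = JutsuRecognizer()
frames = ["star"] * 3 + [None] + ["fist"] * 2 + ["trident"] * 2 + ["fist"]
results = [recognizer.feed(frame) for frame in frames]
```

At 60 frames per second the hand passes through in-between shapes while changing signals, so a real version would probably also require a signal to be held for a few frames before accepting it.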

There were two big challenges for this project. The first was devising a hand-signal algorithm that was robust enough and also efficient enough not to interfere with the program’s high frame rate.

The second was animations and art. Until they start working on a game, I don’t think people realize how much work goes into animating things and how laborious the process is. That was a big time sink for me, and if I had more time I definitely would have improved the animation quality.

Here it is in action: https://dems.asc.ohio-state.edu/wp-content/uploads/2019/12/IMG_8437.mov

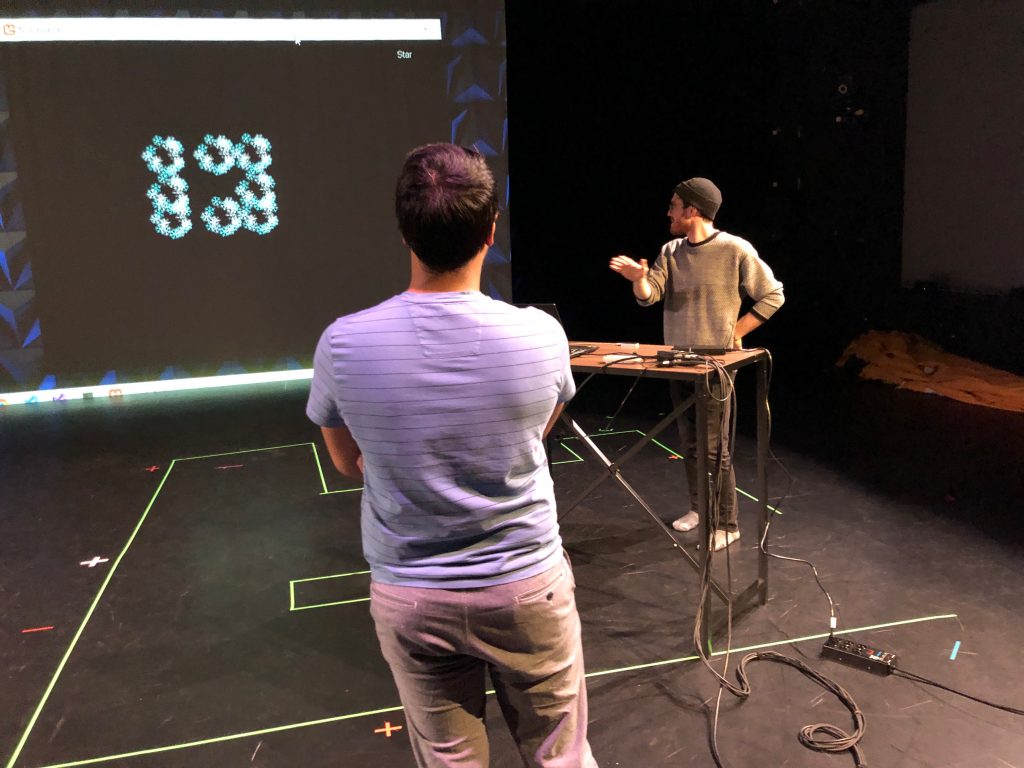

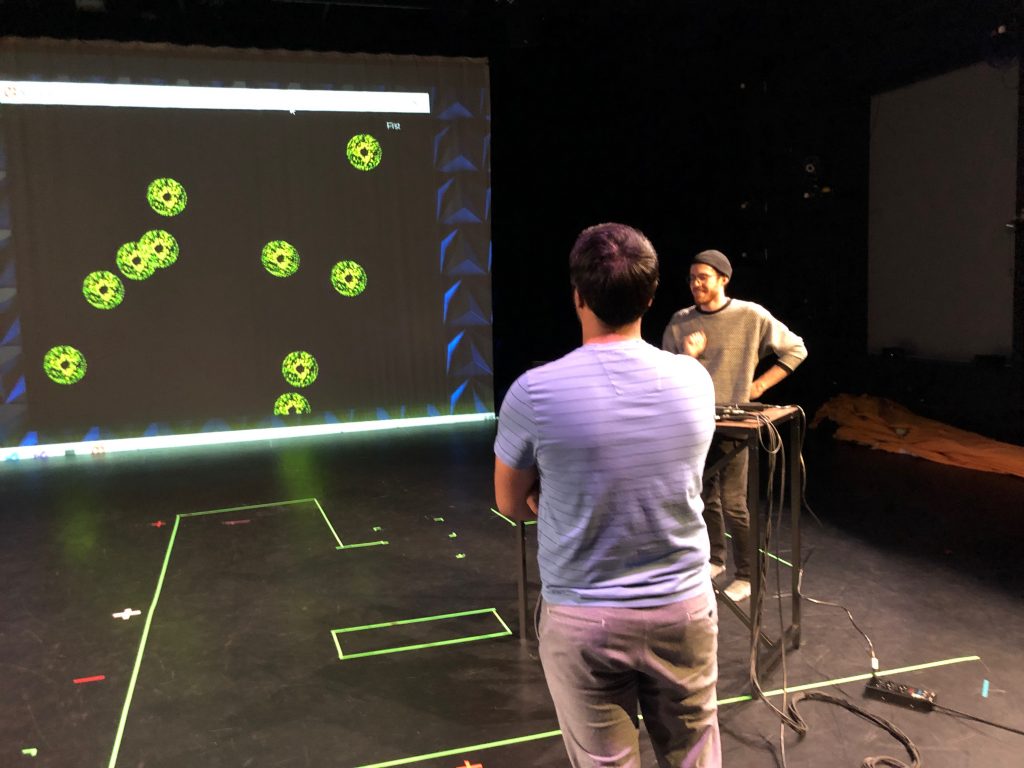

Some pics:

You can find the source code here: https://github.com/Harmanjit759/ninjaGame

NOTE: You must have MonoGame, the Leap Motion SDK, and the C++ 2011 redistributable installed on your machine to be able to test out the program.

Pressure Project 3: Magic Words

Posted: October 21, 2019 Filed under: Uncategorized

For this pressure project I wanted to move away from Isadora, since I prefer text-based programming and am more comfortable with it. I also experienced frequent crashes and glitches with Isadora, and I felt I would have a better experience with different technology.

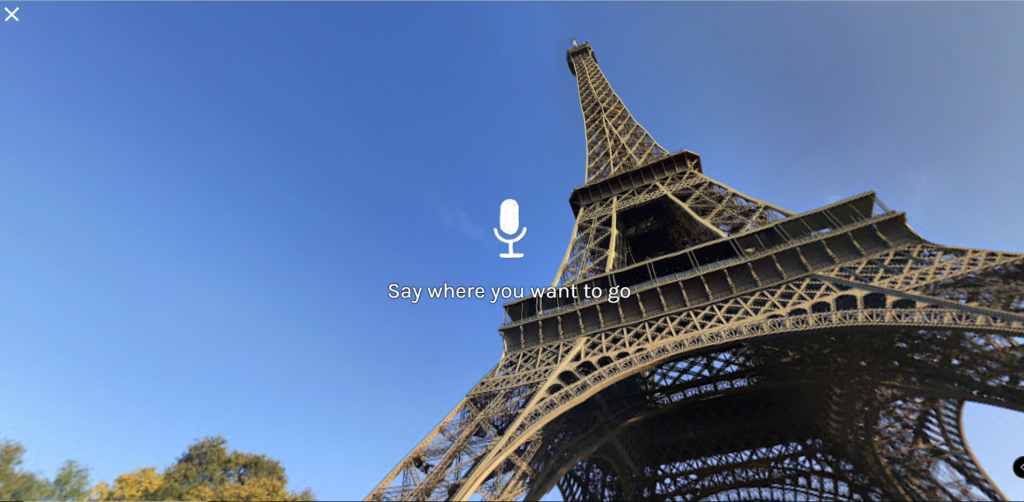

For this experience, we had to reveal a mystery in under 3 minutes. I spent some time searching the web for cool APIs and frameworks that could help me achieve this task. Something that ended up inspiring me was Google’s Speak to Go experiment with the Web Speech API.

You can check it out here: https://speaktogo.withgoogle.com/

I found this little experience very amusing and I wondered how they did the audio processing. That’s how I stumbled upon the Web Speech API and the initial idea for the pressure project was conceived.

I had initially planned on using 3D models with three.js that would reveal items contained inside them. The 3D models would be wrapped presents, each triggered by a magic word. They would then open or explode and reveal a mystery inside. However, I ran into a lot of issues with loading the 3D models and with CORS restrictions, and I decided that I did not have enough time to accomplish what I had originally intended.

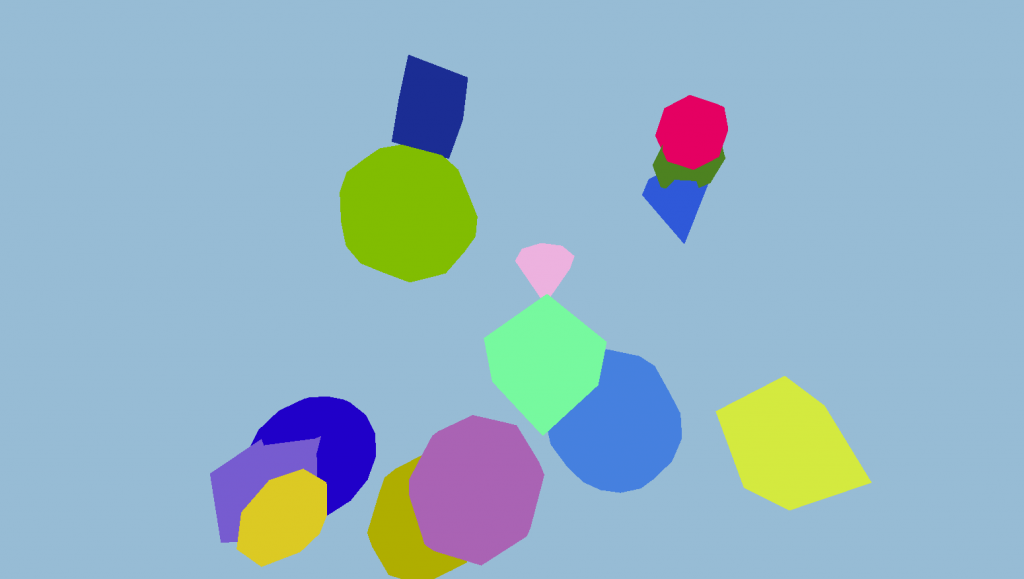

So, in the end I decided to use the basic 3D objects that are included in three.js and have them perform certain actions when triggered. The mystery is which specific words cause an action and what action each one causes (since some are rather subtle).

You can get the source code here: https://drive.google.com/file/d/1RbOzD3Ktrbp2VpqDNj6SQSWb83hOEA2U/view?usp=sharing

PP2 Documentation – Harman

Posted: September 29, 2019 Filed under: Uncategorized

For this project I thought it would be best to tell a story that everybody would recognize. That way it would be easier for the audience to piece together missing information. The story I chose was the 3 little pigs.

I thought it would be interesting to tell the story by mashing up audio clips from various movies. I used getyarn.io to find video clips based on the dialogue I needed to tell the story.

While I think it would have sufficed to stick with audio only, I had some free time and decided that using pictures wouldn’t hurt.

In the end what I created was a silly and somewhat random telling of the 3 little pigs.

Link: https://dems.asc.ohio-state.edu/wp-content/uploads/2019/09/PP2_Harman.zip

PP1 Documentation – Harmanjit

Posted: September 12, 2019 Filed under: Uncategorized

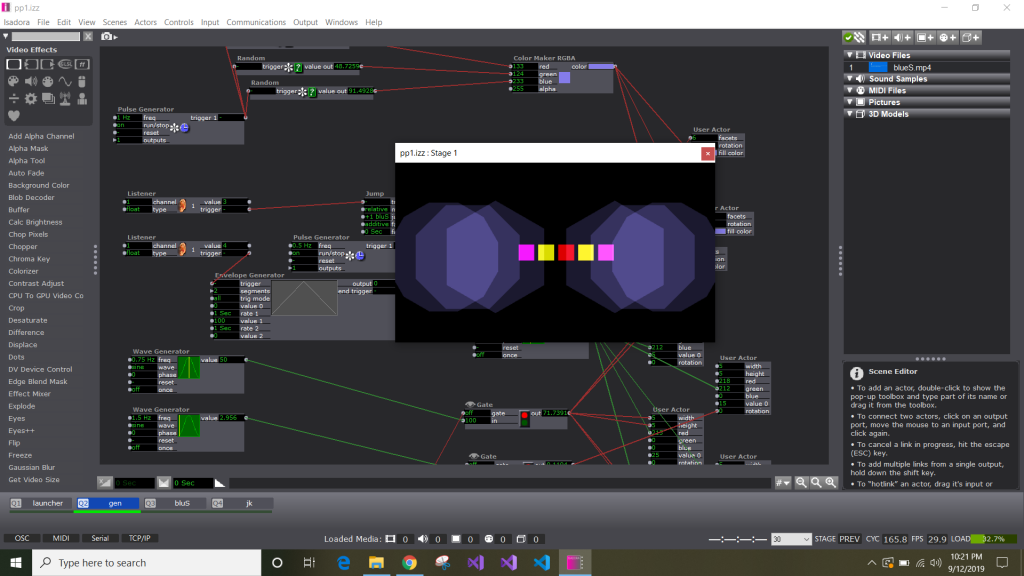

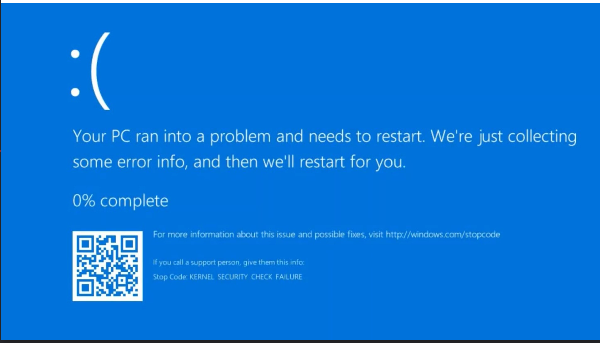

For this pressure project I decided to go in a different direction. I had a great idea in mind for how to hit all of the achievements for this project, such as making someone laugh, responding to broadcasters, and maintaining surprise. The idea was to have a fairly standard self-generating patch that would incrementally respond to network broadcasters. When it received a value of 3, it would display a Windows blue screen indicating that the program had crashed, only for it to be revealed as a joke a few seconds later.

Unfortunately, there was a problem with receiving the values from the network broadcasters and it didn’t go as planned.

I used random number generators for the color generation and the horizontal movement. The blue screen component was a separate scene that was accessed via a Listener -> Inside Range -> Jump chain. I also used toggle actors and gates to turn different effects on and off in response to network inputs.
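The listener, range check, and scene jump can be paraphrased in text-based form. The sketch below is only an analogy for the Isadora patch. The class, the scene names, and the choice of a range from 3 to 3 are assumptions based on the trigger value mentioned above.

```python
def inside_range(value: float, low: float, high: float) -> bool:
    """True when the value lies within [low, high], like a range-check actor."""
    return low <= value <= high


class Patch:
    def __init__(self) -> None:
        self.scene = "main"

    def on_broadcast(self, value: float) -> str:
        """Handle one value from a network broadcaster and return the active scene."""
        if inside_range(value, 3, 3):
            self.scene = "blue_screen"  # jump to the fake crash scene
        return self.scene


patch = Patch()
scenes = [patch.on_broadcast(v) for v in (1, 2, 3)]
```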