Cycle 1

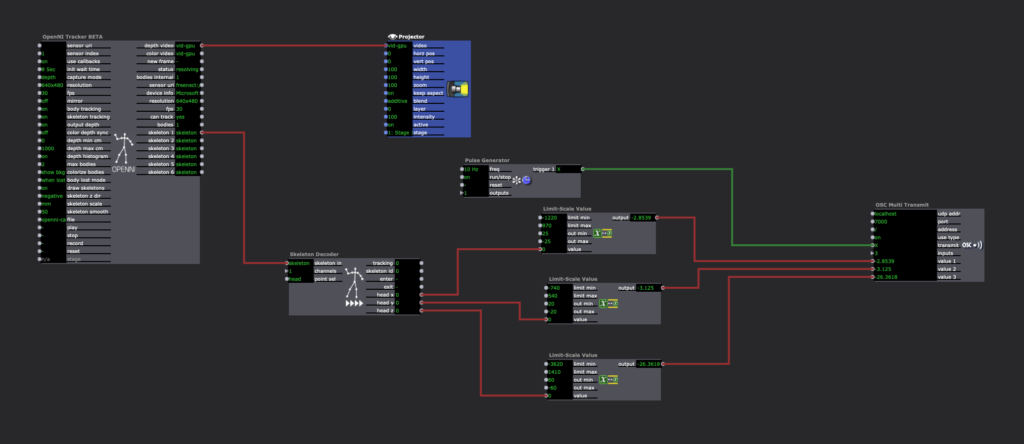

Posted: April 6, 2021 | Filed under: Nick Romanowski

My overall goal for this final project is to create a video-game-like “shooting” environment that uses Leap Motion controls and a parallax effect on the display. Cycle 1 focused on creating the environment in which the “game” will take place and building the system that drives the head-tracking parallax effect.

My initial approach was basic computer vision in the form of blob tracking. I originally used Syphon to send the Kinect depth image from Isadora into TouchDesigner. That worked well enough, but the tracking it gave me lacked a z-axis. Wanting to use all of the data the Kinect had, I decided to try to track the head in 3D space. This led me down quite the rabbit hole as I tried to find a Kinect head-tracking solution. I looked into NIMate, several different libraries for Processing, and all sorts of sketchy things I found on GitHub. Unfortunately, none of those panned out, so I fell back on my backup plan, which was Isadora.

Isadora looks at your skeleton and picks out the bone associated with your head. It then runs that position through a Limit-Scale Value actor to turn it into values better suited to what I’m doing. Those values then get fed into an OSC Transmit actor.
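To make the scaling step concrete, here is a minimal Python sketch of what a clamp-and-rescale stage like that does. It is not Isadora's implementation. The function name `limit_scale` and the example ranges (a head position in -1 to 1 mapped to a rotation of -30 to 30 degrees) are assumptions for illustration only.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to [in_min, in_max], then rescale it linearly to [out_min, out_max].

    Hypothetical helper: it mirrors the idea of a limit-and-scale step,
    not the exact behavior of any particular actor.
    """
    if in_min == in_max:
        raise ValueError("input range must not be empty")
    # Limit: keep the incoming value inside the expected input range.
    clamped = max(in_min, min(in_max, value))
    # Scale: express the clamped value as a 0..1 fraction of the input range,
    # then stretch that fraction across the output range.
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


if __name__ == "__main__":
    # Assumed ranges: head x from -1..1 becomes a camera rotation of -30..30.
    for head_x in (-5.0, -1.0, 0.0, 0.5, 2.0):
        print(head_x, "->", limit_scale(head_x, -1.0, 1.0, -30.0, 30.0))
```

Clamping before scaling means a tracking glitch that reports a wildly out-of-range head position cannot swing the camera past its intended limits.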

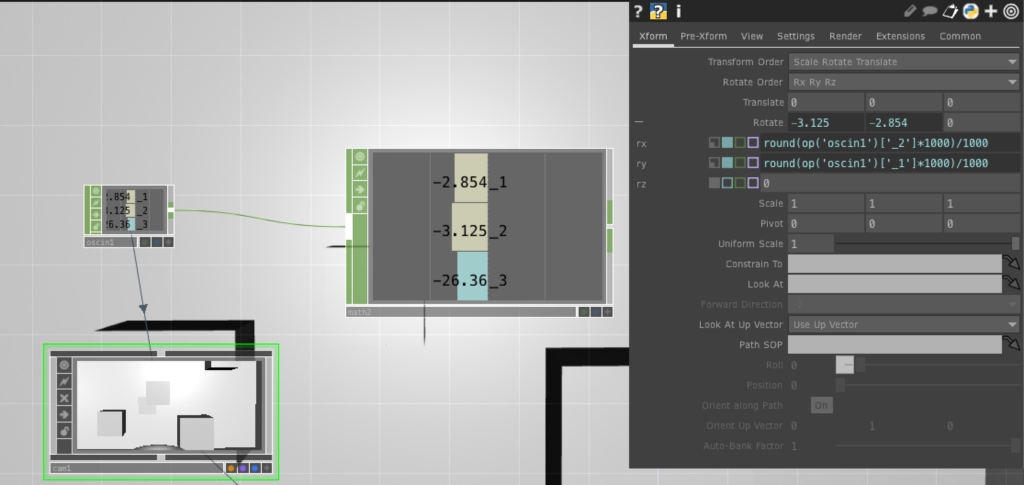

TouchDesigner has an OSCin node that listens for the stream coming out of Isadora. With a little bit of Python, a camera in TouchDesigner has its xRotation, yRotation, and focal length controlled by the values coming from the OSCin. The image below shows some rounding I added to the code to make the values a little smoother and more consistent.

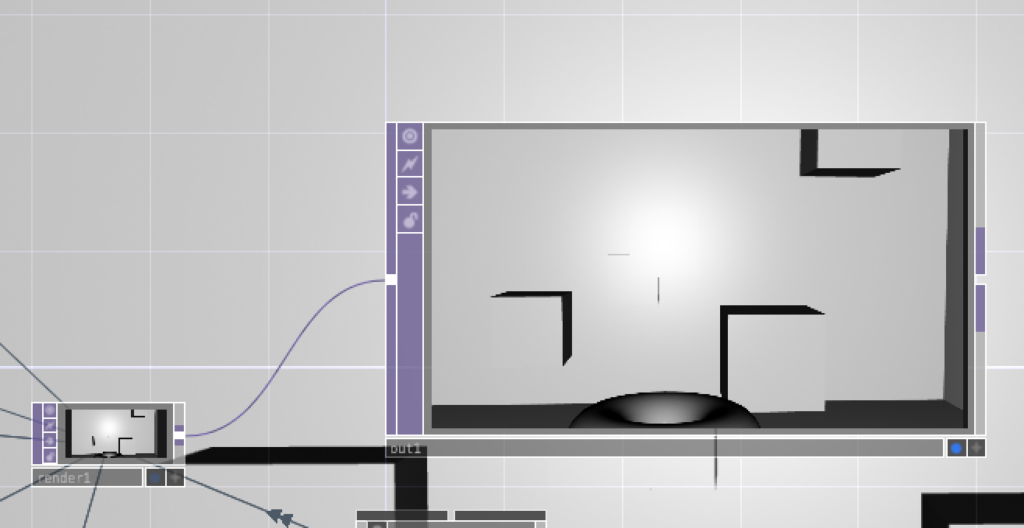

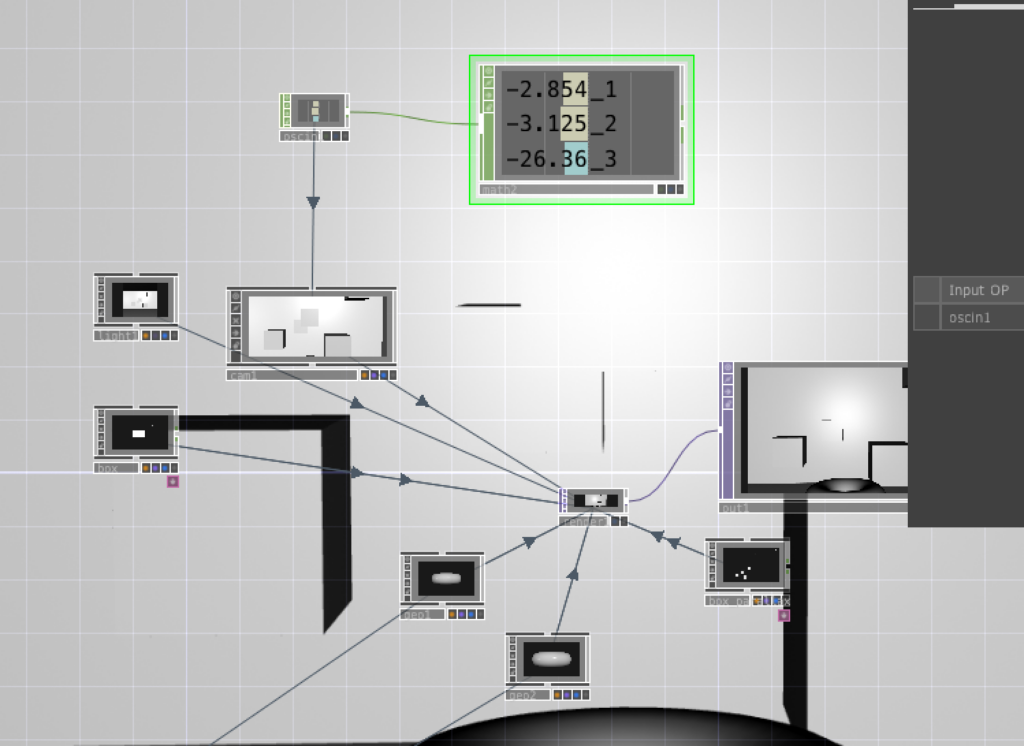
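As a rough illustration of the rounding idea, the plain-Python sketch below snaps incoming head values to a fixed step before they reach the camera, so small tracking jitter does not move the view. This is not the code from the screenshots. The names `quantize` and `camera_from_head`, the 0.5 step, and the axis mapping (vertical head movement to x rotation, horizontal to y rotation, depth to focal length) are all assumptions. In TouchDesigner the inputs would come from the OSCin channels rather than function arguments.

```python
def quantize(value, step):
    """Snap value to the nearest multiple of step to suppress small jitter."""
    if step <= 0:
        raise ValueError("step must be positive")
    return round(value / step) * step


def camera_from_head(head_x, head_y, head_z, step=0.5):
    """Map already-scaled head values to camera settings (assumed mapping).

    head_x: horizontal head position, used here for rotation about the y-axis
    head_y: vertical head position, used here for rotation about the x-axis
    head_z: head distance, used here for focal length
    """
    return {
        "rotate_x": quantize(head_y, step),
        "rotate_y": quantize(head_x, step),
        "focal_length": quantize(head_z, step),
    }


if __name__ == "__main__":
    # Two slightly different readings land on the same camera settings.
    print(camera_from_head(12.26, -3.1, 50.2))
    print(camera_from_head(12.31, -3.05, 50.1))
```

A coarser step gives a steadier picture but makes the parallax move in visible jumps, so the step is something to tune by eye.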

TouchDesigner works by allowing you to set up a 3D environment and feed its various elements into a Render node. Each 3D file, the camera, and the lighting are connected to the renderer, which then sends the rendered image to an out that can be viewed in a separate window.