The Present

Posted: November 9, 2015 Filed under: Anna Brown Massey, Final Project

The Past

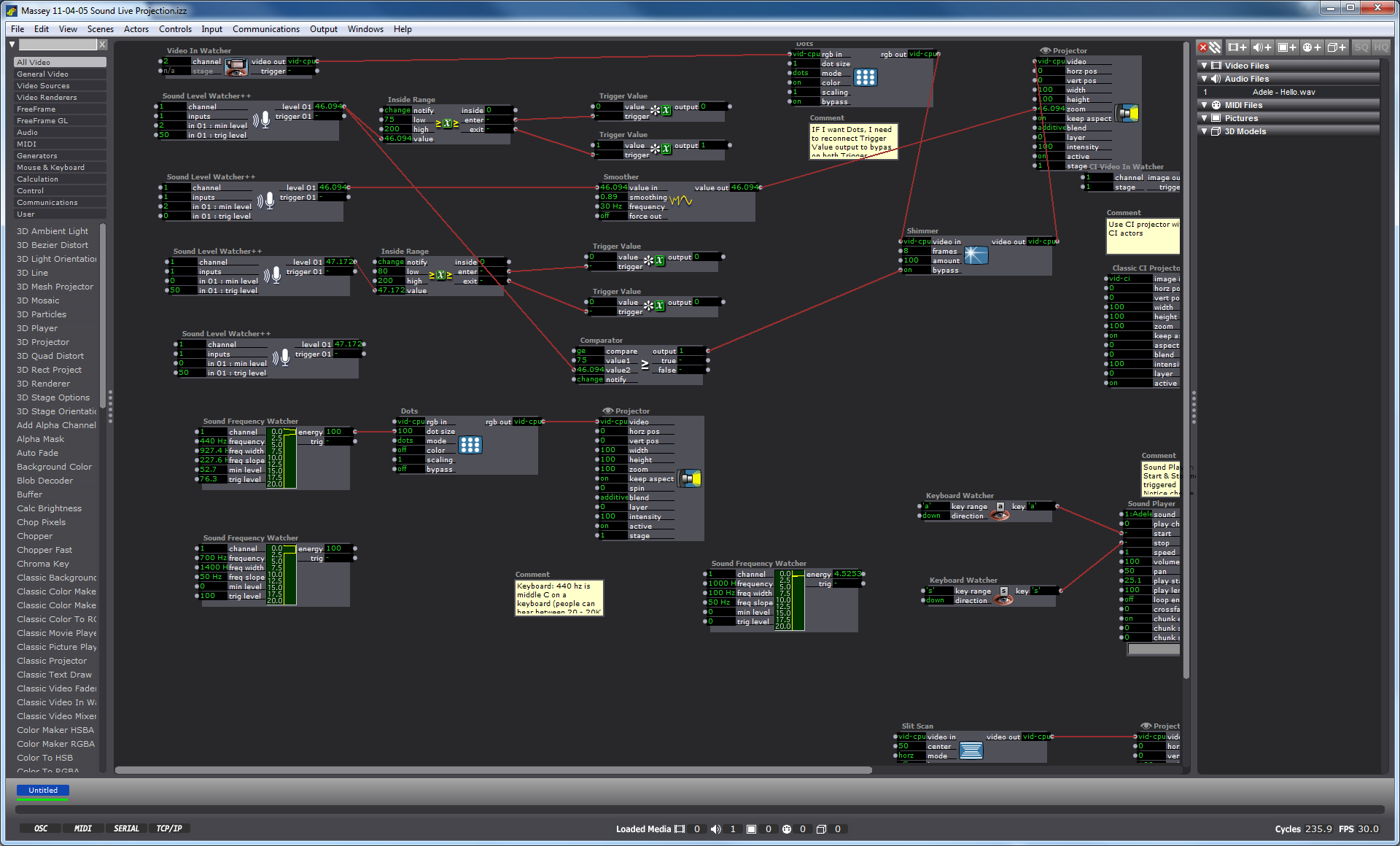

I am currently designing an audio-video environment in which Isadora reads incoming audio, harnesses its dynamics (amplitude level) data, and converts those numbers into methods of shaping the live video output. In its next iteration, that output may be mediated through a Kinect sensor and a separate Isadora patch.

My first task was to create a video effect out of the audio. I connected the Sound Level Watcher to the Zoom data on the Projector actor, and later added a Smoother in between in order to smooth the staccato shifts in zoom on the video. (Initial ideation is here.)
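To make the signal flow concrete outside of Isadora, here is a minimal Python sketch of the same idea: jumpy level readings are smoothed and then mapped onto a zoom value. This is not how the Smoother actor works internally; I am assuming a simple exponential moving average as a stand-in, and the 0-100 level range, the zoom range, and the function names are all hypothetical.

```python
def smooth_levels(levels, smoothing=0.8):
    """Exponentially smooth a sequence of level readings.

    smoothing close to 1.0 means slower, gentler changes.
    (Assumed stand-in for a smoothing stage, not Isadora's algorithm.)
    """
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0, 1)")
    smoothed = []
    current = None
    for level in levels:
        if current is None:
            # The first reading passes through unchanged.
            current = float(level)
        else:
            current = smoothing * current + (1.0 - smoothing) * level
        smoothed.append(current)
    return smoothed


def level_to_zoom(level, zoom_min=100.0, zoom_max=200.0):
    """Linearly map a 0-100 level onto a zoom range (hypothetical values)."""
    clamped = max(0.0, min(100.0, level))
    return zoom_min + (zoom_max - zoom_min) * clamped / 100.0


# Staccato input: loud and quiet readings alternating.
raw_levels = [0, 80, 5, 90, 10, 85]
zooms = [level_to_zoom(v) for v in smooth_levels(raw_levels)]
```

Raising `smoothing` toward 1.0 trades responsiveness for calmer zoom movement, which is the same trade-off I was tuning by ear in the patch.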

Once I had established my interest in the experience of zoom tied to amplitude, I enlisted the Inside Range actor to create a setting in which, past a certain amplitude, the Sound Level Watcher would trigger the Dots actor. In other words, whenever the volume of sound coming into the mic reaches or exceeds a certain level, the actors trigger an effect on the live video projection in which the screen disperses into dots. I selected the Dots actor not because I was confident that it would create a magically terrific effect, but because it was a familiar actor with which I could practice manipulating the volume data. I added the Shimmer actor to this effect, still playing with the data range so that these actors are triggered only above a certain volume.
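The threshold logic can be sketched in a few lines of Python. This is only an illustration of the gating idea, not a description of the Inside Range actor's actual behavior; the threshold of 60, the ceiling of 100, and the function names are hypothetical.

```python
def inside_range(value, low, high):
    """True when value falls within [low, high], inclusive."""
    return low <= value <= high


def effect_states(levels, threshold=60.0, ceiling=100.0):
    """For each level reading, report whether the effect would be on.

    threshold and ceiling are hypothetical tuning values.
    """
    return [inside_range(v, threshold, ceiling) for v in levels]


def enter_events(states):
    """Indices at which the effect switches from off to on."""
    events = []
    previous = False
    for index, state in enumerate(states):
        if state and not previous:
            events.append(index)
        previous = state
    return events


levels = [10, 60, 75, 40, 90]
states = effect_states(levels)   # which readings would show the dots
triggers = enter_events(states)  # the moments the effect kicks in
```

Separating "is the effect on" from "when did it turn on" is useful if a second effect, such as the shimmer, should fire once per crossing rather than continuously.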

The Future

User-design Vision:

Through this process my vision has been to make a system adaptable to anywhere between 2 and 30 participants, who can all be engaged by the experience simultaneously and who may take on different roles by their own self-selection. As with my concert choreography, I am strategizing methods of introducing the experience of “discovery.” I’d like this one to feel delightful, to me at least. With a mic available in the room, I am currently playing with the idea of a scrolling karaoke projection with lyrics to a well-known song. My vision includes a plan for how to “invite” an audience to sing and have them discover that the corresponding projection is causally tied to the audio.

Sound Frequency:

The next step, as seen at the bottom of the screenshot (with some actors on the righthand side that I have laid aside for possible future use), is to start using the Sound Frequency actor to take in data about pitch (frequency) as a means of affecting video output. To do so I will need to provide audio files in a variety of ranges as a source, so that I can observe how the data shifts across different human voice registers. Then I will take the frequency data range and match it up, through an Inside Range actor, to a video output.
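As a thinking aid for that matching step, here is a small Python sketch that sorts a frequency reading into a register band and also scales it to a 0-1 control value. It assumes a single frequency estimate in Hz is available, which may not be what the Sound Frequency actor actually outputs. The band boundaries are placeholders to be replaced with what I observe from the test audio, and all names are hypothetical.

```python
import math

# Placeholder bands in Hz; to be replaced with observed data.
BANDS = [
    ("low", 80.0, 180.0),
    ("mid", 180.0, 350.0),
    ("high", 350.0, 1000.0),
]


def classify(freq_hz):
    """Return the name of the band containing freq_hz, or None."""
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return None


def normalized_pitch(freq_hz, low=80.0, high=1000.0):
    """Scale a frequency to 0-1 on a logarithmic axis.

    A log scale is used because equal musical intervals correspond
    to equal frequency ratios, not equal differences in Hz.
    """
    clamped = max(low, min(high, freq_hz))
    return math.log(clamped / low) / math.log(high / low)


band = classify(220.0)             # 220 Hz falls in the "mid" placeholder band
control = normalized_pitch(220.0)  # a 0-1 value that could drive a video parameter
```

The band name could select which effect responds, while the 0-1 value could set how strongly it responds.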

Kinect Collaboration:

I am also considering collaborating with my colleague Josh Poston, whose project currently uses the Kinect with projection. His setup would replace the “live video projection” that I am currently using, and would instead drive motion-sensed movement on a rear-projected screen. As I consider joining our projects and expanding their dimensions (so to speak; oh, puns!), I need to start narrowing down the user-design components. In other words, where do the mic(s) live, where does the screen go, how many people is this meant for, where will they be, will participants have different roles, what is the