Virtual Reality Storytelling & Mixed Reality

Posted: December 18, 2016 Filed under: Final Project

For my final project, I jumped into working with VR to begin creating an interactive narrative. The title of this experience is “Somniat,” and it aims to help its users revisit feelings of childhood innocence and imagination.

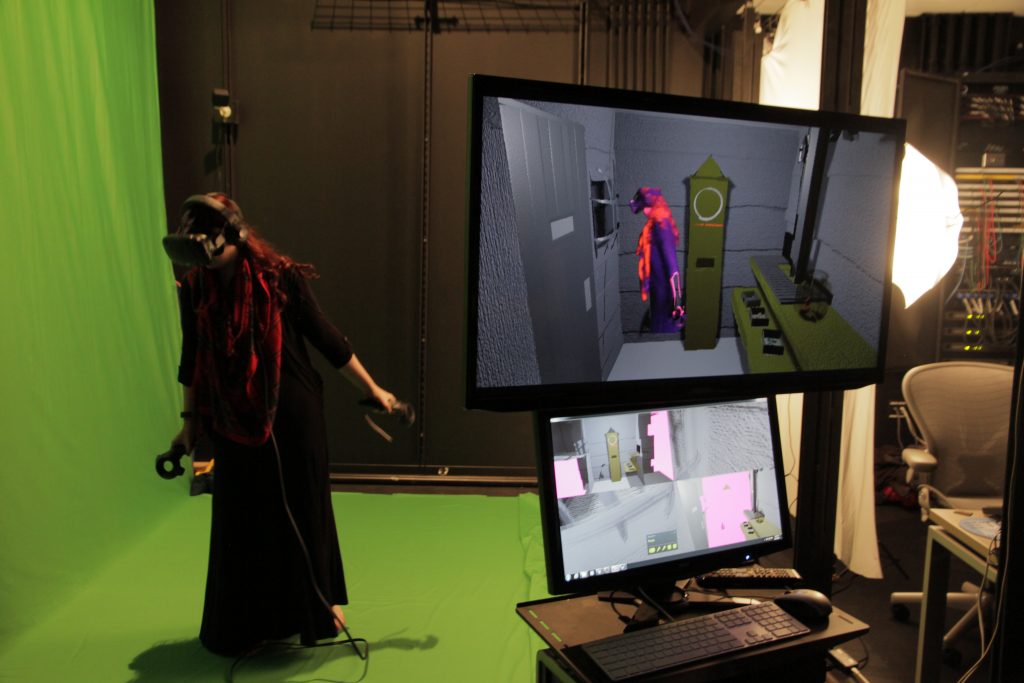

This project was built up across three separate ACCAD courses – “Concept Development for Time-Based Media,” “Game Art and Design I,” and “Devising Experiential Media Systems.” In Concept Development, I created a storyboard, which eventually became an animatic of the story I hoped to create. Then in Game Design, I hashed out all the interactive objectives, challenges, and user cues, building them into a functional virtual reality experience. And finally, in DEMS, I brought all previous ideas together and built the entire experience, both inside and out of VR, into a complete presentation! A large focus of this presentation was the mixed reality greenscreen. Mixed reality is where you record the user in the virtual world as well as the real world, and then blend both realities together! An example of this mixed reality from my project can be seen below:

I had a great time working on this project. I’d have to say my biggest takeaway from our class has been to start thinking of everything that goes into a user’s experience: from first hearing about the content, to using it for themselves, to telling others about it afterwards. My perspective on creating experiential content has been widened, and I look forward to bringing this view to my work in the future!

Peter’s Painting Pressure Project (3)

Posted: December 11, 2016 Filed under: Pressure Project 3

For our final pressure project, we were told to use physical “dice” in some way and create an interactive system. I took that prompt and interpreted it to make use of coins!

So the first obstacle was figuring out how to get coins to send digital signals to my media system. I really wanted to encourage users to flick coins from afar, so I got a box for coins to be tossed into. Then, I lined the inside of the box with tinfoil strips, alternating between strips connected to ground and strips connected to digital inputs on an Arduino microcontroller. Every time a coin landed in the box and bridged neighbouring strips, the Arduino would send a keyboard button press to the host computer. Which key was sent depended on where the coin landed, so the output seemed random!
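To make the strip wiring concrete, here is a minimal Python sketch of the logic (not the Arduino code itself). The strip numbering, the number of inputs, and the key assignments in `INPUT_KEYS` are all assumptions made for illustration.

```python
# Hypothetical layout: strips are numbered left to right. Even-numbered strips
# are wired to ground, odd-numbered strips to digital inputs.
INPUT_KEYS = {1: "a", 3: "s", 5: "d", 7: "f"}  # assumed key per input strip


def keys_for_coin(first_strip, last_strip):
    """Return the keys triggered by a coin covering strips first..last (inclusive)."""
    covered = range(first_strip, last_strip + 1)
    # A circuit only closes if the coin touches at least one grounded strip.
    if not any(index % 2 == 0 for index in covered):
        return []
    # Every input strip under the coin is now pulled to ground.
    return [INPUT_KEYS[index] for index in covered if index in INPUT_KEYS]
```

A coin resting on a single strip closes no circuit, while a coin spanning a ground strip and an input strip triggers that input's key.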

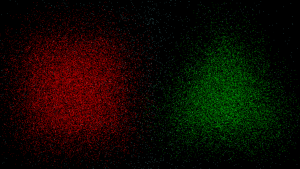

Next I needed to decide how the system should respond to the coin-tossing input. I really enjoy the idea of splattering paint on a canvas, à la Jackson Pollock, and wanted to capture something similar from the chaos and unpredictability of tossing coins. So depending on where the coin lands, a colour associated with that location will begin radiating from a random position on-screen. This creates a really interesting splattering effect when the coin initially lands, as it rattles the other coins in the box, outputting a wide range of signals in rapid succession.
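The colour response could be sketched roughly like this in Python. The key-to-colour table, the screen size, and the growth speed are hypothetical values chosen for the example, not the ones used in the actual patch.

```python
import random

# Assumed mapping from the key a coin triggers to an RGB colour.
KEY_COLOURS = {
    "a": (255, 0, 0),
    "s": (0, 255, 0),
    "d": (0, 0, 255),
    "f": (255, 255, 0),
}


def spawn_splat(key, width, height, rng=random):
    """Start a splat of the key's colour at a random on-screen position."""
    return {
        "colour": KEY_COLOURS[key],
        "x": rng.randrange(width),
        "y": rng.randrange(height),
        "radius": 0.0,
    }


def grow(splat, dt, speed=120.0):
    """Radiate the splat outward by `speed` pixels per second (assumed rate)."""
    splat["radius"] += speed * dt
    return splat
```

Each key press calls `spawn_splat` once, and every frame calls `grow` on all live splats, so a burst of key presses from rattling coins produces many overlapping rings.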

A neat observation made by the group was that it really encourages passersby to engage with the system as it produces dynamic and vibrant colours. Since the main way to interface is tossing in coins, it would turn a pretty nice profit, too! Haha 🙂

The Synesthetic Speakeasy – Cycle 1 Proposal

Posted: October 9, 2016 Filed under: Uncategorized

For our first cycle I’d like to explore narrative composition and storytelling techniques in VR. I’m interested in creating a vintage speakeasy / jazz lounge environment in which the user passively experiences the mindsets of the patrons by interacting with objects that have significance to the person they’re associated with! This first cycle will likely be experimentation with interaction mechanics and beginning to form a narrative.

Peter’s Produce Pressure Project (2)

Posted: October 9, 2016 Filed under: Uncategorized

For our second pressure project, I used an Arduino (similar to a Makey-Makey) to let users interact with a banana and an orange as vehicles for generating audio. As the user touches a piece of fruit, a specific tone begins to pulse. Upon moving that fruit, its frequency increases with velocity. Then, if both pieces of fruit move quickly enough, geometric shapes on-screen explode! Once you let go of them, the shapes will reform into their geometric representations.
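As a rough illustration of those rules, here is a small Python sketch. The base frequency, the gain per unit of speed, and the explosion threshold are made-up constants, and treating "let go" as "neither fruit is being touched" is also an assumption.

```python
BASE_HZ = 220.0       # assumed resting tone for a touched fruit
HZ_PER_UNIT = 40.0    # assumed frequency gain per unit of speed
EXPLODE_SPEED = 5.0   # assumed speed both fruits must reach


def tone_frequency(touched, speed):
    """Return the tone for one fruit, or None if nobody is touching it."""
    if not touched:
        return None
    return BASE_HZ + HZ_PER_UNIT * speed


def update_exploded(exploded, banana, orange):
    """Advance the shape state; banana and orange are (touched, speed) pairs."""
    banana_touched, banana_speed = banana
    orange_touched, orange_speed = orange
    if not (banana_touched or orange_touched):
        return False  # both fruits released: the shapes reform
    fast_enough = banana_speed >= EXPLODE_SPEED and orange_speed >= EXPLODE_SPEED
    if banana_touched and orange_touched and fast_enough:
        return True   # both fruits moving quickly: the shapes explode
    return exploded   # otherwise keep the current state
```

The state is sticky on purpose: once the shapes explode they stay exploded while a fruit is still held, and only reform on release.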

Our class’s reaction to the explosion was awesome; I wasn’t expecting it to be that great of a payoff 🙂

Meta EVERYTHING – Performance and Media Taxonomy

Posted: September 27, 2016 Filed under: Uncategorized

I wanted to share a video with you all! A virtual reality researcher recorded himself with Kinects interacting with a computer program, entered that recording in the present, and interacted with what his past self was creating. The interaction is a here / there / virtual, now / then, and actor / prop / mirror / costume all bundled into one! It’s fascinating and I hope you all enjoy 🙂

Cheers,

Peter

Peter’s Popping Pressure Project (1)

Posted: September 22, 2016 Filed under: Pressure Project I

For my first pressure project, I wanted to explore a sort of gamified concept. This project has two main components to it – popping digital bubbles by moving within their volume (this component is shown in the attached video – sorry it’s sideways!), and experiencing yourself via a third-person perspective (not in the video, but experienced in class).

My inspiration for the latter aspect of the experience came from staying up until 5am one night whilst demonstrating VR to friends from my dorm. We ended up streaming local video calls into the head-mounted display, and were able to watch ourselves walk through the environment from a fixed perspective. I found it profound how quickly my mind associated with my third-person self and how different the experience is when navigating a space in such a way. I wanted to use that idea and make it accessible to everyone, so my solution was to place the computer’s camera at a different location from the screen, and encourage the users to rethink their fine motor skills from this new perspective.

And that leads into the former means of interaction – popping bubbles. The bubbles function both as an encouragement to move about the space and as a curiosity, prompting us as participants to learn how we can affect them. The mechanic is that bubbles spawn at random locations on the screen (faster if there is no motion in the environment), and a bubble pops once a user collides with it (as seen from the camera’s perspective). To add curiosity and engage more senses, I made the bubbles play a MIDI sound each time they are popped, whose pitch is determined by the total motion within the camera’s field of view.
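The two motion-driven rules could look something like this in Python. This is only a sketch: the note range, the spawn intervals, the motion threshold, and the choice that more motion gives a higher pitch are all assumptions, and `total_motion` is taken to be a value normalised to the range 0 to 1.

```python
def pitch_for_motion(total_motion, low_note=48, high_note=84):
    """Map total motion (0.0 to 1.0) onto a MIDI note number."""
    clamped = max(0.0, min(1.0, total_motion))
    return low_note + round(clamped * (high_note - low_note))


def spawn_interval(total_motion, idle=0.5, active=2.0, threshold=0.02):
    """Seconds between new bubbles: shorter when the room is still."""
    return idle if total_motion < threshold else active
```

With these defaults a still room gets a new bubble every half second, and a pop during moderate motion (0.5) plays MIDI note 66.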

This system was achieved by clipping the incoming video signal into small portions arranged as a 10 x 10 grid. The patch then detects motion within each grid cell, and pops a bubble if there is enough motion in a cell and a bubble is present at that coordinate.
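Outside of Isadora, the same idea can be sketched in plain Python. This version assumes motion is measured by differencing two consecutive grayscale frames (the patch may detect motion differently), and the threshold value and the `(row, col)` bubble representation are hypothetical.

```python
GRID = 10  # the frame is divided into a 10 x 10 grid of cells


def cell_motion(prev, curr):
    """Return a GRID x GRID table of mean absolute pixel change per cell.

    prev and curr are equally sized 2D lists of grayscale values. Pixels left
    over when the frame size is not a multiple of GRID are ignored.
    """
    cell_h = len(curr) // GRID
    cell_w = len(curr[0]) // GRID
    motion = [[0.0] * GRID for _ in range(GRID)]
    for row in range(GRID):
        for col in range(GRID):
            total = 0
            for y in range(row * cell_h, (row + 1) * cell_h):
                for x in range(col * cell_w, (col + 1) * cell_w):
                    total += abs(curr[y][x] - prev[y][x])
            motion[row][col] = total / (cell_h * cell_w)
    return motion


def pop_bubbles(bubbles, motion, threshold=20.0):
    """Pop every bubble whose cell moved more than threshold.

    bubbles is a set of (row, col) cells; returns (remaining, popped).
    """
    popped = {cell for cell in bubbles if motion[cell[0]][cell[1]] > threshold}
    return bubbles - popped, popped
```

Summing the returned table also gives a single "total motion" figure, which is the kind of value the pitch of the popping sound could be driven by.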

It was very enjoyable to observe everyone interact with this system; similar to how we stepped back and observed the workings of our “Conway’s Game of Life” experience in the Motion Lab, taking the time to simply observe the users instead of their associated video makes the experience truly feel performative. The rules set forth by this patch caused users to move delicately as well as chaotically, in attempts to discover what the system’s rules are. I found it interesting that many users did not associate the popping of bubbles with their movements, or the playing of audio with the bubbles popping, until 2 – 4 minutes into the experience (however, seeing as I was the one who designed the system, I was quite blind to how it would look to a first-timer). Overall this project was very fun, and I enjoyed seeing how everyone interacted with these different systems!

pp1 <– Isadora patch