Horse Bird Muffin Cycles

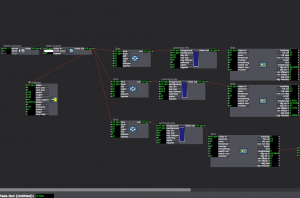

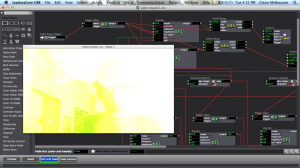

Posted: December 17, 2016 Filed under: Uncategorized

For my final project, I used Isadora, Vuo, a Kinect sensor, buttons, and two projectors to create a sort of game or test to determine one’s inherent nature as horse, bird, or muffin, or some combination of them.

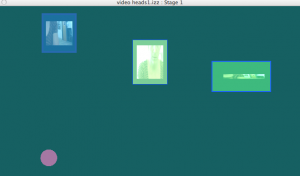

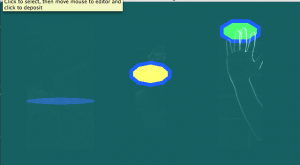

Through a series of instructed interactions, a person first chooses an environment from three images projected onto a screen. As the person walks toward a particular image, their own moving silhouette is layered onto it. Text appears instructing them to move closer to the place they’ve chosen, and the closer they get, the louder the ambient sound associated with that image becomes.
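The distance-to-loudness relationship can be sketched as a clamped linear map. This is a minimal sketch, not the Isadora patch: it assumes the Kinect reports the participant’s distance in millimeters, and the calibration values `near_mm` and `far_mm` are hypothetical.

```python
def proximity_to_volume(depth_mm, near_mm=800.0, far_mm=3500.0):
    """Map a participant's distance from the sensor to a volume from 0.0 to 1.0.

    near_mm and far_mm are hypothetical calibration values: at or beyond
    far_mm the sound is silent; at or inside near_mm it plays at full volume.
    """
    if far_mm <= near_mm:
        raise ValueError("far_mm must be greater than near_mm")
    # Linear interpolation: a smaller depth (closer) gives a larger volume.
    t = (far_mm - depth_mm) / (far_mm - near_mm)
    # Clamp so readings outside the calibrated range stay between 0 and 1.
    return max(0.0, min(1.0, t))
```

With these defaults, a participant halfway between the two calibration distances (2150 mm) hears the sound at half volume.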

Once they are within a certain distance, if all goes well, the first part of the test is complete. So they start with a subtly self-selected identity.

In the next scene, the participant interacts with an image of their choice: the image mirrors their body moving through space and changes size based on the volume of sound the participant and their surroundings create. Loud stomping and clapping make the image fill the screen and also earn the participant at least one horse ranking, while lots of traveling through space accumulates to earn the participant a bird ranking. Little or in-place movement after a designated amount of time puts them, as many guessed, in the ranks of the muffins.
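The scaling and ranking rules in this scene can be sketched as a loop that returns whichever rank fires first. This is a hypothetical illustration, not the actual patch: it assumes a normalized microphone level and a normalized body position per frame, and the `Frame` type and every threshold (`loud`, `travel_needed`, `time_limit`) are made-up placeholders.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    t: float       # seconds since the scene started
    volume: float  # normalized sound level, 0..1 (assumed)
    x: float       # participant's normalized horizontal position (assumed)
    y: float       # participant's normalized vertical position (assumed)


def image_scale(volume, min_scale=0.25, max_scale=1.0):
    # Louder sound gives a bigger image; max_scale means "fills the screen".
    v = max(0.0, min(1.0, volume))
    return min_scale + (max_scale - min_scale) * v


def rank_scene(frames, loud=0.8, travel_needed=3.0, time_limit=30.0):
    """Return the first rank event ('horse', 'bird', 'muffin'), or None."""
    traveled = 0.0
    prev = None
    for f in frames:
        if f.volume >= loud:
            return "horse"   # stomps and claps
        if prev is not None:
            traveled += math.hypot(f.x - prev.x, f.y - prev.y)
        if traveled >= travel_needed:
            return "bird"    # lots of travel through space
        if f.t >= time_limit:
            return "muffin"  # little movement until time is up
        prev = f
    return None
```

Returning on the first event mirrors the idea that any one of the three events ends the scene; a caller would then start the song for that rank.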

Any of the three events triggers a song associated with the participant’s rank and starts the next evaluation.

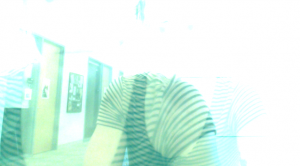

This last scene never quite reached my imagined heights. The intent was for the participant to see themselves on video, both in real time and on a delay, with their moving silhouettes still tracked and projected through a Difference actor in Isadora. This part worked, and participants enjoyed dancing with themselves and their echoes onscreen. The parts that needed work were a few Shapes actors designed to follow movement in different quadrants of the Kinect’s RGB camera field, and a patch that counted how many times the participant completely crossed a horizontal field (to trigger a final horse ranking), moved from high to low in a vertical field, or, again, watched themselves with little or no movement until a set time was up.

These functions worked only some of the time and were not robust…
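One way to make the horizontal crossing count less fragile is to require the participant to reach an edge zone on each side before counting, so that wandering around the middle never registers. This is a hypothetical sketch, not the original patch; it assumes a normalized horizontal position per frame, and the `left` and `right` zone edges are made-up values.

```python
def count_crossings(xs, left=0.1, right=0.9):
    """Count complete left-to-right or right-to-left crossings.

    xs: normalized horizontal positions (0 = left edge, 1 = right edge).
    A crossing counts only when the participant goes all the way from one
    edge zone to the other; positions between the zones are ignored.
    """
    crossings = 0
    last_side = None  # 'L' or 'R': the last edge zone visited
    for x in xs:
        side = "L" if x <= left else "R" if x >= right else None
        if side is None:
            continue  # in the middle; neither edge reached
        if last_side is not None and side != last_side:
            crossings += 1
        last_side = side
    return crossings
```

A horse ranking could then fire once the count reaches some chosen number; the gap between the two zones acts as hysteresis, so jitter near one edge cannot add crossings.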

Nonetheless, each participant was instructed to push a button at the end of their assessment and was able to discover whether they were a birdhorsemuffin, a birdhorsehorse, a triple horse, or some other combination.

Pressure Project 3: Only the Lonely

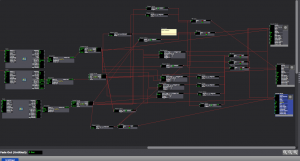

Posted: December 16, 2016 Filed under: Uncategorized

For the third pressure project, which involved dice, I wanted the dice to trigger a music player, with where the dice landed determining which instrumental parts of a song would play. I decided to use a Kinect’s depth sensor to detect the presence of dice in the foreground, mid-ground, and background of a marked range of space.

I made origami cubes to use as dice so that they were a bit larger and easier to detect, and also less likely to bounce away, which made them easier to keep within the range I’d set.

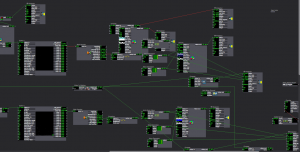

Using a Syphon Receiver in communication with Vuo, I connected the computer vision to 3 discrete ranges of depth and used a crop actor to cut out any information about objects, like people, that might come into the camera’s field of vision.
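Splitting one depth image into three ranges, after cropping away the area where people might appear, can be sketched as below. This is an illustration under stated assumptions, not the Isadora patch: it works on raw depth readings (assumed to be millimeters, with 0 meaning no reading), and the band names and limits are hypothetical.

```python
def band_coverage(depth, crop, bands):
    """Fraction of cropped pixels whose depth falls in each band.

    depth: 2D list of depth readings, row-major (assumed millimeters).
    crop:  (top, left, bottom, right), bottom/right exclusive; pixels
           outside this rectangle (e.g. where people stand) are ignored.
    bands: dict of name -> (near, far), each a half-open range [near, far).
    """
    top, left, bottom, right = crop
    counts = {name: 0 for name in bands}
    total = 0
    for row in depth[top:bottom]:
        for d in row[left:right]:
            total += 1
            for name, (near, far) in bands.items():
                if near <= d < far:
                    counts[name] += 1
    if total == 0:
        return {name: 0.0 for name in bands}
    return {name: c / total for name, c in counts.items()}
```

Each band’s fraction plays roughly the role of the brightness that a per-range Eyes actor would measure: a die landing in a band raises that band’s value.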

(This only partially worked and could use some fine-tuning.) In retrospect, I wonder whether there is a setting in Eyes++ that would require an object to be present for a certain amount of time before its brightness registers, which would keep out interfering signals other than the location of the dice.
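Independent of whether Eyes++ offers such a setting, the idea itself is a persistence (debounce) filter: a signal only counts once it has stayed above a threshold for a minimum duration. A minimal sketch with hypothetical names and values, not an Isadora feature:

```python
class PresenceFilter:
    """Report an object only after it has been present for min_duration seconds."""

    def __init__(self, threshold, min_duration):
        self.threshold = threshold        # brightness that counts as "present"
        self.min_duration = min_duration  # seconds the signal must persist
        self._since = None                # time the signal first went above threshold

    def update(self, t, brightness):
        if brightness < self.threshold:
            self._since = None  # signal dropped; restart the clock
            return False
        if self._since is None:
            self._since = t
        return t - self._since >= self.min_duration
```

A hand passing briefly through the camera’s view would reset the clock before `min_duration` elapses, while a die that has come to rest would register.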

Each Eyes actor was connected to a Smoother and an Inside Range actor, which checked whether the brightness detected within each one’s set luminance range reached a minimum, in order to trigger a series of signals. The primary signal either played that section’s sound (because the brightness indicated that an object was present) or stopped it (because brightness below the minimum indicated that no object was present).
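The smoother-plus-range chain can be approximated by an exponential moving average followed by a threshold that emits an event only when the state changes. This is a loose analogy, not how Isadora’s Smoother or Inside Range actors are implemented; `alpha` and `minimum` are hypothetical.

```python
class RangeTrigger:
    """Smooth a brightness signal and emit 'enter'/'exit' when it crosses a minimum."""

    def __init__(self, minimum, alpha=0.3):
        self.minimum = minimum  # smoothed brightness that means "object present"
        self.alpha = alpha      # smoothing factor: smaller means slower and steadier
        self.value = 0.0
        self.inside = False

    def update(self, brightness):
        # Exponential moving average as a stand-in for a smoother.
        self.value += self.alpha * (brightness - self.value)
        now_inside = self.value >= self.minimum
        event = None
        if now_inside and not self.inside:
            event = "enter"  # object present: play this section's sound
        elif not now_inside and self.inside:
            event = "exit"   # object gone: stop this section's sound
        self.inside = now_inside
        return event
```

Emitting events only on transitions means a sound is started once when a die arrives and stopped once when it leaves, rather than being retriggered on every frame.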

Because the three instrumental music files were designed to play together, three dice could land in the three respective ranges, with the goal that all three files would start together and play a song with drums, a bass line, and a keyboard melody (reminiscent of Roy Orbison’s Only the Lonely). To make this work without the sound files starting split seconds apart, I set up a system of Simultaneity actors, Trigger Delays, and Gates that I can’t actually explain in detail. Conceptually, I understand it as delaying action to first look for objects present simultaneously in multiple ranges, then sending a signal for two or all three parts based on what it finds, and, failing that, passing signals through for an individual part to play.

For example, if one die landed in the mid-ground and two in the foreground, the Inside Range enter triggers from both of those ranges would send triggers to individual Trigger Delays, which after 2 seconds would send a message to play a single file. BUT, if the Simultaneity actor for the mid-ground and foreground is triggered, it closes the gates on those two delays, blocking the individual file triggers, and sends a shared trigger to start both the bass and the piano files at the same time.
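The wait-then-decide behavior of the Simultaneity/Trigger Delay/Gate chain can be approximated by grouping enter events that arrive close together and starting each group’s parts at once. This is a different technique standing in for the patch, offered only as a sketch: the 0.5-second `window` is hypothetical, and the 2-second `delay` comes from the example above.

```python
def schedule_starts(enter_events, window=0.5, delay=2.0):
    """Group near-simultaneous 'enter' events so their parts start together.

    enter_events: list of (time_seconds, part_name).
    Events within `window` seconds of a group's first event join that group.
    Each group starts all of its parts at once, `delay` seconds after the
    group's first event. Returns a list of (start_time, sorted part names).
    """
    starts = []
    group_t = None
    group_parts = []
    for t, part in sorted(enter_events):
        if group_t is not None and t - group_t <= window:
            if part not in group_parts:  # two dice in one range = one part
                group_parts.append(part)
            continue
        if group_t is not None:
            starts.append((group_t + delay, sorted(group_parts)))
        group_t, group_parts = t, [part]
    if group_t is not None:
        starts.append((group_t + delay, sorted(group_parts)))
    return starts
```

For instance, `schedule_starts([(0.0, "bass"), (0.2, "piano"), (5.0, "drums")])` starts bass and piano together at 2.0 s and drums alone at 7.0 s. The delay is what gives the grouping a chance to see a second die before any file starts.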

For whatever reason, I can only upload the GarageBand file as a zip, but this is all three parts together: dice-player-band

The entire Isadora patch is here: http://osu.box.com/s/eyjn9zge850ywc81yhdoj4cpbic1rkka

Chance music….

cycle 1 plans

Posted: October 4, 2016 Filed under: Uncategorized

Computer vision that tracks the movement of one (maybe two) people in different quadrants (or some other word that doesn’t imply four) of the video sensor (Eyes, or maybe a Kinect to work with depth of movement as well), so that different combinations of movement trigger different sounds. For example, lots of movement in the upper half with stillness below triggers buzzing, the reverse triggers a stampede, and alternating up-and-down distal movement triggers the sound of wings flapping. More to come if I can figure that out.
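The first two mappings in this plan can be sketched with simple frame differencing: count changed pixels in the upper and lower halves of the image and pick a sound from the combination. This is a hypothetical sketch on grayscale frames stored as 2D lists; the thresholds and sound names are placeholders, and the wing-flapping rule would additionally need a history of frames to detect alternation.

```python
def frame_motion(prev, curr, threshold=30):
    """Count changed pixels in the upper and lower halves of a grayscale frame.

    A plain frame difference; threshold is a hypothetical noise floor.
    """
    h = len(curr)
    upper = lower = 0
    for r in range(h):
        for a, b in zip(prev[r], curr[r]):
            if abs(a - b) > threshold:
                if r < h // 2:
                    upper += 1
                else:
                    lower += 1
    return upper, lower


def choose_sound(upper, lower, busy=10, still=2):
    """Pick a sound from where the movement is (thresholds are placeholders)."""
    if upper >= busy and lower <= still:
        return "buzzing"   # lots of movement above, stillness below
    if lower >= busy and upper <= still:
        return "stampede"  # the reverse
    return None
```

Splitting into four quadrants instead of two halves would only change how each changed pixel is binned.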

Pressure Project 2

Posted: October 4, 2016 Filed under: Uncategorized

https://osu.box.com/s/7evtez7zjct0hg2ws4o9g2zlj04mb0qg

I started with a series of Makey Makey water buttons as triggers for sounds, wanting to come up with different triggering sequences that would jump to different scenes. So if you touched the blue bowl of water, then the green, then the yellow twice, and then the green again, the Calculator and Inside Range actors would move you to another scene. I made up the scenes as variations on the colors and shapes from the first scene, save for one, which was just three videos of the person interacting, fragmented and layered, with no sound. That last one was meant as an interruption of sorts.

BUT… in practice, people triggered the water buttons so quickly that the scenes didn’t have time to develop, and the motion detection and interpretation in some of the scenes (affecting shape location and sound volume) never became part of the experience. I’m now considering how to provide a frame that lets people explore those elements more immediately, how to slow people down in engaging with the water bowls, whether via text or otherwise, AND the possibility of a Trigger Delay to make time between each action.
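The sequence-to-scene idea, together with a minimum gap between accepted touches, can be sketched as a small detector. This uses a different technique from the Calculator/Inside Range chain (a sliding window over recent touches), and `min_interval` is a hypothetical stand-in for a trigger delay.

```python
from collections import deque


class SequenceDetector:
    """Report True when the water bowls are touched in a set color order.

    Touches closer together than min_interval seconds are ignored, a
    hypothetical way to slow people down between actions.
    """

    def __init__(self, sequence, min_interval=1.0):
        self.sequence = list(sequence)
        self.min_interval = min_interval
        self.recent = deque(maxlen=len(self.sequence))  # last N accepted touches
        self.last_t = None  # time of the last accepted touch

    def press(self, t, color):
        if self.last_t is not None and t - self.last_t < self.min_interval:
            return False  # too soon after the previous touch; ignore it
        self.last_t = t
        self.recent.append(color)
        if list(self.recent) == self.sequence:
            self.recent.clear()
            return True  # sequence complete: move to another scene
        return False
```

With the sequence `["blue", "green", "yellow", "yellow", "green"]`, rapid-fire touches are dropped, so only deliberately paced touching completes the pattern.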

can i do anything to make it change?

Posted: September 19, 2016 Filed under: Uncategorized

https://osu.box.com/s/vwqcomm5zxzndy0ibt0vxro5xylqm6ah

I learned a ton from seeing folks’ processes and getting to experience other people’s projects. In particular, I’m beginning to have an inkling of how someone might animate an isolated square of a video.

City scape… it is pretty magical what an audience can create.

People thought there were more interactive opportunities than there actually were, because they were primed for them.

I realized I wanted to give more clues about where to cause reactions, and to make those reactions bigger.