Pressure Project 2

Posted: October 7, 2018 Filed under: Uncategorized

For my pressure project, I chose to make a simple webpage that would give you a new fortune every time you clicked on a fortune cookie emoji.

I chose to do it like this because I wanted more experience with the particular web framework I used, and I wanted to make an interesting web experience in general.

Overall, I think I didn’t scope this project out enough. I wish the final project had ended up being more than it was, but just getting a webpage up and running in the first place was an impediment. I did have a lot of fun writing some of the fortunes, though. There are 81 unique fortunes in total: some I got from websites, others I changed a bit to better fit the tone of the project, and others I wrote from scratch. Given more time, I would have liked to include user inputs other than clicking, such as giving fortunes that fit a name or letting the user pick from several fortune cookies on the screen.

If you’d like to read the fortunes in the project as a list, check out my GitHub. The fortunes are generated in db/seeds.rb.
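The post doesn’t describe how a fortune is picked on each click, so here is a minimal sketch of one plausible approach: choose at random while avoiding an immediate repeat. It is written in Python rather than the web framework used for the project, and the three-item `FORTUNES` list and the `next_fortune` helper are hypothetical stand-ins for the 81 seeded fortunes.

```python
import random

# Hypothetical sample; the real project seeds 81 fortunes in db/seeds.rb.
FORTUNES = [
    "A pleasant surprise is waiting for you.",
    "Your next click will be your luckiest.",
    "Beware of cookies bearing advice.",
]


def next_fortune(previous=None, rng=random):
    """Pick a random fortune, skipping `previous` so a click never repeats it."""
    # Fall back to the full list if filtering would leave nothing to choose.
    choices = [f for f in FORTUNES if f != previous] or FORTUNES
    return rng.choice(choices)


shown = next_fortune()
print(shown in FORTUNES)                      # True
print(next_fortune(previous=shown) != shown)  # True
```

Passing a seeded `random.Random` as `rng` makes the choice reproducible for testing.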

Pressure Project #2

Posted: October 5, 2018 Filed under: Uncategorized

For Pressure Project #2 we were assigned to create an automated fortune-telling machine using any medium we felt comfortable with. Since I am still learning Isadora, I decided to use this platform to explore and develop. I had many goals for this project in order to challenge myself intellectually and artistically. My main goal was not just to create a functioning, responsive system, but a complex sensory experience that created a deliberate atmosphere. Naturally, I went with a 1950s-esque carnival theme. I portrayed this theme through old film clips of San Francisco and a creepy soundscape that played throughout the user experience. My colleagues commented that they enjoyed these features because they enhanced the mood of the experience and created a curious atmosphere.

I also wanted the computer fortune-teller to be tricky, clever, and mysterious, so I tried to create a character within the system for the user to engage with. I did this by providing ambiguous instructions that could be interpreted many ways. I was curious to see whether users would be able to solve the riddles presented to them in order to receive their fortune. I also threw in some patterns that I hoped the user would eventually catch on to. My last goal was to use a wide range of triggers to keep the system moving forward. I used mouse watcher triggers, keyboard triggers, and voice and motion triggers intermittently throughout the system. The sound-based triggers were the hardest to manage because every person’s clap or snap has a different volume level. With this in mind, I made a pretty large inside range to catch a sound trigger. However, this sometimes caused my computer to pick up unintentional sounds, and sometimes an intentional sound still didn’t fall within the inside range I allocated. This is still an area I need a lot of work in!
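The trade-off with a wide inside range can be shown with a small simulation. This is a hypothetical Python sketch, not Isadora code: the input levels (0 to 100) and the `inside_range_triggers` helper are made up, and the helper assumes a trigger fires once when the level moves from outside the range to inside it.

```python
def inside_range_triggers(levels, low, high):
    """Return sample indices where the level moves into [low, high].

    Assumes a trigger fires once on entering the range, not on every
    sample that stays inside it.
    """
    fired = []
    was_inside = False
    for i, level in enumerate(levels):
        inside = low <= level <= high
        if inside and not was_inside:
            fired.append(i)
        was_inside = inside
    return fired


# Hypothetical levels: quiet room, a chair squeak (30), quiet, a loud clap (80).
levels = [5, 6, 30, 8, 7, 80, 9]
print(inside_range_triggers(levels, 25, 100))  # [2, 5]: the squeak fires too
print(inside_range_triggers(levels, 60, 100))  # [5]: only the clap
```

Narrowing the range removes the false trigger from the squeak, but a softer clap around 50 would then be missed, which is the same tension described above.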

Every person who tried the system had a different experience. Some users immediately caught on to the patterns and loose instructions, while others took longer to recognize that they were in a loop before they were able to get themselves to the next phase of the system. It was also interesting to use the device in a group setting. When the people watching figured out the tricks of the system, they experienced the uneasy feeling of keeping a secret so as not to ruin it for the user or future users. This uneasiness added to the overall mood of the system.

I really enjoyed this pressure project and am looking forward to seeing how I can expand some of these goals into my future work in the class and beyond!

Pressure Project 2 (A Walk in the Woods)

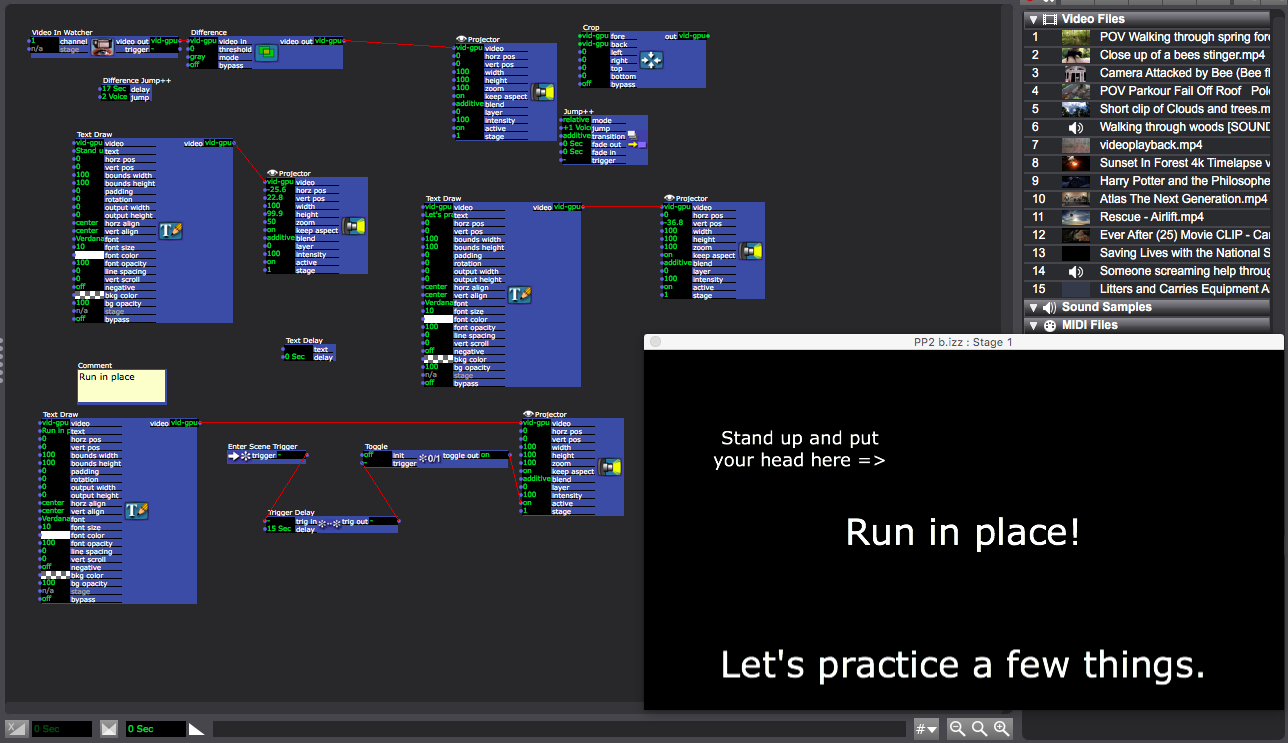

Posted: October 4, 2018 Filed under: Uncategorized

I wanted to get away from the keyboard for this assignment and make something that asked people to move and make noise. I considered making some sort of mock fight that got people to dodge or gesture to certain sides of their body, but I didn’t want to make anything violent. Serendipitously, I was at an outdoor gathering while I pondered what to make, and this party was highly attended by bees. So it began: a walk in the woods. I thought I would tell people that things (like bees) were coming at them from a certain angle and then ask them to either dodge or gesture them away. I planned to use the crop actor to crop portions of what my camera was seeing and read where the movement was to trigger the next scene.

Less serendipitously (in some ways), I was at this party because my partner’s sister’s wedding was the day before, and I was in the wedding party. This meant that I had two travel days, a rehearsal dinner, an entire day of getting ready for a wedding (who knew it could take so long?), and the day-after party. WHEN WAS I SUPPOSED TO DO MY WORK?!

So, to simplify, I decided not to worry about cropping and to trigger through movement, lack of movement, sound, or lack of sound. Then the fun began: making it work.

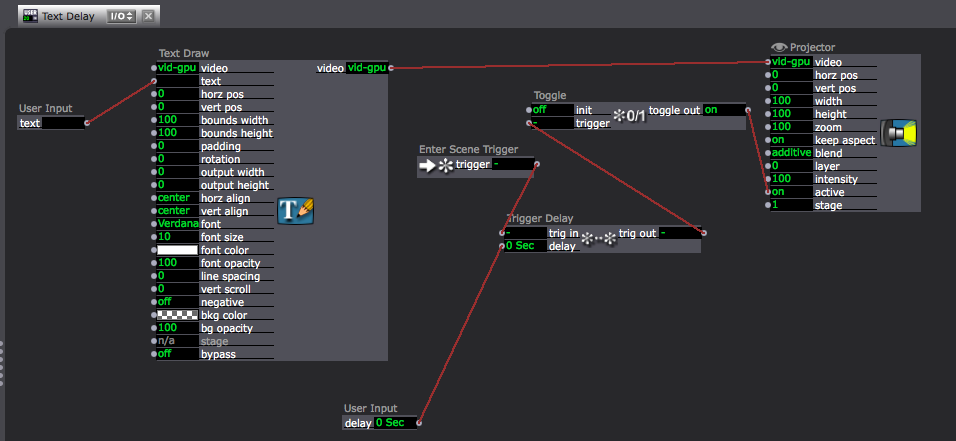

I decided to start with a training scene that would give the user hints, instructions, and practice for how to continue. This also gave me a chance to figure out the patches without worrying about the content of the story. Using Text Draw, I instructed the user to stand up and told them that they would practice a few things. Then more words came in telling the user to run. I created a user actor that would delay text, because I planned to use this in multiple scenes. The user actor has an Enter Scene Trigger connected to a Trigger Delay, which is connected to a Toggle actor that toggles the Projector’s activity between on and off. Since I set the Projector to initialize “off” and the Enter Scene Trigger only sends one trigger, the Projector’s activity toggles from “off” to “on.” Then, I connected user input actors to “text” in the Text Draw and “delay” in the Trigger Delay actor. This allows me to change the text and the amount of time before it appears every time I use this user actor.
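The logic of this user actor can be modeled in a few lines. The Python below is a hypothetical sketch, not Isadora’s own behavior: `TextDelay` and `is_visible` are invented names, and the model assumes every trigger that reaches the Toggle flips the Projector’s state once.

```python
from dataclasses import dataclass


@dataclass
class TextDelay:
    """Hypothetical model of the Text Delay user actor.

    Chain: Enter Scene Trigger -> Trigger Delay -> Toggle -> Projector activity.
    `text` and `delay` stand in for the two user inputs on the user actor.
    """
    text: str
    delay: float
    initially_on: bool = False  # the Projector is initialized "off"

    def is_visible(self, seconds_into_scene: float, triggers_sent: int = 1) -> bool:
        # Triggers only pass the Trigger Delay once `delay` seconds have gone by;
        # each one that arrives flips the Toggle, so an odd count changes state.
        arrived = triggers_sent if seconds_into_scene >= self.delay else 0
        return self.initially_on ^ (arrived % 2 == 1)


run = TextDelay(text="RUN!", delay=4.0)
print(run.is_visible(2.0))  # False: still waiting out the delay
print(run.is_visible(5.0))  # True: one trigger flipped "off" to "on"
```

Calling `run.is_visible(5.0, triggers_sent=2)` returns `False`, which is why relying on a single Enter Scene Trigger matters: a second trigger would flip the Projector back to “off.”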

I also made user actors that trigger with sounds and with movement. I’ll walk through each of these below.

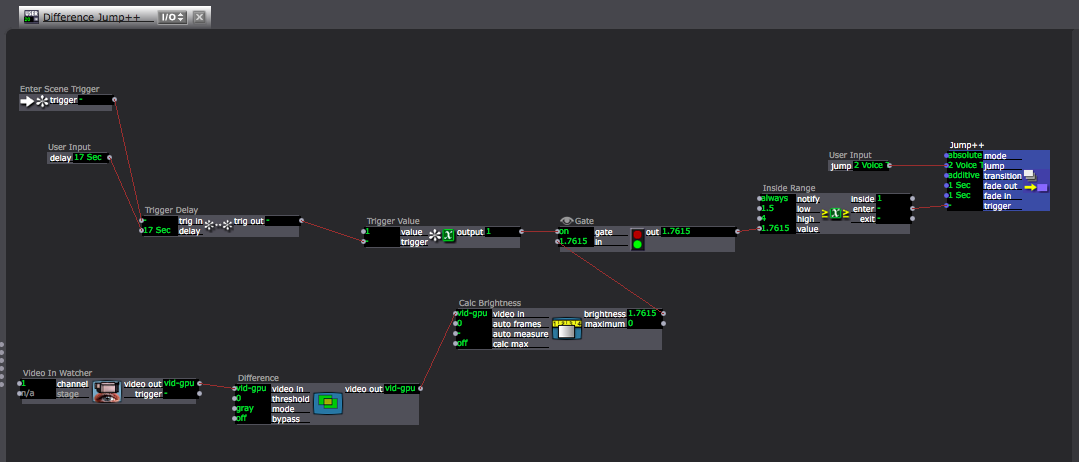

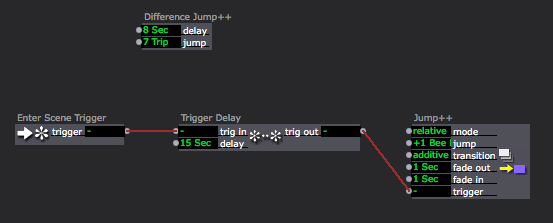

Any story that prompted users to run or stay still used a user actor that I called “Difference Jump++.” Here, I used the Difference actor and the Calc Brightness actor to measure how much movement takes place. (Note: the light in the space really mattered, and this may have been part of why this did not function as planned. However, because this was in a user actor, I could go into the space before showing it and change the values in the Inside Range actor, and it would change these values in EVERY SCENE! I was pretty proud of this, but it still wasn’t working quite right when I showed it.)
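Outside Isadora, this movement measurement can be approximated as frame differencing followed by an average-brightness measure and a range check. In this hypothetical Python sketch, frames are tiny lists of grayscale pixel values (0 to 255), brightness is scaled to 0 to 100, and the function names are invented; Isadora’s actual Difference and Calc Brightness actors may scale their outputs differently, and the range check is assumed to fire once when the level enters the range.

```python
def difference(prev, curr):
    """Per-pixel absolute difference between two grayscale frames."""
    return [abs(a - b) for a, b in zip(prev, curr)]


def calc_brightness(frame):
    """Average pixel brightness, scaled from 0-255 to 0-100."""
    return 100 * sum(frame) / (255 * len(frame))


def movement_triggers(frames, low, high):
    """Return frame indices where the movement level enters [low, high].

    Fires once on entering the range, not on every frame spent inside it.
    """
    fired = []
    was_inside = False
    for i in range(1, len(frames)):
        level = calc_brightness(difference(frames[i - 1], frames[i]))
        inside = low <= level <= high
        if inside and not was_inside:
            fired.append(i)
        was_inside = inside
    return fired


# Hypothetical 4-pixel frames: still, still, half the image changes, still.
frames = [[10, 10, 10, 10], [10, 10, 10, 10], [200, 200, 10, 10], [200, 200, 10, 10]]
print(movement_triggers(frames, 20, 100))  # [2]
print(movement_triggers(frames, 40, 100))  # []
```

Keeping `low` and `high` as parameters of one shared function plays the same role as editing the Inside Range values once inside the user actor: recalibrating for the room’s lighting updates every scene that uses it.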

I used the Gate actor because I wanted movement to trigger the scene change, but the scene starts by asking the user to stand up, so I didn’t want movement to trigger the Jump++ actor until they were set up. So I set this up similarly to the Text Delay user actor and used the Gate actor to block the connection between Calc Brightness and Inside Range until 17 seconds into the scene. (17 seconds was too long, and something on the screen showing a countdown would have helped the user know what was going on.)
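The Gate’s effect can be sketched as ignoring every movement reading before a fixed time. This hypothetical Python model uses the post’s 17-second value; the sample readings, `first_jump_time`, and the `seconds_until_open` countdown helper (suggested by the note about an on-screen countdown) are illustrative assumptions.

```python
import math

GATE_OPENS_AT = 17.0  # seconds into the scene, the value from the post


def first_jump_time(samples, low, high, gate_opens_at=GATE_OPENS_AT):
    """Return the time of the first in-range reading after the gate opens.

    `samples` is a list of (seconds_into_scene, movement_level) pairs.
    Readings before `gate_opens_at` are dropped, as if the Gate were closed.
    Returns None if nothing qualifies.
    """
    for t, level in samples:
        if t < gate_opens_at:
            continue  # gate closed: the reading never reaches the range check
        if low <= level <= high:
            return t
    return None


def seconds_until_open(t, gate_opens_at=GATE_OPENS_AT):
    """Whole seconds left to show on a countdown (0 once the gate is open)."""
    return max(0, math.ceil(gate_opens_at - t))


# Hypothetical readings: the user moves while standing up, then runs later.
samples = [(3.0, 60), (10.0, 55), (18.0, 5), (19.5, 40)]
print(first_jump_time(samples, 30, 100))  # 19.5
print(seconds_until_open(12.2))           # 5
```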

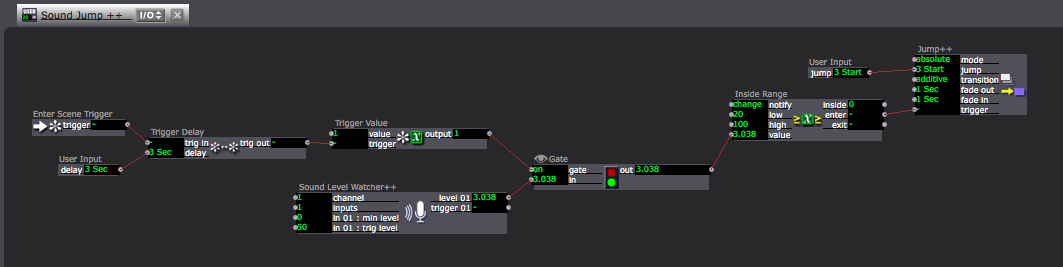

With this, the first scene was built (showing the dancer that their running action can trigger changes). The next scene taught the user that their voice can also trigger changes. For this, I built a user actor that I called “Sound Jump++,” which functions much like the Difference Jump++ user actor.

So, the trigger for most of the scenes is either movement, stillness, sound, or silence. I’ve explained how movement and sound trigger the next scene, but stillness and silence are the absence of movement and sound. So, in addition to including a Difference Jump++ user actor and/or a Sound Jump++ user actor, I had an Enter Scene Trigger connected to a Trigger Delay connected to a Jump++ actor. If the user had not triggered a scene change by the time the Trigger Delay fired, it was assumed that the user had chosen stillness and/or silence.

Then, which scene we jumped to depended on how the scene change was triggered.
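Together, the user-driven triggers and the fallback timer behave like a race where the first event wins. This hypothetical Python sketch models that race; the event times and the scene names in `NEXT_SCENE` are invented placeholders rather than the scenes used in the project, and ties are resolved in favor of the user’s action.

```python
from typing import Optional

# Hypothetical placeholder scenes; each entry stands in for where one
# branch's Jump++ would send the story.
NEXT_SCENE = {
    "movement": "run_from_bees",
    "sound": "shout_at_bear",
    "stillness/silence": "hide_and_wait",
}


def resolve_trigger(movement_at: Optional[float],
                    sound_at: Optional[float],
                    timeout: float) -> str:
    """Return which event ends the scene first.

    `movement_at` and `sound_at` are seconds into the scene (None if the event
    never happened); `timeout` is the Trigger Delay on the fallback Jump++.
    """
    events = []
    if movement_at is not None:
        events.append((movement_at, "movement"))
    if sound_at is not None:
        events.append((sound_at, "sound"))
    # The fallback goes last so that, on a tie, min() keeps the user's action.
    events.append((timeout, "stillness/silence"))
    return min(events, key=lambda event: event[0])[1]


print(NEXT_SCENE[resolve_trigger(4.0, 6.0, 10.0)])    # run_from_bees
print(NEXT_SCENE[resolve_trigger(None, None, 10.0)])  # hide_and_wait
```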

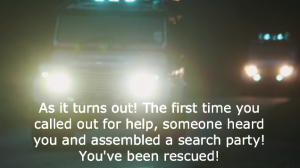

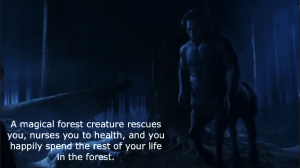

Next, I came up with a storyline involving bears, bees, robots, mythical creatures, airlift rescues, and search and rescue units, and I downloaded videos and sounds to represent these events. Unfortunately, either I had things jumping to the wrong scenes or the Inside Range values were set incorrectly, because this “choose your own adventure” story didn’t function as intended, and we kept getting the same storyline when we tried it in class. I learned a lot in the process of building this, though!

Robot Gender Assumptions

Posted: October 3, 2018 Filed under: Uncategorized

https://www.wired.com/story/robot-gender-stereotypes/?mbid=synd_digg

Stop Pretending

Posted: October 3, 2018 Filed under: Uncategorized

https://www.pcmag.com/news/364132/california-law-bans-bots-from-pretending-to-be-human

Infrared Tracking

Posted: October 1, 2018 Filed under: Uncategorized

A wonderful tutorial for how to do advanced Infrared Tracking in Isadora.

PP2

Posted: September 28, 2018 Filed under: Uncategorized

Task: For our second pressure project, we were tasked with creating a Fortune Teller Machine. I found this task interesting and just the right thing to help me focus on learning and exploring a few tools I might use in my thesis. In particular, this project allowed me to explore the abilities of FUNGUS, a Unity plugin used to help organize branching narratives.

Process: I started by looking a little deeper into the task. After my last project, I didn’t want this system to have an “on the nose” quality, so I decided to go with spirit animals. The animals have a fortune, so to speak, but in the context of a vessel that predicts your future, they also have a more personal connection. Your animal is the path.

I started with 10 animals but ultimately settled on 6 and created a tree diagram, starting with the animals at the bottom. I knew I had to have the user select objects, and given the time constraints, the best representations I had came from a model library from Google. From this library, I extracted 2D images/sprites and used them as the “items” that one would choose in order to proceed along their chosen path. The items consisted of hats, shoes, and even some places like the sky.
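A tree like this maps naturally onto nested dictionaries, with the items at each level as keys and the animals as leaves. The Python sketch below is hypothetical: the post names hats, shoes, and the sky as items but not the six animals, so every label is invented, and this is not how FUNGUS represents its flowcharts.

```python
# Hypothetical tree with 6 animals at the leaves; all labels are invented.
TREE = {
    "hat": {"feathered hat": "owl", "fur hat": "fox"},
    "shoes": {"hiking boots": "bear", "running shoes": "deer"},
    "sky": {"clouds": "hawk", "stars": "wolf"},
}


def follow_path(tree, choices):
    """Walk the tree one chosen item at a time and return the animal."""
    node = tree
    for choice in choices:
        node = node[choice]  # KeyError means the item isn't offered here
    if isinstance(node, dict):
        raise ValueError(f"path is incomplete; next options: {sorted(node)}")
    return node


def leaves(tree):
    """Yield every animal reachable from this node."""
    for child in tree.values():
        if isinstance(child, dict):
            yield from leaves(child)
        else:
            yield child


print(follow_path(TREE, ["sky", "stars"]))  # wolf
print(len(list(leaves(TREE))))              # 6
```

Building the tree from the animals upward, as described above, amounts to filling in the leaves first and then grouping them under the items that lead to them.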

Creating branches, laying down some music, and making a few background changes led me to a completed project.

Feedback: Most of the feedback was positive. The audience seemed to really like being able to identify with a certain animal, which is something I hope to implement in future work. It really helped give them a sense of uniqueness even though the choice was one of six.

One thing I didn’t expect, and might have handled differently, was that the very first user was interested in pressing the button as fast as they could. In the future, I suppose I should put in some time gates, even though that limits some of the freedom… Not sure. I suppose we are conditioned by video games to click on things in mindless rapid succession. Creators might need to force individuals to pause in certain situations so that they actually notice what the author is drawing their attention to.
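One way to add the time gates mentioned above is a cooldown that ignores presses arriving too soon after the last accepted one. This is a hypothetical Python sketch; the `CooldownGate` name and the 1.5-second value are assumptions, and in a Unity project the same idea would be written as a script attached to the button.

```python
class CooldownGate:
    """Accept a press only if `cooldown` seconds have passed since the last accepted one."""

    def __init__(self, cooldown: float):
        self.cooldown = cooldown
        self.last_accepted = None  # no press accepted yet

    def press(self, now: float) -> bool:
        # Reject presses that arrive before the cooldown has elapsed.
        if self.last_accepted is not None and now - self.last_accepted < self.cooldown:
            return False
        self.last_accepted = now
        return True


# Hypothetical rapid-fire presses (seconds since the scene started).
gate = CooldownGate(1.5)
results = [gate.press(t) for t in (0.0, 0.2, 0.4, 1.6, 1.7, 3.2)]
print(results)  # [True, False, False, True, False, True]
```

Rejected presses do not reset the timer, so a user mashing the button still gets through once per cooldown period rather than being locked out entirely.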