Pressure Project 2: Creating an Escape

Posted: February 25, 2025

For this second pressure project, I had seven hours to design an interactive mystery without using a traditional mouse and keyboard. Based on feedback from my first project, which felt like the start of a game, I decided to fully embrace game mechanics this time. However, I initially struggled with defining the mystery itself. My first thought was to tie it into JumpPoint, a podcast series I wrote about time travel, but I quickly realized that the complexity of that narrative wouldn’t fit within a three-minute experience. Instead, I leaned into ambiguity, letting the interaction itself shape the mystery.

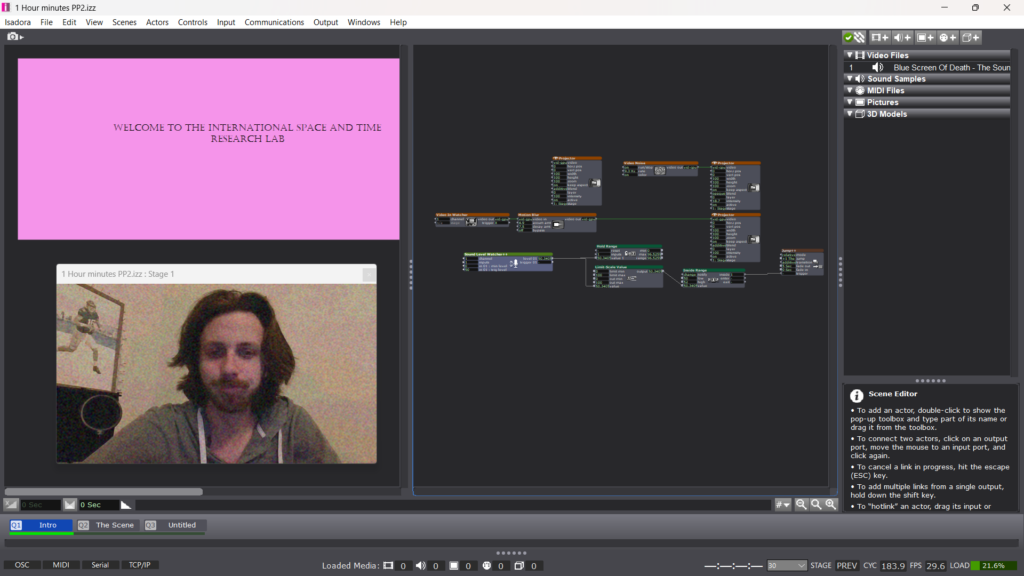

My first hour was spent setting up a live video feed that would pose questions to the user about their current state and environment. To achieve this, I used actors such as video noise and motion blur. My initial concept was to have the experience activated via depth sensors, something I had actively avoided in my first project. I set this up, only to realize that between my high-quality webcam and the Makey Makey, all of my USB ports were in use. So I pivoted to a sound level watcher, which would activate the experience.

My second cue, titled “The Scene,” serves as the soundbed for the experience. An enter scene trigger gives the appearance of bypassing this scene in real time, with the third scene using an Activate Scene actor to trigger the music.

If you look at the next screenshot, you will notice that the project says “One Hour mark.” This is not true. It is also your reminder to save early and save often: twice during this project, I had the misfortune of having about half an hour of work disappear.

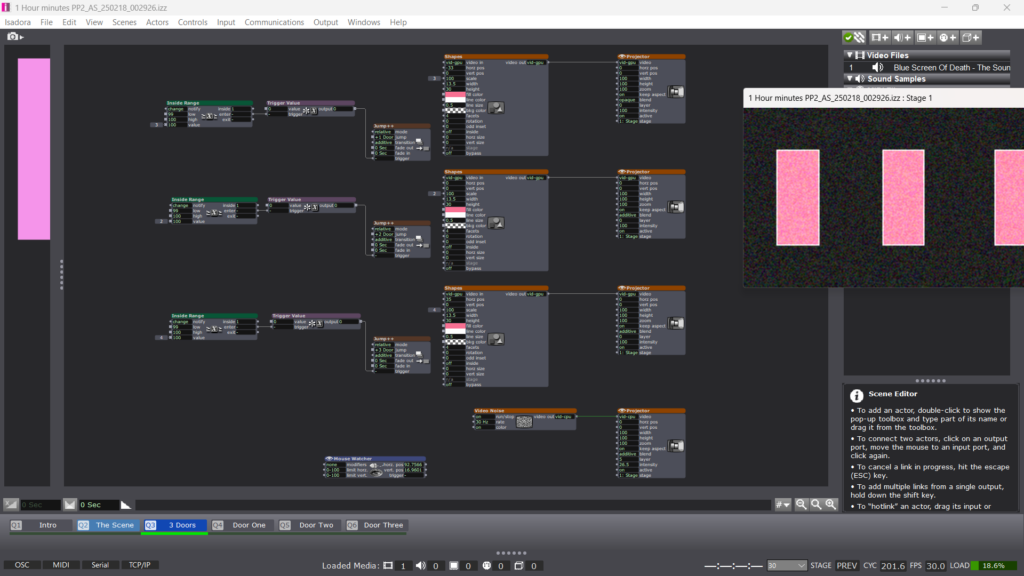

So between hour one and hour two, I set up what would be the meat of the experience: three doors with no clear path behind each. This is where I intended to really incorporate the Makey Makey, since the first cue is activated by sound. In the control panel, I created three sliders and attached each one to a shape actor as well as an inside range actor. This created the appearance that a door is coming closer if you choose to “open” it, while also producing a number which, when hit, would send a trigger to a Jump++ actor to take the user to the outcome of Door 1, 2, or 3. A mouse watcher was also added to track movement from the Makey Makey, though at this point I had not decided how it would be arranged.
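For readers who think in code, the slider-and-range logic can be sketched in Python. This is only an analogy, not how Isadora works internally: the `Door` class, the 0 to 100 slider scale, the range bounds, and the `scale_for` mapping are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Door:
    """Hypothetical stand-in for one slider wired to a range check."""
    name: str
    low: float    # assumed lower bound of the trigger range
    high: float   # assumed upper bound of the trigger range
    inside: bool = False

    def update(self, slider: float) -> bool:
        """Return True only at the moment the slider enters the range."""
        now_inside = self.low <= slider <= self.high
        entered = now_inside and not self.inside
        self.inside = now_inside
        return entered


def scale_for(slider: float) -> float:
    """Map an assumed 0-100 slider to a scale factor so the door appears closer."""
    return 1.0 + slider / 100.0


door = Door("Door 1", low=95.0, high=100.0)
for value in (0, 50, 96, 98):
    if door.update(value):
        # In the real patch this is where the jump to the door's outcome fires.
        jump_target = door.name
```

The same slider value drives two things at once, the visual scale and the range check, which mirrors attaching one slider to both a shape and a range test.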

Over the next hour, I set up the outcomes of Doors 1 and 3. Wanting to unlock the achievement of “expressing delight,” I decided that Door 1 would use an enter scene trigger for three purposes. The first was to deactivate the soundbed. The second was to start a video clip. The clip is from Ace Ventura: Pet Detective, where Ace, disheveled, comes out of a room and declares “WOO, I would NOT go in there!!” For the third purpose, I inserted a trigger delay lasting the duration of the clip, feeding a Jump++ actor that goes back to the three doors.

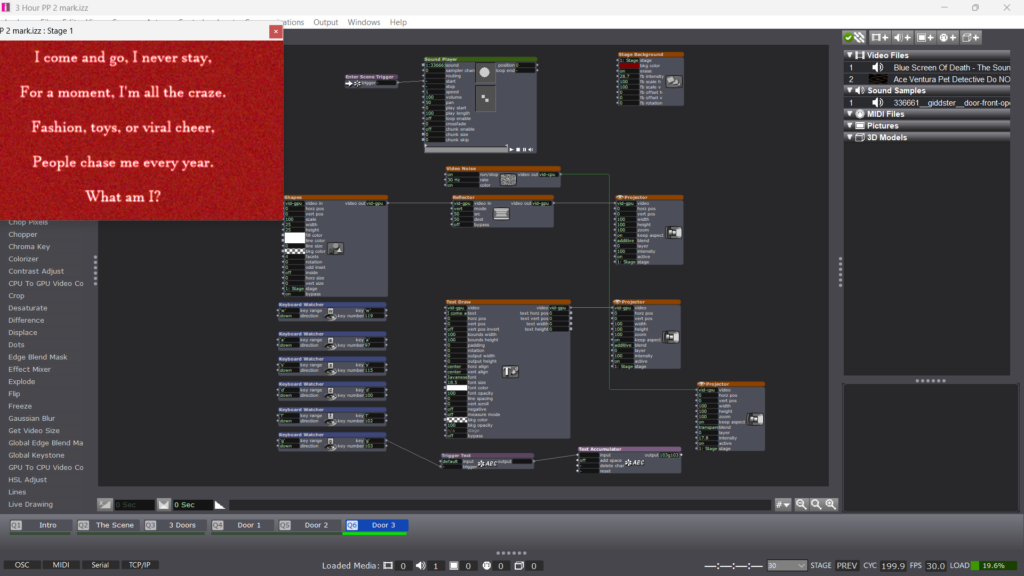

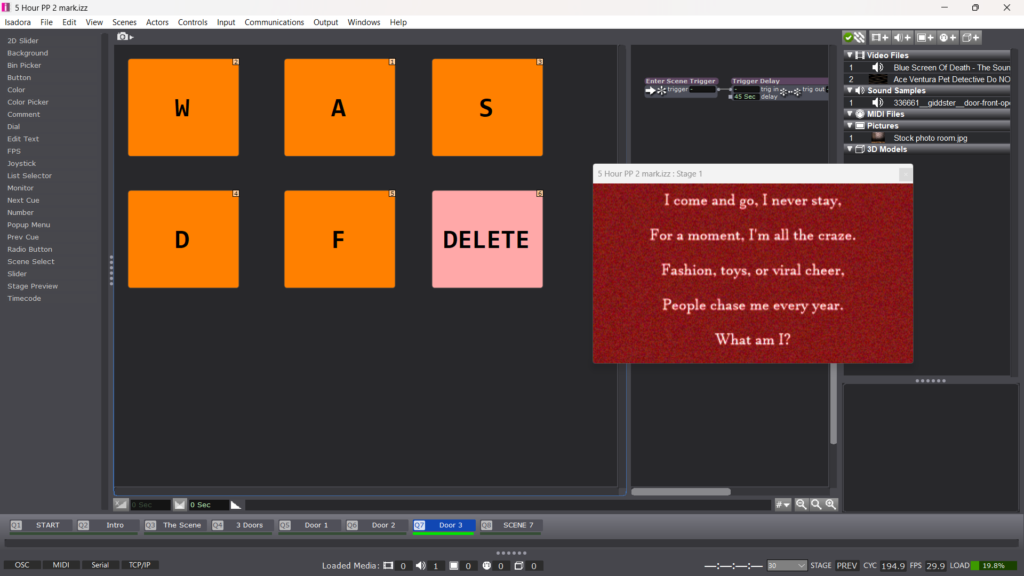

Behind Door 3, I decided to set up a riddle or a next step. To do this, I used a text draw that would rise via an envelope generator. You’ll observe a few other actors, but those were purely for aesthetics. I wanted users to be able to use the Makey Makey in another capacity, so I used several keyboard watchers to hopefully catch every letter being typed. I made several attempts to figure out exactly how to inventory each letter being typed before emailing my professor, who helped out big time!

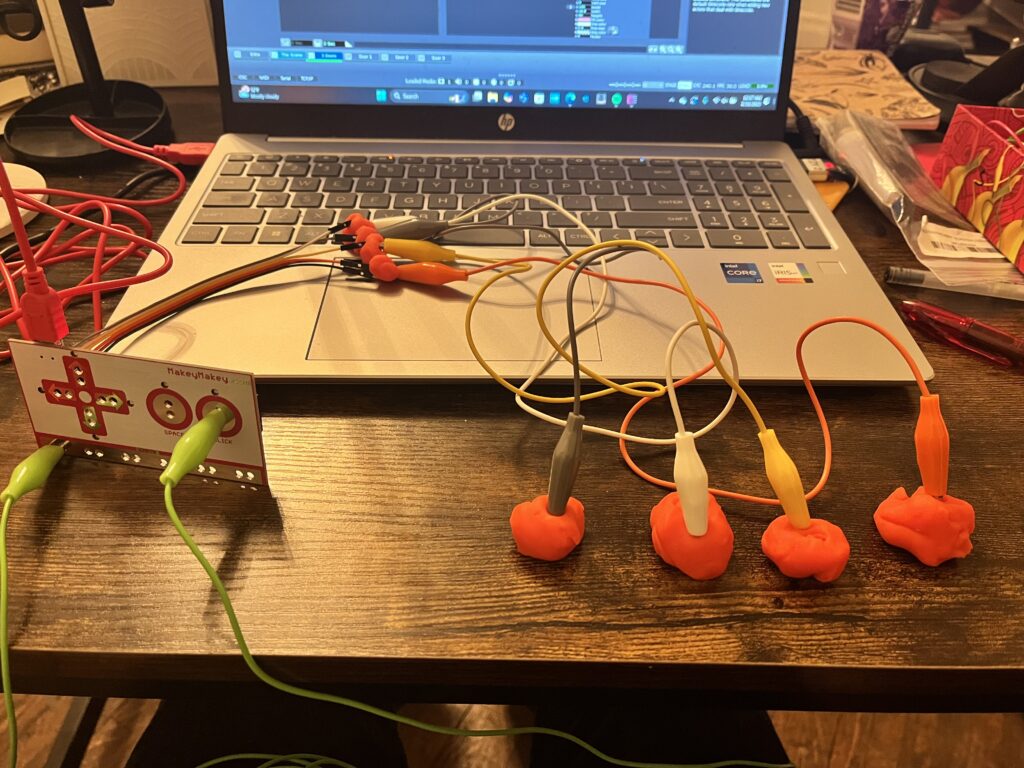

While I awaited a response, I spent about half an hour experimenting with the Makey Makey, testing its robustness with and without playdough, which I intended to be the conduit for the experience. Please ignore the messy desk; a mad scientist was at work.

Hour 5 is where my pressure project went from being a stressor to being REALLY fun. (Thanks Alex!) Instead of a keyboard watcher, I created six text actors to correspond with WASDFG. Those text actors connected to a text accumulator, which was attached to two additional actors: a text draw, which put the text on screen as I intended, and a text comparator, which, when the text matched the intended answer, would send a trigger to take the user to another scene. Instead of using the WASDFG inputs of the Makey Makey, I stuck to the directional and click inputs that I had played around with earlier and created those keys as buttons on the control panel. This would still give the user the experience of typing their answer without having to add six additional tangible controls. As hour five drew to a close, I set up the outcome of Door 2, which mirrors the features and actors of the other two doors.
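The accumulate-then-compare idea can be sketched in Python as follows. This is a rough analogy rather than the actual patch: the class name, the exact-match comparison, and especially the answer `"SWAG"` are hypothetical, since the real riddle answer is not given here.

```python
class TextAccumulator:
    """Hypothetical stand-in: collects one letter per button press."""

    def __init__(self) -> None:
        self.text = ""

    def add(self, letter: str) -> str:
        """Append a letter and return the text so far (what would be drawn on screen)."""
        self.text += letter
        return self.text

    def clear(self) -> None:
        """Reset the typed text, for example when the scene is re-entered."""
        self.text = ""


def matches(typed: str, answer: str) -> bool:
    """Exact comparison; a match is what would fire the jump to the next scene."""
    return typed == answer


ANSWER = "SWAG"  # placeholder answer built from the W, A, S, D, F, G buttons

accumulator = TextAccumulator()
jumped = False
for press in ["S", "W", "A", "G"]:
    on_screen = accumulator.add(press)
    if matches(on_screen, ANSWER):
        jumped = True
```

Feeding the same accumulated string to both the display and the comparison keeps what the user sees and what the game checks in sync.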

With the heavy use of the text draw actor, I was unintentionally creating a narrative voice for the piece. So in the sixth hour, I worked on the bookend scenes to make it more cohesive. I added a scene at the top which included a riddle to tell the user the controls. I also used an explode actor on the text to hopefully instill the notion that the user needed to be quiet in order to play the game (the opposite of what they would have to do on the live video, a fun trick). I created a scene on the back end where I felt that a birthday cake was an interesting twist that didn’t get too dark in plot. I liked the idea of another choice, so I simply narrowed it down from three options, like the doors, to two, still using buttons in the control panel.

It was also in this sixth hour that I realized I didn’t know how this mystery was going to end. I had to spend a bit of time brainstorming, but ultimately felt that this experience was an escape of some kind. To avoid going in a dark direction, I decided that the final scene would lead to an escape room building.

My final hour was spent on two things: first, establishing the scenes that would lead to the escape room ending; second, setting up the experience in my office and asking a peer to play it so I could gauge the level of accessibility. Feeling confident after this test, I brought my pressure project to class, where I received positive feedback.

Much like an escape room, there was collaboration in both the tangible experience of operating the controls and the decision making. I did observe that it wasn’t clear which parts of the controls were the ground and which were the click. In the future, I would like to distinguish these more clearly with an additional label.

Something else that occurred was that the live video scene was instantly bypassed due to the baseline volume level in the room. In the future, I would use an actor that updates the range in real time, as opposed to the hold range actor that I used as a baseline.
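One way to picture a range that updates in real time is a running baseline that follows the room's ambient level, with the trigger firing only when the sound rises clearly above it. The Python sketch below is an assumption about how that could work, not a description of any Isadora actor; the class name, the 0 to 1 level scale, and the `margin`, `smoothing`, and `warmup` values are all illustrative.

```python
from typing import Optional


class AdaptiveSoundGate:
    """Hypothetical gate: triggers when the level exceeds a moving baseline."""

    def __init__(self, margin: float = 0.2, smoothing: float = 0.1, warmup: int = 5) -> None:
        self.margin = margin        # how far above the baseline counts as "loud"
        self.smoothing = smoothing  # how quickly the baseline follows the room
        self.warmup = warmup        # samples to listen to before allowing a trigger
        self.baseline: Optional[float] = None
        self.samples = 0

    def update(self, level: float) -> bool:
        """Feed one sound level (assumed 0.0-1.0); return True if it should trigger."""
        if self.baseline is None:
            self.baseline = level
        triggered = self.samples >= self.warmup and level > self.baseline + self.margin
        if not triggered:
            # Only quiet samples move the baseline, so a shout does not raise it.
            self.baseline += self.smoothing * (level - self.baseline)
        self.samples += 1
        return triggered


gate = AdaptiveSoundGate()
room_noise = [0.5] * 10            # a classroom that is never silent
results = [gate.update(level) for level in room_noise]
loud_moment = gate.update(0.9)     # someone finally speaks up
```

With a fixed threshold set in a quiet office, a constant room level of 0.5 might trigger immediately; here the same room simply becomes the new baseline.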

With how much jumping was occurring between scenes, I struggled throughout with ensuring the values would be where I wanted them upon entry into each scene. It wasn’t until afterwards that I was made aware of the initialized value feature on every actor. This would be a fundamental component if I were to work on this project moving forward.
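The idea of resetting values on scene entry can be illustrated with a small Python sketch. It is an analogy under stated assumptions, not a description of Isadora's feature: the `Scene` class and the value names such as `door1_slider` are hypothetical.

```python
class Scene:
    """Hypothetical scene that restores its starting values every time it is entered."""

    def __init__(self, name: str, initial_values: dict) -> None:
        self.name = name
        self._initial = dict(initial_values)  # kept untouched as the reference copy
        self.values = dict(initial_values)    # the live values that change during play

    def enter(self) -> None:
        """Reset the live values to their initial state on entry."""
        self.values = dict(self._initial)


doors = Scene("Three Doors", {"door1_slider": 0.0, "door2_slider": 0.0, "door3_slider": 0.0})
doors.values["door1_slider"] = 97.0  # the user "opens" Door 1 and jumps away
doors.enter()                        # jumping back starts from a clean state
```

Without the reset, returning to the doors would leave Door 1 already at its trigger value.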