Movement Meditation Room Cycle 3

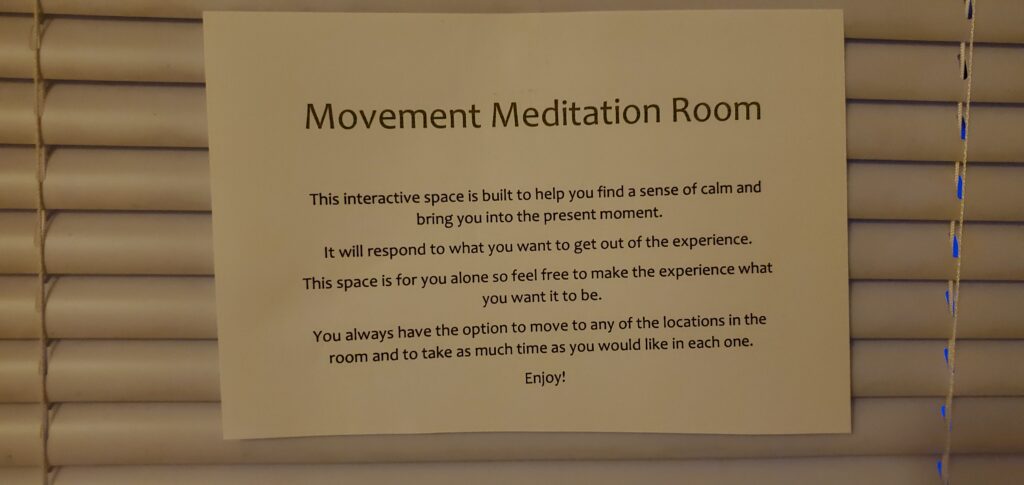

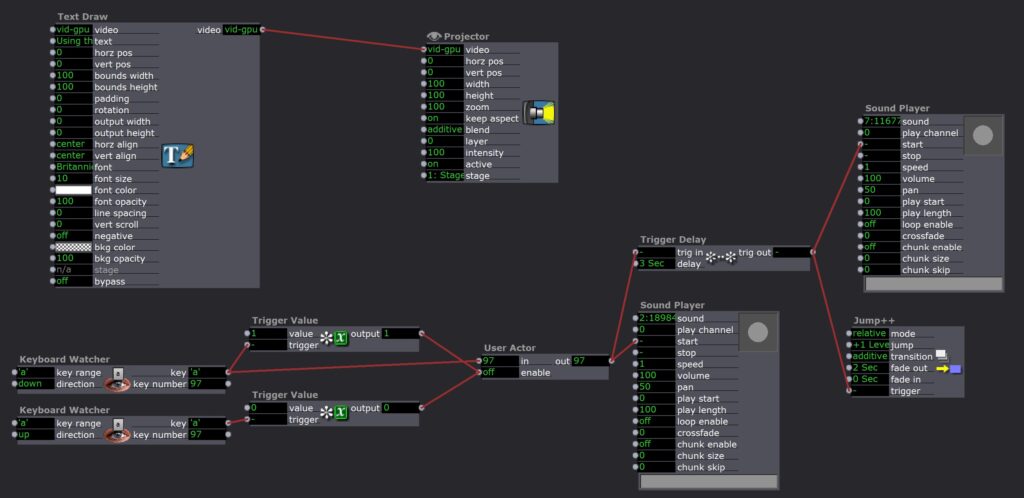

Posted: December 13, 2020

Cycle 3 was a chance for me to figure out how to guide people through the experience. Now that all of the technical elements of the room worked consistently, I could focus on orienting people in the space. Originally I thought of putting something on the door that explained the room, but I felt that a lot of the information wouldn’t make sense until people were in the room itself. Instead, I went with an audio guidance system that told the user how each element of the room worked as they moved through the space. I still had a brief description on the door to welcome users into the space:

Here is a link where you can watch me go through each of the room’s locations and hear the audio guidance system in action: https://osu.box.com/s/dd5izpw890mx3330r43qgdj3fazkux6i

Users could also move back and forth between the room’s locations if they wanted to experience either location again. The triggers ran on timers, so a location’s actions could restart if the user re-entered it after being away for a set amount of time. This meant the amount of time someone spent in the space was entirely up to them and what they wanted to experience.
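The timer behavior described above can be sketched in Python. The room itself was built from Isadora actors, so this is only a minimal model and not the actual patch. It assumes the sensor reports on each update whether the user is inside a location. `LocationTrigger` and `rearm_after` (the absence time needed before a location can restart) are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LocationTrigger:
    """Fire a location's action on entry, then stay quiet until the user
    has been away for at least `rearm_after` seconds (hypothetical parameter)."""
    rearm_after: float
    armed: bool = True
    left_at: Optional[float] = None

    def update(self, inside: bool, now: float) -> bool:
        """Return True when the location's action should (re)start."""
        if not inside:
            # Remember when the user first stepped out of the location.
            if self.left_at is None:
                self.left_at = now
            return False
        # The user is inside: re-arm only if they were away long enough.
        if self.left_at is not None and now - self.left_at >= self.rearm_after:
            self.armed = True
        self.left_at = None
        if self.armed:
            self.armed = False
            return True
        return False


trigger = LocationTrigger(rearm_after=30.0)
samples = [(True, 0), (True, 5), (False, 10), (True, 20), (False, 25), (True, 60)]
fired = [trigger.update(inside, now) for inside, now in samples]
print(fired)  # [True, False, False, False, False, True]
```

In this example a 10-second absence does not restart the location, but a 35-second absence does.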

Unfortunately, due to Covid restrictions, the only people who were able to experience this project were me and one of my housemates. This is what she said about her experience: “The room offered me a place to reconnect with myself amidst the hectic everyday tasks of life. When in the room, I was able to forget about the things that always linger in the back of my mind, like school work and job applications, and focus on myself and the experience around me. The room was a haven that awakened my senses by helping me unplug from the busy city and allowing me to interact with the calming environment around me.” I found it interesting that she felt the technology in the room helped her “unplug.” Her feedback raised further questions for me about how technology can hide its influence on our surroundings and give us experiences that feel “natural” or “unplugged” while still depending on technology.

Overall, this cycle felt very successful in providing a calming and centering experience that engaged multiple senses and could be guided by the user’s own interests. I also tried to add a biofeedback system that would play the user’s heartbeat through the room’s sound system, hopefully encouraging deeper body awareness, but the technology I had available was limited.

To pick up the heartbeat, I used a contact microphone of the kind usually used on musical instruments. Because it was so sensitive, it also picked up the movement of the tendons in my wrist whenever I moved my hand or fingers. I did successfully create a heartbeat sound that matched the rhythm of my heartbeat. However, it only worked if the arm was completely still from the elbow down, and that conflicted too much with goals that were more important to me, like comfort and freedom to move.
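One common way to turn a contact-mic signal into heartbeat triggers is a threshold with a refractory period, sketched below in Python. The post does not say how the heartbeat sound was actually generated, so this approach is an assumption. `detect_beats`, the threshold, and the refractory time are hypothetical values. The sketch also illustrates the problem described above: a tendon "click" that arrives long enough after a real beat looks exactly like another heartbeat.

```python
def detect_beats(samples, rate, threshold, refractory=0.3):
    """Return times (s) where |signal| rises past `threshold`.

    Crossings closer than `refractory` seconds to the previous beat are
    ignored. This suppresses ringing but not slower movement artifacts."""
    beats = []
    last_beat = -refractory
    above = False
    for i, sample in enumerate(samples):
        t = i / rate
        if abs(sample) >= threshold:
            # Only a rising edge outside the refractory window counts as a beat.
            if not above and t - last_beat >= refractory:
                beats.append(t)
                last_beat = t
            above = True
        else:
            above = False
    return beats


rate = 10  # samples per second (a toy rate for readability)
signal = [0.0] * 30
for i in (0, 10, 20):   # three real beats, one second apart
    signal[i] = 1.0
signal[12] = 0.8        # artifact 0.2 s after a beat: suppressed
signal[16] = 0.8        # artifact 0.6 s after a beat: counted as a false beat
print(detect_beats(signal, rate, threshold=0.5))  # [0.0, 1.0, 1.6, 2.0]
```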

In future cycles, I might try to build a more robust biofeedback system for the heartbeat and breath. I might also look into whether any Hybrid Arts Lab locations could host a Covid-safe installation of the room so that more people can experience it.

Even if the project itself isn’t able to continue, I feel I learned a lot about how different kinds of devices interface with Isadora. I also have a saved global actor that houses the depth sensor trigger system I used to structure the room. My realm of possibility has expanded to include more technology and interdisciplinary approaches to creating art. The RSVP cycles that we used to create these final projects have already helped me start planning other projects. Coming out of this, I feel like I have about a dozen more tools in my art toolbox, and I am extremely grateful for the opportunity to develop my artmaking skills beyond dance.

Movement Meditation Room Cycle 2

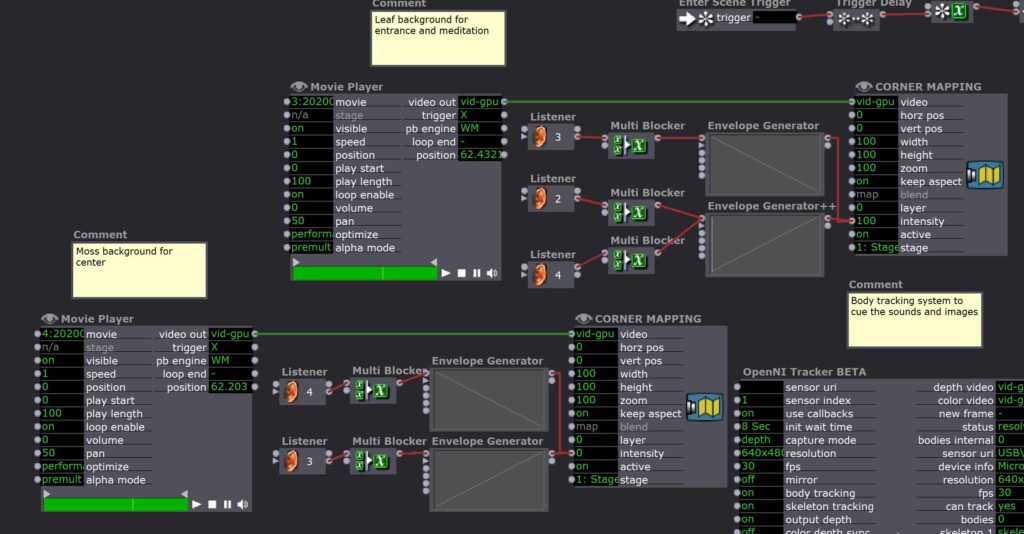

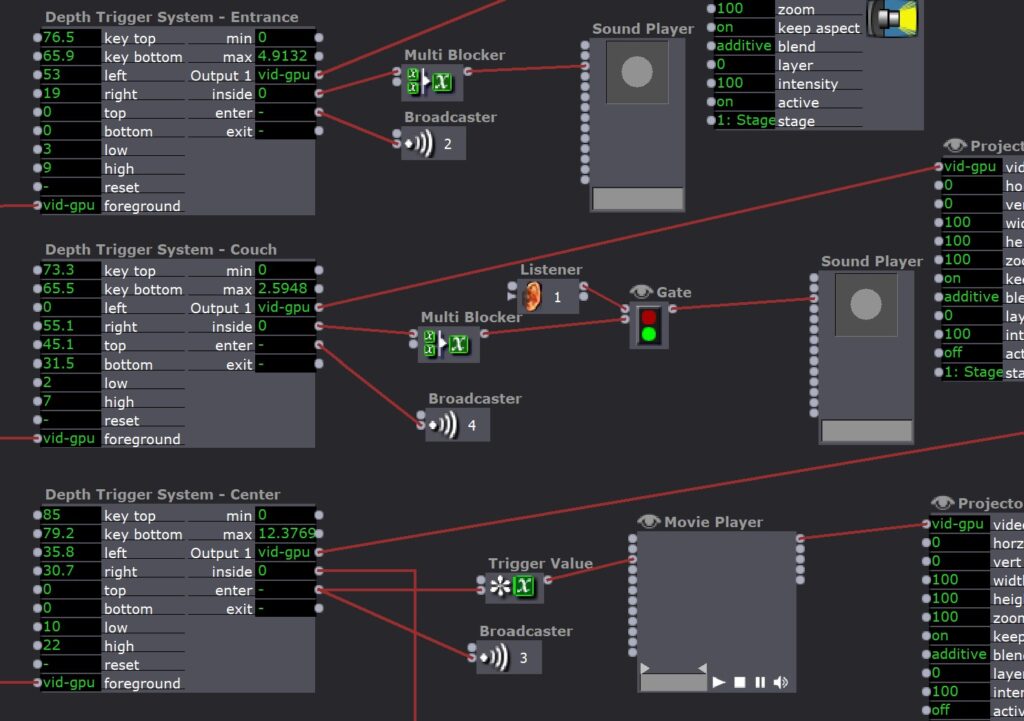

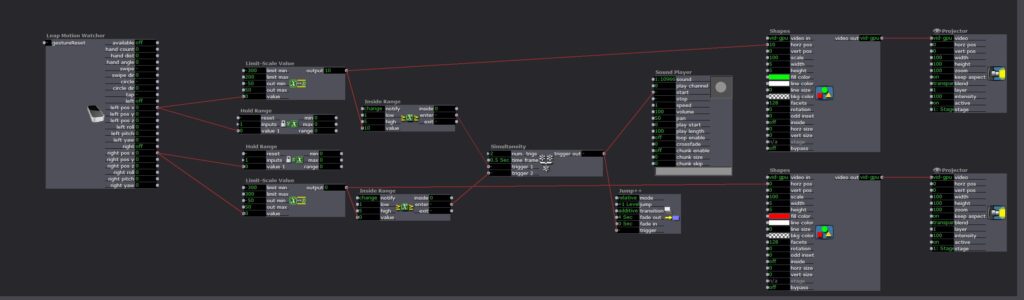

Posted: November 24, 2020

Cycle 2 of this project was about layering projections onto the mapping of each location in the room. I started with the backgrounds, which faded in and out as the user moved around the room. A gentle view of leaves greeted them when they entered and while they meditated. When they walked to the center of the room, the background shifted to a soft mossy ground. This was fairly easy because I already had triggers built for each location, so all I had to do was connect the intensity of each background to the listener used for that location. I added multiblockers so that a location wouldn’t keep retriggering itself while the user stayed there. Each one is timed to the duration of the sound that plays at that location.
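The logic in this paragraph can be modeled in Python: a per-location trigger drives a background’s intensity, and a blocker is timed to the length of that location’s sound. This is a sketch, not the Isadora patch. `Multiblocker` and `fade` are hypothetical helpers modeled on the description above rather than on Isadora’s own actor, and the fade rate is an illustrative value. The 8-minute hold matches the length of the meditation track.

```python
class Multiblocker:
    """Pass one trigger, then ignore further triggers for `hold` seconds.

    Modeled on the behavior described in the post, not on Isadora's actor."""

    def __init__(self, hold: float):
        self.hold = hold
        self.blocked_until = float("-inf")

    def trigger(self, now: float) -> bool:
        if now < self.blocked_until:
            return False
        self.blocked_until = now + self.hold
        return True


def fade(current: float, target: float, rate: float, dt: float) -> float:
    """Move a background's intensity (0-100) toward `target` by at most rate*dt."""
    step = rate * dt
    if current < target:
        return min(current + step, target)
    return max(current - step, target)


# The couch location's guided meditation runs 8 minutes, so block for that long.
couch = Multiblocker(hold=8 * 60)
print([couch.trigger(t) for t in (0, 10, 479, 480)])  # [True, False, False, True]

# The leaves background fading in at a hypothetical 25 intensity units per second.
intensity = 0.0
for _ in range(5):
    intensity = fade(intensity, 100.0, rate=25.0, dt=1.0)
print(intensity)  # 100.0
```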

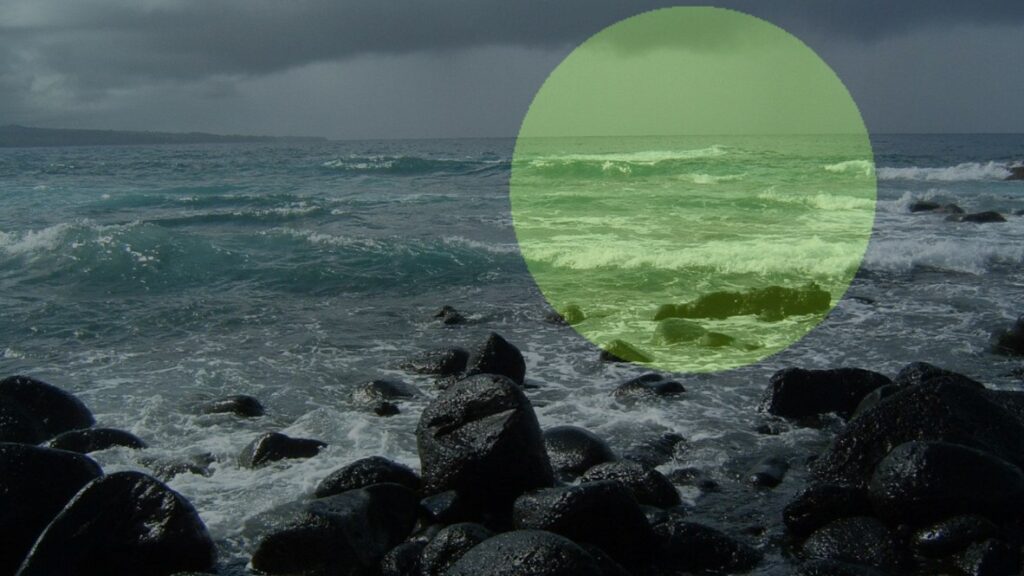

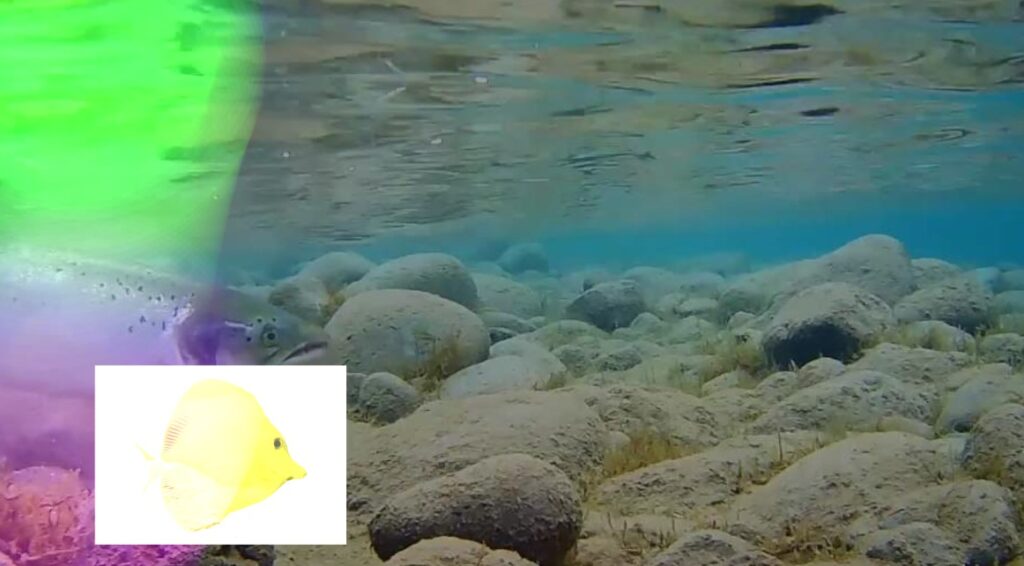

The more complicated part was the experience I wanted in the center. I wanted the user to be able to see themselves as a “body of water” and to interact with visual elements in the projection that made them open up. I wanted an experience that was exciting, imaginative, open-ended, and fun: one that would inspire the user to move their body and bring them into the moment as they explored the possibilities of the room at this location. My lab day with Oded in the Motion Lab is where I got all of the tools for this part of the project.

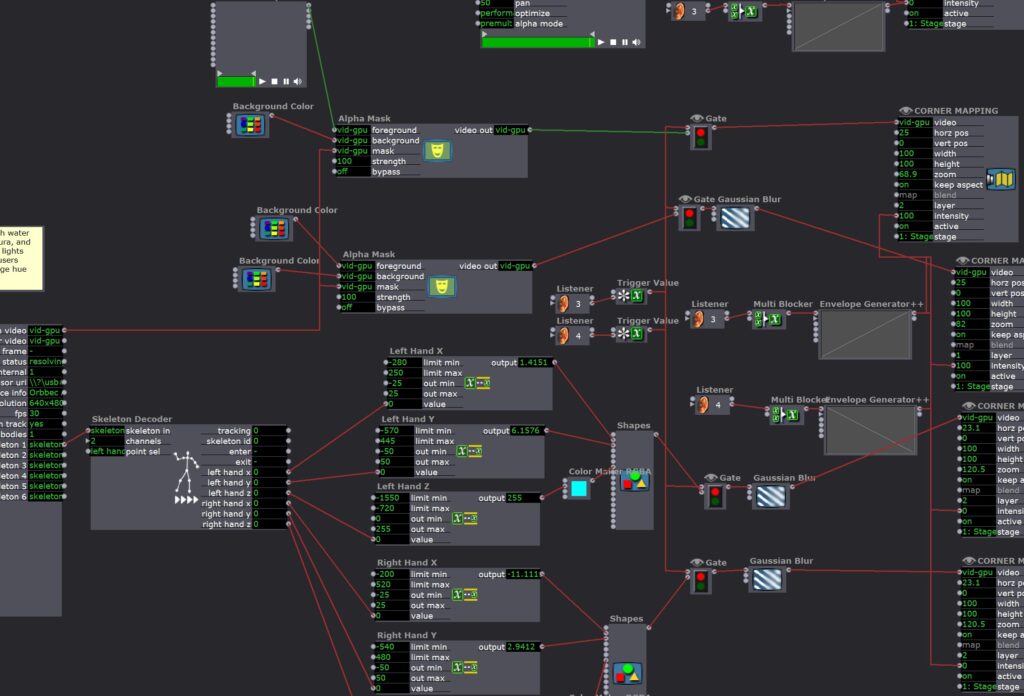

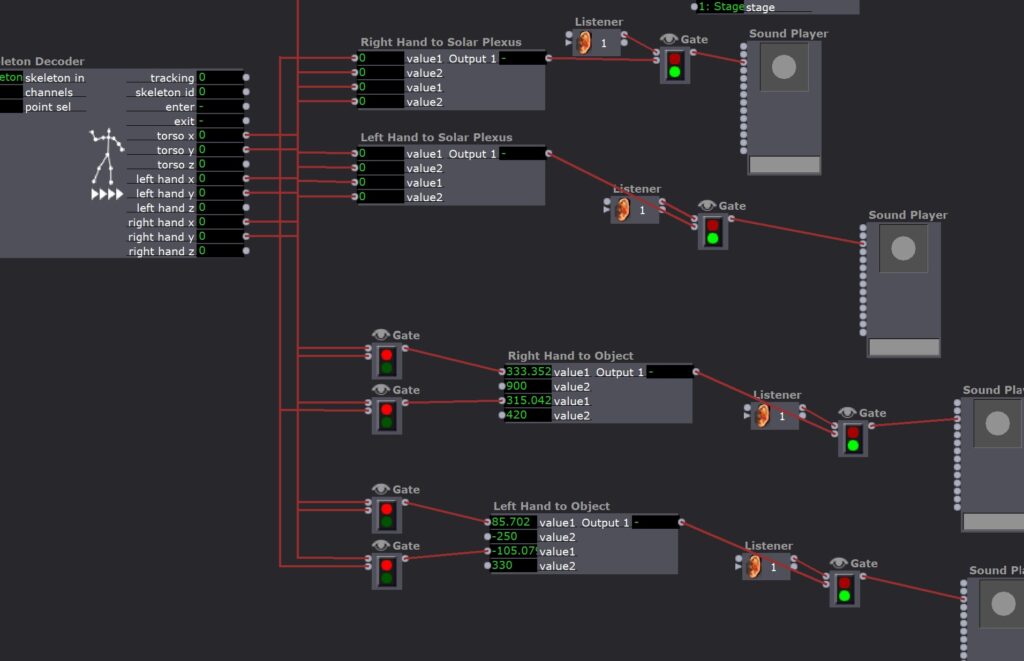

I rigged up a second depth sensor so that the user could turn toward the projection and still interact with it. Then I created an alpha mask from that sensor’s data, which allowed me to fill the outline of the user’s body with a video of moving water. I also created an “aura” of glowing orange light around the person and two glowing globes of light that tracked their hand movements. The globes change color based on the z-axis position of the hands, so there’s a little bit to explore there. All of these elements fade in on the same trigger that fires when the user enters the center location of the room.
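The depth-to-alpha-mask step and the z-based color change can be sketched with NumPy. The sketch assumes the sensor delivers a 2-D array of depths in millimeters, where 0 means "no reading". Many depth cameras behave this way, but that is an assumption here. `body_mask`, `composite`, and `hand_color` are hypothetical helpers, and so are the near/far limits and the second color. In the room itself, all of this was done with Isadora actors.

```python
import numpy as np


def body_mask(depth_mm: np.ndarray, near: float = 500, far: float = 2500) -> np.ndarray:
    """True where a pixel's depth lies in [near, far) mm, i.e. the user's body.

    Zero-depth pixels (no reading) are excluded because near > 0."""
    return (depth_mm >= near) & (depth_mm < far)


def composite(water: np.ndarray, background: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Show the water video inside the body outline and the background elsewhere."""
    alpha = mask[..., np.newaxis].astype(np.float32)  # (H, W) -> (H, W, 1)
    return water * alpha + background * (1.0 - alpha)


def hand_color(z: float, z_min: float = 0.5, z_max: float = 2.5,
               near_rgb=(255, 140, 0), far_rgb=(0, 120, 255)) -> tuple:
    """Blend linearly from near_rgb to far_rgb as the hand's z (meters) increases."""
    t = float(np.clip((z - z_min) / (z_max - z_min), 0.0, 1.0))
    return tuple(int(round(a + (b - a) * t)) for a, b in zip(near_rgb, far_rgb))


depth = np.array([[0, 600], [3000, 1200]])   # toy 2x2 depth frame
mask = body_mask(depth)
water = np.ones((2, 2, 3))                   # stand-in for a water video frame
background = np.zeros((2, 2, 3))
frame = composite(water, background, mask)
print(mask.tolist())                         # [[False, True], [False, True]]
print(hand_color(0.2), hand_color(3.0))      # (255, 140, 0) (0, 120, 255)
```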

I am really proud of all of this! It took me a long time to work out all of the kinks, and it all runs really smoothly. Watching Erin (my roommate) go through it, I really felt like it was landing exactly how I wanted it to.

The main next step from here is developing a guide for the user. It could be something written on the door, or possibly an audio guide that plays while the user walks through the room. I also want to figure out how to attach a contact mic to the system so that the user might be able to hear their own heartbeat during the experience.

Here is a link to watch my roommate go through the room: https://osu.box.com/s/hzz8lp5s97qw5q47ar32cgblh5hus8rs

Here is the sound file for the meditation in case you want to do it on your own:

Meditation Room Cycle 1

Posted: November 10, 2020

The goal for my final project is to create an interactive meditation room that responds to the user’s choices about what they need and allows them to feel grounded in the present moment. The first cycle was just to map out the room and the different activities that happen at different points in the space. There are two ways the user can interact with the system: their location in the space and the position of their hands relative to various points.

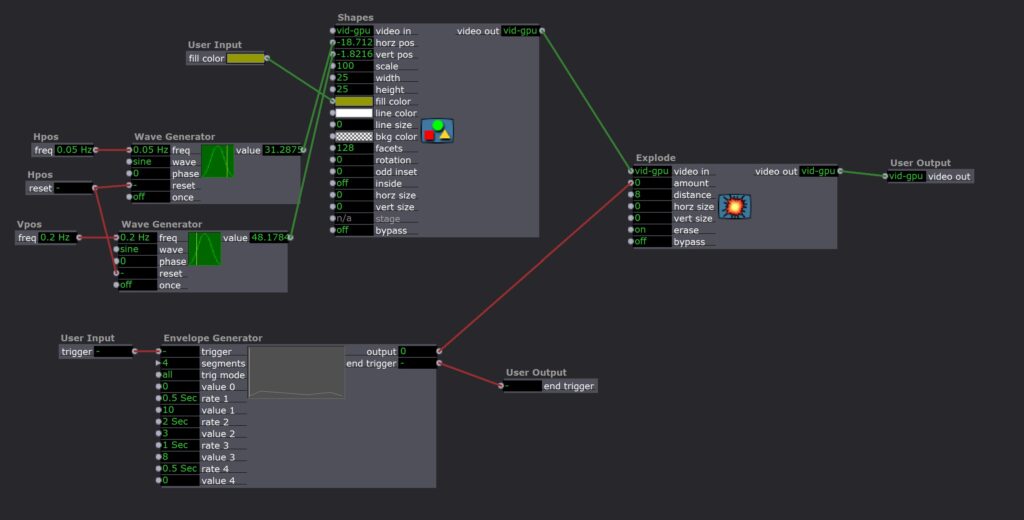

There were three locations in the room that triggered a response. The first was the entrance: upon entering, the system played a short clip of birdsong to welcome the user into the room. From there the user had two choices. I am not sure yet whether I should dictate which experience comes first or leave that for the user to decide, but I think I can make the program work either way. One option was to sit on the couch, which started an 8-minute guided meditation focused on the breath, heartbeat, and stillness. The other option was to move to the center of the room. This is more of a movement experience, where the user is invited to move as they like (a projection that falls across them and responds to their movement will come in later cycles). When the user entered this location, a 4-minute ambient track played to create an atmosphere of reflection and perhaps inspire soft movement. This location is primarily where the body tracking triggers are used.
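The three locations amount to a lookup from the user’s tracked floor position to a zone and its response. The Python sketch below assumes the depth sensor provides an (x, z) floor position in meters. The rectangle coordinates and the `locate` helper are hypothetical and are not measurements of the actual room.

```python
from typing import Optional

# Hypothetical zone rectangles on the floor, in sensor coordinates (meters):
# name -> (x_min, x_max, z_min, z_max)
ZONES = {
    "entry": (-2.0, -1.0, 3.0, 4.0),
    "couch": (1.0, 2.5, 0.5, 1.5),
    "center": (-0.75, 0.75, 1.5, 3.0),
}

# What each location starts, per the description above.
RESPONSES = {
    "entry": "birdsong clip",
    "couch": "8-minute guided meditation",
    "center": "4-minute ambient track",
}


def locate(x: float, z: float) -> Optional[str]:
    """Return the zone containing the point (x, z), or None if it is in no zone."""
    for name, (x0, x1, z0, z1) in ZONES.items():
        if x0 <= x <= x1 and z0 <= z <= z1:
            return name
    return None


zone = locate(0.0, 2.0)
print(zone, "->", RESPONSES[zone])  # center -> 4-minute ambient track
```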

Two of the body tracking triggers are used throughout the room: they play a heartbeat and a sound of calm breathing when the user’s arms are near the center of their body. This isn’t always reliable, and with all of the other sounds layered on top it sometimes felt like too much. I am thinking of having these triggers work only upon entry, and maybe keeping just the heartbeat in the center, using gates the same way I did with the other triggers in the center of the room. The other two body tracking triggers use props in the room that the user can touch. A rock hanging from the ceiling plays the sound of water when touched, and a flower attached to the wall plays the sound of wind when touched. Both are gated so that they only turn on when the user is in the correct spot at the center of the room.
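The gating described here can be sketched in Python: body-centered triggers fire anywhere, but prop triggers only count while the user is in the center. Positions are assumed to be (x, y, z) tuples in meters from the skeleton tracker. The radii, the prop coordinates, and the `sounds_to_play` helper are all hypothetical.

```python
import math

# Hypothetical prop positions (x, y, z in meters) and the sounds they trigger.
PROPS = {
    "rock": ((0.5, 1.5, 2.0), "water"),
    "flower": ((-1.0, 1.2, 3.0), "wind"),
}


def sounds_to_play(location, hands, torso, body_radius=0.25, touch_radius=0.15):
    """Return the sounds triggered by one frame of tracking data.

    location: current zone name (e.g. "center")
    hands: list of (x, y, z) hand positions
    torso: (x, y, z) position of the center of the body
    """
    sounds = []
    # Body-centered triggers: both hands drawn in toward the center of the body.
    if hands and all(math.dist(h, torso) <= body_radius for h in hands):
        sounds += ["heartbeat", "breathing"]
    # Prop triggers are gated: they only respond in the center location.
    if location == "center":
        for position, sound in PROPS.values():
            if any(math.dist(h, position) <= touch_radius for h in hands):
                sounds.append(sound)
    return sounds


torso = (0.0, 1.0, 2.0)
print(sounds_to_play("center", [(0.1, 1.0, 2.0), (-0.1, 1.0, 2.0)], torso))
# ['heartbeat', 'breathing']
print(sounds_to_play("center", [(0.5, 1.5, 2.0), (0.1, 1.0, 2.0)], torso))  # ['water']
print(sounds_to_play("entry", [(0.5, 1.5, 2.0), (0.1, 1.0, 2.0)], torso))   # []
```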

Overall I feel good about this cycle. I was able to overcome some of the technical challenges of body tracking and depth tracking, as well as the timing of all the sound files. I was also able to prove the concept of triggering events based on the user’s interaction with the space, which was my initial goal.

The next steps from here are to incorporate the projections and possibly a biofeedback system for the heartbeat. I also need to think about how I am going to guide the experience. I think I will put some instructions on the door that help users understand what the space is for, how to engage with it, and what choices they can make along the way. I am also not sure how to end it. Technically, the timers are set up so that someone could finish the guided meditation, get up and play in the center space, and then do the guided meditation again if they wanted to. So maybe the ending is up to the user as well?

Here is a link to me interacting with the space so you can see each of the locations and the possible events that happen as well as some of my thoughts and descriptions throughout the room (the sound is also a lot easier to hear): https://osu.box.com/s/iq2idk432jfn2yzbzre91i2gp3y4bu9d

Pressure Project 3

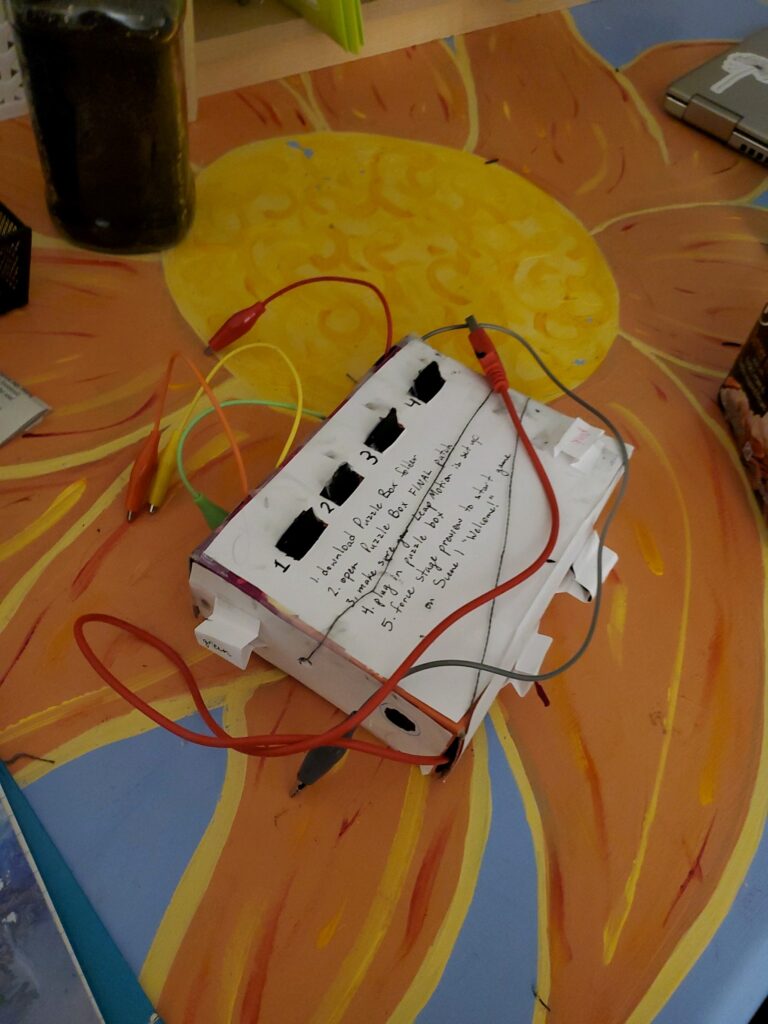

Posted: October 18, 2020

I really focused on the word “puzzle” within this prompt, so I created a box that required physical connections in response to text prompts on the Isadora screen. The box itself was a challenge to make because it was so small (I used a Kroger mac and cheese box). It honestly took me hours to poke the holes in the sides and thread all of the wires through them without messing something up and having to redo it. I wanted to keep the workings of the box hidden so that the person would have to solve the riddle/puzzle/task to complete each level, rather than being able to see where the connections led.

The prompts for each level appeared on the stage of the Isadora patch, while everything else was meant to stay hidden. Each level consisted of a task that had to be completed on the box, followed by a series of hand movements over the LEAP. With each level, the puzzles got harder and the number of gestures increased, so in a way the levels were cumulative.

The first part of each level was done entirely on the box. The second part, which involved the LEAP, used the Isadora stage to guide the user’s hand movements. There were circles to represent the hands and color-coordinated boxes that I hoped would guide the user through the required action. I had assumed that people would hold their hands flat over the LEAP and move slowly, so that is how I tested everything. When I handed it over to Tara, that isn’t what happened. Sometimes the system registered her movement before she could even see what was on the screen, which made the levels unclear.

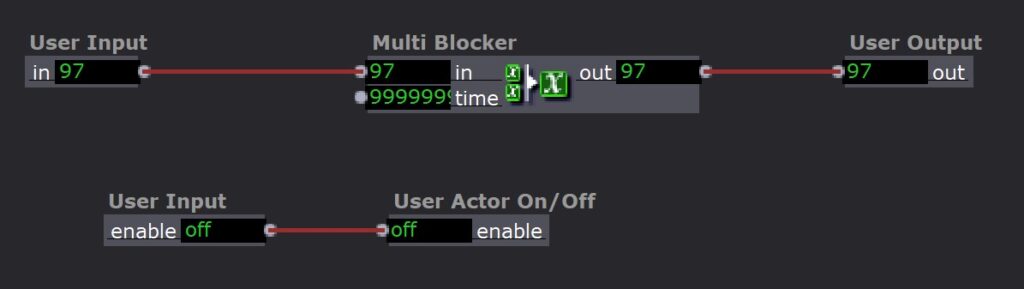

One challenge I had while working on my patch was a puzzle where people had to put a series of tones in ascending order (like a piano). This puzzle used alligator clips and tabs on the box, and a clip touching a tab sent triggers constantly. However, I couldn’t just use a multiblocker, because I assumed people would try out the different alligator clips, so the system also needed to reset once someone had tested a sound. It also needed to recognize that the tones had been played in a specific order, from left to right across the tabs on the box.

I solved the first challenge with a user actor that contained a multiblocker but also let me reset its timer whenever the alligator clip was removed from the tab.
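The user actor’s behavior can be sketched in Python: it blocks the stream of triggers while a clip stays on a tab, but resets the moment the clip is removed. `ResettableBlocker`, its `hold` time, and the event timings are hypothetical. In the patch, this was a multiblocker inside an Isadora user actor.

```python
class ResettableBlocker:
    """Pass the first trigger, block repeats for `hold` seconds,
    and clear the block early when the clip is removed from its tab."""

    def __init__(self, hold: float):
        self.hold = hold
        self.blocked_until = float("-inf")

    def contact(self, now: float) -> bool:
        """Called for every trigger the tab sends while the clip touches it."""
        if now < self.blocked_until:
            return False
        self.blocked_until = now + self.hold
        return True

    def release(self) -> None:
        """Called when the clip is pulled off; the next contact plays again."""
        self.blocked_until = float("-inf")


tab = ResettableBlocker(hold=5.0)
played = [tab.contact(t) for t in (0.0, 0.1, 0.2)]  # clip held on the tab
tab.release()                                       # user tries another clip
played.append(tab.contact(1.0))
print(played)  # [True, False, False, True]
```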

I solved the second challenge by using a different scene for every tone. Once the user found the correct tone, the patch jumped to the next scene. There they got a prompt telling them they had it right and could move on to figuring out which sound went with the next tab on the box.
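Using one scene per tone works like a small state machine. The current scene only accepts the tone that belongs on the current tab, and a correct answer advances to the next scene. Here is a Python sketch of that idea. `TonePuzzle`, its feedback strings, and the note names are hypothetical.

```python
class TonePuzzle:
    """Each index in `order` stands in for one Isadora scene / one tab, left to right."""

    def __init__(self, order):
        self.order = list(order)
        self.scene = 0  # which tab the user is currently solving

    @property
    def solved(self) -> bool:
        return self.scene >= len(self.order)

    def play(self, tone: str) -> str:
        """Return the feedback the current scene would show for this tone."""
        if self.solved:
            return "solved"
        if tone != self.order[self.scene]:
            return "try again"  # wrong tone: stay in the same scene
        self.scene += 1          # correct tone: jump to the next scene
        return "solved" if self.solved else "correct"


puzzle = TonePuzzle(["C", "D", "E"])
print([puzzle.play(t) for t in ("E", "C", "D", "E")])
# ['try again', 'correct', 'correct', 'solved']
```

Returning a distinct message for every attempt, including wrong ones, is one way to make it obvious whether each step succeeded.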

For the most part I was really proud of my puzzle box. There were some issues with the LEAP tracking, so I would need to either give more specific instructions or make the system better at adjusting to different users. The instructions for the last puzzle, with the tones, could also have been clearer. Tara said it wasn’t clear to her whether she had gotten it right, so I could have found a clearer way to show when each step had succeeded. I learned a lot about using scenes and user actors while working through my struggles with the last puzzle. I also realized that I need to write out a list of the assumptions I am making about the user. That way I can either instruct the user more clearly to match those assumptions or adjust the system to handle behavior outside of them.

Pressure Project 2

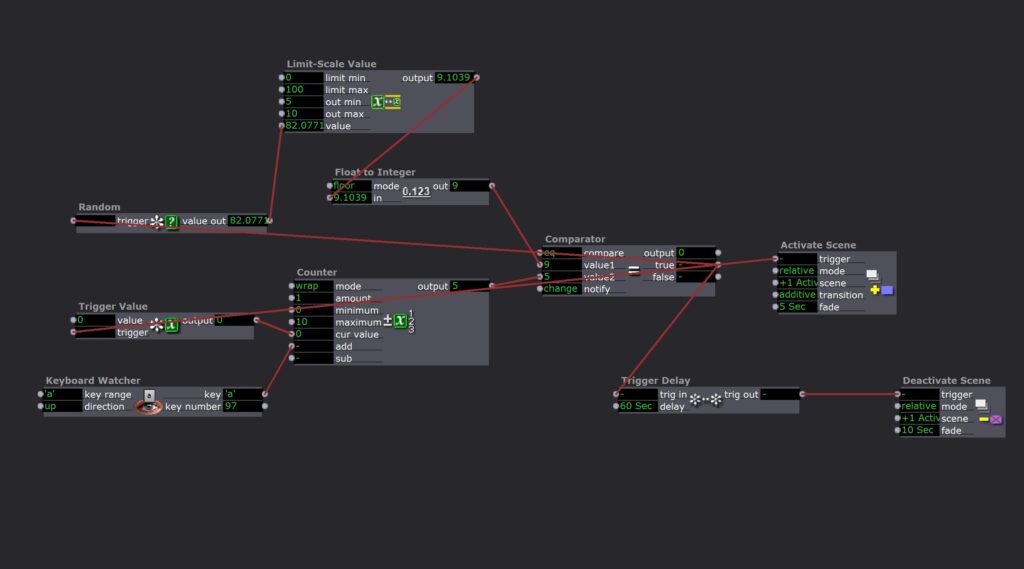

Posted: October 1, 2020

I learned from the last project and started with a written brainstorm of ideas before doing any actual work on this one. Then I narrowed it down to what I actually wanted, drew a design, and specified my goals and the basic logic of the program. I decided to create a Spontaneous Dance Party Machine for my staircase. The goal was to surprise someone coming down the stairs, at random, with an experience that would inspire them to dance and be in the moment. They might be on their way to get food before trudging back up the stairs for more hours of Zoom, and this would give them a chance to let loose for a minute of their day.

For the trigger, I attached the Makey-Makey to aluminum. I placed the aluminum on the staircase railing so that it would be triggered by the normal way someone puts their hand on the railing when going down the stairs.

The Makey-Makey then plugged into my computer, which ran the Isadora program.

Next, I had to get the Isadora stage onto my projector. I did not have the right kind of cable to plug the projector directly into my computer, but it would plug into my phone. So I installed an app called ApowerMirror on both my phone and my computer and used it to mirror my computer screen onto my phone. This let me send my full-screen Isadora stage to the projector that would serenade the person who triggered the stairs.

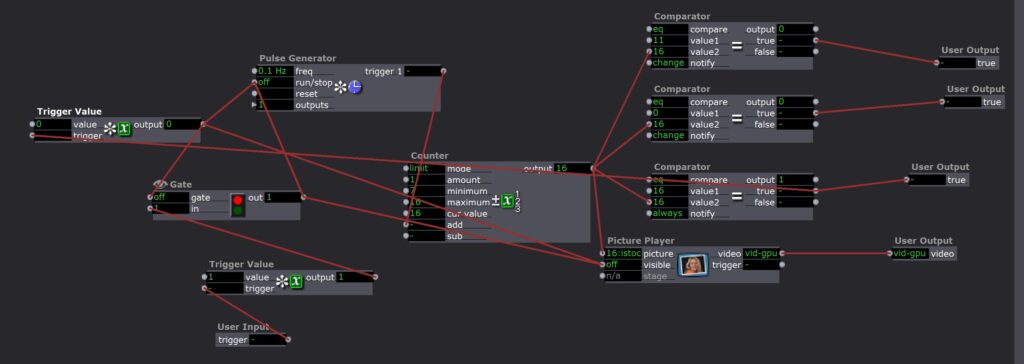

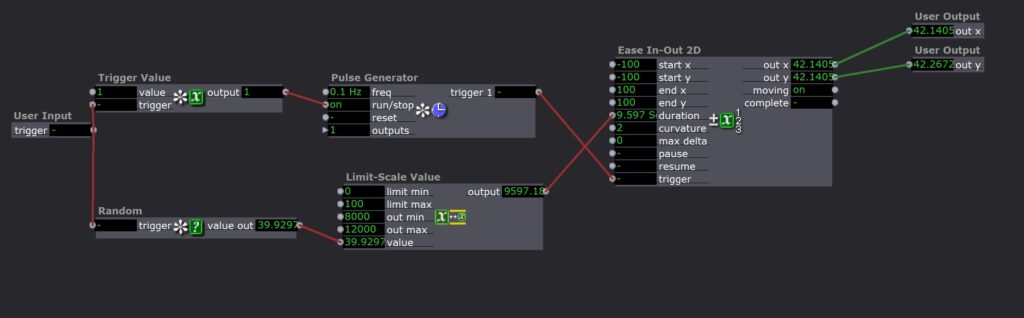

I used two scenes to manage this experience. The first one counted the triggers and determined how many times someone had to trigger the stairs before the projection and music would start. It randomly generated a new number from 5 to 10 for each round.

It would then activate the next scene which contained a colorful projection and started a random fun song to dance to. After one minute the scene would be slowly deactivated.
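The two-scene logic can be modeled in Python as a counter with a random target. In this sketch, `DancePartyMachine` is hypothetical, and `random.randint(5, 10)` (inclusive at both ends) stands in for Isadora’s random number generation. The sketch also assumes that touches arriving while the song is already playing are ignored, which the post does not state.

```python
import random
from typing import Optional


class DancePartyMachine:
    PARTY_SECONDS = 60.0  # the dance scene runs for one minute

    def __init__(self, rng: Optional[random.Random] = None):
        self.rng = rng if rng is not None else random.Random()
        self.count = 0
        self.target = self.rng.randint(5, 10)  # touches needed this round
        self.party_until = float("-inf")

    def party_on(self, now: float) -> bool:
        return now < self.party_until

    def railing_touched(self, now: float) -> bool:
        """Count one touch; return True if this touch starts the party."""
        if self.party_on(now):
            return False  # song already playing: ignore extra touches (assumption)
        self.count += 1
        if self.count < self.target:
            return False
        # Threshold reached: start the dance scene and pick a new target.
        self.party_until = now + self.PARTY_SECONDS
        self.count = 0
        self.target = self.rng.randint(5, 10)
        return True


machine = DancePartyMachine(random.Random(0))
needed = machine.target
starts = [machine.railing_touched(float(t)) for t in range(needed)]
print(starts[-1], any(starts[:-1]))  # True False
```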

Overall I was really satisfied with this project. It took me a lot less time than the previous one, I think because I took the time to plan at the beginning. It was also super fun to leave it up in my house and spontaneously jam out to a good song!

A few things to note for the future: 1) I couldn’t do projection mapping because I was just mirroring my computer screen; 2) mirroring and projecting drained my phone battery very quickly. So I would want to look for an app that could act as a second monitor, or even better, get an HDMI to USB-A cord that would connect my projector directly to my computer.

Project Bump

Posted: September 7, 2020

I really enjoyed reading about Parisa Ahmadi’s final project “Nostalgia” (https://dems.asc.ohio-state.edu/?p=1743). The way the visuals overlapped on various fabrics created a full world of ideas, just like how I imagine my memories swirling around my mind. The softness of the fabric and the genuine content of the visuals created a more intimate space and allowed audience members to feel comfortable experiencing whatever they ended up experiencing. Since the visuals were connected to specific triggers on objects, the audience could interact with them directly and get a sense of how they affected their environment. Overall it seemed like a really thoughtful project, and the result surrounded the viewer with activity that engaged all of the senses.