Infrared Tracking

Posted: October 1, 2018 Filed under: Uncategorized

A wonderful tutorial on how to do advanced infrared tracking in Isadora.

PP2

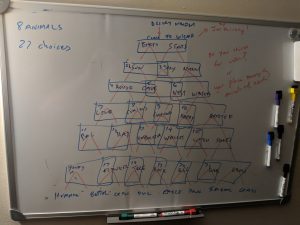

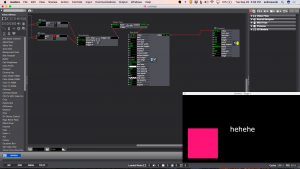

Posted: September 28, 2018 Filed under: Uncategorized

Task: For our second pressure project, we were tasked with creating a Fortune Teller Machine. I found this task interesting and just the right thing to help me focus on learning and exploring a few tools I might use in my thesis. In particular, this project allowed me to explore the abilities of FUNGUS, a Unity plugin used to help organize branching narratives.

Process: I started by looking a little deeper into the task. Based on my last project, I didn’t want this system to have an “on the nose” quality. Therefore, I decided to go with Spirit Animals. The animals have a fortune, so to speak, but in the context of a vessel that predicts your future, they also have a more personal connection. Your animal is the path.

I started with 10 animals, but ultimately settled on 6 and created a tree diagram, beginning with the animals at the bottom. I knew I had to have the user select objects, and the best representations I could find given the time constraints came from a model library from Google. From this library, I extracted 2D images/sprites and used them as the “items” that one would choose in order to proceed along their chosen path. The items consisted of hats, shoes, and even some places like the sky.

Creating branches, laying down some music, and making a few background changes led me to a completed project.

Feedback: Most of the feedback was positive. The audience seemed to really like the ability to identify with a certain animal. It is something I hope to implement in future work. It really helped to give them a sense of uniqueness even though the choice was one of six.

One thing I didn’t expect, and might handle differently, was that the very first user was interested in pressing the button as fast as they could. In the future, I suppose I should put in some time gates, even though that limits some of the freedom… Not sure. I suppose we are conditioned to play video games, or to click on things in mindless rapid succession. Creators might need to force individuals to pause in certain situations to actually observe the intention of the author.
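The time-gate idea can be illustrated with a minimal sketch. This is not the project's actual code (the project was built in Unity with FUNGUS); it is a hypothetical Python illustration in which the class name `TimeGate`, the two-second interval, and the sample press times are all assumptions made for the example. It ignores any button press that arrives too soon after the last accepted one.

```python
class TimeGate:
    """Hypothetical helper: ignore presses that come too soon after the last accepted one."""

    def __init__(self, min_interval_s: float):
        # Minimum number of seconds that must pass between accepted presses (assumed value).
        self.min_interval_s = min_interval_s
        self._last_accepted = None  # time of the last accepted press, or None if there was none

    def press(self, now_s: float) -> bool:
        """Return True if a press at time now_s (in seconds) is accepted."""
        if self._last_accepted is None or now_s - self._last_accepted >= self.min_interval_s:
            self._last_accepted = now_s
            return True
        return False


# Example: a user mashing the button; only presses at least 2 seconds apart get through.
gate = TimeGate(min_interval_s=2.0)
presses = [0.0, 0.3, 0.6, 2.1, 2.5, 4.2]
accepted = [t for t in presses if gate.press(t)]
print(accepted)
```

A gate like this trades some of the user's freedom for a forced pause, which is the tension described above; a shorter interval would soften that trade-off.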

How to make a button in the Isadora Window

Posted: September 25, 2018 Filed under: Uncategorized

PP1 – narrative sound and music

Posted: September 25, 2018 Filed under: Uncategorized

There are few things I like more than conspiracy theories, old science documentaries, and the king of rock and roll. Rarely do these treasures have a chance to coexist, yet in this first pressure project I had the opportunity to create an auditory narrative of an urban legend using all three of my favorite things.

To speed up my workflow and stay within the five hours allotted, I made a list of what I needed to do. It began with what I wanted the story to be, then how I wanted that story to be told, and then the steps I needed to accomplish that plan. My list ended up looking like this:

- Elvis was always alive

- Use Elvis Songs, create sentences using multiple audio tapes (inspired by this song I was listening to at the time), create sound bites that are choppy to create controlled confusion that evokes the sense of a conspiracy

- Gather all sounds that I might use first, import all sounds to After Effects, and cut out the pieces I want to use. Place those sounds in order and then fill in where there are gaps or where information needs to be added to the story.

1. Elvis was Always Alive

To better understand the created narrative, one should know the original urban legend from which it was derived (the full story AVAILABLE HERE).

2. Use Elvis Songs

To begin, I took every Elvis song lyric (thanks, wiki) and put them into a plain text doc. There, I picked apart the lyrics to find the songs that would best match the story theme. Those songs were It’s Impossible, Lonely Man, You Don’t Know Me, All That I Am, and In the Ghetto.

Create sentences using multiple audio tapes.

From there, I knew that I wanted to be clear on parts of the story, so I found various news reports and took just one word from each video to piece together sentences.

Create sound bites that are choppy to create controlled confusion that evokes the sense of a conspiracy.

I didn’t want the entire story retold in word clips, so I took various sound bites that dealt with what I was trying to say, without actually saying it, and put them in the order of the story. This was to create a little bit of confusion for the listener, who could maybe get at what the story was trying to tell, but only if they drew their own conclusions or made their own assumptions about what was the truth (similar to a conspiracy).

3. Gather all sounds, then fill in what is needed

After importing all the sounds, I realized that I had not addressed the DNA part of the story. I immediately thought of recent videos I had watched and found similar videos on James Watson and the structure of DNA.

Once time ended, I stopped working. I would have liked to add visuals, but with the time constraints, it would have negatively affected the overall presentation. I am pleased with the final outcome and really appreciated the feedback from the class. I think that everyone was spot on in their assessments, and I’m happy that they were able to make the connections I hoped they could make.

PP1

Posted: September 24, 2018 Filed under: Uncategorized

For this PP1 project, I created a world inspired by the short story Where the Wild Things Are. Personally, I loved the story and wanted to explore what it would be like if it were influenced by and told from a point of view other than the European slant it has always had. In conjunction with this, I had visited Thailand a few years ago. The island reminded me of the magical land portrayed in the story.

I decided to follow the storyline of WTWTA loosely, using sound as the main driving force. I found that I did not have enough resources in sounds alone to convey the story as I wanted to tell it. Having a bunch of photos of this amazing place, I decided to use the photos as a background for the video. I used cutout animation for the foreground and used Premiere 2018 to edit multiple layers of the film to give the illusion of depth and movement.

My feedback was very interesting. If I had to do it over again, I would have removed the iconic image of the monster so well known from the story. I thought that the audience would miss the reference, but instead, I felt I had provided something that was a bit too much on the nose. Anyway, I was extremely happy with the way it turned out and would only make that minor change if I edited it.

Joe Chambers

PP1

Posted: September 20, 2018 Filed under: Assignments, Uncategorized

For my first pressure project I chose to tell the story of The Jungle Book in just over a minute. I created a sound score from audio clips of a nostalgic tape recording of the classic Disney tale. After assembling the sound score, I gathered some visuals from a VHS copy of the 1967 film. From there, I decided to assemble the audio and visual clips using Isadora. I used Isadora so I could project multiple videos at once and animate the projections. I also wanted to become more proficient with the program, and I definitely learn by doing.

This work was centered around nostalgia and the reinvention and evaluation of childhood memories. My colleagues commented that they felt like they had rewatched the full hour-and-a-half movie in a minute because the key frames and quotes reminded them of the full scenes. The time constraints turned the project into a very concentrated reflection. During the critique, the class also discussed the moral complications of race and culture associated with The Jungle Book. Though my concentrated reflection was able to avoid some of these complications, the issues should not and cannot be invisibilized.

As a whole, I really enjoyed making this work and was inspired by all of the other interpretations of this pressure project!

Pressure Project 1

Posted: September 18, 2018 Filed under: Uncategorized

I chose to recreate a story that my father used to tell me and my brother when we were little. He embellished the original version with aspects of our own lives, so it always meant more to me. It was about a rooster, a cat, and a parrot, who all lived together in the forest. The parrot and cat would go out, and the rooster would continually be tricked by a fox into sticking his head out of the window so the fox could grab him and run off. The final time he gets tricked you think he may not survive, and then out of the woods come me and my brother and cousins, who save the rooster.

Although this story means a lot to me, I definitely did not expect my classmates to know it. Therefore, I was hoping that they would focus more on the way the imagery and sound created a mood. I am always fascinated by mood more than anything, and I was trying to recreate the panic and eeriness that I felt during some children’s stories as a kid. I used almost exclusively Iranian music and wove it together, and when I felt the audio needed other elements, I tried really hard to mix the music so that it sounded like it had always been that way. I wanted lots of overlapping, and therefore I had to really get to know Isadora in order to make that happen. I had to make a string of trigger delays, wave generators, and scene entrance triggers and play with the timing for each scene so that it flowed more easily. It took me a lot of time, but it was a great way to feel more confident in the program by the end!

I was very shy to share it with classmates because it was such a personal story and it was Iranian. Even though I would have liked more time to get thoughts and feedback from my classmates about their experiences, I felt very proud of myself for what I had created.

Tactile Pavilion

Posted: August 28, 2018 Filed under: Uncategorized

Last Thursday I was unable to make it to class, so I wanted to share with all of you the project I was working on at the time!

As the poster states, the Tactile Pavilion engages sight and touch as a supplemental sensory experience to the surrounding music festival. It is a way to immerse oneself in the playful nature of found objects. Interior walls are covered in recycled and re-used materials such as textiles, metals, and plastics. Exterior walls are clad with construction mesh and bare wood. Insulation oozes at the connections. The pavilion offers seating, shelter, and a place to relax and reset, devoting a full wall to signage made from the scraps of interior panels. The pavilion experiments with the engagement of alternative senses. It is not an escape from the music, but a deeper connection.

There will be more images and descriptions of the project that you can check out on my personal site: https://marcd.co

2018 Readings

Posted: August 21, 2018 Filed under: Uncategorized

Here are links to the readings:

Few believe the world is…gnition.

– ResearchGate experiential learning – toolwire

EMS – Applications, motivating ideas, AI, summary

An Arts, Sciences and Engineering Education and Research Initiative for Experiential Media

http://faculty.georgetown.edu/irvinem/theory/Manovich-LangNewMedia-excerpt.pdf

Cycle 1 Reflection-Mystery House

Posted: December 11, 2017 Filed under: Kelsey Gallagher, Uncategorized

Mystery House

For my first showing of my system, I had built a 1″=1′ scale model of a room with 2 walls.

This section of a room was projection mapped with one pico projector through Isadora. Incidentally, when I set up my Isadora session it was not registering my key, so I lost my file from this session, leaving me to remake it for the final presentation.

The few things I was able to show during this presentation were:

-Window effects such as a moon with clouds or a storm

-The lights turning on or off

The feedback I received from my peers was enlightening.

It was suggested that I make an interface for the viewer to play with rather than keeping it presentational. It was also suggested that audio would add greatly and that I should think about including headphones.

Overall, my first round achieved what I wanted and, even more, I was given valuable feedback.