Pressure Project 3: Trailblazing

Posted: December 4, 2017 Filed under: Calder White, Paper & Pen, Pen and Pencil Games, Pressure Project 3, Uncategorized

For our third Pressure Project, our prompt was to take a pen & paper game and combine it with another game to make a delightfully replayable hybrid. The hybrid could have no more than 5 rules and could not require the creator’s verbal or gestural intervention. I love card games, especially drinking games played with cards, so I decided to find a way to revamp one of my favourite drinking card games, Ride the Bus, to suit this assignment.

For those who don’t know, Ride the Bus is a drinking game for two people. The dealer lays out five cards and flips the first one. The other player then answers a sequence of questions, guessing the nature of the following cards in this order:

- Is the second card higher or lower than the first card?

- Is the third card inside of (between) or outside of the first two cards?

- Is the fourth card red or black?

- What is the suit of the last card?

The catch is this: if the guesser makes an incorrect guess at any point, they have to take a drink, a new set of five cards is dealt, and the game restarts. The player only wins once they “ride the bus” to the end.
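To make the four questions concrete, here is a minimal Python sketch that scores one round of Ride the Bus. Several things are assumptions and not from the rules above: aces count high (rank 14), a tie (equal ranks, or a third card matching one of the first two) counts as a wrong answer, and the names `Card`, `deal_five`, and `ride_the_bus` are illustrative only.

```python
import random
from dataclasses import dataclass

SUITS = ("hearts", "diamonds", "clubs", "spades")
RED_SUITS = {"hearts", "diamonds"}


@dataclass(frozen=True)
class Card:
    rank: int  # 2-10, 11=J, 12=Q, 13=K, 14=A (aces high is an assumption)
    suit: str


def deal_five(rng: random.Random) -> list:
    """Deal five distinct cards from a fresh 52-card deck."""
    deck = [Card(rank, suit) for rank in range(2, 15) for suit in SUITS]
    return rng.sample(deck, 5)


def ride_the_bus(cards: list, guesses: list) -> int:
    """Count correct guesses before the first miss (4 means the player rode the bus).

    guesses: ["higher"/"lower", "inside"/"outside", "red"/"black", suit name]
    """
    first, second, third, fourth, fifth = cards
    low, high = sorted((first.rank, second.rank))
    answers = [
        "higher" if second.rank > first.rank else "lower" if second.rank < first.rank else None,
        "inside" if low < third.rank < high
        else "outside" if third.rank < low or third.rank > high else None,
        "red" if fourth.suit in RED_SUITS else "black",
        fifth.suit,
    ]
    correct = 0
    for answer, guess in zip(answers, guesses):
        if guess != answer:  # a None answer (a tie) never matches, so ties lose
            break            # wrong guess: drink, re-deal, start over
        correct += 1
    return correct


cards = [Card(5, "clubs"), Card(9, "hearts"), Card(7, "spades"),
         Card(12, "diamonds"), Card(3, "spades")]
print(ride_the_bus(cards, ["higher", "inside", "red", "spades"]))  # → 4
```

Returning a count rather than a simple win/lose flag fits the grid variant described next, where how far a player gets before missing decides how many cards they collect.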

Taking some inspiration from tic-tac-toe and Connect 4, I was curious about how Ride the Bus could function if placed in a grid. I decided that the cards would be set up in a 5×5 grid, and the game would become a contest over which player, the dealer or the guesser, could collect the most cards by the end. Instead of drinking after an incorrect guess, a player simply ends their turn and loses the chance to collect more cards.

The instruction sheet of my hybrid game can be found here: ACCADTrailblazing

In the end, this game reminded me a lot of afternoons spent at my Baba’s house playing War for hours. Although it is perhaps not the most stimulating game for adults, Trailblazing could easily be an entertaining children’s or family game, and it definitely gets more fun the more you play it!

Cycle 1 Presentation – here4u

Posted: November 28, 2017 Filed under: Calder White, Final Project, Uncategorized

For my final project in DEMS, titled here4u, I’m working to tell a non-linear narrative about a long-distance platonic relationship between a mother and a son. The story is told mainly through text messages, voicemails, journal entries, and photographs (both physical and digital), unlocked via QR codes scattered throughout the installation. My inspiration comes from an extremely personal place. I have been in this very situation for the past four years of my undergraduate career, and my drive for this project stems from an artistic desire for transparency between creator/performer and audience. Through this project, I am opening myself up to the audience by using actual voicemails and texts between my mother and me, along with actual entries from my journal. With them I tell a story about distance, loneliness, belonging, misinformation, struggle, and coming of age. I hope that by “baring it all” I can open a dialogue about connection and relation between myself and the people experiencing this installation, as well as among the visitors to the space.

In the first cycle presentation, I organized two desk spaces diagonal to each other in the Motion Lab, one being the “mother” space and the other being the “son” space. The mother space has various artifacts that would be found on my mom’s desk at home: note pads with hand-written reminders, prayer cards and rosaries from my Baba, and a film photograph from the cottage that my mom and I used to live in. Spread within the artifacts are QR codes that include worried voicemails from my mom about suspicious activity on campus, texts from her to me asking where I’ve been, and links to screen recordings of our longer text conversations.

Across the room stands the son space, a much less organized desk with worn and filled daily planners, books dealing with citizenship theory, journals open to personal entries, and crumpled to-do lists. The QR codes here lead to first-person videos of protests, an online gallery of silhouetted selfies, and voicemails apologizing for taking so long to respond to my mom.

In creating this project, I am dredging up a wealth of emotions from my time in Columbus and pinning them to a presentation that I hope won’t feel like a performance. That being said, I realize that because of its presentational nature, there are certain theatrical elements that need to be considered and addressed. With the first cycle showing, I learned very quickly how individual an experience this is. To get the most out of the QR codes, particularly the voicemails, audience members must wear headphones. This immediately isolates them in their own world and deters connection with other audience members during their experience. As an attempt to create a feeling of loneliness, I would call this a success.

I received a wealth of feedback on which aspects of both spaces worked and which didn’t. There was unanimous agreement that the voicemails and texts were the most powerful parts of the storytelling, because of the emotion heard in the voices. At the suggestion of Ashlee Daniels Taylor, I will be working to increase the overall amount of material in the space so that there is more to look through and find in the QR codes. This serves another of my goals for the narrative: to engender the sense of a story without requiring the audience member to find every single piece of the puzzle, amplifying each individual’s experience within the installation.

For my second cycle, I have been generating more material to be found and directing the experience more than in my first presentation. Without any vocal, lighting, or other cues for guidance, the first presentation invited viewers to find their own way through the installation. It was fun for me to watch and hear how different people pieced the experience together for themselves. However, it also led to awkward successions of QR codes (too similar or repetitive), a desire for more direction, and an undefined ending to the experience. I will be working with theatrical lighting to guide viewers along a more predetermined path through the space.

The emotional feedback that I received after the first presentation has inspired me to push forward with this project’s main intentions and to develop this experience as honestly and authentically as possible. The tears and personal connections shared in our post-presentation discussion proved to me the value of making oneself vulnerable to one’s audience, and I intend to lean into this for the second cycle presentation.

Pressure Project 3

Posted: November 7, 2017 Filed under: Kelsey Gallagher, Uncategorized

I was super excited to do Pressure Project 3. I have a lot of background in playing tabletop and board games, so the only challenging part was working within the limitations. I knew immediately that I wanted to use a game my friends and I have played many times, which is like Pictionary and Telephone mixed together. I just needed to find another game to meld with it. Because of my large collection of dice, I knew I wanted that second game to use dice.

I did a bunch of Google and Pinterest searches for dice games. After reading three or four, I found a gambling game that was interesting and alterable.

Then I let my creative juices flow to create a narrative story that involves both games.

This was the final project.

The Unfortunate Adventurers and the Wishing Well

Kelsey Gallagher

Pressure Project 2: The Tell Tale Heart

Posted: November 7, 2017 Filed under: Kelsey Gallagher, Uncategorized

Pressure Project 2

For this project, my personal goal was to pull a physical response from my audience. I chose Edgar Allan Poe’s “The Tell-Tale Heart” because it is one of my favorite stories from my childhood. It is also a story that is easily told with audio alone. My first choice was to tell “The Tale of the Three Brothers” from The Tales of Beedle the Bard, but the concept was frustrating, so I switched stories.

I first scoured the internet for a creepy underscore that could set the tone of the one-minute piece. Eventually I found one I liked and exported it.

Next, I used Freesound.org to find the right sound effects to create the experience. Using Audacity, I combined everything into a single file that told the story.

This is the end product.

Kelsey Gallagher

PP3 – Aut ’17

Posted: October 10, 2017 Filed under: Uncategorized

Pen & Paper + (LEVEL 3 Pressure Project)

Context:

According to Wikipedia, examples of Pen & Paper Games include “Tic-tac-toe, Sprouts, and Dots and Boxes. Other games include: Hangman, Connect 5, M.A.S.H., Boggle, Battleships, Paper Soccer, and MLine.”

Assignment:

Choose a known (at least to you) pen & paper game*.

Combine it with another game. The second game can be another pen and pencil game, a card game, a dice game, or an interactive projection.

You have no more than 5 hours to complete this project. (Not including research.)

*Please feel free to keep things simple. Yet, here are some more adventurous examples:

- http://zenseeker.net/BoardGames/PaperPenGames.htm

- http://www.thegamesjournal.com/articles/GameSystems4.shtml

Basic Limitations:

- new game can have no more than 5 rules

- a novice player must be able to play without your verbal or gestural intervention. (provide an instruction sheet with the rules.)

Basic Level 3 Achievements:

- Fun to play

- Game is delightfully replayable

Legendary Achievements:

- includes a plot driven narrative

- includes an interactive projection

Pressure Project #2: Alligators in the Sewers!

Posted: October 8, 2017 Filed under: Uncategorized

This audio exploration is designed to evoke sensation through sound in a whimsical way. Working from the myth of the alligators who live in the New York City subway system, I sketched out a one-minute narrative encounter that would be recognizable (especially to a city-dweller), a little creepy, and just a bit funny.

- A regular day on a busy New York City street

- The clatter of a manhole cover being opened

- Climbing down into the dark sewer

- Walking slowly in the dark through running water (and who knows what else)

- A sound in the distance . . . a pause

- The roar of an alligator!

Assembling these sounds was simply a matter of scouring YouTube, but mixing them proved more challenging. I layered each track in GarageBand, paying special attention to the transition between the busy world above and the dark, industrial swamp below. I also worked to differentiate the sound of stepping down a ladder from the sound of walking through the tunnel. An overlay of swamp and sewer sounds created the atmosphere belowground, and a random water drop built the tension. It was important to stop the footsteps immediately after the soft roar of the alligator in the distance, pause, and then bring in the loudest sequence of alligators roaring I could find.

The minute-long requirement was tricky to work with because of the relationship between time and tension. I think the project succeeds in general, but an extra minute or two could really ratchet up the sensations of moving from safety, to curiosity, to trepidation, to terror. Take a listen for yourself! I recommend turning off all the lights and lying on the floor.

Pressure Project 2 – 2017

Posted: September 22, 2017 Filed under: Uncategorized

PP2 – Media and Narrative:

This Pressure Project was originally offered to me by my Professor, Aisling Kelliher:

Topic – narrative sound and music

Select a story of strong cultural significance. For example this can mean an epic story (e.g. The Odyssey), a fairytale (e.g. Red Riding Hood), an urban legend (e.g. The Kidney Heist) or a story that has particular cultural meaning to you (e.g. WWII for me, Cuchulainn for Kelliher).

Tell us that story using music and audio as the primary media. You can use just audio or combine it with images/movies if you like. You can use audio from your own personal collections, from online resources, or created by you (the same goes for any accompanying visuals). You should aim to tell your story in under a minute.

You have 5 hours to work on this project.

Navy plans to use Xbox 360 Controllers to control (parts of) their submarines

Posted: September 18, 2017 Filed under: Uncategorized

http://gizmodo.com/why-the-navy-plans-to-use-12-year-old-xbox-360-controll-1818511278

Pressure Project #1: (Mostly) Bad News

Posted: September 16, 2017 Filed under: Pressure Project I, Uncategorized

Pressure Project #1

My concept for this first Pressure Project emerged from research I am currently engaged in concerning structures of representation. The tarot is an on-the-nose example of just such a structure, with its Derridean ordering of signs and signifiers.

I began by sketching out a few goals:

The experience must evoke a sense of mystery punctuated by a sense of relief through humor or the macabre.

The experience must be narrative in that the user can move through without external instructions.

The experience must provide an embodied experience consistent with a tarot reading.

I began by creating a scene that suggests the beginning of a tarot reading. I found images of tarot cards and built a “Card Spinner” user actor that I could call. Using wave generators, the rotating cards moved along the x and y axes, and the z axis was simulated by zooming the cards in and out.
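Isadora is patched visually, so there is no code to quote here. The Python sketch below only mirrors the math of the “Card Spinner” idea under stated assumptions: one sine wave drives the horizontal position, and a shared cosine wave drives both a small vertical tilt and the zoom. Because the zoom and the tilt follow the same wave, the card reads as circling through depth. The parameter names, default values, and the convention that positive y is up are all hypothetical, not Isadora’s actual Wave Generator or Projector ranges.

```python
import math
from dataclasses import dataclass


@dataclass
class SpinnerFrame:
    x: float     # horizontal offset from centre
    y: float     # vertical offset from centre (positive = up, an assumption)
    zoom: float  # size in percent, standing in for the missing z axis


def card_spinner(t: float, offset: float = 0.0, period: float = 4.0,
                 radius: float = 30.0, tilt: float = 8.0,
                 base_zoom: float = 100.0, zoom_depth: float = 40.0) -> SpinnerFrame:
    """Position of one card at time t (seconds) on a circular orbit seen from slightly above.

    offset (0..1) staggers several cards around the same orbit.
    """
    theta = 2 * math.pi * (t / period + offset)
    depth = math.cos(theta)  # +1 = nearest the viewer, -1 = farthest away
    return SpinnerFrame(
        x=radius * math.sin(theta),           # wave 1: side to side
        y=-tilt * depth,                      # near side of the orbit sits lower on screen
        zoom=base_zoom + zoom_depth * depth,  # bigger when near: fakes the z axis
    )


# Three cards spaced evenly around the orbit, sampled at t = 1 second.
frames = [card_spinner(1.0, offset=i / 3) for i in range(3)]
```

Giving each card a phase offset, rather than a separate set of waves, keeps the cards evenly spaced no matter how the period is tuned.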

Next I built the second scene, which suggests the laying of specific cards on the table. Originally I planned for the displayed cards to be random, but because of the time required to create an individual scene for each card, I opted to simply display the same three cards.

Finally, I worked to construct a scene for each card that signified the physical (well, virtual) card that the user chose. Here I deliberately moved away from the actual process of tarot in order to evoke a felt sensation in the user.

I wrote a short, rhyming fortune for each card:

The Queen of Swords Card – Happiness/Laughter

The Queen of Swords

with magic wards

doth cast a special spell:

“May all your moments

be filled with donuts

and honeyed milk, as well.”

The scene for The Queen of Swords card obviously needed to incorporate donuts, so I found a GLSL shader of animated donuts. It ran very slowly, and after looking at the code I determined that the way the sprinkles were animated was extremely inefficient, so I removed that function. Pharrell’s upbeat “Happy” worked perfectly as the soundtrack, and I carefully timed the fade in of the fortune using trigger delays.

Judgement Card – Shame

I judge you shamed

now bear the blame

for deeds so foul and rotten!

Whence comes the bell

you’ll rot in hell

forlorn and fast forgotten!

The Judgement card scene is fairly straightforward, consisting of a background video of fire, an audio track of bells tolling over ominous music, and a timed fade in of the fortune.

Wheel of Fortune – Macabre

With spiny spikes

a crown of thorns

doth lie atop your head.

Weep tears of grief

in sad motif

‘cuz now your dog is dead.

The Wheel of Fortune card scene was more complicated. At first I wanted upside-down puppies to drop randomly from the top of the screen, collecting at the bottom and eventually filling the entire screen. I could not figure out how to do this without having a large number of Picture Player actors sitting out of sight above the stage, which seemed inelegant. Instead, I opted to simply have puppies drop randomly from the top of the stage and off the bottom. Is there a way to instantiate actors programmatically? It seems like there should be a way to do this.

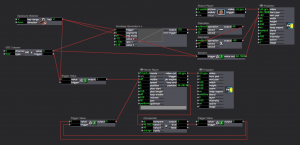

Now that I had the basics of each scene working, I turned to the logic of the user interaction. I did this in two phases:

In phase one I used keyboard watchers to move from one scene to the next or go back to the beginning. The numbers 1, 2, and 3 were hooked up on the selector scene to choose a card. Using the keyboard as the main interface was a simple way to fine-tune the transitions between scenes and to ensure that the overall logic of the game made sense.
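The scene logic driven by the keyboard watchers behaves like a small state machine. The Python sketch below shows that logic under assumptions. Only the keys 1, 2, and 3 on the selector scene come from the post. The “n” (next) and “r” (restart) keys, the scene names, and the order in which the three numbers map to the cards are all hypothetical placeholders.

```python
# Hypothetical bindings: "1"-"3" come from the post; the card order,
# the "n"/"r" keys, and the scene names are placeholders.
CARD_KEYS = {"1": "queen_of_swords", "2": "judgement", "3": "wheel_of_fortune"}


def next_scene(current: str, key: str) -> str:
    """Return the scene to jump to after `key` is pressed in `current`."""
    if key == "r":                           # restart from the opening reading
        return "spinner"
    if current == "spinner" and key == "n":  # advance to the card spread
        return "selector"
    if current == "selector" and key in CARD_KEYS:
        return CARD_KEYS[key]
    return current                           # keys with no meaning here are ignored


def run(keys, start: str = "spinner") -> list:
    """Replay a sequence of key presses and record every scene visited."""
    scenes = [start]
    for key in keys:
        scenes.append(next_scene(scenes[-1], key))
    return scenes


print(run("n3r"))  # → ['spinner', 'selector', 'wheel_of_fortune', 'spinner']
```

Keeping every transition in one function makes the whole flow easy to check by replaying key sequences. This is the same benefit the keyboard phase gave before the Leap controls replaced it.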

The biggest challenge I ran into during this phase was in the Wheel of Fortune scene. I created a Puppy Dropper user actor that was activated by pressing the “d” key. When activated, a puppy would drop from the top of the screen at a random horizontal point. However, I ran into a few problems:

- I had to use a couple of calculators between the envelope generator and the projector to scale the vertical position correctly, so that the puppy would fall from the top to the bottom

- Because the sound the puppy made when falling was a movie, I had to use a comparator to reset the movie after each puppy drop. My solution for this is convoluted, and I now see that using the “loop end” trigger on the movie player would have been more efficient.
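The calculator step amounts to a linear scale followed by an offset. In this Python sketch of one puppy drop, an envelope value running from 0 to 100 is multiplied, then shifted, so it sweeps from just above the top edge to just below the bottom edge. A random horizontal position is chosen once per drop. The 0-100 envelope range and the ±60 / ±50 stage positions are hypothetical values picked for illustration, not Isadora’s documented ranges.

```python
import random


def envelope_to_vertical(env: float, top: float = 60.0, bottom: float = -60.0) -> float:
    """Map a 0-100 envelope value linearly onto a vertical position from top to bottom."""
    scaled = env * (bottom - top) / 100.0  # calculator 1: multiply (scale)
    return scaled + top                    # calculator 2: add (offset to the top edge)


def drop_puppy(rng: random.Random, steps: int = 5,
               left: float = -50.0, right: float = 50.0) -> list:
    """One drop: a random horizontal position, then a top-to-bottom fall sampled at `steps` points."""
    x = rng.uniform(left, right)  # random horizontal starting point
    return [(x, envelope_to_vertical(100.0 * i / (steps - 1))) for i in range(steps)]


path = drop_puppy(random.Random(42))
# path[0] starts at y = 60.0 (above the stage) and path[-1] ends at y = -60.0 (below it)
```

Splitting the mapping into a multiply and an add mirrors chaining two calculators. Changing the `top` and `bottom` values adjusts where the fall starts and ends without touching the envelope itself.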

Phase two replaced the keyboard controls with the Leap controller. Using the Leap controller provides a more embodied experience—waving your hands in a mystical way versus pressing a button.

Setting up the Leap was simple. For whatever reason I could not get ManosOSC to talk with Isadora. I didn’t want to waste too much time, so I switched to Geco and was up and running in just a few minutes.

I then went through each scene and replaced the keyboard watchers with OSC listeners. I ran into several challenges here:

- The somewhat scattershot triggering of the OSC listeners sometimes confused Isadora. To solve this, I inserted a few trigger delays, which slowed Isadora’s response time enough that errant triggers didn’t interfere with the system. I think that with more precise calibration of the Leap and more tightly defined listeners in Isadora, I could eliminate this issue.

- Geco does not allow for recognition of individual fingers (the reason I wanted to use ManosOSC). Therefore, I had to leave the selector scene set up with keyboard watchers.
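The trigger delays act as a crude filter on noisy OSC triggers. A common textual equivalent is a debounce: after one trigger is accepted, any trigger that arrives within a short window is dropped as noise. This is a swapped-in technique for illustration. Isadora’s Trigger Delay actor delays triggers rather than dropping them, and the 0.5-second window and the `Debouncer` name below are assumptions.

```python
from typing import Optional


class Debouncer:
    """Accept a trigger only if at least `window` seconds have passed since the last accepted one."""

    def __init__(self, window: float) -> None:
        self.window = window
        self._last_accepted: Optional[float] = None

    def accept(self, timestamp: float) -> bool:
        """Return True if this trigger should fire, False if it should be dropped as noise."""
        if self._last_accepted is not None and timestamp - self._last_accepted < self.window:
            return False  # too soon after the previous trigger: treat it as errant
        self._last_accepted = timestamp
        return True


gate = Debouncer(window=0.5)
fired = [t for t in [0.0, 0.05, 0.1, 0.6, 0.65, 1.3] if gate.accept(t)]
print(fired)  # → [0.0, 0.6, 1.3]
```

A debounce leaves the first gesture instant and only suppresses its echoes, whereas a plain delay slows every response. That difference matters for an interaction meant to feel embodied.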

The last step in the process was to add user instructions in each scene so that it was clear how to progress through the experience. For example, “Thy right hand begins thy journey . . .”

My main takeaway from this project is that building individual scenes first and then connecting them after saves a lot of time. Had I tried to build the interactivity between scenes up front, I would have had to do a lot of reworking as the scenes themselves evolved. In a sense, the individual scenes in this project are resources in and of themselves, ready to be employed experientially however I want. I could easily go back and redo only the parameters of interactions between scenes and create an entirely new experience. Additionally, there is a lot of room in this experience for additional cues in order to help users move through the scenes, and for an aspect of randomness such that each user has a more individual experience.

Click to download the Isadora patch, Geco configuration, and supporting resource files:

http://bit.ly/2xHZHTO

The War on Buttons

Posted: September 11, 2017 Filed under: Uncategorized

https://www.theringer.com/tech/2017/9/11/16286158/apple-iphone-home-button