Cycle 1, 2 & 3

Posted: December 15, 2016 Filed under: Uncategorized

Hello,

I documented all parts of my process on my WordPress blog:

https://ecekaracablog.wordpress.com/2016/10/17/visualizing-the-effects-of-change-in-landscape/

All the visuals and sketches are on the website, and the video is on the way.

Cycle 1:

While I was analyzing the data, I came up with a very simple demonstration of the variables in order to place them on a visual reference (a map) showing their exact locations in the country. I wanted to show the main location on a map, since the audience might not know exactly where Syria is.

Text and simple graphic elements formed the starting point of the project. After I shared it with the class, the feedback I got was mainly curious questions. One of my peers asked, “What is sodium nitrate?” I could tell that he understood the chemical was not good for the environment or for health. Another classmate continued, “They attack cultural areas during wars, as if they are trying to destroy the culture or history of nations.” That comment helped me add more to the project and consider the different results of the war, all connected to the same ending: “damage”.

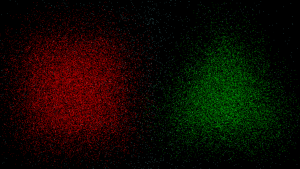

After more research and analysis, I had to admit that I could not use clear satellite imagery or maps for the infographic, which would have been a great tool. I learned from Alex that, since the war is still ongoing, Google Maps has some restrictions in the war areas. All I got from Google Maps were pixelated, blurry maps. I moved on with abstract visualizations applied to map imagery, and also included photographs of the cities in the final outcome.

Cycle 2:

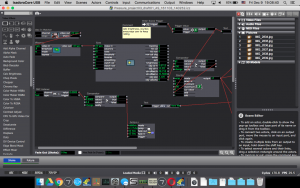

I believe the feedback I got in the Cycles helped me a lot to consider the thoughts of my peers. When we work on our projects for long periods of time, it becomes harder to be objective and critical toward them. Since I did the collection, analysis, sketching, and design of the dataset by myself, I was clear about its details, while I knew it was still too complex for viewers. In the end, the dataset and design are still too complex. It was a decision I made for my first complex interactive information design project, because the analysis of the data showed me that complexity is the nature of this project. Catastrophic damage has been done to a country at war, and even a small detail, such as an environmental issue I tried to visualize, is connected to multiple variables in the dataset. Therefore, I used wires to show the direct connections between variables and to communicate that the country is wired with these risks and damages.

Since my peers had seen the work and heard me talk about the project, they were more familiar with it, so I was not sure the project was clear to them on its own. Still, I got positive feedback in the second cycle: I was told that the project looks more complete.

After I got feedback from Alex, I started to think about an active/passive mode for the project. I included a sound piece from the war area that is activated when the audience walks through the hall in front of my project. The aim of the sound is to draw the audience’s attention to the work and give an idea of the topic. A camera tracking motion sees the people passing by and then activates the sound. Since I want the audience at the piece to focus on the dataset, I aimed the sound at the potential audience passing by.
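
The motion-activated sound comes down to detecting movement in the camera image and starting playback once. The post doesn’t say how the tracking was built, so the following is a minimal Python sketch under stated assumptions: grayscale frames arrive as lists of pixel rows, and the names (`motion_amount`, `MotionSoundTrigger`) and values (threshold, cooldown) are hypothetical. It uses frame differencing, i.e. the mean absolute change per pixel between consecutive frames, plus a cooldown so a passing group doesn’t restart the sound on every frame.

```python
from typing import List, Optional

Frame = List[List[int]]  # grayscale frame: rows of 0-255 pixel values


def motion_amount(prev: Frame, curr: Frame) -> float:
    """Frame differencing: mean absolute change per pixel between two frames."""
    total = 0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


class MotionSoundTrigger:
    """Starts the sound when motion crosses a threshold, then ignores motion for a cooldown."""

    def __init__(self, threshold: float, cooldown_frames: int) -> None:
        self.threshold = threshold              # hypothetical sensitivity, tuned on site
        self.cooldown_frames = cooldown_frames  # frames to wait before the sound can restart
        self._prev: Optional[Frame] = None
        self._cooldown = 0

    def update(self, frame: Frame) -> bool:
        """Feed one camera frame; return True on the frame where the sound should start."""
        fire = False
        if self._cooldown > 0:
            self._cooldown -= 1
        elif self._prev is not None and motion_amount(self._prev, frame) > self.threshold:
            fire = True
            self._cooldown = self.cooldown_frames
        self._prev = frame
        return fire
```

The threshold would need tuning in the actual hall; note that someone leaving the frame registers as motion just like someone arriving.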

I believe the sound completed the work and created an experience.

I know the work is not very clear or ideal for the audience, but I wanted to push the limits of layering and complexity in this project. I took the risk of failure, and I am happy to share that I learned a lot (from everyone in this class)!

Right now I have a general understanding of Unity: I know how to add to and manipulate the code (even though I don’t know complex coding). I learned the logic of Unity prefabs, the Inspector, interactivity, and the general interface. In addition to Max/MSP (which I used last year), I learned to use Isadora, which is far more user-friendly. Finally, I learned a third information design tool called Tableau, which helped me develop the images (under Infographic Data) on my blog.

I received very valuable feedback from my peers and professors. Even if the result was not perfect, I learned three pieces of software in total, had fun with the project, experimented, pushed the limits, and learned a lot!

Please read about the complete process on my blog. 🙂

Pressure Project #3

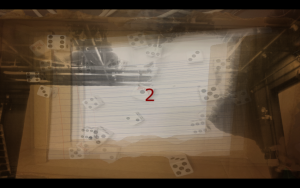

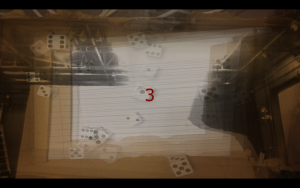

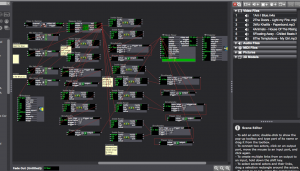

Posted: December 9, 2016 Filed under: James MacDonald, Pressure Project 3, Uncategorized

At first, I had no idea what I was going to do for Pressure Project #3. I wasn’t sure how to make a reactive system based on dice. This led to me doing nothing but occasionally thinking about it from the day it was assigned until a few days before it was due. Once I sat down to work on it, I quickly realized that I was not going to be able to create a system in five hours that could recognize what people rolled with the dice. I began thinking about other characteristics of dice and decided to explore those instead. I made a system with two scenes using Isadora and Max/MSP.

The player begins by rolling the dice and following directions on the computer screen. The webcam tracks the players’ hands moving, and after enough movement, it tells them to roll the dice. The loud sound of the dice hitting the box triggers the next scene, where various images of previous rolls appear and the numbers 1-6 appear randomly on the screen, slowly increasing in rapidity while delayed and blurred images of the user(s) fade in. Finally, it sends us back to the first scene, where we are once again greeted with a friendly “Hello.”
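
The two-scene flow can be modeled as a small state machine. This is a hypothetical Python sketch, not the actual Isadora/Max patches: the motion and loudness inputs are assumed to be normalized numbers arriving once per frame, and `motion_needed`, `loud_threshold`, `montage_frames`, and the shrinking `flash_interval` are illustrative values chosen for the sketch.

```python
import random
from typing import Optional


class DiceInstallation:
    """Hypothetical model of the two-scene dice flow (motion, roll prompt, dice hit, montage)."""

    def __init__(self, motion_needed: float = 100.0, loud_threshold: float = 0.6,
                 montage_frames: int = 300, seed: Optional[int] = None) -> None:
        self.motion_needed = motion_needed    # accumulated hand motion before prompting a roll
        self.loud_threshold = loud_threshold  # normalized mic level that counts as dice hitting the box
        self.montage_frames = montage_frames  # length of the second scene, in frames
        self.rng = random.Random(seed)
        self.reset()

    def reset(self) -> None:
        """Return to scene one and clear all counters."""
        self.scene = "hello"
        self.motion_total = 0.0
        self.elapsed = 0
        self.until_flash = 0

    def flash_interval(self) -> int:
        """Frames until the next random number; shrinks as the montage runs, so flashes speed up."""
        return max(2, 30 - self.elapsed // 10)

    def update(self, motion: float, loudness: float) -> Optional[int]:
        """Advance one frame; return a die face (1-6) to flash on screen, or None."""
        if self.scene == "hello":
            self.motion_total += motion
            if self.motion_total >= self.motion_needed:
                self.scene = "roll"  # the screen now tells the player to roll
        elif self.scene == "roll":
            if loudness >= self.loud_threshold:
                self.scene = "montage"
                self.elapsed = 0
                self.until_flash = self.flash_interval()
        else:  # montage
            self.elapsed += 1
            if self.elapsed >= self.montage_frames:
                self.reset()  # back to the friendly "Hello."
                return None
            self.until_flash -= 1
            if self.until_flash <= 0:
                self.until_flash = self.flash_interval()
                return self.rng.randint(1, 6)
        return None
```

Using a countdown that is refilled from a shrinking interval, rather than a fixed modulo, is what makes the numbers arrive steadily faster over the course of the scene.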

The reactions to this system surprised me. I thought that I had made a fairly simple system that would be easy to figure out, but the mysterious nature of the second scene had people guessing all sorts of things about my project. At first, some people thought that I had actually captured images of the dice in the box in real time, because the first images that appeared in the second scene were very similar to how the roll had turned out. Overall, the reaction seemed very positive, and people showed a genuine interest in the piece. I would consider going back to expand on this piece and explore the narrative further; I think it could be interesting to develop the work into a full story.

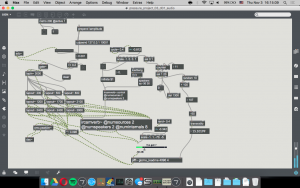

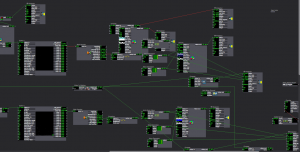

Several images from a performance of this work, along with screenshots of the Max patch and Isadora patch, accompany this post.

Cycle 1… more like cycle crash (Taylor)

Posted: December 3, 2016 Filed under: Isadora, Taylor, Uncategorized

The struggle.

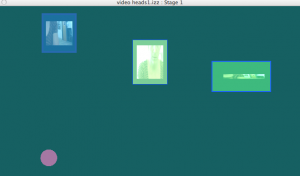

So, I was disappointed that I couldn’t get the full version (with the projector projecting, the camera, and a full-bodied person being ‘captured’) up and running. Last week I even did a special tech run two days before my rehearsal, using two cameras (an HDMI-connected camera and a webcam). I got everything up, running, and attuned to the correct brightness on Wednesday, and then on Friday it was struggle-bus city for Chewie: Izzy was saying my files were corrupted and didn’t want to stay open. Hopefully I can figure out this wireless thing for Cycle 2, or maybe start working with a Kinect and a cam?…

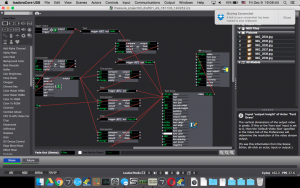

The patch.

This patch was formulated from, and in conjunction with, PP(2). It starts with a movie player and a picture player switching on and off (alternating) while randomly jumping through videos/images. Recently, though, I realized that it is only doing one or the other, so I have been working on how the switching back and forth between the two players works (suggestions for easier ways to do this are welcome). When a certain range of brightness (or amount of motion) is detected from the Video In (fed through Difference), the image/video projector switches off and the three other projectors switch on [connected to Video In – Freeze, Video Delay – Freeze, and another Video In (when the other two are frozen)]. After a certain amount of time, the scene jumps to a duplicate scene, ‘resetting’ the patch. To me, these images represent our past and present selves, but they also provide the ability to take a step back, or step outside of yourself, to observe. In the context of the rehearsal for which I am developing these patches, this serves as another way of researching our tendencies/habits in relation to inscriptions/incorporations on our bodies and the general nature of our performative selves.
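
Since suggestions for the player switching are welcome, here is one possible way to structure it, sketched in Python rather than as Isadora actors. Everything here is hypothetical: the class, the projector names, and the frame counts. A single toggle guarantees the movie and picture players actually alternate; a brightness window plays the role of the brightness-range check on the Difference output; and `reset()` stands in for jumping to the duplicate scene. Random clip selection within each player is left out.

```python
from typing import Set


class PatchSketch:
    """Hypothetical model of the patch: alternate media players until motion is seen, then go live."""

    LIVE = {"video_in_freeze", "video_delay_freeze", "video_in"}

    def __init__(self, lo: float, hi: float, switch_every: int, live_frames: int) -> None:
        self.lo, self.hi = lo, hi          # brightness window that counts as "someone is moving"
        self.switch_every = switch_every   # frames between movie/picture swaps
        self.live_frames = live_frames     # how long the live projectors stay on
        self.reset()

    def reset(self) -> None:
        """Equivalent of jumping to the duplicate scene: start over with the movie player."""
        self.mode = "media"
        self.active_player = "movie"
        self.frame = 0
        self.live_elapsed = 0

    def update(self, difference_brightness: float) -> Set[str]:
        """Advance one frame and return the set of projectors that should be on."""
        if self.mode == "media":
            self.frame += 1
            if self.frame % self.switch_every == 0:
                # A single toggle guarantees the two players really alternate.
                self.active_player = "picture" if self.active_player == "movie" else "movie"
            if self.lo <= difference_brightness <= self.hi:
                self.mode = "live"
                self.live_elapsed = 0
        else:
            self.live_elapsed += 1
            if self.live_elapsed >= self.live_frames:
                self.reset()
        return set(self.LIVE) if self.mode == "live" else {self.active_player}
```

The design choice here is to keep one piece of state (`active_player`) that is flipped, instead of two players each deciding independently whether they are on, which is one common way a patch ends up stuck showing only one of them.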

The first cycle.

Some comments I received from this first cycle showing were: “I was able to shake hands with my past self,” “I felt like I was painting with my body,” and people were surprised by their past selves. These are all in line with what I was going for; I even doubled the frame rate of the Video Delay right before presenting because I wanted this past/second self to come as more of a surprise. Another comment was that the timing of the images/videos was too quick, but as people experimented and the scene regenerated, they gained more familiarity with the images. I am still wondering about this one. I made the images quick on purpose so the dancers could only ‘grab’ what they could from each image in that flash of time (which is more about spurring a feeling than digesting the image). Also, the images are all sourced from the performers, so they are familiar and already carry certain meanings for them… I don’t quite know how spectators will relate to them or how to direct their meaning-making in these instances (ideas on this are also welcome). I want to set up the systems used in creating the work as an installation that spectators can interact with before the performers perform, and I am still stewing on the through line between systems… although I know it’s already there.
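
How far behind the “past self” appears depends on how many frames the delay holds. Isadora’s Video Delay actor is not documented in the post, so this is a generic ring-buffer delay in Python, not its implementation; `VideoDelay` and `delay_in_frames` are hypothetical names. Assuming the delay is counted in frames, the same buffer length gives half the lag in seconds when the frame rate doubles.

```python
from collections import deque
from typing import Deque, Generic, TypeVar

T = TypeVar("T")


class VideoDelay(Generic[T]):
    """Ring buffer that returns the frame from `delay_frames` frames ago."""

    def __init__(self, delay_frames: int) -> None:
        # Hold the current frame plus `delay_frames` older ones; deque drops the oldest automatically.
        self.buffer: Deque[T] = deque(maxlen=delay_frames + 1)

    def push(self, frame: T) -> T:
        """Add the newest frame and return the delayed one (the oldest held until the buffer fills)."""
        self.buffer.append(frame)
        return self.buffer[0]


def delay_in_frames(seconds: float, fps: float) -> int:
    """How many buffered frames a given lag needs at a given frame rate."""
    return round(seconds * fps)
```

For example, a 2-second lag at 30 fps needs 60 buffered frames, and so does a 1-second lag at 60 fps.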

Thanks for playing, friends!!!

Also, everyone is invited to view the Performance Practice we are working on. It is on Fridays 9-10 in Mola (this Friday is rescheduled for Mon 11.14, through Dec. 2), please come play with us… and let me know if you are planning to!

Wizarding Project – VR, Cycle 2

Posted: November 29, 2016 Filed under: Uncategorized

Things are coming along very well! An actual dungeon layout is coming into scope, puzzles are being created, and spells are working as designed. Up until now, the focus has been on creating a proper interface as well as the building blocks required to make a real game. Now that the pieces are in place, real level designs can be created and a story constructed.

Another major focus is audio. As it stands, the story is that the player enters the world and is told they are beginning their wizarding examination in order to become a proper sorcerer. The instructor (myself) will help them both within the experience and outside of it, using trigger-based sound cues inside and myself, in character, outside.
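
Trigger-based sound cues are usually built from invisible volumes that play a clip the first time the player enters them. The engine isn’t named in this post, and a real build would use its own trigger colliders; this engine-agnostic Python sketch, with hypothetical zone names, coordinates, and clip files, only shows the logic.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class CueZone:
    """An axis-aligned box in the level that starts one narration clip."""
    name: str
    lo: Vec3   # minimum corner
    hi: Vec3   # maximum corner
    clip: str  # hypothetical audio file name

    def contains(self, p: Vec3) -> bool:
        return all(lo_c <= c <= hi_c for lo_c, c, hi_c in zip(self.lo, p, self.hi))


@dataclass
class CuePlayer:
    zones: List[CueZone]
    played: Set[str] = field(default_factory=set)

    def update(self, player_pos: Vec3) -> List[str]:
        """Return the clips to start this frame; each zone fires only once."""
        started = []
        for zone in self.zones:
            if zone.name not in self.played and zone.contains(player_pos):
                self.played.add(zone.name)
                started.append(zone.clip)
        return started
```

Firing each zone only once keeps the instructor’s voice from repeating when the player wanders back through a room.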

The final product is coming into view. I am excited.

Pressure Project 3 Rubik’s Cube Shuffle

Posted: November 5, 2016 Filed under: Pressure Project 3, Taylor, Uncategorized

For this project, I learned the difficulties of chroma detection. I was trying to create a patch that would play a certain song every time the die (a Rubik’s cube) landed on a certain color. Since this computer vision logic depends highly on lighting, I tried to work in the same space (spiking the table and camera) so that my color ranges would be specific enough to achieve my goal. With Oded’s guidance, I decided to use a Simultaneity actor that would detect when two different chroma values were Inside Range, using the Color Measure actor, which was connected to a Video In Watcher. I duplicated this setup six times, trying to use the most meaningful RGB color combinations for each side of the Rubik’s cube. The Simultaneity actor was plugged into a Trigger Value that triggered the songs through a Movie Player and Projector.

Later in the process, I wanted to use just specific parts of the songs, since I figured there would not be a lot of time between die rolls and I should put the meaning or connection up front. I did not have enough time to figure out multiple video players and toggles, and I did not have time to edit the music outside of Isadora either, so I picked a starting point for each song that worked relatively well to get the point across. However, this was more challenging when the wrong colors were triggering songs. I feel like a little panache was lost to the system’s malfunction, but I think the struggle was mostly with the webcam’s constant refocusing, which forced me to use larger ranges. I am also wondering if a white background might have worked better lighting-wise. (Putting breaks of silence between the songs might also have helped people process the connections between colors and songs.) Still, I think people had a relatively good time.

I had also wanted the video of the die to spin when a song was played, but readjusting the numbers for lighting conditions, which I did with Min/Max Value Holds to find the range numbers, was enough to keep me busy. I chose not to write in my notebook in the dark, and I don’t process aurally very well, so I don’t remember others’ comments.
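
The detection logic described above (min/max value holds to find ranges, then two channels that must both be inside range at the same moment) can be sketched outside Isadora. This Python version is an illustration, not the actual patch: `calibrate`, `detect_face`, the 5-unit margin, and the sample RGB values are all hypothetical, and it stands in for the Color Measure, Inside Range, and Simultaneity actors rather than reproducing them.

```python
from typing import Dict, List, Optional, Tuple

RGB = Tuple[float, float, float]
Range = Tuple[float, float]
Check = Tuple[int, Range]  # (channel index: 0=R, 1=G, 2=B; allowed range)


def calibrate(samples: List[RGB], margin: float = 5.0) -> List[Range]:
    """Min/max hold: record the lowest and highest value seen per channel, plus a margin."""
    ranges = []
    for ch in range(3):
        values = [s[ch] for s in samples]
        ranges.append((min(values) - margin, max(values) + margin))
    return ranges


def detect_face(color: RGB, rules: Dict[str, Tuple[Check, Check]]) -> Optional[str]:
    """Return the first face whose two channel checks both pass at once (the 'simultaneity' idea)."""
    for face, checks in rules.items():
        if all(lo <= color[ch] <= hi for ch, (lo, hi) in checks):
            return face
    return None
```

Widening the margins (for example, to survive the webcam refocusing) makes the ranges overlap, and then whichever face is checked first wins; that is one plausible way wrong colors end up triggering songs.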

Here are the songs (I was trying to go for different genres and good songs):

Blue – Am I Blue? – Billie Holiday

Red – Light My Fire (Live Extended Version) – The Doors

Green – Paperbond – Wiz Khalifa

Orange – House of the Rising Sun – The Animals

White – Floating Away: Chill Beats Mix – compiled by Fluidify

Yellow – My Girl – The Temptations

Also, Rubik’s Cube chroma detection is not a good idea for use in automating vehicles.

https://osu.box.com/s/5qv9tixqv3pcuma67u2w95jr115k5p0o PresProj3(1)

The Synesthetic Speakeasy – Cycle 1 Proposal

Posted: October 9, 2016 Filed under: Uncategorized

For our first cycle, I’d like to explore narrative composition and storytelling techniques in VR. I’m interested in creating a vintage speakeasy/jazz lounge environment in which the user passively experiences the mindsets of the patrons by interacting with objects that have significance to the person they’re associated with! This first cycle will likely be experimentation with interaction mechanics and the beginnings of a narrative.

Peter’s Produce Pressure Project (2)

Posted: October 9, 2016 Filed under: Uncategorized

For our second pressure project, I used an Arduino (similar to a Makey Makey) to let users interact with a banana and an orange as vehicles for generating audio. As the user touches a piece of fruit, a specific tone begins to pulse. As they move that fruit, its frequency increases with velocity. Then, if both pieces of fruit move quickly enough, geometric shapes on-screen explode! Once you let go of them, the shapes re-form into their geometric representations.
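
The fruit-to-sound mapping boils down to three small rules. The post doesn’t show the Arduino code or say how velocity was measured, so this Python sketch assumes a speed value per fruit is already available; the base frequencies, gain, threshold, and easing rate are hypothetical.

```python
BASE_FREQ_HZ = {"banana": 220.0, "orange": 330.0}  # hypothetical pulse tones


def tone_frequency(fruit: str, speed: float, gain: float = 2.0) -> float:
    """Pitch of a touched fruit rises linearly with how fast it moves (gain is Hz per unit of speed)."""
    return BASE_FREQ_HZ[fruit] + gain * speed


def shapes_explode(banana_speed: float, orange_speed: float, threshold: float = 50.0) -> bool:
    """The shapes explode only when BOTH fruits are moving faster than the threshold."""
    return banana_speed > threshold and orange_speed > threshold


def step_explosion(level: float, exploding: bool, rate: float = 0.2) -> float:
    """Ease the explosion amount toward 1 while exploding and back toward 0 (re-formed) otherwise."""
    target = 1.0 if exploding else 0.0
    return level + (target - level) * rate
```

Calling `step_explosion` once per frame gives the gradual re-forming after the fruit is let go, instead of the shapes snapping back instantly.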

Our class’s reaction to the explosion was awesome; I wasn’t expecting it to be that great of a payoff 🙂

cycle 1 plans

Posted: October 4, 2016 Filed under: Uncategorized

Computer vision that tracks the movement of one (maybe two) people moving in different quadrants (or some other word that doesn’t imply four) of the video sensor (Eyes, or maybe a Kinect to work with depth of movement as well), such that different combinations of movement trigger different sounds (i.e., lots of movement in the upper half and stillness below triggers buzzing, the reverse triggers a stampede, and alternating up-and-down distal movement triggers the sound of wings flapping…). More to come if I can figure that out.
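
One way to prototype these plans is to split a frame-difference image into regions and look at which region is busy over time. This Python sketch is hypothetical (the regions are just upper/lower halves, and the thresholds and sound names are placeholders); it doesn’t use any particular tracking tool, only a precomputed difference image given as rows of numbers.

```python
from typing import List, Optional, Tuple


def region_motion(diff: List[List[float]]) -> Tuple[float, float]:
    """Average frame-difference value in the upper and lower halves of the image."""
    mid = len(diff) // 2

    def mean(rows: List[List[float]]) -> float:
        values = [v for row in rows for v in row]
        return sum(values) / len(values) if values else 0.0

    return mean(diff[:mid]), mean(diff[mid:])


def dominant_region(upper: float, lower: float, high: float = 30.0, low: float = 5.0) -> Optional[str]:
    """'upper' if only the top half is busy, 'lower' if only the bottom half is, else None."""
    if upper >= high and lower <= low:
        return "upper"
    if lower >= high and upper <= low:
        return "lower"
    return None


def choose_sound(history: List[Optional[str]], min_switches: int = 3) -> Optional[str]:
    """Map recent dominant regions to a sound; repeated up/down alternation means wings."""
    seen = [h for h in history if h is not None]
    switches = sum(1 for a, b in zip(seen, seen[1:]) if a != b)
    if switches >= min_switches:
        return "wings flapping"
    if not history or history[-1] is None:
        return None
    return "buzzing" if history[-1] == "upper" else "stampede"
```

Keeping a short history of dominant regions is what lets a pattern over time (the alternation) trigger a different sound from a single still frame.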

Pressure Project 2

Posted: October 4, 2016 Filed under: Uncategorized

https://osu.box.com/s/7evtez7zjct0hg2ws4o9g2zlj04mb0qg

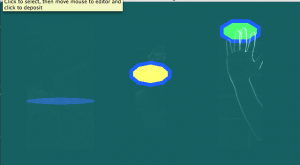

I started with a series of Makey Makey water buttons as triggers for sounds, wanting to come up with different triggering sequences that jump to different scenes. So if you touched the blue bowl of water, then the green, then the yellow twice, and then the green again, the Calculator and Inside Range actors would move you to another scene. I made up the scenes trying to create variations on the colors and shapes that were part of the first scene, save for one, which was just three videos of the person interacting, fragmented and layered, with no sound. The latter was meant to be an interruption of sorts. BUT… in practice, people triggered the water buttons so quickly that the scenes didn’t have time to develop, and the motion detection and interpretation in some of the scenes (affecting shape location and sound volume) didn’t become part of the experience. I am now considering how to provide a frame for those elements to be explored more immediately, how to slow people down in engaging with the water bowls, whether via text or otherwise, AND the possibility of a trigger delay to make time between each action.
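
The original routed touches through Calculator and Inside Range actors. As a swapped-in technique, this Python sketch matches the last few accepted touches against the target sequence directly, and adds the trigger delay being considered: touches arriving less than `min_gap` seconds after the previous accepted one are ignored. The class name and the one-second gap are hypothetical.

```python
from typing import List, Optional, Sequence


class SequenceTrigger:
    """Jump scenes when the last N accepted touches match a target sequence."""

    def __init__(self, sequence: Sequence[str], min_gap: float) -> None:
        self.sequence = tuple(sequence)
        self.min_gap = min_gap          # hypothetical trigger delay, in seconds
        self.recent: List[str] = []
        self.last_time: Optional[float] = None

    def touch(self, bowl: str, t: float) -> bool:
        """Register a touch at time t (seconds); return True when the sequence completes."""
        if self.last_time is not None and t - self.last_time < self.min_gap:
            return False  # too soon after the last accepted touch: ignore it
        self.last_time = t
        self.recent.append(bowl)
        self.recent = self.recent[-len(self.sequence):]  # keep only the last N touches
        return tuple(self.recent) == self.sequence
```

With the blue, green, yellow, yellow, green sequence from the post, a quick double-tap on a bowl counts only once, which gives each scene a moment to develop before the next touch registers.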