“Trailhead” Final project update

Posted: December 15, 2015 Filed under: Uncategorized

Reflection

I am very pleased with how my final iteration of this project went. Not all of the triggers worked perfectly (the same scene was always finicky, which could have called for some final tweaking), but the interactivity I was looking for occurred.

Please refer to my video documentation of the participants’ experience and experiments with the light projections:

When I had the opportunity to discuss the participants’ interactions with them, here is the feedback I received, along with my own observations.

- One participant suggested I play with velocity as a reactive element, using slow as well as fast movement as options. I explained that this actually was a step in the creation process, but I preferred the allure of the constant light. In the future I would like to use velocity in a different way, because I agree it would add a lot of interest to the experience.

- When I asked them to “paint” the light with their hands, they actually rubbed their hands on the ground, not in the air as the patch required. I realized after the first cycle of participants that wording more akin to “conducting” would give them the depth information they needed to interact.

- I found that their impulse after interacting for a while was to try to use their feet to manipulate the light, because the light was projected onto the floor. Telling them that vertical depth was a factor in body recognition would have helped them. On the other hand, I did enjoy watching the discovery process unfold on its own as their legs got higher off the ground and the projection began to appear.

- Another participant, when asked how they decided to move after seeing the experiential media, said it was out of curiosity. They wanted to test the limits of the interaction.

- The most exciting feedback for me was when a final participant, a self-proclaimed ‘non-dancer,’ said my patch encouraged them to move in new ways. Non-movers found themselves moving because of the interest the projection generated. So cool!

I wanted to give the participants a little bit of information about the patch, but not so much as to spoon-feed it to them. The written prompts worked well but, as stated above, the “paint” wording, while poetic, was not effective.

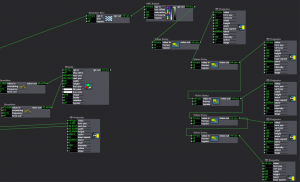

Kinect to Isadora

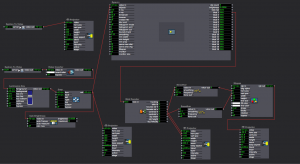

Isadora Patch Details

Scene Names/Organization

“Come to me,” “Take a knee: Paint the light with your hands,” and “A little faster now” were all text prompts that would trigger when a person exited the space, to give them instructions for their next interaction. The different “mains” were the actual trailing projection itself, separated into different colors. Finally, the “Fin, back to the top!” screen was simply there for a few seconds to signal that they had reached the end and that the patch would loop all over again momentarily. This could potentially create an endless loop of interactivity, allowing participants to observe others and find new ways of spreading, interacting, hiding and manipulating the projected light.

I am excited to take this patch, intelligence, and feedback forward with me into my dance studies. I am going to integrate a variant of this project immediately into my performance in the Department of Dance’s Winter Concert in February of 2016, in which I have a piece of choreography and, soon, an experiential projection.

Fin!

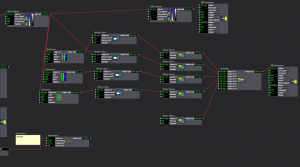

Final Project Patch Update

Posted: December 2, 2015 Filed under: Alexandra Stilianos, Final Project

Last week I attempted to use the video delays to create a shadow trail of the projected light in space and connect them to one projector. I plugged all the video delay actors into each other and then into one projector, which caused them to be associated with only one image. Then, when I separated the video delays and gave each one its own projector and its own delay time, the effect was successful.
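Isadora is a visual patching tool, so there is no code to show for this step, but the idea of separate delays with separate delay times can be sketched in Python. This is only an illustration, not the patch itself: `DelayTap`, `trail`, and the frame-count delays are hypothetical names and values, and frames are stood in for by plain strings.

```python
from collections import deque


class DelayTap:
    """Holds recent frames and returns the one from `delay` frames ago."""

    def __init__(self, delay):
        self.delay = delay
        # Keep delay + 1 frames: the newest one plus `delay` older ones.
        self.buffer = deque(maxlen=delay + 1)

    def push(self, frame):
        self.buffer.append(frame)
        # Until the buffer fills, no frame is old enough to show yet.
        if len(self.buffer) <= self.delay:
            return None
        return self.buffer[0]


def trail(frames, delays):
    """For each incoming frame, list what every independent tap outputs."""
    taps = [DelayTap(d) for d in delays]
    return [[tap.push(frame) for tap in taps] for frame in frames]


# Three taps (live, 1 frame late, 2 frames late), each with its own output.
for outputs in trail(["a", "b", "c", "d"], [0, 1, 2]):
    print(outputs)
```

Because every tap keeps its own buffer and its own output, the delayed copies stay distinct, which is roughly the difference between the chained version and the version with one projector per delay.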

Today (Wednesday) in class, we adjusted the Kinect and the Projector to be aiming straight towards the ground so the reflection caused by the floor from the infrared lights in the Kinect would no longer be a distraction.

I also raised the depth that the Kinect can read so that it covers a person from the shins up; when you are below that area, the projection no longer appears. For example, a dancer can take a few steps and then roll to the ground and end in a ball, and the projection will follow and slowly disappear, even with the dancer still within range of the Kinect/projector. They are just below the readable area.
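The cutoff described above can be pictured as a simple height test on each depth reading. The sketch below assumes a sensor pointing straight down, depth values in meters, and made-up numbers for the sensor height and the shin-level cutoff; `visible_mask` is a hypothetical helper, not something Isadora or the Kinect provides.

```python
def visible_mask(depth_rows, sensor_height_m, min_height_m):
    """Return True where a pixel is at least min_height_m above the floor.

    Assumes a top-down sensor, so height = sensor height - measured depth.
    """
    mask = []
    for row in depth_rows:
        mask.append([(sensor_height_m - depth) >= min_height_m for depth in row])
    return mask


# Hypothetical readings: floor (3.0 m away), a torso, a curled-up dancer, a head.
depth = [[3.0, 2.0],
         [2.8, 1.2]]
print(visible_mask(depth, sensor_height_m=3.0, min_height_m=0.3))
```

Anything lower than the cutoff reads as "not there," which is why a dancer curled on the floor drops out of the projection while still inside the sensor's range.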

Ideation 2 of Final Project- Update

Posted: November 20, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

A collaboration has emerged between Sarah, Connor, and me in which we will be interacting with and manipulating various parts of the lighting system in the MoLa. My contribution is a system that will listen to the sounds of a tap dancer on a small piece of wood (a tap board) and affect the colors of specific lights projected on the floor on or near the board.

Connection from microphone to computer/Isadora:

Microphone (H4) –> XLR cord –> Zoom Mic –> Mac

The Zoom mic acts as a sound card via a USB connection. It needs to be set to Channel 1, USB transmit mode, and audio IF.

Our current question is whether we can control individual lights in the grid through this system. My next step is playing with the sensitivity of the microphone so the lights don’t turn on and off out of control or pick up sounds away from the board.
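One common way to keep a sound-triggered light from flickering is to use two thresholds instead of one: a higher level to switch on and a lower level to switch off. This is a general technique, not something already in the patch, and the function name and threshold numbers below are assumptions for illustration only.

```python
def gate(levels, on_threshold, off_threshold):
    """Turn a stream of loudness values into on/off states with hysteresis.

    The light switches on at or above on_threshold and only switches off
    again at or below off_threshold, so small wobbles in between are ignored.
    """
    state = False
    states = []
    for level in levels:
        if not state and level >= on_threshold:
            state = True
        elif state and level <= off_threshold:
            state = False
        states.append(state)
    return states


# Hypothetical microphone levels between 0 and 1.
print(gate([0.1, 0.6, 0.5, 0.45, 0.2, 0.3, 0.7], on_threshold=0.6, off_threshold=0.25))
```

Raising the on threshold would also help ignore quieter sounds that come from away from the board, at the cost of missing soft taps.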

Iteration 1 of Final Project

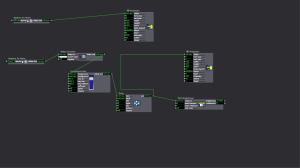

Posted: November 9, 2015 Filed under: Alexandra Stilianos, Final Project

Here is my patch for the first iteration:

The small oval shape followed a person around the part of the space that is visible to both the Kinect and the projector. This was difficult since their fields of view were slightly off, but I was able to get it to track with good accuracy.

I would’ve liked the shape to move a bit more smoothly and conform more closely to the shape of the body. I think using some of the actors that Josh did (Gaussian Blur and Alpha Mask) will help with these issues.
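Separately from the blur and mask actors mentioned above, the jumpiness of a tracked position is often reduced by smoothing the coordinates themselves. The sketch below uses exponential smoothing, which is a different technique from those actors and is shown only as an idea; `smooth_path` and the `alpha` value are hypothetical.

```python
def smooth_path(points, alpha):
    """Exponentially smooth a list of (x, y) positions.

    alpha near 1 follows the raw data closely; alpha near 0 moves slowly.
    """
    smoothed = []
    current = None
    for x, y in points:
        if current is None:
            # Start exactly at the first tracked position.
            current = (x, y)
        else:
            cx, cy = current
            current = (cx + alpha * (x - cx), cy + alpha * (y - cy))
        smoothed.append(current)
    return smoothed


# A tracked point that jumps abruptly; the smoothed path eases toward it.
print(smooth_path([(0, 0), (10, 0), (10, 10)], alpha=0.5))
```

The trade-off is lag: the smoother the motion, the further the shape trails behind the body.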

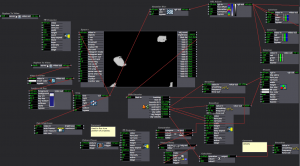

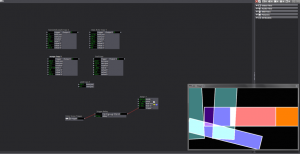

Vuo + Kinect + Izzy

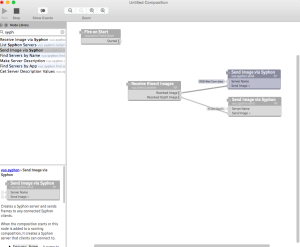

Posted: October 29, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

Screen caps and video of Kinect + Vuo + Izzy. Since we were working in the demo version of Vuo, I couldn’t use a live video feed directly in Isadora to practice tracking with Eyes++ and the Blob Decoder, so a screen-capture video was taken and imported.

We isolated a particular area in space where the Kinect/Vuo could read the depth as grayscale to identify that shape.

With a patch connecting the depth-tracking video to Izzy and using the Eyes++ and Blob Decoder actors, I could get exact coordinates for the blob in space.

https://www.youtube.com/watch?v=E9Gs_QhZiJc&feature=youtu.be
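To give a feel for what "coordinates for the blob" means, here is a minimal sketch that finds the center of the bright region in a small grayscale grid. It is not how Eyes++ or the Blob Decoder work internally; `blob_centroid`, the threshold, and the pixel values are all assumptions made for the example.

```python
def blob_centroid(gray, threshold):
    """Return the (x, y) centroid of pixels at or above threshold, or None."""
    total = sum_x = sum_y = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value >= threshold:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        # Nothing bright enough to count as a blob.
        return None
    return (sum_x / total, sum_y / total)


# A tiny hypothetical depth image: bright pixels are the person.
image = [
    [0,   0,   0, 0],
    [0, 200, 220, 0],
    [0, 210, 230, 0],
    [0,   0,   0, 0],
]
print(blob_centroid(image, threshold=128))
```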

Ideation of Prototype Summary- C1 + C2

Posted: October 26, 2015 Filed under: Alexandra Stilianos, Assignments

My final project unfolds in 2 cycles that culminate in one performative and experiential environment where a user can enter the space wearing tap dance shoes and manipulate video and lights via the sounds from the taps and their movement in space. Below is an outline of the two cycles through which I plan to actualize this project.

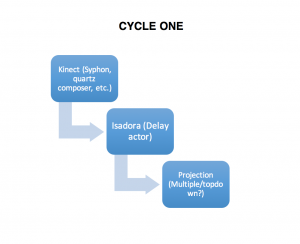

Cycle one: Frieder Weiss-esque video follow

Goal: Create a patch in Isadora that can follow a dancer in space with a projection. When the dancer is standing still, the animation is still as well, and as the performer accelerates through the space, the animation leaves a trail that is proportionate to the distance and speed traveled.
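The "trail proportionate to speed" part of this goal can be written down as a small calculation: measure how far the tracked position moved between readings, divide by the time between them, and scale the result. This is a sketch under assumptions, with `trail_lengths`, `dt`, and `gain` as hypothetical names rather than anything from Isadora.

```python
import math


def trail_lengths(positions, dt, gain):
    """Return one trail length per step: gain times the speed over that step."""
    lengths = []
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) / dt
        lengths.append(gain * speed)
    return lengths


# Standing still, then one quick move, then still again.
print(trail_lengths([(0, 0), (0, 0), (3, 4), (3, 4)], dt=1.0, gain=2.0))
```

A still dancer gives a speed of zero and therefore no trail, which matches the first half of the goal.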

Current state: I attempted to use Syphon with Quartz Composer to read skeleton data from the Kinect for use in Isadora, and met some difficulties installing and understanding the software. I referenced Jamie Griffiths’s blog posts, linked below.

http://www.jamiegriffiths.com/kinect-into-isadora

http://www.jamiegriffiths.com/new-kinect-into-syphon/

Projected timeline: 3-4 more classes to understand and implement software into Isadora and then create and utilize patch.

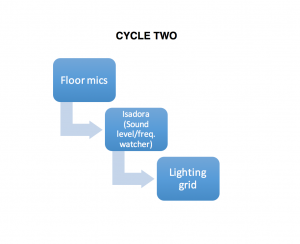

Cycle two: Audible tap interactions

Goal: Create an environment that can listen to the frequency and/or amplitude of the various steps and sounds a tap shoe can create, and have those interactions affect/control the lighting for the piece.

Current State: Not attempted this yet since still in C1 but my classmate Anna Brown Massey did some work with sound in our last class that may be helpful to me when I reach this stage.

Current questions: How do I get the software to recognize frequency in the tap shoes, since the various steps create different sounds? Is this more reliable or more difficult than using volume alone? It is definitely more interesting to me.

Projected timeline: 3+ classes, no use yet with this kind of software so a lot of experimentation expected.
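On the frequency-versus-volume question above, the difference between the two measurements can be shown with a toy example. The sketch below measures loudness as an RMS level and makes a very rough pitch guess by counting zero crossings. Both function names are hypothetical, pure sine tones stand in for tap sounds, and real taps are short and noisy, so this crude estimate would likely be much less reliable on them.

```python
import math


def sine(freq_hz, sample_rate, count):
    """Generate a test tone as a list of samples between -1 and 1."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(count)]


def rms(samples):
    """Root-mean-square level, a simple stand-in for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_frequency(samples, sample_rate):
    """Rough pitch estimate: sign changes per second, divided by two."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration = len(samples) / sample_rate
    return crossings / (2 * duration)


# Two tones with the same loudness but different pitch.
low = sine(220, 8000, 8000)
high = sine(440, 8000, 8000)
print(round(rms(low), 2), round(rms(high), 2))
print(zero_crossing_frequency(low, 8000), zero_crossing_frequency(high, 8000))
```

Volume alone cannot tell these two tones apart, while the frequency estimate can, which is the appeal of listening for pitch even though it is harder to do well.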

Lexi PP3 Summary/reflection

Posted: October 12, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

I chose to write a blog post on this project for my main WordPress page for another dance-related class –> https://astilianos.wordpress.com/2015/10/13/isadora-patches-computer-vision-oh-my/

Follow the link in WordPress to a different WordPress post for WP inception.

Class notes on 9/30 regarding PP3

Posted: September 30, 2015 Filed under: Alexandra Stilianos, Uncategorized

PP3 GOAL: Create an interactive ‘dance’ piece (as a group); each of us should create a moment of reaction from the ‘dancer’

Group goals/questions:

- Focus on art!

- What will we do?

- How will we do it?

- What is my job?

- Establish perimeters and workflow?

- Understand the big picture?

- How is the sensing being done?

- What is the system design?

Resources:

- 4 projectors

- 3 projectors on floor

- 2 HDMI Cameras

- Top down camera with infrared

- Kinect

- Light/sound system

- Isadora

- Max/MSP

- Myo (bracelets)

- MoLa

- Various sensors (?)

Group 1: Sarah, Josh, Connor, “Computer Vision Patch”

- Turn on MoLa, video input, write simple computer vision (CV) patch that gives XY coordinates

- Program lighting system, provide outputs and know what channels they’re on

Channels (1- 10)

- x

- y

- Velocity

- Height

- Width

All x2 for the 2 cameras (top down and front), 2nd camera would be same identifiers but on channels 6-10.
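The channel layout in these notes can be written out as a lookup table. The sketch below assumes the top down camera is the first camera (channels 1-5) and the front camera is the second (channels 6-10); the notes only say the second camera uses 6-10, so that ordering and the names `FIELDS`, `CAMERAS`, and `build_channel_map` are my assumptions.

```python
FIELDS = ["x", "y", "velocity", "height", "width"]
CAMERAS = ["top_down", "front"]  # assumed order: first camera, second camera


def build_channel_map():
    """Map (camera, field) pairs to channel numbers 1-10."""
    channels = {}
    for cam_index, camera in enumerate(CAMERAS):
        for field_index, field in enumerate(FIELDS):
            # The second camera repeats the same fields, offset by five.
            channels[(camera, field)] = cam_index * len(FIELDS) + field_index + 1
    return channels


channel_map = build_channel_map()
print(channel_map[("top_down", "velocity")])
print(channel_map[("front", "velocity")])
```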

Group 2: John, Lexi, Anna, “Projector System”

- Listen to channels 1-10 of 2 cameras, take data, create patch and a place for them (6 quadrants were discussed, each in charge of one)

Options:

- Create scenes and implement triggers

- Each create our own user actor in the same scene

(This is by no means an exhaustive or exclusive list, just my general notes from today!)

Square Dance (literally-ish): PP2

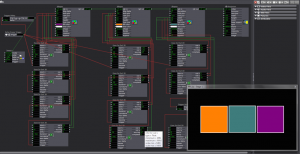

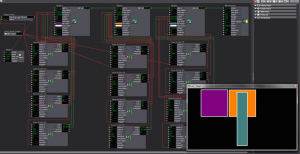

Posted: September 24, 2015 Filed under: Alexandra Stilianos, Isadora, Pressure Project 2

For Pressure Project #2, I created 4 different scenes in Isadora using various actors; the most helpful was Ease In/Out 2D, which I used to coordinate and consolidate the movements and sizes of the boxes. Enter scene triggers, values and delays were used to time the scenes, and various other actors were used (dots, reflection, envelope generator++, etc.).

It was delightful in its simplicity and choreographed movement and it was automatic through the use of scene triggers to loop and repeat. Complexities came into play by varying the movement of the shapes and sizes after setting a constant with the first frame.

I *believe* we watched it for about a minute, time lapse achievement: unlocked. Some of the feedback from my classmates included the video being delightful (it even looked like a bowtie at times) and that the shapes all had particular pleasing places to go as they overlapped. One suggestion/criticism was to include more boxes, which I agree with, if time had permitted.

All in all: https://www.youtube.com/watch?v=Uuzmub5PXNQ

Davis, ASU and Halprin Thoughts

Posted: September 13, 2015 Filed under: Alexandra Stilianos, Reading Responses

ART IN THE AGE OF DIGITAL REPRODUCTION- DAVIS

First of all, Davis, do not cast against an age when you claim original art is vanish(ed)ing and then refuse to distinguish “The Queen of Touch,” whose identity you claim you “don’t need.” Interesting choice to lead with a dash of hypocrisy.

I found his train of thought around transferring his own work from analog to digital as a revisionist tool interesting. The ‘post-original original.’ I think the ability to reflect and change is not so much indicative of the technology but the time he lived in where there was a major shift of technology. Nothing before was stopping an edit but the transference caused him to reflect.

2 out of 4 stars

ASU READING:RIKAKIS, SPANIAS, SUNDARAM, HE

This article was a great read for me and aptly timed, as I am struggling to find where to engage at the forefront of my Graduate studies. The organizational axes were a great way to visualize where I can cross train in my own strengths and where and how to outsource.

The article helped me (at least) engage with clearer language for what I am looking to attain by studying experiential media (and all media) outside of my discipline or current skill set, especially the three key trends:

- Novel embedded interfaces (interaction through meaningful multimodal physical activity)

- Human computing communication at the level of meaning

- Participational knowledge creation and content generation frameworks.

3 out of 4 stars

THE RSVP CYCLES- HALPRIN

“… free the creative process by making it visible.”

This could be effective if one feels creatively blocked, but it could perhaps do the opposite by defining and restraining the possibilities/order.

“Science implies codification of knowledge and drive toward perfectibility…”

No? Science is hypothesis, experiment, posing questions and attempting to try and find answers to them?

This article was incongruent and kind of repetitive for me, 1 out of 4 stars.