Cycle 2 Demo

Posted: November 7, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

The demo in class on Wednesday showed the interface responding to 4 scenarios:

- No audience presence (displayed “away” on the screen)

- Single user detected (the goose went through a rough “greet” animation)

- Too much violent movement (displayed the words “scared goose” on the screen)

- More than a couple of audience members (displayed the words “too many humans” on the screen)

The interaction was made in a few days, and honestly, I am surprised it was as accurate and reliable as it was…

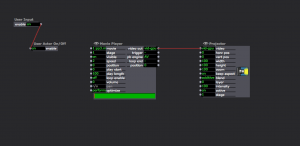

The user presence was just a blob output. I used a “Brightness Calculator” with the “Difference” actors to judge the violent movement (the blob velocity was unreliable with my equipment). Detecting “too many humans” was just another “Brightness Calculator”. I tried more complicated actors and patches, but these were the ones that worked in the setting.
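The logic described above can be sketched outside Isadora. This is only a rough Python analogy, not the actual patch: frames are plain lists of grayscale values, the thresholds `MOTION_LIMIT` and `CROWD_LIMIT` are made-up numbers, and both the order of the checks and the idea that the crowd check measures the brightness of the current frame are assumptions.

```python
# Hypothetical thresholds; the real patch would be tuned to the room and camera.
MOTION_LIMIT = 40.0
CROWD_LIMIT = 120.0


def mean_brightness(frame):
    """Average pixel value of a grayscale frame given as rows of 0-255 ints."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def frame_difference(prev, curr):
    """Per-pixel absolute difference between two frames of the same size."""
    return [[abs(c - p) for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)]


def classify(blob_present, prev, curr):
    """Pick one of the four demo states (the check order is an assumption)."""
    if not blob_present:
        return "away"
    # A bright difference image means a lot changed between frames.
    if mean_brightness(frame_difference(prev, curr)) > MOTION_LIMIT:
        return "scared goose"
    # Assumption: more people in view means a brighter tracked image.
    if mean_brightness(curr) > CROWD_LIMIT:
        return "too many humans"
    return "greet"
```

Differencing two frames and averaging the result gives one number for "how much moved", which is probably why it held up better than blob velocity on noisy equipment.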

Most of my time has gone into solving an interlacing problem. I hoped I could build something with the lenses I have, order a custom lens (they are only $12 a foot + the price to cut), or create a parallax barrier. Unfortunately, creating a high quality lens does not seem possible with the materials I have (2 of the 8″ X 10″ sample packs from Microlens), and a parallax barrier blocks more light with every added viewing angle (2 views block 50%, 3 block 66%… 10 views block 90%). On Sunday I am going to try a patch that blends interlaced pixels to fix the problem with the lines on the screen not lining up with the lenses (it basically blends neighboring interlaced views so that a non-integral number of pixels can line up with the lines per inch of the lens).
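Both ideas in this paragraph reduce to a little arithmetic. The Python sketch below is an illustration, not the Isadora patch: `blocked_fraction` reproduces the 50% / 66% / 90% figures as 1 − 1/N, and `interlace_row` shows one possible way to blend neighboring views when the number of pixels per lens is not a whole number. The linear blend and all function names are assumptions.

```python
def blocked_fraction(n_views):
    """Share of light a parallax barrier blocks when it shows n_views views."""
    return 1 - 1 / n_views


def blend_weights(x, pixels_per_lens, n_views):
    """Return (view_a, view_b, weight_b) for screen column x.

    The column's position under its lens is mapped to a fractional view
    index; the fraction becomes the blend weight of the next view.
    """
    phase = (x / pixels_per_lens) % 1.0   # 0..1 across one lens
    position = phase * n_views            # fractional view index
    view_a = int(position) % n_views
    view_b = (view_a + 1) % n_views
    weight_b = position - int(position)
    return view_a, view_b, weight_b


def interlace_row(view_rows, pixels_per_lens):
    """Interlace one row of pixels from several views (one list per view)."""
    n_views = len(view_rows)
    out = []
    for x in range(len(view_rows[0])):
        a, b, w = blend_weights(x, pixels_per_lens, n_views)
        out.append((1 - w) * view_rows[a][x] + w * view_rows[b][x])
    return out
```

When `pixels_per_lens` is a whole multiple of the view count, the weights come out as 0 and the result is a plain interlace. Blending only kicks in when the screen and the lens do not line up.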

Worst case scenario… A ready to go lenticular monitor is $500, the lens designed to work with a 23″ monitor is $200, and a 23″ monitor with a pixel pitch of 0.270 mm is about $130… One way or another, this goose is going to meet the public on 12/07/15…
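For reference, the pixel pitch quoted above converts to dots per inch by dividing 25.4 mm by the pitch. The short Python sketch below does that, and also estimates how many pixels sit under each lens. The lens value of 40 lines per inch in the usage comment is a placeholder, not the spec of any lens mentioned here.

```python
MM_PER_INCH = 25.4


def dpi_from_pixel_pitch(pitch_mm):
    """Pixels per inch for a monitor with the given pixel pitch in mm."""
    return MM_PER_INCH / pitch_mm


def pixels_per_lens(dpi, lens_lpi):
    """How many screen pixels fall under one lens of a lenticular sheet."""
    return dpi / lens_lpi


dpi = dpi_from_pixel_pitch(0.270)
print(round(dpi, 1))  # 94.1

# Hypothetical lens: pixels_per_lens(dpi, 40) is about 2.35, a non-integral
# value, which is the kind of mismatch the blending patch is meant to absorb.
```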

Links I have found useful are…

Calculate the DPI of a monitor to make a parallax barrier.

Specs of one of the common ACCAD 24″ monitors

http://www.pcworld.com/product/1147344/zr2440w-24-inch-led-lcd-monitor.html

MIT student who made a 24″ lenticular 3D monitor.

http://alumni.media.mit.edu/~mhirsch/byo3d/tutorial/lenticular.html

Josh Isadora PP3

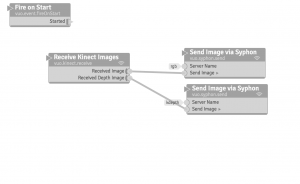

Posted: November 6, 2015 Filed under: Josh Poston, Pressure Project 3

For Pressure Project 3 I used the tracking to trigger a movie on the upstage projection screen when a person entered my assigned section of the space. Here is the patch that I used.
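The same trigger idea, sketched in Python rather than as Isadora actors. It assumes the tracker reports an (x, y) position each frame and that the assigned section is a rectangle. `ZoneTrigger` and its coordinates are hypothetical names for illustration.

```python
class ZoneTrigger:
    """Fires once each time a tracked point enters a rectangular zone."""

    def __init__(self, x_min, x_max, y_min, y_max):
        self.x_min, self.x_max = x_min, x_max
        self.y_min, self.y_max = y_min, y_max
        self.occupied = False  # was the point inside on the previous frame?

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

    def update(self, x, y):
        """Return True only on the frame the point first enters the zone."""
        inside = self.contains(x, y)
        fired = inside and not self.occupied
        self.occupied = inside
        return fired
```

Firing only on entry keeps the movie from restarting on every frame while the person stays in the zone.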

Josh Final Presentation 1 Update

Posted: November 6, 2015 Filed under: Josh Poston, Uncategorized

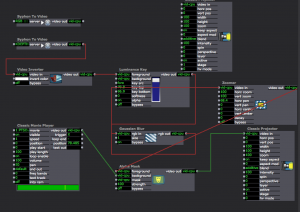

This is my patch, which receives the Kinect data through Syphon into Isadora. There it takes the kdepth data and, using a luminance key and Gaussian blur, creates a solid, smoother image. That smoothed image of the person standing in the space is then fed into an alpha mask and combined with a video feed, so the video is projected within the outline of the body.
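As a rough illustration of that chain (key, blur, mask), here is a small Python sketch on grayscale images stored as lists of rows. It is not the Isadora patch: the threshold is arbitrary, and a simple 3×3 box blur stands in for the Gaussian blur to keep the example short.

```python
def luminance_key(depth, threshold):
    """Turn a depth image into a hard mask: 1.0 where bright enough, else 0.0."""
    return [[1.0 if v >= threshold else 0.0 for v in row] for row in depth]


def box_blur(mask):
    """Soften mask edges with a 3x3 box blur (a stand-in for a Gaussian blur)."""
    h, w = len(mask), len(mask[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Average over the neighbours that fall inside the image.
            neighbours = [mask[j][i]
                          for j in range(max(0, y - 1), min(h, y + 2))
                          for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(neighbours) / len(neighbours))
        out.append(row)
    return out


def apply_alpha_mask(video, mask):
    """Scale each video pixel by the mask, so video shows only inside the body."""
    return [[v * a for v, a in zip(video_row, mask_row)]
            for video_row, mask_row in zip(video, mask)]
```

A frame would pass through the functions in order: `apply_alpha_mask(video, box_blur(luminance_key(depth, 128)))`.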

Checking In: Final Project Alpha and Comments

Posted: November 4, 2015 Filed under: Connor Wescoat, Isadora

Alpha:

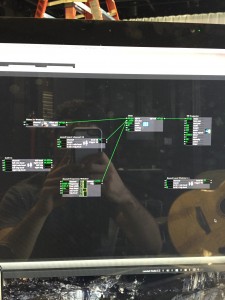

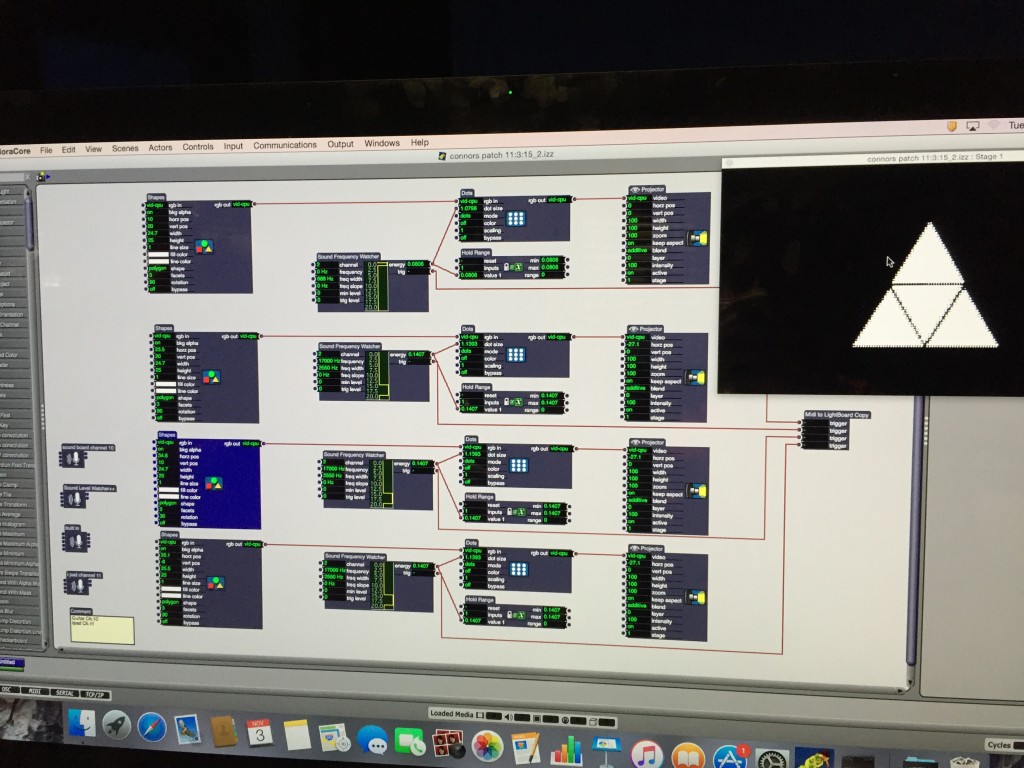

Today marked the first of our cycle presentation days for our final project. While I initially was stressing hard about this day, I was able to, with the help of some very important people, present a very organized “alpha” phase to my final project. While my idea initially took a lot of incubating and planning, I hashed it out and executed the initial connection/visual stage of my performance. Below is a sketch of my ideas for layouts, my beginning patch and my final alpha patch that I demonstrated in class today.

You will see the difference between my first patch and my second.

The small actor off to the right and below the image is a MIDI actor calibrated to the light board and my guitar. Sarah worked with me through this part and did a wonderful job explaining and visualizing this process.

Comments:

Lexi: Great start to your project! You made a really cool virtual spotlight using the Kinect. I know that the software may be giving you some initial troubles but I know you’ll be able to work through it and deliver an awesome final cut that incorporates your dance background. Keep it up!

Anna: I really like what you are doing with the Mic and Video connection. I believe that when it all comes together it will be something that’s really interactive and fun to play around with. I’m already having fun seeing how my voice influences the live capture. Keep it up!

Sarah: Words cannot describe how much you have helped me in this class and I look forward to working with you to not only complete but also OWN this project. I’m sure the final cut of your lighting project is going to be awesome and I look forward to working with you more.

Josh: How you implemented the Kinect with your personal videos is a very cool take on how someone can actually interact with video in a space. I can’t wait to see what else you add to your project!

Jonathan: Dude, your project is practically self-aware! Even if you explained it to me, I would never know how you were able to do that. I look forward to seeing your final project. Keep it up!

Isadora Updates

Posted: November 2, 2015 Filed under: Uncategorized

http://troikatronix.com/isadora-2-1-release-notes/

Mark recently updated Isadora …

Check out the release notes.

It changes the ways that videos are assigned to the stage.