Dynamic Light Tracking System Prototype

Posted: December 12, 2020. By: Kenneth Olson

(Iteration 3)

Approach

For this project I wanted to build on what I learned from the previous iteration. In Iteration 2 I used the “Eyes ++ Actor” to manipulate objects on a computer screen with my fingers, a web camera, black tape, and a tabletop, creating an interactive sci-fi user interface. This time I wanted to create an even easier, more approachable way to make objects on a screen move in correlation with how the user moves: a more interactable and abstract system than the previous one. The system uses the “Eyes ++ Actor” and a web camera to track the contrast between a physical light and the surrounding darkness. The overall system is simple; however, depending on the use case, it could produce complicated outputs.

The system works as follows. First, in the middle of a dark room, the user waves their phone flashlight around (the user could be responding to music through dance, or to any other stimulus that would cause the body to move freely). Second, a web camera facing the user feeds into Isadora. Third, the web camera output connects to the “Eyes ++ Actor”, which then affects other objects.

With this system I discovered an additional output value I could use. In Iteration 2 I was limited to the “X” and “Y” values of the “Blob Decoder” coming from the “Eyes ++ Actor”. In Iteration 3 I also had “X” and “Y” values to play with (because the light from the flashlight contrasted strongly enough with the darkness for the “Eyes ++ Actor” to track). My third output, a result of using light, was the brightness output of the “Eyes ++ Actor”. In Iteration 2, the size of the object in the tracking area did not change significantly, if at all. In Iteration 3, however, the amount of light shone at the web camera drastically changed the size of the object being tracked, so more or less of the tracking area was filled with white or black. So by using one dynamic, contrasting light value as an input to the “Eyes ++ Actor”, I was able to affect several different objects in several different ways. This discovery only came about from playing around with the Isadora system.
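To make the three outputs concrete, here is a minimal sketch in Python of how a position and a brightness value could be derived from a single dark frame with one bright light in it. This is not how Isadora or the “Eyes ++ Actor” is implemented; the function name `track_light`, the threshold of 200, the 0 to 100 output ranges, and the reading of "brightness" as the share of the frame that is lit are all assumptions made for illustration.

```python
def track_light(frame, threshold=200):
    """Return (x, y, brightness) for one grayscale frame.

    frame: list of rows, each a list of pixel values from 0 to 255.
    x, y: centroid of the pixels at or above the threshold, scaled to
          0-100 across the frame, or None when nothing is bright enough.
    brightness: percentage of the frame at or above the threshold
                (an assumed stand-in for the actor's brightness output).
    """
    height = len(frame)
    width = len(frame[0])
    count = 0
    sum_x = 0
    sum_y = 0
    for row_index, row in enumerate(frame):
        for col_index, value in enumerate(row):
            if value >= threshold:
                count += 1
                sum_x += col_index
                sum_y += row_index
    if count == 0:
        # A fully dark frame: no position to report, zero brightness.
        return None, None, 0.0
    brightness = 100.0 * count / (width * height)
    x = 100.0 * (sum_x / count) / (width - 1) if width > 1 else 50.0
    y = 100.0 * (sum_y / count) / (height - 1) if height > 1 else 50.0
    return x, y, brightness


# A 4x4 dark frame with a single lit pixel in the right-hand column.
frame = [[0] * 4 for _ in range(4)]
frame[1][3] = 255
x, y, brightness = track_light(frame)
```

In this sketch, pointing the flashlight more directly at the camera lights up more pixels, so the brightness value rises even when the centroid barely moves, which matches the behavior described above.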

With this dynamic light tracking system, I made ten different vignettes with different interactable objects and videos. Below are just a few examples:

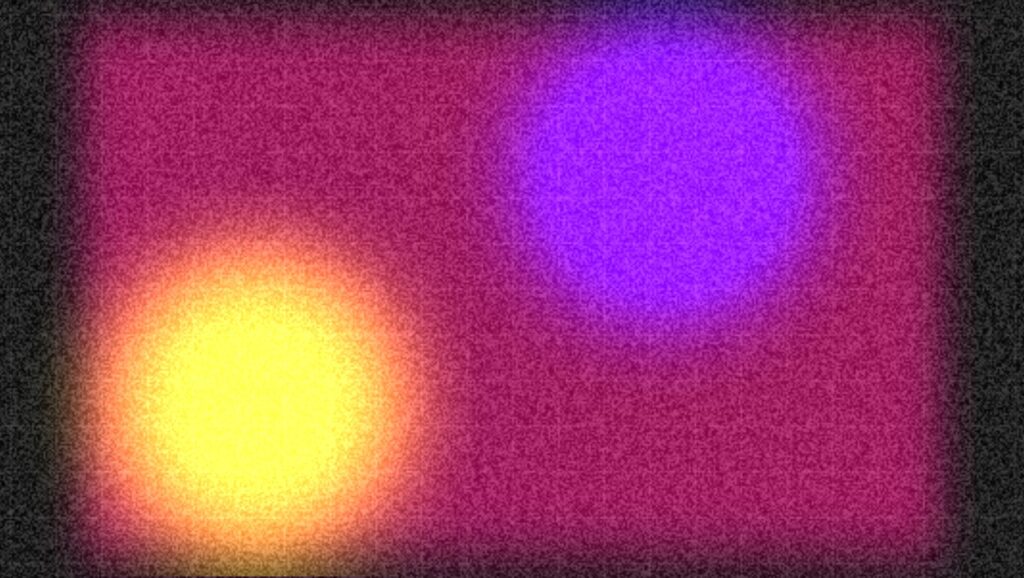

Vignette 1

In this scene the blue and purple shapes would change scale with the addition of more or less light.

Vignette 2

In this scene the two colored circles would move in both the “X” and “Y” directions in correlation with the light’s position within the tracking area. A white grid would appear and overlay the circles with the addition of more light, and fade away with less light.
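A mapping like the one in Vignette 2 can be sketched as a pair of range conversions: position drives the circles, and brightness drives the grid. The Python below is only an illustration of that idea, not the actual patch; the helper names `scale_value` and `vignette_2`, the stage range of -50 to 50, and the brightness ceiling of 30 are hypothetical values chosen for the example.

```python
def scale_value(value, in_min, in_max, out_min, out_max):
    """Linearly map value from an input range to an output range, clamped."""
    if in_max == in_min:
        return out_min
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))  # keep the result inside the output range
    return out_min + t * (out_max - out_min)


def vignette_2(x, y, brightness):
    """Turn tracked values (each 0-100) into hypothetical scene parameters."""
    return {
        # Circles follow the light across an assumed -50..50 stage.
        "circle_x": scale_value(x, 0, 100, -50, 50),
        # Image rows count downward, so the vertical axis is flipped.
        "circle_y": scale_value(y, 0, 100, 50, -50),
        # The grid fades in as more of the frame is lit; the ceiling of
        # 30 is an assumed point at which the grid is fully visible.
        "grid_opacity": scale_value(brightness, 0, 30, 0, 100),
    }


params = vignette_2(50, 50, 15)
```

Clamping matters here because a flashlight pointed straight at the camera can push the brightness well past the assumed ceiling, and the grid should simply stay fully visible in that case.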

Vignette 3

In this scene the pink lines would squash in succession when the light source moves from left to right or from right to left. (This scene was inspired by my Iteration 2 project.)

Assessment

Overall I found this system to be less accurate and less controllable for precise movements than Iteration 2. I imagine the system would behave better with a more focused light source. However, the brightness output was very responsive and very controllable. I did not try using multiple light sources as an input, but with too much light the system does not function as well. I would love to see this system integrated into a large in-person concert or rave, with every member of the audience wearing a light-up bracelet or something similar. But as a party of one, I used a projector as the output device and created a mini rave for one in my apartment, using my phone light and playing music from my phone. With even more projectors in the space, I imagine the user would become even more engaged with the system.

Isadora Patch: