Pressure Project 1 – The best intentions…

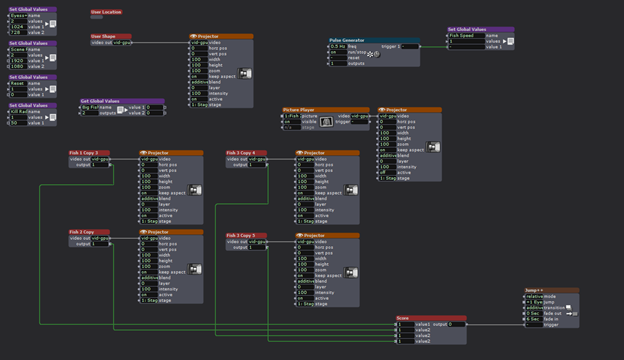

Posted: September 21, 2025 | Filed under: Uncategorized | Tags: PP1

I would like to take a moment to marvel that a product like Isadora exists. That we can download some software and, within a few hours, create something involving motion capture and video manipulation is simply mind-blowing. However, I learned that Isadora makes it very easy to play with toys without fully understanding them.

The Idea

When we were introduced to the Motion Lab, we connected our computers to the “magic USB” and were able to access the room’s cameras, projectors, and so on. I picked a camera to test and happened to choose what turned out to be the ceiling-mounted unit. I’m not sure where the inspiration came from, but I decided right then that I wanted to use that camera to make a Pac-Man-like game in which the user would capture ghosts by walking around on a projected game board.

The idea evolved into what I was internally calling the “Fish Chomp” game. The user would take on the role of an anglerfish (the one with the light bulb hanging in front of it). The user would hold a light that, if red, would cause the projected fish to flee, or, if blue, would draw the fish closer. With a red light the user could “chomp” a fish by running into it. When all the fish were gone, a much bigger fish would appear that ignored the user’s light and always chased the user, trying to chomp them. Once the user was chomped, the game would reset.
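Neither the flee/approach behavior nor the Big Fish chase made it into the final project, but the movement rule behind both is simple to state. The Python below is a hypothetical model, not Isadora code: `steer`, `fish_mode`, and the per-frame `speed` are illustrative names, and positions are assumed to be in projector pixels. Each frame, a fish moves a fixed distance along the line to the user, toward the user when seeking or away when fleeing.

```python
import math

def steer(fish, target, speed, mode):
    """Move `fish` one frame toward ("seek") or away from ("flee") `target`.

    fish, target: (x, y) positions, assumed to be in projector pixels.
    speed: hypothetical distance moved per frame, in pixels.
    """
    if mode not in ("seek", "flee"):
        raise ValueError("mode must be 'seek' or 'flee'")
    dx = target[0] - fish[0]
    dy = target[1] - fish[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return fish  # on top of the target: no direction to move in
    if mode == "seek":
        step = min(speed, dist)  # don't overshoot the target
        sign = 1.0
    else:
        step = speed
        sign = -1.0
    # Unit vector toward the target, scaled by the step (negated to flee)
    return (fish[0] + sign * dx / dist * step,
            fish[1] + sign * dy / dist * step)

def fish_mode(light_color):
    """Per the design: a red light scares fish away, a blue light draws them in."""
    if light_color == "red":
        return "flee"
    if light_color == "blue":
        return "seek"
    raise ValueError("unknown light color: " + repr(light_color))

# Usage: the Big Fish ignores the light and always seeks the user.
big_fish = (0.0, 0.0)
user = (300.0, 400.0)
for _ in range(3):
    big_fish = steer(big_fish, user, 100.0, "seek")
```

Capping the step at the remaining distance when seeking keeps a chasing fish from overshooting and jittering back and forth around the user.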

How to eat a big fish? One bite at a time.

To turn my idea into reality, I needed to identify the key components that would make the program work. Isadora had to identify the user and track their location, generate objects the user could interact with, process collisions between the user and those objects, and handle what happens when all the objects have been chomped.

User Tracking:

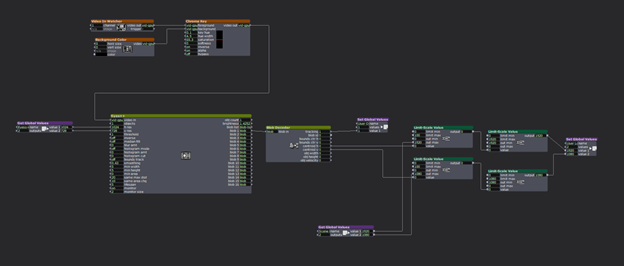

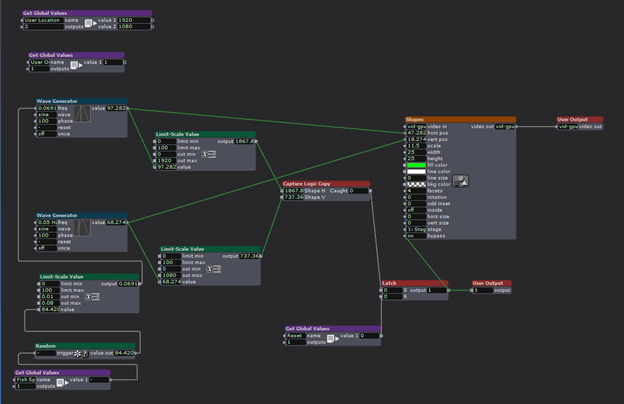

The user’s location was found by passing a camera input through a Chroma Key actor. By removing everything else from the image, I intended to make it easier for the Eyes++ actor to identify the user; the hope was that the chroma key would reliably isolate the red light held by the user. The filtered video was then passed to the Eyes++ actor and its associated Blob Decoder. Together, these actors produced the XY location of the user. That location was processed by Limit-Scale actors to convert the blob output to match the projector resolution. Because the projector resolution determined how every object in the game interacted, this value was stored as a Global Value that all actors could reference. Likewise, the user’s location was passed to other actors via Global Values.
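The Limit-Scale step amounts to clamping a value to an input range and mapping it linearly onto an output range. The Python below is a minimal sketch of that arithmetic, not Isadora’s implementation. It assumes the blob tracker reports each axis on a 0 to 100 scale and uses a hypothetical 1920×1080 projector; `limit_scale`, `blob_to_projector`, and the `GLOBALS` dict (standing in for Isadora’s Global Values) are illustrative names.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp `value` to the input range, then map it linearly onto [out_min, out_max]."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    lo, hi = min(in_min, in_max), max(in_min, in_max)
    clamped = min(max(value, lo), hi)
    return out_min + (clamped - in_min) * (out_max - out_min) / (in_max - in_min)

# Hypothetical stand-in for the Global Values shared between actors.
GLOBALS = {"proj_width": 1920, "proj_height": 1080}

def blob_to_projector(blob_x, blob_y, blob_range=(0.0, 100.0)):
    """Convert a blob position (assumed 0-100 on each axis) to projector pixels."""
    x = limit_scale(blob_x, blob_range[0], blob_range[1], 0, GLOBALS["proj_width"])
    y = limit_scale(blob_y, blob_range[0], blob_range[1], 0, GLOBALS["proj_height"])
    return x, y

# Usage: a blob in the middle of the frame lands in the middle of the projection,
# and readings outside the expected range are clamped to the screen edge.
center = blob_to_projector(50, 50)
edge = blob_to_projector(120, -5)
```

Swapping `out_min` and `out_max` (for example `limit_scale(v, 0, 100, 1080, 0)`) flips an axis, which can help if the camera’s Y direction runs opposite to the projector’s.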

Fish Generation:

The fish used simple Shape actors, with the intention of replacing them with images of fish later (never realized). Each fish actor used Wave Generators to move the XY position of its shape, with either the X or the Y generator fed a random number that periodically changed the fish’s speed.
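A Python model of one such fish might look like the sketch below. It is an illustration under stated assumptions, not the actual actor patch: `WaveFish`, the starting frequencies, the 0.05 to 0.5 Hz random range, and the two-second change interval are all hypothetical. Two sine waves drive X and Y, and one chosen axis periodically gets a new random frequency.

```python
import math
import random

class WaveFish:
    """Hypothetical model of one fish whose X and Y come from two sine-wave generators."""

    def __init__(self, width, height, random_axis="x", seed=None):
        if random_axis not in ("x", "y"):
            raise ValueError("random_axis must be 'x' or 'y'")
        self.width, self.height = width, height
        self.random_axis = random_axis  # which generator gets random speed changes
        self.rng = random.Random(seed)
        self.freq_x, self.freq_y = 0.10, 0.13  # cycles per second (hypothetical)
        self.phase_x, self.phase_y = 0.0, 0.25  # start the Y wave a quarter cycle ahead

    def randomize_speed(self, low=0.05, high=0.5):
        """Feed a new random frequency into the chosen wave generator."""
        new_freq = self.rng.uniform(low, high)
        if self.random_axis == "x":
            self.freq_x = new_freq
        else:
            self.freq_y = new_freq

    def step(self, dt):
        """Advance both waves by dt seconds and return the fish's (x, y) in pixels."""
        # Accumulating phase (instead of computing sin(2*pi*f*t)) keeps the fish
        # from jumping to a new spot when its frequency changes.
        self.phase_x = (self.phase_x + self.freq_x * dt) % 1.0
        self.phase_y = (self.phase_y + self.freq_y * dt) % 1.0
        # Map each sine wave from [-1, 1] onto [0, width] or [0, height]
        x = (math.sin(2 * math.pi * self.phase_x) + 1) / 2 * self.width
        y = (math.sin(2 * math.pi * self.phase_y) + 1) / 2 * self.height
        return x, y

# Usage: 30 frames per second, with a new random speed every 2 seconds.
fish = WaveFish(1920, 1080, random_axis="x", seed=1)
path = []
for frame in range(300):
    if frame % 60 == 0:
        fish.randomize_speed()
    path.append(fish.step(1 / 30))
```

Because the two axes run at different frequencies, the fish traces a wandering Lissajous-like path rather than a straight line, and the random frequency changes keep that path from repeating exactly.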

Chomped?

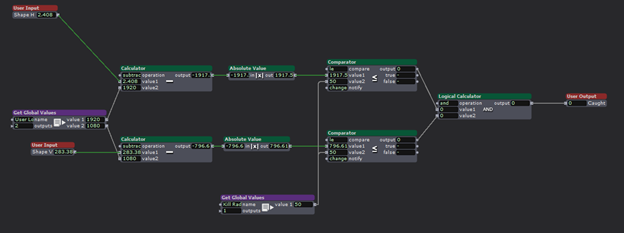

Each fish actor contained a user actor that processed collisions with the user. It received the user location and the shape position, subtracted one from the other, and compared the absolute value (ABS) of the result to a “kill radius.” Requiring an exact pixel match would have made it far too difficult to chomp a fish, so a Comparator checked the difference in location against an adjustable radius received from a Global Value. When the user and a fish were “close enough” together, as set by the kill radius, the actor output TRUE, indicating a successful collision. A successful chomp caused the Shape actor to stop projecting the fish.

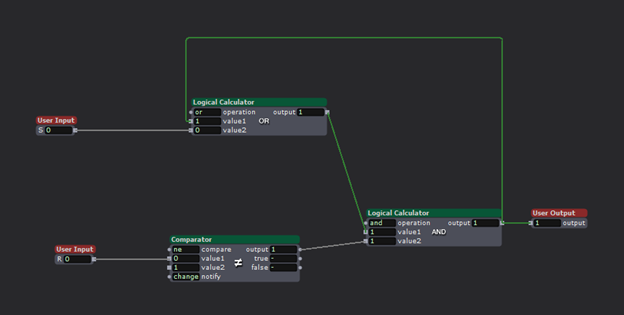
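The subtract, ABS, and compare chain can be written out in a few lines of Python. This sketch assumes the comparison is done separately on X and Y and that “within the radius” means less than or equal; `is_chomped` and `is_chomped_circular` are hypothetical names. A per-axis test gives a square hit area, so a circular (Euclidean) version is included only as an alternative for comparison, not as what the project used.

```python
import math

def is_chomped(user, fish, kill_radius):
    """Per-axis test: |dx| and |dy| must both be within the kill radius (square hit area)."""
    dx = abs(user[0] - fish[0])
    dy = abs(user[1] - fish[1])
    return dx <= kill_radius and dy <= kill_radius

def is_chomped_circular(user, fish, kill_radius):
    """Alternative, not the project's method: Euclidean distance (circular hit area)."""
    return math.hypot(user[0] - fish[0], user[1] - fish[1]) <= kill_radius

# Usage: near the corners of the square, the two tests disagree.
diagonal_box = is_chomped((100, 100), (115, 115), 20)
diagonal_circle = is_chomped_circular((100, 100), (115, 115), 20)
```

Here the fish is 15 pixels away on each axis, which passes the per-axis test but is about 21.2 pixels away in a straight line, so the circular test rejects it. With a generous kill radius the difference is rarely noticeable in play.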

Keeping the fish dead:

The user and the fish occupied the same space only briefly, so the shape reappeared as soon as their locations diverged again. To keep the fish from coming back to life, each fish needed a memory of having been chomped. To accomplish this, logic actors were used to construct an SR AND-OR latch. (See https://en.wikipedia.org/wiki/Flip-flop_(electronics) for more on how latches work.) When triggered at its ‘S’ (set) input, the latch’s output goes HIGH and, critically, stays HIGH even after the ‘S’ input goes LOW again; only a signal at the ‘R’ (reset) input clears it. When the collision detection actor recognized a chomp, it triggered the latch, killing the fish for good.
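One common way to wire an AND-OR latch is an OR gate that combines S with the latch’s own previous output, feeding an AND gate whose other input is the inverted R. The Python below models that feedback loop frame by frame; `AndOrLatch` and `update` are illustrative names, not Isadora actors.

```python
class AndOrLatch:
    """SR latch built as an OR gate feeding an AND gate whose R input is inverted."""

    def __init__(self):
        self.q = False  # output starts LOW: fish alive

    def update(self, s, r=False):
        # OR gate: the set input, or the latch's own previous output fed back
        # AND gate with inverted R: a HIGH reset forces the output LOW
        self.q = (s or self.q) and not r
        return self.q

# A collision that lasts only two frames still leaves the fish dead.
latch = AndOrLatch()
collisions = [False, True, True, False, False]
outputs = [latch.update(hit) for hit in collisions]
```

In this wiring, if S and R are both HIGH at once the output goes LOW, so the reset wins. That is convenient for resetting the game even while the user is standing on a fish.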

All the fish in a bowl:

The experience consisted of the user and four fish actors. For testing purposes, the user’s location could be projected as a red circle. Each fish actor projected its shape until chomped. When all four fish actors’ latches indicated that every fish was gone, a 4-input AND gate triggered a scene change.
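Putting the pieces together, the Python sketch below models one frame of the fish bowl: collision tests feed four latches, and an AND over the latch outputs fires the scene change. It is a simplified, hypothetical model (static fish positions, a per-axis kill radius, and a `scene_changed` flag standing in for Isadora’s scene change); `Latch`, `close_enough`, and `FishBowl` are illustrative names.

```python
class Latch:
    """SR AND-OR latch: output = (S or previous output) and not R."""

    def __init__(self):
        self.q = False

    def update(self, s, r=False):
        self.q = (s or self.q) and not r
        return self.q

def close_enough(a, b, radius):
    """Per-axis kill-radius test between two (x, y) points."""
    return abs(a[0] - b[0]) <= radius and abs(a[1] - b[1]) <= radius

class FishBowl:
    """Hypothetical model of the user, four fish, and the 4-input AND gate."""

    def __init__(self, fish_positions, kill_radius):
        self.fish = list(fish_positions)
        self.latches = [Latch() for _ in self.fish]
        self.kill_radius = kill_radius
        self.scene_changed = False  # stand-in for Isadora's scene change

    def update(self, user_pos):
        """Process one frame; return True only on the frame the scene change fires."""
        for pos, latch in zip(self.fish, self.latches):
            latch.update(close_enough(user_pos, pos, self.kill_radius))
        all_gone = all(latch.q for latch in self.latches)  # the AND gate
        if all_gone and not self.scene_changed:
            self.scene_changed = True
            return True
        return False

    def visible_fish(self):
        """Fish whose latch is still LOW are still projected."""
        return [pos for pos, latch in zip(self.fish, self.latches) if not latch.q]

# Usage: the user wanders between the four fish, chomping each in turn.
bowl = FishBowl([(100, 100), (500, 100), (100, 400), (500, 400)], kill_radius=30)
walk = [(300, 250), (105, 98), (300, 250), (498, 102), (110, 390),
        (300, 250), (495, 405), (300, 250)]
fired = [bowl.update(p) for p in walk]
```

Returning True only on the first frame where the AND gate goes HIGH treats the scene change as a one-shot trigger, so later frames with all latches still HIGH do not fire it again.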

We need a bigger fish!

When all the fish were chomped, the scene would change. First, an ominous pair of giant eyes would appear, followed by the eyes turning angry with the addition of some fangs.

The intention was for the user to go from being the chomper to being the chomped! A new fish would appear that would chase the user until a collision occurred. Once this occurred, the scene would change again to a game over screen.

The magic wand:

To give the user something to interact with, and to give the Eyes++ actor something to track, a flashlight was modified with a piece of red gel and a plastic bubble to make a glowing ball of light.

My fish got fried.

The presentation did not go as intended. First, I forgot that the Motion Lab ceiling webcam was an NDI input, not a simple USB connection like my test setup at home. I decided to forgo the ceiling camera and demo the project on the lab’s main screen, using my personal webcam as the input. This meant that I had to demo the game myself instead of handing the wand to a classmate as intended. That was for the best, as the system was very unreliable: the fish worked as intended, but the user location system was too inconsistent to provide a smooth experience.

It took a while, but eventually I managed to chomp all the fish. The logic worked as intended, but the scene change to the Big Fish eyes ignored all of the timing I had built into the transition. Instead of taking several seconds to show the eyes, it jumped straight to the game over scene. Why this happened remains a mystery, since the scenes transitioned correctly on a second attempt.

Fish bones

In spite of my egregious interpretation of what counted as “5 hours” of project work, I left many ambitions unrealized. Getting the Big Fish to chase the user, using images of fish instead of shapes, making the fish swim away from or toward the user, and adding sound effects were all discarded like the bones of a fish. I simply ran out of time.

Although the final presentation was a shell of what I intended, I learned a lot about Isadora and what it is capable of doing and consider the project an overall success.

Fishing for compliments.

My classmates had excellent feedback after witnessing my creation. What surprised me most was how my project ended up as a piece of performance art. Because of the interactive nature of the project, I became part of the show! In particular, my anxiousness over the presentation not going as planned played as much a part of the show as Isadora did. Much of the feedback was very positive, with praise for the concept, the simple visuals, and the use of the flashlight to connect the user to the simulation in a tangible way. I am grateful for the positive reception from the class.