Cycle 3 “Lighting Dance Challenge”

Posted: December 13, 2019

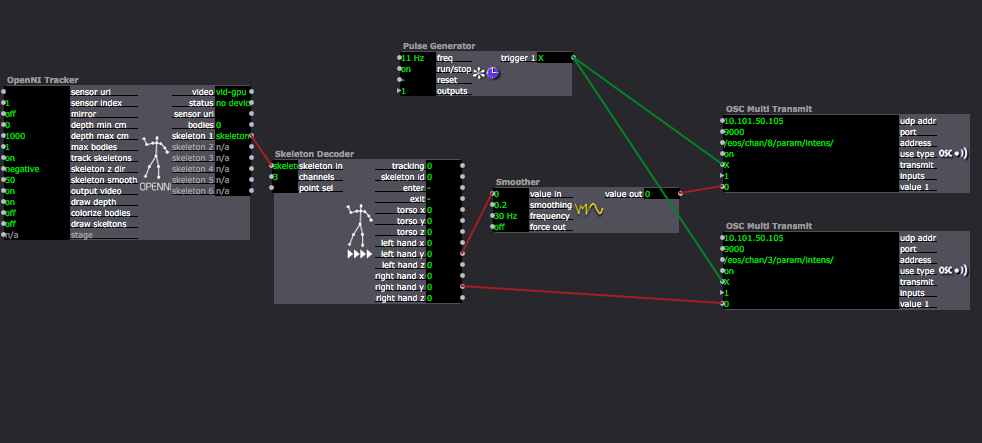

Since the last showing, I changed a lot so that I could work with the “OpenNI” actor. It helped me design various simple movements that the Kinect can recognize while still reading as a “dance composition” on stage. In addition, the “OSC Multi Transmit” actor gave me the chance to connect the body data directly to the lighting intensity and color. Instead of only triggering the cues I had programmed into the lighting board, this opens up more possibilities for the idea of letting an audience member control a single light directly.
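Connecting body data directly to intensity boils down to rescaling one tracked value into the light’s level range. The Python sketch below is not Isadora code; it assumes the arm height arrives as a single number, that the console expects a 0 to 100 percent level, and that the calibration values in the usage line are hypothetical.

```python
def scale_to_intensity(value, in_min, in_max, out_min=0.0, out_max=100.0):
    """Linearly map a tracked body value (e.g. hand height) onto a light level.

    Assumption: in_min/in_max are calibration readings for one performer
    (arm down / arm fully raised); out_min/out_max are the console's
    intensity range, assumed here to be 0 to 100 percent.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    # Clamp so tracking noise outside the calibrated range cannot overdrive the light.
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


# Hypothetical calibration: arm down reads 0.2, arm fully raised reads 0.9.
level = scale_to_intensity(0.55, in_min=0.2, in_max=0.9)
print(round(level, 1))  # 50.0
```

Swapping `out_min` and `out_max` inverts the relationship, so lowering the arms would brighten the light instead of dimming it.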

So this is the structure I finally settled on for my final showing:

Introduction part:

People: myself

Position: Red Spot

Movement: raise/lower arms (at varying speeds).

Isadora: the Kinect reads the arm position data, which is connected to the lighting intensity.

Audience Participation Part:

People: three audience members

Position: one person each on the “red spot”, the “yellow spot” and the “blue spot”

Movement: red: raise/lower arms (at a tempo I set).

yellow: kneel down and stand up (at a tempo I set).

blue: jump into a marked area and leave it (at a tempo I set).

Isadora: red: arm position data

yellow: torso position data

blue: body brightness data

When all the audience members on stage do the right movement at the right tempo, they successfully trigger the lights at different tempos, creating a dance piece out of all these movements and lighting changes. If they all do it right, I step in front of Kinect sensor 2 to push the intensity up, which triggers strobing lights and a sudden silence. It looks as if something has gone wrong, but after 5 seconds an automatic lighting dance is triggered, which means everyone did a good job; it is the symbol of success.

In the final show, however, the Kinect did not work very well, so my design was not fully shown. The introduction part went really well, though.

Thank you to Alex and Oded, who really helped me through every technical and artistic problem I had. This project kept changing until the end, and without everyone’s help I could not have finished it! It was a really great course!

Cycle 2 “Tech Rehearsal”

Posted: December 13, 2019

In my cycle 2 showing, I took away the fun part from cycle 1, where audience members danced to the music on stage and reacted to the lighting. In this cycle, I tried a more serious run in which people became my crew members and helped me trigger the lighting with their movement.

In this run, I called them “helpers” instead of “lighting designers,” which makes more sense to me. Still, I don’t think audience members should have only one title when they join my project. They can be performers moving on the stage while also being crew members who trigger the lighting with their movement tasks. I think I should really blur their titles instead of giving them a fixed position that contains various jobs.

Also, with Alex’s help, I added an OpenNI actor so that the Kinect can recognize people’s body shape and see where their arms, legs and torso are moving. Using this, I designed a movement of clapping overhead: when the Kinect sees both hands reach the same height, it triggers the cue. This really helped me develop more movements for audience members, rather than just having them appear or not appear in the area the Kinect can see.
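The overhead clap test above can be sketched as a simple comparison of skeleton joints. This is a hedged illustration in Python, not the actual Isadora patch: it assumes the sensor reports hand and head joints with a y value that grows upward, adds an “above the head” check to capture the overhead part, and uses a hypothetical tolerance, because two noisy hand readings will almost never be exactly equal.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    x: float
    y: float  # assumption: y grows upward, as in a camera-space skeleton


def is_overhead_clap(left_hand: Joint, right_hand: Joint, head: Joint,
                     height_tolerance: float = 0.05) -> bool:
    """True when both hands are above the head and at (nearly) the same height.

    height_tolerance is a hypothetical value in the same units as the joints.
    """
    both_above_head = left_hand.y > head.y and right_hand.y > head.y
    same_height = abs(left_hand.y - right_hand.y) <= height_tolerance
    return both_above_head and same_height


head = Joint(0.0, 1.60)
print(is_overhead_clap(Joint(-0.05, 1.85), Joint(0.05, 1.87), head))  # True
print(is_overhead_clap(Joint(-0.30, 1.85), Joint(0.30, 1.30), head))  # False
```

Widening the tolerance makes the cue easier for audience members to hit, at the cost of more accidental triggers.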

I will develop more movements after this cycle and really blur the audience members’ roles so that they are lighting helpers and performers at the same time.

Cycle 1: Collaborative Performance

Posted: November 6, 2019

Starting from my initial idea of building a “supportive system”, I set up my cycle 1 as a collaborative performance with the help of the audience. I divided people into three groups: performers, lighting helpers, and audience members. The performers are free to dance in the center stage space. The lighting helpers help me trigger the lighting cues to keep the dance going.

The lighting helpers actually have the heavier mission. I have a Kinect capture the depth data of the lighting helpers. They run and then land on a line I marked on the floor beforehand, so that they appear in the depth sensor’s capture area and produce a brightness value. When the brightness value is bigger than a certain number, it triggers my lighting cue. Each lighting cue fades to dark after a certain amount of time, so my helpers have to keep repeating the “run and land” action to keep the performance space bright enough for the audience to see the dance.
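The threshold-and-fade behaviour described above can be modelled as a small state machine. This Python sketch is an assumption-laden illustration, not the Isadora patch: the threshold and hold time are hypothetical, and firing only on the rising edge (the moment brightness first crosses the threshold) is a design choice added here so that a helper who simply stays on the line cannot keep the light up forever.

```python
from typing import Optional


class BrightnessCue:
    """Hypothetical model of one helper's cue: a landing fires it, and it fades after a hold time."""

    def __init__(self, threshold: float, hold_seconds: float) -> None:
        self.threshold = threshold        # assumed brightness that counts as "landed on the line"
        self.hold_seconds = hold_seconds  # assumed time before the cue fades to dark
        self.was_above = False
        self.lit_until: Optional[float] = None

    def update(self, brightness: float, now: float) -> bool:
        """Feed one brightness sample taken at `now` seconds; return whether the light is on."""
        is_above = brightness > self.threshold
        # Fire only on the rising edge; each new landing restarts the hold timer.
        if is_above and not self.was_above:
            self.lit_until = now + self.hold_seconds
        self.was_above = is_above
        return self.lit_until is not None and now < self.lit_until


cue = BrightnessCue(threshold=50, hold_seconds=10)
for t, b in [(0, 5), (1, 80), (6, 80), (12, 80), (13, 5), (14, 80)]:
    print(t, cue.update(b, t))
```

In this run the light comes on at t = 1, goes dark at t = 12 even though the helper is still standing on the line, and only comes back when the helper leaves and lands again at t = 14.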

I also have a lighting cue that uses five different lights shining one after another. It works as a countdown system: it tells the lighting helpers to get ready and to run once the cue is done.

I want to use human body movement to trigger the lighting instead of pressing the “Go” button on the lighting board, so that audience members can be involved in the process and interact with it. However, I found that the performers seem to become less and less important in my project, since I want the audience members to help with the lighting and really get a sense of how lighting works in performance. I want to build a space where people can physically move and contribute to the lighting system. So I think I want to develop my project into a “tech rehearsal”: I will be the stage manager calling the cues, and my audience members will be my crew, working together to run the lighting board.

PP3

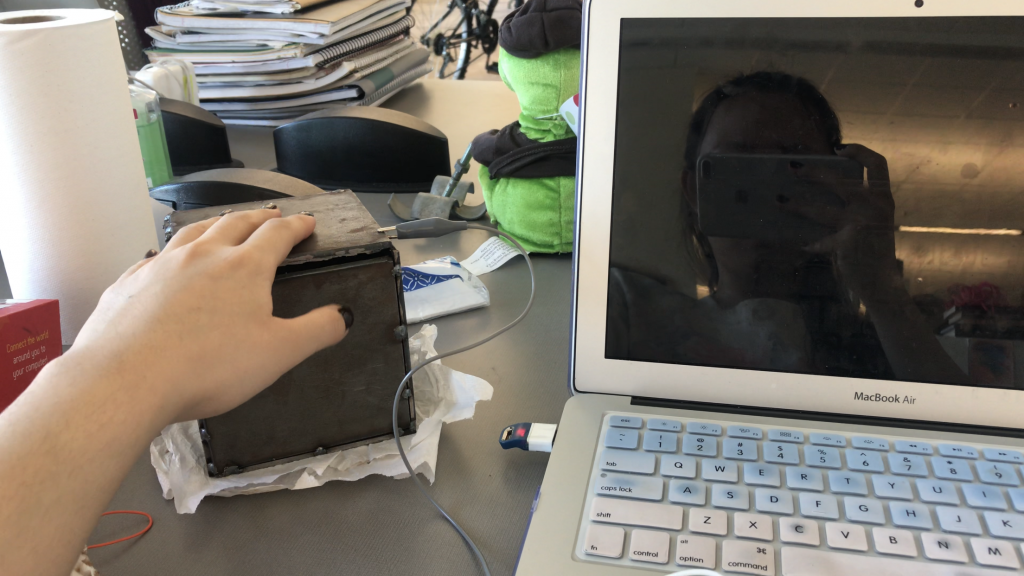

Posted: October 29, 2019

Pressure project 3 is about revealing a mystery through interaction with audience member(s). I thought for a long time about what mystery I wanted to reveal but came up with nothing. Then I saw the metal box from my welding exercise in my sculpture class, which has a big gap I failed to fill. I thought this gap could really mean something, since people can never open the box and can never see the inside clearly just by looking through the gap. The metal box can also be connected to a Makey Makey and become a button that triggers the system. So I decided to make a “Mystery Box”.

This is how I connected the box to my computer: as soon as people touch the box, they can see what is “actually” happening inside it, which is an inner world I faked in Isadora and conveyed through the computer interface.

At the very beginning, I didn’t plan to give this project a deeper meaning. But while I was creating it, I saw news that a Korean pop artist had died by suicide at her home. She had endured horrible online abuse before she died, could not bear it anymore, and chose to leave the world. I was shocked by the news, since I knew her pretty well and she was only 25 years old. So I decided to add a little educational purpose: to let people feel what it is like to be judged harshly for no reason, and what we can do when we are in that situation.

I used live capture so that users can see their faces on the screen while hurtful words keep flying in front of them. They can escape this scene by saying no. But I turned down the captured volume, so users really need to shout “no” to reach the highest level and escape. I wanted users to get angry and really say no to the violence.
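The “turned down volume” trick above is essentially an attenuation factor in front of a fixed escape threshold. A minimal Python sketch, assuming a raw microphone level, a hypothetical gain of 0.3 and an escape point at the top of a 0 to 100 scale (none of these numbers come from the original patch):

```python
def escape_level(raw_level: float, gain: float = 0.3) -> float:
    """Attenuate the microphone level; gain < 1 models the turned-down capture volume (hypothetical)."""
    return raw_level * gain


def can_escape(raw_level: float, gain: float = 0.3, escape_at: float = 100.0) -> bool:
    """True only when the attenuated level reaches the top of the scale."""
    return escape_level(raw_level, gain) >= escape_at


print(can_escape(200.0))  # a normal speaking voice (hypothetical reading): False
print(can_escape(400.0))  # shouting: True
```

Lowering the gain raises how loud users must shout without changing where the escape threshold sits.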

It was a really great experience to see my classmates trying together to raise the volume. However, in one scene where I had 3 projectors showing the live images on the screen, I typed the word “Ugly” but changed the font, so the word turned into other characters I don’t recognize. I should be careful about this, since I don’t know what those characters mean, and they could affect people’s experience if they do.

PP2 Storytelling

Posted: September 27, 2019

In pressure project 2, we had 5 hours to explore how to tell a story with cultural significance.

Thinking about cultural significance, I decided to tell an ancient Chinese story: the love story between Xuanzong, the seventh emperor of the Tang dynasty, and Yang Guifei. Their love story has been recorded many times throughout history, and I was really drawn to one movie that re-examines their love. How much can a man do when he holds extreme power, and what can a woman do beside such a man?

I found poetry and a lot of documentation online about their love story and Yang’s death, and I summarized it and translated it into English myself.

Thinking about telling a story through mass media, I tried to investigate what media editing can achieve that a human voice alone never could. So I decided to record my story sentence by sentence, so that I could overlap, repeat and manipulate the sentences.

I used Isadora for this audio editing. I used the “Timed Trigger” actor to count time so that each sentence plays at a specific moment. This could also be done in many audio programs, but I just wanted to try it with Isadora.
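Scheduling each sentence at a fixed time is what makes overlapping and repetition possible: every recording gets a start time, and any clips whose windows intersect play together. The Python sketch below models that idea outside Isadora; the clip names and timings are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Clip:
    name: str        # hypothetical label for one recorded sentence
    start: float     # seconds after the piece begins, i.e. when its timed trigger fires
    duration: float  # length of the recording in seconds


def active_clips(clips: List[Clip], t: float) -> List[str]:
    """Names of the sentences sounding at time t; overlapping windows layer the voices."""
    return [c.name for c in clips if c.start <= t < c.start + c.duration]


score = [
    Clip("sentence_1", 0.0, 4.0),
    Clip("sentence_2", 3.0, 4.0),         # starts before sentence_1 ends, so the two overlap
    Clip("sentence_1_repeat", 6.0, 4.0),  # the same recording triggered again later
]
print(active_clips(score, 3.5))  # ['sentence_1', 'sentence_2']
print(active_clips(score, 6.5))  # ['sentence_2', 'sentence_1_repeat']
```

Repeating a sentence is just another entry with the same recording and a later start time.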

During the presentation, I felt really satisfied with everyone’s feedback, and I think they got all the points I wanted to express: something about women being unable to hold power in ancient China, and something about how a man cannot do everything he wants even when he holds extreme power. I felt great that I could express these ideas only through audio files and my manipulation of them. I realized that sound can really contain, express or even change a lot of information.

Pressure Project 1

Posted: September 12, 2019

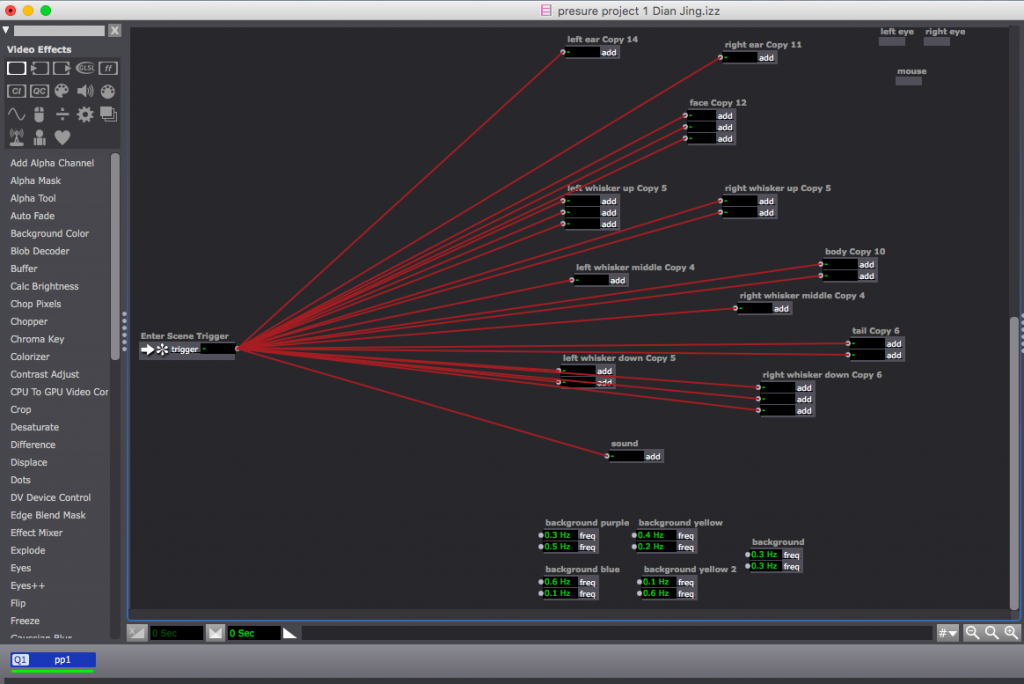

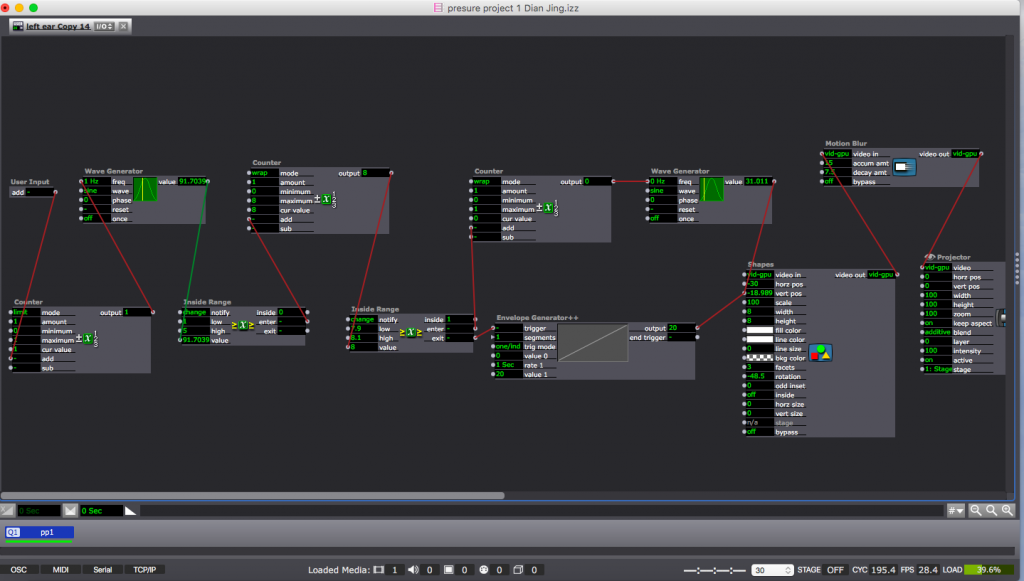

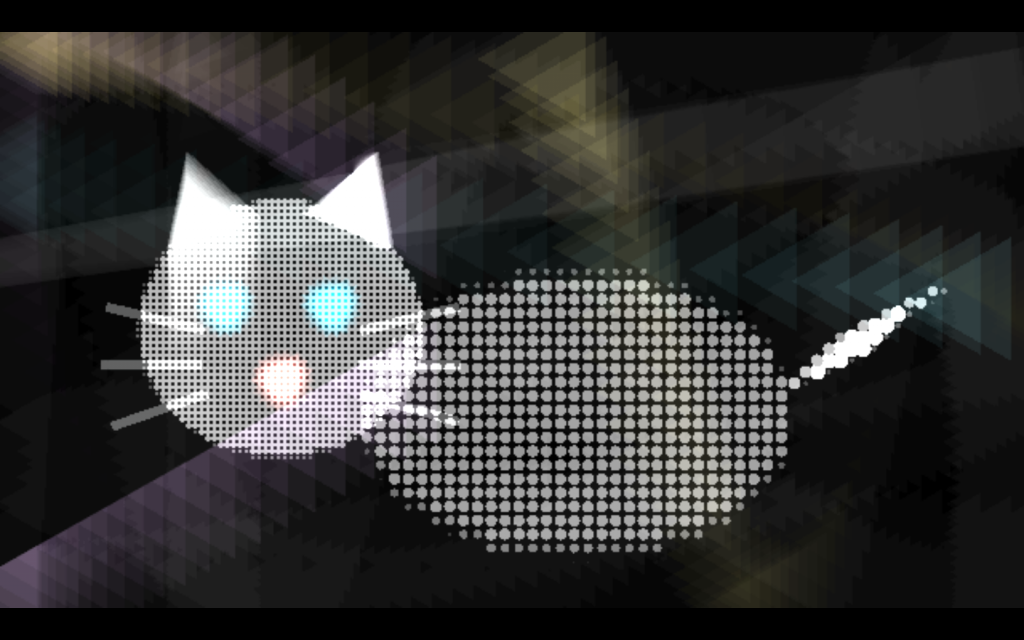

In this first pressure project, we had 4 hours to investigate a self-generating patch. With a deep interest in the “Shape” actor, I decided to use only shapes for my visual design.

When thinking about how to make people laugh and how to hold audience members’ attention, I tried to build a recognizable image after a period of disorientation. I made a cat out of a series of shapes, each imitating a body part, and at the very beginning I tried to keep them disorienting so that the audience could not figure out what the moving patterns were. The shapes imitating the cat’s body parts move together with some background patterns. Then I settled those body parts and assembled them into a real cat image, so that the audience could finally figure out what was happening on the screen. Also, while all the patterns were moving along their own paths, I added some meowing sounds from my cat to build the audience’s curiosity.

I put each body part into its own User Actor so that I could design each body part’s movement path. In each User Actor, I chained “Inside Range”, “Count” and “Inside Range” together to count out an amount of time for the body parts to move. At the end of that time, the final “Inside Range” sends a trigger to the “Envelope Generator ++”, which makes the body parts settle down and stay still for several seconds; that is when the audience can figure out the cat image.
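As a rough sketch of that timing logic outside Isadora: the “Inside Range”, “Count”, “Inside Range” chain is collapsed into a single elapsed-time check, and the “Envelope Generator ++” is approximated by a linear ramp to a rest position. The linear curve, the function names and the example path are assumptions for illustration only, not the behaviour of the actual actors.

```python
from typing import Callable


def linear_envelope(start: float, target: float, elapsed: float, duration: float) -> float:
    """Ramp from start to target over `duration` seconds, then hold at target."""
    if duration <= 0:
        return target
    progress = min(max(elapsed / duration, 0.0), 1.0)
    return start + (target - start) * progress


def body_part_x(t: float, wander_time: float, settle_time: float,
                wander_x: Callable[[float], float], rest_x: float) -> float:
    """x position of one cat body part at time t (seconds)."""
    if t < wander_time:
        # Counting phase: the part moves freely along its own path.
        return wander_x(t)
    # The count has run out: settle from wherever the part was when the trigger fired.
    settle_from = wander_x(wander_time)
    return linear_envelope(settle_from, rest_x, t - wander_time, settle_time)


def drift(t: float) -> float:
    return 10.0 * t  # hypothetical wandering path: drift right at 10 units per second


for t in (3.0, 6.0, 8.0):
    print(t, body_part_x(t, wander_time=5.0, settle_time=2.0, wander_x=drift, rest_x=20.0))
```

Giving each body part its own `wander_time` and `rest_x`, much like giving each User Actor its own settings, lets the parts arrive one by one instead of snapping into the cat all at once.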

My purpose was to limit myself to the “Shape” actor for the visual design and to let audience members actively figure something out of the disorientation. But the surprise could not be sustained once the audience figured out what it was. I got feedback suggesting that I could make the moving patterns keep forming other recognizable images. I feel really excited about that feedback and want to investigate it further.