Cycle III – Bar: Mapped by Alec Reynolds

Posted: May 2, 2022 Filed under: Uncategorized

The Experience

Cycle III was the final presentation stage of the project. Bar: Mapped demonstrates an elevated nightclub bar experience that would be regarded as a special attraction at a venue. The purpose of this project was to demonstrate a pragmatic and engaging use case for projection mapping. Below, I will walk you through the intention behind the Isadora side of the experience:

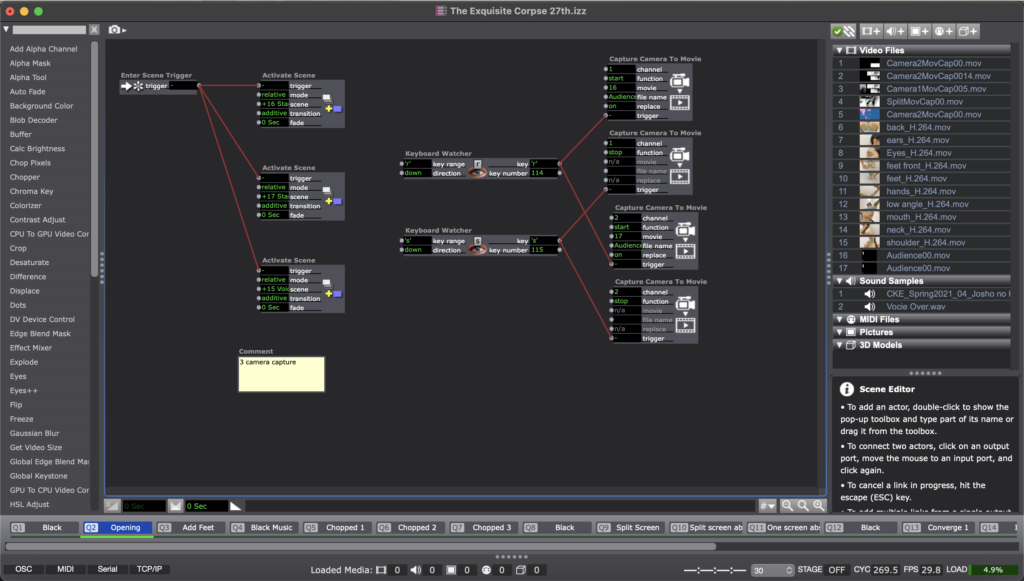

- A patron orders a drink from the themed menu. Once the drink is prepared, the bartender presses that drink’s button on the iPad kiosk, which triggers the corresponding scene in the bar area.

- The iPad communicates through TouchOSC, which sends Open Sound Control (OSC) messages over a network shared by the iPad and the computer running Isadora.

- When the Isadora patch receives input from the iPad, the TouchOSC actor sends a trigger to a jump scene actor. The patch contains the Main bar scene plus one scene for each themed cocktail.

- Once the desired scene is active, four main pieces of media play:

- A large video is mapped to the screen

- A smaller video is mapped to the box that the bottles rest on

- The smallest video is mapped to four of the five liquor bottles. The fifth bottle is mapped with an image of itself, to highlight the main ingredient in that specific cocktail

- A sound effect that complements the theme plays

- After the large video reaches the end of its loop, the movie player actor triggers a scene jump back to the Main bar scene and a new drink can be ordered.
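The trigger flow in the list above can be sketched outside Isadora as a small state machine. This is a minimal Python illustration, not the actual patch: it decodes a raw OSC message (an address string and a type-tag string, each null-terminated and padded to 4 bytes, followed by big-endian int32/float32 arguments), switches to a cocktail scene on a button press, and returns to the Main bar scene when the movie loop ends. The addresses `/bar/drink1` and so on, the scene names, and the assumption that a button sends 1.0 on press and 0.0 on release (as TouchOSC push buttons typically do) are hypothetical stand-ins for the real TouchOSC layout.

```python
import struct

MAIN_SCENE = "Main Bar"
# Hypothetical OSC addresses, one per themed cocktail (list shortened here);
# the real TouchOSC layout is not documented in this post.
COCKTAIL_SCENES = {
    "/bar/drink1": "Cocktail 1",
    "/bar/drink2": "Cocktail 2",
    "/bar/drink3": "Cocktail 3",
}


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def build_osc_message(address: str, value: float) -> bytes:
    """Encode a single-float OSC message (handy for testing the parser)."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def _read_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a padded OSC string; return it and the offset just past the padding."""
    end = data.index(b"\x00", offset)
    return data[offset:end].decode("ascii"), (end + 4) & ~3


def parse_osc_message(data: bytes) -> tuple[str, list]:
    """Decode an OSC message whose arguments are int32 ('i') or float32 ('f')."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type-tag string")
    args = []
    for tag in tags[1:]:
        if tag not in "if":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        args.append(struct.unpack_from(">" + tag, data, offset)[0])
        offset += 4
    return address, args


class BarSceneController:
    """Tracks which scene should currently be showing."""

    def __init__(self) -> None:
        self.current = MAIN_SCENE

    def on_osc(self, data: bytes) -> str:
        address, args = parse_osc_message(data)
        # React to presses (value > 0) and ignore the release message.
        if address in COCKTAIL_SCENES and args and args[0] > 0:
            self.current = COCKTAIL_SCENES[address]
        return self.current

    def on_movie_loop_end(self) -> str:
        # Mirrors the Movie Player's end-of-loop trigger: back to the Main bar.
        self.current = MAIN_SCENE
        return self.current
```

In a live setup the bytes would arrive over UDP (for example from `recvfrom` on a `socket.SOCK_DGRAM` socket), and each scene name would correspond to a jump target in the patch.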

Lessons Learned

Isadora is extremely sensitive, temperamental software. For my Cycle III performance, I unfortunately was not able to present my work as I intended. Due to what I believe was a performance issue caused by the wide variation in resolution and codec across my media, Isadora reliably crashed every time I tried to open a stage in full-screen mode or map any bottle. To salvage the performance, I loosely mapped the Main bar scene bottles and fit my small stage to full screen. TouchOSC still worked and demonstrated the triggering well, but nothing else was mapped.

Beyond the complications with Isadora, I gained a fundamental appreciation for how much skill projection mapping takes. Manipulating angled projector light onto a physical asset is nothing short of an artistic craft. Having only scratched the surface of that complexity with small, curved bottles, I now have a deeper appreciation for large-scale projections like the ones you see at Disney and at large municipal events.

If I Had To Do It Again

If I were to do this project again, there are a few steps I would take to make the performance more successful. The primary change is that I would ensure all my media and content used the same resolution and codec, so that Isadora could process it without crashing. Next, I would try to make it clearer that Bar: Mapped is in its own room or section of the nightclub, and that the music you hear is bleed-over from the main dance floor. In tech rehearsal, the music played at a quieter volume and only from two speakers at the rear of the performance space, from the perspective of Bar: Mapped. During the Cycle III performance, the music was blaring from all channels, making it sound like the room itself was the nightclub. The final lesson I have learned is that in an environment like that, the people who want to be there are going to have fun, and the people who got dragged there, well, they are the ones you have to work hardest on to ensure they have a good time. My hope is that the extra layer of immersion the projection mapping provides would fascinate the hesitant patron and keep them drinking and dancing.
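One way to act on the first change is to audit the media before loading it into Isadora. The sketch below is an illustration under assumptions, not part of the original project: it assumes FFmpeg’s `ffprobe` is installed, reads each file’s first video stream, and flags any file whose codec or resolution differs from the most common combination.

```python
import json
import subprocess
from collections import Counter


def probe_video(path: str) -> tuple[str, int, int]:
    """Return (codec, width, height) of the first video stream via ffprobe."""
    out = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-show_entries", "stream=codec_name,width,height",
         "-of", "json", path],
        capture_output=True, text=True, check=True,
    ).stdout
    stream = json.loads(out)["streams"][0]
    return stream["codec_name"], int(stream["width"]), int(stream["height"])


def find_outliers(specs: dict[str, tuple[str, int, int]]) -> list[str]:
    """List files whose (codec, width, height) differs from the most common one."""
    if not specs:
        return []
    target, _ = Counter(specs.values()).most_common(1)[0]
    return sorted(name for name, spec in specs.items() if spec != target)
```

Usage would look like `find_outliers({p: probe_video(p) for p in paths})`; any file it returns is a candidate for re-encoding before rehearsal.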

Cycle 3 – Seasons – Min Liu

Posted: May 2, 2022 Filed under: Uncategorized

Building on the second cycle, in this final cycle I further developed the experiential system for children to learn about geography and seasons. I built a spherical interface with a Makey Makey, copper tape, and conductive paint. Participants can touch specific locations on the globe to trigger different interactive environments featuring each place’s geographical and climatic characteristics.

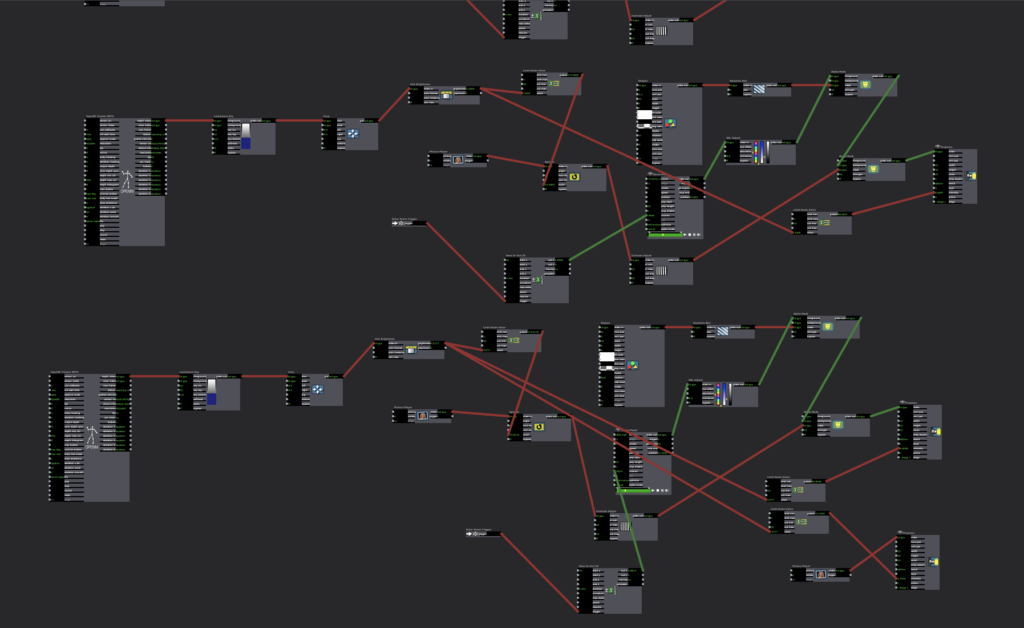

I searched for places that are currently (late April) in spring, summer, autumn, and winter. I chose Tokyo (Japan), the Amazon rainforest (Brazil), Mount Macedon (Australia), and Yellowknife (Canada), respectively, and marked them on the globe. I used a Kinect as the input device this time, and it worked much better than the webcam. In the autumn and winter scenes I had created in the previous cycle, participants can interact with falling leaves and snow. I built the other two scenes this time. In the spring Tokyo scene, the Kinect tracks people’s heads, and sakura blossoms fall right above participants’ heads on the screen. In the summer Amazon rainforest scene, rain falls on people and bounces off. I will explore more interactive visual effects in the future. I also added audio to each scene, which made the experience more immersive. Here are the scenes I built in TouchDesigner:

In the final presentation, I was happy that participants explored the physical globe and interacted with the different virtual environments, both individually and together. They said the experience was fun and educational, and that they didn’t even notice they were learning. Children would also be excited about this experience. It’s a very COSI thing.

Here are some points to improve in this system. First, the space needs some ambient light so that people are brighter and easier to detect, which would improve the sensitivity of the interaction. Second, the spring Tokyo scene is too bright, and the blossom interaction is too slow. Third, the system could include some explanation so that participants know they are experiencing each place’s current weather.
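The head-tracked petals and bouncing rain described in this post can be sketched as simple particles. This is a minimal Python illustration, not the TouchDesigner network: it assumes the Kinect head position has already been converted to normalized screen coordinates (0 to 1, y pointing up), and the gravity, spawn offset, and bounce values are made-up tuning numbers.

```python
from dataclasses import dataclass
from typing import Optional

GRAVITY = -1.5     # normalized screen heights per second squared (assumed)
RESTITUTION = 0.5  # fraction of speed a raindrop keeps after bouncing (assumed)


@dataclass
class Particle:
    x: float
    y: float
    vy: float = 0.0


def spawn_above_head(head_x: float, head_y: float, offset: float = 0.1) -> Particle:
    """Start a petal just above the tracked head, clamped to the top of the screen."""
    return Particle(head_x, min(1.0, head_y + offset))


def step(p: Particle, dt: float, body_top: Optional[float] = None) -> Particle:
    """Advance one frame under gravity.

    body_top is the height of the tracked body at p.x; when given, the
    particle bounces off it like rain. Petals pass None and keep falling.
    """
    p.vy += GRAVITY * dt
    p.y += p.vy * dt
    if body_top is not None and p.y <= body_top and p.vy < 0:
        p.y = body_top
        p.vy = -p.vy * RESTITUTION
    return p
```

Lowering the spawn rate or raising the petal fall speed in a model like this is one way to address the "too slow" blossom interaction noted above.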

Here is the recording of the experience:

Cycle 3 Patrick Park

Posted: May 2, 2022 Filed under: Uncategorized

For the last cycle, I prepared a play space where the music evolves with participants’ positions or activates one-shot samples. The song I composed plays throughout the entire experience. If a participant moves closer to the camera, the vocal switches from the regular voice to a pitched-up voice; vice versa, when the participant moves away from the camera, the singing switches back to normal. In the middle of the space, there were triggers that played notes in the scale the song was written in. In front, there were triggers that turned on echoes and delays (although they did not activate this time). In the back, there were 808 bass drums and a snare sound. My plan was to create an “out of bounds” area where every track would play in reverse. This did not happen because getting the main interactive functions to actually work took a long time. In this cycle, there was more excitement and eagerness to interact in the room than in the last couple of cycles. Making sound triggers that interact with a song is a fun idea, and I hope to keep developing it.
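The position-based behavior described above can be sketched as zone logic. This is a hedged Python illustration rather than the actual Isadora and Max/MSP setup (the post does not say where the zone logic lived): the normalized depth axis, the zone boundaries, the pitch-switch distance, and the C-major stand-in scale are all assumptions. One-shots fire only when a participant enters a zone, so standing still does not retrigger them, and anything past the back boundary is labeled `out_of_bounds`, where the planned reverse playback could hook in.

```python
from dataclasses import dataclass

# Hypothetical layout on a normalized depth axis: 0.0 = at the camera, 1.0 = back wall.
ZONES = [(0.25, "front"), (0.60, "mid"), (1.00, "back")]
PITCH_SWITCH_DEPTH = 0.4                  # closer than this -> pitched-up vocal (assumed)
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]  # MIDI C major, a stand-in for the song's key


def zone_for(depth: float) -> str:
    for limit, name in ZONES:
        if depth <= limit:
            return name
    return "out_of_bounds"


def scale_note(x: float) -> int:
    """Quantize a normalized horizontal position (0 to 1) to a note in the scale."""
    index = int(x * len(SCALE))
    return SCALE[min(max(index, 0), len(SCALE) - 1)]


@dataclass
class ZoneTracker:
    current: str = ""

    def update(self, depth: float, x: float) -> list:
        """Return the events to send for one tracking frame."""
        vocal = "pitched" if depth < PITCH_SWITCH_DEPTH else "normal"
        events = [("vocal", vocal)]
        zone = zone_for(depth)
        if zone != self.current:  # fire only on entering a new zone
            if zone == "front":
                events.append(("toggle", "echo_delay"))
            elif zone == "mid":
                events.append(("note", scale_note(x)))
            elif zone == "back":
                events += [("one_shot", "808"), ("one_shot", "snare")]
            self.current = zone
        return events
```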

Cycle 3 by Jenna Ko

Posted: May 1, 2022 Filed under: Uncategorized

I used Isadora and an Orbbec Astra for an experiential media design in which a tunnel zooms in and out as the audience walks toward the projection surface.

The tunnel represents my state of mind as I suffer mentally from the news of the Russian invasion of Ukraine, the prosecution reform bill in Korea, and marine pollution. The content begins with an empty tunnel. As the participant walks toward the projection surface, the news content fades in. I wanted to articulate my feelings of hopelessness and powerlessness through the monochromatic content that fills the tunnel. As the participant walks toward the end of the tunnel, hopeful imagery of faith in humanity fades in. This content is in color, contrasting with the tunnel interior.
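The distance-driven tunnel can be sketched as a single mapping from the participant’s distance to zoom and layer opacity. This is a minimal Python illustration, assuming the depth sensor reports distance in meters; the 4 m and 0.5 m endpoints, the 1x to 3x zoom range, and the fade windows are hypothetical values, not the settings in the original patch.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Ease from 0 to 1 as x moves from edge0 to edge1 (clamped outside)."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def tunnel_state(distance_m: float, far: float = 4.0, near: float = 0.5) -> tuple[float, float, float]:
    """Map viewer distance to (zoom, news_opacity, hope_opacity)."""
    # 0.0 at the tunnel entrance (far), 1.0 at the projection surface (near).
    progress = min(max((far - distance_m) / (far - near), 0.0), 1.0)
    zoom = 1.0 + 2.0 * progress                # hypothetical 1x to 3x zoom
    news = smoothstep(0.1, 0.5, progress)      # monochrome news fades in first
    hope = smoothstep(0.7, 1.0, progress)      # color imagery near the end
    return zoom, news, hope
```

Clamping the progress value means a participant standing outside the tracked range simply sees the empty tunnel rather than an out-of-range zoom.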

Finally, I was able to implement the motion-sensing function with my media design. If I were to do this again, I would set up the projector in a physical tunnel for a more immersive user experience.

Cycle 2 by Jenna Ko

Posted: May 1, 2022 Filed under: Uncategorized

I used Isadora and an Orbbec Astra for an experiential media design in which the projected content moves backward through Korean political history as a participant walks toward the motion detector.

The content begins with superficial imagery of modern Korea: K-pop, kimchi, and elections. It then moves back to the 2017 impeachment and candlelight vigils, the democratization movement of the 1980s, President Park Jeong-hee and the Miracle on the Han River, and the Korean War (6.25), ending with the Korean independence movement. As political polarization intensifies, I wanted to remind viewers of each party’s contributions to Korean democracy and prosperity, in the hope of more respect and gratitude toward each other. This piece reflects my hope that politicians and supporters on each side will cooperate to build a better country rather than condemning and bogging each other down.

I used the yin-yang (taegeuk) symbol of the Korean national flag to represent each political party. The symbol represents the complementary nature of contrary forces and the importance of their harmonization. I translated this meaning into political colors, with red representing the conservative party and blue the liberal party. As the participant walks toward the motion sensor, the yin and yang alternate in sync with whichever side triumphed in each era, until the participant reaches the Korean independence movement, where the symbol is complete.
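The intended distance-to-history mapping can be sketched as a quantizer that picks one era per distance band. This is a hedged Python illustration: the distance endpoints are hypothetical, the era labels follow the order given earlier in this post, and the small hysteresis margin (an addition not in the original) keeps sensor jitter at a band edge from flickering between scenes. The last era is where the yin-yang symbol would be complete.

```python
ERAS = [
    "Modern Korea: K-pop, kimchi, elections",
    "2017 impeachment and candlelight vigils",
    "1980s democratization movement",
    "Park Jeong-hee and the Miracle on the Han River",
    "Korean War (6.25)",
    "Korean independence movement",
]


class EraSelector:
    """Map a participant's distance (meters) to one era, with hysteresis."""

    def __init__(self, far: float = 4.0, near: float = 0.5, margin: float = 0.1) -> None:
        self.far, self.near, self.margin = far, near, margin  # hypothetical values
        self.index = 0

    def _position(self, distance: float) -> float:
        """0.0 at the far edge up to len(ERAS) at the near edge."""
        progress = (self.far - distance) / (self.far - self.near)
        return min(max(progress, 0.0), 1.0) * len(ERAS)

    def update(self, distance: float) -> tuple[int, str, bool]:
        pos = self._position(distance)
        # Change era only once the position clears the current band by `margin`.
        if pos >= self.index + 1 + self.margin or pos < self.index - self.margin:
            self.index = min(int(pos), len(ERAS) - 1)
        complete = self.index == len(ERAS) - 1  # independence movement: symbol complete
        return self.index, ERAS[self.index], complete
```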

I could not get the motion-sensing part to work, so I triggered the scenes manually for the presentation and used the motion-sensing function in Cycle 3 instead.

Cycle 2 Documentation Patrick Park

Posted: May 1, 2022 Filed under: Uncategorized

In Cycle 2, I was able to capture motion capture data in Isadora and send it to Max/MSP to play the audio. Five tracks built up the whole song across five sections, with each track triggered by a participant’s presence in front of the camera. The guitar, bass drum, snare, hi-hat, and pitched-up voice each played when someone stood in a certain area. There were also triggers that activated delays, echoes, and distortion, but they were not triggered during the experience. This cycle was better designed than the last one; nonetheless, I could see room for improvement. I realized that having sounds play only part of the time can be very frustrating, and that the song should play throughout the experience without stopping. Rather than having positions trigger the tracks, the song should be playing all the time. For the next cycle, I plan to add one-shot instrument samples that can be triggered to play along with the song in the background.

Cycle 1 Documentation

Posted: May 1, 2022 Filed under: Uncategorized

For the first cycle, I envisioned a motion-detected soundscape. The farthest point from the motion camera played ocean beach sounds; as the audience got closer, the beach sounds changed to underwater sounds. Finally, when a participant got close to the camera, a salsa song played. Through this experiment, I realized that having sound play from movement alone is not very stimulating. There were only three sound sources, and I feel I could have done more with this. Part of the issue that came up in Cycle 1 was that Isadora could not handle processing audio plugins. In the next cycle, I plan to take motion capture data from Isadora and send it to Max/MSP, where I can do more audio manipulation.

Cycle 3 – Yujie

Posted: May 1, 2022 Filed under: Uncategorized

In Cycle 3, I kept the key ideas and media design developed during Cycles 1 and 2. Two things remain central to my project. The first is using fragmented body parts of the culturally marked dancer to resist the idea of returning to the whole, which represents the so-called cultural essence. The whole is also easily categorized into racial stereotypes, so the fragmented body parts can be seen as a challenge to it. The second is continuing to offer a negative mode of seeing. The inverted colors (the negatives) can be seen as a metaphor for undeveloped images, or a hidden “truth” in the darkroom, inviting one to see differently.

I also added two things in the final cycle: the preshow projections and the voice-over from my interview with the dancer. In the feedback after the showing, I received helpful comments from the class on these two additions. Classmates said they appreciated that the preshow projections allowed them to explore the space, and that the circular setting formed a more intimate relationship between the dancer and the viewer. Also, the contradictions of performing the Japanese body discussed in the voice recording helped guide viewers toward my intention.

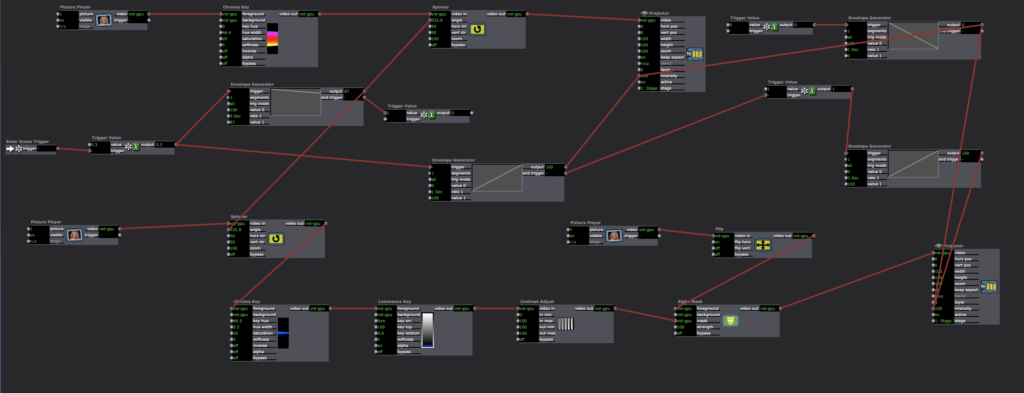

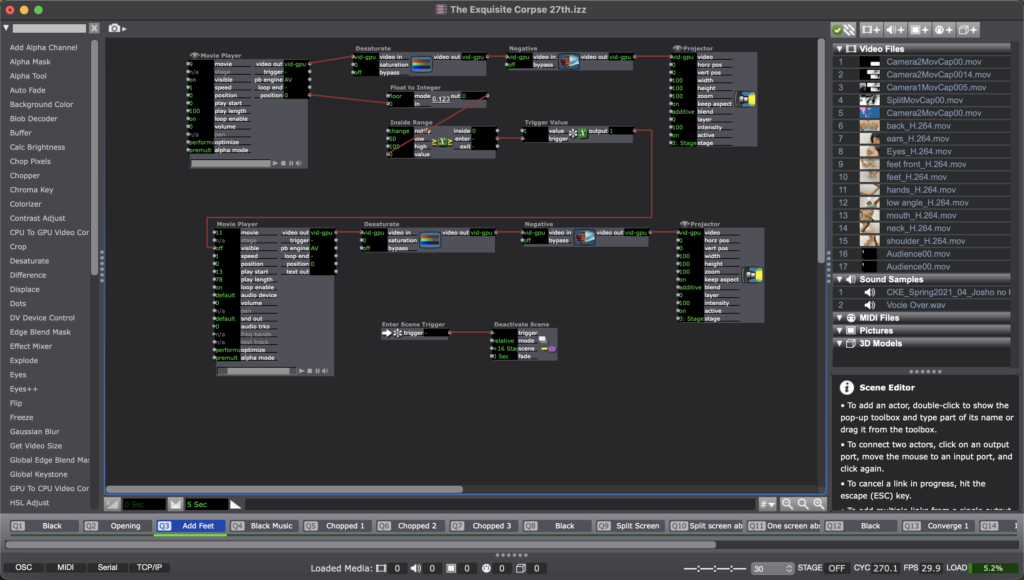

Here are some Isadora patches

Here is the video document of the final performance

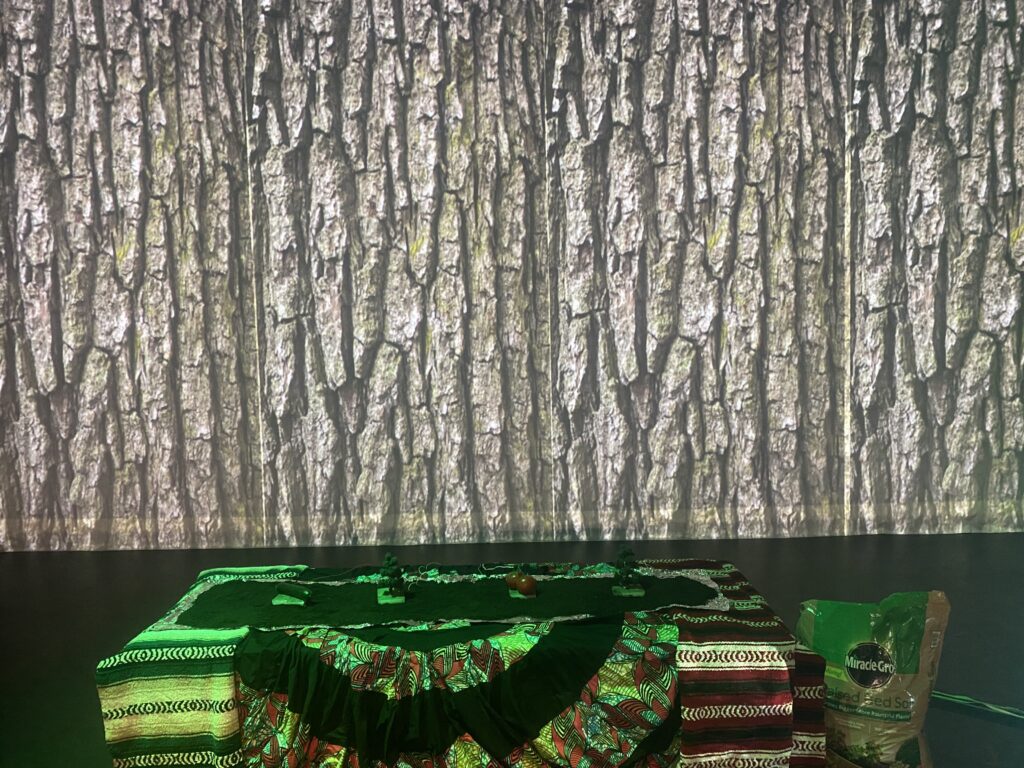

Cycle 3: Sensory Soul Soil

Posted: April 30, 2022 Filed under: Uncategorized

For my Cycle 3 project, I shifted the soundscape a bit and changed two interfaces and the title. I honed in on two of the growers’ interviews. I wanted to illustrate their connection to what they’ve grown through an organic interface rather than the former plastic tree. For instance, grower Sophia Buggs started out growing zucchini in kiddie pools because her grandmother used to make zucchini bread. To highlight this history, I wanted the participant to feel the electricity Sophia and her grandmother felt with their hands in the soil and on fresh zucchini. I worked with my classmate Patrick to clean up the audio of Sophia’s interview, because we recorded it in a noisy restaurant. He helped me lower the crowd noise in the background and bring Sophia’s voice to the foreground. I used two tomatoes to illustrate grower Julialynn’s expression, “It was just me and two tomatoes!” This is her origin story for growing her church’s community garden. Sensory Soul Soil is an experiment in listening with your hands, feeling your body, and seeing with your eyes and ears.

As for the setup, I decided to start the experience in front of the sensory soil box. I made this change so that I could better guide participants through the experience. This time around, I wanted to bring some color to the originally white box. I used Afro-Latinidad fabric to build in a sense of cultural identity through patterns and colors that also represent those who have labored in our U.S. soil.

In the last iteration, I didn’t really know how to bring the space to a close; in this cycle, I closed the space with a charge to join the agricultural movement by getting involved any way they can, because the earth and their bodies will appreciate it. This call to action is an invitation to take this work beyond the performative, experiential space and into the world from which its inspiration came.

To get my sound to work the way I intended, I designed the trigger values and gates so that the audio starts when the interface is touched and cuts off when the participant is no longer connected. I also found that WAV files work better in the Sound Player. Just an FYI: my MP3 kept showing up in the Movie Player instead.
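The touch gating described above can be sketched as a two-threshold gate. This is a minimal Python illustration, assuming the touch interface reports a value between 0 and 1; the 0.6 and 0.4 thresholds are hypothetical, not the trigger values used in the patch. Using separate on and off thresholds keeps a flickering contact from rapidly starting and stopping the audio.

```python
from typing import Optional


class TouchGate:
    """Start audio on touch and stop it when the circuit opens (hypothetical values)."""

    def __init__(self, on_threshold: float = 0.6, off_threshold: float = 0.4) -> None:
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.playing = False

    def update(self, value: float) -> Optional[str]:
        """Return 'start', 'stop', or None for one incoming sensor value."""
        if not self.playing and value >= self.on_threshold:
            self.playing = True
            return "start"
        if self.playing and value <= self.off_threshold:
            self.playing = False
            return "stop"
        return None
```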

Below you can see one of the Sensory Soul Soil experiences with Julialynn and the two tomatoes.

In the video below, you can see how I curated the space to be extremely immersive. My goal was to engage with the true labor of a grower, not only by hearing their stories but also by embodying their movement. I changed the title because the materials in this iteration are no longer trees, as they were before. I figured that since I come from a culture of soul food, and food has the nutrients to feed the soul, which comes from the soil, why not call it what it is: a Sensory Soul Soil experience.

Cycle 3–Allison Smith

Posted: April 28, 2022 Filed under: Uncategorized | Tags: dance, Interactive Media, Isadora

I had trouble determining what I wanted to do for my Cycle 3 project, as I was overwhelmed by the possibilities. Alex helped guide me to focus on one of my previous cycles and lean into one of its elements. I chose to follow up on my Cycle 1 project, which involved live drawing through motion capture of the participant. That system was very glitchy, though, so I decided to take a new approach.

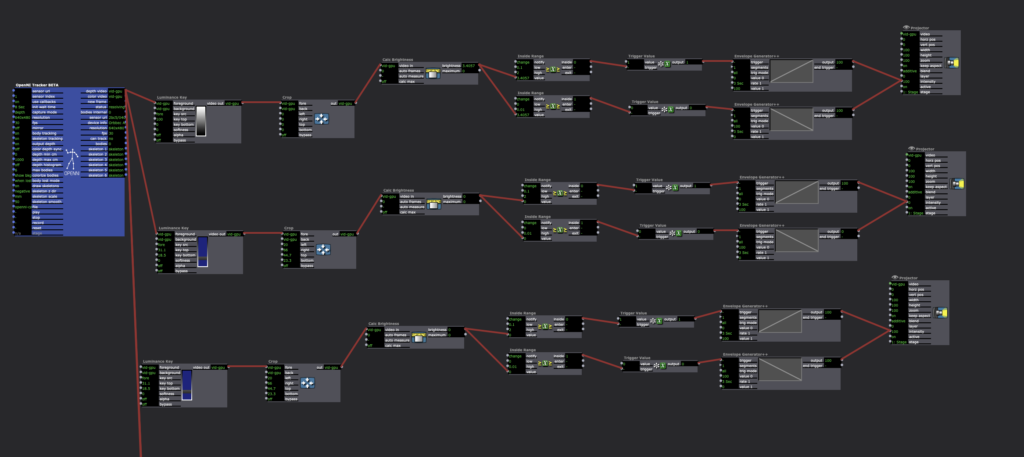

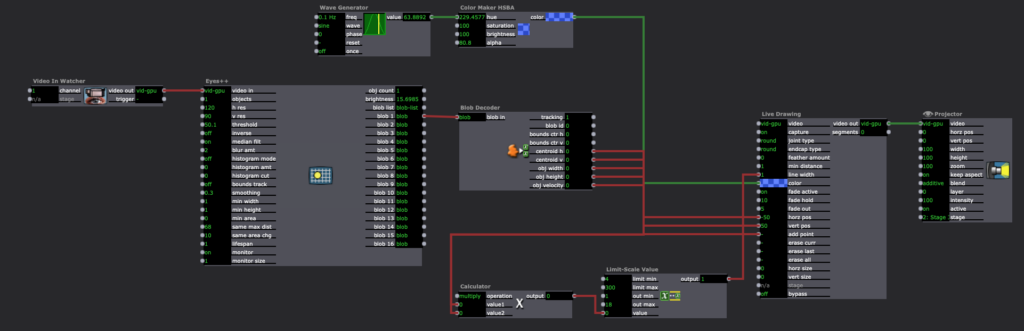

In my previous approach, I used the Skeleton Decoder to track the coordinates of the participant’s hands. These values were then fed into the Live Drawing actor. The biggest problem, though, was that the skeleton would not track well, and the lines didn’t correspond to the person’s movement. In this new iteration, I used a camera, Eyes ++, and the Blob Decoder to track a light that the participant held. I found this to be a much more robust approach, and while it wasn’t what I had originally envisioned in Cycle 1, I am very happy with the results.

I had some extra time and spontaneously decided to add another layer to this cycle, in which the participant’s full body would be tracked with a colorful motion blur. This way, participants would be drawing, but we would also see the movement their bodies were creating. I felt this addition leaned into my research in this class on how focusing on one type of interactive system can encourage people to move and dance. With the outline of the body, we could see the movement and dancing that participants probably weren’t aware they were doing. I then decided to put the drawing on a see-through scrim so that participants could see both visuals at once.

A few surprises came up when demonstrating this cycle with people. I told viewers they could walk through the space and observe however they wanted, but I didn’t consider that their bodies would also be tracked. This brought out an element of play from the “viewers” (aka the people not drawing with the light) that I found the most exciting part of this project. They played with the different ways their bodies were tracked, moving closer to and farther from the tracker to play with depth. They also played with shadows when they were on the other side of the scrim. My original intention in setting up the projections as I did, on the floor in the middle of the room, was to keep them from mixing onto the other scrims. I never considered how this would leave space for shadows to join in the play, both in the drawing and in the bodily outlines. I’ve attached a video that illustrates all of the play that happened during the experience:

Something I found interesting after watching the video was that people were hesitant to join in at first. They would walk around a bit and eventually see their outlines on the screen. It took a few minutes, though, for people to want to draw and start playing. After that shift happened, there was such a beautiful display of curiosity, innocence, discovery, and joy. Even I found myself discovering much more than I thought I could, and I’m the one who created this experience.

The coding behind this experience is fairly simple, but it took me a long time to get here. I had one stage for the drawing and one stage for the body outlines. For the drawing, as I mentioned above, I used a Video In Watcher to feed into Eyes ++ and the Blob Decoder. I used one of Alex’s cameras because it had manual exposure, which we found was necessary to keep the “blob” from changing size when the light moved. The Blob Decoder finds bright points in the video and, depending on its settings, can track just one bright light. Its position and size then fed into a Live Drawing actor, with the colors constantly changing.
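The light tracking described above can be sketched without Isadora. This minimal Python version thresholds a grayscale frame, treats every bright pixel as one blob (matching the single handheld light), and returns its centroid and pixel count, plus a slowly cycling hue for the stroke color. The threshold and the 300-frame color period are assumptions, and this is not how Eyes ++ is implemented internally. It also suggests why manual exposure mattered: if auto exposure changes the image brightness, the number of pixels clearing the threshold changes too, so the reported size drifts.

```python
import colorsys
from typing import Optional


def track_bright_blob(frame: list[list[int]], threshold: int = 240) -> Optional[tuple[float, float, int]]:
    """Return (x, y, size) for pixels at or above threshold, or None if none are.

    frame is a list of rows of 0-255 luminance values. All bright pixels are
    treated as a single blob, which suits one handheld light.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    count = len(xs)
    return sum(xs) / count, sum(ys) / count, count


def cycling_color(frame_index: int, period: int = 300) -> tuple[float, float, float]:
    """Rotate the hue once every `period` frames; returns RGB in the 0-1 range."""
    hue = (frame_index % period) / period
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)
```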

For the body outline, I used an Orbbec Astra tracker feeding into a Luminance Key and an Alpha Mask. The foreground and mask came from the uncolored body, and the background was a colorful version of the body with a motion blur. This created the effect of a white silhouette with a colorful blur. I used the same technique for color in the motion blur as I did with the live drawing.
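The silhouette effect can be approximated as a mask-and-composite step. This hedged Python sketch does not reproduce Isadora’s own actors: it thresholds a depth frame into a body mask, keeps a fading trail of past masks as a stand-in for the motion blur, tints the trail with the current color, and composites the mask in white on top. The threshold, decay, and pixel format (nested lists of 0-255 values, RGB tuples in the 0-1 range) are assumptions.

```python
Color = tuple[float, float, float]


def luminance_mask(frame: list[list[int]], threshold: int = 128) -> list[list[float]]:
    """1.0 where the depth image shows a body (bright pixel), else 0.0."""
    return [[1.0 if v >= threshold else 0.0 for v in row] for row in frame]


def update_trail(trail: list[list[float]], mask: list[list[float]], decay: float = 0.85) -> list[list[float]]:
    """Fading trail: keep the brighter of the new mask and the decayed old trail."""
    return [[max(m, t * decay) for t, m in zip(trow, mrow)] for trow, mrow in zip(trail, mask)]


def tint(trail: list[list[float]], color: Color) -> list[list[Color]]:
    """Color the trail; in the piece this color cycles like the live drawing."""
    return [[tuple(v * c for c in color) for v in row] for row in trail]


def composite(mask: list[list[float]], background: list[list[Color]],
              foreground: Color = (1.0, 1.0, 1.0)) -> list[list[Color]]:
    """Alpha-blend a white silhouette (where mask is 1) over the colored trail."""
    return [
        [tuple(m * f + (1.0 - m) * b for f, b in zip(foreground, bg)) for m, bg in zip(mrow, brow)]
        for mrow, brow in zip(mask, background)
    ]
```

Each frame, the mask from the new depth image updates the trail, and the composite shows the current body in white with the tinted trail behind it.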

I’m really thankful for how this cycle turned out. I was able to find some answers to my research questions without intentionally looking for them, and I also discovered a lot of new things within the experience and while reflecting on it. My biggest takeaway is that if I want to encourage people to move, it helps to give everyone an active role in exploration rather than having just one person participate alone. In my previous cycles, I focused too much on the tool (drawing, creating music) rather than on the importance of community when it comes to losing movement inhibition and leaning into a sense of play. If I were to continue working on this project, I might add a layer of sound using MIDI. I did enjoy the silence of this iteration, though, and am concerned that adding sound would be too much. Again, I am happy with the results of this cycle and will let it influence my future projects.