Documentation Pictures From Alex

Posted: December 12, 2015 Filed under: Uncategorized

Hi all,

You can find copies of the pictures I took from the public showing here:

https://osu.box.com/s/ff86n30b1rh9716kpfkf6lpvdkl821ks

Please let me know if you have any problems accessing them.

-Alex

Final Project Patch Update

Posted: December 2, 2015 Filed under: Alexandra Stilianos, Final Project

Last week I attempted to use the video delays to create a shadow trail of the projected light in space. At first I chained all the Video Delay actors into each other and then into a single projector, which left them all tied to one image. When I separated the video delays, giving each its own projector and its own delay time, the effect was successful.
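For anyone who wants to see the idea outside of Isadora, here is a rough Python sketch of parallel delays. It is not the Isadora patch and does not use any Isadora API; the `FrameDelay` class, the `shadow_trail` function, and the delay values are all made up for illustration. Every delay receives the same live frame rather than the output of the previous delay, which is the arrangement that worked above.

```python
from collections import deque


class FrameDelay:
    """Hypothetical stand-in for one video delay: releases each frame
    `delay` steps after it arrived."""

    def __init__(self, delay):
        self.delay = delay
        self.buffer = deque()

    def push(self, frame):
        self.buffer.append(frame)
        if len(self.buffer) > self.delay:
            return self.buffer.popleft()
        return None  # nothing old enough to show yet


def shadow_trail(frames, delays):
    """Yield the layers drawn at each step: the live frame plus one
    delayed copy per delay (each with its own 'projector')."""
    taps = [FrameDelay(d) for d in delays]
    for frame in frames:
        # Parallel wiring: every tap is fed the live frame directly.
        layers = [frame] + [tap.push(frame) for tap in taps]
        yield [layer for layer in layers if layer is not None]


# Frames are just numbers here; delays of 1 and 3 steps are assumed values.
for layers in shadow_trail(range(5), [1, 3]):
    print(layers)
```

With separate delay times the delayed copies lag behind the live frame by different amounts, which is what reads as a trail.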

Today (Wednesday) in class, we adjusted the Kinect and the Projector to be aiming straight towards the ground so the reflection caused by the floor from the infrared lights in the Kinect would no longer be a distraction.

I also raised the minimum depth the Kinect reads so that it covers a person from the shins up; when you are below that area, the projection no longer appears. For example, a dancer can take a few steps, then roll to the ground and end in a ball, and the projection will follow and slowly disappear, even with the dancer still within range of the Kinect/projector. They are just below the readable area.

Moo00oo

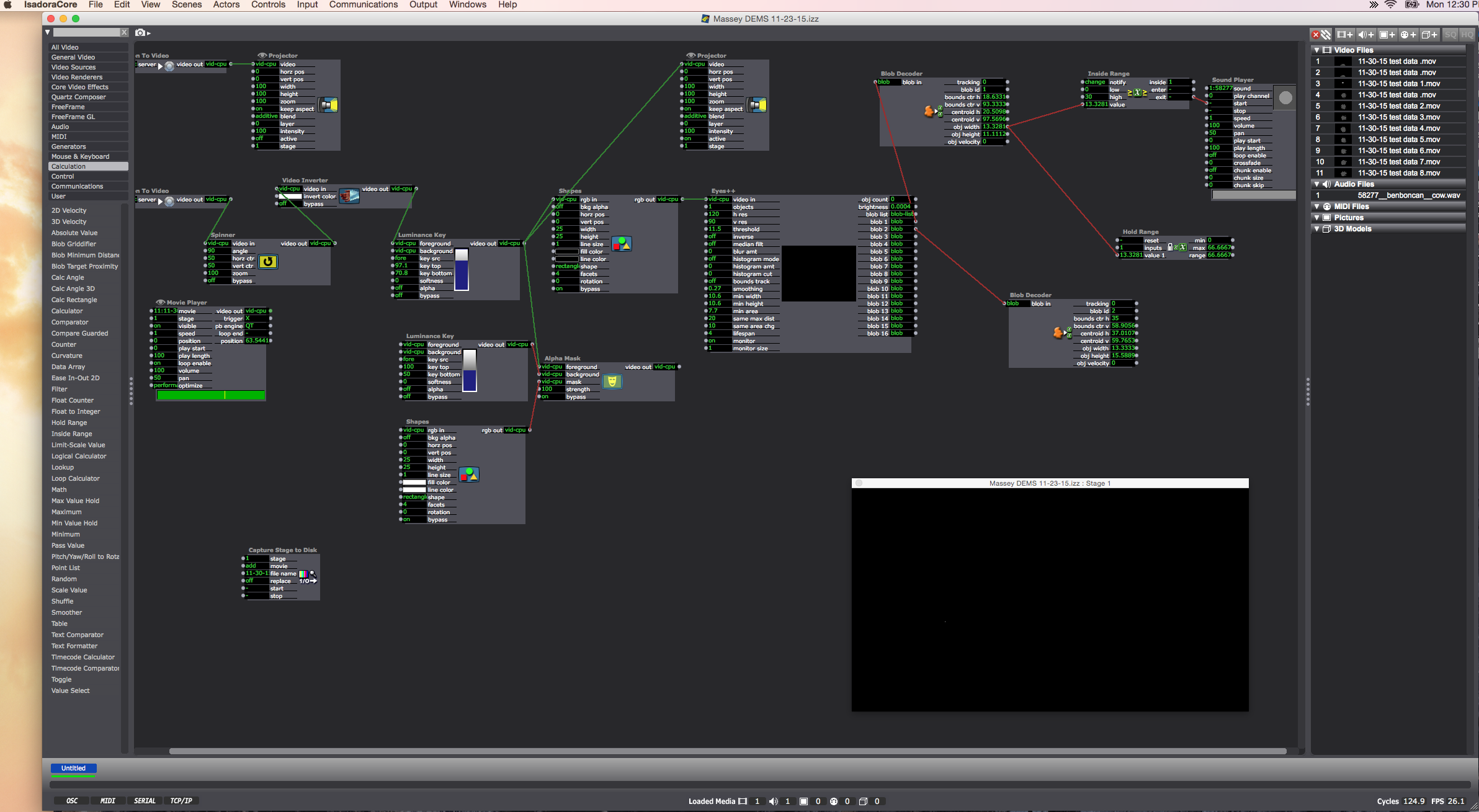

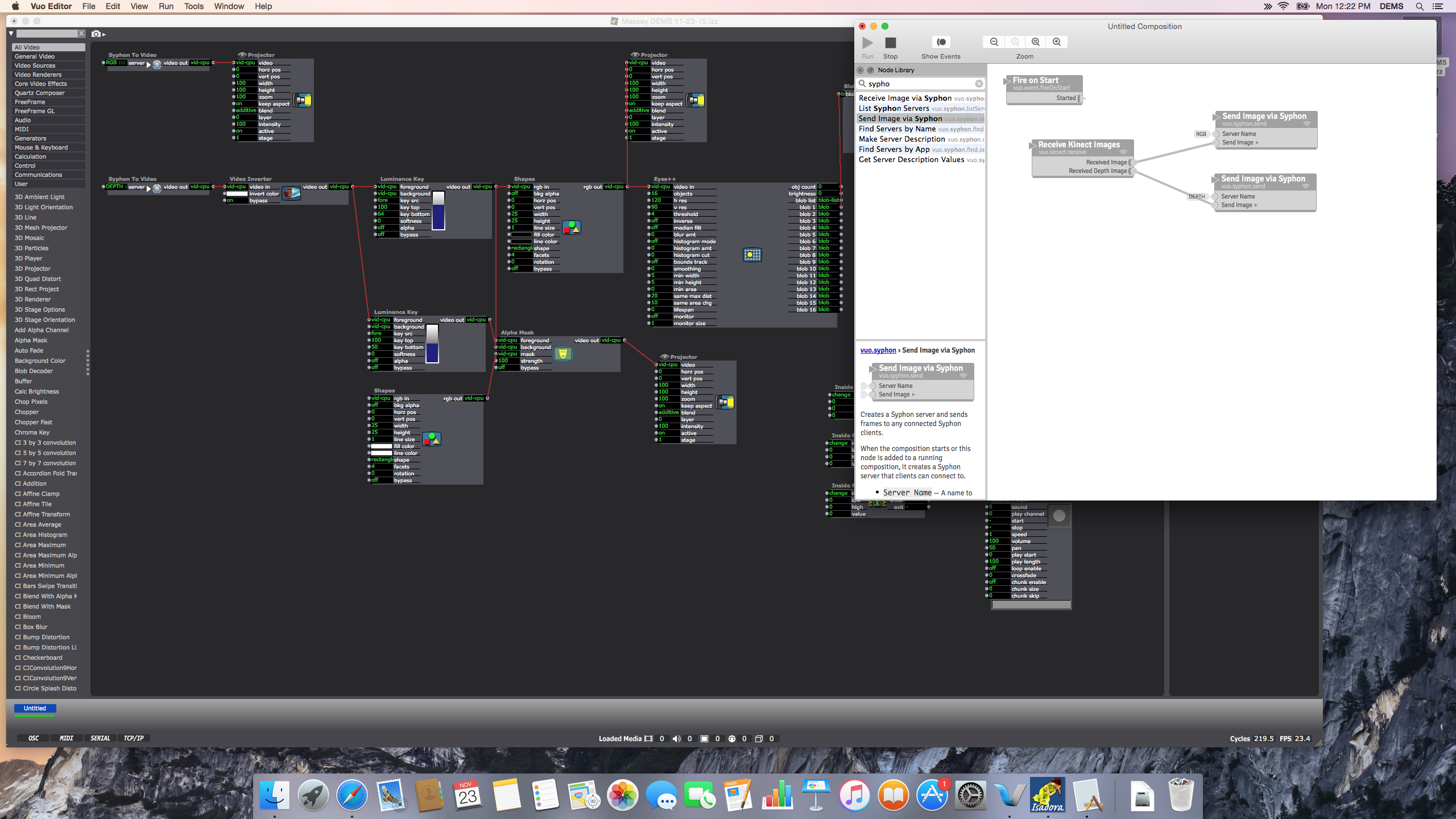

Posted: November 30, 2015 Filed under: Anna Brown Massey, Final Project

Present: Today was a day where the Vuo and Isadora patches communicated with the Kinect line-in. Alex suggested it would be helpful to record live movement in the space so I could manipulate the settings later, but after a solid 30 minutes of turning luminescence up and down and trying to eliminate the noise of the floor’s center reflection, we made a time-based decision: the time I hoped to save by having a recording to work from outside the Motion Lab would be less than the time it would take to establish a workable video. I moved on.

Oded kindly spiked the floor to demarcate the space which the (center ceiling) Kinect captures. This space turns out to be oblong: deeper upstage to downstage than it is wide right to left. The depth data of “blobs” (objects or people in space – whatever is present) is taken in through Isadora (first via Vuo as a way of mediating Syphon). I had Alex and Oded walk around in the space so that I could examine how Isadora measures their height and width, and enlisted an Inside Range actor to set the output so that whenever the width data rises above a certain number, it triggers … a mooing sound.

Which means: when people are moving within the demarcated space, if/when they touch each other, a “Mooo” erupts. It turns out it’s pretty funny. This also happens when people spread their arms wide in the space, as my data output allows for that size.
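A rough Python sketch of that logic, for readers who think in code rather than actors. This is not Isadora's Inside Range actor; `inside_range`, `moo_triggers`, and the width numbers are invented for illustration, and I am assuming the sound should fire once each time the width enters the range rather than continuously while it stays there.

```python
def inside_range(value, low, high):
    """True while the value sits inside the range (inclusive)."""
    return low <= value <= high


def moo_triggers(widths, low, high):
    """Yield the index of each sample where the blob width enters the range.

    Assumption: fire once on entry, not on every sample inside the range.
    """
    was_inside = False
    for index, width in enumerate(widths):
        now_inside = inside_range(width, low, high)
        if now_inside and not was_inside:
            yield index  # this is where the "Mooo" would play
        was_inside = now_inside


# Hypothetical width readings: one person, two people touching, apart, arms spread.
widths = [20, 25, 60, 65, 30, 70]
print(list(moo_triggers(widths, low=50, high=100)))
```

Two people touching and one person spreading their arms both produce a wide blob, which is why both set off the sound.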

Future:

Designing the initial user experience in response to the data output I can now control: how to shape the audience’s entrance into the space, and how to create a projected film that guides them to – do partner dancing? A barn-cowboy theme? In other words, how do I get my participants to touch and to separate while staying within the dimensions of the Kinect sensor, and to touch by connecting right-to-left in the space (rather than aligning themselves upstage-downstage)? Integrating the sound output of a cow lowing, and having a narrow and long space, is getting me thinking about line dancing …

Blocked at every turn

Posted: November 30, 2015 Filed under: Anna Brown Massey, Final Project

- Renamed the server in Vuo (to “depth anna”) and noted that the Syphon to Video actor in Isadora allowed that option to come up.

- Connected the Kinect with which Lexi was successfully working to my computer, and connected my Kinect line to hers. Both worked.

This was one of those days where I started to feel I was running out of options:

UPDATE 11-30-15: Alex returned to run the patches from the MOLA preceding our before-class meeting. He had “good news and bad news:” both worked. We’re going to review these later this week to see where my troubleshooting could have brought me final success.

Cycle… What is this, 3?… Cycle 3D! Autostereoscopy Lenticular Monitor and Interlacing

Posted: November 24, 2015 Filed under: Jonathan Welch, Uncategorized | Tags: Jonathan Welch

One 23″ glasses-free 3D lenticular monitor. I get up to about 13 images at a spread of about 10 to 15 degrees, and a “sweet spot” from 2 to 5 or 10 feet (depending on the number of images); the background blurs with more images (this is 7). The head tracking and animation are not running for this demo (the interlacing is radically different from what I was doing with 3 images, and I have not written the patch or made the changes to the animation). The poor contrast is an artifact of the terrible camera; the brightness and contrast are normal, but the resolution on the horizontal axis diminishes with additional images. I still have a few bugs. Honestly, I hoped the lens would be different; it does not seem to be designed specifically for a monitor with a pixel pitch of .265 mm (with a slight adjustment to the interlacing, it works just as well on the 24 inch with a pixel pitch of .27 mm). But it works, and it will do what I need.
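To show why horizontal resolution drops as images are added, here is a simplified Python sketch of column interlacing. It is only an illustration: the `interlace` function is hypothetical, it works on whole pixel columns, and it ignores the sub-pixel layout and any lens alignment adjustment a real lenticular setup needs.

```python
def interlace(views):
    """Build one image whose column x comes from view number (x mod N).

    `views` is a list of N same-sized images, each a list of rows.
    Simplifying assumption: whole pixel columns, no sub-pixel handling.
    """
    count = len(views)
    height = len(views[0])
    width = len(views[0][0])
    return [
        [views[x % count][y][x] for x in range(width)]
        for y in range(height)
    ]


# Three tiny one-row "images", each filled with its own letter.
views = [[["A"] * 6], [["B"] * 6], [["C"] * 6]]
print("".join(interlace(views)[0]))
```

Each view only gets every Nth column of the screen, so with 7 images each one keeps roughly a seventh of the horizontal resolution.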

better, stronger, faster, goosier

No you are not being paranoid, that goose with a tuba is watching you…

So far… It does head tracking and adjusts the interlacing to keep the viewer in the “sweet spot” (like a Nintendo 3DS, but it is much harder when the viewer is farther away and the eyes are only 1/2 to 1/10th of a degree apart). The goose recognizes a viewer, greets them, and follows their position… There is also recognition of sound, number of viewers, speed of motion, leaving, and over volume vs talking, but I have not written the animations for the reaction to each scenario, so it just looks at you as you move around. The background is from the camera above the monitor. I had 3, so it would be in 3D and have parallax, but it was more than the computer could handle, so I just made a slightly blurry background several feet back from the monitor. But it still has a live feed, so…

Ideation 2 of Final Project- Update

Posted: November 20, 2015 Filed under: Alexandra Stilianos, Pressure Project 3

A collaboration has emerged between Sarah, Connor and myself where we will be interacting with and manipulating various parts of the lighting system in the MoLa. My contribution is a system that will listen to the sounds of a tap dancer on a small piece of wood (a tap board) and affect the colors of specific lights projected on the floor on or near the board.

Connection from microphone to computer/Isadora:

Microphone (H4) –> XLR cord –> Zoom Mic –> Mac

The zoom mic acts as a sound card via a USB connection. It needs to be on Channel 1, USB transmit mode, and audio IF.

Our current question is whether we can control individual lights in the grid through this system. My next step is playing with the sensitivity of the microphone so the lights don’t turn on and off out of control or pick up sounds away from the board.
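One possible way to think about the sensitivity problem is a gate with two thresholds, sketched here in Python. This is not part of the current patch; the `gate` function and the level and threshold numbers are invented for illustration. The light turns on only at a loud tap and stays on until the level falls well below that, so small wobbles in volume do not make it flicker.

```python
def gate(levels, on_threshold, off_threshold):
    """Return the light's on/off state for each microphone level.

    Assumption: levels run from 0.0 to 1.0 and on_threshold > off_threshold.
    The gap between the two thresholds is what prevents flicker.
    """
    light_on = False
    states = []
    for level in levels:
        if not light_on and level >= on_threshold:
            light_on = True   # loud enough: a tap on the board
        elif light_on and level <= off_threshold:
            light_on = False  # quiet again: release the light
        states.append(light_on)
    return states


# Hypothetical mic levels; 0.55 is a quieter sound away from the board.
levels = [0.1, 0.6, 0.5, 0.45, 0.2, 0.55, 0.7]
print(gate(levels, on_threshold=0.6, off_threshold=0.3))
```

Raising `on_threshold` is the code equivalent of turning the microphone sensitivity down so sounds away from the board are ignored.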

Dominoes

Posted: November 19, 2015 Filed under: Anna Brown Massey, Final Project

I am facing the design question of how to indicate to the audience the “rules” of “using” the “design” i.e. how to set up a system of experiencing the media, i.e. how to get them to do what I want them to do, i.e. how to create a setting in which my audience makes independent discoveries. Because I am interested in my audience creating audio as an input that generates an “interesting” (okay okay, I’m done with the quotation marks starting… “now”) experience, I jotted down a brainstorm of methods of getting them to make sounds and to touch preceding my work this week.

We use “triggers” in our Isadora actors, which is a useful term for considering the audience: how do I trigger the audience to do a certain action that consequently triggers my camera vision or audio capture to receive that information and trigger an output that thus triggers within the audience a certain response?

Voice “from above”

- instructions

- greeting

- hello

- follow along – movement karaoke

- video of movement

- → what trigger?

- learn a popular movement dance?

- dougie, electric slide, salsa

- markings on floor?

- follow along touch someone in the room

- switch to kinect – Lexi’s patch as basis to build if kinesphere is bigger then creates output

- what?

- every time people touch for sustained length it creates

- audio? crash?

- lights?

- how does this affect how people touch each other

- what needs to happen before they touch each other so that they do it

- follow along karaoke

- sing without the background sound

- Maybe with just the lead-in bars they will start singing even without music behind

Multi-Viewer 3D Displays (why the 3DS is a handheld device)

Posted: November 12, 2015 Filed under: Uncategorized

I have been trying to make a glasses-free, multi-viewer 3D monitor (like the Nintendo 3DS, only 23 inch and for multiple viewers), and it is much more tricky than it looks.

The parallax barrier cuts down the brightness exponentially based on the number of viewers (a 50% light cut for 1 pair of eyes, 75% for 2), so with 2 viewers the screen is dim. But there is more: the resolution is not only cut by 1/4; each pixel is just as narrow, but I am adding a huge gap between them (some test subjects could not even tell what they were looking at)…
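The two figures above (50% for 1 viewer, 75% for 2) fit a simple halving rule, sketched here in Python. Extending that rule past 2 viewers is an assumption on my part, not something measured, and the function names are made up for illustration.

```python
def light_remaining(viewers):
    """Fraction of the backlight that gets through the barrier.

    Assumption: each added viewer halves the light, which matches the
    50% (1 viewer) and 75% (2 viewers) cuts quoted in the post.
    """
    return 0.5 ** viewers


def light_cut_percent(viewers):
    """Percentage of the light blocked by the barrier."""
    return 100 * (1 - light_remaining(viewers))


for viewers in (1, 2, 3):
    print(viewers, "viewer(s):", light_cut_percent(viewers), "% of light cut")
```

Under that assumption a third viewer would leave only an eighth of the light, which is a good part of why this is hard to scale past a handheld.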

Lenticular lenses cost about $12 a foot, but none of the ones I got in the sampler pack line up with the pixels on any of the monitors, so I have to sacrifice even more horizontal resolution…

I gave up and started doing head tracking, but isolating individual eyes on that scale is just about impossible, so I wind up wasting 4X the resolution just to have enough margin for a single viewer…

And it still doesn’t isolate the eyes properly!!!

Now I have a 3D display (if you do not move faster than the head tracking can follow, and remain 2 to 3 1/2 feet from the monitor) that tracks your head, but will not respond to more than 1 viewer…

So I decided that when the head tracking detects more than one person, or if you move around too fast, the character (Tuba-Goose) will get irritated and leave…

If I can get it to do that, I would consider it a hell of an achievement. And even watching this quasi-3D interface figure out where you are and adjust the perspective to compensate (with a bit of a lag sometimes) is a little unsettling… Kind of like being eyeballed by a goose…

I can work with this, but I might still have to buy the 3D monitor, or at least the lens that is made for the 23 inch display…

The Present

Posted: November 9, 2015 Filed under: Anna Brown Massey, Final Project

The Past

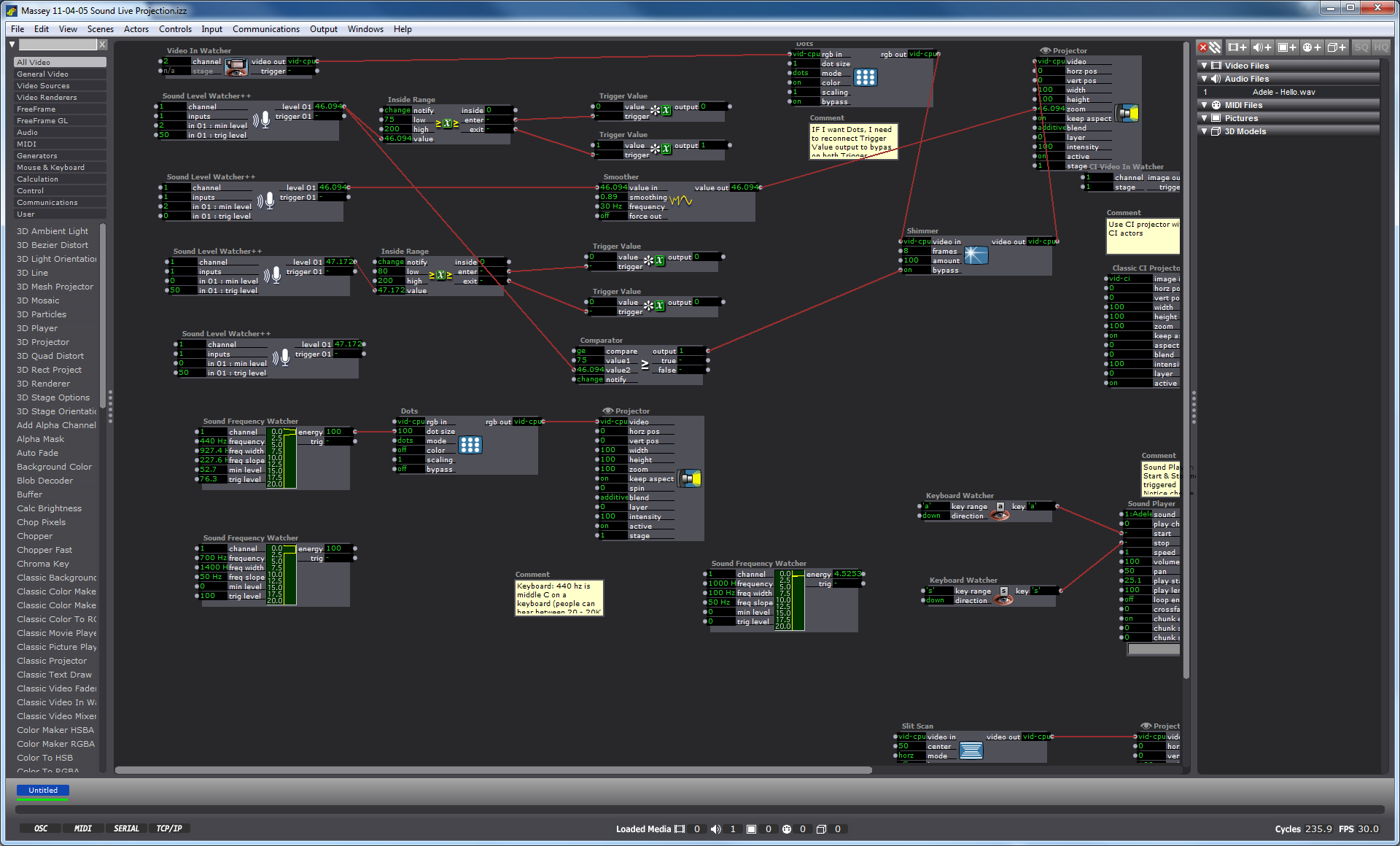

I am currently designing an audio-video environment in which Isadora reads the audio and harnesses the dynamics (amplification level) data, and converts those numbers into methods of shaping the live video output, which in its next iteration may be mediated through a Kinect sensor and separate Isadora patch.

My first task was to create a video effect out of the audio. I connected the Sound Level Watcher to the Zoom data on the Projector actor, and later added a Smoother in between in order to smooth the staccato shifts in zoom on the video. (Initial ideation is here.)
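As a rough picture of what a smoother does to the sound level before it reaches the zoom, here is a small Python sketch. It uses an exponential moving average, which is my own choice for illustration; I do not know what method Isadora's Smoother actor uses internally, and the `smooth` function and its numbers are hypothetical.

```python
def smooth(levels, smoothing=0.5):
    """Blend each new level with the previous smoothed value.

    `smoothing` near 1.0 means slow, gentle changes; 0.0 means no smoothing.
    This is an exponential moving average, assumed here for illustration.
    """
    smoothed = []
    current = None
    for level in levels:
        if current is None:
            current = level  # first reading passes straight through
        else:
            current = smoothing * current + (1 - smoothing) * level
        smoothed.append(current)
    return smoothed


# A staccato sound level jumping between silence and full volume.
print(smooth([0, 100, 0, 100], smoothing=0.5))
```

The zoom then follows the smoothed numbers, so it eases in and out instead of snapping with every spike in volume.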

Once I had established my interest in the experience of zoom tied to amplitude, I enlisted the Inside Range actor to create a setting in which, past a certain amplitude, the Sound Level Watcher would trigger the Dots actor. In other words, whenever the volume of sound coming into the mic hits a certain point and above, the actors trigger an effect on the live video projection in which the screen disperses into dots. I selected the Dots actor not because I was confident that it would create a magically terrific effect, but because it was a familiar actor with which I could practice manipulating the volume data. I added the Shimmer actor to this effect, still playing with the data range that would trigger these actors only above a certain point of volume.

The Future

User-design Vision:

Through this process my vision has been to make a system adaptable to multiple participants, anywhere between 2 and 30, who can all be simultaneously engaged by the experience and possibly take on different roles by their own self-selection. As with my concert choreography, I am strategizing methods of introducing the experience of “discovery.” I’d like this one to feel, to me, delightful. With a mic available in the room, I am currently playing with the idea of having a scrolling karaoke projection with lyrics to a well-known song. My vision includes a plan for how to “invite” an audience to sing and have them discover that the corresponding projection is causally related to the audio.

Sound Frequency:

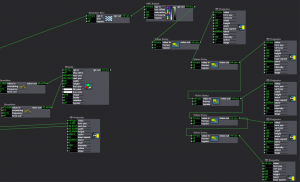

The next step, as seen at the bottom of the screenshot (with some actors on the righthand side that I have laid aside for possible future use), is to start using the Sound Frequency actor to take in data about pitch (frequency) as a means of affecting video output. To do so I will need to provide audio files in a variety of ranges as a source, so I can experiment and observe how the data shifts at different human voice registers. Then I will take the frequency data range and match that up, through an Inside Range actor, to a video output.

Kinect Collaboration:

I am also considering collaborating with my colleague Josh Poston, whose project currently uses the Kinect with projection. The Kinect would replace the “live video projection” that I am currently using, and would instead affect a motion-sensing movement on a rear-projected screen. As I consider joining our projects and expanding their dimensions (so to speak-oh puns!), I need to start narrowing in on the user-design components. In other words, where does the mic(s) live, where the screen, how many people is this meant for, where will they be, will participants have different roles, what is the