PP3 — You can do it!

Posted: November 5, 2019 Filed under: Uncategorized

When we were first given the parameters of this project, I was immediately anxious because I felt it was outside my skill and capability. This class has definitely been a crash course in Isadora, and I’m still not the most proficient at using the program. The assignment was to spend no more than 8 hours using Isadora to put together an experience that revealed a mystery; bonus points were given for making a group activity that organized people in the space. The idea of mystery was really confusing to me because the only things that immediately came to mind were TouchOSC and Kinect tools that I don’t know how to use independently yet. However, Alex assured me that he would help me learn the components I was unsure of, and he was very helpful in showing me some actors I could use to explore my idea, which was to play a game of “Telephone” with the class.

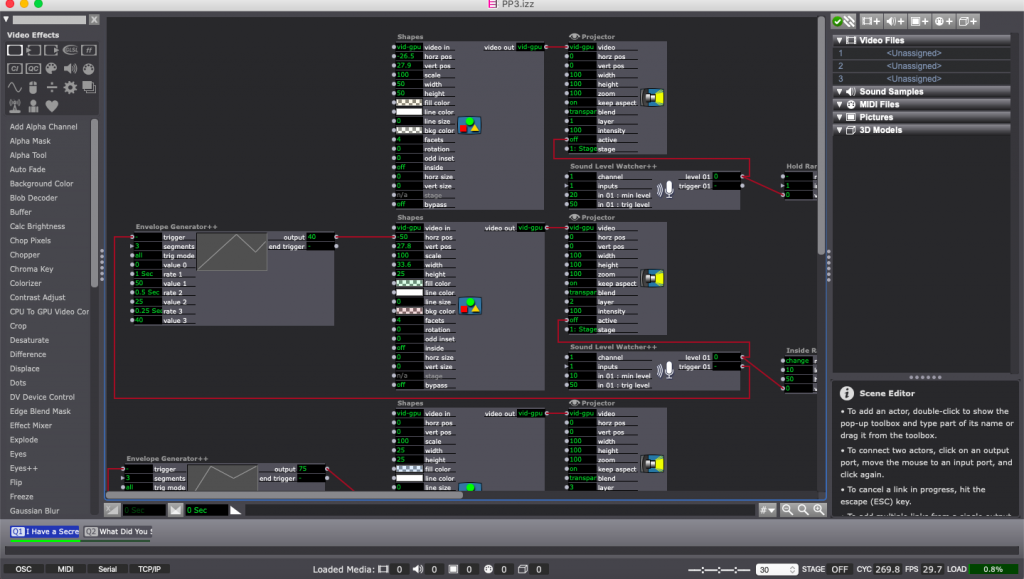

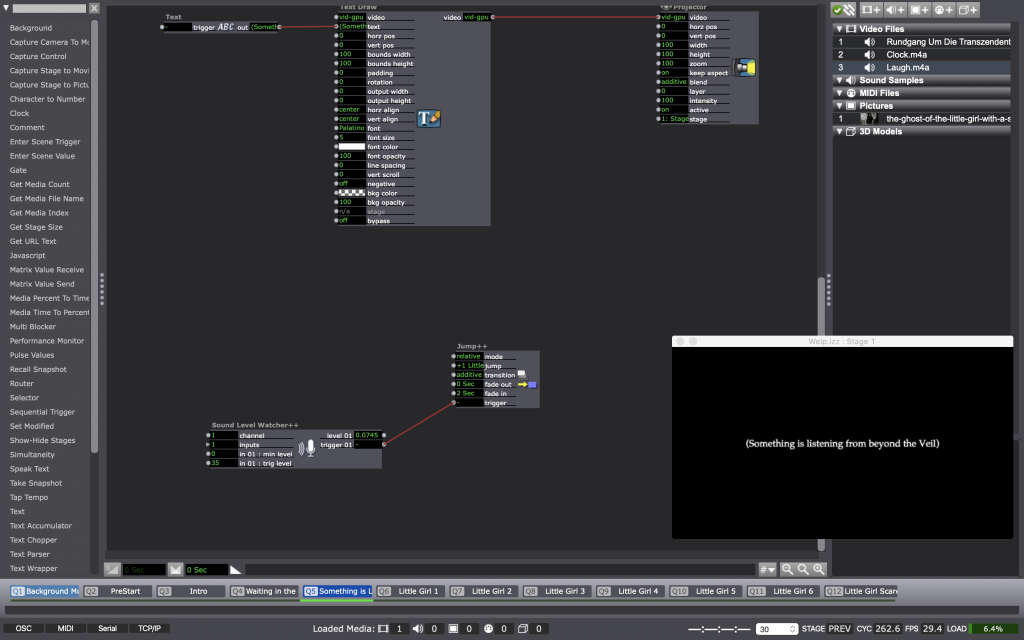

After having the class line up one after the other in front of the microphone, I used the mic to record the “secret” while it was passed down the line. I had played with locomoting some shapes that reacted to the levels of the voices as they spoke into the mic. Initially, I had wanted to use the sound frequency bands actor in addition to the sound level watcher actor, but when I started working on the project at home, the frequency bands would not output any data. Unbeknownst to the participants, I was recording the “secret” as it passed down the telephone so that at the end, I could “reveal” the mystery of what the initial message was and what it became as it went from person to person.

It was really exciting to hear the playback and listen to the initial message be remixed, translated into Chinese and then back to English, and finally arrive at its last iteration. It was fun for the class to hear their voices and see that the flashing, moving shapes on screen actually meant something and were not just arbitrary.

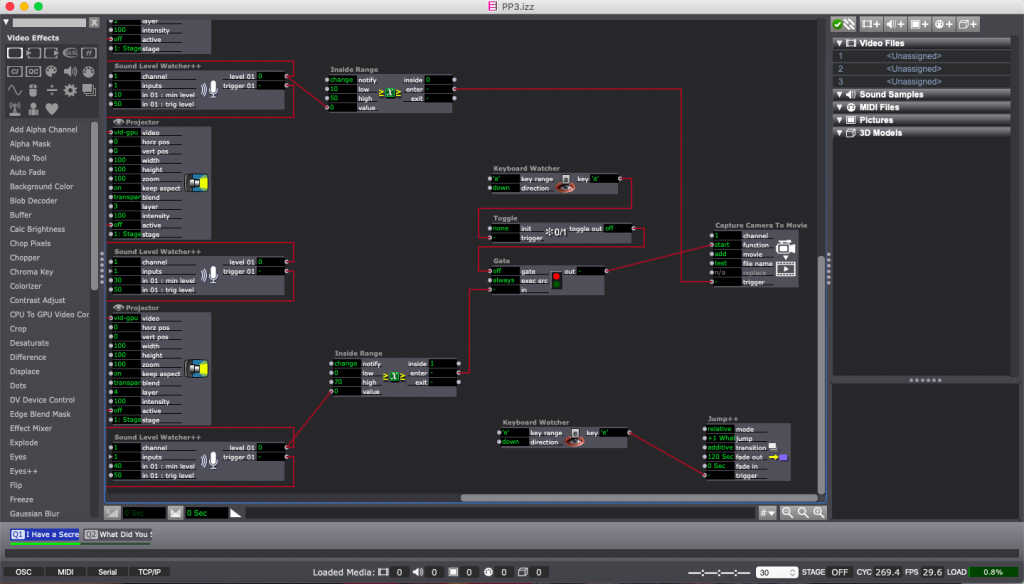

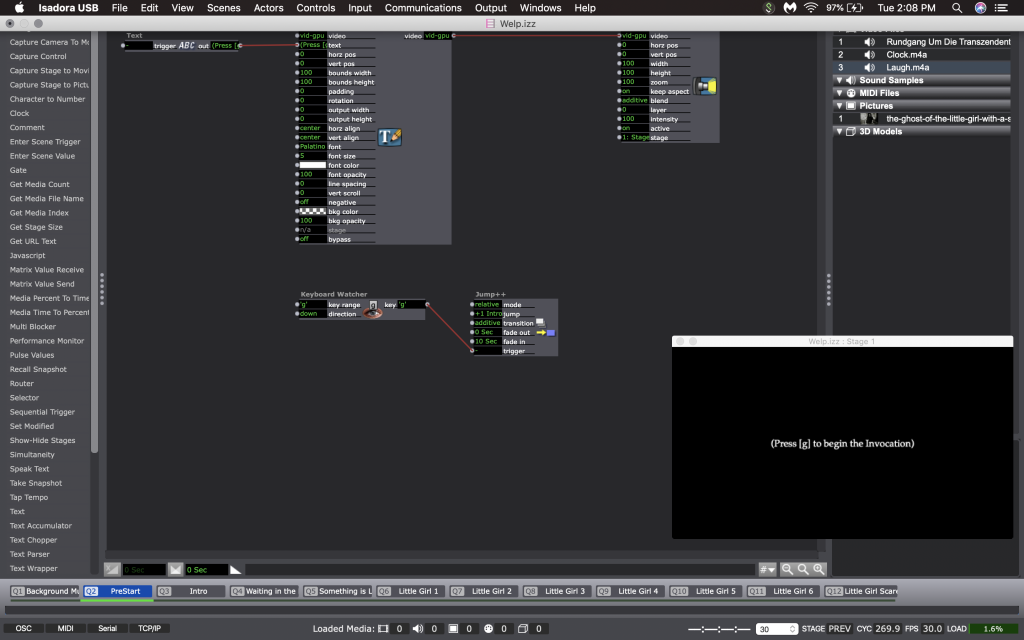

Overall, the most complicated thing about PP3 was learning how to program the camera to start and stop recording the audio. To do this I used a camera to movie actor and played with automating the trigger to start, switch to stop, and then stop. Ultimately, I ended up setting up a gate and a toggle to control the flow to the start/stop trigger, and using a keyboard watcher that I manually controlled to open and close the gate so that the triggers could pass through.
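The gate-and-toggle arrangement can be sketched outside Isadora. The Python below is only an illustration of the idea, not the actual patch: the `Toggle` and `Gate` classes, the `key_pressed` helper, and the `"start"`/`"stop"` strings are hypothetical stand-ins for the actors and triggers described above.

```python
class Toggle:
    """Flips between off and on each time it is triggered."""

    def __init__(self):
        self.on = False

    def trigger(self):
        self.on = not self.on
        return self.on


class Gate:
    """Passes a value to its callback only while the gate is open."""

    def __init__(self, callback):
        self.is_open = False
        self.callback = callback

    def send(self, value):
        if self.is_open:
            self.callback(value)
            return True
        return False


# Stand-in for the recorder: it just remembers which triggers reached it.
recorder_events = []
gate = Gate(recorder_events.append)
toggle = Toggle()


def key_pressed():
    """Assumed keyboard handler: each key press flips the gate open or closed."""
    gate.is_open = toggle.trigger()


gate.send("start")  # gate is closed, so this trigger is blocked
key_pressed()       # open the gate
gate.send("start")  # reaches the recorder
gate.send("stop")   # reaches the recorder
key_pressed()       # close the gate again
gate.send("start")  # blocked
```

In this sketch only the two triggers sent while the gate was open reach the recorder, which mirrors the manual open/close control described above.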

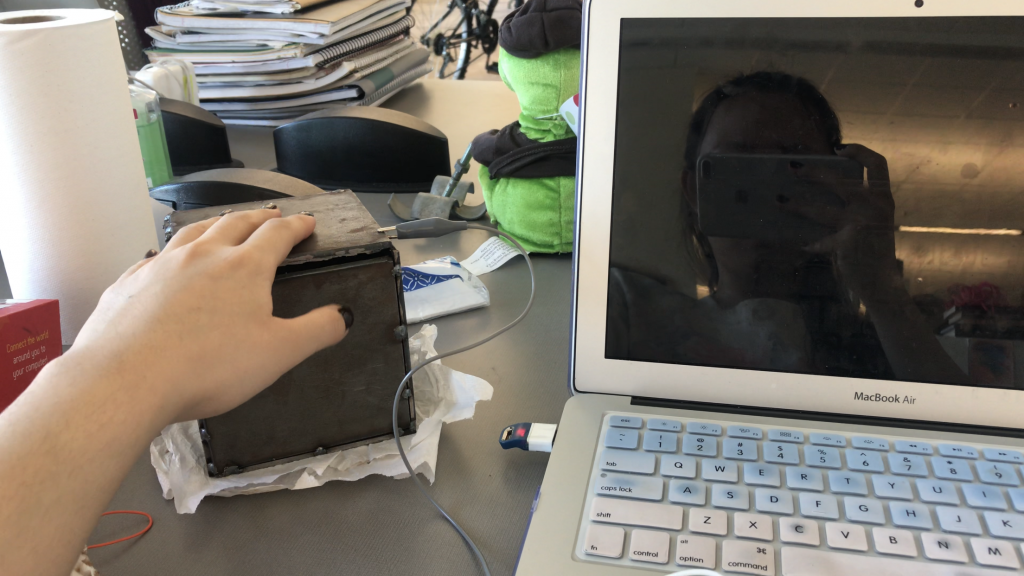

In spite of my initial concerns, I found this project to be a nice challenge. I had even been exploring ways to integrate a Makey Makey into the presentation, but realized I don’t have enough USB ports on my personal computer to support an Isadora USB key, the USB for the mic, and a Makey Makey all at once. This was definitely a project shaped by the resources I had available to me, in terms of both skill and computer systems.

The shapes actors, along with the sound level watchers and envelope generators that controlled them. There were 4 in total.

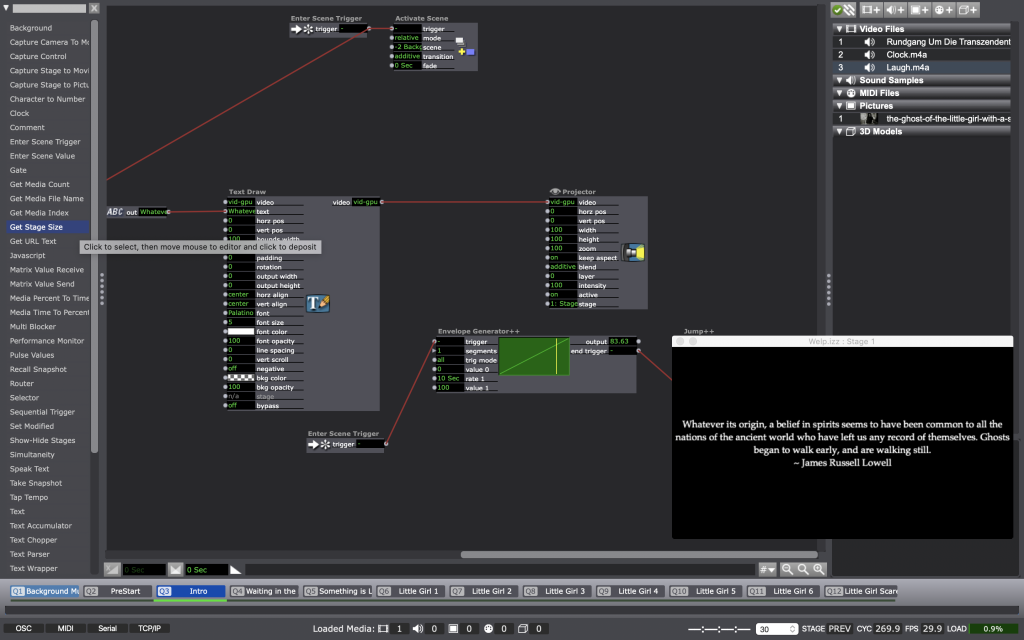

This data fed into a camera to movie actor that recorded all the sound that was picked up by the mic.

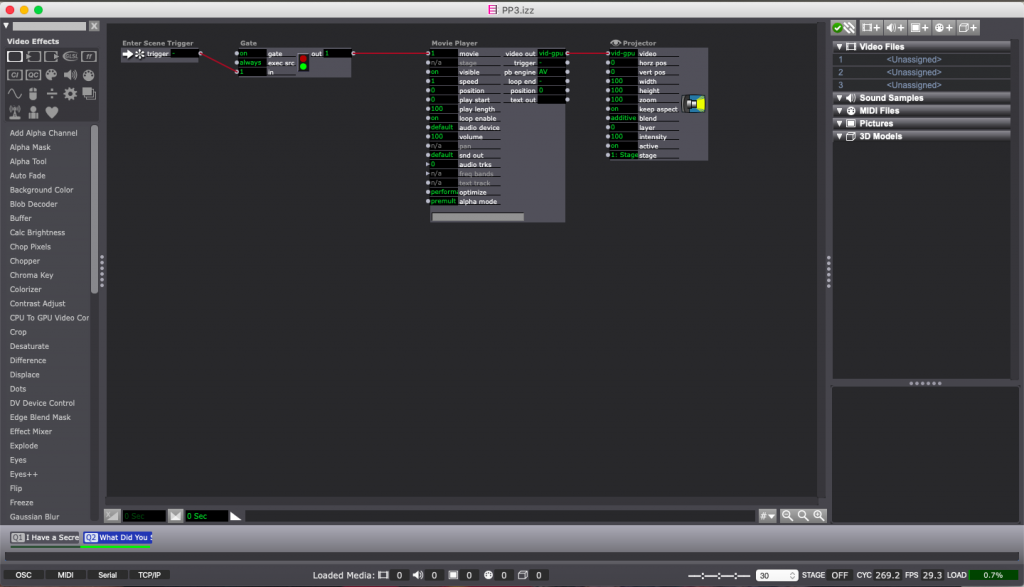

This enter scene trigger sent a value of 2 into the movie player, allowing the projector to play the sound recorded in the previous scene.

PP3 — composer

Posted: November 4, 2019 Filed under: Uncategorized

In my pressure project 3, I used Makey Makey as an intermediary to connect people and Isadora. My idea for this project was to create a music keyboard that people could play randomly to compose their own musical work. I believe that the process of composition is a mystery: people have no idea what kind of music they will produce until the end. I created an Isadora interface with a piano keyboard and three mystery buttons, which triggered four background music tracks and some funny human, robot, and animal voices. Then I drew the same interface on paper and connected it to Isadora through Makey Makey. At first, I asked people to choose one of my colored marker pens and draw on the paper, which meant they controlled the button of that color. Because Makey Makey works when people are connected, I also asked them to touch each other without using their hands. I thought that might be fun. Then the journey started. I made instructions to guide people to play the piano first. Then the guidelines introduced them to the other funny and weird buttons so they could explore mixing all the sounds and music. People enjoyed playing it. They tended to play along with the background music rather than create something new. I feel this was because my instructions advanced too fast for them to read. They also felt awkward about the touching part because of the limited space.

PP3

Posted: October 29, 2019 Filed under: Uncategorized

Pressure project 3 is about revealing a mystery during the interaction with audience member(s). I thought for a long time about what mystery I wanted to reveal but ended up with nothing. Then I noticed the metal box from my welding exercise in art sculpture class, which has a big gap that I failed to fill in. I thought this gap could really mean something, since people can never open the box and can never see the inside clearly just by looking through the gap. The metal box can also connect to a Makey Makey and become a button that triggers the system. So, I decided to make a “Mystery Box”.

This is how I connected the box to my computer. As soon as people touch the box, they can see what is “actually” happening inside it: I used Isadora to fake an inside world and convey it through the computer interface.

At the very beginning, I didn’t plan to make this project mean. But while I was creating it, I saw news about a Korean pop artist who died by suicide at her home. She had faced horrible internet violence before she died; she could not bear it anymore and chose to leave the world. I was shocked by the news, since I knew her pretty well and she was only 25 years old. So I decided to add a small educational purpose: to let people feel what it is like to be judged badly for no reason, and to think about what we can do when we are in that situation.

I used live capture so that users could see their faces on the screen while the bad words kept flying in front of them. They had a way to escape this scene: saying no. But I lowered the captured volume so that users really needed to shout “no” to reach the level required to escape. I wanted the users to get angry and truly say no to the violence.

It was a really great experience to see my classmates all trying together to increase the volume. But in one scene, where I had 3 projectors showing the live capture on the screen, I typed the word “Ugly” but changed the font, so the word turned into some other characters that I don’t recognize. I should be careful about this, since I don’t know the exact meaning of those characters and it may affect people’s experience if they can read them.

PP3 – Haunted Isadora

Posted: October 27, 2019 Filed under: Uncategorized

For PP3, I attempted to create the experience of talking to spirits through a computer interface. To do this, I used a simple sound level controller to move between scenes in Isadora.

When a user begins the program, the Isadora patch randomly generates wait times that the user must wait through before Isadora begins listening. Once the user speaks (or, to my dismay, when the background music plays… oops) the scenes progress and the “little girl” asks them to play hide and seek.

After a moment or two, a jump-scare pops up and the little girl has found you. Overall I wasn’t super proud of the project. It could have had more substance (the original plan was to have multiple “entities” to talk to… but that didn’t come together). I learned a bit about background scene triggers and text actors, so I suppose it wasn’t a total flub.

Pressure Project 3: Magic Words

Posted: October 21, 2019 Filed under: Uncategorized

For this pressure project I wanted to move away from Isadora since I prefer text-based programming and it is just something that I am more comfortable doing. I also experienced frequent crashes and glitches with Isadora and I felt I would have a better experience if I used different technology.

For this experience, we had to reveal a mystery in under 3 minutes. I spent some time searching the web for cool APIs and frameworks that could help me achieve this task. Something that ended up inspiring me was Google’s Speak to Go experiment with the Web Speech API.

You can check it out here: https://speaktogo.withgoogle.com/

I found this little experience very amusing and I wondered how they did the audio processing. That’s how I stumbled upon the Web Speech API and the initial idea for the pressure project was conceived.

I had initially planned on using 3D models with three.js that would reveal items contained inside them. The 3D models would be wrapped presents, and they would be triggered by a magic word. Then they would open/explode and reveal a mystery inside. However, I ran into a lot of issues with loading the 3D models and with CORS restrictions, and I decided that I did not have enough time to accomplish what I had originally intended.

So, in the end I decided on using basic 3D objects that are included in the three.js standard library and having them do certain actions when triggered, with the mystery being the specific words that would cause an action and what action they caused (since some are rather subtle).

You can get the source code here: https://drive.google.com/file/d/1RbOzD3Ktrbp2VpqDNj6SQSWb83hOEA2U/view?usp=sharing
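As a rough illustration of the trigger logic (not the project’s actual source, which runs in the browser with the Web Speech API and three.js), the Python sketch below maps words in a recognized transcript to named actions. The magic words, the action names, and the `actions_for` function are all invented for this example.

```python
import re

# Hypothetical magic words and the actions they trigger.
MAGIC_WORDS = {
    "abracadabra": "spin",
    "shazam": "change_color",
    "please": "grow",
}


def actions_for(transcript):
    """Return the actions triggered by a transcript, in spoken order."""
    # Lowercase the text and keep only word-like runs, so punctuation
    # and capitalization from the recognizer do not block a match.
    words = re.findall(r"[a-z']+", transcript.lower())
    return [MAGIC_WORDS[word] for word in words if word in MAGIC_WORDS]
```

A call such as `actions_for("Shazam! Oh, please.")` would return the actions for “shazam” and “please”, while a transcript with no magic words returns an empty list, so nothing on screen would change.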

Pressure Project 2: Audio Story

Posted: October 21, 2019 Filed under: Uncategorized

Prompt

This Pressure Project was originally given to me by my professor, Aisling Kelliher:

Topic – narrative sound and music

Select a story of strong cultural significance. For example this can mean an epic story (e.g. The Odyssey), a fairytale (e.g. Red Riding Hood), an urban legend (e.g. The Kidney Heist) or a story that has particular cultural meaning to you (e.g. WWII for me, Cuchulainn for Kelliher).

Tell us that story using music and audio as the primary media. You can use just audio or combine with images/movies if you like. You can use audio from your own personal collections, from online resources or created by you (the same with any accompanying visuals). You should aim to tell your story in under a minute.

You have 5 hours to work on this project.

Process

I interpreted “music and audio as the primary media” to mean that the audio can stand on its own or change the meaning of the visuals from what they would mean on their own.

I also was working on this project concurrently with a project for my Storytelling for Design class in which we were required to make a 30 second animation describing a how-to process. The thoughts and techniques employed in this project were directly influenced by hours of work on that project.

After the critique of that project, I had a very good sense for timing, sound, and creating related meaning from the composition of unrelated elements.

I blocked out five hours of time for my project and began by practicing on the narrative of Little Red Riding Hood. I used the sounds available from soundbible.com, a resource introduced in the previously mentioned Design class, to try to recreate this narrative from a straight-ahead viewpoint. After starting on my Little Red Riding Hood prototype, I found that I spent over thirty seconds just foreshadowing the wolf and that this story wouldn’t do.

I then moved on to other wolf-related stories, including a prototype of The Three Little Pigs. My work with these animal noises brought me close to recent life experiences. I had 2 friends test the story and then refined it, completing the assignment.

Result

The resulting recording (included below) told the story of a farmer defending his sheep from a wolf using only sound effects, no dialogue.

Critique

The most intriguing part of this project was not the work itself, but rather the final context in which the work existed on presentation day. Most of the other original stories were about large cultural issues. I presented last. While presenting the piece, I was struck by how much priming affects perception. From the previous examples, the class was primed for something large and culturally controversial, or something making a bold statement.

My piece was simple and different as it used no visuals and no words to tell the story. This simplicity was pretty much lost to a group that was primed for something large and controversial. I found the critique unsuccessful in that I did not receive feedback on the work I had created so much as the work I had not created.

From this exercise, I learned the importance of allowing time to contextualize your work and reset the mood when you are presenting a unique piece among a series of unique pieces. Our mind naturally desires to make connections between unconnected things; this is the root of creativity itself. So in the context of coursework, conference presentations, and indeed every interaction in an era where everything exists within a larger frame, it is vital to clearly distinguish work that is meant to stand apart. This can often be achieved through means as simple as a Title and an Introduction. Giving some understanding of whose work it is, why they created it, and what they desired to learn through it gives much better context to critiquers and helps keep conversation focused and centered for the best learning experience for everyone.

PP3 – Mysterious Riddles

Posted: October 21, 2019 Filed under: Uncategorized

For this pressure project we had to reveal a mystery within 3 minutes with the following caveats:

1. Create an experience for one or more participants

2. In this experience a mystery is revealed

3. You must use Isadora for some aspect of this experience

4. Your human/computer interface may (must?) NOT include a mouse or keyboard.

**Above is an attachment of my ideation**

Ideation

- Have users move around a space to use an alpha mask to reveal lines that lead to spaces where more movement is needed to maintain a visual / note. [3 Phases]
  - Use colors as the visual indicators of objects
    - White? Orange? Green? (What colors do people generally stray away from?)
  - What materials?
    - Balloons
    - Paper
    - Cubes / Discs
- Use camera movements to “wipe away” an image to reveal something underneath. Modes of revealing are referenced either literally or figuratively. [3 Phases]
  - Use movement to reveal the visual
    - How is the “wiping” initially introduced as the method of revealing?
      - Spinning shapes (?)
        - Spinning wiper blade
      - Real-world examples (?)
        - Wiper blade on a car windshield
      - Literal instructions (?)
        - Move your arms/body to wipe away the visual
    - The image / text underneath gives them their next interactive “mode” to reveal the next scene.
  - Use audio to reveal the scene
    - Top / Mid / Bottom splits (horizontal)
      - Frequency ranges
        - Tenor freq. – Top Band / Alto freq. – Mid Band / Bass freq. – Low Band

Process

- Create the webcam interactivity

To do this, there needs to be a webcam hooked up to Isadora. Once the interface was “live”, I used a Difference actor to detect the variations between frames and output them to a virtual stage. On Virt Stage 1, we can see this effect being used: the grey area is the dynamically recorded imagery, detected as the individual pixels that changed in the webcam image. On Virt Stage 2, we can see that I ran the visuals through a threshold, making them stand out as pure white. Through this pipeline, I then used a Calculate Brightness actor to measure the amount of white pixels (created by movement) on the screen. This number could then be base-lined and output to an Inside Range actor.

You might notice on Stage 1 (not virtual) that the grainy pieces along the edges of the imagery are less apparent. This is due to ‘grabbing’ the frame (Freeze actor) and regulating the change in imagery rather than having it be constantly examined. By putting this through an Effect Mixer actor, I was able to threshold the difference between the original image and the live frames. This provided a smoother measurement of the difference in frames, without disruption from stray pixels. Putting this data through a Smoother actor also suppressed arbitrary numbers that would ‘jump’ and disrupt the data stream.
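For readers who think in code, the pipeline above (difference, threshold, brightness measurement, smoothing, range check) can be approximated in a few lines. This is a minimal Python sketch under stated assumptions, not a description of how the Isadora actors work internally: frames are assumed to be small grids of grayscale values from 0 to 255, and the function names, the threshold of 30, and the smoothing factor are all hypothetical.

```python
def motion_amount(reference, live, threshold=30):
    """Percentage of pixels that differ from the reference frame.

    Rough equivalent of Difference -> Threshold -> Calculate Brightness:
    a pixel counts as 'white' when it changed by more than `threshold`.
    """
    changed = 0
    total = 0
    for ref_row, live_row in zip(reference, live):
        for ref_pixel, live_pixel in zip(ref_row, live_row):
            total += 1
            if abs(ref_pixel - live_pixel) > threshold:
                changed += 1
    return 100.0 * changed / total if total else 0.0


class Smoother:
    """Exponential smoothing, so one noisy reading cannot make the value jump."""

    def __init__(self, factor=0.8):
        self.factor = factor  # closer to 1.0 means slower, steadier output
        self.value = 0.0

    def update(self, sample):
        self.value = self.factor * self.value + (1.0 - self.factor) * sample
        return self.value


def inside_range(value, low, high):
    """True while the value sits within the chosen range."""
    return low <= value <= high
```

Comparing each live frame against a stored reference frame, rather than against the previous live frame, plays the role of the ‘grabbed’ frame described above.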

- Use the webcam interactivity as a base for the elucidation of visuals

After creating the webcam interactivity, I used the Inside Range actor as the basis for measuring how many times ‘movement’ was captured. This was done by having a trigger fire whenever the amount of white on the screen (from movement) was high enough. I used this to ‘build’ tension as a meter filled up to reveal text underneath. The triggers were sent to a Counter actor, and then to a Shapes actor to continuously update the width of the shape. To make sure that the participants kept moving, the meter would recede back toward empty, driven by a Pulse Generator actor. This pulse subtracted from the counter rather than adding to it. On top of this, there was a beeping sound that increased in amplitude and playback speed if it was continuously triggered. This extra bit of feedback made the audience more aware that what they were doing was having an effect.
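The fill-and-recede behaviour of the meter can be sketched as a small counter. The Python below is an illustration only; the `Meter` class, its method names, and the goal value are assumptions made for this example and do not come from the patch.

```python
class Meter:
    """A counter that motion triggers fill up and a steady pulse drains."""

    def __init__(self, goal=10):
        self.goal = goal   # count needed to reveal the text (assumed value)
        self.count = 0

    def on_motion_trigger(self):
        """Called each time enough movement is detected."""
        self.count = min(self.goal, self.count + 1)

    def on_pulse(self):
        """Called on every pulse: subtracts, so the meter recedes."""
        self.count = max(0, self.count - 1)

    @property
    def width(self):
        """Width of the bar as a percentage of the full meter."""
        return 100.0 * self.count / self.goal

    @property
    def full(self):
        return self.count >= self.goal
```

Raising the pulse rate relative to the trigger rate makes the meter harder to fill, which matches the way the difficulty was increased in the combined phase.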

- Use the motion data to influence the experience

Once the pixel threshold was reached, the experience would jump to another scene to reveal the answer to the riddle. I didn’t give the user a chance to try to answer the riddle, since I think this would have pushed the experience over the 3-minute mark. It came in 3 phases: Movement, Audio, Movement & Audio.

For the Audio version, I used the microphone of the webcam and the same ‘building’ shape technique as the movement scene.

For the dual combination, I increased the number of times the Pulse Generator triggered the subtraction from the counter, but made sure that both the sound and the movement were being accounted for. This made the task more difficult to complete if someone was only moving or only making noise. Requiring both demanded a deeper level of interactivity than having just one of them be enough to complete the task.

For those who are interested (and for my own reference) here’s the list of actors that I used within the patch:

- Picture Player

- Video In / Audio In Watcher

- Freeze

- Threshold

- Max Value Hold

- Calculate Brightness

- Calculator

- Effect mixer

- Background Color

- Alpha Mask

- Gaussian Blur

- Text Draw

- Text Formatter

- Dots

- Jump++

- Projector

- Enter Scene Trigger

- Trigger Delay

- Enter Scene Value

- Pulse Generator

- Counter

- Inside Range

- Shapes

Outcome

I think that I would have changed this experience to offer more of a ‘choice’ once the user filled up the meter. Then again, making them re-do the experience if they guessed wrong might discourage them from continuing in the first place (my subjects might get tired). I could just play a glaring noise if they guessed wrong and allow them to continue the experience. I also wanted the choice to be made through an object or a body. Things to think about for next time!

-Taylor

Let’s Play iSpy – PP3

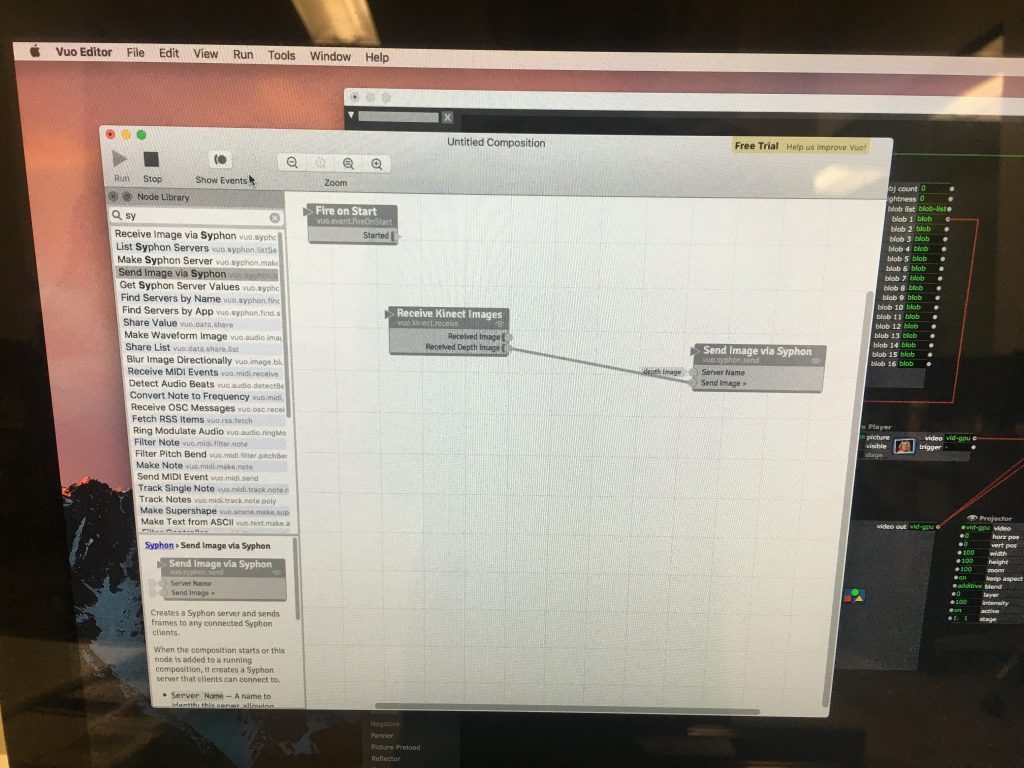

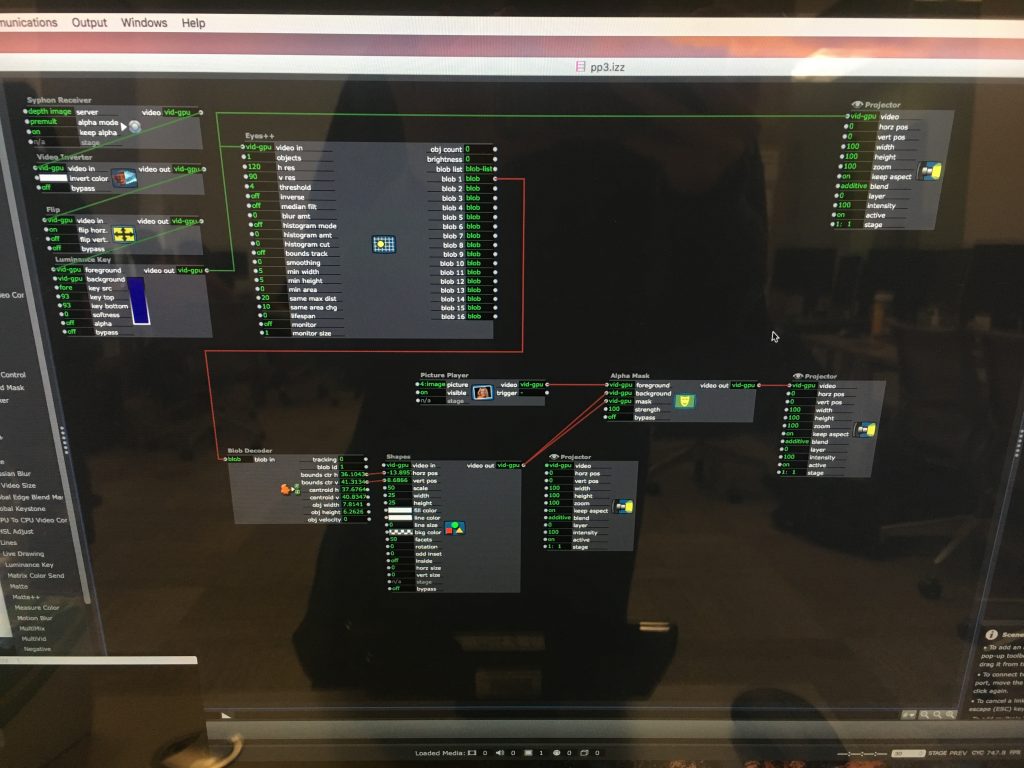

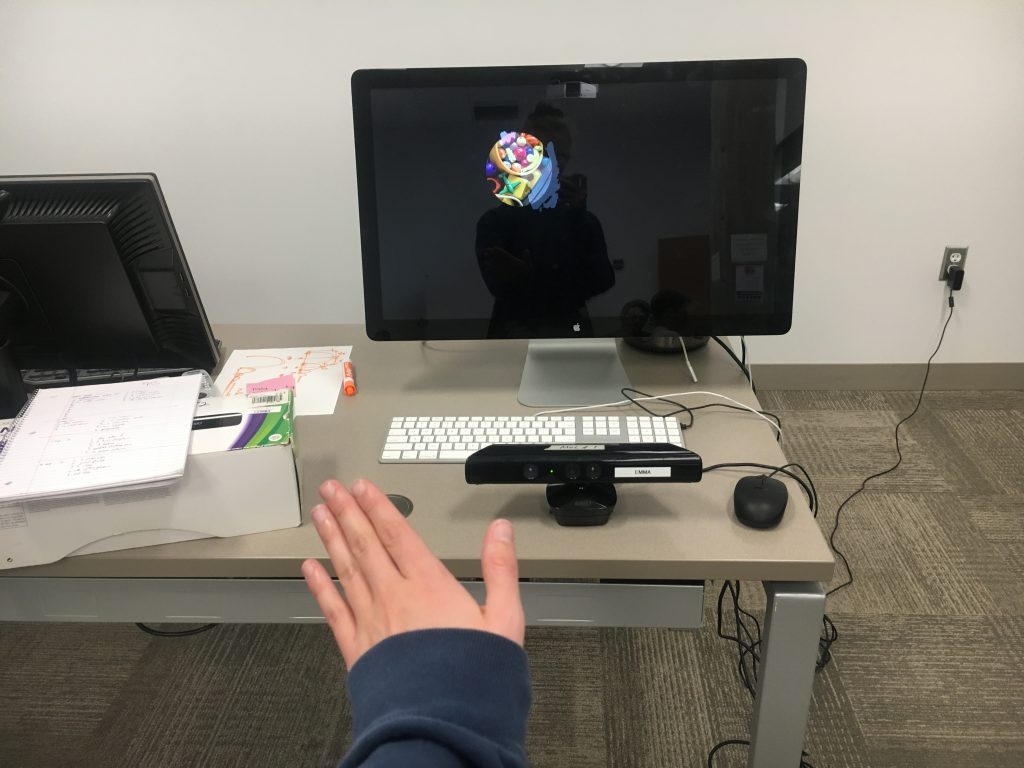

Posted: October 17, 2019 Filed under: Uncategorized

I created an interactive system to play iSpy with the Xbox Kinect. My idea was to have individuals play iSpy using their hand to move a spotlight and try to discover different objects.

I used the Kinect to sense the depth of the participant’s hand and then sent that data to Isadora. In Isadora, I was able to program the system using actors like syphon receiver, luminance key, and eyes++. A syphon receiver is what receives the data shared between programs. To get the data from the Kinect into Isadora, I used a syphon receiver fed from a program called Vuo, which read the Kinect data and could send it out to other programs.

I linked a shape to the hand so it followed the coordinates of the hand movement. Then I put an Alpha mask over the shape to reveal only a spotlight on top of the iSpy gameboard background.
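The spotlight idea can be pictured as a mask that is opaque everywhere except in a circle around the hand. The Python below is a minimal sketch of that idea on a tiny grid, assuming the tracked hand position arrives as pixel coordinates; `spotlight_mask` and `apply_mask` are hypothetical helpers, not Isadora actors.

```python
def spotlight_mask(width, height, center_x, center_y, radius):
    """Build a grid that is 1 inside the circular spotlight and 0 outside."""
    return [
        [
            1 if (x - center_x) ** 2 + (y - center_y) ** 2 <= radius ** 2 else 0
            for x in range(width)
        ]
        for y in range(height)
    ]


def apply_mask(image, mask, hidden=0):
    """Keep image pixels where the mask is 1; replace the rest with `hidden`."""
    return [
        [pixel if visible else hidden for pixel, visible in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, mask)
    ]
```

Rebuilding the mask each frame from the latest hand coordinates would make the visible circle follow the hand across the gameboard.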

The class found my game really fun and enjoyed the level of interactivity. They wished I had included music to fill the awkward silence while the participant searched intently for the given items. I mediated the game by giving them objects to find and offering clues if they grew frustrated.

We also talked a lot about how to lose the ‘ghost hand’ behind the spotlight. I had hooked up a projector to the luminance key, which made the ghost hand visible. By deactivating the projector actor, I could lose the white-out effect and better see the image in the spotlight.

How to make a button only fire once

Posted: October 2, 2019 Filed under: Uncategorized

It was much simpler than I thought!

Here is an easy solution:

Video explanation: Single Fire Button Example

Image of patch:

PP2 – Straight Talk

Posted: October 1, 2019 Filed under: Uncategorized

For pressure project 2, we were challenged to use audio to tell a narrative in one minute. My project attempted to tell the story of the AIDS crisis during the 1980s, specifically how straight anxiety about AIDS (or lack of anxiety, in the case of the Reagan administration) related to the death toll the crisis took on the LGBTQ+ community. The main idea was to use entirely straight voices (other than my own) to show the way straight society looked at AIDS during the crisis.

The tone I took was largely critical as I selected news clips that highlighted some of the ridiculous fears that straight people had about the disease (“can my dog catch AIDS from a bone my neighbor handled?”). These sound clips were then edited together and played over a fairly unsettling atmospheric track in order to create a feeling of tension.

The next component consisted of me reading yearly AIDS death tolls in the U.S. during the 1980s and early 1990s. I read them in a droning, mechanical fashion while the audio played. Initially, this reading was going to be recorded and edited together with the audio clips, but upon listening to my recording (which took over an hour of my 5-hour time constraint), I opted to read it live. I honestly didn’t have a plan for how I would read it because the decision was so last-minute, so I largely improvised. As such, the live performance portion could definitely be polished and refined into something more formal than someone sitting behind a computer screen.

The final component was a closing critique of the Reagan administration’s reaction to AIDS. At the end of the performance, I stopped reading the death totals and an audio clip played of reporter Lester Kinsolving asking Press Secretary Larry Speakes what the president was doing about the AIDS crisis. Mr. Speakes responds with a number of jokes, which the other reporters can be heard laughing at, and the clip ends with Mr. Kinsolving asking if “the White House looks at this as a great joke?”

Second performance, perhaps not as good as the first

Overall I think the project was successful in eliciting an emotional response from its listeners. There was a moment of emotional silence after the first run-through that I didn’t expect. Perhaps this silence was reverence for those that died or everyone processing all the sound I had just thrown at them, but those few seconds made me proud that I could tell this tragic story and honor those that suffered through it.