PP1 Documentation – Harmanjit

Posted: September 12, 2019 Filed under: Uncategorized

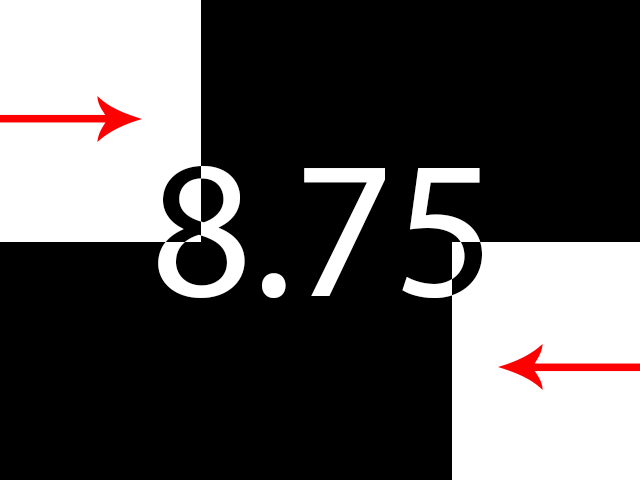

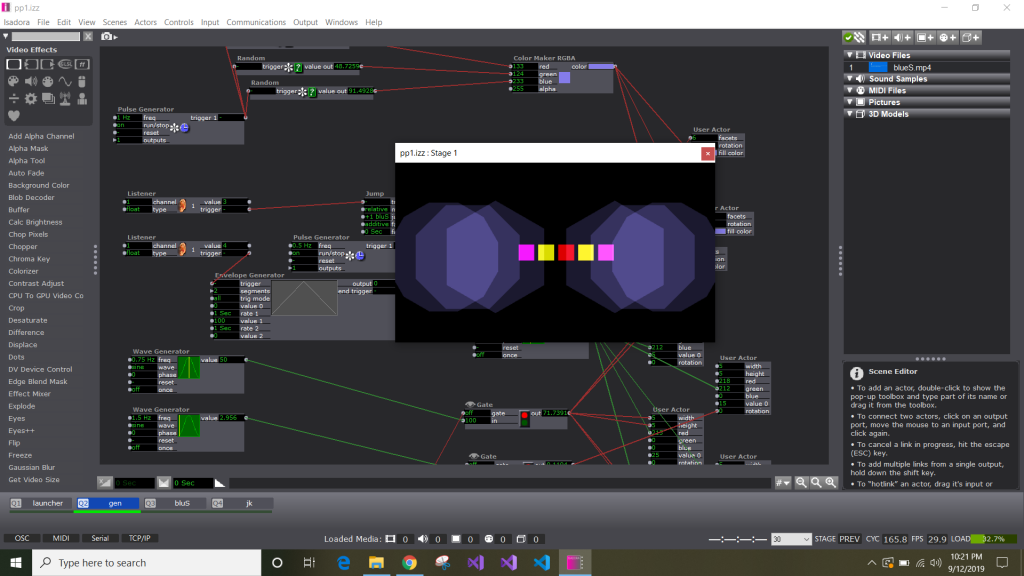

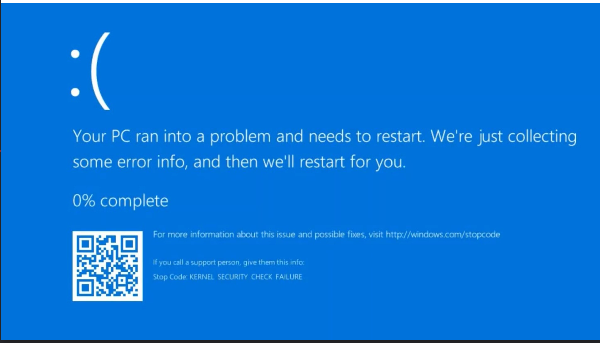

For this pressure project I decided to go in a different direction. I had an idea for how to hit all of the project's achievements: making someone laugh, responding to broadcasters, and maintaining surprise. The idea was to have a fairly standard self-generating patch that would incrementally respond to network broadcasters. When it received a value of 3, it would display a Windows blue screen, suggesting that the program had crashed, only for it to be revealed as a joke a few seconds later.

Unfortunately, there was a problem with receiving the values from the network broadcasters and it didn’t go as planned.

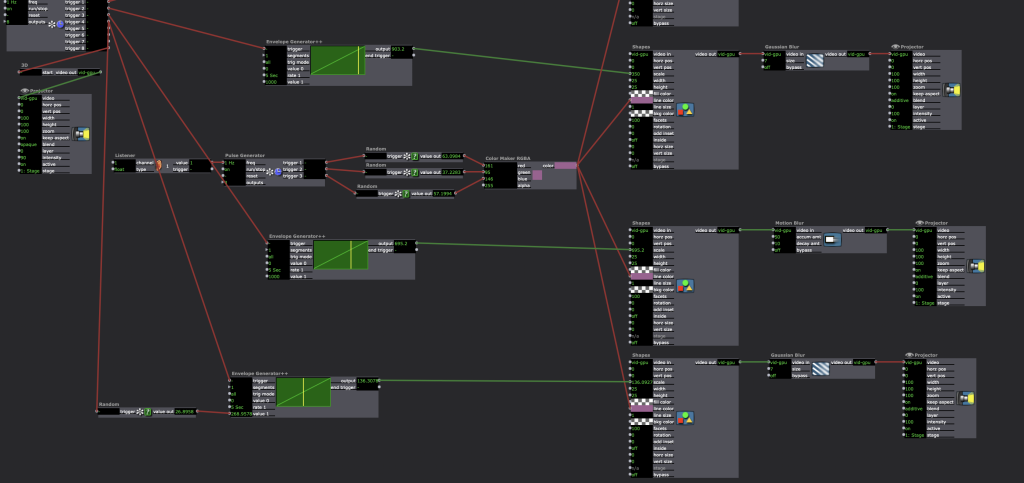

I used random number generators for the color generation and the horizontal movement. The blue screen component was a separate scene that was accessed via a Listener -> Inside Range -> Jump chain. I also used toggle actors and gates to turn different effects on and off in response to network inputs.
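The logic of that chain can be sketched outside Isadora. This is only a rough Python illustration, not the actual patch: the scene names (`main`, `blue_screen`), the function names, and the color and position ranges are assumptions made for the example. Only the trigger value of 3 comes from the description above.

```python
import random

# From the post: a received value of 3 triggers the fake crash screen.
BLUE_SCREEN_VALUE = 3


def inside_range(value, low, high):
    """Mimic an Inside Range check: True when low <= value <= high."""
    return low <= value <= high


def next_scene(received_value, current_scene="main"):
    """Return the scene to show after a broadcast value arrives.

    Scene names are hypothetical; they stand in for the patch's scenes.
    """
    if inside_range(received_value, BLUE_SCREEN_VALUE, BLUE_SCREEN_VALUE):
        return "blue_screen"
    return current_scene


def random_visuals(rng):
    """Pick a random RGB color and a horizontal position.

    The 0-255 and -100..100 ranges are assumptions for illustration.
    """
    color = (rng.randint(0, 255), rng.randint(0, 255), rng.randint(0, 255))
    horizontal = rng.uniform(-100.0, 100.0)
    return color, horizontal
```

Calling `next_scene(3)` jumps to the blue screen, while any other value leaves the current scene running with its random color and movement.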

PP1 Documentation – Andi

Posted: September 12, 2019 Filed under: Uncategorized

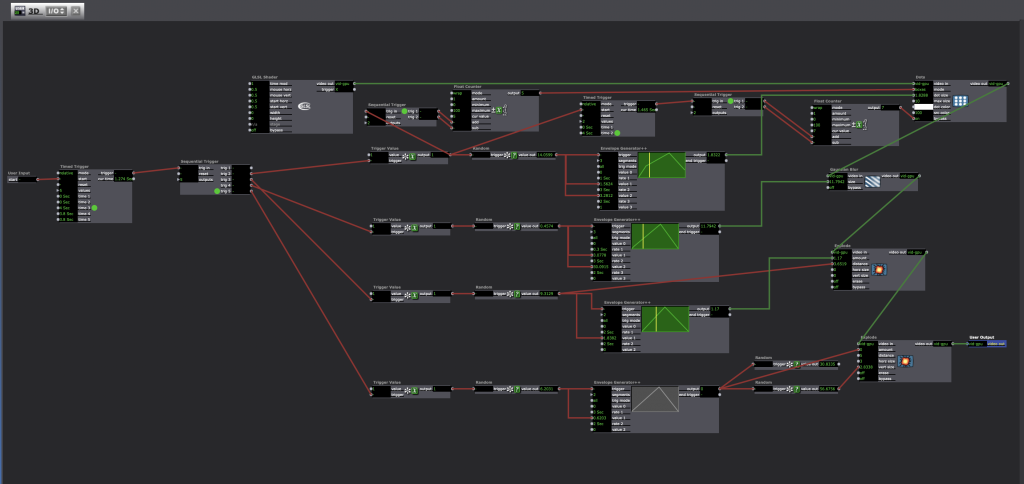

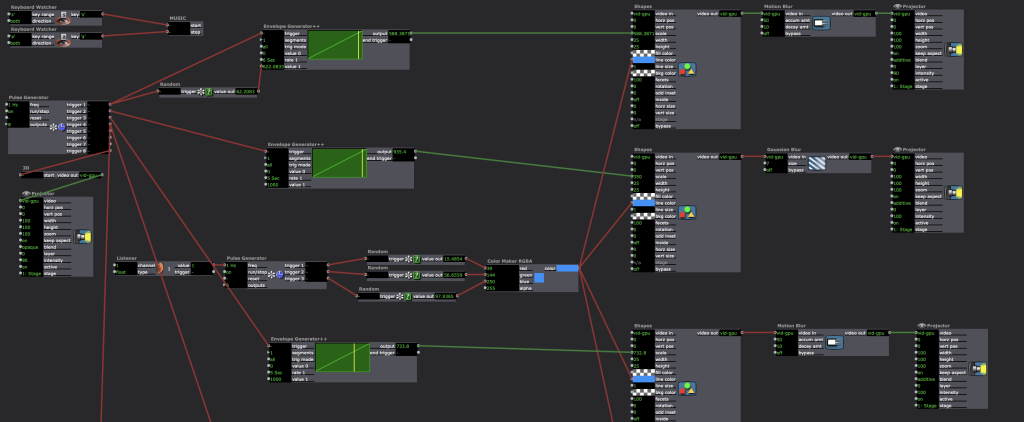

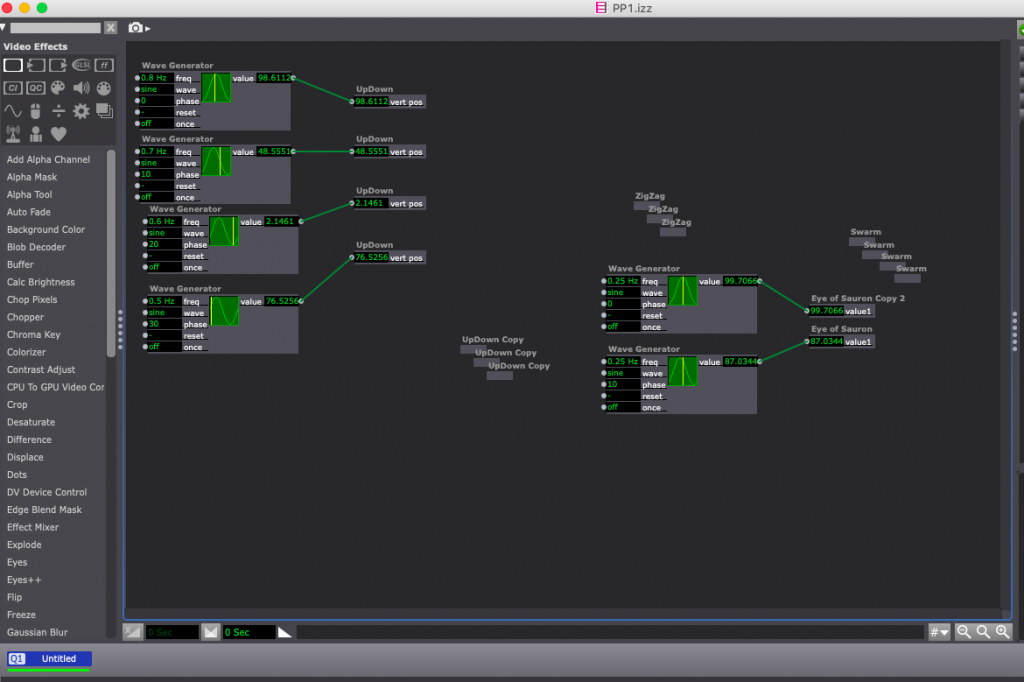

When I started my pressure project, I quickly looked through the “Shadertoy” website for a 3D model that was simple but colorful, and I found “Phase Ripple” by Tdhooper (https://www.shadertoy.com/view/ttjSR3). It made me feel a heartbeat. As you can see in my screenshots, I added effects such as Dots, Gaussian Blur, and two different Explode effects to this 3D model and used some random actors to control them. After watching it several times, I felt that combining the 3D model with 2D shapes would work well. So I added four circle shapes with two effects and used random actors to change the colors and scales of the shapes. Finally, I used a Keyboard Watcher actor to play and stop the music. Through this experience of exploring Isadora, I have become more interested in using random actors. At the beginning, I wanted to schedule every change at a specific time, but because of the time limit I eventually chose to use random actors. To my surprise, they gave me more unexpected effects than I had anticipated!

PP1- Tay

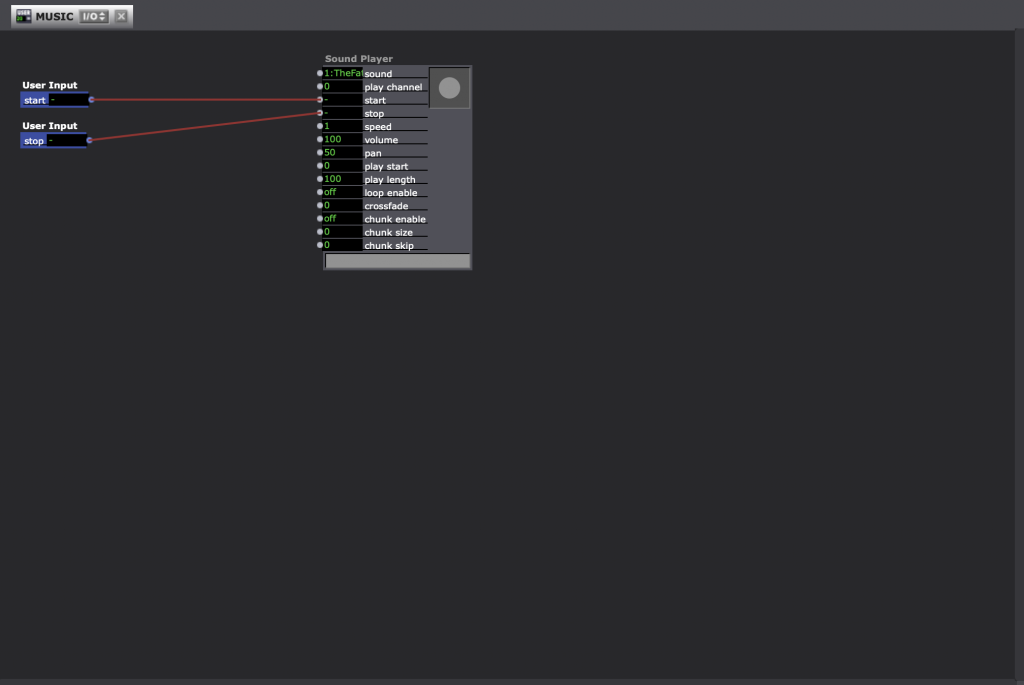

Posted: September 12, 2019 Filed under: Uncategorized

For this pressure project we had 4 hours and some “goals” to attempt to achieve:

1. Someone laughs

2. Maintain surprise for more than 10sec

3. Maintain surprise for more than 30sec

4. Picks up a number between ‘1’ and ‘5’ and reacts

Ideation

Initially for my Pressure Project I decided on 3 different scenarios:

- Have visuals move for 10sec / transition / 10sec / transition / etc.

–The experience would eventually repeat itself. The visuals would be a combination of moving lines, moving shapes, then a combination of both.

- Have a webcam track movements of an individual.

–The user would move around to activate the experience. The color of the screen would then be tied to how much the user was moving within the space.

RED = No movement

ORANGE/YELLOW = Little movement

GREEN = High amount of movement

- Have an interactive game [This is the one I decided upon. Though it strayed from my initial idea.]

–Have shapes that begin to shrink with a timer in the middle. The user is also able to control a circle and move it around with the mouse.

–Once the timer runs out have music begin to play.

–Have a screen that says “Move your body to draw and fill in the space!”

–If they fill the canvas: screen transitions to say “You’re the best!”

–If they don’t fill the canvas: screen transitions to say “Move them hips!” // and repeat the experience.
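The ending decision in the last two steps can be sketched in a few lines of Python. This is only an illustration of the idea, not how the Isadora patch works: the canvas is modeled as a hypothetical grid of booleans, and the 0.9 "filled" threshold and the function names are assumptions. Only the two messages come from the plan above.

```python
def fill_fraction(canvas):
    """Return the fraction of cells in a 2D grid of booleans that were drawn on."""
    cells = [cell for row in canvas for cell in row]
    if not cells:
        return 0.0
    return sum(cells) / len(cells)


def ending_message(canvas, threshold=0.9):
    """Choose the closing screen text.

    The threshold is an assumed cutoff for "the canvas is filled".
    """
    if fill_fraction(canvas) >= threshold:
        return "You're the best!"
    # Otherwise the experience would repeat after showing this message.
    return "Move them hips!"
```

A fully drawn grid returns the congratulation, and a mostly empty one returns the prompt to keep moving.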

I chose to go with my 3rd idea. Below is a visual representation from my initial write-up of what I wanted the experience to do. (also available in the attached word document)

The way I spent my time for this pressure project was as follows:

Hour 1: Ideation of the different scenarios

Hour 2: Setting up the different scenes

Hour 3: Asking for help on ‘actors’ I didn’t know existed or how to do certain things within Isadora. (Thank you Oded!)

Hour 4: Playing, debugging, watching, fixing.

Process:

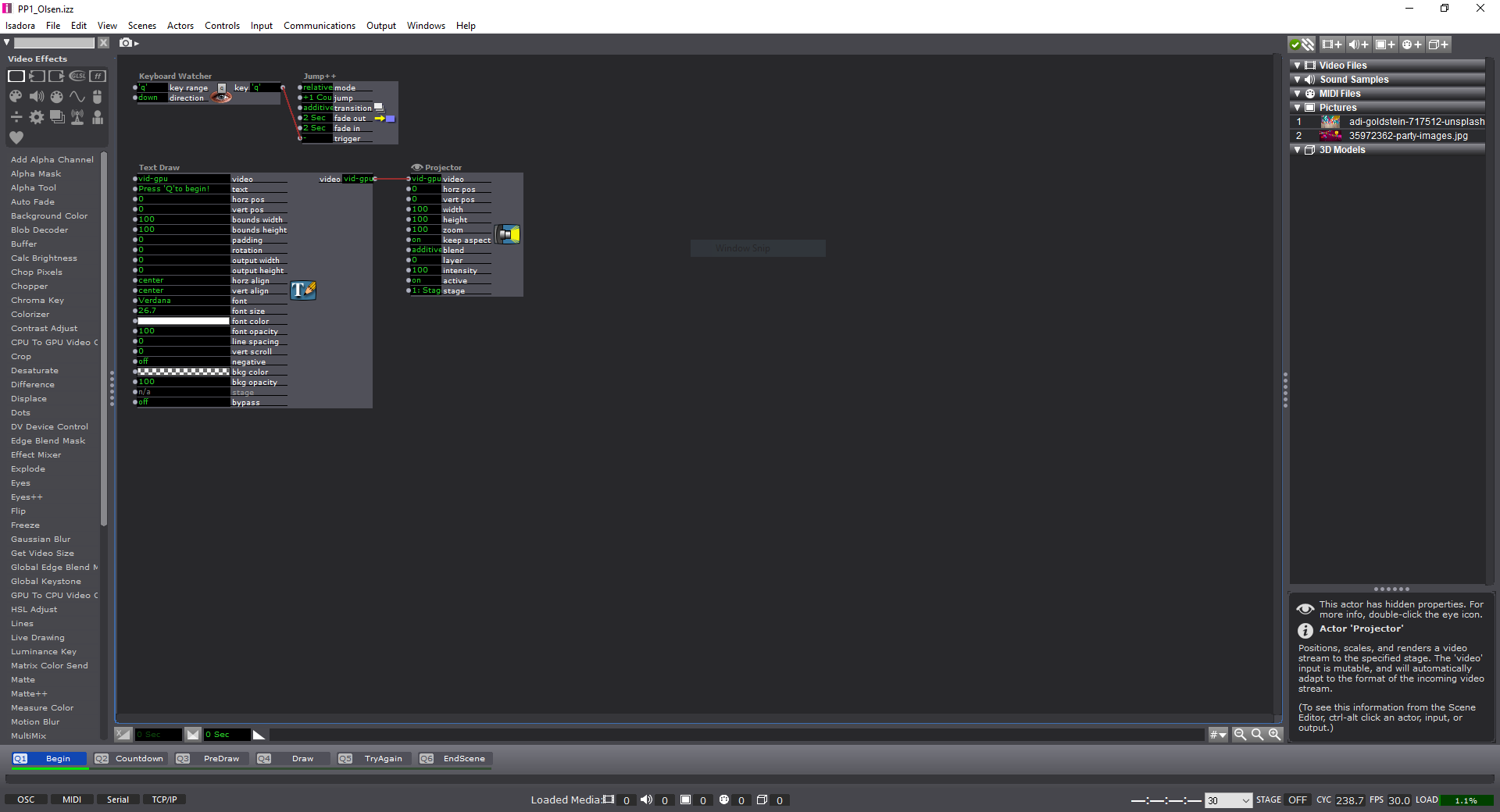

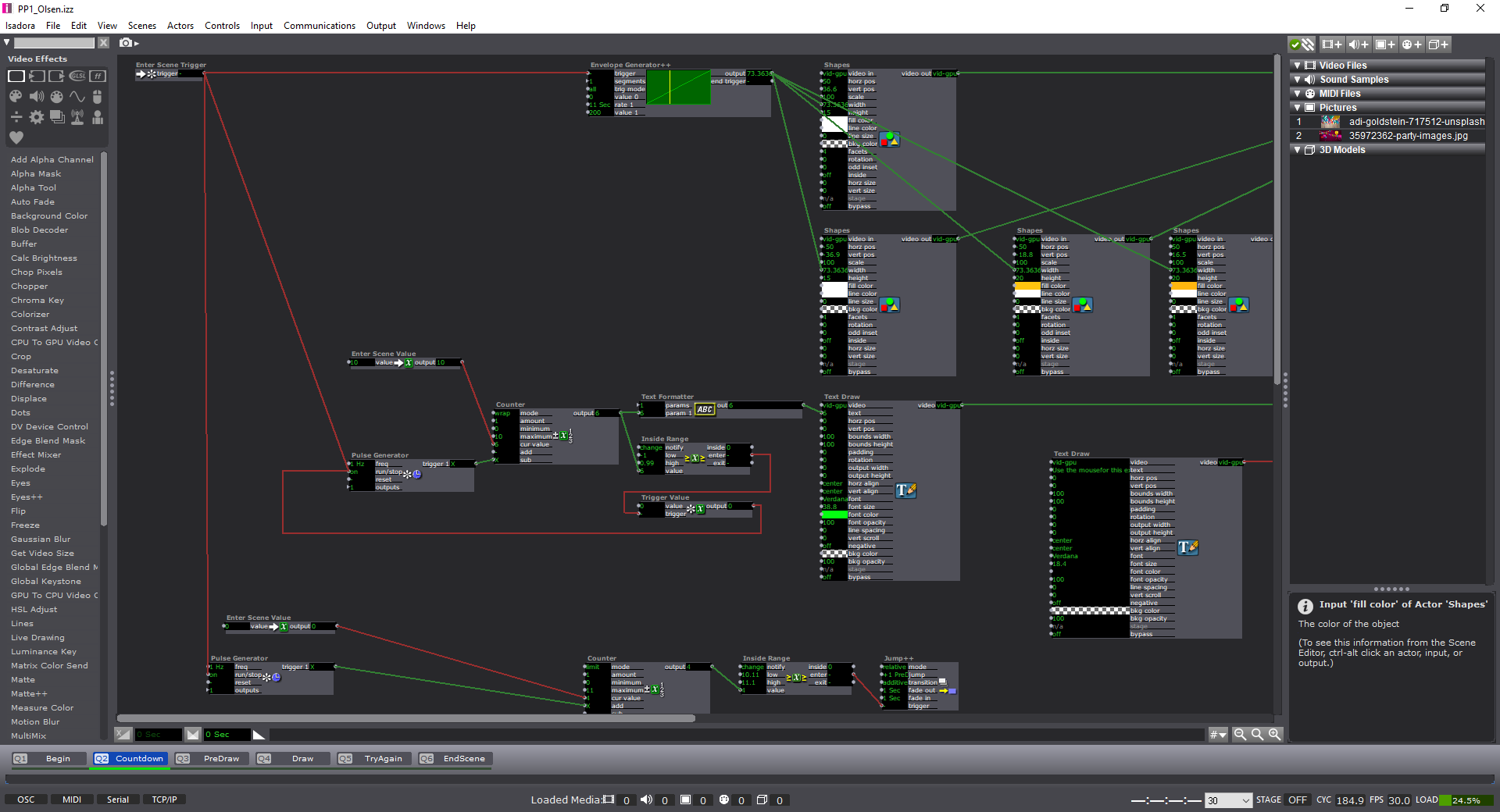

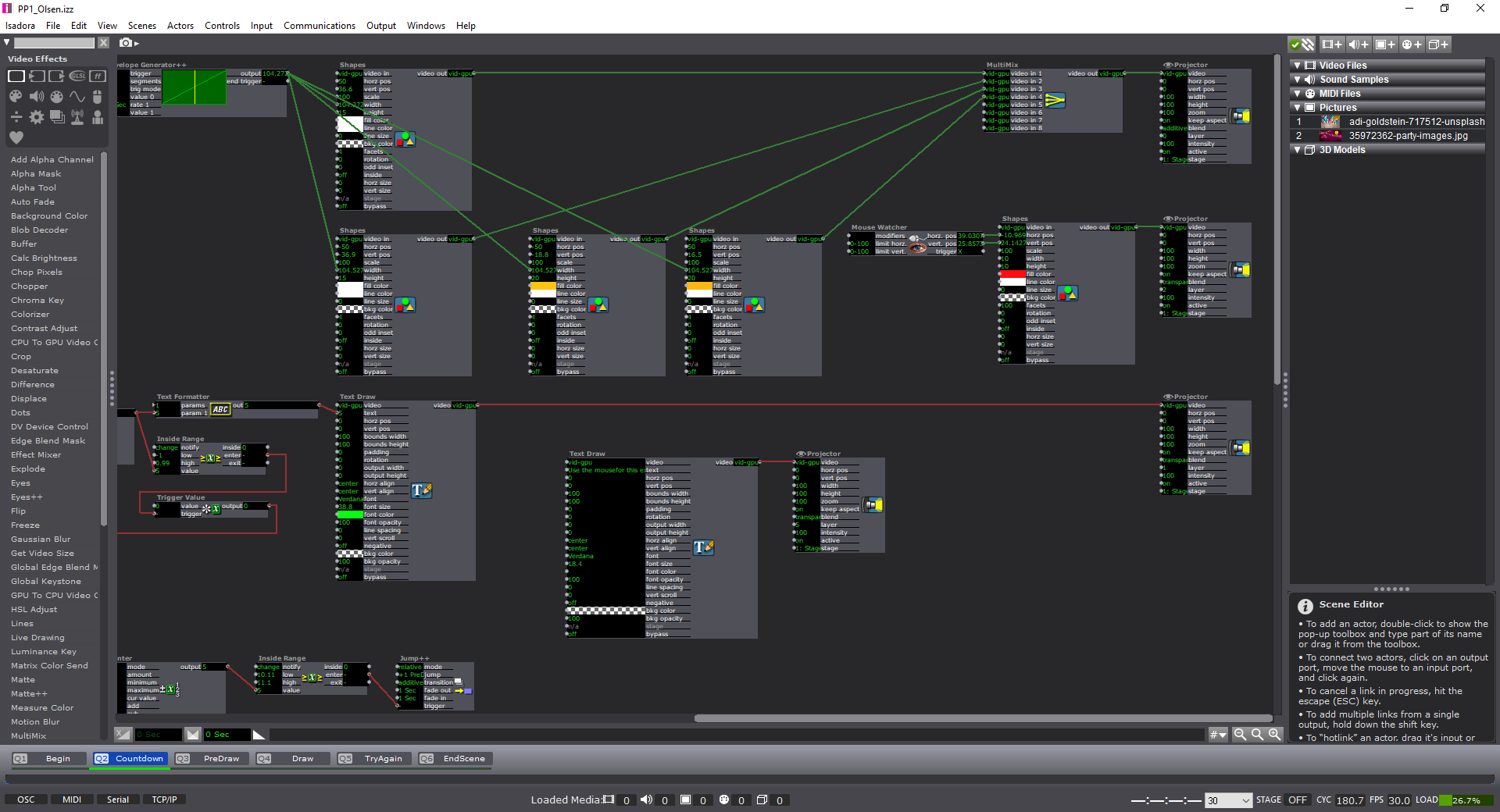

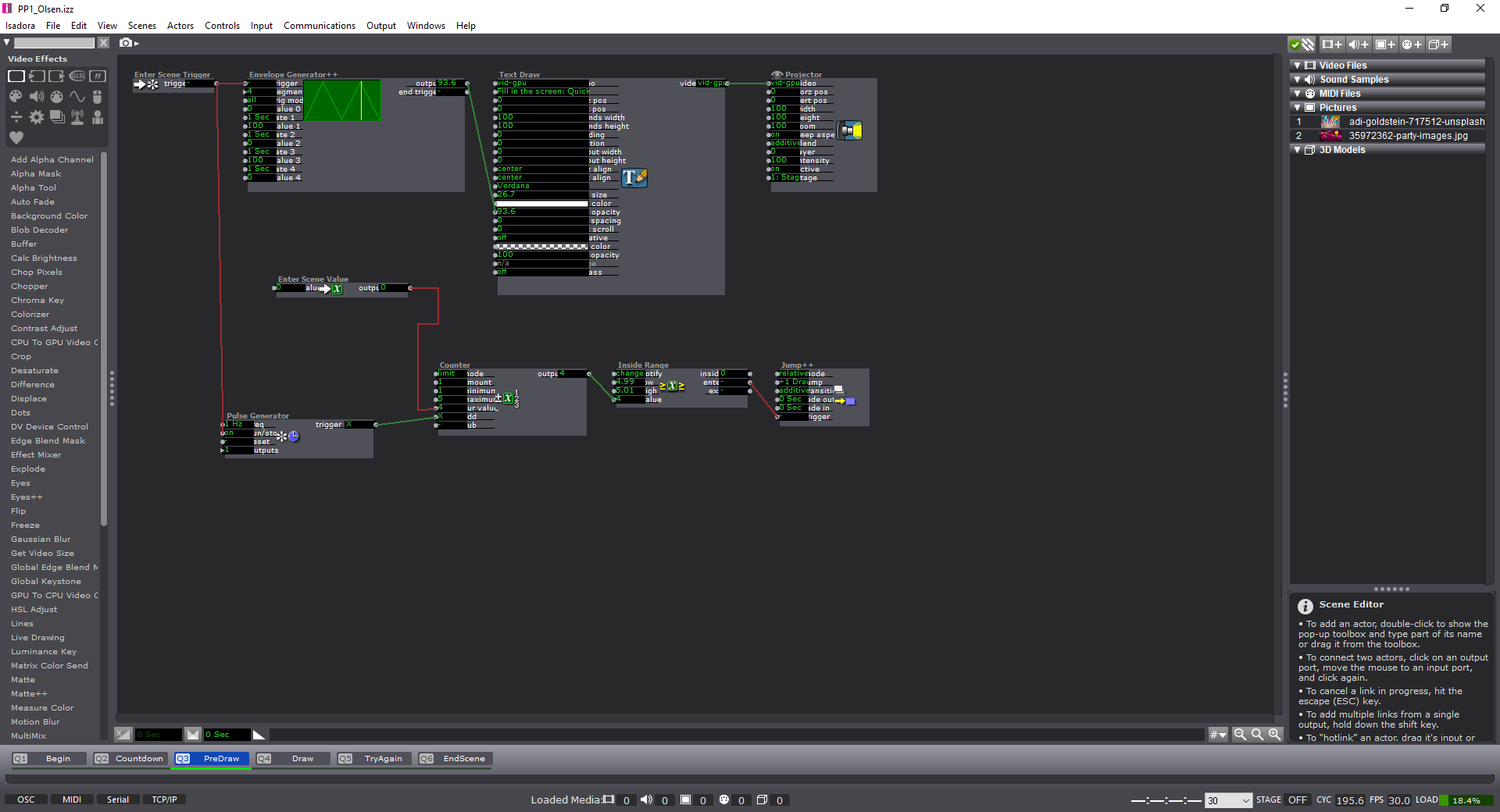

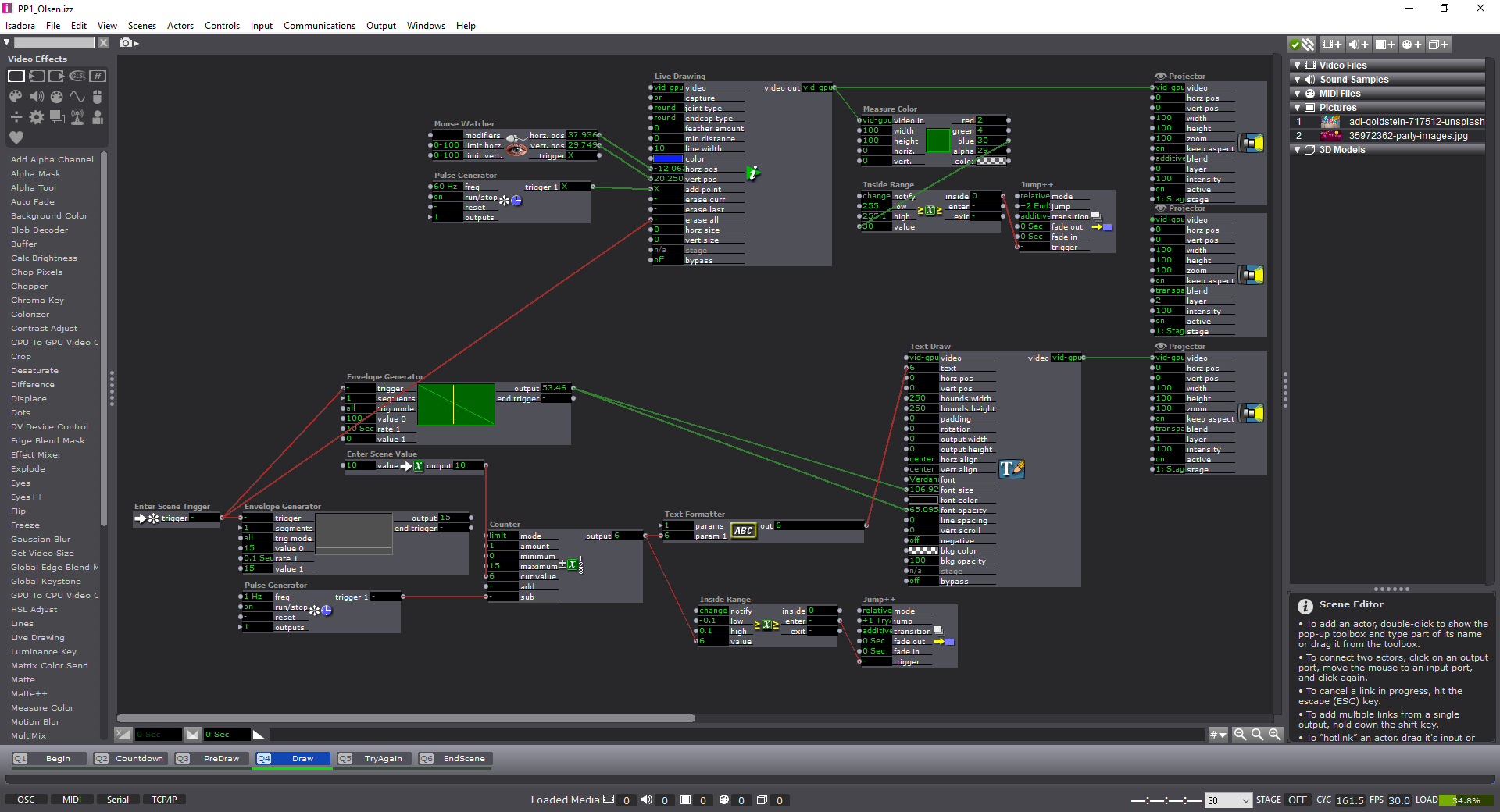

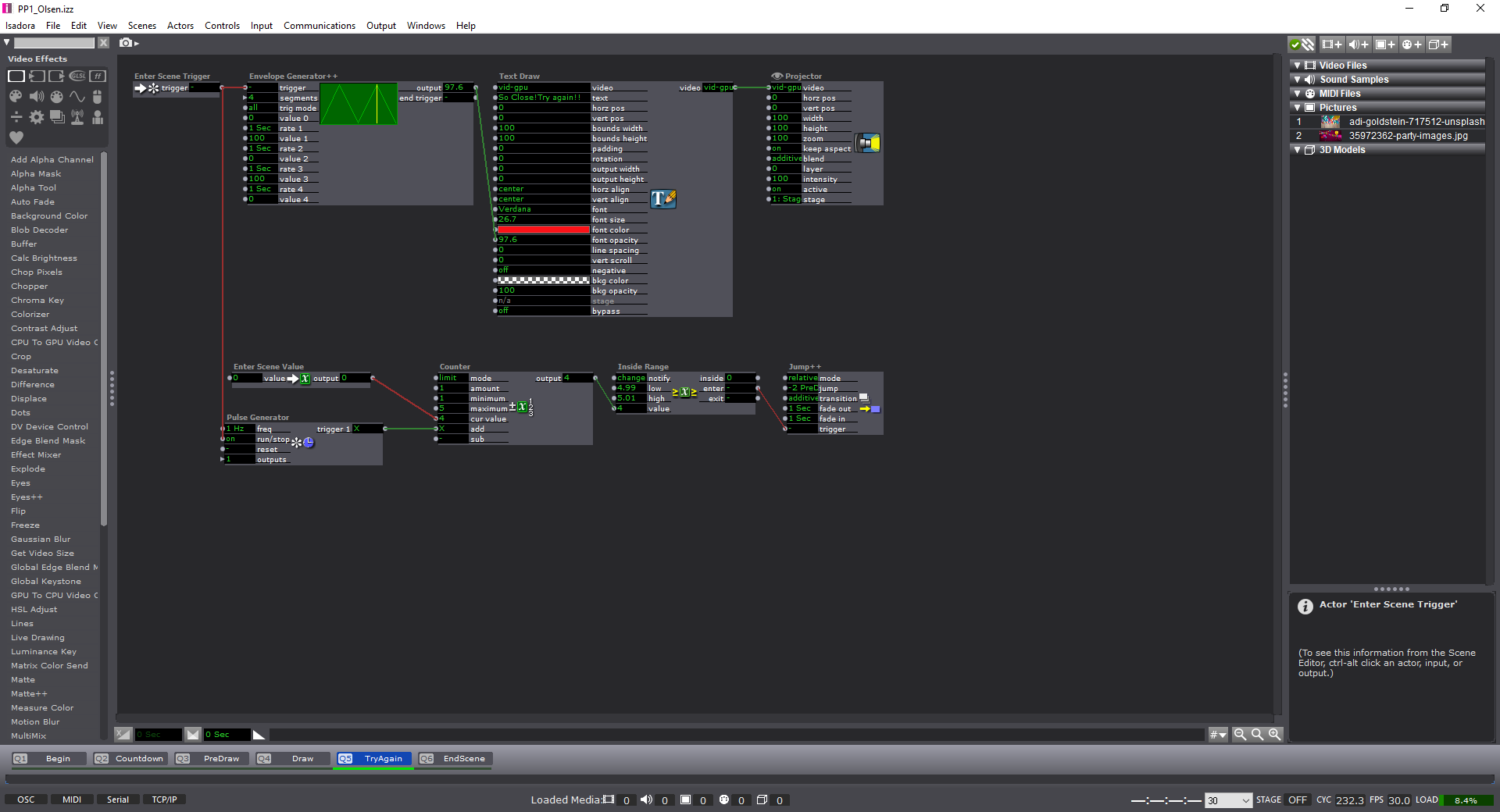

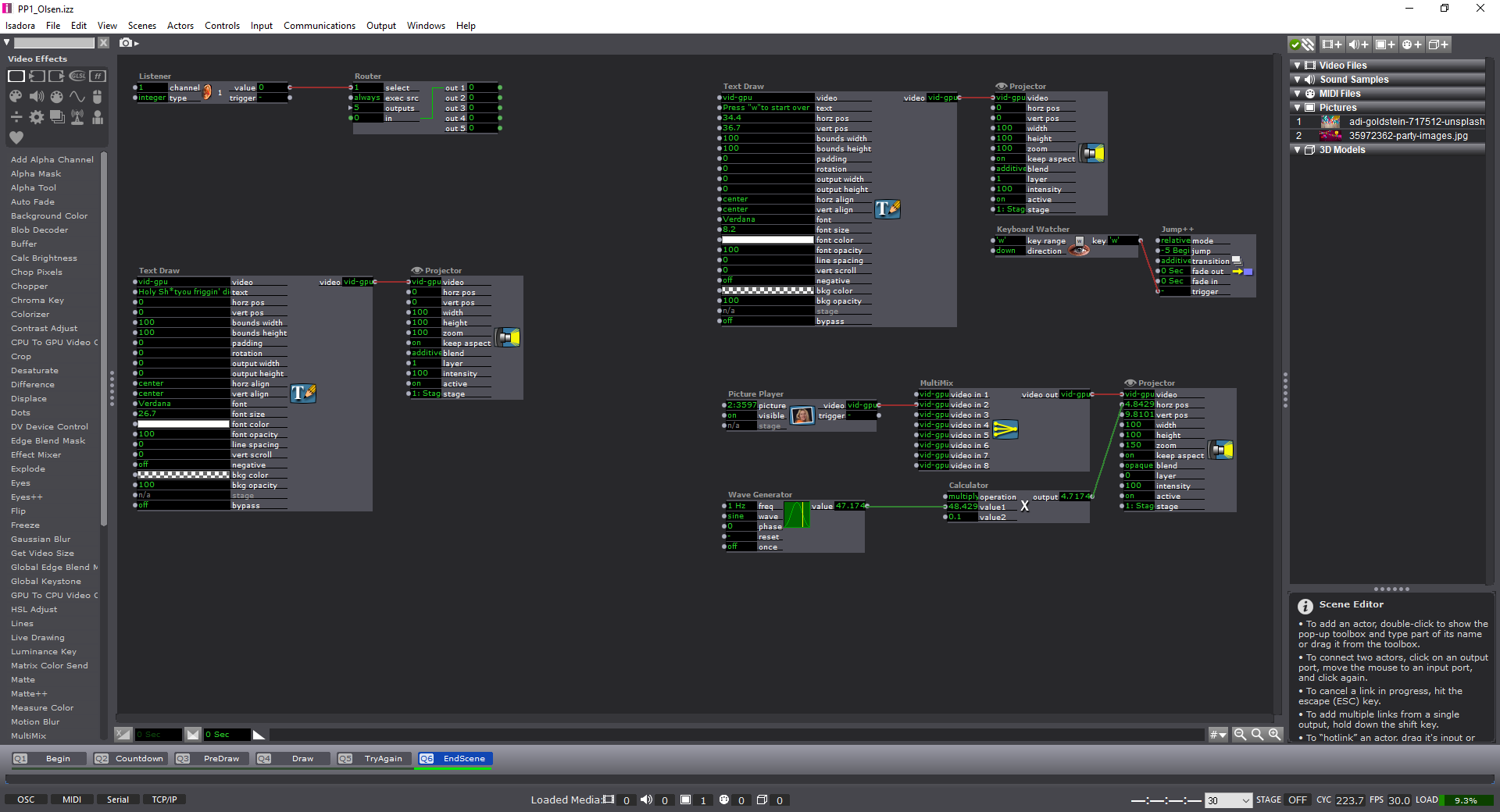

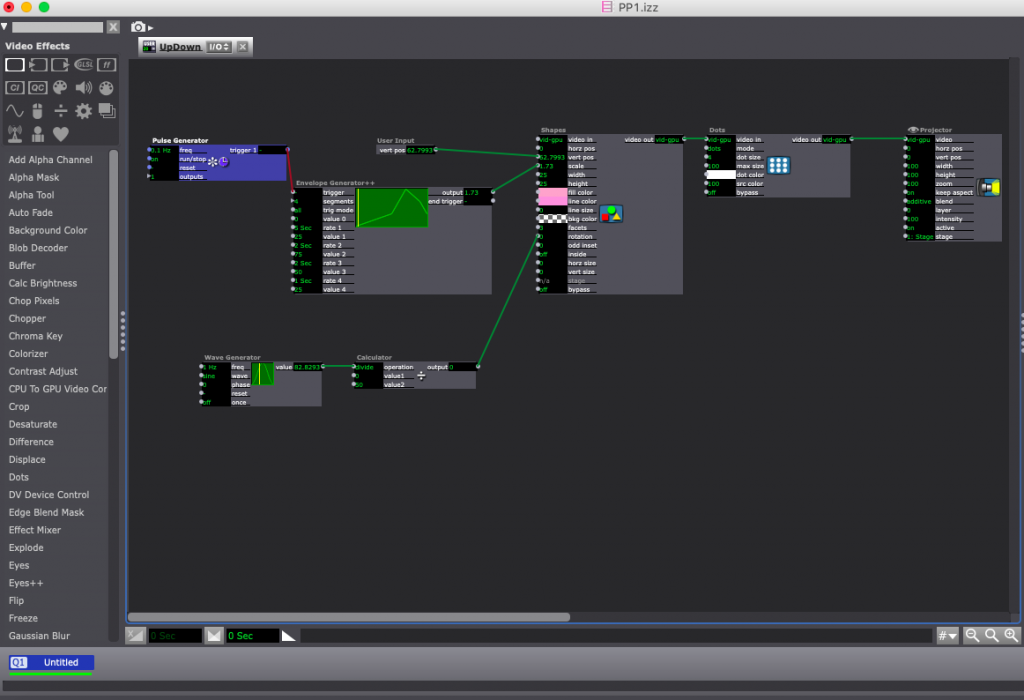

For those who are interested (and for my own reference) here’s the list of actors that I used within the patch:

- Keyboard Watcher

- Mouse Watcher

- Live Drawing

- Picture Player

- Measure Color

- Text Draw

- Text Formatter

- Jump++

- Projector

- Multimix

- Enter Scene Trigger

- Enter Scene Value

- Pulse Generator

- Wave Generator

- Counter

- Inside Range

- Trigger Value

- Shapes

- Envelope Generator (++)

Feedback: (sorry I haven’t learned everyone’s names!)

–Izzy patch could use more of a choice for what color is used to draw on the screen.

–The clear instructions to push certain keys and what was going to be used for the experience was a good touch.

–The sense of urgency was nice.

–Fix the small bug where if you restart the experience it jumps to the end without letting the user draw.

–Change the color of the countdown on the draw screen to something more noticeable (white?)

Response:

-I think this was a wonderful experience. I haven’t ever considered the idea of a ‘pressure project’ before. But after working through, I am excited for the next one! My approach was pretty straightforward in terms of design process: Ideate, Create, Test, Fix, Test, etc.

-I think next time I will have more people try it before I present, as I only had one other person attempt the experience. But this showcase actually helped me find the bug in my system as well! I couldn’t find the time to work out why it was happening (or a solution), but it shows the power of ‘performing’ your piece before the final show to find any errors.

-I’m grateful to have seen everyone else’s project too, and I also appreciated being able to look at the ‘guts’ of their Izzy patches. I learned a few new things about using ‘User Actors’ effectively, as I didn’t use any in the first place. It was unfortunate that I couldn’t connect up a “Listener” actor correctly in the end, but the only state change would have been a different ending photo. Regardless, I enjoyed working on this, the experience, and the feedback from my peers.

-Tay

PP1

Posted: September 12, 2019 Filed under: Uncategorized

The idea of a “pressure” project had been causing me concern since the beginning of this course. I was very pleasantly surprised at how effective and useful the constraints of the “pressure” were in completing this assignment. I kept track of my time spent working on the project, setting a timer for every block of time I worked, and used all but 30 minutes. I felt that I had achieved all of the objectives of the project that I understood how to complete, and was thus done. However, knowing what some of my classmates were making, I was concerned that my I-have-no-idea-how-to-work-this-thing attempt wouldn’t be of much interest or use to the class. It was surprising to me that I had created something that everyone was intrigued by, and that it included enough layers to keep many of my classmates from spotting the aspect controlled by the Mouse Watcher for so long. This presentation was the first time that I felt that even my limited skills could have an interesting outcome, and it made the course objectives seem much more accessible. It was a very affirming experience. I also learned a lot from seeing what others had done; it exposed me to more of what Isadora can do for a variety of aims and goals. Overall, I am excited to continue learning and to discover more of how I can use digital media to further my own research interests while engaging with audiences in new ways.

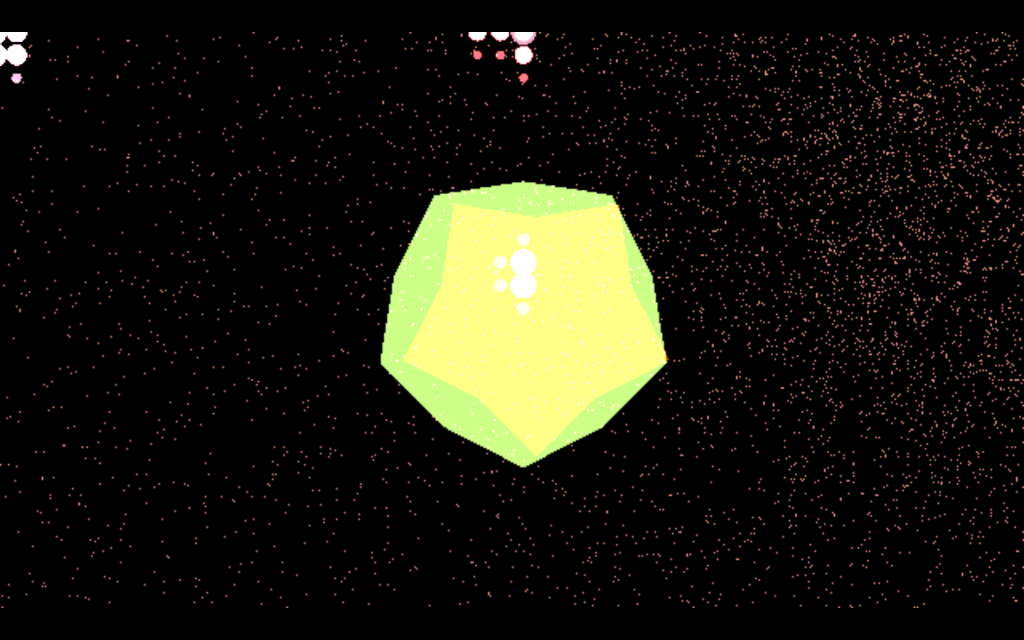

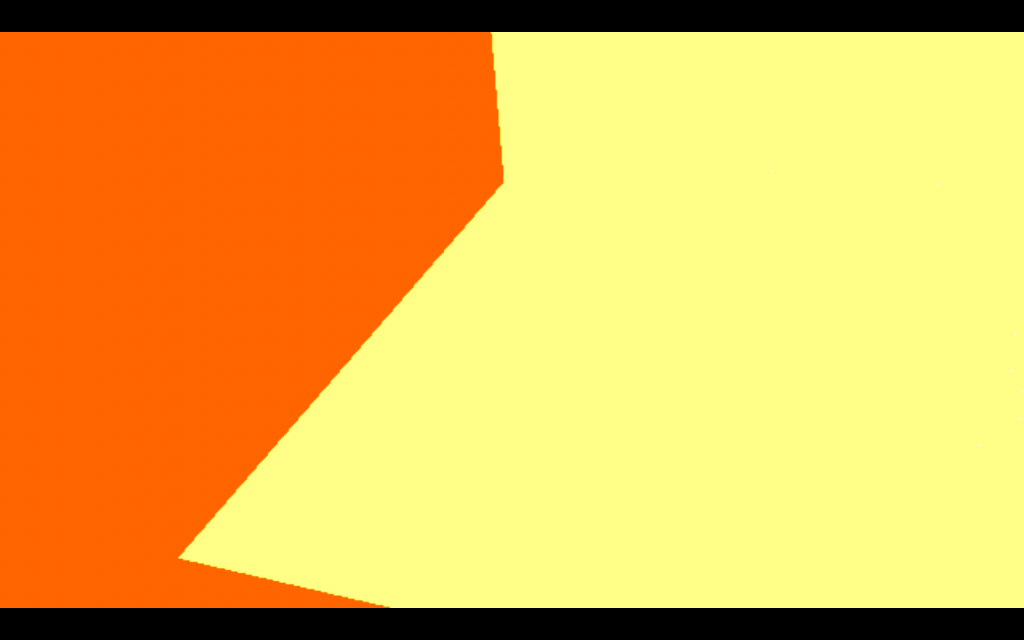

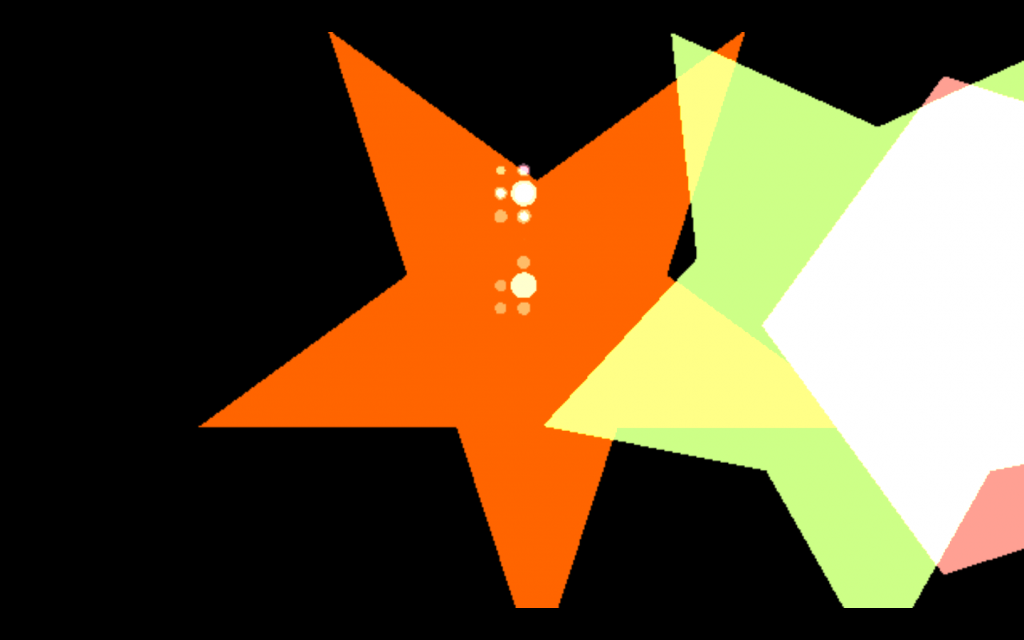

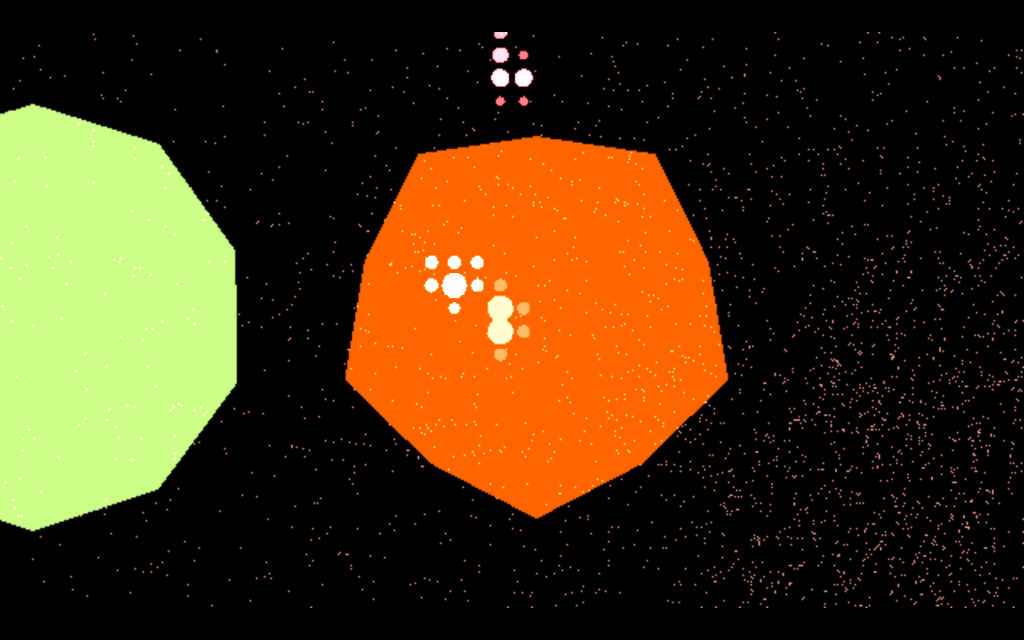

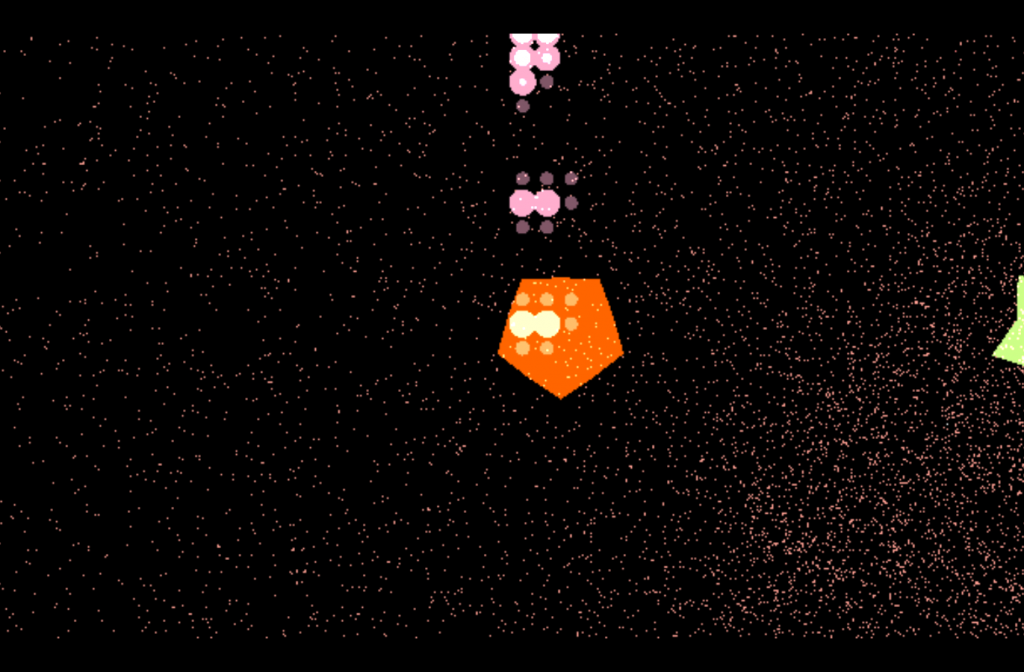

Stills from the stage view.

How does duplication impact color?

I was playing with scale and repetition.

Bump

Posted: September 3, 2019 Filed under: Uncategorized

Fake It Til You Make It

Posted: September 3, 2019 Filed under: Uncategorized

Olsen_ProjectBump

Posted: September 3, 2019 Filed under: Uncategorized

Post Bump

Posted: September 3, 2019 Filed under: Uncategorized

I am bumping up Robin Edigar-Seto’s post about his final project. Robin is my classmate, and not only do I love him dearly, but I was intrigued by his making/editing process and how he arrived at his final product. His method of connecting with memory and storytelling is compelling.

previous project reflection

Posted: September 3, 2019 Filed under: Uncategorized

Reflection on the Magic Window

Posted: September 3, 2019 Filed under: Uncategorized

https://dems.asc.ohio-state.edu/?p=1735

I’m bumping this project because I’m fascinated by the technical feat she has achieved. The way the buttermilk-coated window produces a dreamlike quality with the projected image is an amazing sight to see.