Pressure Project #2 - Orlando Hunter

Posted: February 22, 2022 Filed under: Uncategorized

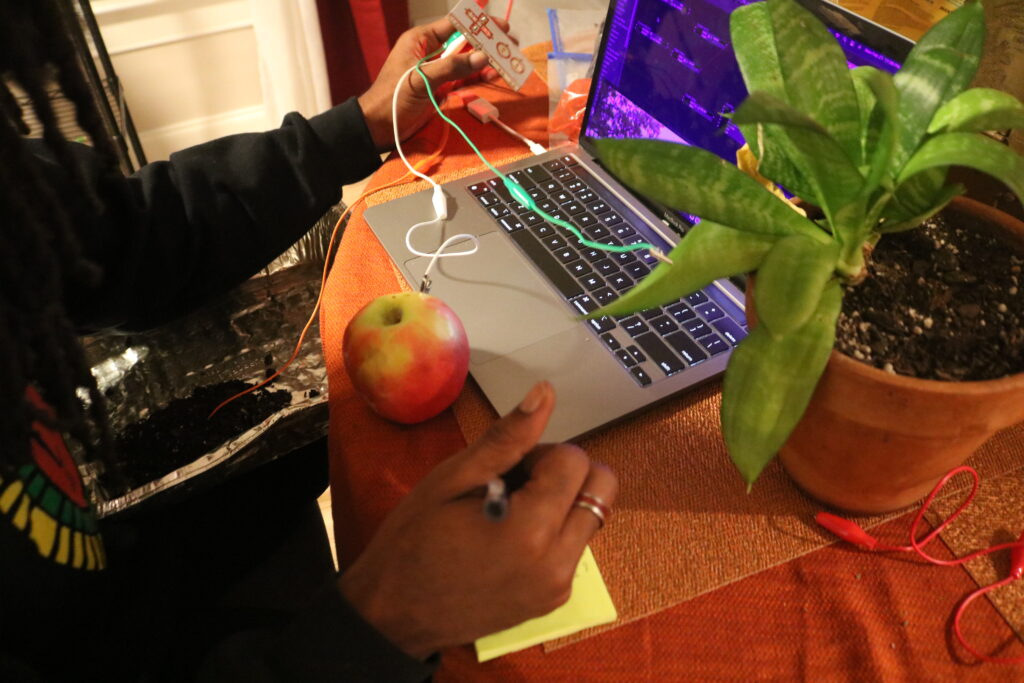

For Pressure Project 2, I thought about how to incorporate my artistic practice, using a dance film created in an urban garden with my dance collective Brother(hood) Dance! To create a revealing element within the film, I used photography to portray urban growers in the community. To heighten sensory awareness of the earth, I have participants place their left hand in moist soil, literally grounding them in the moment. While the film plays in the background, participants lift the leaf of a plant to reveal a photo on the screen and pick up an apple to remove it. Lift the leaf again and, surprise! Another photo. You only get the surprise if you stay grounded.

Keep your left hand on the soil.

Pick up the apple for nutrition.

Lift the leaf for relief.

Always leave on a high note.

Using the Makey Makey, I connected the moist soil to the device's Earth connection, so the soil literally serves as the electrical grounding for the whole experience. The apple acts as the down arrow, symbolizing digestion as a downward process, and is tied to revealing the pictures on the screen that illustrate those who grow food in my neighborhood. The plant leaf acts as the up arrow, indicating how house plants give you an upward boost in wellness, from mental to spiritual health.

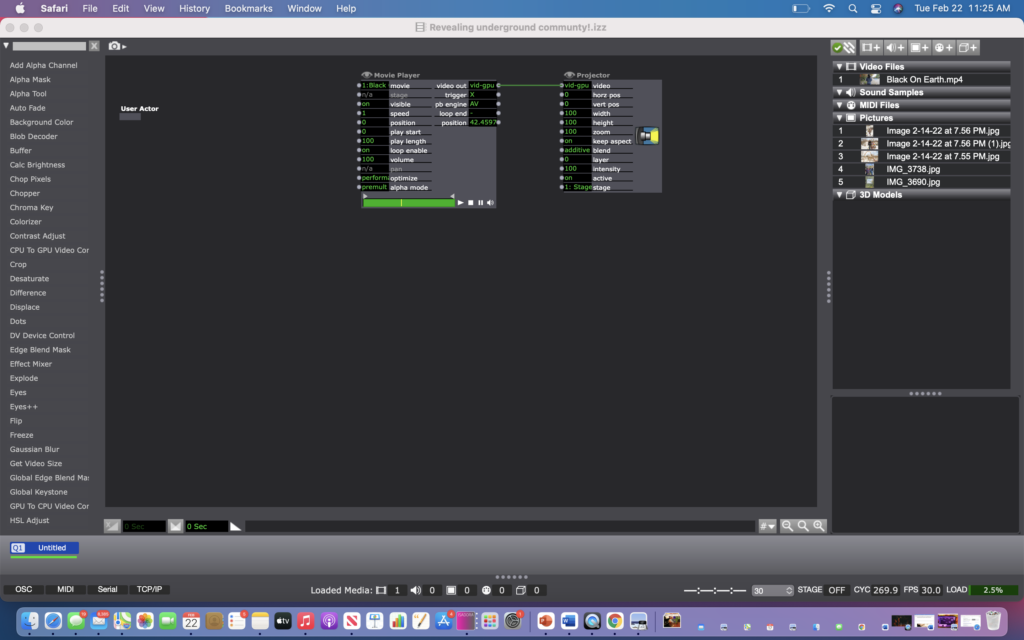

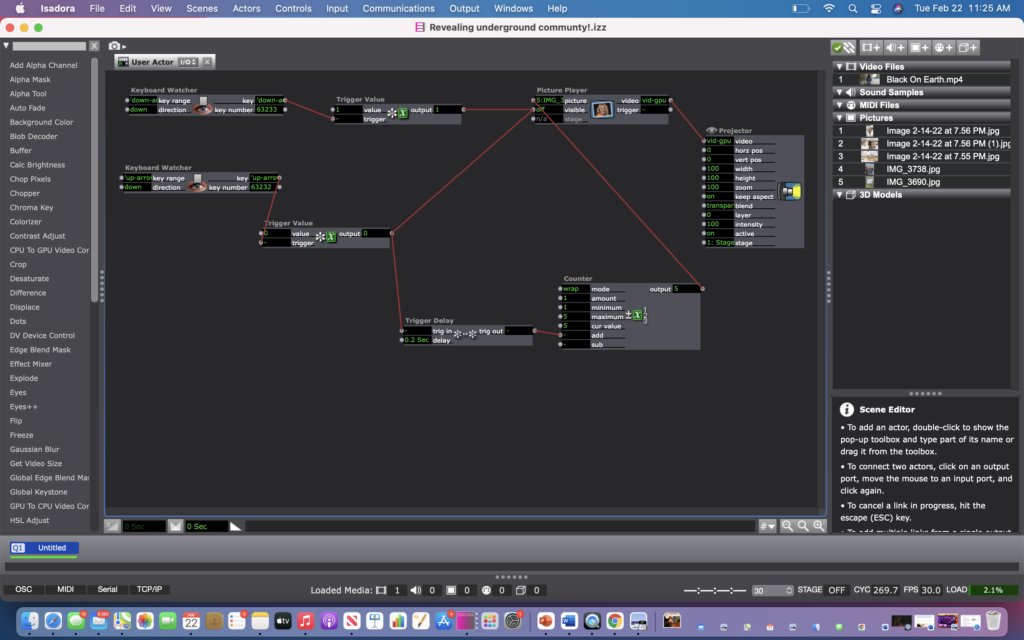

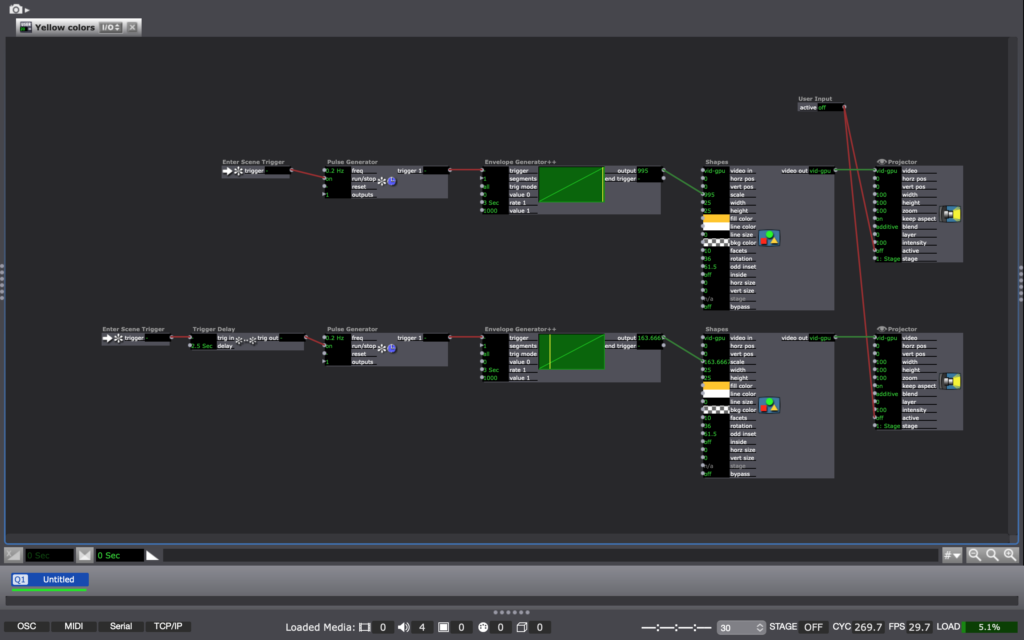

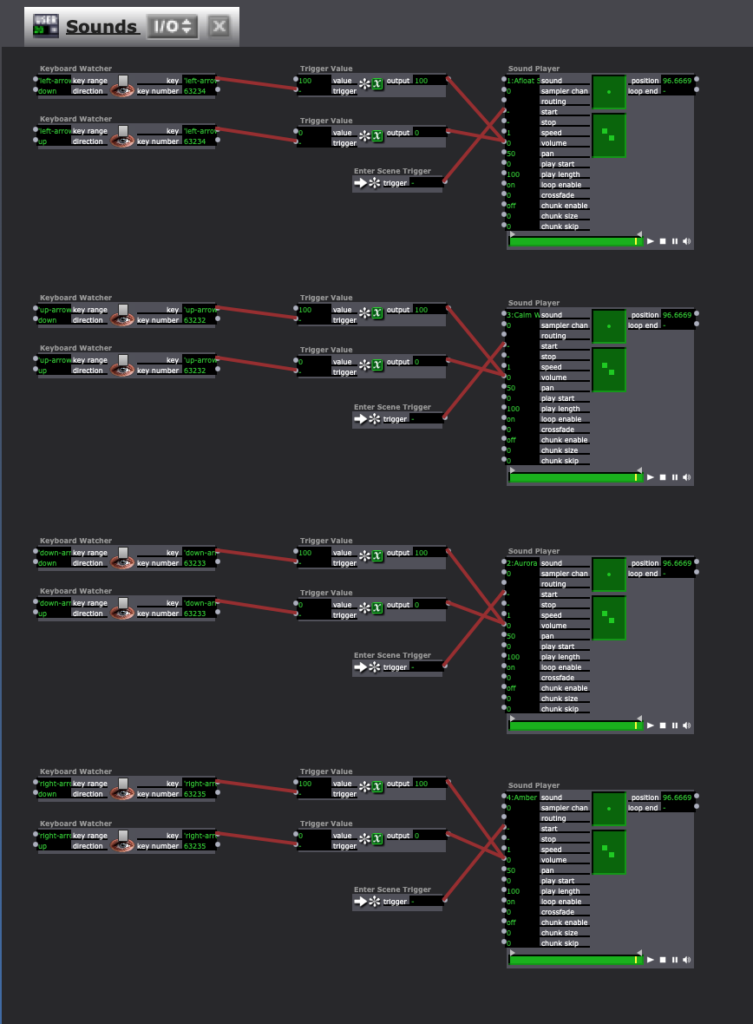

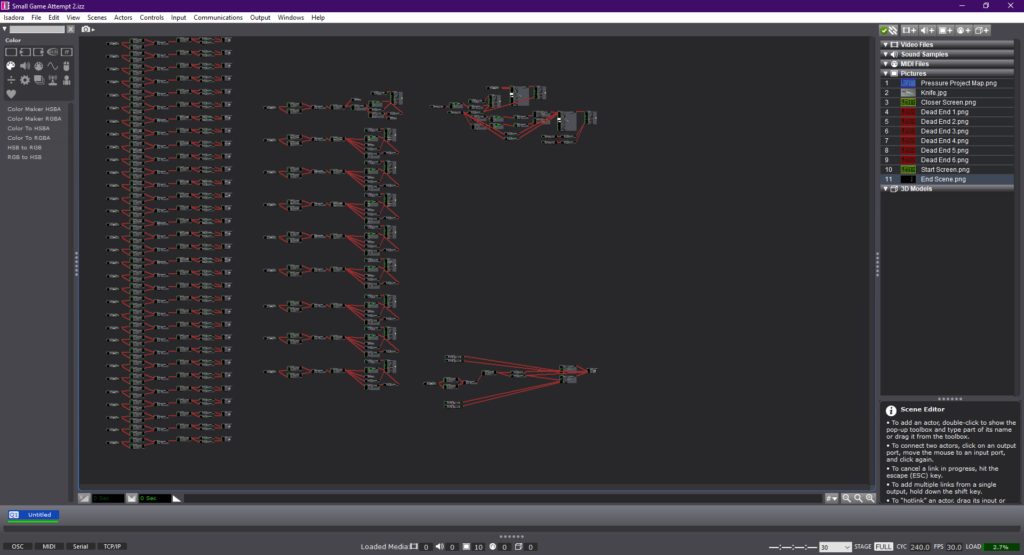

On the front end, a Movie Player plays the dance film. To keep the back end hidden, I used a User Actor. Inside the User Actor, I built the picture-changing circuit using a Keyboard Watcher for the up and down arrows. I then needed to trigger these functions: up is the plant leaf, down is the apple, and the soil is the ground. I had to shift the value coming from the down arrow so that Isadora would know to send the photos to the screen. I also created a Trigger Delay so that the up arrow switches out photos in the Picture Player after a specified time.
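The interaction described above can be sketched outside Isadora as a small state machine. This is a minimal Python sketch, not the actual patch: it assumes the leaf (up arrow) queues the next photo after a delay, the apple (down arrow) hides the current photo, and key presses only register while the participant's hand is on the soil. The class name, the `delay` value, and the photo filenames are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PhotoReveal:
    """Hypothetical model of the photo-reveal logic (not the actual Isadora patch)."""
    photos: List[str]
    delay: float = 1.0                     # assumed Trigger Delay, in seconds
    index: int = -1                        # no photo shown yet
    visible: bool = False
    pending_until: Optional[float] = None  # time at which a queued photo appears

    def key(self, name: str, t: float, grounded: bool) -> None:
        # Without a hand on the soil the Makey Makey circuit is open,
        # so no key event reaches Isadora at all.
        if not grounded:
            return
        if name == "up":      # leaf lifted: queue the next photo
            self.pending_until = t + self.delay
        elif name == "down":  # apple picked up: remove the photo
            self.visible = False
            self.pending_until = None

    def tick(self, t: float) -> None:
        # Called every frame; shows the queued photo once the delay has passed.
        if self.pending_until is not None and t >= self.pending_until:
            self.index = (self.index + 1) % len(self.photos)
            self.visible = True
            self.pending_until = None

    def current(self) -> Optional[str]:
        return self.photos[self.index] if self.visible else None


show = PhotoReveal(["grower1.jpg", "grower2.jpg"], delay=2.0)
show.key("up", t=0.0, grounded=True)
show.tick(2.0)
print(show.current())
```

Modeling the delay as a timestamp checked on each frame, rather than sleeping, mirrors how a patch keeps running while a delayed trigger is pending.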

Pressure Project 2 – Ashley Browne

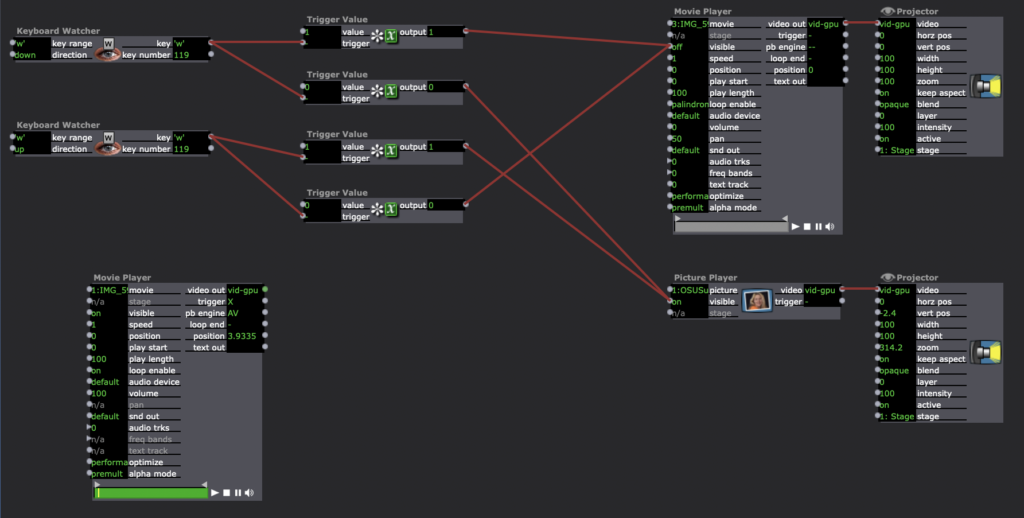

Posted: February 22, 2022 Filed under: Uncategorized

My pressure project 2 uses conductive paint and the MakeyMakey to make a pseudo video synthesizer using Isadora.

On the MakeyMakey, the inputs use the ‘W’, ‘A’, and ‘D’ keys, each of which triggers a unique video effect in Isadora. The effects can be combined, so the user is able to mix and match. Before starting with the conductive paint, I did a little research about how to work with it. I learned that the painted area for each signal, or ‘key’, could not overlap with another, meaning I had to be very deliberate about where I painted each section. I also made sure to work with separate brushes and cups of water so that the acrylic paint and the conductive paint would not contaminate or mix with each other.

I’ve labeled the 3 effects as:

- Glitch

- Split Screen

- Surprise (Video Portal)

For the glitch effect, I used a GLSL shader from the Shadertoy website that produces a glitch overlay on top of the video feed. It is triggered by tapping anywhere along the black line of paint on the canvas. Here is the link to the code I used and my patch in Isadora:

https://www.shadertoy.com/view/XtK3W3

I used a Gate and a Toggle actor to turn the GLSL Shader on and off when triggered.

The split screen was fairly simple: I split the video feed into two projectors, which were likewise triggered on and off using the Toggle actor.
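The toggle-and-gate behavior described for the glitch and split-screen effects can be sketched in plain Python. This is a minimal illustration, not the actual patch: the mapping of W, A, and D to specific effects is an assumption (the post doesn't say which key drives which), and `EffectBoard` is a hypothetical name. Because each key flips its own flag, any combination of effects can be on at once, which is what lets the user mix and match.

```python
# Assumed key-to-effect mapping; the post only says W, A, and D each trigger one effect.
KEY_TO_EFFECT = {"w": "glitch", "a": "split screen", "d": "portal"}


class EffectBoard:
    """Each key drives its own toggle, so effects can be layered freely."""

    def __init__(self) -> None:
        self.enabled = {effect: False for effect in KEY_TO_EFFECT.values()}

    def press(self, key: str) -> None:
        effect = KEY_TO_EFFECT.get(key.lower())
        if effect is not None:
            # Toggle: each press flips the effect between off and on.
            self.enabled[effect] = not self.enabled[effect]

    def gate(self, effect: str, frame):
        """Like a gate: pass the frame to the effect only while its toggle is on."""
        return frame if self.enabled[effect] else None

    def active(self) -> list:
        return [effect for effect, on in self.enabled.items() if on]
```

For example, pressing W and then D leaves both the glitch and portal effects active; pressing W again turns only the glitch off.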

Last is the Video Portal patch, which was one of the more complex ones. This was ultimately my surprise, or secret reveal: it reveals the second video underneath, playing through the shape of a circle, or portal. I learned how to do this by searching the Isadora forum, and I experimented with the Alpha Channel to get different results. Essentially, the second video is fed into an Alpha Channel that is projected onto the specific shape actor. The Get Stage Size actor ensures that the video proportions stay the same.
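The portal effect comes down to per-pixel alpha compositing: a mask that is 1 inside the circle and 0 outside decides which video shows at each pixel. Below is a minimal Python sketch on tiny grayscale frames, assuming the stage width and height (what Get Stage Size reports) are used to build the mask so it lines up with the video. The function names are hypothetical, and this illustrates the general technique rather than Isadora's internals.

```python
def circle_mask(width, height, cx, cy, radius):
    """Alpha grid sized to the stage: 1.0 inside the circle (the portal), 0.0 outside."""
    return [
        [1.0 if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else 0.0 for x in range(width)]
        for y in range(height)
    ]


def composite(base, reveal, alpha):
    """Standard 'over' blend per pixel: out = a * reveal + (1 - a) * base."""
    return [
        [a * r + (1.0 - a) * b for b, r, a in zip(b_row, r_row, a_row)]
        for b_row, r_row, a_row in zip(base, reveal, alpha)
    ]


if __name__ == "__main__":
    w, h = 5, 5                                # stand-in for the stage size
    first = [[0.0] * w for _ in range(h)]      # first video frame (dark)
    second = [[1.0] * w for _ in range(h)]     # hidden second video frame (bright)
    out = composite(first, second, circle_mask(w, h, cx=2, cy=2, radius=1))
    for row in out:
        print(row)
```

Building the mask from the same width and height as the frames is what keeps the circle aligned. A mask made at a different size would stretch or offset the portal.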

I put each effect into its own User Actor for better organization and set up a movie player and projector independently. Here is the final documentation of the project:

Pressure Project: Umbrella

Posted: February 22, 2022 Filed under: Uncategorized

My original idea was to have three umbrellas, each representing me or one of my friends on a rainy day. I wanted Isadora to play unique sonic effects when the operator opened the umbrellas, as follows:

Umbrella A: Jenna by herself on a rainy day (in her car)

Umbrella B: Ellen by herself (listening to music)

Umbrella C: Sejeong by herself (taking a walk)

Umbrella A and B: Jenna and Ellen having a phone call

Umbrella A and C: Jenna and Sejeong having a phone call

Umbrella B and C: Ellen and Sejeong having a phone call

Umbrella A, B, and C: all chatting

I wanted to reveal our video clips once the audience figured out the system. However, I could not figure out how to trigger videos with multiple keys in different combinations, so I decided to narrow the project down to one variable: Jenna on a rainy day, indoors versus outdoors. When the umbrella opens, the audience sees an outdoor video on a rainy day. When it closes, the audience sees an interior image of Sullivant Hall, where I took the video.
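Triggering a clip for each combination of keys comes down to tracking which umbrellas are currently open and looking up that set. Below is a minimal Python sketch of that lookup. It assumes each umbrella is wired to its own Makey Makey key that reports "down" when the umbrella opens and "up" when it closes. The umbrella letters, the `UmbrellaPatch` class, and the handler names are hypothetical. The clip descriptions paraphrase the original plan listed above.

```python
# Clip for every combination of open umbrellas (paraphrasing the original plan).
CLIPS = {
    frozenset("A"): "Jenna alone (in her car)",
    frozenset("B"): "Ellen alone (listening to music)",
    frozenset("C"): "Sejeong alone (taking a walk)",
    frozenset("AB"): "Jenna and Ellen on a phone call",
    frozenset("AC"): "Jenna and Sejeong on a phone call",
    frozenset("BC"): "Ellen and Sejeong on a phone call",
    frozenset("ABC"): "All chatting",
}


class UmbrellaPatch:
    def __init__(self):
        self.open = set()  # umbrellas currently open

    def on_key(self, umbrella, down):
        # Key down = umbrella opened, key up = umbrella closed (assumed wiring).
        if down:
            self.open.add(umbrella)
        else:
            self.open.discard(umbrella)
        return self.current_clip()

    def current_clip(self):
        # None when every umbrella is closed.
        return CLIPS.get(frozenset(self.open))
```

Keying the table on a set makes the order of opening irrelevant, so opening A then C selects the same clip as opening C then A.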

Pressure Project 2 – Allison Smith

Posted: February 22, 2022 Filed under: Uncategorized | Tags: MakeyMakey

For this project we were required to use the MakeyMakey and Isadora, and our prompt was that a secret is revealed. I had trouble coming up with what I wanted to do for this project. I tried to think of an experience involving touch that I already enjoy and then looked for a way to make it more enjoyable with an unexpected digital media element. I went with the game Twister, in which players place their hands and feet on different colors on a mat. Since that reminded me of dance, I wanted to add music to it. To make it extra fancy, I added not only music but also visuals for each of the colors.

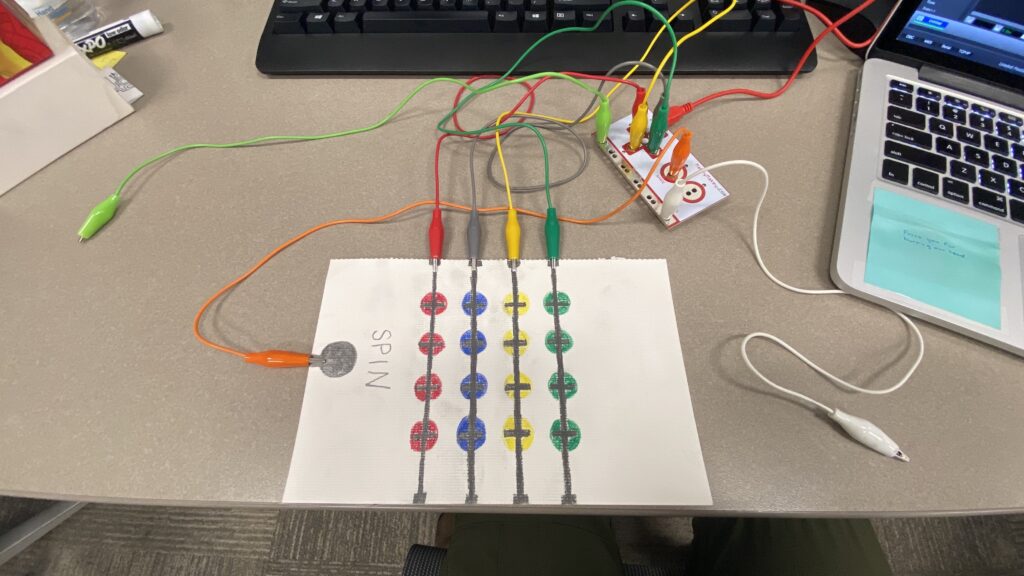

Here is a picture of my Twister game. Because of time constraints, instead of full-body Twister, it is Finger Twister!

As you can see, I used the pencil to connect all of the circles of each color to one trigger. I also made a spinner on the side. One participant holds the light green grounding alligator clip and uses a finger to press the spinner. The other participant holds the white grounding alligator clip and plays the game, with their fingers acting as the connection.

When red was touched, an image of red squares circled the screen while a synth sound played. When blue was touched, small blue circles fell from the top of the screen like digital raindrops or snowflakes, with a different sound playing. When yellow was touched, yellow stars changed in scale on the screen with a new sound. Finally, when green was touched, three green triangles rotated in the center of the screen with a grounding beat playing.

There were a few surprises built into this experience. Obviously, normal Twister doesn’t play music or cue videos with matching colors, so that was a surprise. Another surprise was that, besides calls like “THUMB RED”, the spinner could land on a secret “PARTY” cue, which gave players permission to end the game and just play around with all of the sounds as a sort of DJ. The final secret surprise, though, was that all of the sounds fit together on the same beat and made a cohesive song. As you can see in the video from the presentation, one truly gets the opportunity to create their own music.

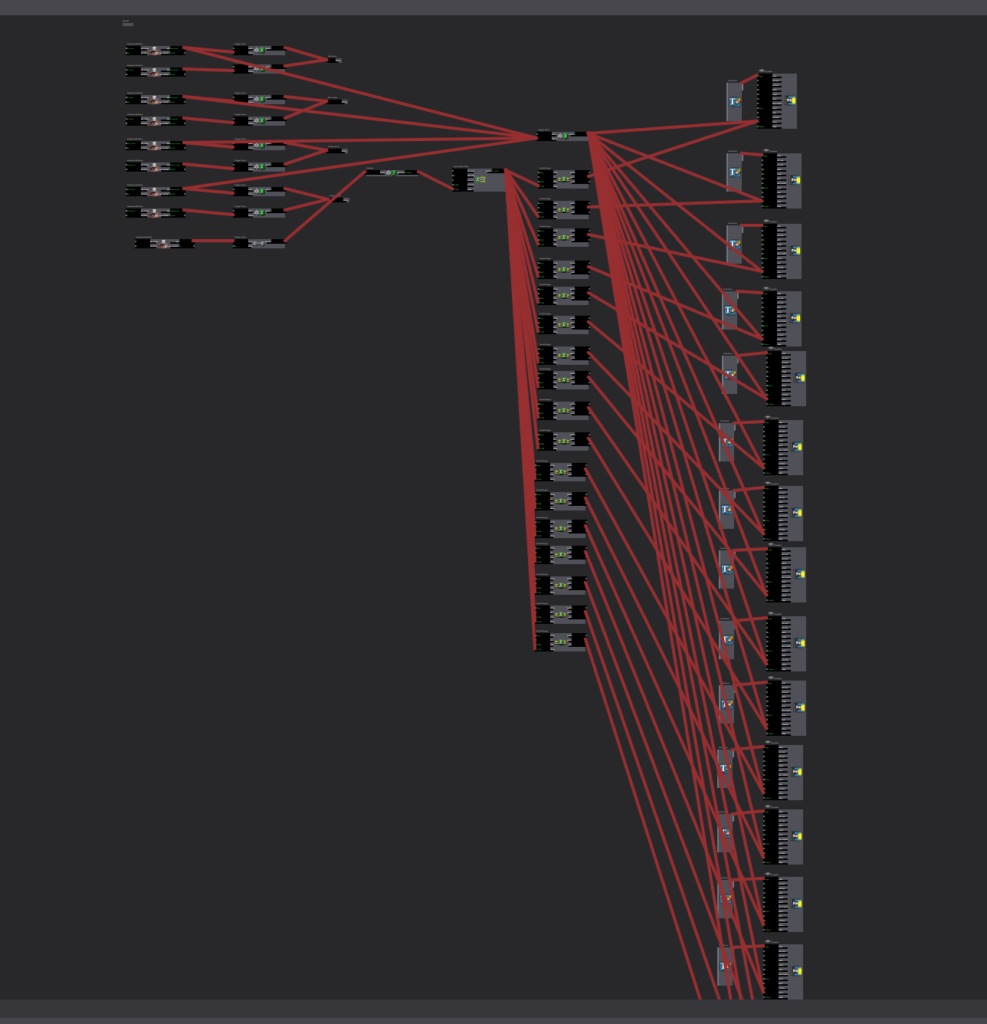

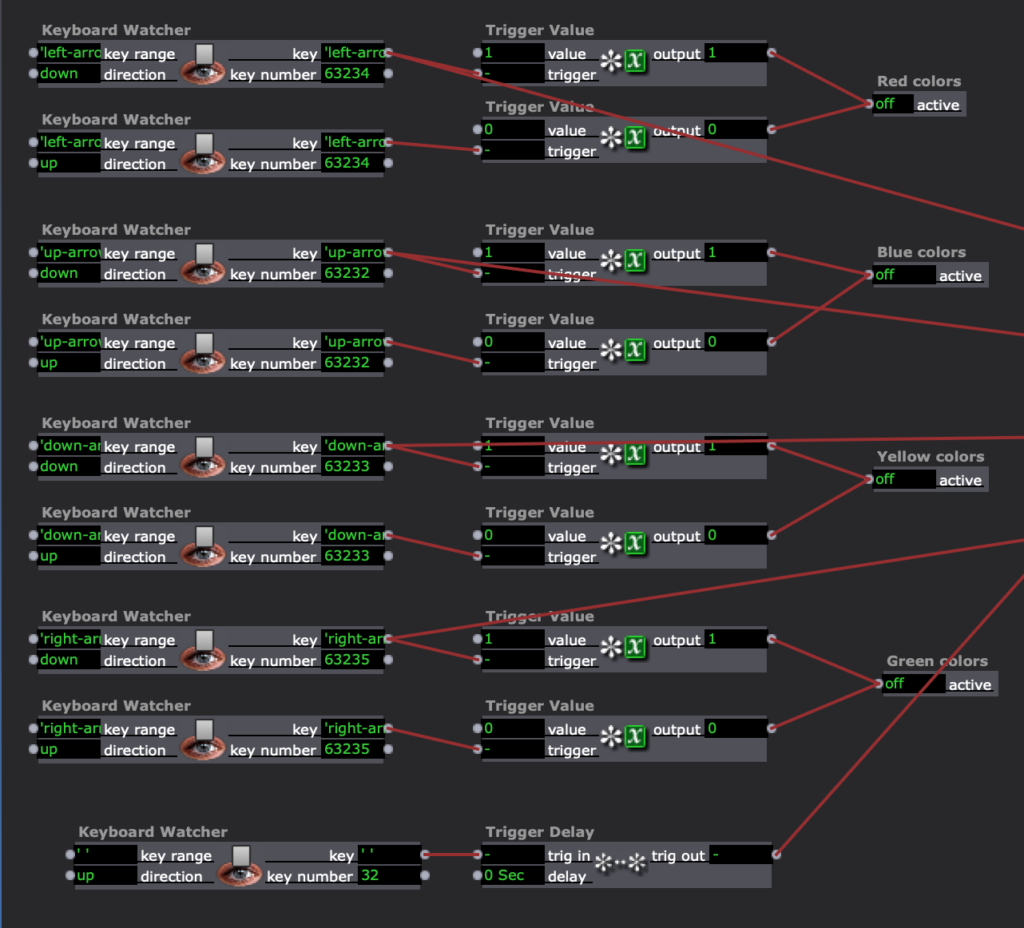

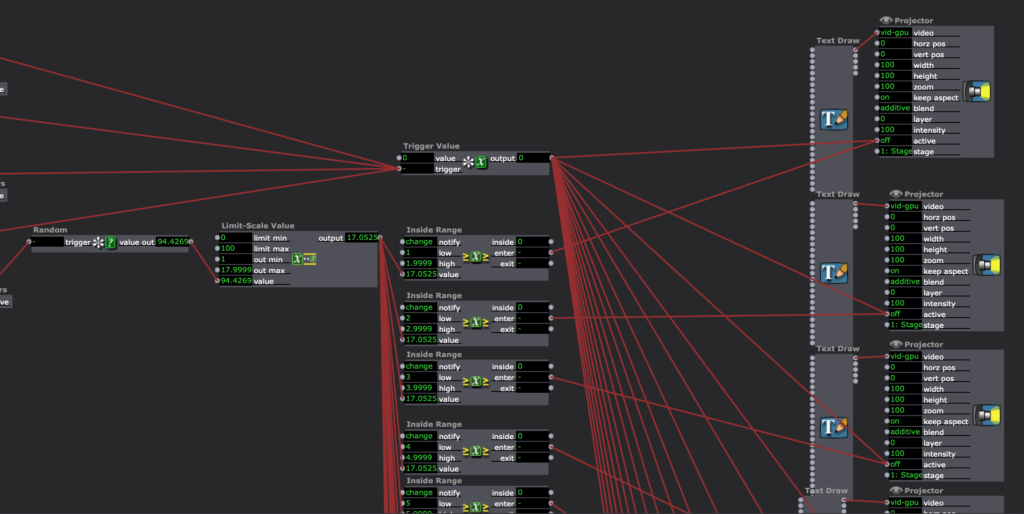

To make this happen, I created a separate Keyboard Watcher trigger for each color and for the spinner. For the colors and sounds, the trigger turned on with the key’s “down” action and off with its “up” action. For the spinner, a random generator produced a number from 1 to 17, and each number triggered a specific text. Then, the same key-down action that activated a color’s sound and visuals also deactivated the spinner text. Here are screenshots of my Isadora setup.
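The trigger logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the actual Isadora patch. The post does not list the 17 spinner texts, so the table here is hypothetical: four fingers times four colors plus the secret PARTY cue, which happens to give 17 entries. `FingerTwister` and its method names are also hypothetical.

```python
import random

COLORS = ["RED", "BLUE", "YELLOW", "GREEN"]
# Hypothetical spinner table: 4 fingers x 4 colors + the secret PARTY cue = 17 entries.
FINGERS = ["THUMB", "INDEX", "MIDDLE", "RING"]
SPINNER_TEXTS = [f"{finger} {color}" for finger in FINGERS for color in COLORS] + ["PARTY"]


class FingerTwister:
    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        self.spinner_text = None
        self.playing = set()  # colors whose sound and visuals are on

    def spin(self):
        # randint is inclusive on both ends, matching a 1-17 random generator.
        number = self.rng.randint(1, 17)
        self.spinner_text = SPINNER_TEXTS[number - 1]
        return self.spinner_text

    def key_down(self, color):
        self.playing.add(color)   # sound and visuals on
        self.spinner_text = None  # touching a color hides the spinner text

    def key_up(self, color):
        self.playing.discard(color)  # sound and visuals off
```

Because the text is cleared only by a new key-down, touching the same color again with a different finger sends no new event, so the text stays up.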

Finally, to create my sounds, I went into GarageBand and played around with the different loop tracks. After finding four loops that I liked together, I exported the raw .aif files into Isadora. The loops kept playing the whole time so they stayed on the same timing, and only the volume was adjusted.
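This is why leaving the loops running keeps them in time. If every loop starts together and never stops, all of them share one playback position, and touching a color only changes that loop's volume. Restarting a loop on each key press would instead offset it by the press time. Below is a minimal Python sketch of that arithmetic. The 4-second loop length and the function names are hypothetical.

```python
LOOP_SECONDS = 4.0  # hypothetical shared loop length (e.g. one bar)


def position_always_running(t, loop_len=LOOP_SECONDS):
    """Playback position of a loop that started at t = 0 and never stops."""
    return t % loop_len


def position_restarted(t, pressed_at, loop_len=LOOP_SECONDS):
    """Playback position of a loop that restarts from 0 when its key is pressed."""
    return (t - pressed_at) % loop_len


def mix(samples, volumes):
    """Sum one sample from each loop, scaled by that loop's volume (0.0 = muted)."""
    return sum(samples[name] * volumes[name] for name in samples)
```

At t = 9 s, an always-running loop is 1 s into the bar, while one restarted at 2.5 s is 2.5 s in, so the two would be audibly out of step.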

If I were to keep working on this project, I think it would be fun to make it into a full-sized Twister. A more bite-sized next step, though, would be to make the spinner text disappear on a timer instead. Currently, a new key, or color, has to be touched for the text to go away, so if the spinner instructs the participant to stay on the same color with a different finger, the text stays on the screen. Overall, though, I am super happy with the product; it was fun to program, and it was enjoyable to watch my friends experience it.

Pressure Project 2, Gabe Carpenter

Posted: February 21, 2022 Filed under: Uncategorized

My pressure project focuses on playing a simple maze game with the Makey-Makey. The game is controlled with the D-Pad and includes a reset mechanic, secret messages, and a short ending that reveals a secret exit.

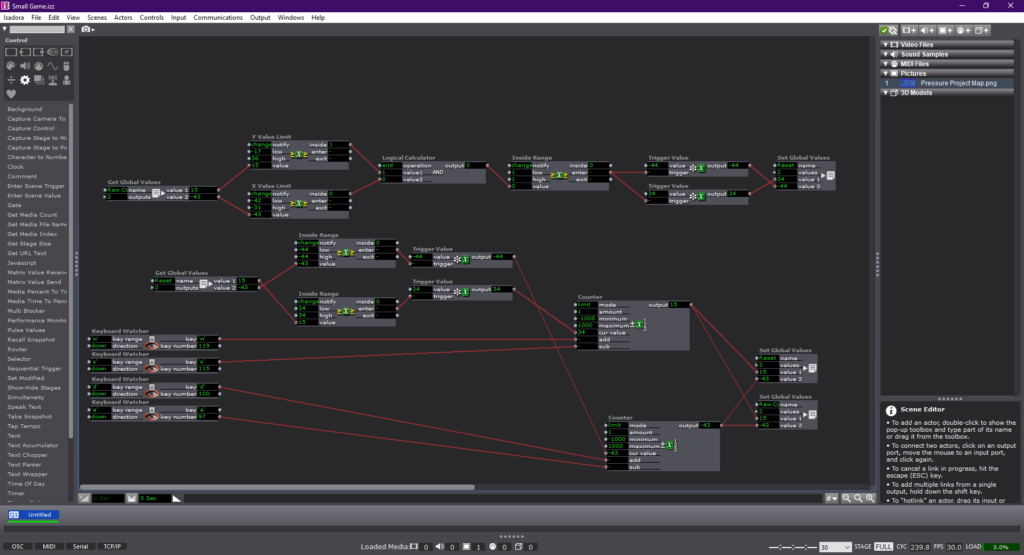

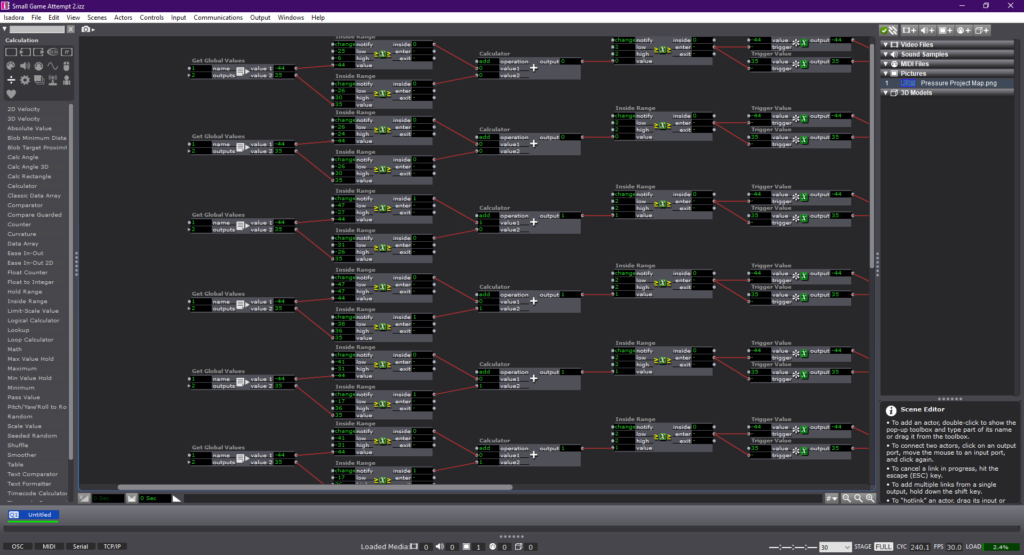

The first trial of my game had a non-functional collision system, but it aimed to set up the basic wall detection that the program would use. Wall detection started out as an attempt to use two Inside Range actors and comparators to determine whether each condition was met. The two Inside Range actors act as the x and y boundaries of a given wall.

The next phase of development yielded a solid method for determining hitboxes. This method feeds the outputs of the two Inside Range actors into a calculator, which adds them together. A result of 2 means the player is inside both the x and y ranges of a wall, which triggers a value that resets the player to their original position. The position data is sent to a Global Values actor, which relays it to the counter that acts as the memory portion of the game.
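The sum-equals-2 test reads naturally as code. Below is a minimal Python sketch. It assumes each Inside Range actor is modeled as outputting 1 when its input is within range and 0 otherwise, and that walls are axis-aligned rectangles. `hits_wall`, `move`, and the coordinates are hypothetical. Adding the two results and checking for 2 is simply a logical AND of the x and y tests.

```python
def inside_range(value, low, high):
    """1 if value lies in [low, high], else 0 (modeling an Inside Range output as 1/0)."""
    return 1 if low <= value <= high else 0


def hits_wall(x, y, wall):
    """wall = (x_min, x_max, y_min, y_max); the sum is 2 only when both axes are inside."""
    x_min, x_max, y_min, y_max = wall
    return inside_range(x, x_min, x_max) + inside_range(y, y_min, y_max) == 2


def move(position, step, walls, start):
    """Apply a D-pad step; send the player back to start if the new spot is inside any wall."""
    x, y = position[0] + step[0], position[1] + step[1]
    if any(hits_wall(x, y, wall) for wall in walls):
        return start
    return (x, y)
```

With one wall spanning x 2 to 3 and y -1 to 1, stepping right from (1, 0) lands at (2, 0), inside the wall, so the player resets to the start.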

The final layout may seem messy at first, but the long row along the left side of the image is a series of hit-detection pipelines, one for each wall. The row in the middle is an added sequence change that occurs when the player enters a certain area: the screen changes color and a message is given to the player. This uses another hitbox detection pipeline, which triggers a waveform generator to fade the new image onto the map and then take it away again. The other, smaller pipelines are the controller link and the ending “cutscene” trigger.
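The area-triggered message can be sketched the same way: a hitbox check starts a one-shot envelope that fades the overlay in and back out. Below is a minimal Python sketch. It assumes half of a sine cycle as the fade shape and a rectangular trigger area. `fade_opacity`, `AreaMessage`, and the timings are hypothetical and not taken from the patch.

```python
import math


def fade_opacity(t, start, duration):
    """Overlay opacity: 0 outside the window, rising to 1 at the midpoint and back to 0.

    Uses half of a sine cycle as the envelope (an assumed waveform choice).
    """
    if t < start or t > start + duration:
        return 0.0
    return math.sin(math.pi * (t - start) / duration)


class AreaMessage:
    """Starts the fade once when the player first enters a trigger area (hypothetical helper)."""

    def __init__(self, area, duration):
        self.area = area  # (x_min, x_max, y_min, y_max)
        self.duration = duration
        self.started_at = None

    def update(self, x, y, t):
        x_min, x_max, y_min, y_max = self.area
        if self.started_at is None and x_min <= x <= x_max and y_min <= y <= y_max:
            self.started_at = t
        if self.started_at is None:
            return 0.0
        return fade_opacity(t, self.started_at, self.duration)
```

Recording the start time only on first entry makes the message play once, even if the player lingers in the area.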

This is a video of me playing the game and achieving the ending.

Pressure Project 1- Congestion – Min Liu

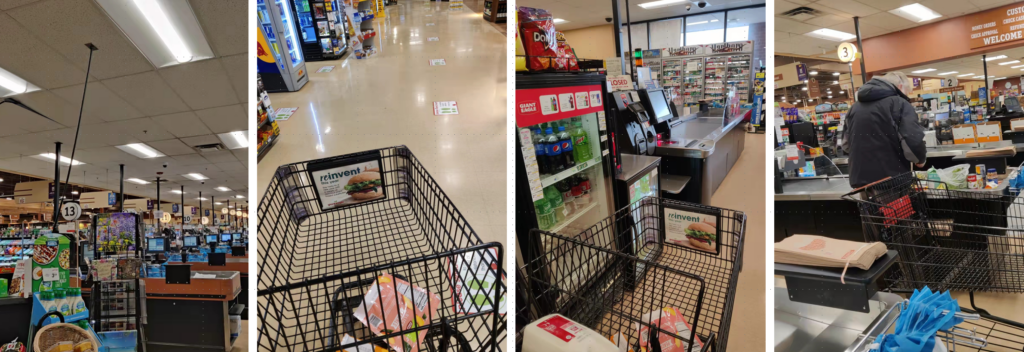

Posted: January 27, 2022 Filed under: Uncategorized

On weekends and holidays, many people shop at grocery stores, and congestion often happens in the checkout area. For this pressure project, I observed the people, environment, and technology in the self-checkout area of the Giant Eagle grocery store to better understand the traffic there, and brainstormed some ideas (benevolent interventions) to solve this issue.

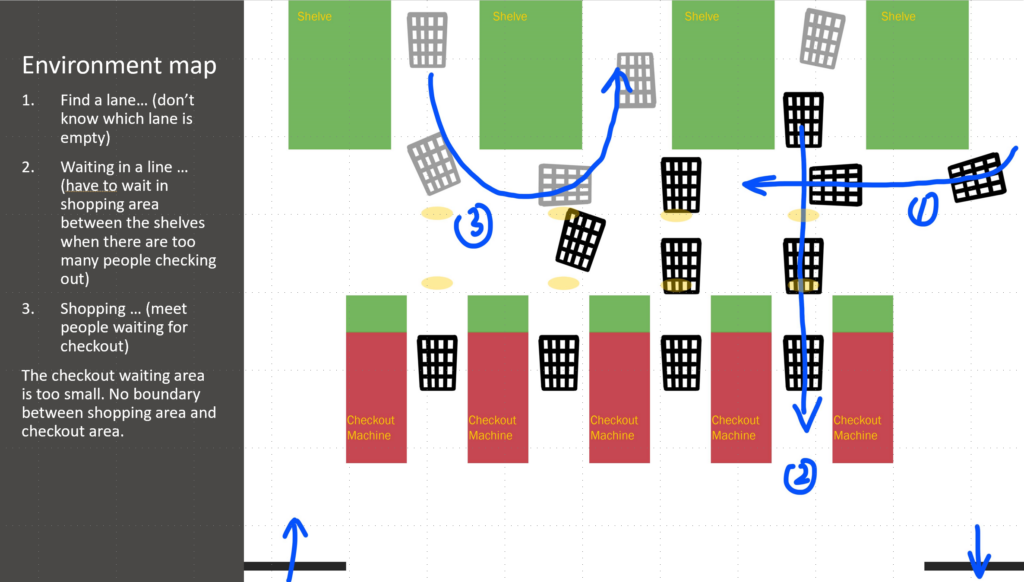

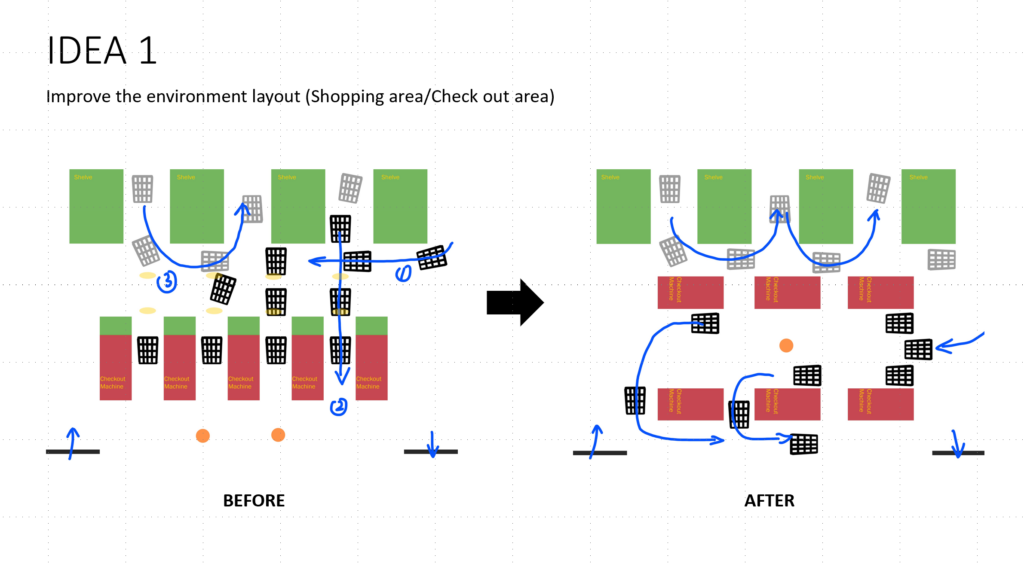

I took some photos in this area. Based on past experience and my observation today, I drew this environment map, which shows the flow direction, congestion patterns, and their relationship to the physical environment.

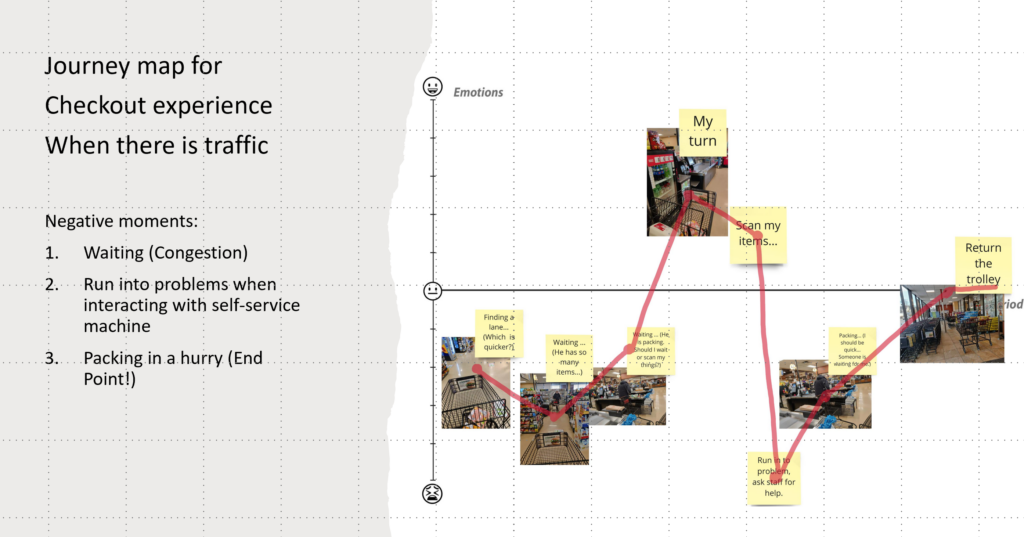

The journey map helped me organize and visualize customers’ behavior and emotional changes during the self-checkout process. It also shows some design possibilities to enhance the shopping experience.

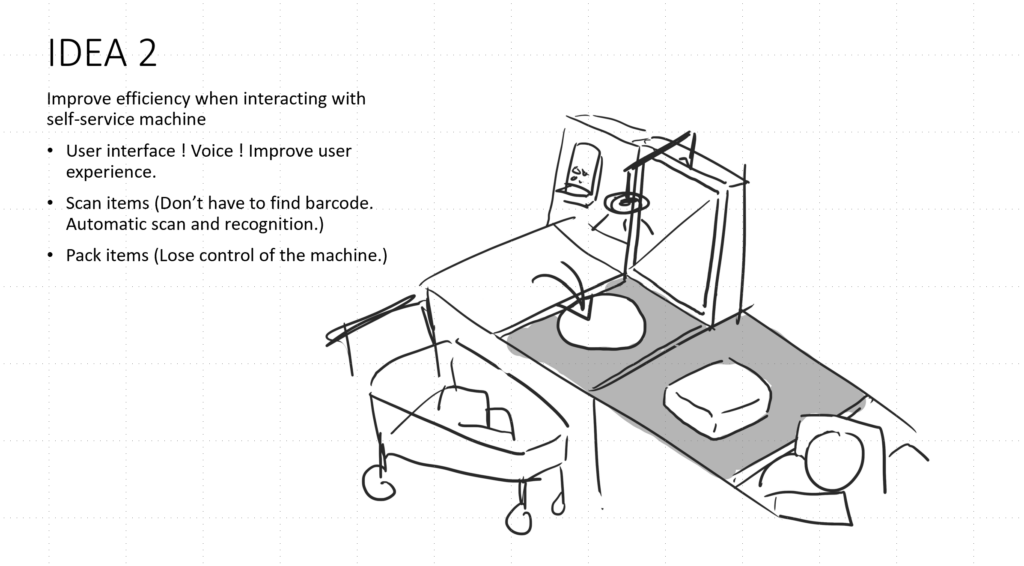

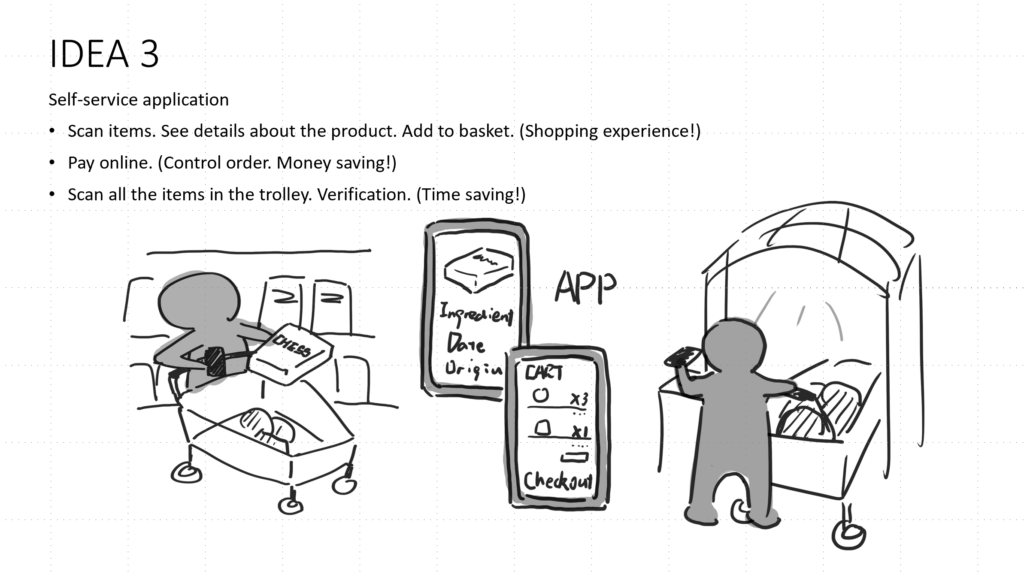

To avoid congestion and enhance the self-checkout experience, we can change the environment layout (IDEA 1) and improve the efficiency of interaction with the self-service machines (IDEA 2). Redesigning a self-service machine is a big project. Many things need to be improved, including the user interface, packing area, scanning system… A more automatic scanning system could avoid the troublesome process of finding barcodes or entering item numbers. (Alec said Kroger had tried a similar checkout system in some pilot stores.) Then it suddenly occurred to me: why must we wait and check out in a specific area, and why can’t we just pay while we are shopping? That led to my third idea, a self-service app, which I think would save both time and money. Alex said that this app could be malevolent if it were designed to encourage more consumption. That’s true. It’s important to think about the possible issues caused by technologies.

Pressure Project 1 (Theme Park Admission Queuing) – Alec Reynolds

Posted: January 27, 2022 Filed under: Uncategorized

During my visit to Universal Orlando Resort’s Islands of Adventure, I noticed an interesting dynamic of social congestion before I even entered the park. To gain admission, one must pass through turnstiles at the front of the park with a valid form of admission. Before the park opens for the operating day, eager guests, ready to get a jump on their day, pile in by the hundreds. This leads to a huge backup of guests and inefficient admission throughput. Before we dive into a solution to this problem, let’s contextualize the situation with some of my observations.

OBSERVATIONS:

-Looking at the nature of the congestion, I noticed that it was homogeneous: the majority of people moved towards the gates. Most of the congestion consisted of groups rather than individuals.

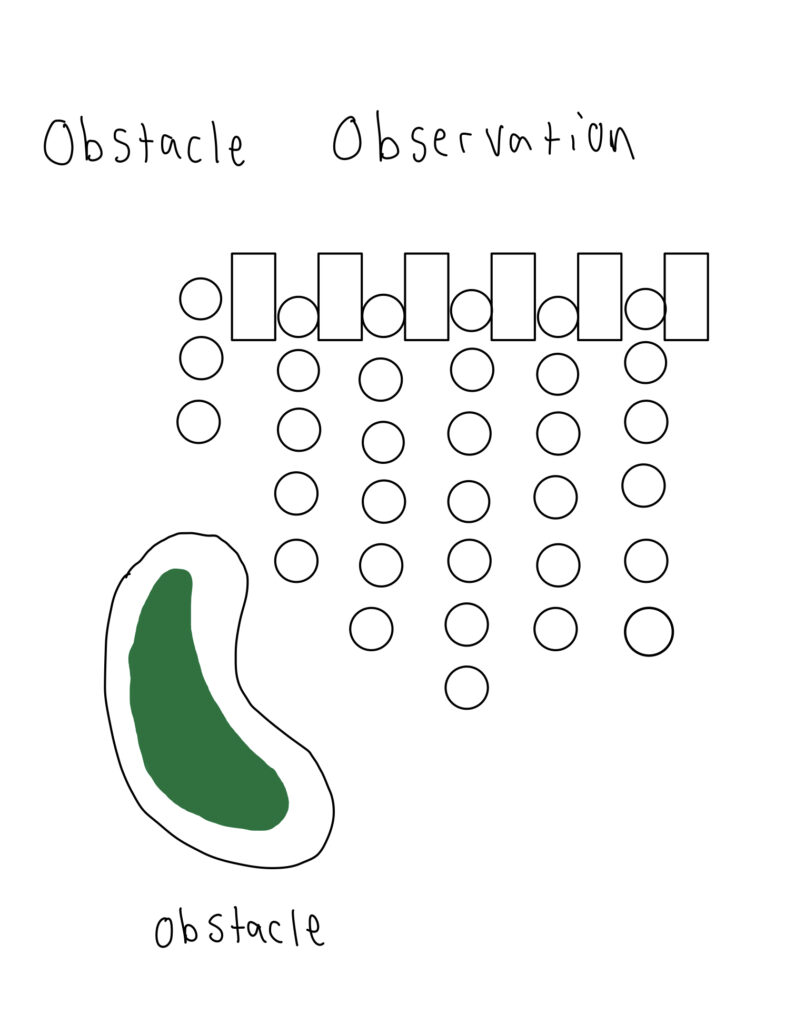

-There were many obstacles that the crowd had to navigate around, including floral planters, ticket kiosks, poles and shade structures, ticket sale booths, trees, and other common objects like waste receptacles.

-All the lines moved at a unique pace.

-Groups were often spotted abandoning their spot in line to switch to a seemingly faster one.

-Some turnstile lines appeared to have fewer guests in them if they were partially obscured by an object.

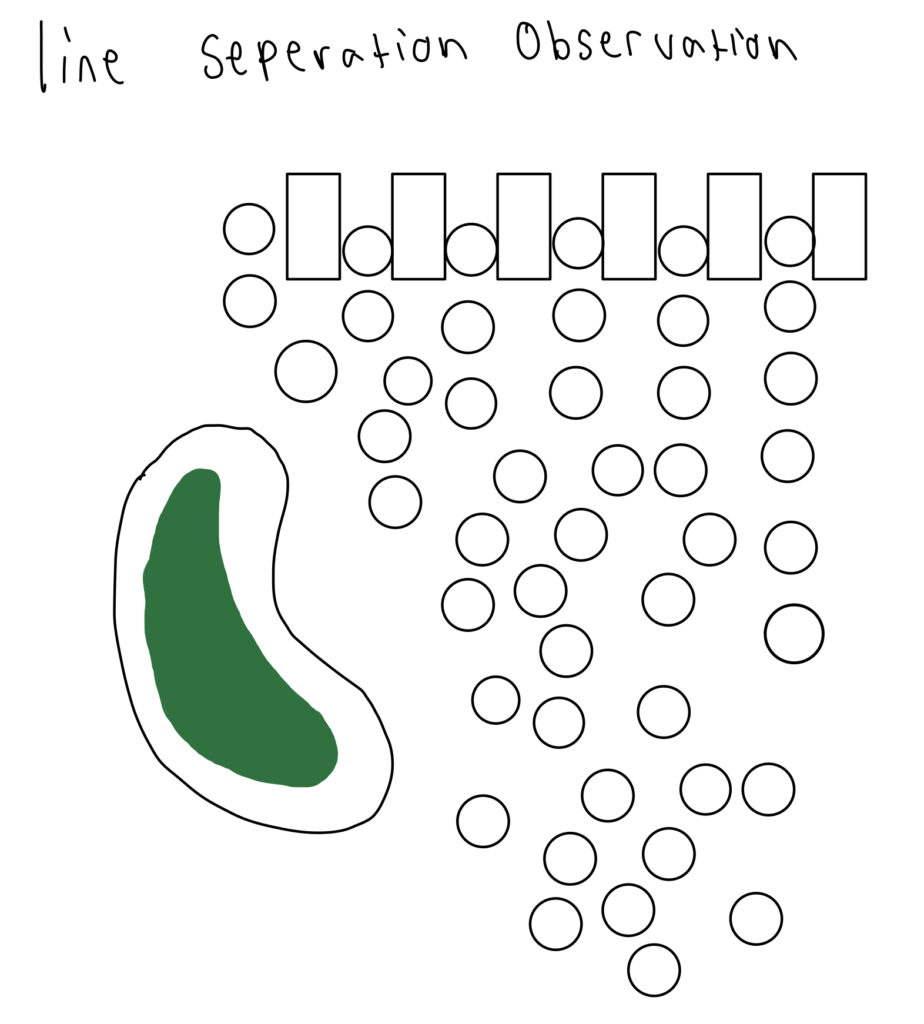

-From far away, there was no clear separation of individual lines.

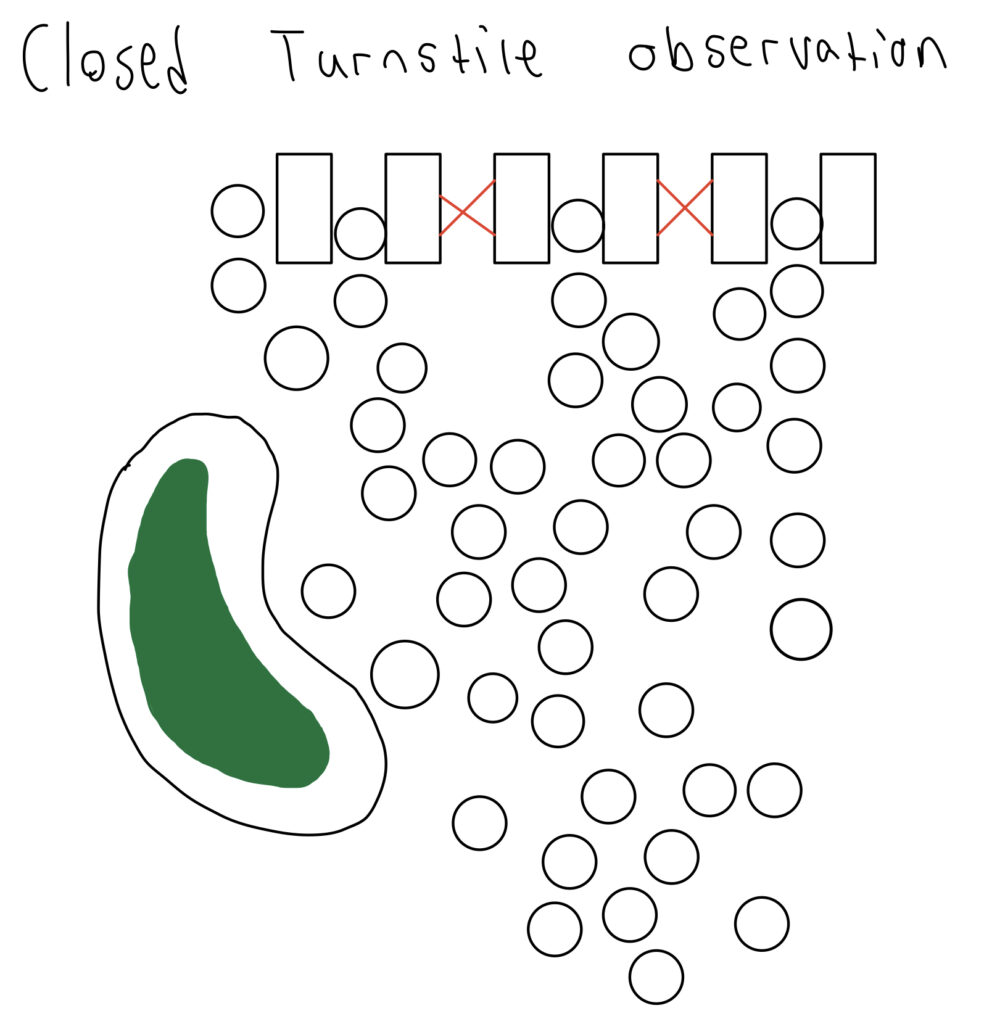

-Closed turnstiles would lead to a bottleneck merge effect close to the open turnstiles, creating an even denser congestion.

Observational Imagery:

Benevolent Solution

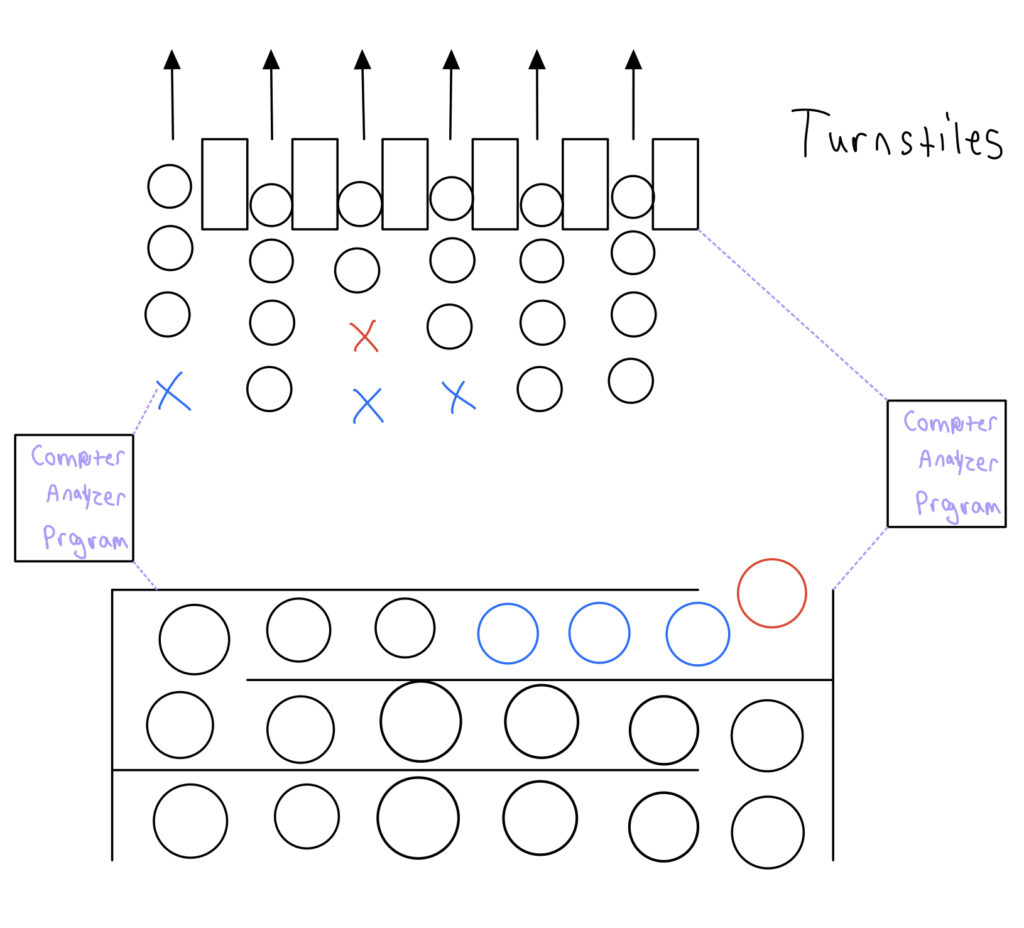

My solution to this problem revolves around a program that can identify the current length of each line, identify guests waiting in a single queue, and assign them a turnstile line to go to. All guests would enter one physical queue together and would eventually be told which line to join by a human operator in communication with the crowd-grouping analyzer.

More specifically, the program would have to give a weight to different groups to ensure that its assignments create nearly equal wait times for all guests entering the park. Many discretionary factors would have to go into this type of screening algorithm, which walks the line between data representation and discrimination.

Ultimately, this is a way of giving guests the perception that their wait time is shorter than it actually is. By having the crowd move consistently in a single line (everyone equal) and then assigning each group a turnstile line with an equivalently short wait, it should take away that nagging sensation that all too many of us have had before: “Ugh, I picked the wrong line.”
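The weighting step can be sketched as a greedy scheduler. Each group at the front of the single queue goes to the turnstile line that will clear soonest, weighted by how long that group takes to get through. Below is a minimal Python sketch using a simple list-scheduling heuristic in place of the full design. It assumes a group's weight is just its size times a fixed processing time per guest. A real system would need the discretionary factors mentioned above, and `seconds_per_guest` is a hypothetical parameter. This heuristic keeps lines close to balanced but does not guarantee perfectly equal waits.

```python
import heapq


def assign_groups(group_sizes, n_turnstiles, seconds_per_guest=5.0):
    """Assign each group, in queue order, to the turnstile line that clears soonest.

    Returns (line index per group, time at which each line will be clear).
    """
    # Min-heap of (time until this line is clear, line index).
    lines = [(0.0, i) for i in range(n_turnstiles)]
    heapq.heapify(lines)
    assignments = []
    for size in group_sizes:
        clear_at, line = heapq.heappop(lines)
        assignments.append(line)
        # Weight of the group = its size times the assumed per-guest processing time.
        heapq.heappush(lines, (clear_at + size * seconds_per_guest, line))
    finish = {line: clear_at for clear_at, line in lines}
    return assignments, [finish[i] for i in range(n_turnstiles)]
```

For example, with two turnstiles and groups of 4, 1, 1, and 2 guests, the family of four gets one line to itself and the three smaller groups share the other. Both lines clear at the same time.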

Pressure Project 1: Benevolent Spacing

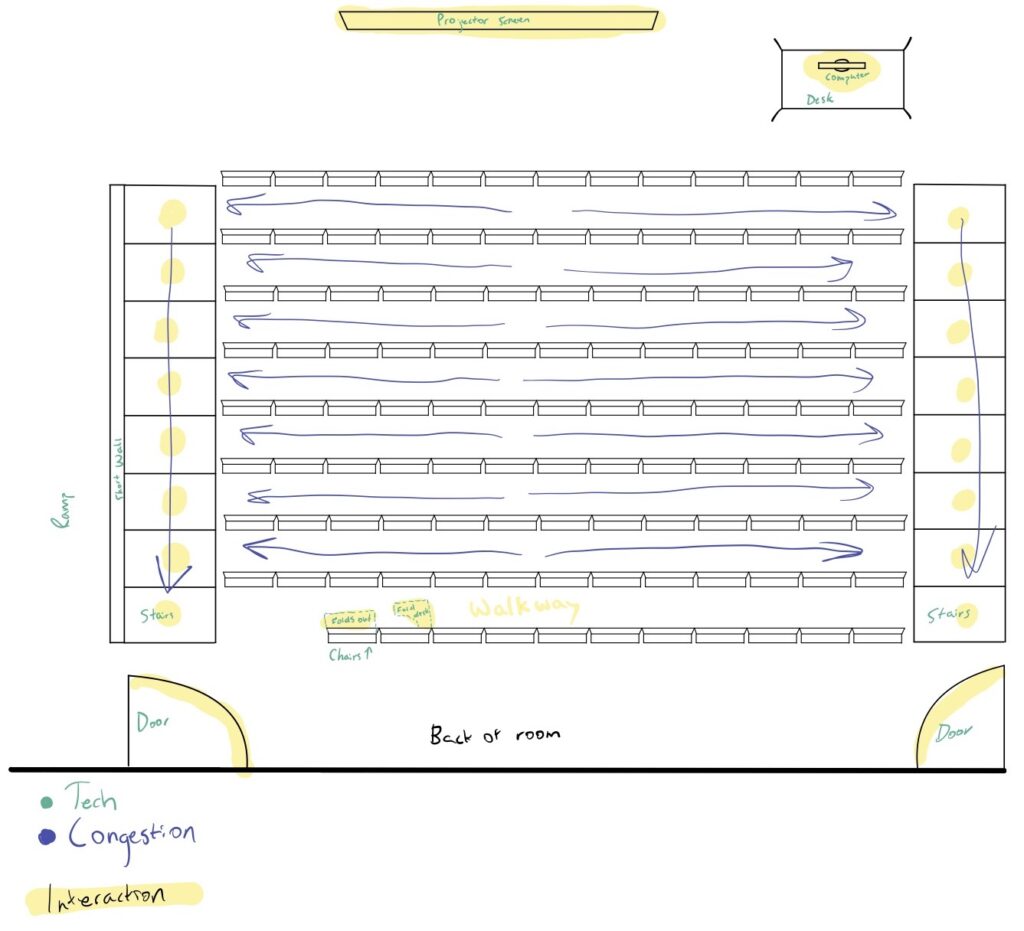

Posted: January 27, 2022 Filed under: Pressure Project I, Uncategorized

For this pressure project, we were assigned to observe a public space experiencing a high level of traffic and congestion. The day before it was due, I had a very stressful experience involving traffic patterns and congestion in a classroom (including a spilled coffee), so I decided to make that classroom my chosen space for the project.

In the classroom, the rows of seats are very close together and hard to walk through. Desks attached to the seats fold out, leaving even less walking space. During the last 60 seconds of class, everyone starts aggressively packing and zipping their backpacks, cueing our teacher to end the class. To get out of their seats, people either have to step around others, with their fat backpacks running into each other, or wait for the person on the outside to finish packing up. Being left-handed, I like to sit on the outside to get the left-handed desk. When class ends, people are either awkwardly squeezing around me or waiting for me to gather my items in a discombobulated manner.

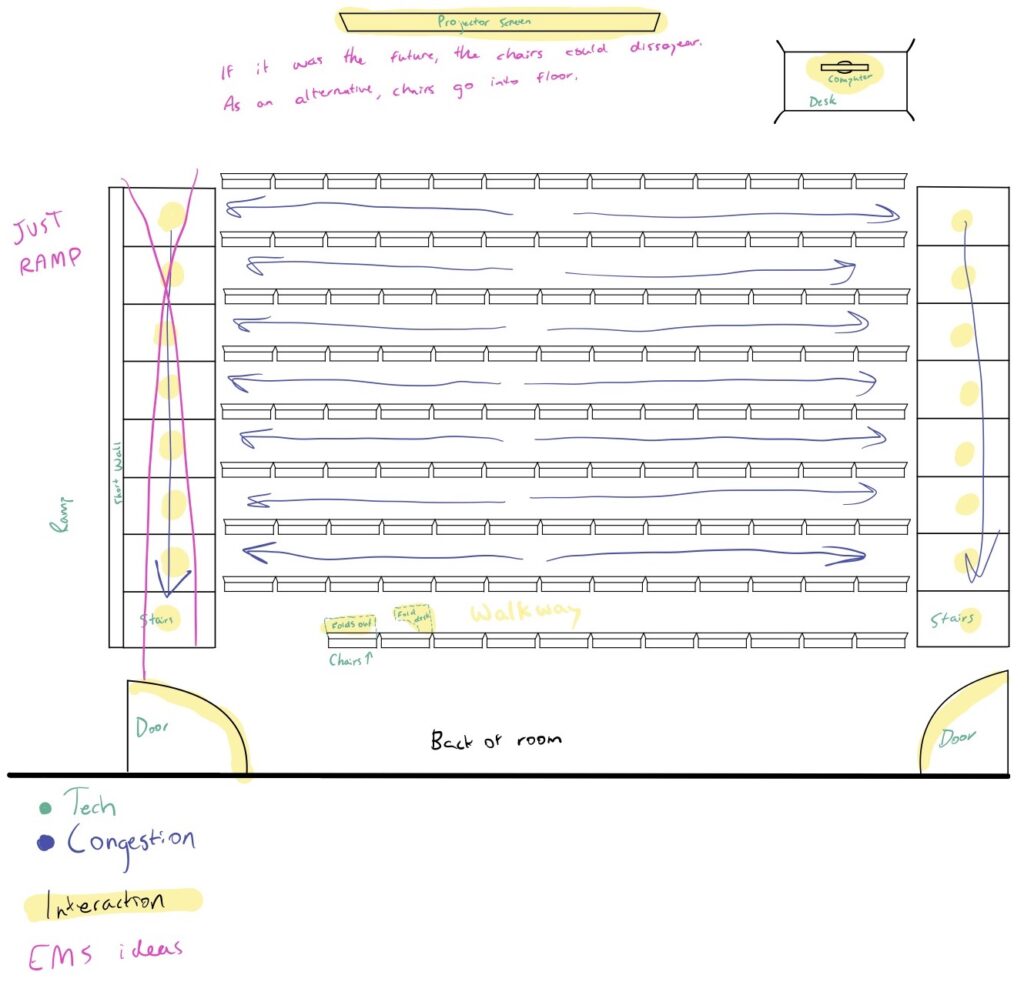

In the picture below, I drew a diagram of the classroom illustrating the different tech in the room, as well as where the congestion occurs.

After creating this diagram, I began to brainstorm ideas for how to benevolently adjust the space to reduce the congestion. Here are some ideas I had:

- Eliminate the left-side stairs, making that whole side a ramp and giving more space to walk up towards the door.

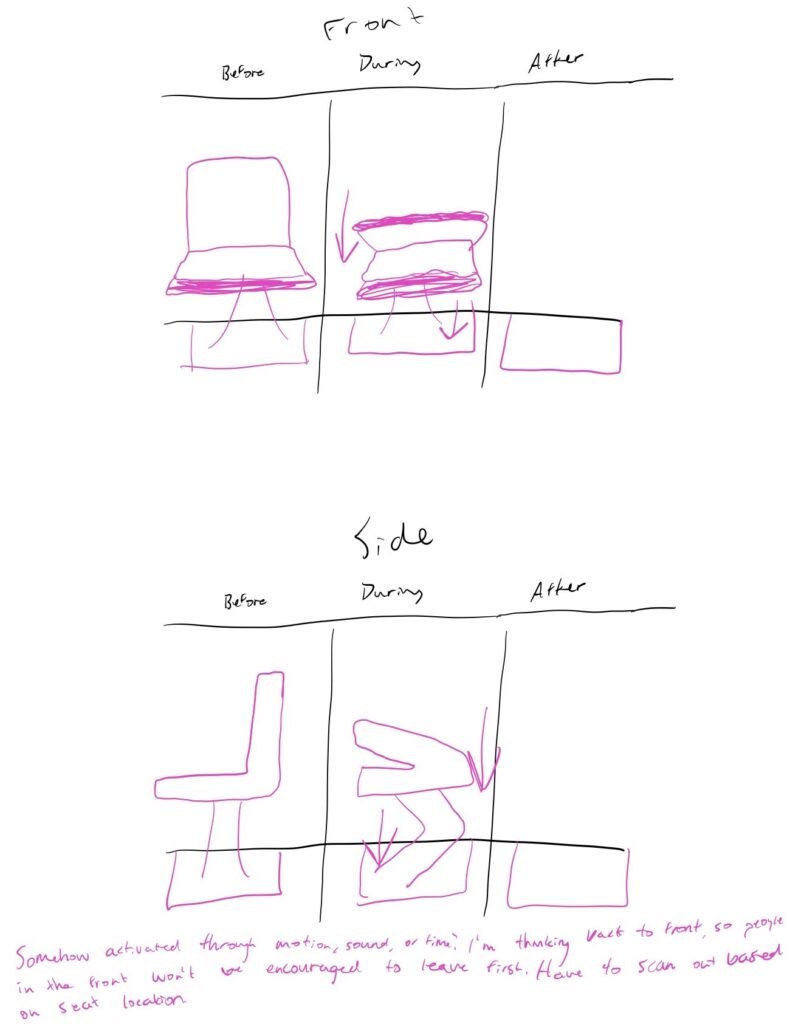

- There is not enough space between the chairs. To fix this, we would only put out as many chairs as there are students. When not in use, chairs are stored under the floor. They are collapsible/foldable and only come out when they are being sat in.

- To guide traffic, the seats could fold down automatically, working their way from the back to the front. This would encourage students who sit in the back to leave first, and the students who are sitting in the front will be encouraged to wait their turn to leave and not run over the people trying to get out of their seats closer to the back.

- Since this is college, it’s possible that the students won’t care about the order the seats fold in. So instead, students would swipe their BuckID on their seat, and they would need to swipe their BuckID again to exit the room. The swipes would only be accepted in a particular order based on the seats the students were sitting in. While this is more authoritarian than I prefer, it would create a more efficient traffic pattern when leaving the classroom.

- Finally, Alex offered the idea of the teacher letting the students out five minutes early, but making sure to discuss with the students that the five minutes was given to them to take their time leaving and to not run fellow students over on their way out the door.

In the picture below, I drew out what some of the modifications to the room could look like, and I also drew out what the folding seat would look like:

This pressure project challenged me to think more deeply about inefficient uses of the space and designs that were not made by people who would also be using the space. The people who designed this classroom were not also going to be sitting in there, and then have to make it across campus in ten minutes for their next class. As someone who is in the space, I am able to think more experientially about the design, and then make adjustments where I see fit.

Pressure Project 1 – Yujie

Posted: January 27, 2022 Filed under: Uncategorized

When I saw the keywords “traffic” and “congestion” in the pressure project instructions, the first thing that came to mind was street intersections. English is my second language, and the way I learned those two words when I was young kept me from thinking about them broadly and metaphorically. Although I realized that I had limited myself to a narrow concept of traffic and congestion, the tight time frame kept me from starting the process over, so I decided to stick with what I had already chosen: an intersection on High Street. One thing I took from this experience is that we should always think twice about descriptive language itself and be aware of any cultural or experiential bias we might bring to it.

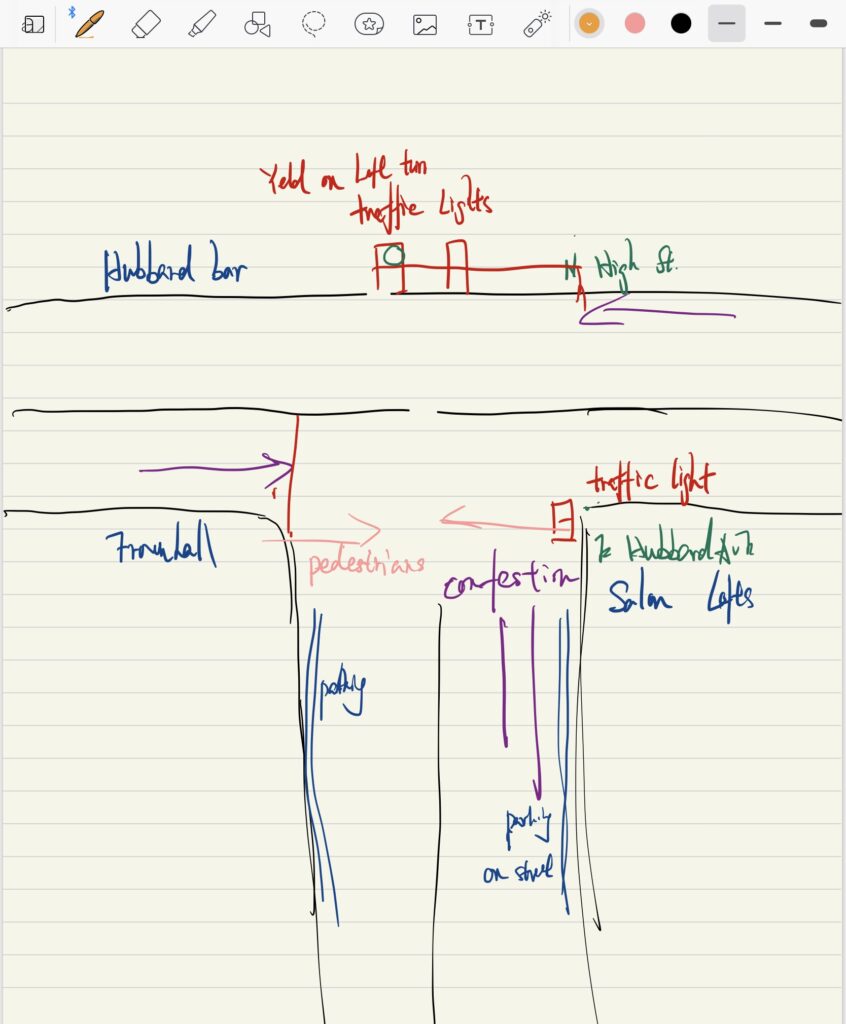

Here is a top-down diagram I have drawn:

I’m using the AEIOU framework from the week 1 readings to organize what I observed about heavy traffic congestion at the intersection of N High St. and E Hubbard Ave. on a weekend night. AEIOU stands for Activities, Environments, Interactions, Objects, and Users.

Activities: pedestrians crossing E Hubbard Ave.; vehicles moving from E Hubbard Ave. onto N High St. and vice versa.

Environments: the intersection is surrounded by busy bars and restaurants in the Short North. On weekends after about 10 pm, partygoers pack High Street. There is heavy congestion on E Hubbard Ave., where cars try to turn right or left onto High St.

Interactions: drivers coming from E Hubbard Ave. encounter the traffic lights, avoid pedestrians walking across the street, and yield to drivers on High Street; drivers on High Street move at normal speed; pedestrians encounter the traffic lights.

Objects: there is a traffic light for pedestrians crossing the street, and another traffic light for vehicles coming from E Hubbard Ave.

Users: drivers on E Hubbard Ave. who experience congestion during peak traffic hours on weekend nights.

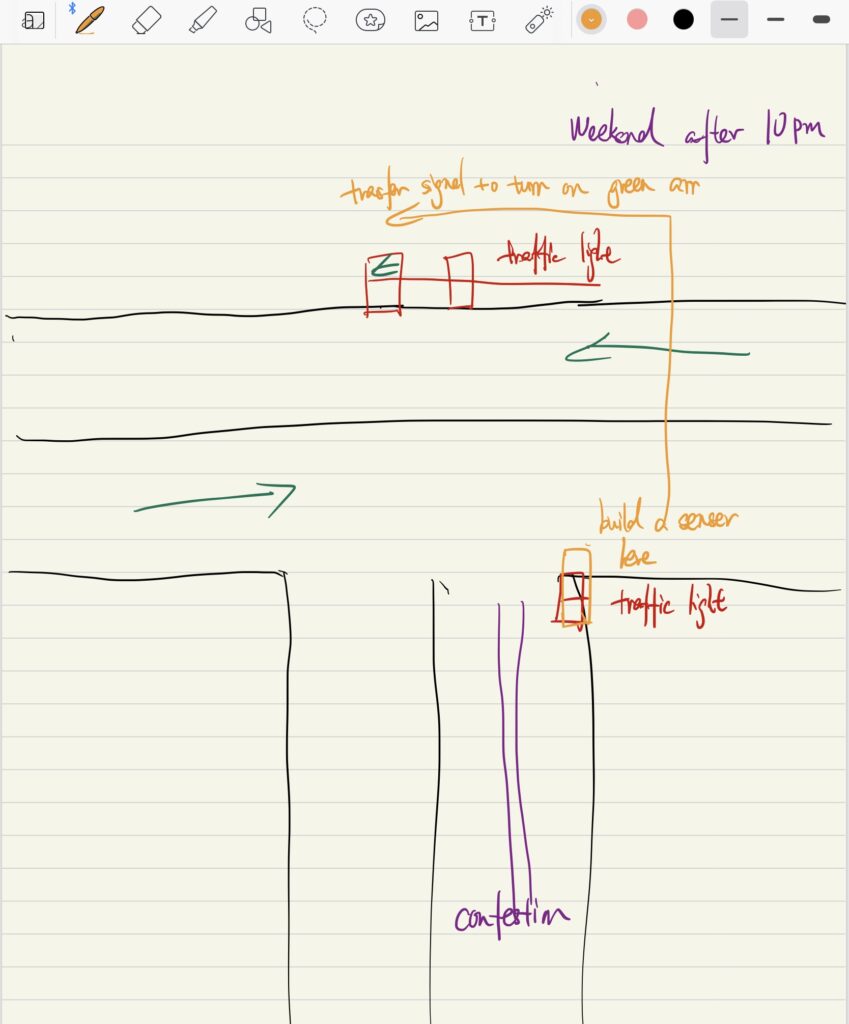

Problem: drivers coming from E Hubbard Ave. experience congestion because they have to wait for pedestrians to cross and yield to drivers on High Street coming from both directions. The left-turn signal only turns to a green circle instead of a green arrow, so drivers have to wait a long time until both directions are clear. This might not be a problem during off-peak times, such as weekdays or daytime.

Intervention: I suggest installing a congestion sensor on the right side of E Hubbard Ave. to track the congestion. The sensor could be something like a kinetic sensor that is sensitive to the movement of vehicles. If vehicle movement stops for more than about one minute, the sensor will trigger the traffic light on High Street to show a green arrow instead of a green circle. The sensor could be turned on only on weekends from 10 pm to 1 am.
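The proposed rule combines a time window with a stop-duration threshold. Below is a minimal Python sketch of that decision logic. It assumes "weekend" means Friday and Saturday nights, that the sensor reports when vehicles last stopped moving (`stopped_since`), and that the signal has only two states. All names are hypothetical, and a real signal controller would work very differently.

```python
from datetime import datetime, timedelta
from typing import Optional

STOP_THRESHOLD = timedelta(minutes=1)


def sensor_active(now: datetime) -> bool:
    """True during the 10 pm to 1 am window on weekend nights.

    Assumption: "weekend" means Friday and Saturday nights, so the window
    starts at 22:00 on Friday or Saturday and ends at 01:00 the next morning.
    """
    if now.hour >= 22:
        return now.weekday() in (4, 5)  # Friday, Saturday evenings
    if now.hour < 1:
        return now.weekday() in (5, 6)  # early Saturday, Sunday mornings
    return False


def left_turn_signal(now: datetime, stopped_since: Optional[datetime]) -> str:
    """Show a green arrow when the sensor is on and traffic has been stopped over a minute."""
    if stopped_since is not None and sensor_active(now) and now - stopped_since > STOP_THRESHOLD:
        return "green arrow"
    return "green circle"
```

Because the window crosses midnight, the check is split by hour: evenings belong to Friday or Saturday, and the hour after midnight belongs to the following day.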

See the photo below:

Pressure Project 1

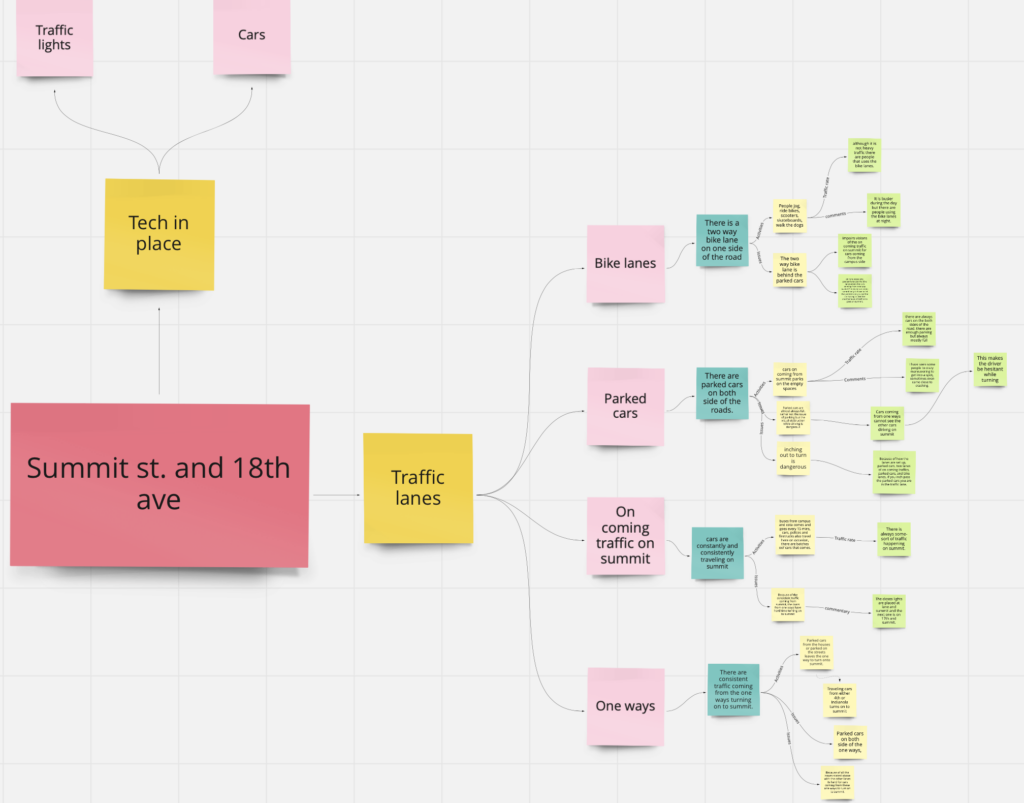

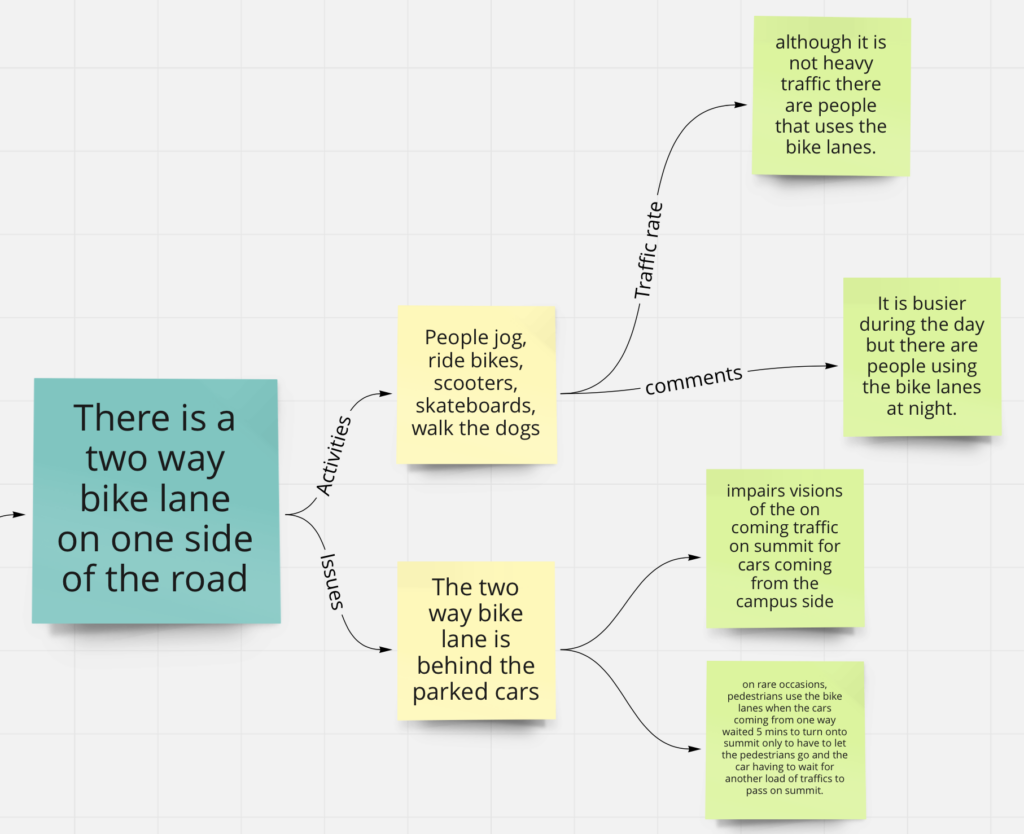

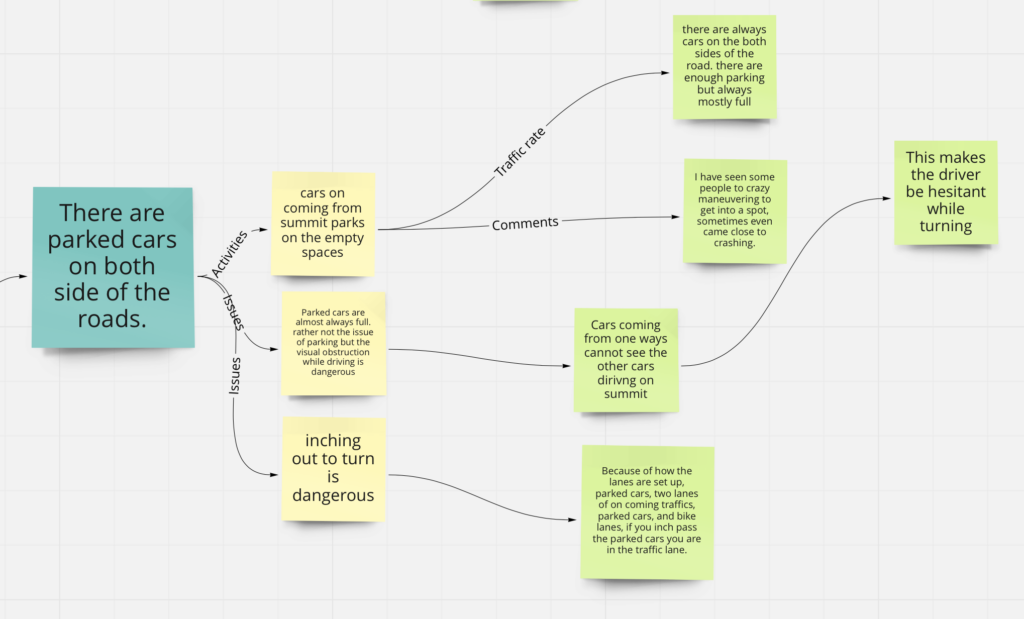

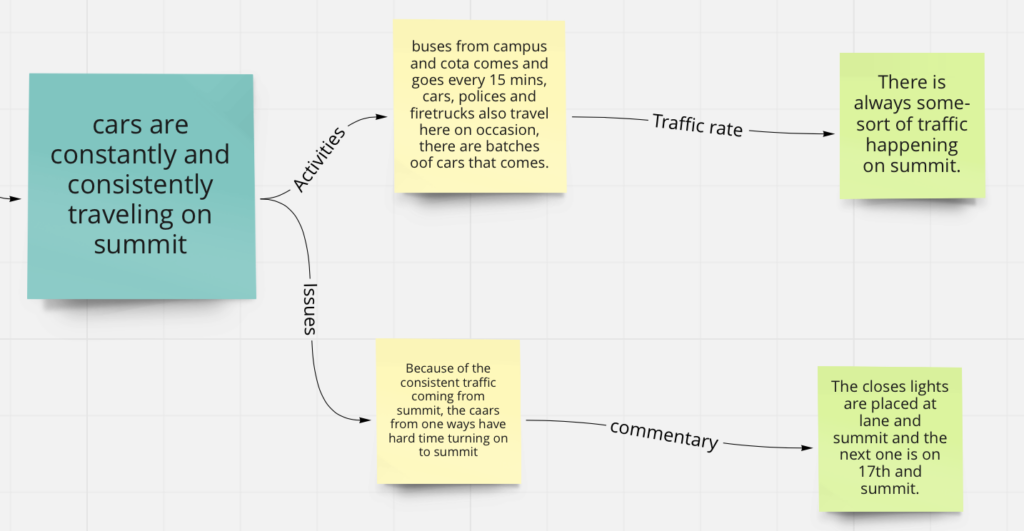

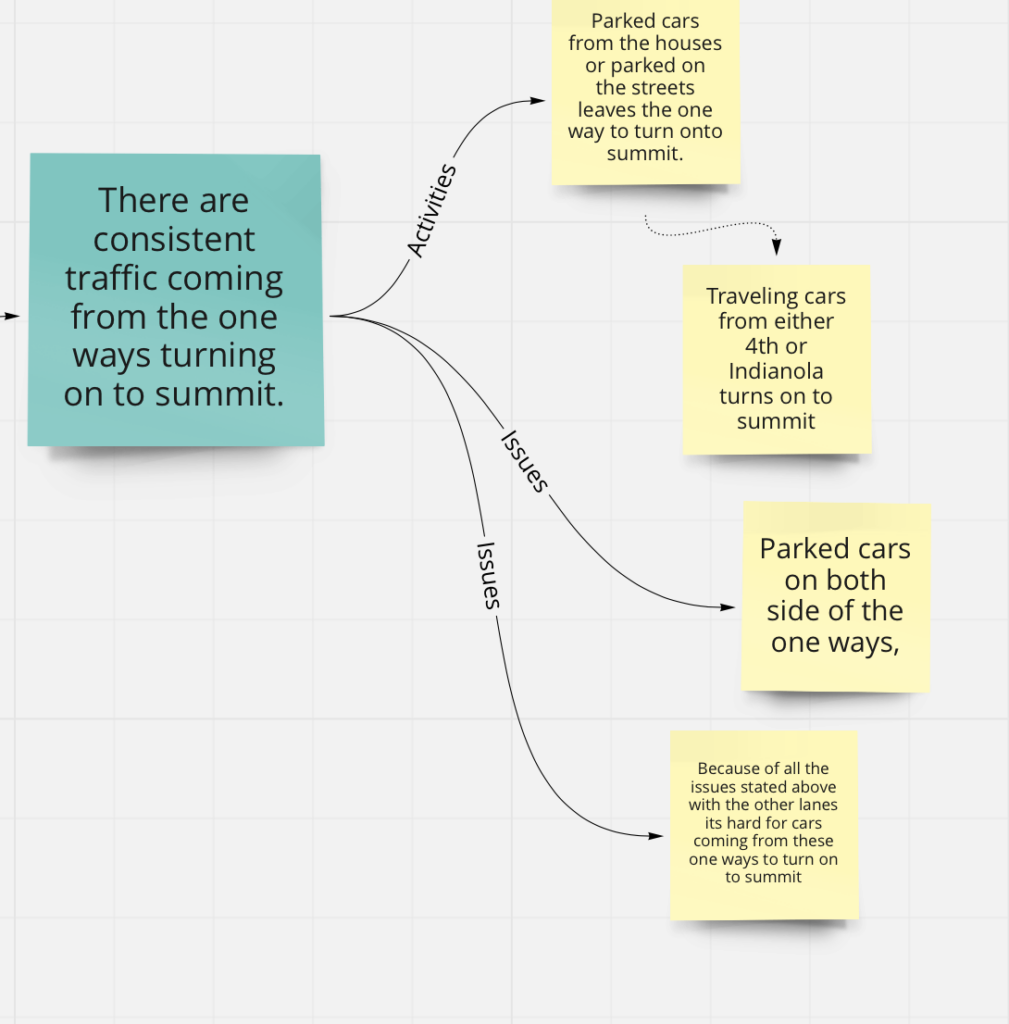

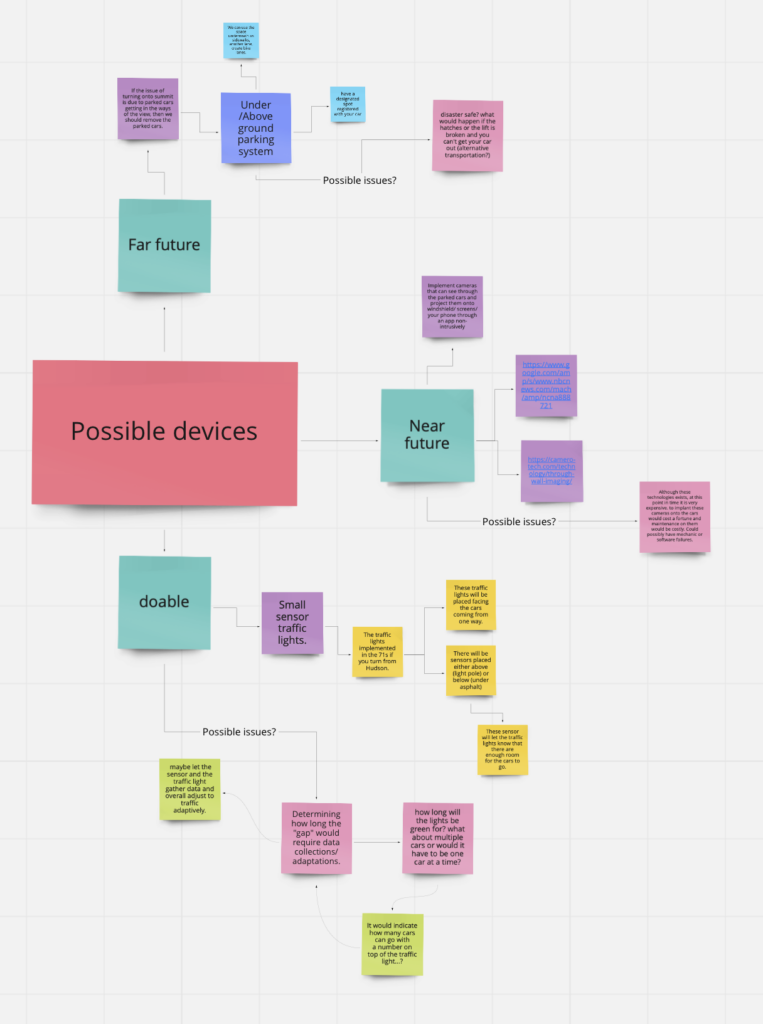

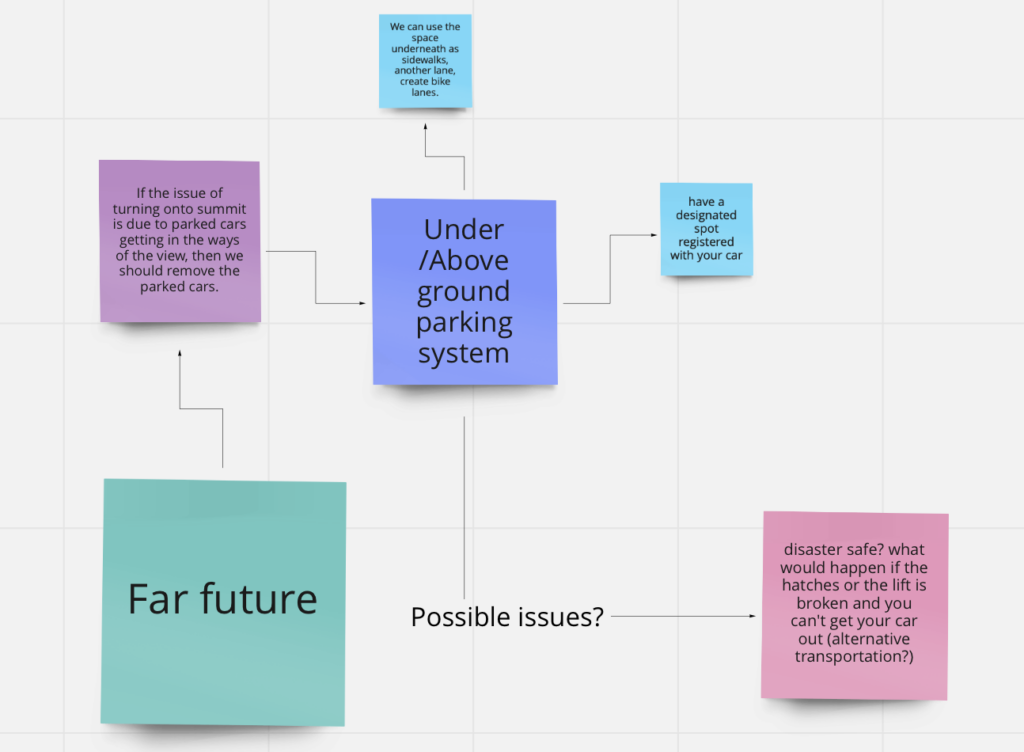

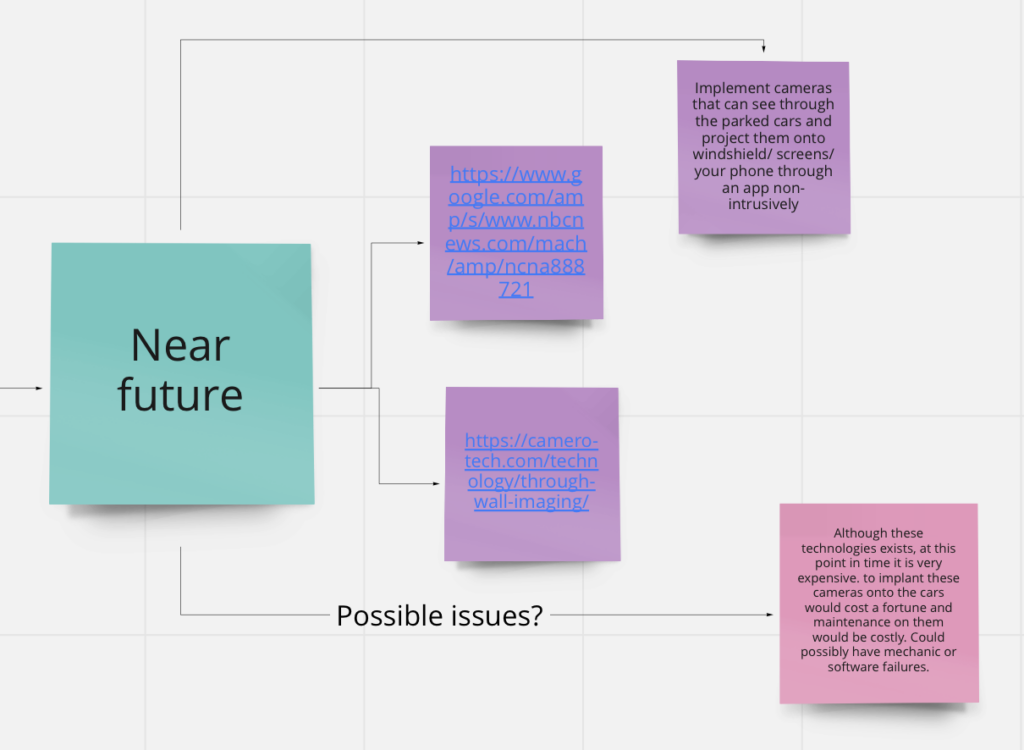

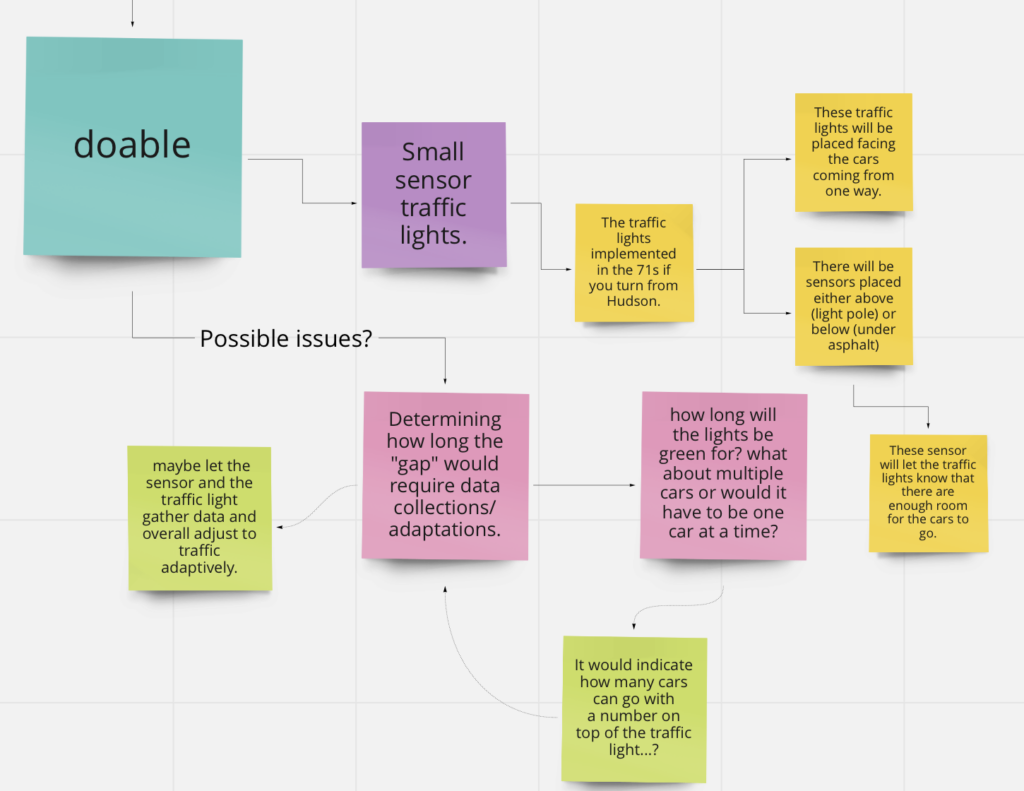

Posted: January 27, 2022 Filed under: Uncategorized

I chose the intersections of the one-way streets with Summit St. Summit is a two-lane, one-way street with parked cars on each side of the road and a two-way bike path. So realistically, Summit is a five-lane street with two of those lanes taken up by parked cars. During my observation, I remembered how terrible it is to turn onto Summit, because you cannot see past the parked cars to the oncoming traffic on Summit. So I started taking notes on my iPad about traffic patterns and eventually transferred them to a mind map.

After taking notes and organizing them, I tried to think of ways to address these issues and made a mind map.

These are two links that are in the mind map; they discuss see-through wall technology, if anyone is interested.

In conclusion, there are implementable actions we could plan and initiate; we just haven’t figured out where the taxes we paid last year went.