Pressure Project II

Posted: December 16, 2016 Filed under: Pressure Project 2, Robin Ediger-Seto, Uncategorized

For our second pressure project we were tasked with creating a project with a single-person experience and an interface. As I was already thinking about story for my final project, I wanted to integrate intimacy and the idea of stories told by the audience. I wanted to create a space in which the viewer was prompted to tell a story and then be an audience to other stories about the same topic. I also wanted to use an unconventional tactile interface.

In the end I created a system that read one of my shirts and looked at its color to determine whether it was there or not. If the shirt was present, it would trigger text on the screen that instructed the viewer to tell a story. The story would then be captured to the computer and played along with all the other stories after the party was done. In theory this would work, but in practice it kind of failed. I didn’t give myself the time and space to calibrate the computer’s understanding of the shirt under new lighting conditions. And I had not quite figured out how to use Isadora’s capture to disk actor.
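As a rough illustration of the calibration step described above (a Python sketch, not the Isadora patch itself), the shirt check can be thought of as comparing a region's average color against a reference sampled under the current lighting. The function names, pixel values, and tolerance are all hypothetical.

```python
from statistics import mean

def average_color(pixels):
    """Average a list of (r, g, b) tuples into one (r, g, b) tuple."""
    return tuple(mean(channel) for channel in zip(*pixels))

def calibrate(sample_pixels):
    """Record the shirt's average color under the current lighting."""
    return average_color(sample_pixels)

def shirt_present(pixels, reference, tolerance=40):
    """True if every channel of the region's average is within tolerance of the reference."""
    avg = average_color(pixels)
    return all(abs(a - r) <= tolerance for a, r in zip(avg, reference))

# Hypothetical readings: sample the shirt in the new room before the party starts.
reference = calibrate([(200, 40, 50), (190, 50, 60)])
```

Re-running `calibrate` whenever the lighting changes is the step that keeps the fixed tolerance meaningful.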

There were ideas in it that I was very attracted to and will continue to work on. I really like the idea of a tactile interface being an everyday object, especially when the object holds significance or comfort. I was also interested in the different stories that may emerge from someone simply talking into a computer.

Pressure Project 3

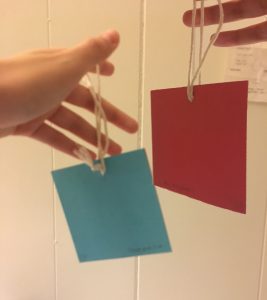

Posted: December 15, 2016 Filed under: Uncategorized

For Pressure Project 3 I used two colors as a dice to activate different interactivity modes. First, I created a square, two-sided (red and blue) paper dice on a piece of string.

I introduced the dice to the viewer and asked her to experiment with it by showing it to the camera. In order to see the “trick”, she needs to show each color on the correct side of the camera view. I divided the screen into two halves with the crop object and assigned red or blue to each side. Blue is tracked only on the left side of the camera/screen and red only on the right.

Blue activates a different sound and blue visuals; the color picker also triggers a type object that indicates “ “, in addition to a whisper soundtrack in the background. Red activates a “jackpot!” illustration whose scale changes with the movement value, and a clap soundtrack to indicate the “win”.
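The left/right rule described above can be sketched in a few lines of Python. This is only an illustration of the logic, not the Isadora patch; the function name, the mode labels, and the assumption that a tracker reports a color name and a horizontal position are all hypothetical.

```python
def active_mode(color, x, frame_width):
    """Return the mode triggered by a color seen at horizontal position x, or None."""
    on_left = x < frame_width / 2
    if color == "blue" and on_left:
        return "whisper"   # blue only counts on the left half
    if color == "red" and not on_left:
        return "jackpot"   # red only counts on the right half
    return None            # right color, wrong side: nothing happens
```

For a 640-pixel-wide frame, `active_mode("blue", 100, 640)` selects the whisper mode, while the same blue side shown at `x=500` triggers nothing.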

I used the dice to symbolize two different image and sound results that are presented to the viewer. Red symbolizes a winner theme, while blue symbolizes a random/neutral trigger with murmur and whisper sounds in the background. I used blue as a prompt to keep trying to find the right side and red as the finishing point of the game (with a win). It seemed to work on the audience: they found both the red and blue sides.

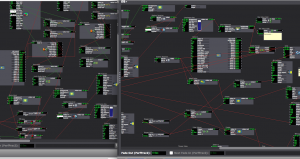

I tried to come up with a simple dice idea so that the object could move freely in front of the camera. The color coding seemed to work well, which is an important technique to use and learn in visual programming. In addition to the chroma key object, I used the inside range and measure color objects for the first time. The measure color object works great, especially for measuring the exact RGB values of pixels. I am surprised how much I learned from a dice concept. A dice can be anything that triggers different functions.

Here is the Isadora file: karaca_pp3-izz

Two sided paper dice

The video is captured from the screen’s perspective, so when we see the red side of the dice in the video, the camera sees the blue side, and vice versa.

Cycle 1, 2 & 3

Posted: December 15, 2016 Filed under: Uncategorized

Hello,

I documented all parts of my process on my wordpress blog:

https://ecekaracablog.wordpress.com/2016/10/17/visualizing-the-effects-of-change-in-landscape/

All the visuals and sketches are on the website and the video is on the way.

Cycle 1:

While I was dealing with the analysis of the data, I came up with a very simple demonstration of the variables, placing them on a visual reference (a map) to show their exact locations in the country. I wanted to show the main location with a map, considering the audience might not know exactly where Syria is.

Text and simple graphic elements formed the starting point of the project. After I shared it with the class, the feedback I got was mainly curious questions. One of my peers asked “What is sodium nitrate?” I could tell that he understood that the chemical was not good for the environment or health. Another classmate continued, “They attack to cultural areas during wars, like they are trying to destroy the culture or history of nations.” That comment helped me to add more to the project and consider the different results of the war, all connected to the same ending: “damage”.

After more research and analysis I had to admit that I couldn’t use clear satellite imagery or maps for the infographic, which would have been a great tool. I learned from Alex that, since the war is still ongoing, Google Maps has some restrictions in the war areas. All I got from Google Maps was pixelated, blurry maps. I moved on with abstract visualizations applied to map imagery, and also included photographs from cities in the final outcome.

Cycle 2:

I believe the feedback that I got from the Cycles helped me a lot to consider the thoughts of my peers. When we work on our projects for long periods of time, being objective and critical towards them becomes harder. Since I worked on the collection, analysis, sketching and design of the dataset by myself, I was clear about its details, but I knew it was still too complex for the viewers. In the end the dataset and design are still too complex. That was a decision I made for my first complex interactive information design project, because the analysis of the data showed me that complexity is the nature of this project. Catastrophic damage has been done to a country at war, and even a small detail, such as an environmental issue I tried to visualize, is connected to multiple variables in the data set. Therefore I used the wires to show the direct connections between variables and to communicate that the country is wired with these risks and damages.

Since my peers had seen the work and heard me talking about the project, they were more familiar with it. So I was not sure the project was clear to them, but I got positive feedback in the second cycle. I was told that the project looked more complete.

After I got feedback from Alex, I started to think about an active/passive mode for the project. I included a sound piece from the war area that is activated by the audience walking through the hall in front of my project. The aim of the sound is to draw the audience’s attention to the work and give an idea about the topic. A camera tracking motion sees the people passing by and then activates the sound. Since I want the audience to focus on the data set, I targeted the potential audience for the sound part.

I believe sound completed the work and created an experience.

I know the work is not very clear or ideal for the audience, but I wanted to push the limits of layering and complexity in this project. Taking the risk of failure, I am happy to share that I learned a lot (from everyone in this class)!

Right now I have a general understanding of Unity, and I know how to add to and manipulate the code (even though I don’t know complex coding). I learned the logic of Unity prefabs, the inspector, interactivity and the general interface. In addition to Max/MSP (which I used last year), I learned to use Isadora, which is way more user friendly. Finally, I learned to use a third tool, the information design software Tableau, which helped me to develop the images (under Infographic Data) on my blog.

I have very valuable feedback from my peers and professors. Even if the result was not perfect, I learned to use three software tools in total, had fun with the project, experimented, pushed the limits and learned a lot of things!

Please read the complete process from my blog. 🙂

Pressure Project 2

Posted: December 15, 2016 Filed under: Pressure Project 2

The assignment requires physical material input, so I experimented with Makey Makey for the first time. Using four marshmallows as an interactive board, I assigned a different cue to each of them. I also included the camera input and blob objects to control the speed and movement of the work, which are controlled by the viewer.

The opening scene consists of automated, wave-generated objects and filters. The filters respond to the sound level in the room. Random text commands also respond to a high sound level: based on the level of sound, the text appears or disappears on the screen.

A quick summary of the project:

Interacting with the 4 marshmallows activates 4 different soundtracks and visuals. The final work on screen is controlled by the viewer:

- 4 different music tracks – The speed of each track is controlled by the sound level watcher; a louder sound level increases the speed of the track.

- 4 different visual scenes – Different colors, objects and filters are used for each scene (and each marshmallow), and they are controlled by the motion tracking.

- The background sounds are different versions of instrumental music, even if they don’t sound like it! It was a happy accident (not the prettiest one). While I was experimenting with the sound level watcher, I accidentally connected a wire to sound speed and it became a different sound than expected, so I kept that system.
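The link between the sound level watcher and track speed in the list above can be illustrated with a simple linear mapping. This is a Python sketch under stated assumptions, not the Isadora wiring: the function name, the 0 to 1 level range, and the speed limits are hypothetical.

```python
def track_speed(level, min_speed=0.5, max_speed=2.0):
    """Map a sound level in [0, 1] to a playback speed; louder plays faster."""
    level = max(0.0, min(1.0, level))  # clamp out-of-range readings
    return min_speed + level * (max_speed - min_speed)
```

With these assumed limits, a silent room plays the track at half speed and a very loud room at double speed.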

Working with Makey Makey added a fun, clear cue to the work, giving direction to the viewer. However, the system was still mysterious and it took time for the audience to understand the functions.

I believe using text objects creates an interactive communication with the viewer. It almost starts a different type of conversation.

Overall it was a fun project and working with a new tool has been a new learning experience.

Here is the isadora file and a video of the project. karaca_pp2-izz

‘You make me feel like…’ Taylor – Cycle 3, Claire Broke it!

Posted: December 14, 2016 Filed under: Final Project, Isadora, Taylor

towards Interactive Installation,

the name of my patch: ‘You make me feel like…’

Okay, I have determined how/why Claire broke it and what I could have done to fix this problem. And I am kidding when I say she broke it. I think she and Peter had a lot of fun, which is the point!

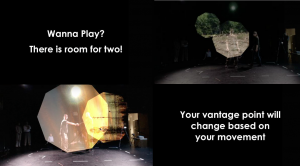

So, all in all, I think everyone that participated with my system had a good time with another person, moved around a bit, then watched themselves and most got even more excited by that! I would say that in these ways the performances of my patch were very successful, but I still see areas of the system that I could fine tune to make it more robust.

My system: Two nine-sided shapes track two participants through space, showing off the images/videos that are alpha masked onto the shapes as the participants move. It gives the appearance that you are peering through some frame or scope and you can explore your vantage point based on your movement. Once participants are moving around, they are instructed to connect to see more of the images and to come closer to see a new perspective. This last cue uses a brightness trigger to switch to projectors with live feed and video delayed footage playing back the performers’ movement choices and allowing them to watch themselves watching or watching themselves dancing with their previous selves dancing.

The day before we presented, with Alex’s guidance, I decided to switch to the Kinect in the grid and do my tracking from the top down for an interaction that was more 1:1. Unfortunately, this Kinect is not hung center/center of the space, but I set everything else center/center of the space and used Luminance Keying and Crop to match the space to what the Kinect saw. However, I based the switch from shapes following you to live feed on brightness. When the depth was tracked at the side furthest from the Kinect, the color of the participant was darker (a video inverter was used), and the brightness window that triggered the switch was already very narrow, which made the far side a problem for the trigger. To fix this, I think shifting the position of the Kinect or changing how the space was laid out could have helped. Also, adding a third participant to the mix could have made it even more fun, widened the brightness window, and increased the trigger range, so that the far side would no longer be a problem.
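To make the narrow-window problem concrete, here is a small Python sketch of a brightness window trigger. It is not the actual patch; the function name and every brightness value are hypothetical, chosen only to show how a darker reading at the far side can fall outside a narrow window.

```python
def should_switch(brightness, low, high):
    """Trigger the switch to live feed when brightness falls inside the window."""
    return low <= brightness <= high

# Hypothetical readings on a 0..1 scale: near the Kinect vs. at the far side.
near_side, far_side = 0.80, 0.62

narrow = (0.75, 0.85)  # the kind of window that misses the far side
wider = (0.60, 0.85)   # a widened window that catches both positions
```

Under these assumed numbers, `should_switch(far_side, *narrow)` is false while `should_switch(far_side, *wider)` is true, which is the effect that widening the window or moving the Kinect is meant to achieve.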

I wonder if I should have left the text running for longer bouts of time, but coming in quicker succession? I kept cutting the time back thinking people would read it straight away, but it took people longer to pay attention to everything. I think this is because they are put on display a bit as performers and they are trying to read and decipher/remember instructions.

The bout that ended up working the best, going in order all the way through as planned, was the third go-round. I’ll post a video of this one on my blog, which I can link to once I can get things loaded. This tells me my system needs to accommodate more movement, because there was a wide range of movement between the performers (maybe with more space, or more clearly defined space). It also needs to account for the time taken exploring that I mentioned above.

Study in Movement – Final Project

Posted: December 14, 2016 Filed under: Final Project, James MacDonald

For my final project, I wanted to create an installation that detected people moving in a space and used that information to compose music in real time. I also wanted to create a work that was not overly dependent on the resources of the motion lab; I wanted to be able to take my work and present it in other environments. I knew what I would need for this project: a camera of sorts, a computer, a projector, and a sound system. I had messed around with a real-time composition library by Karlheinz Essl in the past, and decided to explore it once again. After a few hours of experimenting with the modules in his library, I combined two of them (Super-rhythm and Scale Changer) for this work. I ended up deciding to use kinect cameras (model 1414) as opposed to a higher resolution video camera, as the kinect is light-invariant. One kinect did not cover enough of the room, so I decided to use two. To capture the data of movement in the space I used a piece of software called TSPS. For a while, I was planning on using only one computer, and had developed a method of using both kinect cameras with the multi-camera version of TSPS (one camera was being read directly by TSPS, and the other was sent into TSPS via Syphon by an application created in Max/MSP).

This is where I began running into some mild problems. Because of the audio interface I was using (MOTU mk3), the largest buffer size I was allowed to use was 1024. This became an issue as my Syphon application, created with Max, utilized a large amount of my CPU, using even more than the Max patch, Ableton, TSPS, or Jack. In the first two cycle performances, this led to CPU-overload clicks and pops, so I had to explore other options.

I decided that I should use another computer to read the kinect images. I also realized this would be necessary as I wanted to have two different projections. I placed TSPS on the Mini Mac I wanted to use, along with a Max patch to receive OSC messages from my MacBook to create the visual I wanted to display on the top-down projector. This is where my problems began.

At first, I tried sending messages between the two computers over OSC by creating a network between them, connected by ethernet. I had done this in the past, and a lot of sources stated this was possible to do. However, this time, for reasons beyond my understanding, I was only able to send information from one machine to the other, but not to and from both of them. I then explored creating an ad-hoc wireless network, which also failed. Lastly, I tried connecting to the Netgear router over wi-fi in the Motion Lab, which also proved unsuccessful.

This led me to one last option: I needed to network the two computers together using MIDI. I had a MIDI to USB interface, and decided I would connect it to the MIDI output on the back of the audio interface. This is when I learned that the MOTU interface does not have MIDI ports. Thankfully, I was able to borrow another one from the Motion Lab. I was able to add some of the real-time composition modules to the Max patch on the Mini Mac, so that TSPS on the Mini Mac would generate the MIDI information that would be sent to my MacBook, where the instruments receiving the MIDI data were hosted. This was apparently easier said than done. I was unable to set my USB-MIDI interface as the default MIDI output in the Max patch on the Mini Mac, then ran into an issue where something would freeze up the MIDI output from the patch. Then, half an hour prior to the performance on Friday, my main Max patch on my MacBook completely froze; it was as if I had paused all of the data processing in Max (which, while possible, is seldom used). This Max patch crashed, and I reloaded it, then reopened the one on the Mini Mac and adjusted some settings for MIDI CCs that I thought were causing errors. Ten minutes after that, we opened the doors and everything worked fine without errors for two and a half hours straight.

Here is a simple flowchart of the technology utilized for the work:

MacBook Pro: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

Mini Mac: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> MacBook Pro Max Patch -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

When we first opened the doors, people walked across the room, and heard sound as they walked in front of the kinects and were caught off-guard, and then stood still out of range of the kinects as they weren’t sure what just happened. I explained the nature of the work to them, and they stood there for another few minutes contemplating whether or not to run in front of the cameras, and who would do so first. After a while, they all ended up in front of the cameras, and I began explaining more of the technical aspects of the work to a faculty member.

One of the things I was asked about a lot was the staff paper on the floor where the top-down projector was displaying a visual. Some people at first thought it was a maze, or that it would cause a specific effect. I explained to a few people that the paper was there because the black floor of the Motion Lab sucks up a lot of the light from the projector, and the white paper helped make the floor visuals stand out. In a future version of this work, I think it would be interesting to connect some of the staff paper to sensors (maybe pressure sensors or capacitive touch sensors) to trigger fixed MIDI files. Several people were also curious about what the music on the floor projection represented, as the main projector had staves with instrument names and music that was automatically transcribed as it was heard. As I’ve spent most of my academic life in music, I sometimes forget that people don’t understand concepts like partial-tracking analysis, and since apparently the audio for this effect wasn’t working, it was difficult for me to effectively get across the idea of what was happening.

During the second half of the performance, I spoke with some other people about the work, and they were much more eager to jump in and start running around, and even experimented with freezing in place to see if the system would respond. They spent several minutes running around in the space and were trying to see if they could get any of the instruments to play beyond just the piano, violin, and flute. In doing so, they heard bassoon and tuba once or twice. One person asked me why they were seeing so many impossibly low notes transcribed for the violin, which allowed me to explain the concept of key-switching in sample libraries (key-switching is when you can change the playing technique of the instrument by playing notes where there aren’t notes on that instrument).
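Key-switching also suggests why those low notes showed up in the transcription: the transcriber treats every MIDI note as a sounding pitch. A hedged Python sketch of filtering them out follows. The key-switch map is hypothetical (real sample libraries assign their own notes), and this is not the code used in the installation; the only fixed fact is that the violin's lowest note is G3, MIDI note 55 when middle C is 60.

```python
VIOLIN_LOWEST = 55  # G3, the violin's open G string (middle C = 60)

# Hypothetical key-switch map; real sample libraries assign their own notes.
KEY_SWITCHES = {24: "sustain", 26: "pizzicato", 28: "tremolo"}

def split_notes(midi_notes):
    """Separate sounding notes from key-switch notes so only the former get transcribed."""
    sounding, techniques = [], []
    for note in midi_notes:
        if note < VIOLIN_LOWEST and note in KEY_SWITCHES:
            techniques.append(KEY_SWITCHES[note])  # changes technique, makes no pitch
        else:
            sounding.append(note)
    return sounding, techniques
```

For example, `split_notes([26, 67, 72])` keeps notes 67 and 72 for the staff and reports a switch to pizzicato instead of printing an impossibly low violin note.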

One reaction I received from Ashley was that I should set up this system for children to play with, perhaps with a modification of the visuals (showing a picture of what instrument is playing, for example), and my fiance, who works with children, overheard this and agreed. I have never worked with children before, but I agree that this would be interesting to try and I think that children would probably enjoy this system.

For any future performances of this work, I will probably alter some aspects (such as projections and things that didn’t work) to work with the space it would be featured in. I plan on submitting this work to various music conferences as an installation, but I would also like to explore showing this work in more of a flash-mob context. I’m unsure when or where I would do it, but I think it would be interesting.

Here are some images from working on this piece. I’m not sure why WordPress decided it needed to rotate them.

And here are some videos that exceed the WordPress file size limit:

Third Pressure Project

Posted: December 13, 2016 Filed under: Pressure Project 3, Robin Ediger-Seto

The final pressure project had the fewest requirements yet. It simply had to involve dice, and the computer had to interact with them directly. Immediately my mind drifted to my last pressure project, in which I attempted to use the computer to read colors. I felt the dice might have enough difference between each number that the dots would create distinct cumulative colors. I worked for a time on using the Eyes actor to track the position of the die and the color calculator to get a cumulative color of the die. Sadly, even under good lighting conditions there were too many shadows and differences to make a robust system. But in this I realized that I could just use the Eyes actor. By creating a patch that cordoned off six areas of the camera’s view and having the audience roll dice without telling them about the areas, I was basically creating a die.

This whole process ran parallel to the content of the work. As you have probably guessed, I really like talking about my experiences in my work. The die threw me back to playing dice games on long train trips during our vacations as a child. As these train rides were up to three-day journeys, the potential for boredom, and the need for easy entertainment, was high. The easiest entertainment was a game that my sister and I would play for hours at a time. We would go back and forth saying “woof” until one of us said “meow” and I would proceed to laugh uncontrollably, and to this day still do. I wanted to share the riveting joy of the game dubbed “woof” with the world. Thus I came up with the final work.

One out of the six designated areas in the camera’s view would make the computer say meow while the other five would say woof. Once the meow was landed on, it would appear in another place. It was that simple. The final element, and what I think sold the project, was my reaction to it. The reward for landing on the correct sector was not only a “meow” but also my smiling face.

I think there’s something to be said about technology’s ability to reconnect us with what we truly feel is important. By making a simple Isadora patch I was able to re-create an experience that I am extremely fond of.
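The game rule above is simple enough to sketch in Python. This is an illustration of the logic only, not the Isadora patch: the class and function names are hypothetical, and the 3 by 2 grid layout of the six areas is an assumption.

```python
import random

def zone_at(x, y, width, height, cols=3, rows=2):
    """Map a tracked position to one of six zones laid out as an assumed 3x2 grid."""
    col = min(cols - 1, int(x * cols / width))
    row = min(rows - 1, int(y * rows / height))
    return row * cols + col

class WoofGame:
    def __init__(self, zones=6, rng=None):
        self.zones = zones
        self.rng = rng or random.Random()
        self.meow_zone = self.rng.randrange(zones)

    def land(self, zone):
        """Return the sound for a die landing in zone; a found meow moves elsewhere."""
        if zone != self.meow_zone:
            return "woof"
        others = [z for z in range(self.zones) if z != self.meow_zone]
        self.meow_zone = self.rng.choice(others)  # the meow appears in another place
        return "meow"
```

Picking the new meow zone from the other five zones guarantees it never stays where it was just found.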

Third and Last time Around with Tea

Posted: December 13, 2016 Filed under: Final Project, Robin Ediger-Seto

For my final project I wanted to take the working elements of the two earlier projects and simplify them whilst creating a more complex work. The two earlier projects contained tea in them. During deeper explorations of my relationship with tea I realized that it’s a symbol that is tied to many facets of my identity. It is an important part of almost all the cultures I was brought up in: Indian, American, Japanese, and Iranian. This brought me back to the key premise of this journey, the exploration of time in story. I am very much a product of the times and would not exist at any time before this. I was also interested in the way that we tell the story that got us here, and in how, as audience members, we are able to imagine alternate pasts while being given facts. From these ponderings I came up with a new score.

In the final performance I would make tea while explaining what I believe to be true about tea. To get this material I free wrote a list of every fact that I know. I then measured how long it took me to make tea and recorded myself reading the list. This portion would be filmed in chunks and played back in eight tiles, much like the last performance. During the brewing of the tea I talked about the inspiration for the work. I did this into a microphone and made a patch that listened to the decibels I was producing and produced a light whenever they got loud enough. My meanderings on the microphone ended with me presenting the tiles, and then I poured tea for people.
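The microphone-to-light behavior can be illustrated with a threshold on the signal level. This is a Python sketch and not the actual patch: the function names and the -20 dB threshold are hypothetical, and samples are assumed to be normalized to the range -1 to 1.

```python
import math

def level_db(samples):
    """RMS level of samples in [-1, 1], in decibels relative to full scale."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def light_on(samples, threshold_db=-20.0):
    """Turn the light on whenever the voice gets loud enough."""
    return level_db(samples) >= threshold_db
```

In practice the threshold would need tuning to the room and the microphone, much like the color calibration in the earlier pressure project.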

I did not get a lot of feedback after the show, but I did get a lot while rehearsing it. For instance, I was told that during the performance I needed to assert my role during the making of the tea by looking at the audience, and that I needed to put a little more attention into the composition of my set. These pieces of advice really helped the final performance, and I wish I had better used my lab time as the space for rehearsals instead of doing so much work at home.

My constant worry through the process of the three performances was that I was not keeping in the spirit of the class by making simple systems. During the final performance I realized that what I was working on was highly applicable to the class. I was creating systems where the user could express themselves and have complete control over the performance. I was designing a cockpit for a performance with a singular user, me. The designers, stage manager, and performer only had to be one person. The triggers for actions played into the narrative of the work. In the second performance cycle, the cup of tea attached to the Makey Makey integrated a prop into the system that controlled the performance. In the final performance I ditched the cup, instead opting for a more covert controlling method. I placed two aluminum foil strips on the table and attached the Makey Makey so that I could covertly control the system by simply touching both strips. The other cue was controlled by a brightness calculator that was triggered by turning off the lamp after I made the tea.

This performance will not be a final product, and I will continue to work on it for my senior project, to be performed in March at MINT gallery, here in Columbus. I plan on integrating the Kinect that I just bought to create motion triggers, so that even less hardware will be seen on stage. I believe that I’m going in a good direction with the technological aspect of the work and now need to focus on the content. This class allowed me to think about systems in theater and ways to give the performer more freedom in the performance. The entire theater process could benefit from a holistic design process, where even the means of turning on a light must be taken into consideration.

Second Time Around with Tea

Posted: December 13, 2016 Filed under: Robin Ediger-Seto

Playing off the ideas of my last creation, I decided to make my work capture me as I performed. In this way the subject of the piece shifted from the story I was trying to tell to a story that was being created by performing. However, this thought process took a bit of time to end up with the product.

For a bit I tried the idea of focusing on the presentation of the information and considered giving the audience carte blanche on how they absorbed the media. I would film from different viewpoints and then connect it to a stylus that would allow the audience to view the temporality of the information on a spatial level. This would continue the thread of the relationship to time but would massively change the project. I put this idea in a box for another day and started with another solution.

I decided that the story itself was an event that could be told. This time I created a patch in Isadora that would video me for a certain length of time. It would store these videos away until I chose to play them all at once on nine tiles. These tiles would be projected onto me as the video camera again captured my actions and the projected image and then played this back.

During this time I also became obsessed with the idea of the magical mundane. In my performance I wanted to show my flaws as well as my strengths. To do this I created an improv score. While the video camera recorded me the first time, I would do a free write. For the performance that was shown, I wrote about the audience’s expectations of my work, or at least how I felt about the audience’s expectations. As I did this I drank tea to add a little bit of action into the tile frames. Once the video was done recording, I read the free write, which was captured by another recording. Finally, this recording was also played back as tiles, in canon but not split up; in other words, each video was delayed a little bit from the last.

I finally felt like this was an interactive piece, in the vein of the rest of the class. To make it even more interactive I involved a Makey Makey that, with the help of some tinfoil, would turn my tea cup into a switch that would trigger the start of the first recording and then the switch from the second recording to the showing of the second recording. This was highly successful in that it changed the way the cues were read: my face did not have to be in the computer and I was able to perform more freely.

For my final iteration I wanted to try to further the ideas in this one, make it more exciting to watch, and take it a bit out of draft state. The feedback that I got included consideration of detail. With the final iteration coming up so soon, I’m not sure how much will change.
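The canon playback, where each tile is delayed a little from the last, can be sketched as a list of start offsets. This Python example only illustrates the timing idea, not the Isadora patch; the function names and the delay value are hypothetical.

```python
def canon_offsets(tiles, delay):
    """Start time of each tile when every tile begins `delay` seconds after the previous one."""
    return [i * delay for i in range(tiles)]

def tile_positions(t, tiles, delay):
    """Playback position of each tile at time t; None for tiles that have not started yet."""
    return [t - off if t >= off else None for off in canon_offsets(tiles, delay)]
```

With three tiles and an assumed half-second delay, at `t = 0.75` the first tile is 0.75 seconds into the recording, the second is 0.25 seconds in, and the third has not started.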

First Time Round with Tea

Posted: December 13, 2016 Filed under: Robin Ediger-Seto

I had a very difficult time getting started with my project. I had fairly clear ideas of what I wanted but did not know how to express them in an interactive format. At the base of my project I was trying to explore storytelling as an act of time travel. We experience the world in a linear mode, but categorize it fairly non-sequentially, skipping from detail to detail and making associations with other times. In this way our bodies experience time differently than our minds do, and differently from the way we interpret the world. I have been dwelling on these thoughts for a little bit now. There is an idea in the theater that the show starts at the first pamphlet and never really ends. As performers we can control what the audience sees, but everything that is not the performance ultimately has a massive effect on the nature of the work.

From these fairly heady notions I wanted to create a format for telling stories without my control and manipulation of time. I wanted the viewer to experience it as a thought, disconnected from a temporal state. My first project was more about achieving this shuffled time state than the interactions with the audience. I filmed short three-second clips of me making tea and the images that I saw while making tea. I then made an Isadora patch that would play these images at random, either forward or backward.

At this stage in the process the choice of tea was fairly arbitrary. I chose it because of its sequential nature and because it was a process that was familiar to me and to the viewer. I was committed to it being a performative work and thus read a script while the videos played. The script ran counter to the objective view presented by the camera. It featured nuggets of information that I knew about tea and interlaced them with instructions for making tea with the steps reversed.

During my lab time I toyed with projection mapping into corners. I was interested in the effect that would have on immersing the audience in the work. I think this will be a rabbit hole I jump down in the future, but it did not serve my work in an intentional way.

After the performance I got feedback which allowed me to focus more on the content. For my next cycle I wanted to try to make the work more interactive, and not necessarily interactive with the audience but interactive in that content would be generated during the performance.
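The shuffled playback described above, random clips each played forward or backward, could be sketched as follows. This is a Python illustration rather than the Isadora patch; the function name and clip names are hypothetical.

```python
import random

def shuffled_playlist(clips, count, rng=None):
    """Pick `count` clips at random, each paired with a random playback direction."""
    rng = rng or random.Random()
    return [(rng.choice(clips), rng.choice(["forward", "backward"]))
            for _ in range(count)]

# Hypothetical three-second clips of making tea.
clips = ["boil_water", "pour", "steep", "sip"]
playlist = shuffled_playlist(clips, 8, rng=random.Random(1))
```

Because clips are drawn with replacement, the same moment can recur in either direction, which fits the goal of disconnecting the images from a linear order.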