Cycle 1

Posted: March 29, 2022

Concept: Following the 20th presidential election in Korea, I wanted to portray the beauty of democracy and its hidden dark side. My goal was to articulate the irony that the rigid dichotomies between left and right, old and young, and southeastern and southwestern Korea balance the power of the nation and protect our democracy. With the emergence of social media, people began to access and believe different information, whether or not it was true. The nation has become more polarized, and politicians have taken advantage of the situation, dividing the nation to gain more votes. With all the socioeconomic, generational, and regional conflict and hatred, the polarization became uncontrollable, to the point where the very purpose of governance and elections degenerated into the survival of the party. Whether a plan was good or bad for the nation, each side would oppose whatever the other side put forward. One of the few things they agreed on was that they wanted a pay raise. I got sick of politicians applying double standards to each other (in Korea, we call this 내로남불, which means “If I do it, it’s a romance; if you do it, it’s infidelity”) and dividing the nation, so I wanted to make my audience aware of the danger of unconditional support for a particular political party, which hinders that party’s self-purification. In order for us to make rational decisions as voters, I thought it would be important to build mutual gratitude for bringing prosperity and democracy to the nation.

Methodology: Besides articulating my political thoughts, I wanted to explore the possibilities of texture and layering with projections. Oded helped me install two projectors facing each other with a scrim between them. I gathered videos of the poverty after the Korean War, the Miracle on the Han River, the military dictatorship and the Democratization Movement, and Korea today with direct elections, and added textures that would support the ideas. A live camera capture individualized and highlighted citizen power. The piece ended with intertwining red and blue waves, which represent the two political sides.

Next step: In the first cycle, I learned how to set up the layering of projections, how to add textures to them, and what effects they create. For the next step, I would like to add audio so the audience can follow and understand my intentions with the piece. I also want to explore interactivity with motion capture.

Cycle 1 – Analog Video Synthesizer – Ashley Browne

Posted: March 29, 2022

For the cycle 1 project, I fabricated an analog video synthesizer that allows the user to mix two separate video inputs into one. I knew that I wanted this chance to experiment with CRTs and live video, so I used a video camera directed towards the audience as one input, and a video sourced online as the other. When mixing the two, I had to be deliberate in choosing the videos so that they could overlap one another on the television screen: the first video had to have enough negative space for the second video input to show through.

For the physical material, I wanted the housing to resemble a controller or something small enough to be held with both hands. I enjoyed a lot of the feedback I received from my classmates; many of them mentioned that it felt nostalgic and that the challenge of using the video synthesizer seemed to fit the aesthetic that was happening.

Here is the schematic I used, from Karl Klomp:

http://www.karlklomp.nl/wp-content/uploads/2018/12/index.html

And documentation of the synthesizer and video output:

For cycle 2, I’d like to build another video synthesizer with different toggle switches so that it’s a bit easier to use. I’d also like to focus more on the content of the work and think closely about which two video inputs are being used and how that may affect audience participation and reception. For the 3rd cycle, I’d imagine it coming together as a two player interaction, where player 1 uses the video synthesizer and player 2 uses another apparatus that also affects what is happening on screen. It would also be interesting to see how I can incorporate projection or consider different kinds of installation setup moving forward.

Cycle 1 – Tomatoes – Min Liu

Posted: March 26, 2022

My research interest is designing joint, interactive educational experiences for family visitors in informal learning environments like museums and zoos. In this project, I explored how physical and digital materials can work together to enhance sense-making, and how individual and collaborative interaction can motivate learning.

I chose the tomato as the learning theme. In the default scene, participants see a tomato garden with birds and some insects. They can wave their hands to get rid of the animals. Background audio of wind, insects, and chimes plays. A green tomato, a red tomato, and two leaves (one fresh and one dry) are the controllers. I hope this gives participants the sense that they are in a tomato garden holding tomatoes. When a participant holds the green tomato and a leaf, he/she sees the growing process of tomatoes. When a participant holds the red tomato and a leaf, the rotting process is shown. When two participants hold different tomatoes together, they see the microscopic structures of tomatoes.
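The controller rules described above can be written out as a small decision function. This is only a sketch for illustration, not the patch used in the project: the item names (`green_tomato`, `red_tomato`, `fresh_leaf`, `dry_leaf`), the clip labels, and the assumption that either leaf works with either tomato are all hypothetical.

```python
# Hypothetical item names; the post does not say how the controllers are labeled.
LEAVES = {"fresh_leaf", "dry_leaf"}


def select_clip(holdings):
    """Pick a clip label from what each participant is holding.

    `holdings` is a list with one set of item names per participant.
    """
    green_holders = [i for i, held in enumerate(holdings) if "green_tomato" in held]
    red_holders = [i for i, held in enumerate(holdings) if "red_tomato" in held]

    # Two different participants holding different tomatoes: microscopic view.
    if any(g != r for g in green_holders for r in red_holders):
        return "microscopic"

    # Otherwise look at everything that is being held, by anyone.
    held = set().union(*holdings)
    has_leaf = bool(held & LEAVES)
    if "green_tomato" in held and has_leaf:
        return "growing"
    if "red_tomato" in held and has_leaf:
        return "rotting"

    # Default scene: the tomato garden with birds and insects.
    return "garden"
```

For example, `select_clip([{"green_tomato"}, {"red_tomato"}])` selects the microscopic clip, while a single participant holding only a tomato stays in the garden scene.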

In the in-class test, players were surprised when they triggered different video clips about tomatoes. They thought these clips were interesting and well chosen. One participant said that the rounded style of the videos made the experience intimate. Players needed to keep touching the tomatoes while watching the video. I hoped this setup would encourage people to connect the sense of touching physical materials with the digital content on the screen. However, the feedback was that the connection was not strong. Players just concentrated on the videos and treated the sense of touch as separate. So, how to design integrated interactions across physical and virtual materials needs further exploration.

I tested the system again with a ten-year-old kid at home. To be honest, I failed to act as a good guide and companion. I couldn’t help telling him what to do to trigger things and explaining how the system works. During the experience, the boy told me that he had watched a similar video before at school, about a seed growing into a big tree. When the boy and I held the two tomatoes and watched the video together, he asked me questions, like which part of the tomato was being observed under the microscope. In this test, the system was successful in starting conversations between a child and an adult.

In the next cycles, I will keep exploring: 1. How can participants interact with the system autonomously, without verbal or written instructions? 2. How can I build the connection between physical and digital materials? 3. Other creative ways of learning and collaborative interaction. 4. Installations in a big space.

Cycle 1 – Yujie

Posted: March 24, 2022

My final project is a collaboration with my dancer Yukina, who is working on a restaging project. She is going to restage a piece by the Japanese contemporary choreographer Saeko Ichinohe and then create her own piece responding to Ichinohe’s work. I’m working with her to create the mediation for her own choreography.

We center our project on one question that is derived from Yukina’s own lived experience: why do people in the US expect her, a Japanese woman, to perform and embody Japanese cultural elements on stage? I’m inspired by Rachel Lee’s book The Exquisite Corpses of Asian America. Lee argues that certain Asian American artists use fragmented body parts to resist the idea of returning to the whole, which represents the so-called cultural essence. The whole is also easily categorized into racial stereotypes. The fragmented body parts can then be seen as a challenge.

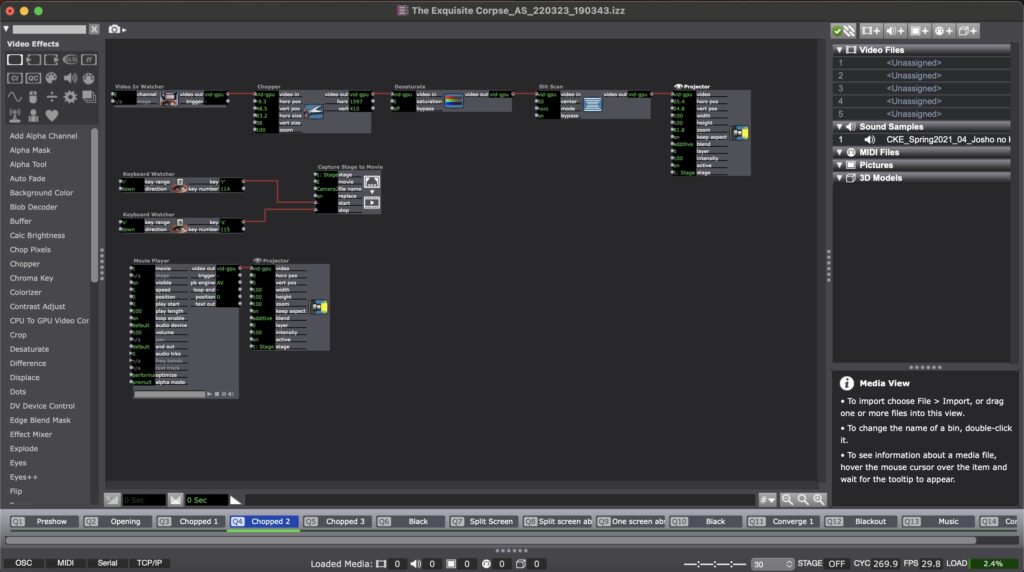

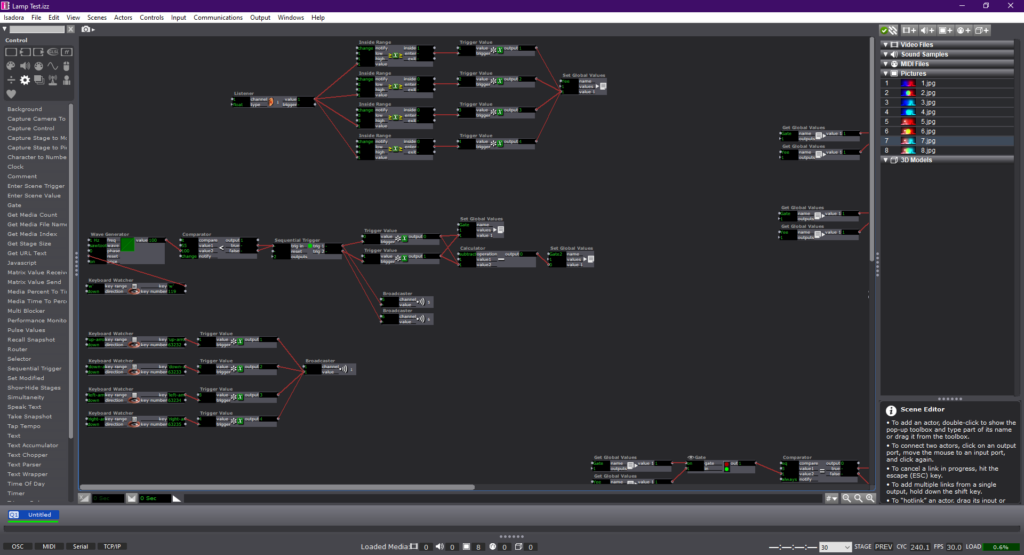

In my cycle 1, I use a live camera to capture the dancer’s body parts and frame them in discrete spaces on the projection. I also use Isadora to capture the performance in real time and juxtapose the recording with the real-time performance. The dancer’s body is divided not just in space but also in time. Then, I use video effects to present the dancer’s inner state. In the end, I tried to put all the fragmented body parts together randomly, but it was not successful in this cycle.

Here is one scene in Isadora:

Here is the video documentation of my cycle 1:

For my cycle 2, I’m thinking of the following developments:

- Use two cameras to free up my laptop. Work on camera angles to get really close to the dancer’s body.

- Develop a version of the surrealist game that invites more dynamic participation from the audience. I might consider adding the Kinect to include the audience’s motion, so that they are not just gazing at the dancer’s body.

- Consider more possibilities for the projection. I want to try different ways to divide the space with the projection.

Cycle One–Allison Smith

Posted: March 24, 2022

For my first cycle, I chose to practice with motion tracking and see what I could do with it. As a dancer, I was excited to play with how the tech could inspire movement for both dancers and non-dancers. My goal was to track the user’s extremities, the right and left hands and feet, and use that tracking to paint lines on a projector screen. Having noticed how these experimental systems encourage people to play, I was hoping that people would be intrigued enough to play and then see what the body can create.

Using a Kinect feeding into the skeleton decoder actor, I was able to get numbers for all of the extremities. I plugged the X and Y coordinates into the live drawing actor, and I plugged the velocity of these coordinates into the hue of the drawing. The goal was that faster movement would be a different color than slower movement, cuing the participant to play with different speeds. I also plugged the velocity of these coordinates into a trigger for adding a point of the drawing, so that when the participant isn’t actively moving their arms, it won’t create unwanted drawings.
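The mapping described above (position drives the drawing, velocity drives both the hue and the trigger for adding a point) can be sketched outside Isadora. The Python below is a minimal illustration, not the patch itself: the threshold and maximum-speed constants, the function names, and the choice of hue range are all assumptions made for the example.

```python
import colorsys
import math

# Hypothetical tuning values; the post does not give the numbers used.
SPEED_THRESHOLD = 0.05  # below this, no point is added to the drawing
MAX_SPEED = 2.0         # speeds at or above this map to the end of the hue range


def speed(prev, curr, dt):
    """Speed of one tracked extremity between two (x, y) samples."""
    return math.hypot(curr[0] - prev[0], curr[1] - prev[1]) / dt


def hue_for_speed(s):
    """Map speed to a hue in [0, 0.75]: slow is red, fast moves toward violet."""
    clamped = min(max(s, 0.0), MAX_SPEED)
    return clamped / MAX_SPEED * 0.75


def trace(points, dt):
    """Return (position, rgb) pairs for samples that moved fast enough to draw."""
    strokes = []
    for prev, curr in zip(points, points[1:]):
        s = speed(prev, curr, dt)
        if s < SPEED_THRESHOLD:
            continue  # extremity is effectively still: skip, avoiding unwanted marks
        rgb = colorsys.hsv_to_rgb(hue_for_speed(s), 1.0, 1.0)
        strokes.append((curr, rgb))
    return strokes
```

Calling `trace` once per tracked hand or foot would give each extremity its own colored line; smoothing the incoming coordinates before this step is one thing that might soften jittery tracking.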

This didn’t go as smoothly, technically, as I would have wanted. The Kinect was finicky and didn’t track more than one extremity at once. It was also glitchy in its tracking, so the movement of the drawing was rigid rather than smooth. It also took a long time to catch the cactus pose of my first volunteer, which wasn’t the experience I was hoping for. Finally, as much as I played with the numbers, the color changing didn’t pan out the way I wanted it to. I wanted it to look like paint blending into different colors, but instead it looks more like an LED light. I’ve attached a video of my demo here:

I received helpful feedback after the demo. It was brought up how the cactus pose experience was turning off other people from volunteering. People also explained that going up by themselves was intimidating, and it might have been more inviting if they were by themselves or if other people could do it with them. I was encouraged to consider my audience and my setting to motivate how I set up the experience. Do I tell people what to do, or do they figure it out themselves? Would having something on the screen before people approached the screen be more inviting than having a black screen to walk up to?

If I were to work further on this project, I would try to make this experience more inviting. Since I am a dancer, it was helpful to hear feedback on how this is intimidating for someone who doesn’t typically move their body in that manner, and it was helpful to hear how to cater this experience differently. I would also try to make this either fully independent of my instruction so people could engage with it in an installation, or fully capable of tracking dance that could be used during a performance. I am not sure if I want to continue to work on this project in the next cycle or the third cycle, but I will keep this feedback in mind for any of my future work.

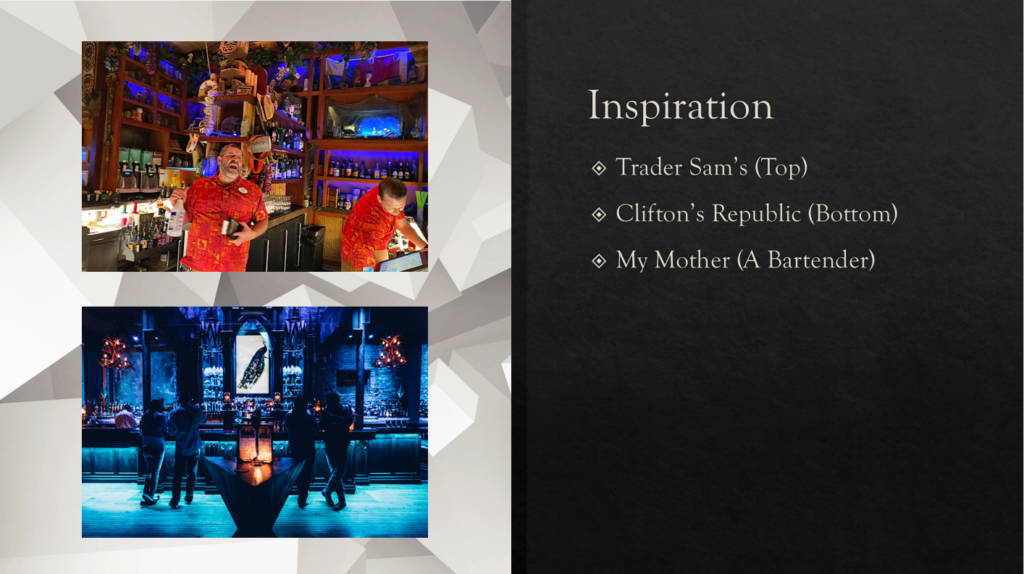

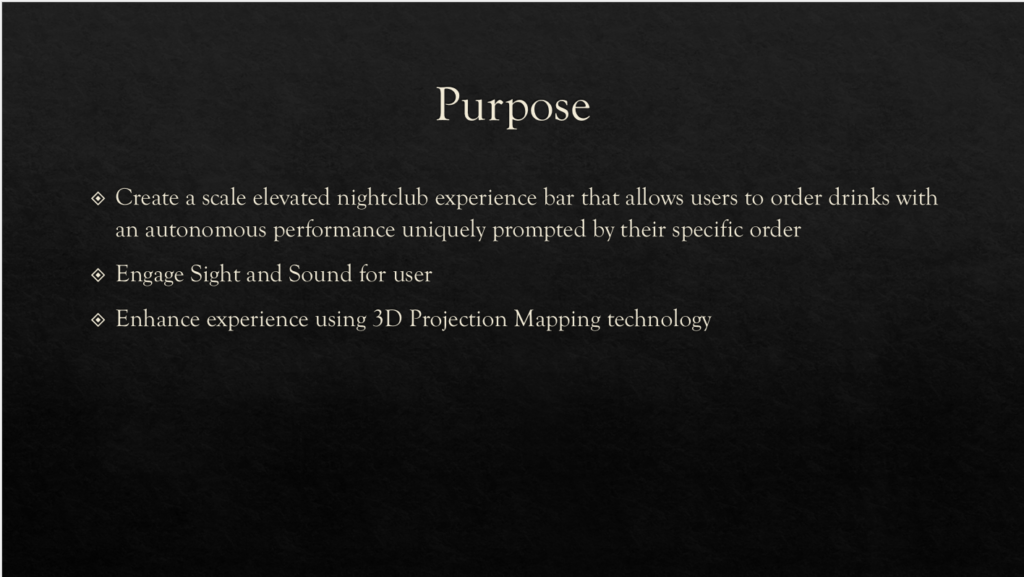

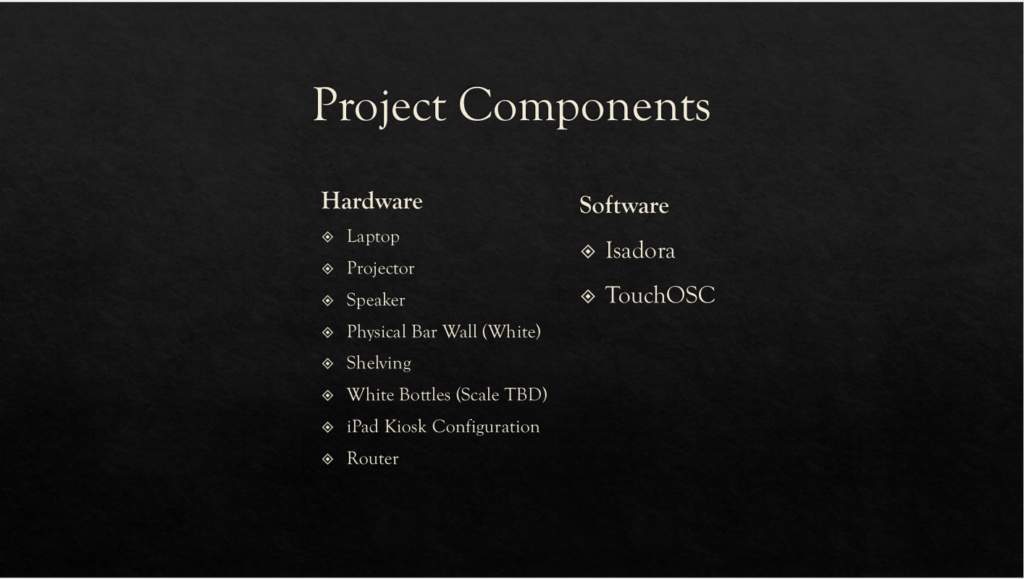

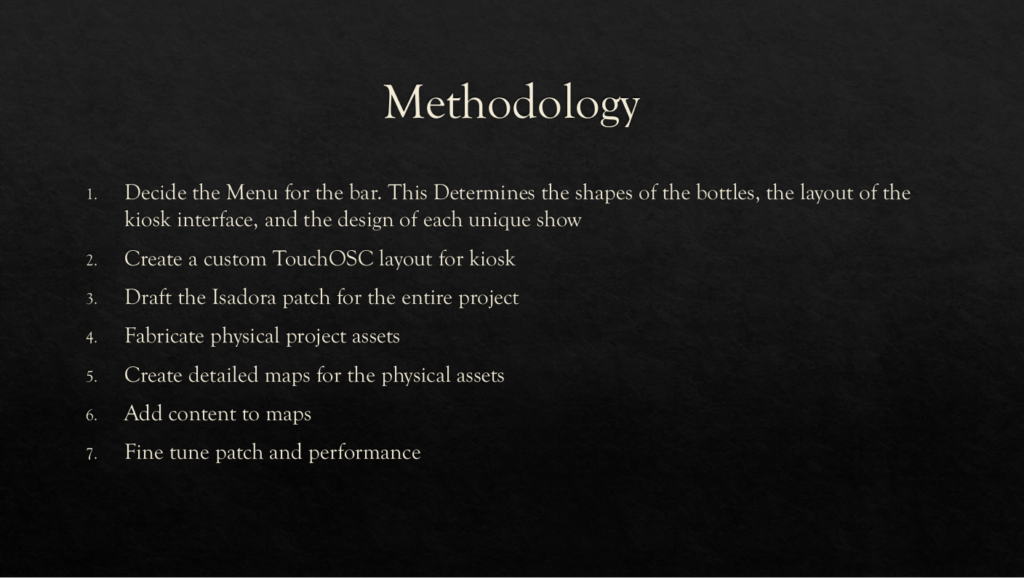

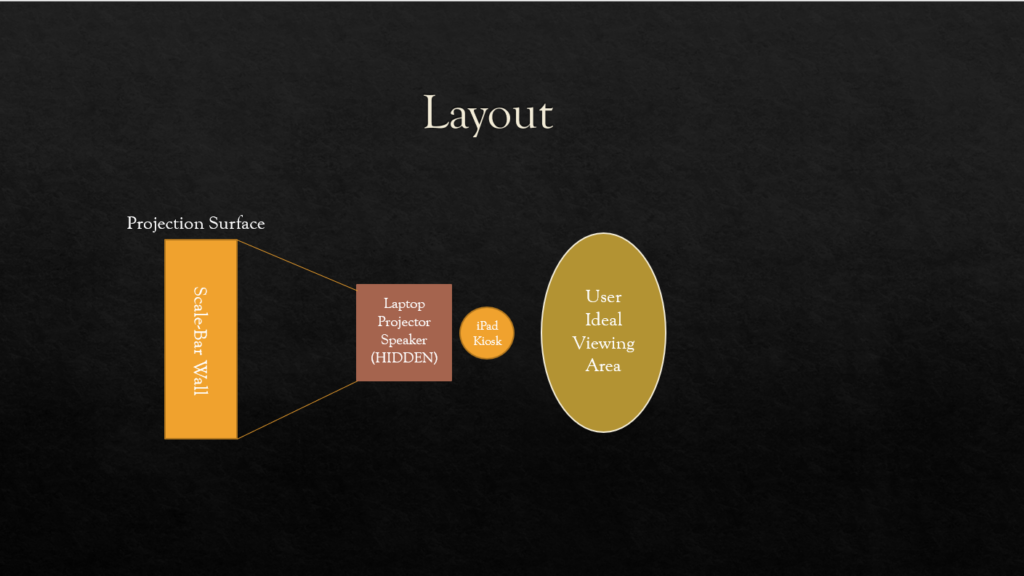

Cycle 1 – Bar Mapped – Alec Reynolds

Posted: March 24, 2022

Cycle 1 Project Demo, Gabe Carpenter, 3/23/2022

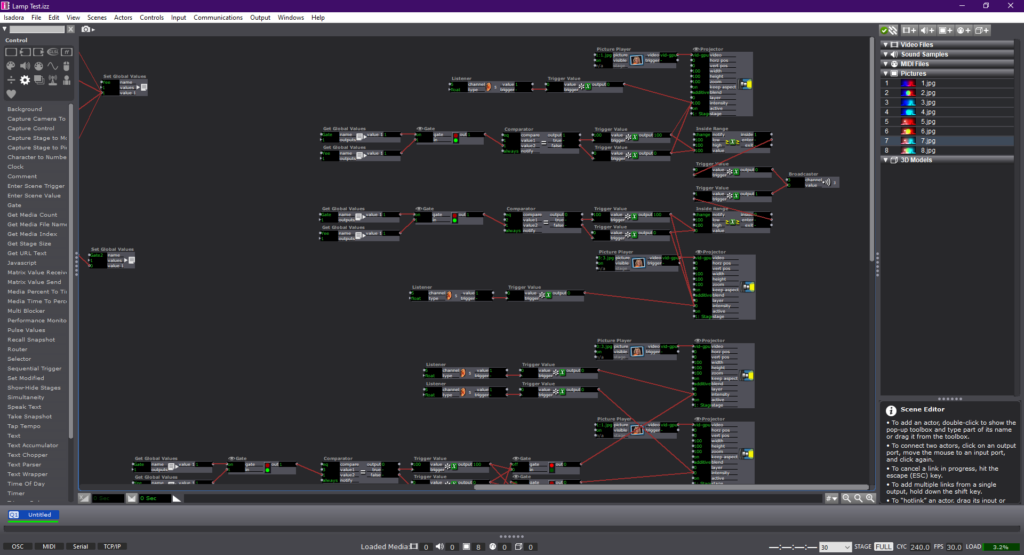

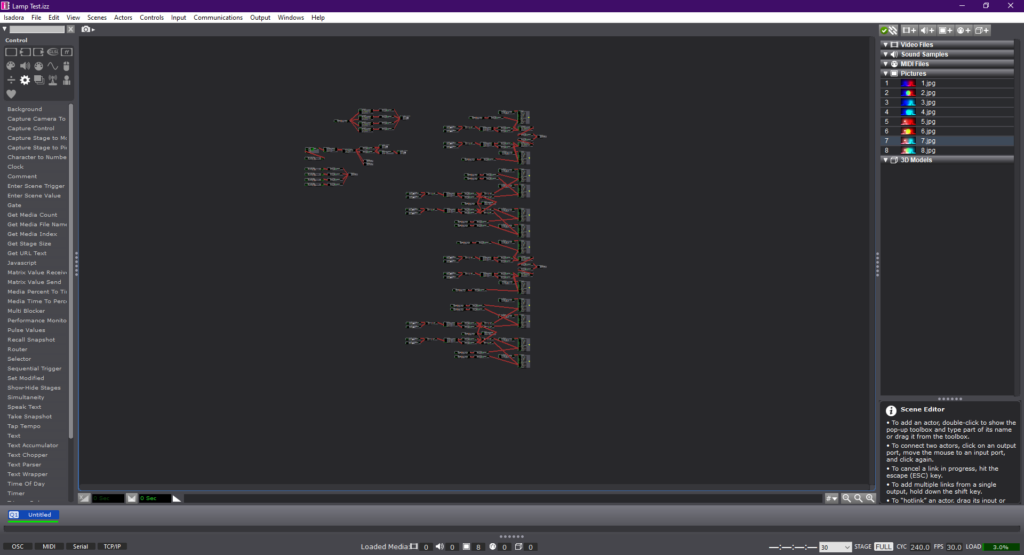

Posted: March 23, 2022

This project is based on a game that allows the player to change the environment in order to gather information and come to a final decision. This demo lets me demonstrate the viability of using physical switches to cause changes in a digital environment. The first step in any project involving Isadora is the code. Below are images from the completed patch; however, the patch has some issues. The main issue is that the third switch, which I originally intended to use as a third option, emits a constant signal, which Isadora does not like, so I removed that option for this demo.
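One common way to cope with a switch that holds a constant signal is to react only to the moment it changes from off to on (a rising edge) instead of to the held state. The sketch below shows that idea in Python as an illustration only; it is not part of the demo patch, and the function name and the sampled-boolean input format are assumptions.

```python
def rising_edges(samples):
    """Return the indices where a switch goes from released to pressed.

    `samples` is a sequence of booleans read from the switch over time.
    A switch that stays pressed produces one event, not a continuous stream.
    """
    events = []
    previous = False
    for index, pressed in enumerate(samples):
        if pressed and not previous:
            events.append(index)
        previous = pressed
    return events
```

With this approach, a switch read as `[False, True, True, True, False, True]` yields two events (at indices 1 and 5) rather than four "on" readings.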

The next two videos are examples of me using the switches to change the environment, first just using the keyboard and then incorporating the physical switches with the help of a Makey-Makey.

Below is a link to a copy of the Isadora patch used in the demo.

Pressure Project #

Posted: March 7, 2022

When I first heard “audio project,” my brain started going in every direction about what to do. I could make a podcast, write a music score, or make a pop song; there were so many possibilities. The only problem was telling a story that would make someone laugh. I’m not used to telling that kind of story in a song. I started to write the song, but it just wasn’t coming off with the vibe I wanted.

Later that day I was hanging out with my roommate on the balcony, watching a neighbor take their dog out without picking up after it, and we were commenting on the entire situation from our open balcony (as in, if they had walked 10 feet in our direction, they could probably have heard what we were saying). We were just being stupid, saying things that didn’t make sense, like pocket poop that you would throw at people you didn’t like.

Then it struck me.

I didn’t know what to do with the topic of cultural heritage because I don’t have a “cultural heritage” other than my friends that I am closely connected to. After immigrating from Korea, I had to build my new home, a new culture and custom for myself. And I found that home in my friends that I grew up with.

So I started to dig through the collection of recordings I have on my phone to get some inspiration, and found some clips recorded at different times. I wanted to make a sonic dialog that has a message if you dig into it, but that on the surface just seems like music happening with words. So I started sampling some beats I’ve made, put the recordings on top of them, and created the flow of dialog that I wanted. I polished the recordings with EQ and other effects that made them sound hazy, and used automation to develop the effects over time.

Overall, I really enjoyed the creation process of the project and I had fun making art (which I haven’t felt in a while, so it felt good to make something and feel good about it).

Enjoy the recording 🙂

Pressure Project 3 – Marvel – Alec Reynolds

Posted: March 4, 2022

For the final pressure project, I drew on a franchise that has had a significant impact on my life. Marvel Studios has created a global phenomenon that has not been matched before. The complexity of storytelling and world building that the Marvel films achieve is nothing short of incredible.

The prompt for the third pressure project encouraged students to create an auditory narrative that discussed a piece of cultural importance in our lives. From this, I was able to trace the influence that the films have had on me as a person. With the release of Iron Man in 2008, I fell in love with the character and the complexity of his suits. I would go so far as to say that the character heavily motivated my passion for STEM, and especially engineering.

Further, the Marvel films do an impressive job of tackling real-world issues through the lens of a supernatural world. Giving characters that are literally gods flaws and grounded issues generates a deeper sense of connection and reflection.

For my pressure project I wanted to create an auditory experience that created a sense of excitement and inspiration for the viewer. I begin the audio with a narration of how I view Marvel in the context of my culture. I have shared so many intimate moments with friends, family, and strangers over these films. The next segment of the audio is a narrative story about the growth of the Marvel Cinematic Universe up to the finale of the Infinity Saga, Endgame. The sounds in this story are designed to evoke excitement, inspiration, and appreciation for the dynamic capacity of the films.

I chose to focus the visual aspect of the experience around the Marvel 10 Years promo, since this was an important time in the MCU when I really reflected on how big a role these movies had played in my life. I think back to all the times I obsessed over these films at a very young age, and seeing the passion stick with me all these years is fascinating. I shared the image through a 3D printed picture called a lithophane. These are 3D pictures that you can feel, due to the contoured depth they are printed with. I accessorized the experience with RGB LEDs to highlight specific moods in the audio.

If I had more time with this project, I would love to print the banner in a much larger format and then create a lighting system that illuminates each character when their sound bite is introduced in the audio. I would also have made the audio track 120 seconds long rather than 180.

Pressure Project 3 – The Story of Mulan – Min Liu

Posted: March 3, 2022

In this project, I retold the story of Mulan, a heroine in Chinese history who disguised herself as a man and fought on the battlefield in place of her father. As a Chinese person, I found it hard to tell a cultural story in English. The language itself carries meanings, and I am not a good translator. That’s the main reason why I chose the story of Mulan. I knew there were several film versions of this story, and two of them are in English, which could be good resources for me. I chose the Disney version. I reorganized the story into different parts (start, development, climax, end) based on the Ballad of Mulan, an old poem that almost everyone in China can recite. Then I found audio resources (background sound, music, dialogue) for each part and tried to make the music transition smoothly between sections. As a complete beginner at editing audio and video files, I found it difficult. Alex asked about the choice of the Disney version. I admitted that some of the scenes and dialogue in the movie were funny and awkward. Actually, the short poem is more vivid and inspiring, and that’s why I added the poem to the video. I am learning to be a good storyteller of my culture. I am also happy that this project gave me an opportunity to learn Adobe Audition (Au) and Premiere Pro (Pr). I will incorporate audio in the following projects because sound is so powerful. Here is the final work: