Cycle 2 – Yujie

Posted: April 8, 2022 Filed under: Uncategorized

For Cycle 2, I was going to add a composition of multiple projection screens and audience interaction, but I spent too much time on technical issues and ended up making little progress on either. I did try something new, though. I added a viewing experience that aims to defamiliarize what we see every day as “real” experience, especially when we perceive things according to ready-made categories. I asked the viewers to switch their iPhones to negative (inverted color) mode. They could then choose whether or not to watch the performance through the inverted camera. When they look at the dancer, they see her inverted to negative, while when they look at the projection (parts of the body in negative), they see the normal images. I hope that in consistently seeking to “see”, the viewers will reflect on their own subjective viewing experience and on what they can really “see.”
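The effect depends on color inversion undoing itself when applied twice. Here is a minimal sketch of that idea, assuming the phone's negative mode behaves like a simple per-channel inversion of 8-bit RGB values (real display filters may differ). The function name and the pixel value are hypothetical and only for illustration.

```python
def invert(pixel):
    """Return the negative of an 8-bit RGB pixel (assumed model: 255 - value)."""
    return tuple(255 - channel for channel in pixel)


# Hypothetical pixel taken from the dancer's body, used only for illustration.
dancer = (224, 172, 105)

# The live dancer seen through an inverted camera appears as a negative.
seen_dancer = invert(dancer)

# The projection already shows a negative of the body,
# so inverting it a second time restores the original colors.
projection = invert(dancer)
seen_projection = invert(projection)
```

Under this assumption, the viewer's camera turns the live body into a negative and turns the negative projection back into a normal image.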

Here is the recording of the Cycle 2 showing:

For Cycle 3, I will continue what I have left over from Cycle 1 and add a voice recording of the dancer:

1. Develop multiple projections

2. Audience presence in the performance

3. Voice recording of the dancer

Cycle 2–Allison Smith

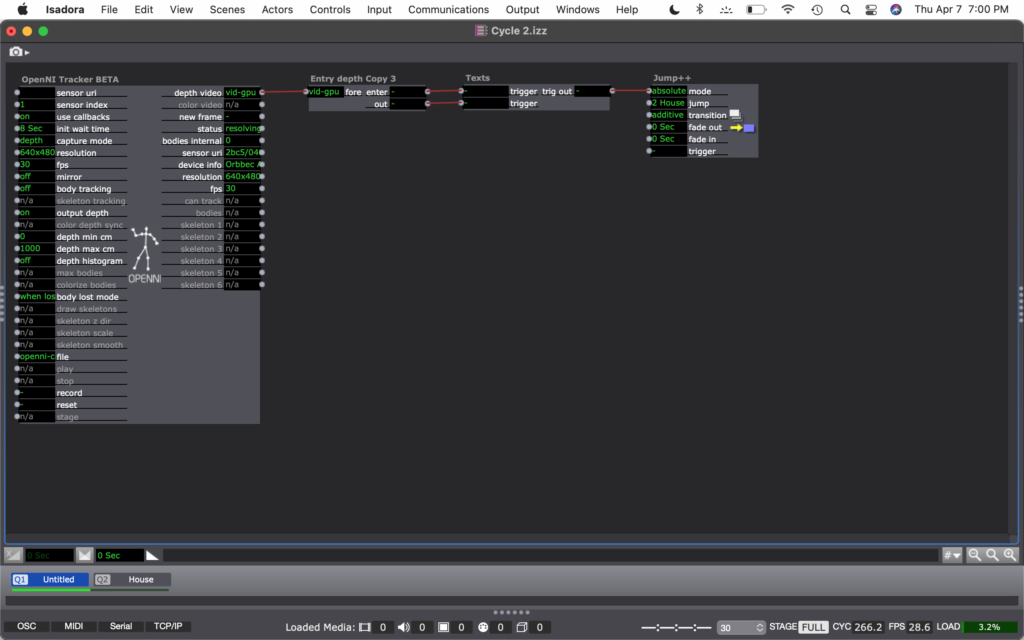

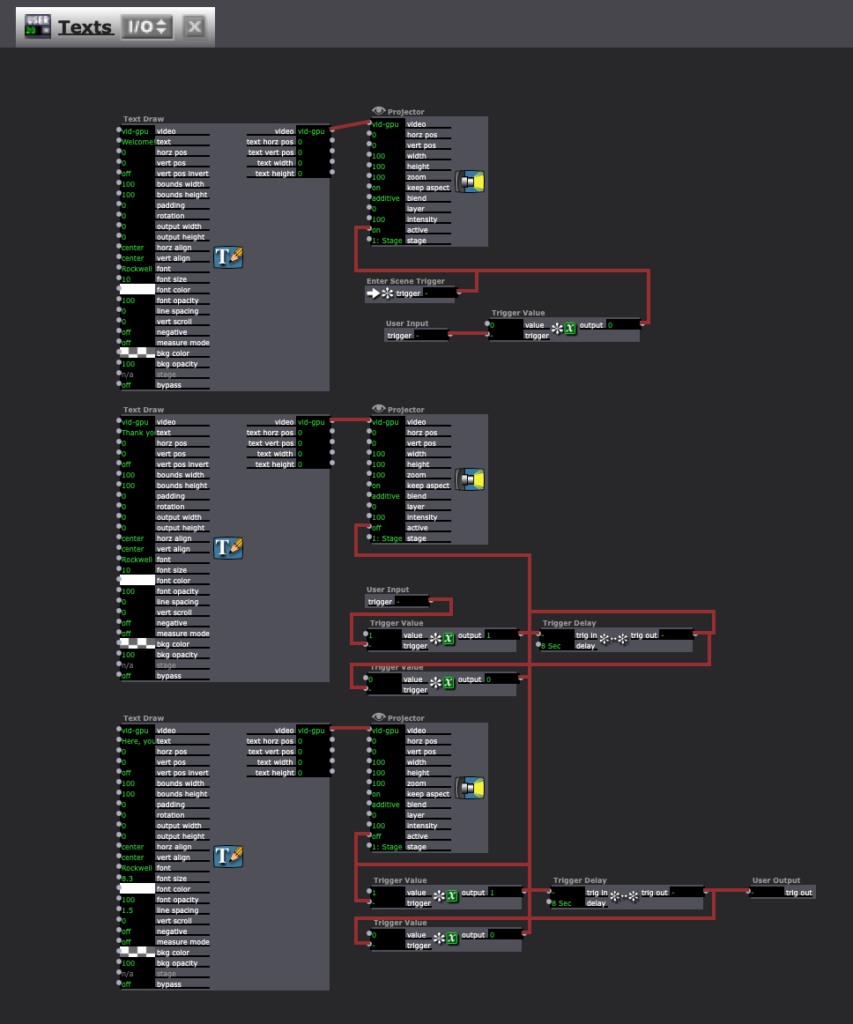

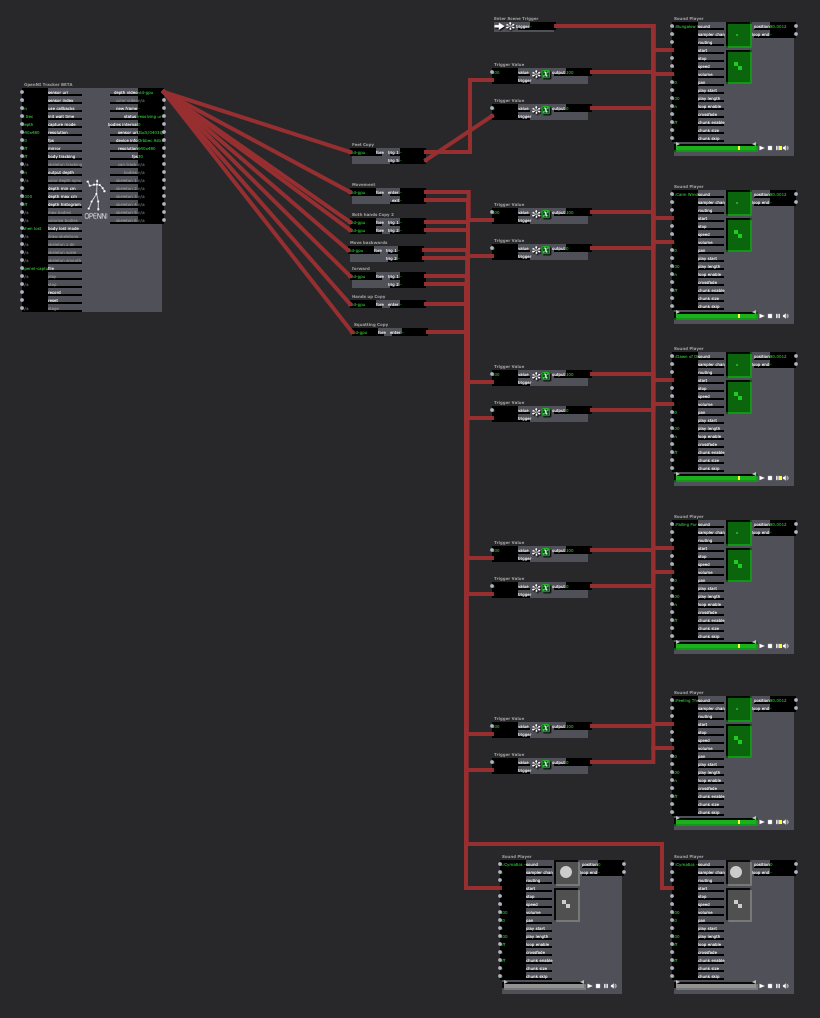

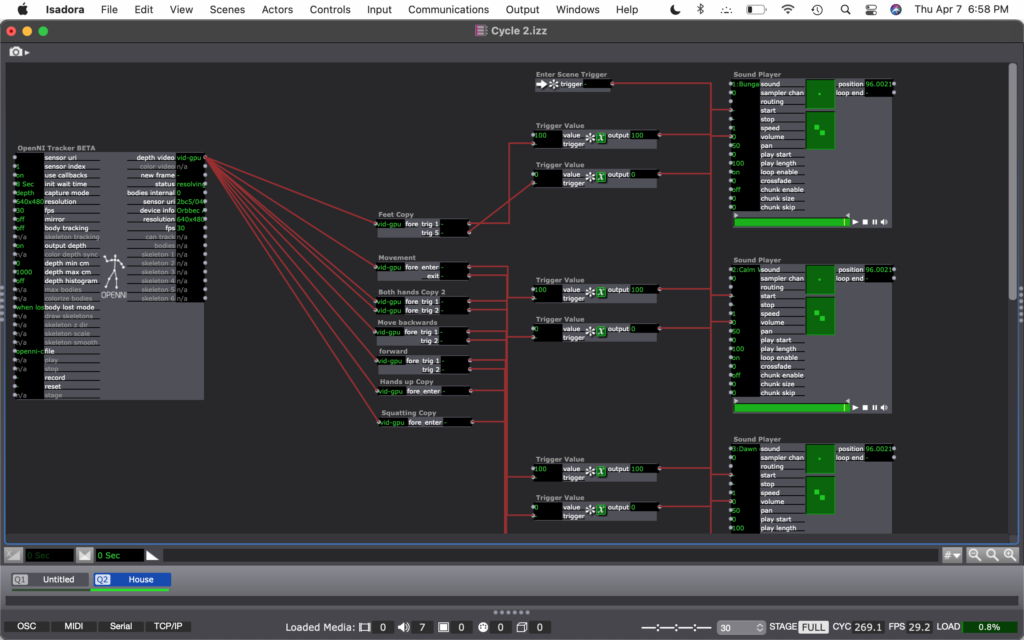

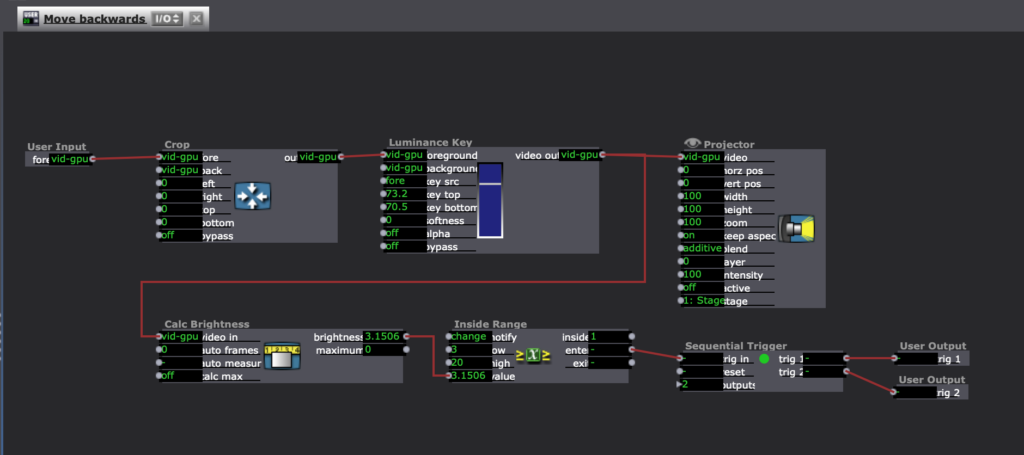

Posted: April 7, 2022 Filed under: Uncategorized | Tags: Cycle 2, dance, Interactive Media, Isadora

For my cycles, I’m practicing with different media tools that can interact with movement. For this cycle, I chose to work with the interaction of movement and sound. Similar to my PP2, I had a song with several tracks playing at the same time, and a track’s volume would turn up when it was triggered. The goal was to create a space to play with movement and affect the sound, which in turn could affect the movement.

I had two possible audiences in mind for this. The first was people who don’t typically dance, who come across this as a kind of installation and want to play with it. As I mentioned at the beginning, I’m curious about how completing an activity motivated by exploration can knock down the inhibitions associated with movement. Maybe finding out that the body can create different sounds will inspire people to keep playing. The other audience was a dancer who is versed in freestyle dance, specifically house dance. I created a house song for this project, and I based the movement triggers on basic moves within house dance. The dancer could then not only freestyle with movement inspired by the music, but their movement could inspire the music, too.

For this demo, I chose to present it in the style for the first audience. Here is a video of the experience:

I ran into a few technical difficulties. The biggest challenge was that I had to reset the trigger values for each space I was in. The brightness of the depth image was different in my apartment living room than it was in the MOLA. I also noticed that I had set the trigger boundaries based on my own body and how I move. No one moves exactly the same way, so sounds will be triggered differently for each person. It was also difficult to keep things consistent. Just as each person moves differently from everyone else, no one ever repeats a movement exactly. So when a sound is triggered one time, it may not be triggered again by the same movement. Finally, there was a strange problem where the sounds would stop looping after a minute or so, and I don’t know why.
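One way to think about the per-room recalibration and the inconsistent triggering is sketched below. This is not the Isadora patch itself. It assumes the trigger watches an average depth brightness between 0 and 1, measures an empty-room baseline for each space, and uses two thresholds (hysteresis) so that small variations in movement do not flip a sound on and off. All names and numbers are hypothetical.

```python
from statistics import mean


def calibrate(empty_room_samples, margin=0.15):
    """Derive on/off thresholds from brightness samples of the empty space."""
    baseline = mean(empty_room_samples)
    on_threshold = baseline + margin        # brightness needed to start the sound
    off_threshold = baseline + margin / 2   # brightness at or below which it stops
    return on_threshold, off_threshold


class ZoneTrigger:
    """Turns one track's volume up while a mover is inside a zone."""

    def __init__(self, on_threshold, off_threshold):
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.active = False

    def update(self, brightness):
        """Return the track volume (0.0 or 1.0) for the latest brightness reading."""
        if not self.active and brightness >= self.on_threshold:
            self.active = True
        elif self.active and brightness <= self.off_threshold:
            self.active = False
        return 1.0 if self.active else 0.0


# Hypothetical empty-room readings for the same zone in two different spaces.
living_room = calibrate([0.20, 0.22, 0.21])
studio = calibrate([0.40, 0.41, 0.39])

# Feed a short, made-up sequence of readings through the living-room trigger.
trigger = ZoneTrigger(*living_room)
volumes = [trigger.update(b) for b in [0.21, 0.40, 0.33, 0.25, 0.45]]
```

Measuring a baseline in each space would replace resetting every trigger value by hand, and the gap between the two thresholds gives some tolerance for the fact that no movement is repeated exactly.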

My goal for this cycle was to have multiple songs to play with that could be switched between in different scenes. If I were to continue to develop this project, I would want to add those songs. Due to time constraints, I was unable to do that for this cycle. I would also like to make this tech more robust. I’m not sure how I would do that, but the consistency would be nice to establish. I am not sure if I will continue this for my next cycle, but these ideas are helpful to consider for any project.

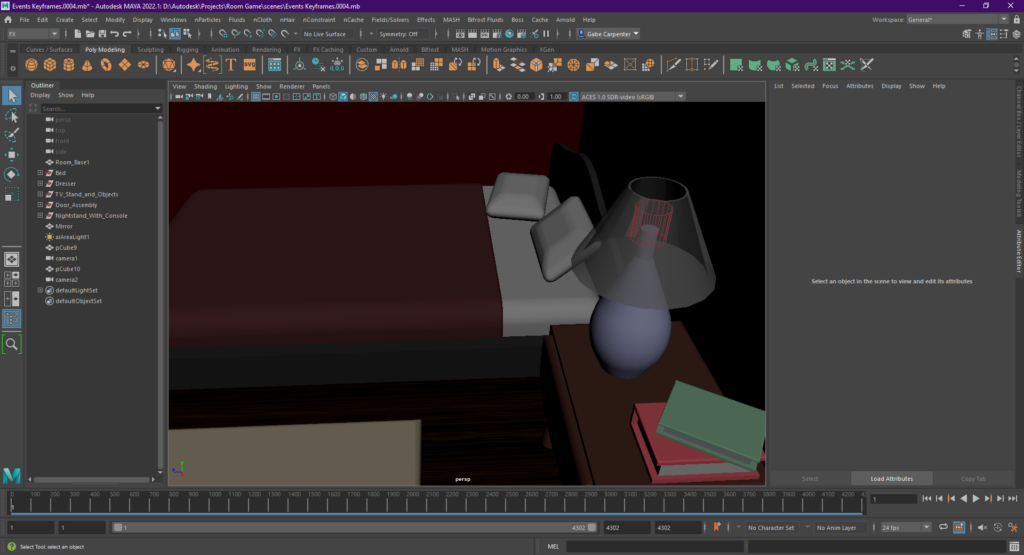

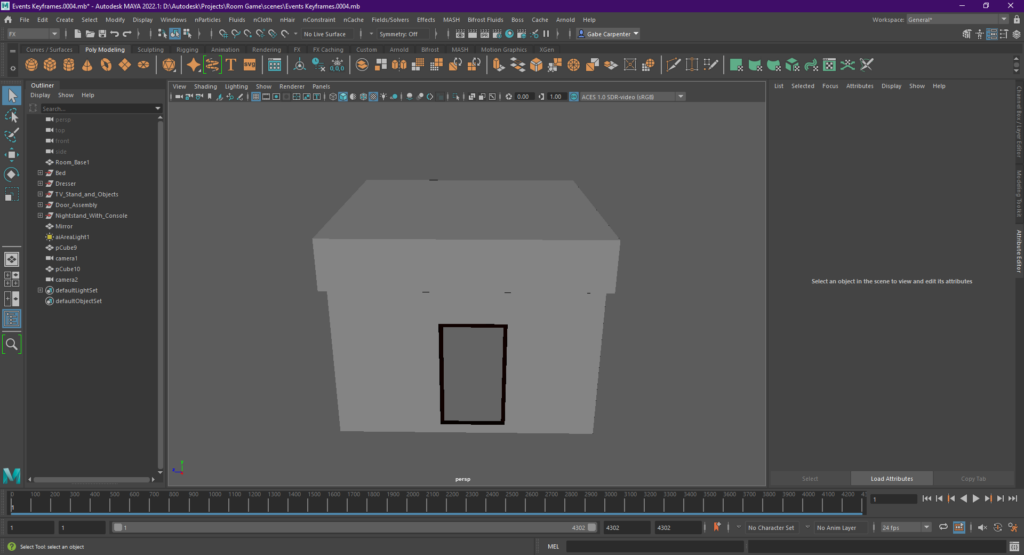

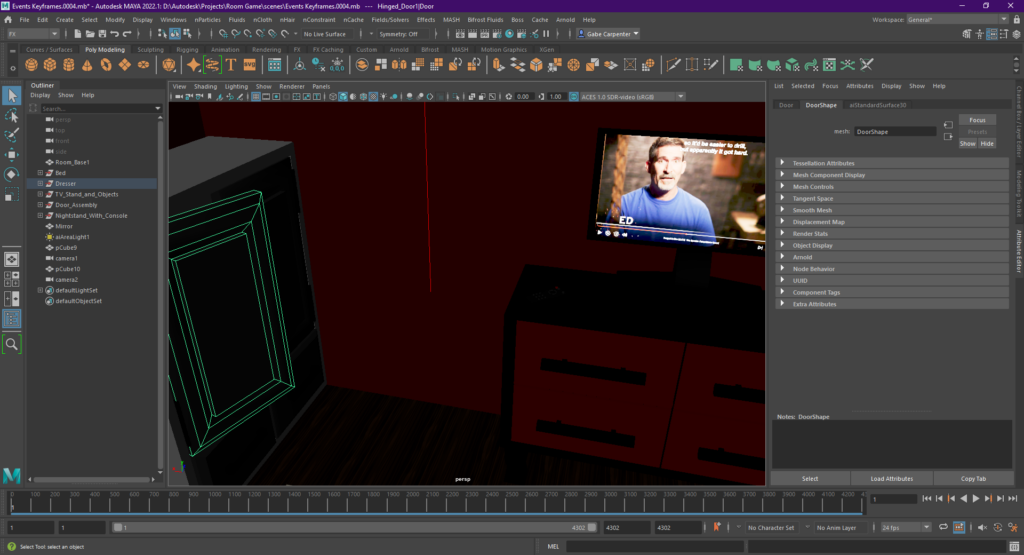

Cycle 2, Gabe Carpenter, 4/6/2022

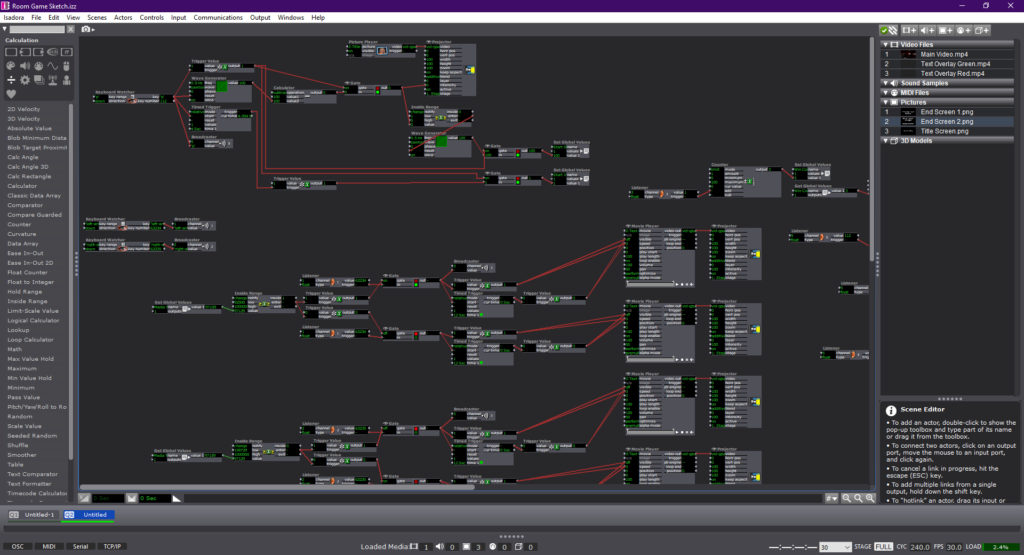

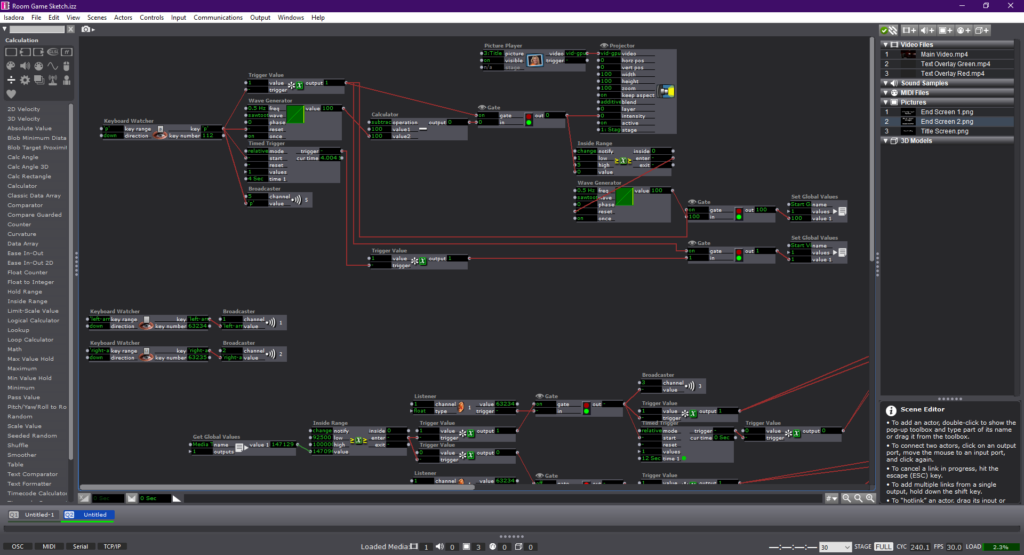

Posted: April 6, 2022 Filed under: Uncategorized

This phase of my project is focused on creating the media aspects of my final project. There are no physical controls involved at this stage, as those were demonstrated in Cycle 1. I plan to combine both phases in the final project. I began by creating my room in Maya. I modeled all of the assets used in the game from scratch and rendered the footage using a render farm and the Deadline system. Below are a few screenshots of the work done in Maya.

The next portion of the project involved creating the Isadora patch, which I will link here.

The experience is roughly 4 minutes long and includes a pass/fail ending system and dynamically responsive choices throughout the game.