Pressure Project 3 – SP35 Potions 1

Posted: May 5, 2024 | Filed under: Uncategorized

I’m putting a bunch of documentation in here!

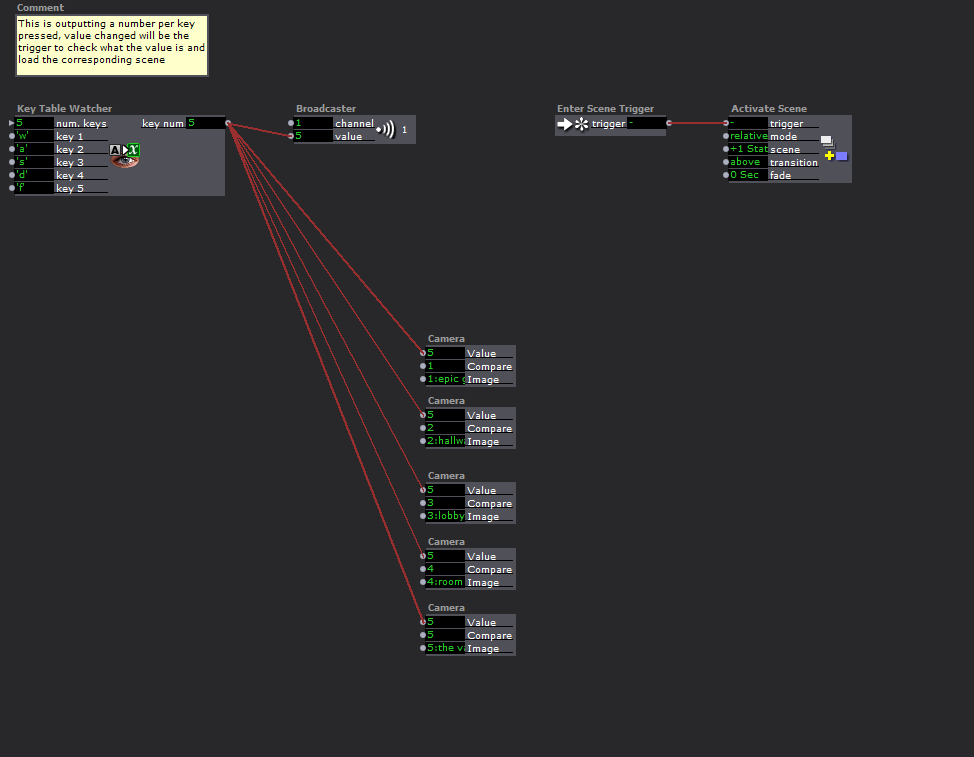

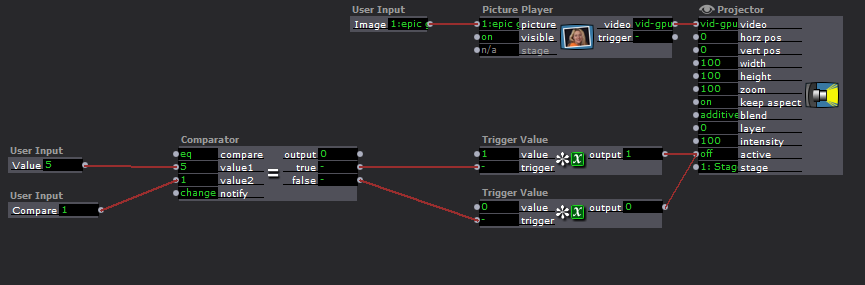

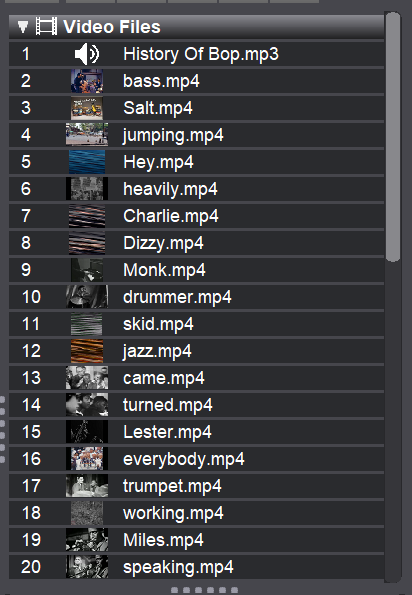

Images of my camera system 😀

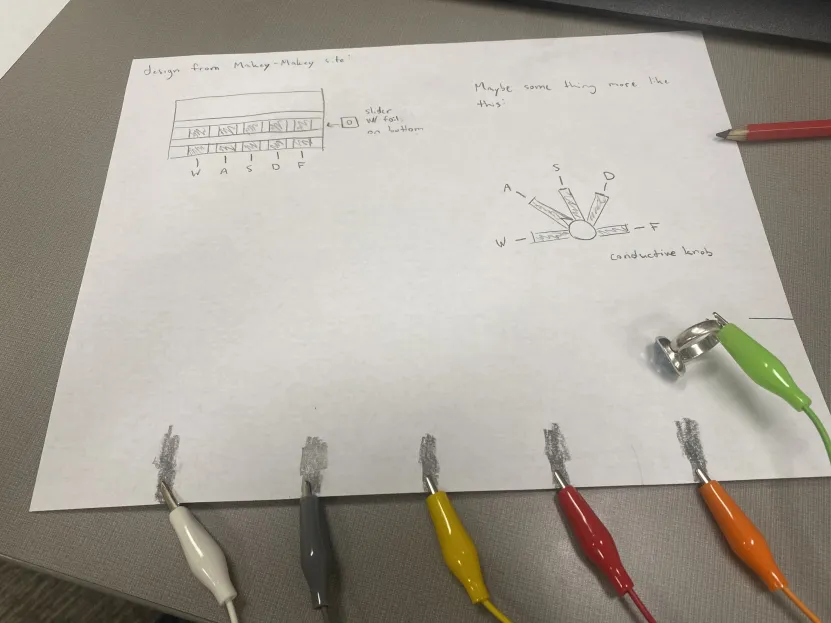

Prototyping lol, I’m thinking of creating a custom knob?

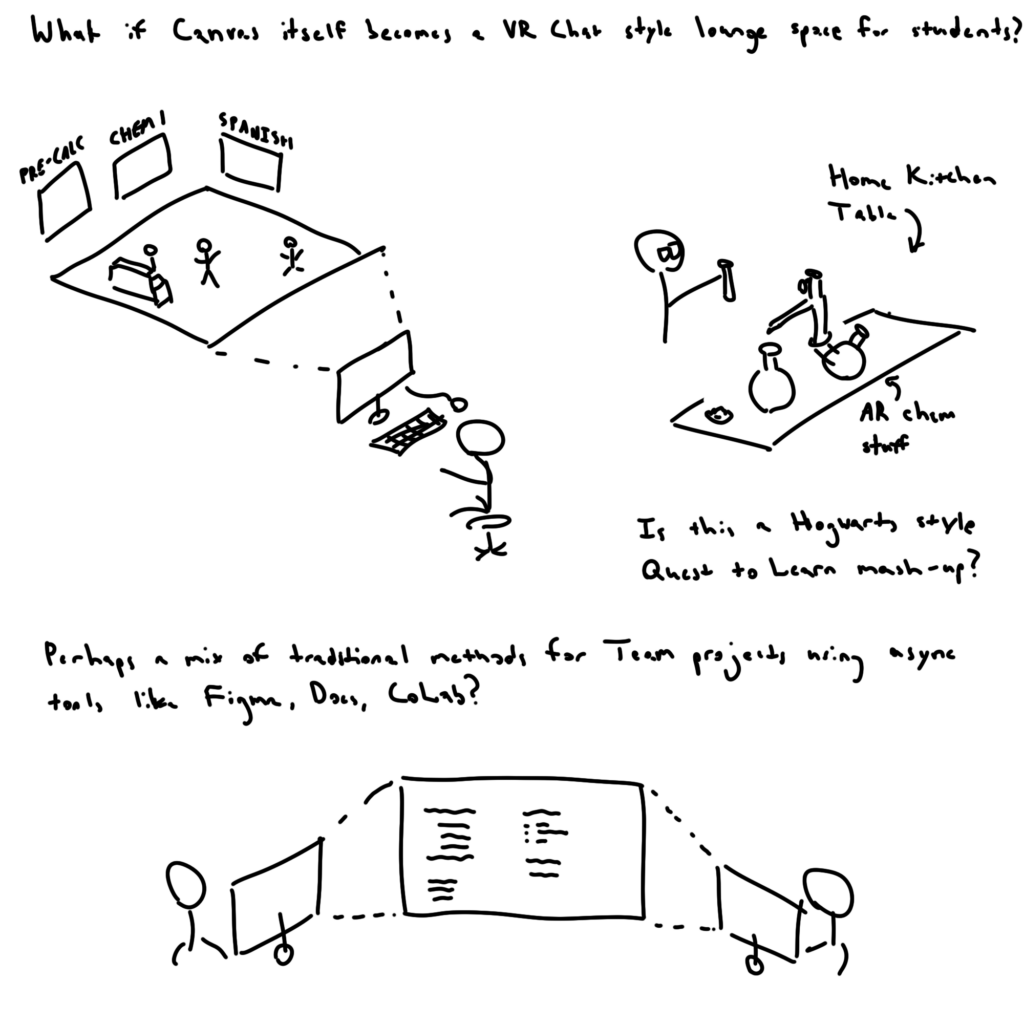

So this project changed right in the middle. I was thinking more about the ideas I had with perspective puzzles, and I really wanted to use that idea for my cycles, so I tabled it for now. In DESIGN 6300, I had been doing more research into educational games and the concept of serious games, which are games used for non-entertainment purposes (examples include games used in the classroom, training simulations for new hires, or training simulations for military personnel like pilots). I wanted to steer my project in a different direction, and I was thinking about a conjecture I did while looking into instructional design.

I had found a lot of research looking into the effects of the pandemic and hybrid education, and I had also found a lot of research about integrating educational tools into devices created for the Internet of Things (in general, integrating educational tools with new technologies was a big theme). In that conjecture I tried to imagine what a game-based hybrid learning space could look like, thinking about how massively multiplayer online games (MMOs) represent their public virtual spaces, and how that relates to the public spaces in schools that students gravitate towards. I also tried to think about how collaboration and lessons would be taught. Inspired by the work I do on the IFAB VR projects, I asked: what if chemistry were taught in AR, so that students could get proper hands-on experience using things like distillery sets? The biggest problem I saw actually related to that idea: students need hands-on experience and need to be engaged, and the lack of both is a downside of hybrid learning. It seems that there is something about being in the room that’s important to cultivating a learning environment.

Thinking about this, I wondered if the tactile nature of Makey-Makey controllers could be used to help with hybrid learning experiences. Building off of how Quest to Learn (q2l.org) gamifies its learning, and off of a previous project of mine that was a wizard battle game using Makey-Makeys, I wanted to see if I could make a Harry Potter style “potions” class, where the potions are chemicals and the end goal is some sort of chemistry lesson.

One of the first things I did was think about how I wanted to use the Makey-Makey. At first I was thinking about actually using liquids of some sort. There are a lot of simple chemistry lessons that teach concepts like why oil and water don’t mix well together, and I was hoping that I could work these kinds of lessons into the hardware (plus, water conducts electricity well and oil doesn’t, so there is definitely room for some sort of electronic magic).

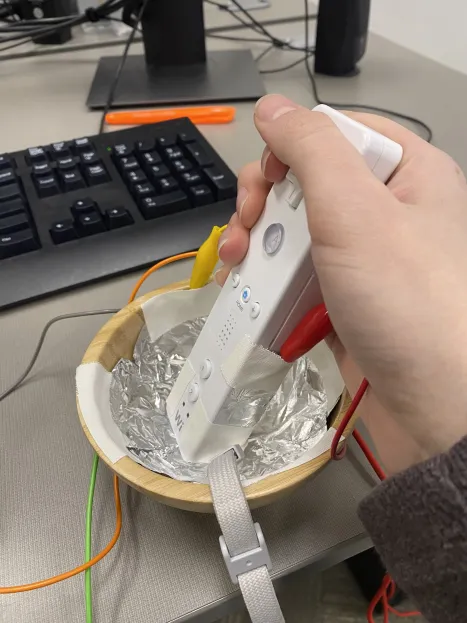

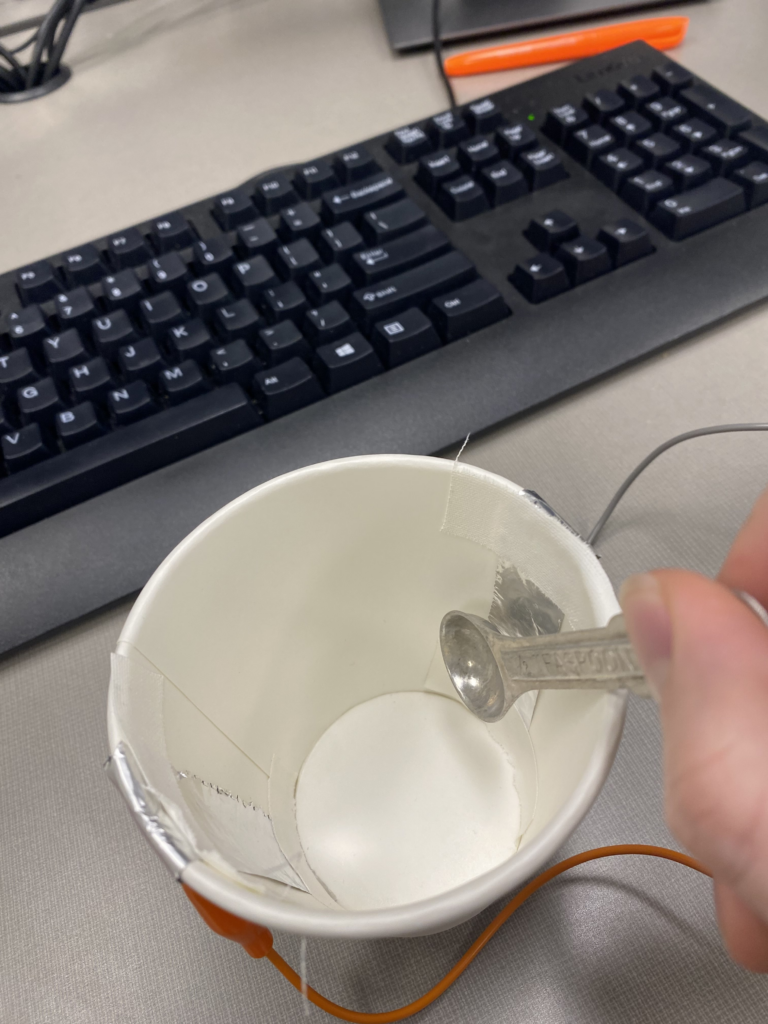

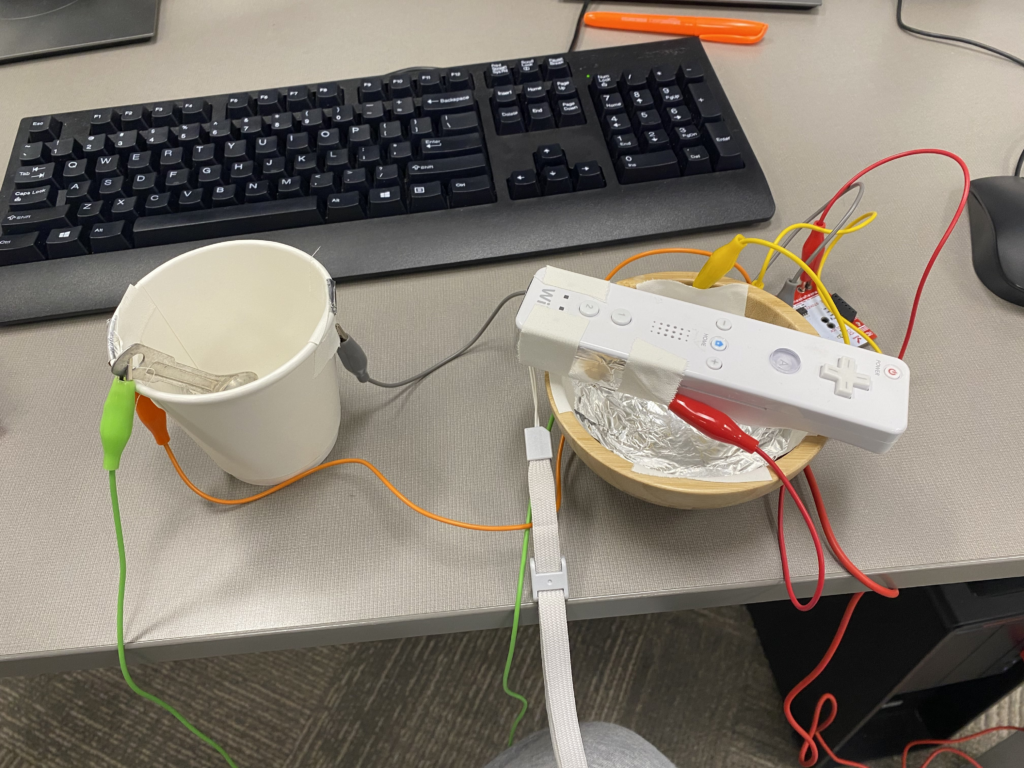

I started with a cup of water that could be dipped into to get electrical current, but I found that I wasn’t able to control the circuit very well when I was using liquids. I then thought about how I could potentially mimic actions that one might have to do in a “potions” class, inspired by the kinds of strange chemistry magic that NileRed does (he has a wild YouTube channel where he does wacky chemistry hijinks, here is a video where he turns vinyl gloves into grape soda: https://youtu.be/zFZ5jQ0yuNA?si=EHvenzlJcoIEZPO6). I wanted to get the physical motions of grinding up a piece of material, pouring that material into a liquid, and stirring to dissolve it and saturate a solution. To do this, I was going to make a mortar and pestle controller and a beaker controller. I didn’t have a mortar and pestle or a beaker, and I only had 10 hours (now 5 after pivoting) to do the whole project, so I had to get creative. For my mortar and pestle, I filled the inside of a wooden bowl with aluminum foil and taped some to the bottom of a Wii Remote, which looked like this:

I also ended up making this cup and checking in Isadora whether the person was “stirring” and how fast. I did this by putting two pieces of foil on either side of the inside of the cup. The player used a metal spoon to tap both pieces of foil in rapid succession, which led to a back-and-forth motion that is akin to stirring.
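The stirring check can be sketched outside Isadora roughly like this. It is a minimal Python sketch under my own assumptions, not the actual Isadora patch: the two foil pads are assumed to arrive as two inputs (hypothetically named `left` and `right`), only a change of side counts as a swing, and the window length and threshold are arbitrary values.

```python
from collections import deque


class StirDetector:
    """Estimate stirring speed from alternating taps on two foil pads."""

    def __init__(self, window_s=2.0):
        self.window_s = window_s   # how far back to look, in seconds (assumed value)
        self.last_pad = None       # pad touched most recently
        self.swings = deque()      # timestamps of left<->right alternations

    def tap(self, pad, t):
        """Record a tap on `pad` ('left' or 'right') at time `t` in seconds."""
        if self.last_pad is not None and pad != self.last_pad:
            self.swings.append(t)  # only a change of side counts as a swing
        self.last_pad = pad

    def rate(self, now):
        """Return swings per second over the last `window_s` seconds."""
        while self.swings and now - self.swings[0] > self.window_s:
            self.swings.popleft()  # forget swings that fell out of the window
        return len(self.swings) / self.window_s

    def is_stirring(self, now, min_rate=1.0):
        """True when the swing rate reaches `min_rate` (hypothetical threshold)."""
        return self.rate(now) >= min_rate
```

Tapping the same pad repeatedly never counts as stirring here, which is the reason for tracking alternations rather than raw key presses.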

Video-Bop: Cycle 3

Posted: May 2, 2024 | Filed under: Uncategorized | Tags: Cycle 3

The shortcomings of cycle 2 and the lessons learned from it provided strong direction for cycle 3.

The audience found the rules section of cycle 2 confusing. Although I intentionally labelled these essentials of spontaneous prose as rules, they were more of a framing mechanism to expose the audience to this style of creativity. Given this feedback, I opted to structure the experience so that they witnessed me performing video-bop prior to trying it themselves. Further, I tried to create a video-bop performance with media content that outlined some of the influences motivating this art.

I first included a clip from a 1959 interview with Carl Jung in which he is asked:

‘As the world becomes more technically efficient, it seems increasingly necessary for people to behave communally and collectively. Now, do you think it possible that the highest development of man may be to submerge his own individuality in a kind of collective consciousness?’.

To which Jung responds:

‘That’s hardly possible, I think there will be a reaction. A reaction will set in against this communal dissociation.. You know man doesn’t stand forever.. His nullification. Once there will be a reaction and I see it setting in.. you know when I think of my patients, they all seek their own existence and to assure their existence against that complete atomization into nothingness or into meaninglessness. Man cannot stand a meaningless life’

Mind that this is from the year 1959. A quick Google search can hint at the importance of that year for jazz:

Further, I see another overlapping timeline, that of the beat poets:

I want to point out a few events:

29 June, 1949 – Allen Ginsberg enters Columbia Psychiatric Institute, where he meets Carl Solomon

April, 1951 – Jack Kerouac writes a draft of On the Road on a scroll of paper

25 October, 1951 – Jack Kerouac invents “spontaneous prose”

August, 1955 – Allen Ginsberg writes much of “Howl Part I” in San Francisco

1 November, 1956 – Howl and Other Poems is published by City Lights

8 August, 1957 – Howl and Other Poems goes on trial

1 October, 1959 – William S. Burroughs begins his cut-up experiments

While Jung’s awareness is not directly tied to American culture or to jazz and adjacent artforms, I think he speaks broadly to post-WW2 society. I see Jung as an important and positive actor in advancing the domain of psychoanalysis; he started working at a psychiatric hospital in Zürich in 1900. Nonetheless, critique of the institutions of power and knowledge surrounding mental illness emerged in the 1960s, most notably with Michel Foucault’s 1961 Madness and Civilization and Ken Kesey’s (a friend of the beat poets) 1962 One Flew Over the Cuckoo’s Nest. It’s unfortunate that the psychoanalytic method and theory developed by Jung, which emphasizes dedication to the patient’s process of individuation, was absent from the treatment of patients in psychiatric hospitals throughout the 1900s. Clearly, Ginsberg’s experiences in a psychiatric institute deeply influenced his writing in Howl. I can’t help but connect Jung’s statement about seeing a reaction setting in to the abstract expressionism in jazz music and the beat movement occurring at this time.

For this reason I included a clip from the movie Kill Your Darlings (2013), which captures a fictional yet realistic conversation between the beat founders Allen Ginsberg, Jack Kerouac, and Lucien Carr upon meeting at Columbia University.

JACK

A “new vision?”

ALLEN

Yeah.

JACK

Sounds phony. Movements are cooked up by people who can’t write about the people who can.

LUCIEN

Lu, I don’t think he gets what we’re trying to do.

JACK

Listen to me, this whole town’s full of finks on the 30th floor, writing pure chintz. Writers, real writers, gotta be in the beds. In the trenches. In all the broken places. What’re your trenches, Al?

ALLEN

Allen.

JACK

Right.

LUCIEN

First thought, best thought.

ALLEN

Fuck you. What does that even mean?!

JACK

Good. That’s one. What else?

ALLEN

Fuck your one million words.

JACK

Even better.

ALLEN

You don’t know me.

JACK

You’re right. Who is you?

Lucien loves this, raises an eyebrow. Allen pulls out his poem from his pocket.

I think this dialogue captures well the reaction setting in for these writers and how they pushed each other in their craft and in their position within society. Interesting is Kerouac’s refusal to be associated with a movement, a stance he continued to hold into later life when asked about his role in influencing the American countercultural movements of the 60s (see his 1968 interview with William Buckley). Further, this dialogue shows how Kerouac and Carr incited an artistic development in Ginsberg, giving him the courage to break poetic rules and to be uncomfortably vulnerable in his life and work.

For me, video-bop shares intellectual curiosities with the beats and a performative, improvised artistic style with jazz. So I thought performing a video-bop tune of sorts for the audience before they tried it would be a better way to convey the idea than having them read from Kerouac’s rules of spontaneous prose. See a rendition of this performance here:

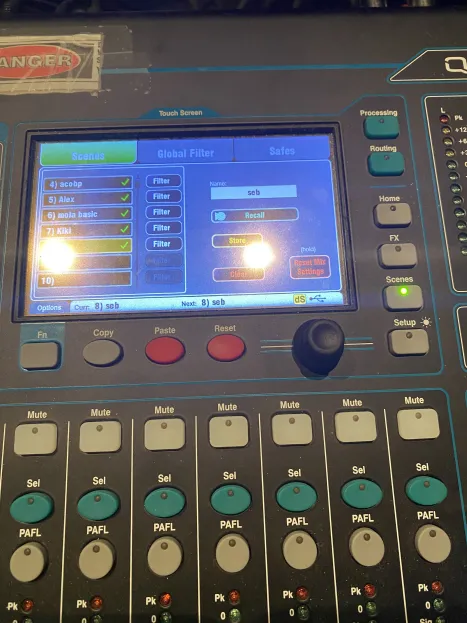

With this long-winded explanation in mind, there were many technical developments driving improvements for cycle 3 of video-bop. Most important was the realization that interactivity and aesthetic reception are fostered better by play than by rigid, timebound playback. The first 2 iterations of video-bop used audio-to-text timestamping technologies, which were great at enabling programmed multimedia events to occur in time with a pre-recorded audio file. However, after I attended a poetry night at Columbus’s Kafe Kerouac, the environment there, where poets read their own material and performed it live, inspired me to remove the timebound nature of the media system and have the audience write their own haikus rather than use existing ones.

Most of the technical coding effort was spent on the smart-device web-app controller, to make it more robust and ensure no user action could break the system. I included better feedback mechanisms to let users know that they had completed the steps to submit media for their video-bop correctly. Further, I made use of google-images-download to allow users to pull media from Google Images as opposed to just Youtube, which was an audience suggestion from cycle 2.

video-bop-cyc3 (link to web-app)

One challenge that I have yet to tackle is moving the media files downloaded by a Python script on my PC into the Isadora media software environment. During the performance this was a manual step: I ran the script, dragged the files into Isadora, and reformatted the haiku text file path. See the video-bop process here:

Cycle 2: Video-Bop

Posted: May 1, 2024 | Filed under: Uncategorized | Tags: Cycle 2

Feedback from cycle I directed me towards increased audience interactivity with the video-bop experience.

Continuing in the spirit of Kerouac, I was inspired by one of his recordings called American Haikus, in which he riffs back and forth with tenor saxophone player Zoot Sims. Kerouac, not being a traditional musical instrumentalist (per se), recites his version of American Haikus in call and response with Zoot’s improvisations:

“The American Haiku is not exactly the Japanese Haiku. The Japanese Haiku is strictly disciplined to seventeen syllables but since the language structure is different I don’t think American Haikus (short three-line poems intended to be completely packed with Void of Whole) should worry about syllables because American speech is something again… bursting to pop. Above all, a Haiku must be very simple and free of all poetic trickery and make a little picture and yet be as airy and graceful as a Vivaldi Pastorella.” (Kerouac, Book of Haikus)

Kerouac’s artistic choice to speak simple yet visually oriented ‘haikus’ allows him to inhabit and influence the abstract sonic space of group-improvised jazz. These haikus are on par with the musical motifs typical of trading, which is when the members of a jazz ensemble take turns improvising over small musical groupings within the form of the tune they are currently playing. What I find most cool is how you can feel Zoot representing Kerouac’s visual ideas in sound and in real time. In this way, a mental visual landscape is more directly shaped by merging musical expression and the higher cognitive layer of spoken language. It is not new for abstract music to be given visual qualities. Jazz pianist Bill Evans described the influential ‘Kind of Blue’ record as akin to “Japanese visual art in which the artist is forced to be spontaneous…paint[ing] on a thin stretched parchment.. in such a direct way that deliberation cannot interfere” (Evans, Kind of Blue liner notes, 1959).

As a new media artist, I tried to create a media system which would engage the audience in this creative process of translating abstract ideas from one form into another. I believe this practice can help externalize the mental processes of rapid free association. To do so, I had to build a web application, accessible to the audience and connected to a cloud database which could be queried from my PC running the Isadora media software. This web-app could handle the requests of multiple users from their phones or other smart devices with internet access. I used a stack familiar to me: Dash to code the dynamic website, Heroku to host the public server, and AstraDB to store and recall audience-generated data.

See src code for design details

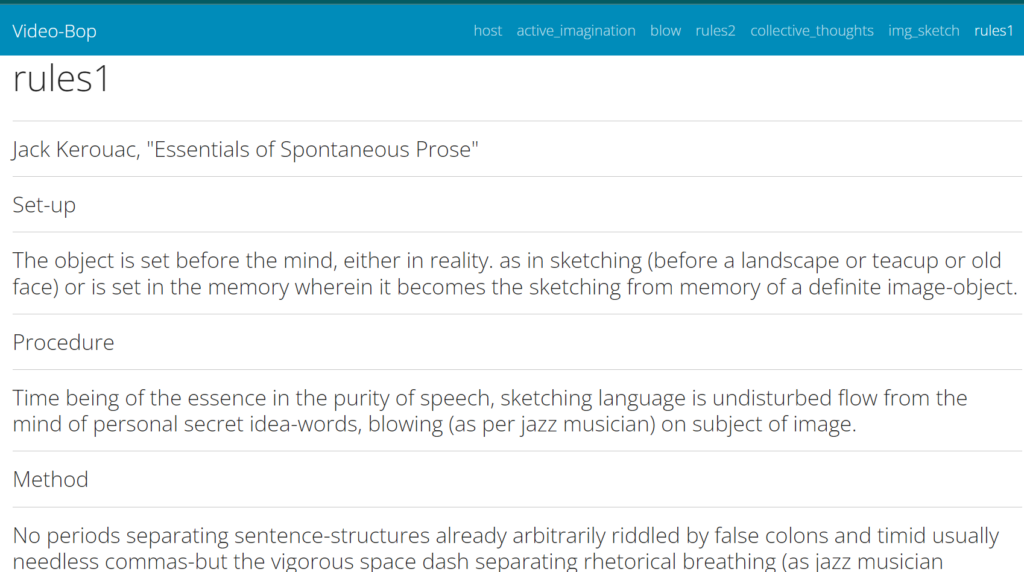

The experience started with the audience scanning a QR code to access the website, effectively turning their phones into an interactive control surface. Next, they were instructed to read Kerouac’s Essentials of Spontaneous Prose, which humorously served as the ‘rules’ for the experience. This was more of a mood-setting device to frame the audience to think about creativity from this spontaneous, image-oriented angle.

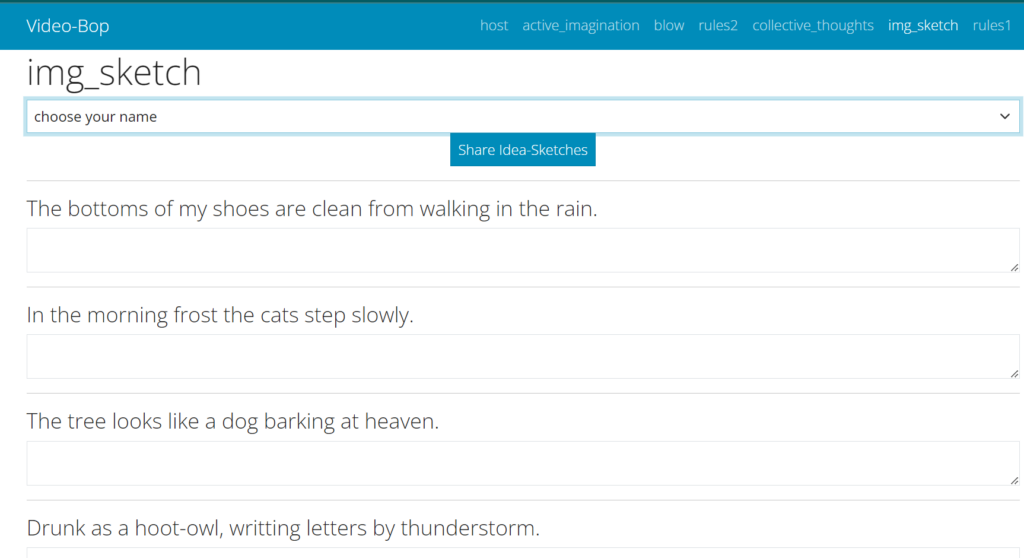

Next, I played a series of Kerouac’s haikus and instructed the audience to visit a page on the site where they could jot down their mental events for each haiku as they listened to the spoken words and the musical interpretation by Zoot. After this, a submit button sent all their words to the database, and the words were then dynamically rendered onto the next page, called ‘collective thoughts’. This allowed everyone in the audience to anonymously see each other’s free associations.

Example from our demo

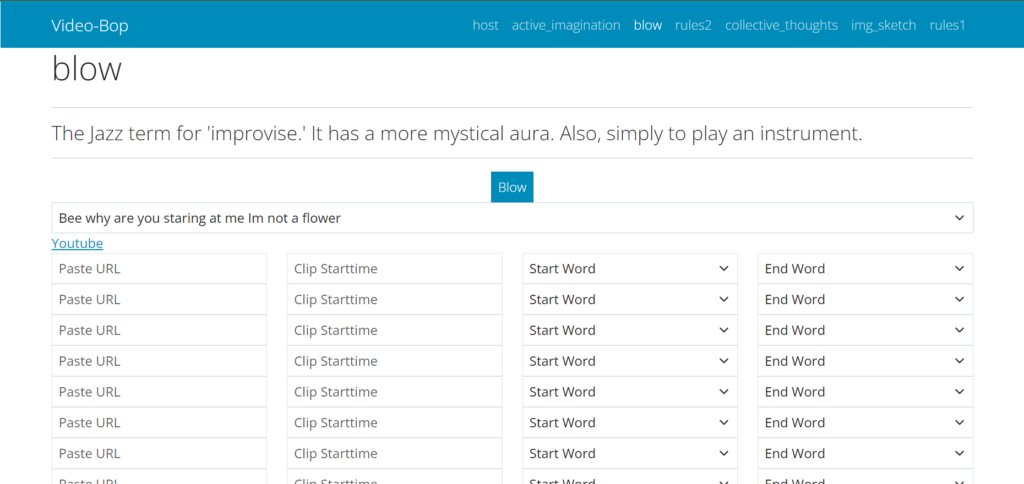

After reading through the collective image-sketches from the group, we decided on a crowd-favorite haiku to be visualized. The visualization process was equipped to handle multiple Youtube video links, with timecode information to align them with the time at which words are spoken in a prerecorded video. This process followed on from Cycle I, in which I quickly explored Youtube to gather imagery that I thought expressive of the message within the ‘History of Bop’ poem. This practice forces a negotiation in expression between original image thoughts and the videos available on Youtube, with its database of uploaded content and its recommender systems. An added benefit of having the interaction on their personal phones is that it connects to their existing Youtube app and any behavioral history made prior to entering into the experience. The page to add media looked like this:

This was the final step. It generated tables within the cloud database in a form that could be post-processed into a JSON file which worked with the visualizing patch I made in Isadora for Cycle 1. I had written a Python script to query the database, download all of the media artifacts, and place them into the proper format.

Unfortunately, I didn’t have much time to test the media system prior to presentation day, and the database was overwritten due to a design issue. Someone had submitted a blank form, which overwrote all of the Youtube data entered by the other audience members. For this reason, I was not able to display the final product of their efforts. Yet, it was a good point of failure for improvement in cycle 3. The audience was disappointed that they didn’t see the end product, but I took this as a sign that the experience created an anticipation which had powerful buildup.
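One way to guard against that kind of overwrite is sketched below. This is only an illustration of the idea, not the fix that was actually deployed: a plain dict stands in for the cloud table, the field names in a submission are hypothetical, and each submission gets its own key so that a later one can never replace an earlier one.

```python
import uuid


def is_blank(submission):
    """True if every field is missing, empty, or only whitespace."""
    return not any(str(v).strip() for v in submission.values() if v is not None)


def save_submission(store, submission):
    """Add one submission under its own key; never replace existing rows.

    `store` is a plain dict standing in for the cloud table (an assumption).
    Returns the new key, or None when the submission was rejected as blank.
    """
    if is_blank(submission):
        return None                # reject instead of writing an empty row
    key = uuid.uuid4().hex         # unique key per submission, so no overwrites
    store[key] = dict(submission)  # store a copy of the form data
    return key
```

The two design choices are independent: rejecting blank forms protects against empty rows, while writing under a fresh key protects against any submission clobbering another.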

Cycle 3- Who’s the Alien?

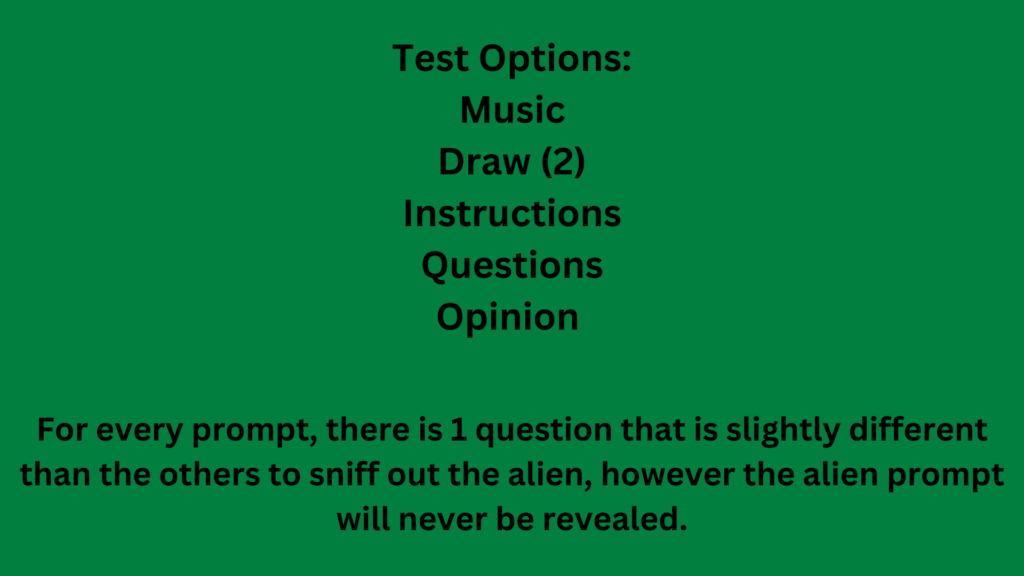

Posted: May 1, 2024 | Filed under: Uncategorized

For Cycle 3 I wanted to switch the game up a bit and combine the two game ideas I did before. This game would be played mostly through the headphones but still have visuals to follow. The game was about figuring out who the alien is among the humans on a spaceship. I chose two aliens. There would be a captain for each round, and they would choose which 3 players had to take a test to prove their humanity. Each of the tests would happen through the headphones. I didn’t change much in terms of Isadora patches. I wanted to keep the game simple but social.

These were the test options.

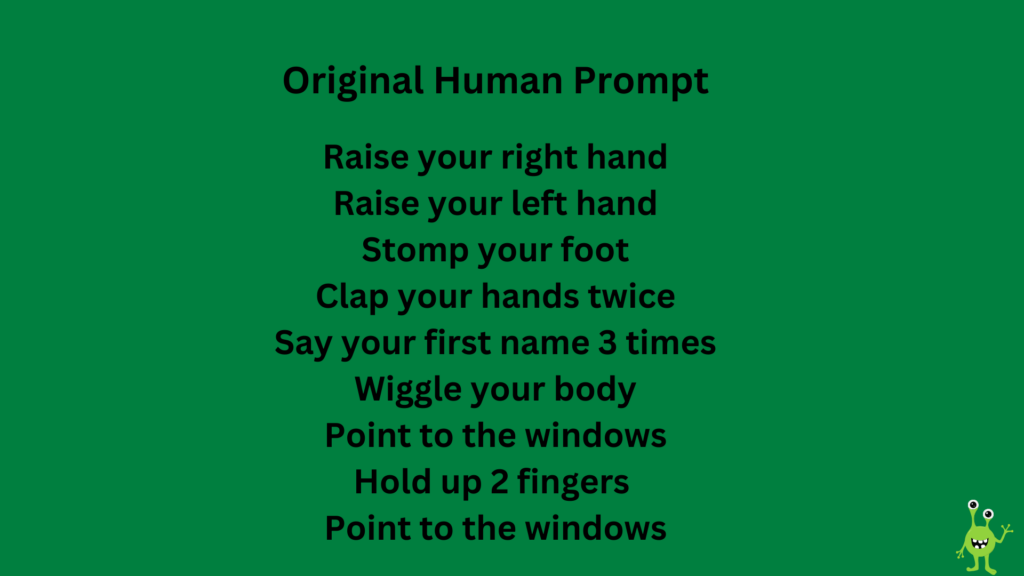

For every prompt, two questions were the same and one was slightly off. Here is an original question asked for the Instructions prompt.

Cycle 2- The Village

Posted: May 1, 2024 | Filed under: Uncategorized

For Cycle 2 I decided to continue my idea with the multi-channel sound system using the headphones and to incorporate a game. The game I chose was Werewolf, which is similar to the game Mafia, but I titled it The Village. I created different visual scenes that I could click through using Isadora, so that the game had a flow and I could easily moderate. I created these in Canva.

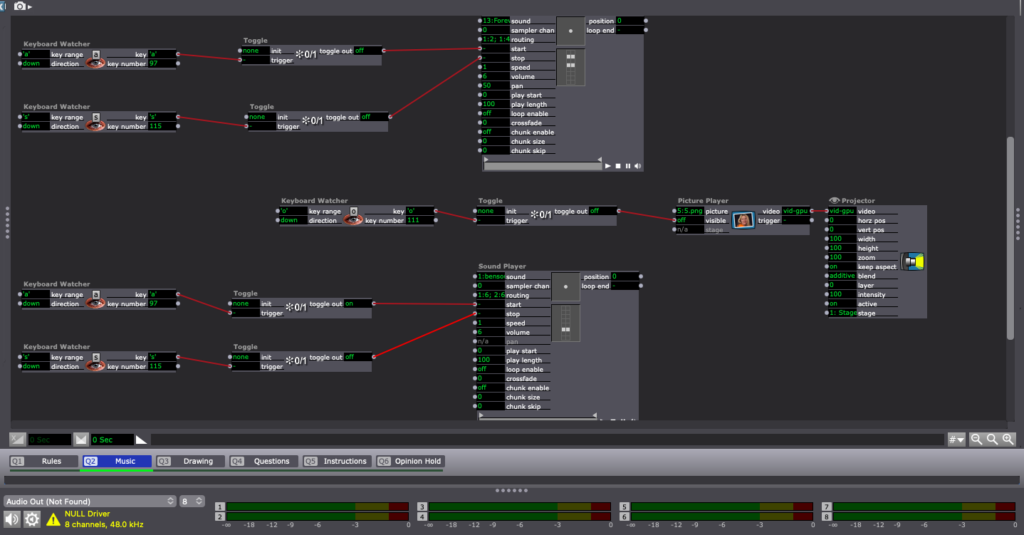

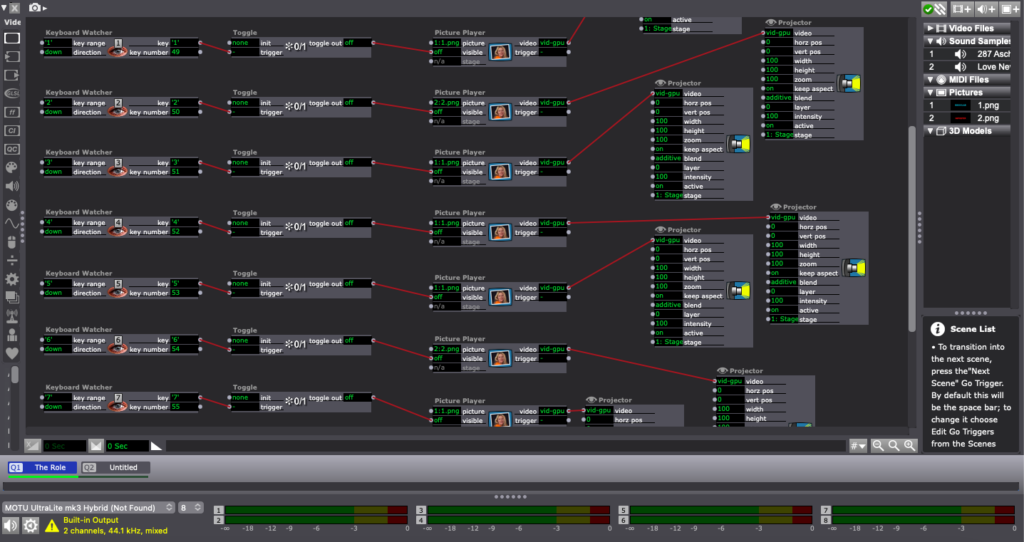

This is the patch I made for when people chose their roles. Whichever headphones they chose determined their role in the game. I had 6 different media files sent to six separate headphones. I set every keyboard watcher to the same key so that all of the audio played at the same time.

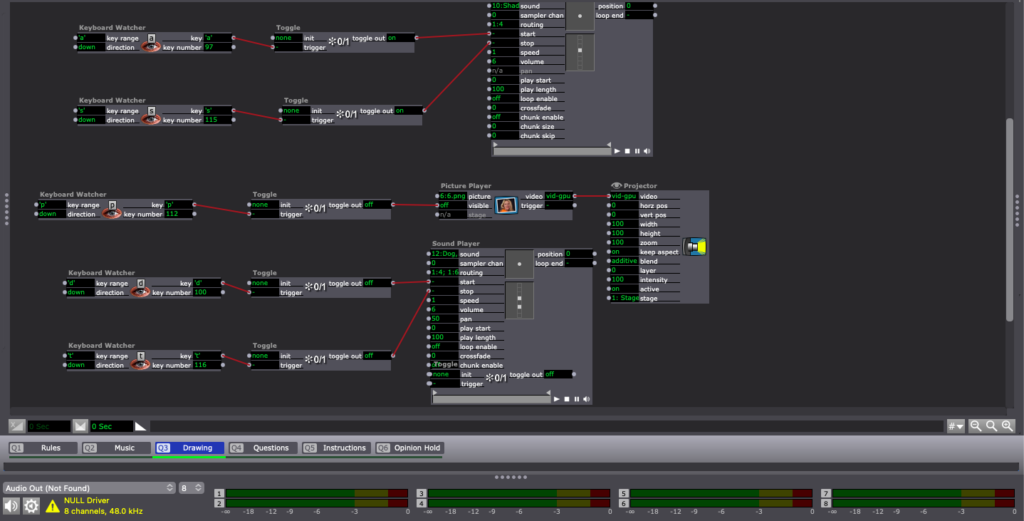

This was the patch I used for clicking through each visual. The keyboard watcher was assigned to different letters so I knew which visual would pop up.

What was successful about this was that the game was fun, each role was hidden from the others, and the visuals had a nice flow for the game. What I need to clarify are the game rules and how much replay value the game has. I also had the idea of incorporating some kind of sensor to play with as the game goes on.

Cycle One Mirror Room

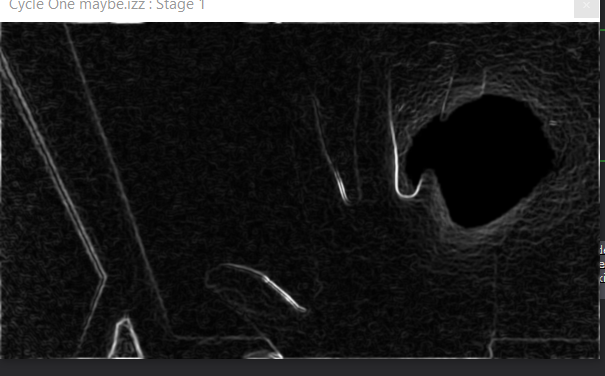

Posted: April 10, 2024 | Filed under: Uncategorized | Tags: cycle 1, Isadora

The concept for the whole project is to just have fun messing with different video effects that Isadora can create in real time. One way of messing with your image in real time is with a mirror that is warped in some way. The first cycle was simple: just deciding what kinds of effects should be applied to the person in the camera. I wanted each one to be unique, so that the effect is something not available in a usual house of mirrors.

The feedback was positive for the effects that took a bit of time for the user to figure out. They enjoyed messing around in front of the camera until they had a decent idea of how they affected the outcome. The one they thought was lackluster was the one with lines trailing their image, since they were able to figure out almost immediately how they affected it. So, for the next cycle the plan is to update that one effect screen to make it a bit harder to decipher what is going on. Next on the list is to try to get a configuration set up with projectors and projection mapping, so the person can be in view of the camera and see what is happening on the screen or projection without blocking the projection or showing up on screen at a weird angle.

Cycle I: History of Bop

Posted: April 10, 2024 | Filed under: Uncategorized | Tags: cycle 1

Video-bop interpretation of an excerpt from Jack Kerouac’s ‘History of Bop’

The History of Bop by Jack Kerouac

As a jazz enthusiast and young adult, reading Kerouac’s ‘On The Road’ was a transformative experience. In school, I was predominantly interested in math and the sciences and hardly cared to read a book or pick up a pen to write. However, Kerouac’s style, legacy, and approach to writing (and life, for that matter) convinced me of the value in these types of intellectual pursuits. Over the last five years or so, I’ve continued to explore Kerouac and other works from the beat canon. One exciting find was his set of spoken word readings called ‘On the Beat Generation’. For cycle I, I focused on the final minute and a half of his work ‘History of Bop’. I find this writing to be a triumphant portrayal of the evolution of bebop and of the cultural changes in America surrounding the genre.

Upon researching the piece, it was interesting to find that it was originally published in the April 1959 issue of a lewd magazine called Escapade – a hidden gem of writing amongst smutty caricatures and 50s advertisements. I was not entirely surprised by this discordant arrangement, though, given that the hero of the beats (Neal Cassady) was, according to Allen Ginsberg, an “Adonis of Denver—joy to the memory of his innumerable lays of girls in empty lots & diner backyards, moviehouses’ rickety rows, on mountaintops in caves or with gaunt waitresses in familiar roadside lonely petticoat upliftings & especially secret gas-station solipsisms of johns, & hometown alleys too” (Howl, 1956). This quality of the beats did not age well, especially from the vantage of sexual equality, but unconventional behavior and criticism are the norm for this eclectic group. What I’d call the most foundational criticism of Kerouac, and of this piece of writing in particular, is in the realm of black appropriation. Scholars like James Baldwin described this ‘untroubled tribute to youthful spontaneity [as] a double disservice—to the black Americans who were assumed to embody its spirit of spontaneity and to Kerouac’s full literary achievement… a romantic appraisal of black inner vitality’ (Scott Saul, FREEDOM IS, FREEDOM AIN’T, pg 56). It cannot be denied that Kerouac and his writer friends were escaping what they feared as the trap of the middle-class white picket fence, and couldn’t have experienced the true reality of being black in the mid-20th century. Yet their works speak to a deep respect for, and profound inspiration from, the black art of their time (jazz).

One artistic technique embodied by the beats and inspired by jazz is what Kerouac calls ‘spontaneous prose’. Jazz musicians excelled at this rapid invention of musical structures through heightened sonic sensibility and a borderline phantasmal prosthesis of an instrument to their nervous systems (and souls, for that matter). The beats took up this approach with the technology of their time, namely the typewriter. Kerouac even wrote a manifesto called ESSENTIALS OF SPONTANEOUS PROSE, in which he describes the procedure of writing as ‘the essence in the purity of speech, sketching language is undisturbed flow from the mind of personal secret idea-words, blowing (as per jazz musician) on subject of image.’ Other writers of his time, such as Truman Capote, didn’t see eye to eye with this artistic style; Capote humorously commented, “That’s not writing, that’s typing.” I don’t mean to argue that thoroughly edited and fully composed art is better or worse than spontaneous artforms like jazz and bop-prose. My point is just that spontaneous modes of creation akin to ‘play’ can potentially externalize more honestly the private and elusive inner processes occurring in the lawless relational environment of the mind.

It has been a good 70 years since these creative practices surfaced within the avant-garde art scene, and the technologies at society’s disposal for externalizing thought have improved tremendously, especially due to the massive sea of networked image and video objects available to the average internet user. Further, a concentrated development of skills in computer programming may be analogous to the level of discipline needed to harness artistic technics like the saxophone, piano, pencil, paper, typewriter, voice recorder and so on. With this long-winded explanation in mind, these ideas are very much the backbone of my inspirations in new media art and what I wish to explore in this 3-cycle project.

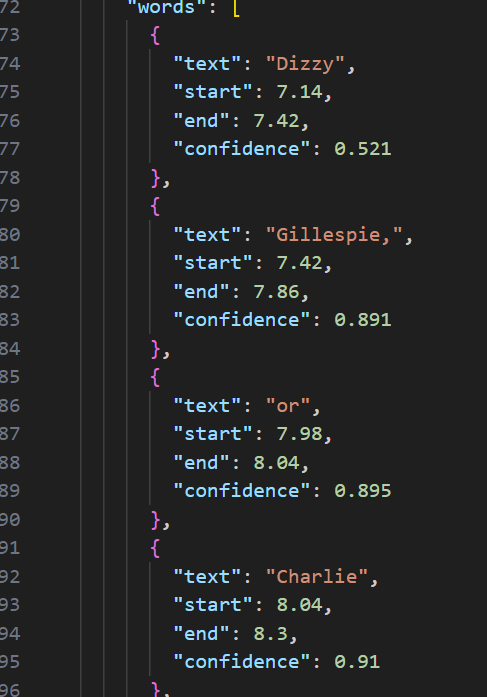

In cycle 1, I set out to visualize the final section of History of Bop. I’ve listened to this poem recited countless times and did a lyrical transcription, meaning I listened to the recording and wrote out all of its words. This is a common practice among jazz musicians and writers since it helps internalize language. Next, I followed Dmytro Nikolaiev’s implementation of a Vosk speech-recognition model in Python to convert the audio file into a transcribed-text JSON file which contained the words and their position in time as spoken.

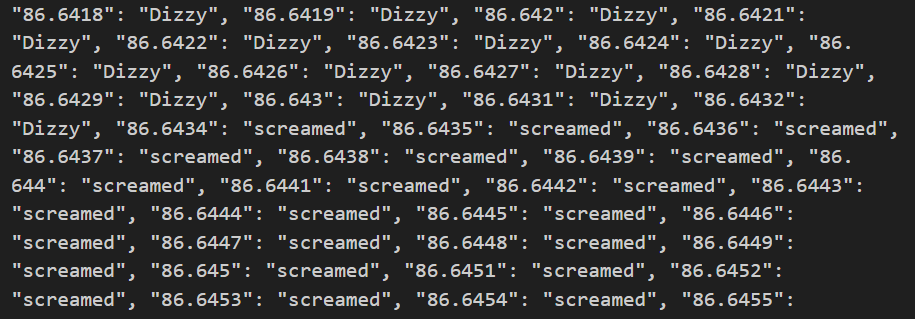

Not all the words were accurately transcribed, so I had to correct them manually; speech-recognition models are good but not nuanced enough to decipher all spoken phrases, especially from an unconventional speaker like Kerouac. Further, I did some post-processing of the data to get it into a form which would play nicely with Isadora’s JSON parser actor. The format involved key-value pairs of timestamps and words spoken. Through experimentation, I found that repeating each word in the JSON list at roughly millisecond (ms) intervals ensured that it would appear onscreen and remain illuminated consistently while the corresponding audio is spoken. I found that although the resolution of the timecode variable of the movie player actor was at the ms scale, it didn’t increment consistently enough to predict the values it would trigger. Consequently, having a large and widespread array of timestamps between the start and end of a word ensures that it will be triggered. Additionally, Isadora works in percentages between 0 and 100 rather than in the time durations typical of video. So I had to convert each timestamp into a percentage of the total length of the audio clip.
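The post-processing described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the script used for the project: each transcribed word is assumed to be a dict with `word`, `start`, and `end` (in seconds), and the output shape (percent strings as keys, words as values) is my guess at a form a JSON parser could look up. The function names and default step are hypothetical.

```python
import json


def words_to_percent_keys(words, total_s, step_s=0.001, decimals=3):
    """Map percent-of-clip positions to the word spoken at that position.

    Each word is repeated at every `step_s` between its start and end, so a
    player whose position skips values still lands on an entry for the word.
    """
    table = {}
    for w in words:
        n_steps = int(round((w["end"] - w["start"]) / step_s))
        for i in range(n_steps + 1):
            t = w["start"] + i * step_s            # time in seconds
            pct = round(100.0 * t / total_s, decimals)  # position as 0-100 percent
            table[f"{pct:.{decimals}f}"] = w["word"]
    return table


def save_table(table, path):
    """Write the lookup table to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(table, f, ensure_ascii=False)
```

If two words produce the same rounded percentage, the later word wins in this sketch; a finer `decimals` value reduces such collisions at the cost of a larger file.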

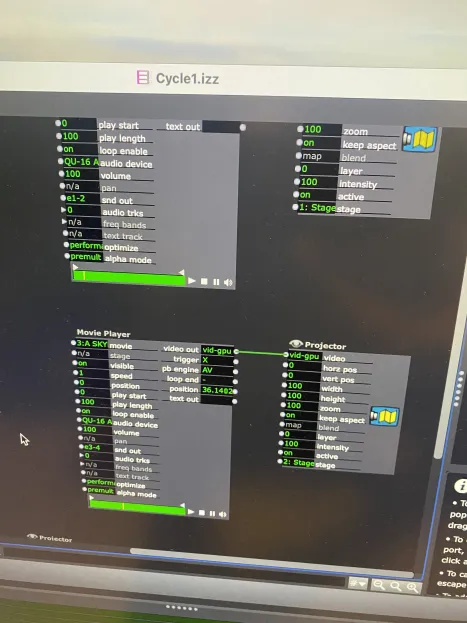

This allowed me to funnel the current position of a playing audio file through to the JSON parser actor, such that as the timecode increments, the transcribed text displays onscreen exactly in time with the recited poetry. This was exciting on its own, as it was a semi-autonomous method for generating lyric videos. Also, the style of the text was strobe-like, giving it the quality of spoken words: they appear and vanish in an instant. See below the media circuit implemented in Isadora (with the image player disabled) to see the flow of time triggering text and subsequently displaying it on screen.

The next stage in the process was to find imagery representing the ideas that Kerouac is expressing. Following Kerouac’s ‘Setup’ step (‘The object is set before the mind, either in reality, as in sketching (before a landscape or teacup or old face) or is set in the memory wherein it becomes the sketching from memory of a definite image-object.’), I used Kerouac’s speaking of the words as the stimulus (object) for mental imagery. Once an image or set of ideas was established in mind through free association (‘mental image blowing’), I would search Youtube to find a clip which best represented this mental image. Instead of using one of the many available, ad-prone sites for converting Youtube videos to .mp4 files, I adapted a Python script using the yt-dlp/yt-dlp library to do so with better speed and precision. This allowed me to quickly find sections of videos and copy their video URL, along with start and end times, into a function which would download the video file with a specific name to a designated folder. This method allows more mental energy to be concentrated on thinking of images and finding existing internet representations rather than on downloading and cutting the video segments. In this way a quick flow can be achieved, better mimicking Kerouac’s spontaneous prose method. To add, just as writing is a negotiation between image thoughts and the language available to one’s tongue and fingers at that moment, video-bop (a new term for this method) is a negotiation between a visualized animation and the medium of available images and videos online. This medium includes not only the content available and the form it takes, but also the algorithmic recommender process personalized by the user’s previous internet activity. In this intentionally fast-paced creative process, one relies heavily on differentiating search terms to converge on an appropriate visualization.
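A download helper along these lines might look like the sketch below. It is not the adapted script itself: instead of calling the yt-dlp Python API, it shells out to the `yt-dlp` command line, using its `--download-sections`, `--force-keyframes-at-cuts`, `--remux-video`, and `-o` options. The function names, the `clips` folder, and the choice to remux to mp4 are assumptions, and running it requires yt-dlp and ffmpeg to be installed.

```python
import subprocess
from pathlib import Path


def fmt_time(seconds):
    """Format whole seconds as H:MM:SS for yt-dlp's section syntax."""
    s = int(seconds)
    return f"{s // 3600}:{(s % 3600) // 60:02d}:{s % 60:02d}"


def build_clip_command(url, start_s, end_s, name, out_dir="clips"):
    """Build a yt-dlp command that fetches only [start_s, end_s] of a video."""
    out_template = str(Path(out_dir) / f"{name}.%(ext)s")
    return [
        "yt-dlp",
        "--download-sections", f"*{fmt_time(start_s)}-{fmt_time(end_s)}",
        "--force-keyframes-at-cuts",  # re-encode around the cuts for accurate edges
        "--remux-video", "mp4",       # assumed target container
        "-o", out_template,           # file is named after the trigger word
        url,
    ]


def download_clip(url, start_s, end_s, name, out_dir="clips"):
    """Create the folder and run the command (needs yt-dlp and ffmpeg)."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_clip_command(url, start_s, end_s, name, out_dir), check=True)
```

Keeping the command builder separate from the function that runs it makes it easy to print and inspect the exact command before any download happens.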

When videos were found, they were named with the first ‘semi-unique’ word within the phrase they belonged to, and the length of the video was chosen to match the duration of that phrase as calculable from the initial audio transcription step. The grouping of phrases is at the video-bopper’s discretion and in accordance with the aesthetic sensibility inherent in jazz’s musical structuring. It is not necessary to find video images in the order in which they are spoken. I hopped around freely between Kerouac’s phrases and would encourage this approach, as it may follow the flow of thinking more closely and it builds natural structures of moments and transitions between moments. This idea was neatly phrased by Mark Turner, a modern tenor saxophone player, who says: “When I’m in the middle of a solo, whenever I am most certain of the next note I have to play, the more possibilities open up for the notes that follow.” (The Jazz of Physics, Stephon Alexander). To riff on Heisenberg’s uncertainty principle, a particle’s momentum and position cannot both be known exactly: the more precisely one is known, the less precisely the other can be. To extend this into the domain of thought and artistic expression, perhaps carelessly, it suggests that there is a tradeoff in awareness. When the improvisor’s awareness is tuned most closely to what idea should come next, they may be unaware of the larger artistic structure to emerge. In contrast, if the improvisor’s awareness is tuned to larger timespans and movements in the piece, they may have less awareness of the idea to come next.

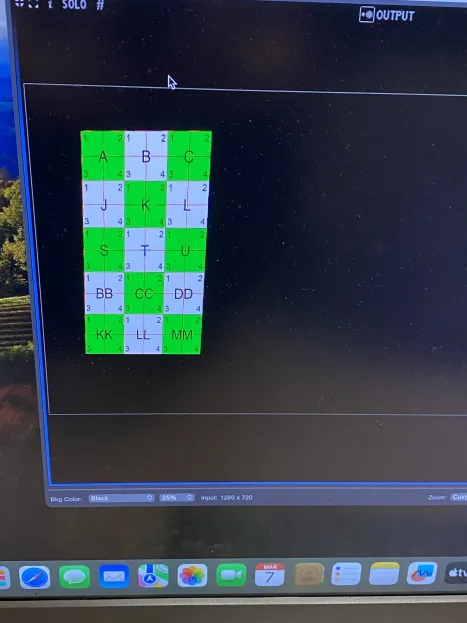

As a continuation on methodology, Isadora unfortunately doesn’t read file paths for media artifacts and instead relies on an internal numbering system assigned as files are imported into the project. To accommodate this structure, I manually updated a JSON object to convert between video trigger words and their index in Isadora. These index values are passed into Isadora’s movie player actor so that the videos are shown in time with the typography and spoken words.
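The word-to-index lookup could also be generated rather than edited by hand. The sketch below is hypothetical: it assumes the clips are imported into the project in exactly the order of the list and that numbering starts at `first_index`, both of which would need to be checked against the actual project.

```python
def build_index_map(trigger_words, first_index=1):
    """Assign each trigger word the media index it would get if the clips are
    imported in exactly this order (an assumption, not verified behavior)."""
    return {word: first_index + i for i, word in enumerate(trigger_words)}
```

The resulting dict can be written out with `json.dump`. Since clips are named after a ‘semi-unique’ word, a repeated word would silently take the later index here, so the list should be checked for duplicates first.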

Upon showing the project to my classmates and delving into ideas on how it could be more interactive for cycles 2 and 3, I gathered many important suggestions that form the next direction:

- Jiang – Words that are action oriented may be good to include for transitions between images (e.g. ‘turn’).

- Afure – She liked my interpretation of the word ‘Dreaming’, although everyone would have a different interpretation of that word and of what image they’d select.

- There is a TikTok trend of going on Pinterest, searching for words, and displaying the image that is algorithmically connected with each.

- Kiki – Liked the subject matter. She teaches jazz dance, and it would be helpful to have a more interesting way to teach about this genre.

- Alex – Ways to add interactivity: how to automate image generation and allow user thoughts and personalities to be included. Potential for a custom web-app.

- Nathan – Would have liked to click on links related to the content being shown, as a way to learn more about each part (as informative).

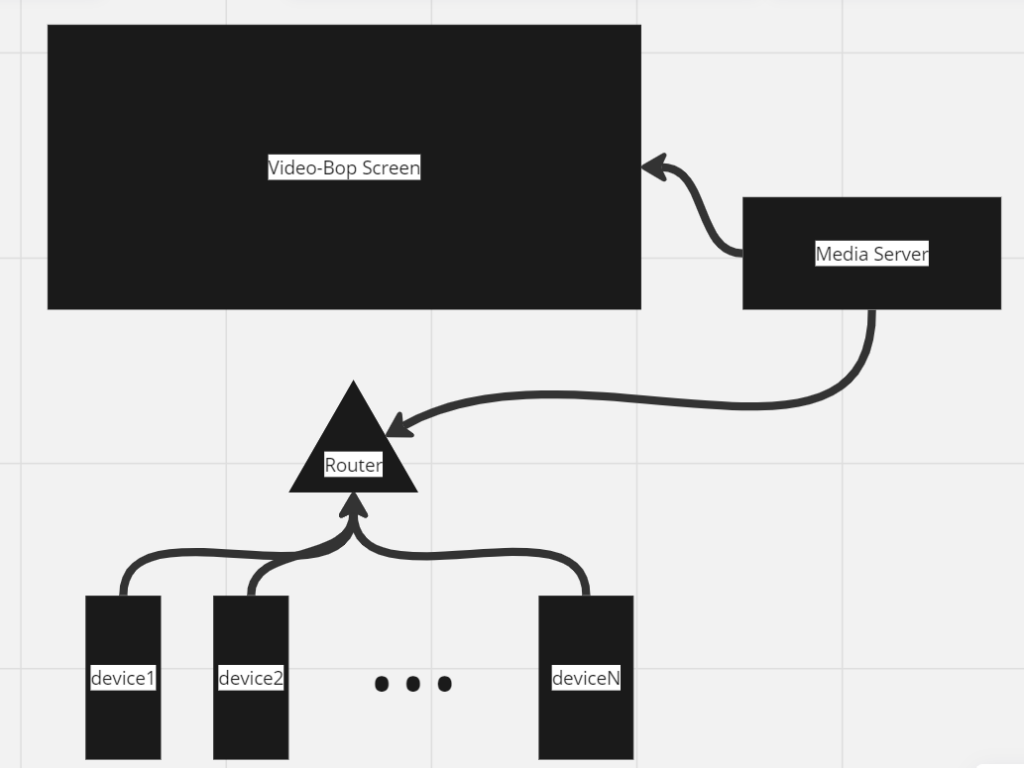

With these suggestions in mind, I plan to explore the Dispatcher from python-osc (python-osc 1.7.1 documentation) to build a simple server-hosted web interface open to smart-device users connected to a LAN. A spoken word poem should be found and distributed to each audience member’s device. As an experience, there should be a listening period in which the audience engages with their own forms of active imagination to see what phrases catch their ears and what images become naturally available to them. From there, they should go through the video-bop process and find a clip that matches what they’ve imagined. Then they will connect to the media server and paste the link to that video, the start and end time, and the phrase it connects to. The media server will need to collect these audience responses and run the YouTube extraction script to grab all the associated artifacts and make them available for rendering in time with the spoken word poetry. This is the direction I envision for cycle two and a diagram of how I see the interaction occurring:
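Separately from the diagram, the collection step could be sketched roughly as follows. This is only an illustration of validating one audience response (link, start time, end time, phrase) before it is handed to the extraction script. The `Submission` class, the function names, and the example link are all hypothetical, and the web interface and OSC parts are left out.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


def parse_timestamp(text):
    """Convert 'SS', 'MM:SS' or 'HH:MM:SS' into a number of seconds."""
    seconds = 0
    for part in text.strip().split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


@dataclass
class Submission:
    """One audience response (hypothetical structure)."""
    url: str
    start: int   # clip start, in seconds
    end: int     # clip end, in seconds
    phrase: str  # phrase of the poem the clip belongs to


def make_submission(url, start, end, phrase):
    """Validate the raw form fields and return a Submission."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("expected a full http(s) link")
    start_s, end_s = parse_timestamp(start), parse_timestamp(end)
    if end_s <= start_s:
        raise ValueError("end time must come after start time")
    if not phrase.strip():
        raise ValueError("phrase must not be empty")
    return Submission(url, start_s, end_s, phrase.strip())


# Placeholder link and phrase, for illustration only.
sub = make_submission("https://www.youtube.com/watch?v=EXAMPLE", "0:12", "0:20", "example phrase")
print(sub.end - sub.start)  # clip length in seconds
```

Rejecting malformed responses at this point keeps the extraction script from being run on links or time ranges it cannot use.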

Cycle 1 – Unknown Creators

Posted: April 9, 2024 Filed under: Uncategorized

For my final project I knew I wanted to do a perspective piece of some sort after my work on the last project. After thinking about it for a while, I decided that I would go back to a social issue that occurs in the games industry and in other media that require large teams of people. There is a phenomenon where the creation of a piece of art is attributed to one person, even though we know that there are more people behind the scenes. One might refer to a movie as a Hitchcock or Tarantino film, or a play as something by Sondheim. The individuals who get referenced, mostly male, are often leads in some way (main writers, directors, etc.), and they often get more screen time and interviews. The games industry is no different, and there are several big names that get thrown around: Hideo Kojima, Hidetaka Miyazaki, Shigeru Miyamoto, Warren Spector, the list goes on. In order to convey this issue to those who don’t know much about games, I wanted to take an example of a revered creator and juxtapose that person with some others who are relatively unknown: people who did programming, mocap, animation, etc.

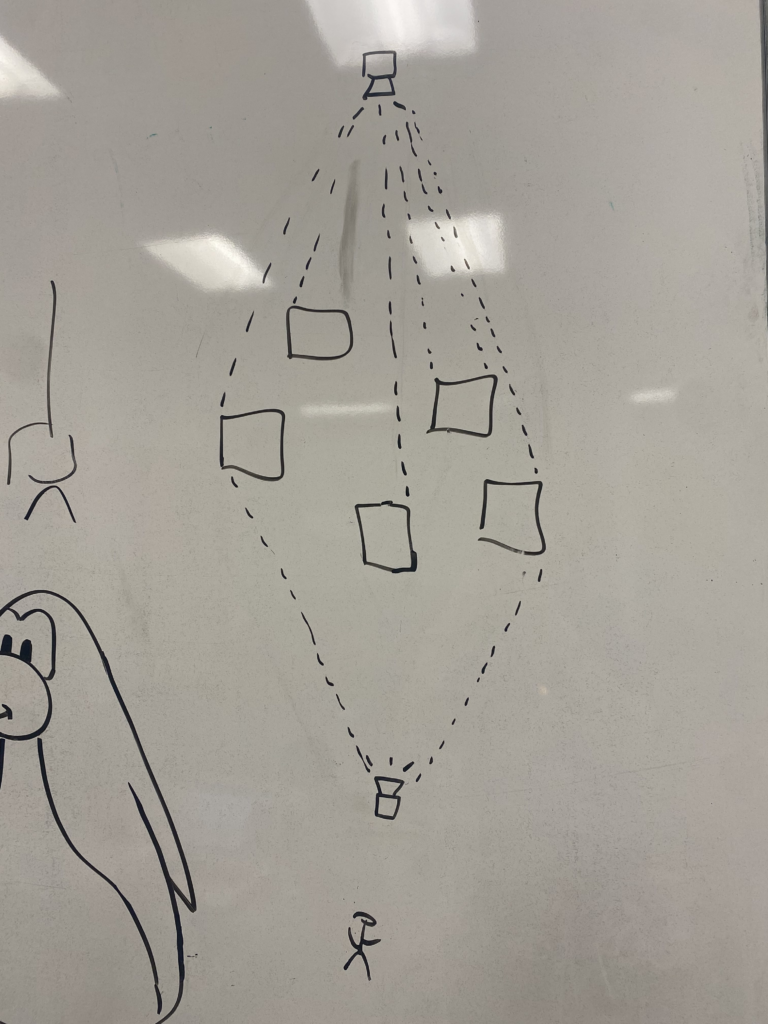

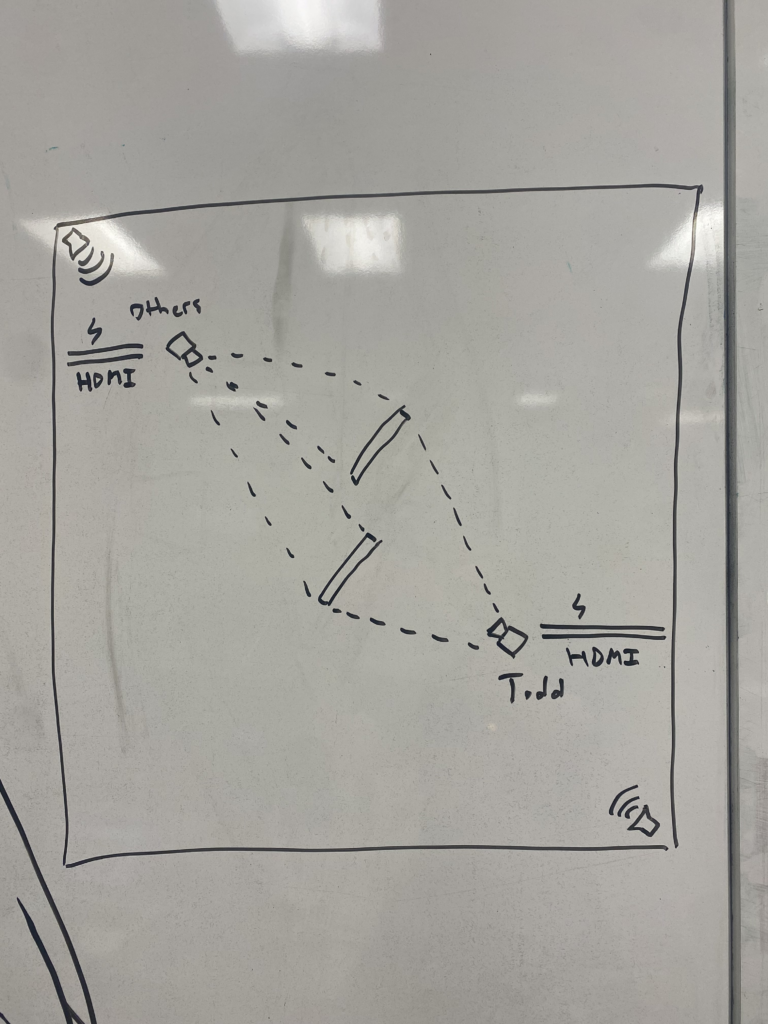

In my piece I wanted to focus on a studio that I know quite well, Bethesda, and its lead designer Todd Howard. I found a short interview from Game Informer about his life and how he got into the industry, linked here: How Skyrim’s Director Todd Howard Got Into The Industry (youtube.com). For the people who are lesser known, I am pulling footage from some documentaries made by ex-employees at Bethesda: How Bethesda built the worlds of Skyrim and Fallout (youtube.com), and A SKYRIM DOCUMENTARY | You’re Finally Awake: Nine Developers Recount the Making of Skyrim (youtube.com). In terms of setup, my idea looked a little like this:

Essentially I wanted to have some tall boxes set up in the center of a space (ideally the motion lab). On one side of these boxes, there would be a large projection of Todd Howard, with the footage stretched over all the boxes so that the image would only become clear when looked at as a whole. On the other side of the boxes, individuals who worked at Bethesda would be projected, one person per box. The Isadora part is fairly simple, just a bunch of projection mapping and some videos; the setup and space would really be the big issues.

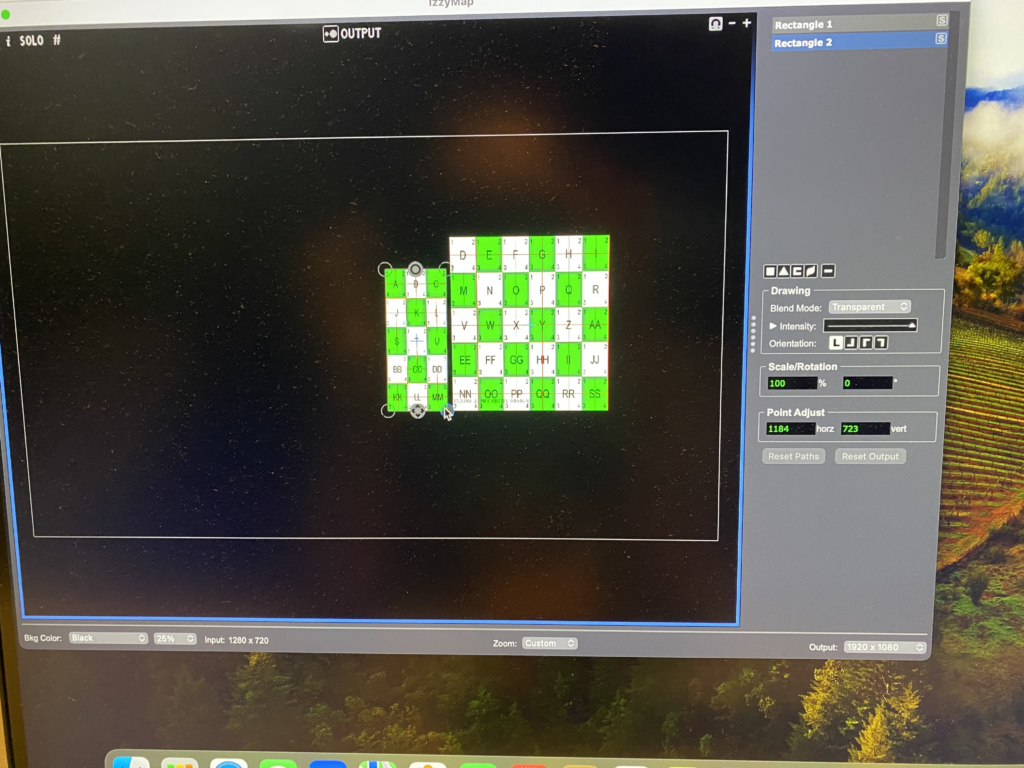

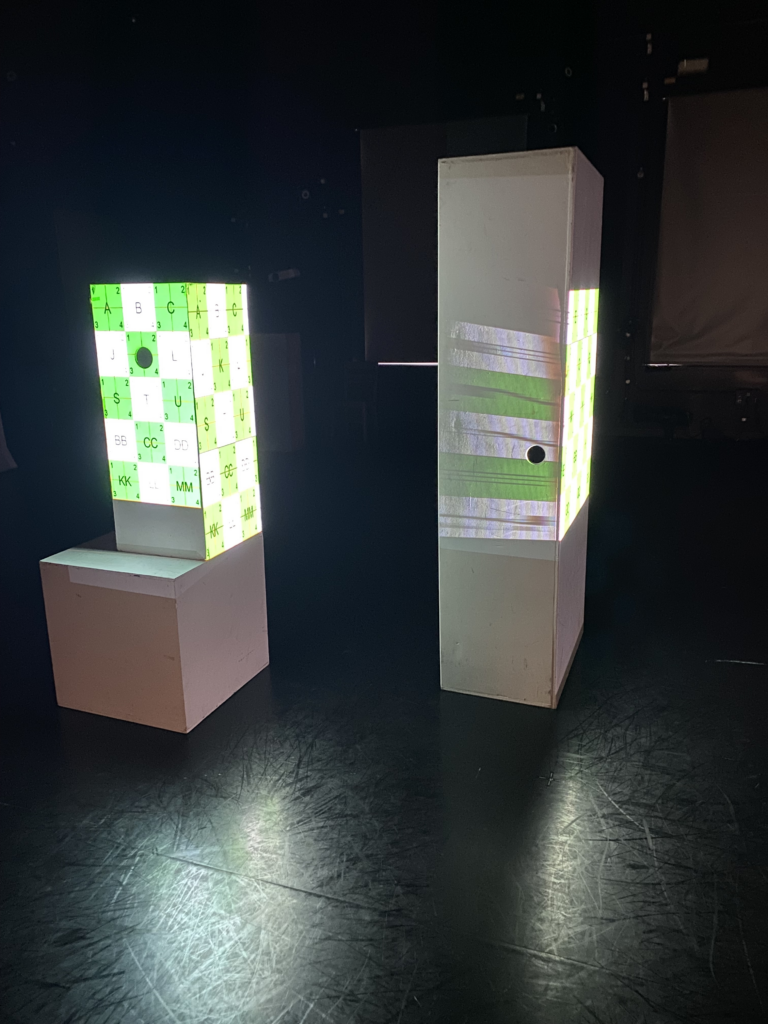

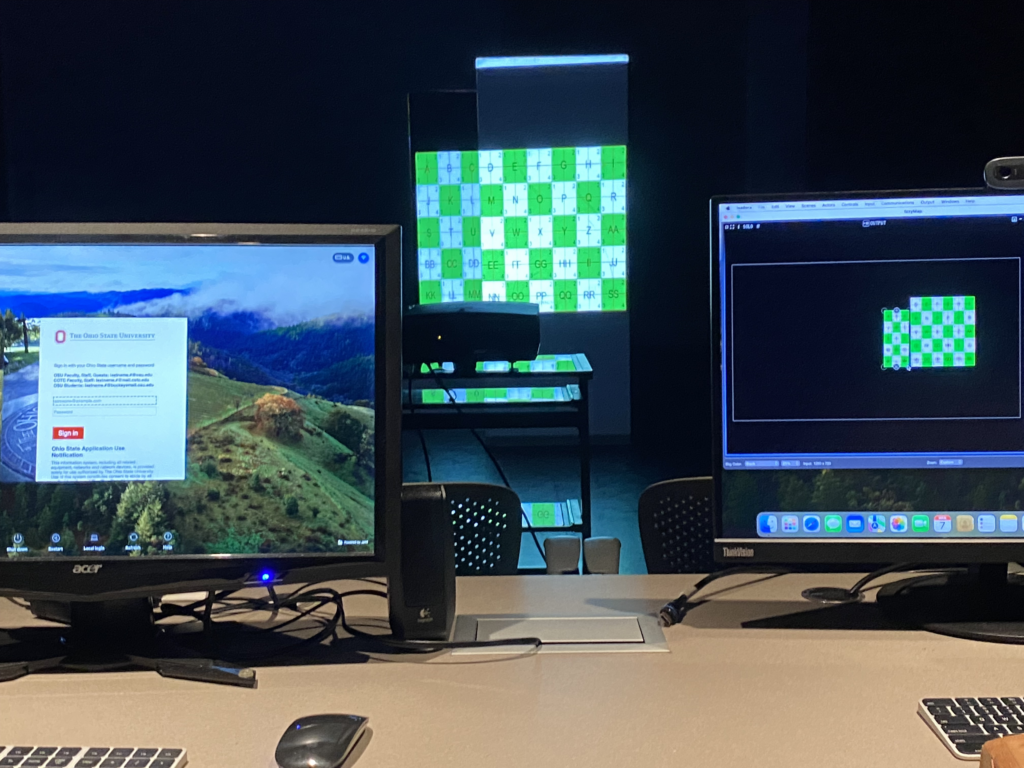

One of the first things I did was go into the motion lab and establish what sorts of resources I would be using, as well as try to get some projection mapping working just to get familiar with the process and environment; Michael Hesmond was very helpful in this regard. I was going to need to use the Mac Studio so that I could use two projectors at once. We decided to try two different kinds of projectors, a short-throw and a long-throw, just to see if one would be better than the other. After getting everything set up and using the grid, I got something that looked like this:

I was technically projecting onto the sides of the boxes rather than onto opposite faces, but this was okay; I really just needed a proof of concept, and I also wanted to see how pixel smearing would look. After doing all this, I determined that I would need these resources:

- 2x Power Cables for the projectors

- 2x Extension cables that would plug into the wall

- 2x HDMI cables

- 2x Short-throw BenQ projectors. Both projector types worked fine, but I wanted to use the short-throws because their color was better and the space was relatively small

- The motion lab switcher, this was important for getting both of the projectors working; we had to do a little debugging to make sure the projections were going to the correct outputs

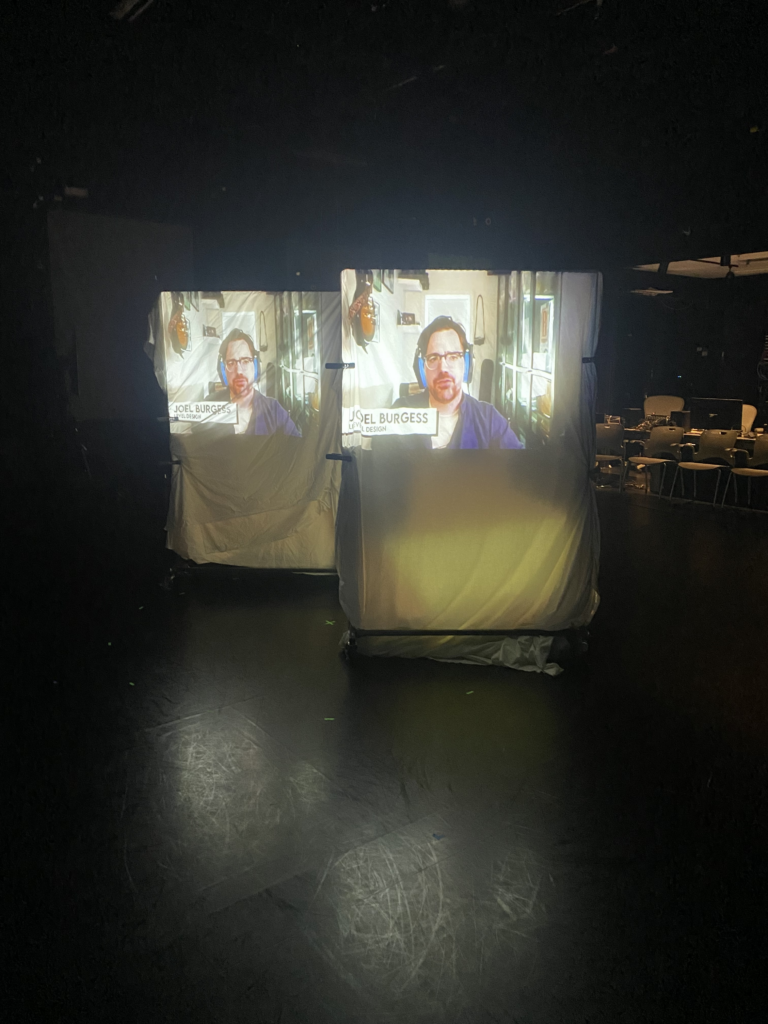

The only resources I wasn’t sure about were the boxes. There weren’t a lot of them, and I wanted more uniform shapes, so this would have to be reworked. When I came back, I did a bit more work thinking about the setup and resources with Nico and Michael, and I got another setup going:

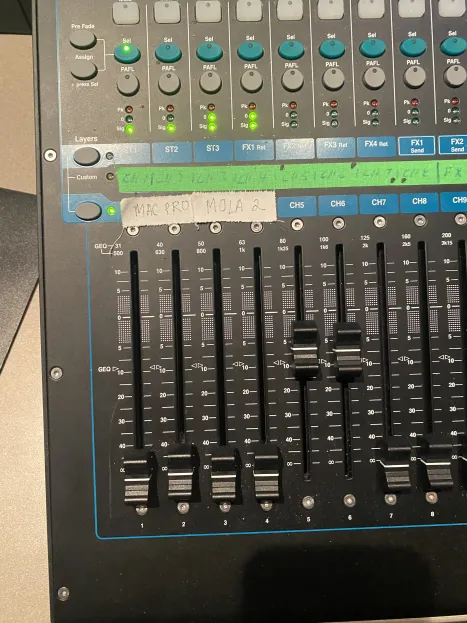

This time, the setup was moved diagonally. I wanted to do this to give myself more space to work with, and because once audio was incorporated I needed speakers in different locations to play it from. Instead of boxes, Nico had the great suggestion of using some draped sheets, so we took them and folded them over some movable coat racks. I also played with getting audio to play out of different speakers. I only wanted sound to come out of the back left and the front right speakers. Isadora gave me a little bit of trouble: the snd out parameter for my movies had to be set to e1-2 for the front speakers and e3-4 for the back speakers, and then the panning needed to be adjusted so that audio would only play out of a specific speaker. After that was figured out, though, we saved my audio settings to the sound board so that they could be loaded up quickly later.
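To keep track of the routing described above, it can help to write it down as a small lookup table. The sketch below is only a note-taking aid, not something Isadora reads. The e1-2 and e3-4 pairings come from the setup above, while the assumption that panning hard left or hard right reaches the left or right speaker of each pair is mine.

```python
# snd out values come from the setup described above;
# the left/right pan directions within each pair are an assumption.
SPEAKER_ROUTING = {
    "front left": ("e1-2", "left"),
    "front right": ("e1-2", "right"),
    "back left": ("e3-4", "left"),
    "back right": ("e3-4", "right"),
}


def routing_for(speaker):
    """Return the movie settings needed to isolate one speaker."""
    snd_out, pan = SPEAKER_ROUTING[speaker]
    return {"snd out": snd_out, "pan": f"hard {pan}"}


# The two speakers used in this piece.
for speaker in ("back left", "front right"):
    print(speaker, routing_for(speaker))
```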

For the cycle 1 presentation, I took a quick video:

I got a lot of great feedback about peoples’ thoughts and feelings on this project:

- Jiara felt that there was a resonance between the tone of the voice and the quality of the fabric, or perhaps the wrinkly appearance of the fabric. It seemed that for one coat rack the fabric was more pristine and ironed, while on another the fabric was more crumpled, which could connect to the quality of audio or perhaps the autobiographical nature of the footage

- There was some confusion about the meaning of the piece, for many the relationship between the people and footage wasn’t clear. Was it connected? Was there a back and forth? It almost seemed like one side of the footage was the interviewer and the other side was the interviewee

- People liked the spatial aspect of what was going on. Alex noted that the sound could be disorienting because there are multiple sources facing each other, which is a very good thing to note. Michael and I had talked about this briefly, and the issue could potentially be solved by placing the speakers under the coat racks and having them project in opposite directions, rather than towards each other. But one good thing about this audio setup was that it created dedicated viewing positions, since the audio would be less distracting in the corners where the perspective was best framed. Moreover, finding spots where both sets of footage could be viewed and heard was fun

- The biggest thing is that the messaging isn’t clear for those that don’t have context about these people. In general, there needs to be some conveyance of information about these people’s backgrounds, positions, roles, etc. and there could be more done to imply that one person is more well-known than the others. For example, maybe there is more ornate framing around Todd, maybe there is something about the volume of the space that could be played with, maybe the footage of the normal developers is jumbled and fragmented

- Someone suggested having the racks move, which would be really interesting though I don’t know how that would work haha

PP3/ Cycle 1- Rhythm Imposter

Posted: April 7, 2024 Filed under: Uncategorized

For my cycle 1 I was inspired by the game Among Us. I wanted to put a twist on the game and use sound and body movement to help players decide who was the imposter and who wasn’t. I immediately knew I needed multiple headphones, but I struggled to figure out where to plug these headphones in so that Isadora could send sound to them. I realized that I was going to need a multichannel sound system that would let me connect multiple headphones. I first used a different version of the MOTU UltraLite mk3 Hybrid sound system that had 8 channels on it. It was pretty simple to use, but when I connected it to my computer and tried to send the sound through, it was only reading 2 channels. It took a couple of classes, reading the manual, and asking Alex for help, but I solved the problem.

First, I had each player choose 1 number, and that number would assign their role as a “regular” or an “imposter”. Here is my patch for where everyone chose their roles:

Here is my patch for getting the sound to be sent through different channels. Again in this instance I had 1 song going to 4 channels (headphones) and another song going to 2 channels (headphones).

The purpose of this cycle was to get the MOTU sound system working and to solve the problem of sending different sounds or songs through different channels. My first struggle was that I could only get 2 channels working; however, after switching to a bigger MOTU sound system and making sure Isadora read 8 channels, I was able to achieve my goal.
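The logic of the split (one song to 4 headphone channels, another song to 2) can be sketched outside of Isadora like this. It is not the patch itself. It assumes six headphone channels numbered 1 to 6, assumes that the two imposters are the ones who hear the second song, and uses hypothetical names throughout.

```python
import random


def assign_songs(channels, imposter_count, rng):
    """Pick which channels hear the imposter song; the rest hear the regular song."""
    imposters = set(rng.sample(channels, imposter_count))
    return {
        channel: "imposter song" if channel in imposters else "regular song"
        for channel in channels
    }


channels = [1, 2, 3, 4, 5, 6]  # assumed headphone outputs on the interface
assignment = assign_songs(channels, imposter_count=2, rng=random.Random())

for channel, song in assignment.items():
    print(f"channel {channel}: {song}")
```

Passing in the random generator makes it easy to seed it when the same assignment needs to be reproduced for testing.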

Cycle 1: Personal Metrics

Posted: April 4, 2024 Filed under: Uncategorized

Overview:

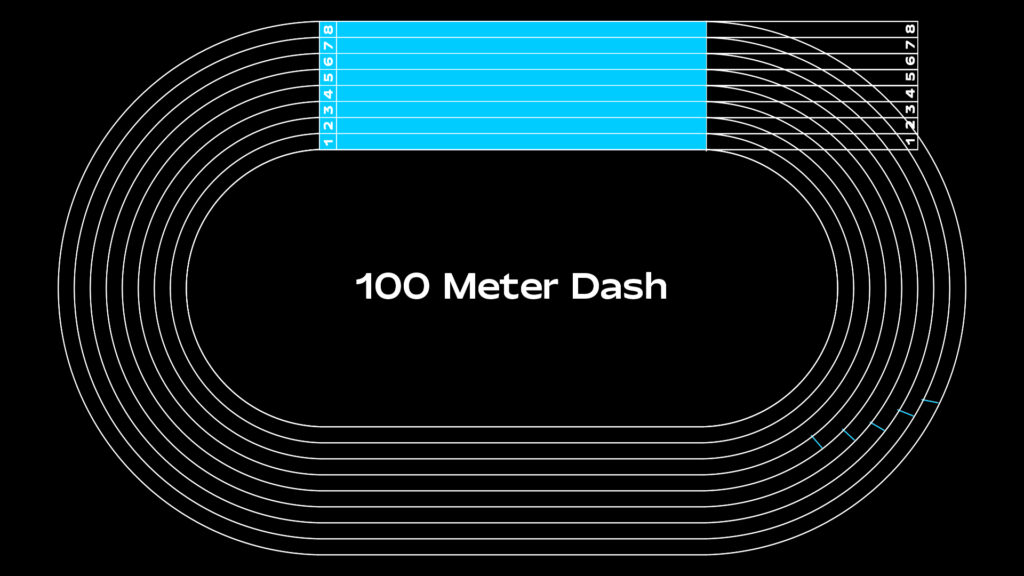

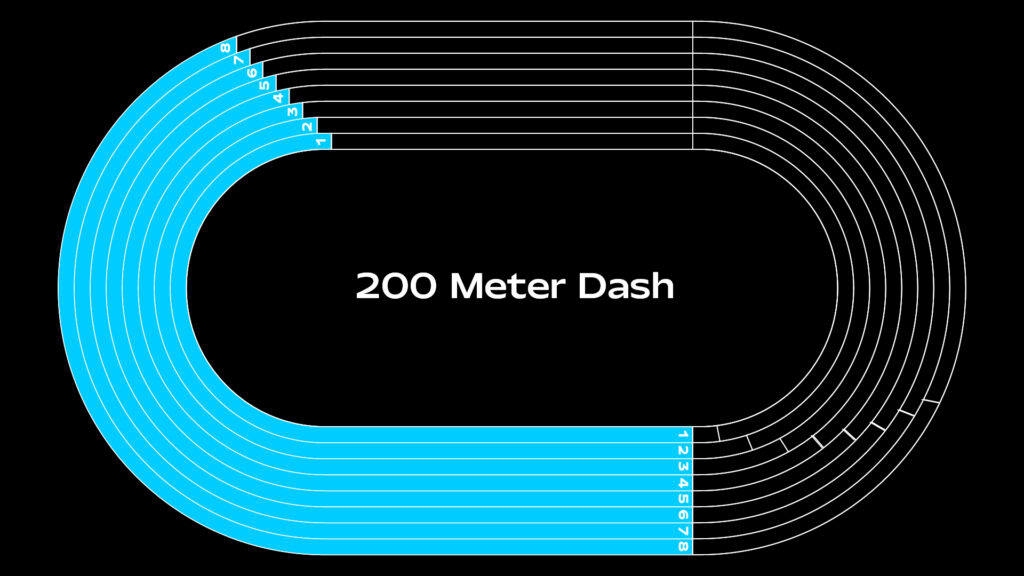

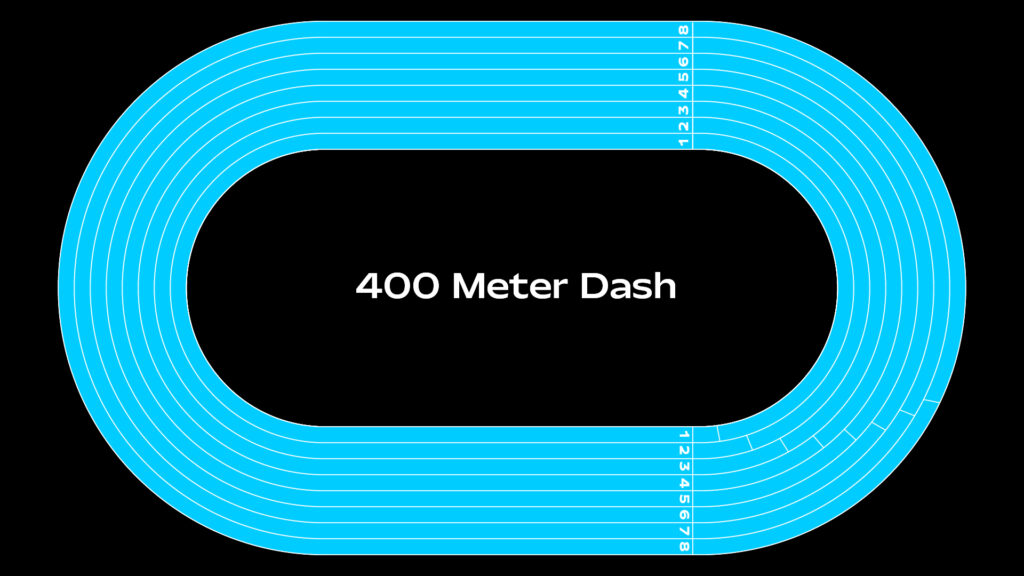

Cycles 1-3 aim to explore the data behind providing personalized running phase zones to a sprinter based on user input, eventually leading to efficient training strategies that improve performance.

Implementation:

The project will utilize Isadora’s control panel feature for interactive user input (Cycle 1). Algorithms for phase zone calculation will be developed, drawing on secondary research for running phase terminology and incorporating height as a variable (Cycle 2). The system will be designed to present users with clear and actionable results, potentially visualizing phase zones on a track diagram for better comprehension (Cycle 3).

Cycle 1 Features:

- User Input Interaction: Utilizing Isadora’s control panel feature, users will input their fastest running time, goal time, and height, providing essential data for the calculation process that I will be workshopping in Cycle 2.

- Personalization Based on World Records: Users will be able to set their personal best time and goal time, with reference ranges derived from the current Men’s and Women’s world records for sprint events (100m, 200m, and 400m dashes).

- Height Adjustment: Height will be factored into the calculation process, potentially influencing the distribution of running phase zones to accommodate individual physical characteristics.
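As a rough illustration of how the world-record reference could bound the user input, here is a minimal sketch. The record values are the outdoor world records as I understand them at the time of this post and should be double-checked. The function name and the specific checks are hypothetical and are not part of the Isadora control panel.

```python
# Outdoor world records in seconds (values as understood at the time of writing).
WORLD_RECORDS_S = {
    ("men", 100): 9.58,
    ("men", 200): 19.19,
    ("men", 400): 43.03,
    ("women", 100): 10.49,
    ("women", 200): 21.34,
    ("women", 400): 47.60,
}


def validate_times(category, distance_m, personal_best_s, goal_s):
    """Return a list of problems with the entered times (empty if none)."""
    record = WORLD_RECORDS_S[(category, distance_m)]
    problems = []
    if personal_best_s < record:
        problems.append("personal best is faster than the world record")
    if goal_s < record:
        problems.append("goal time is faster than the world record")
    if goal_s > personal_best_s:
        problems.append("goal time is slower than the personal best")
    return problems


print(validate_times("men", 100, personal_best_s=11.2, goal_s=10.9))
```

Height is left out here, since how it feeds into the phase zone calculation is still to be worked out in Cycle 2.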