Cycle 1 (Amy)

Posted: December 14, 2023 Filed under: Uncategorized

Cycle 1: research and experiments. Initial title: ‘the things you don’t see’

October 11th goal: I would like someone’s presence, just their being, to be sensed. They react to the system as it reacts to them; the exchange is reciprocal and ongoing, a feedback loop of interaction.

Research: keywords and quotes from Quantum Listening by Pauline Oliveros

- ‘Listening field…’ – pg 13

- ‘Listening to their [audience] listening.’ – pg 16

- ‘Deep listening takes us below the surface of our consciousness and helps to change or dissolve limiting boundaries.’ – pg 38

- ‘What if you could hear the frequency of colors?’ – pg 40

Reflection:

In this initial stage, my desires were a jumble. I had the vague idea of creating an interactive audio-visual installation that ‘woke up’ to physical presence and responded generatively to subtle bodily movements. I was also very intrigued by the concept of deep listening and the philosophy of Pauline Oliveros during this time, particularly her work ‘Teach Yourself to Fly.’ In this work, a group of people sing together, responding to and diverging from the voices of those around them. The result is an organic, ever-shifting sound cloud that highlights breath, fluidity, and a state of impermanence.

Alex guided me by helping me sift through my thoughts and construct clear directives. First: how do I use the Orbbec to pick up movement, and how is that reflected in my Isadora programming? Second: how do I transfer this response to a lightbulb using a DMX box? Third: how does the motion translate into, or manipulate, the sound output?

In Cycle 1, I programmed the bare bones of motion and sound reactivity in Isadora AND got the lightbulbs to work, yay!

Cycle 3: Xylophone Hero at Fort Izzy

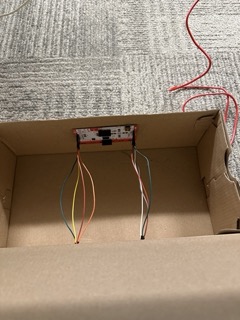

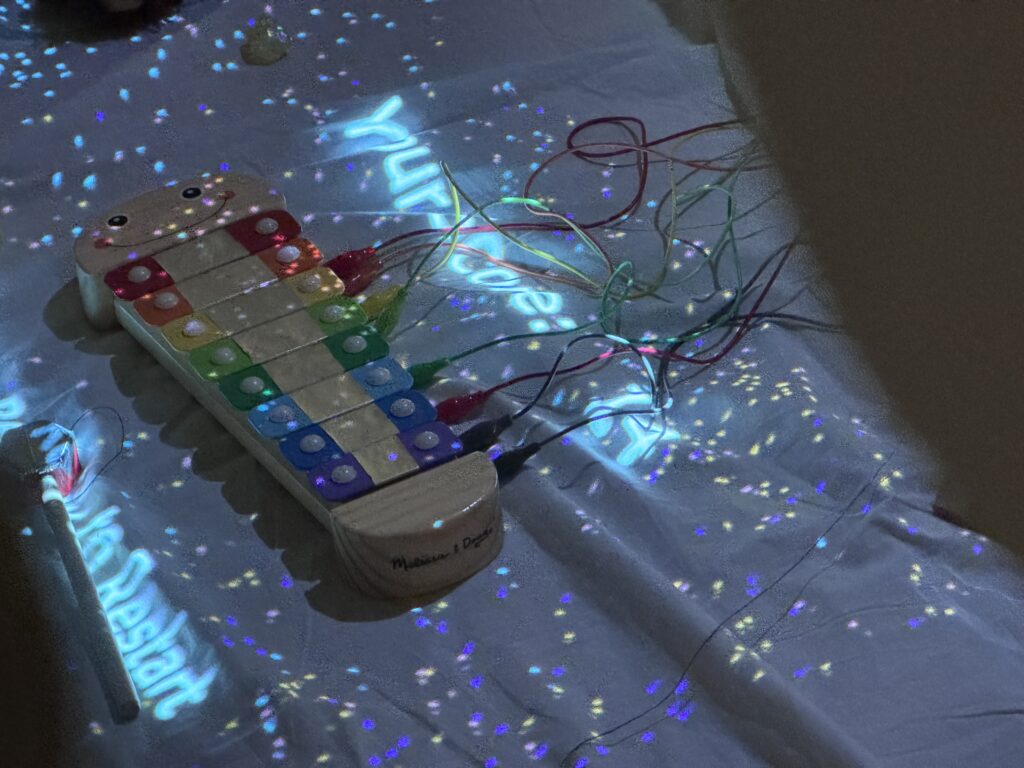

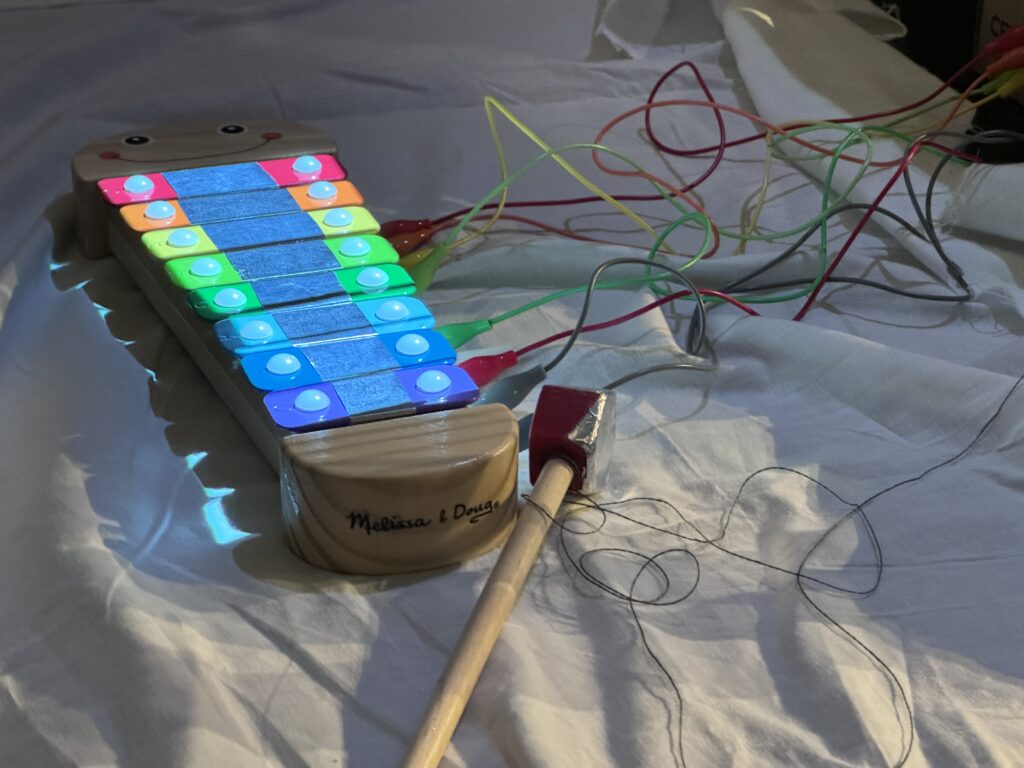

Posted: December 14, 2023 Filed under: Uncategorized

This Cycle I decided to focus on the physical presentation of my project. My first task was to connect my xylophone to Isadora using the Makey Makey. I wrapped foil around each key of the xylophone, then made “wires” out of foil that connected to each foiled key. Then I remapped the back inputs of the Makey Makey to the numbers 1-8 and attached the breadboard wires to the Makey Makey. I attached the Makey Makey to the inside of a shoebox and fed the breadboard wires through to the outside of the box. From there, I connected the alligator clips and the breadboard wires. To ground the mallet, I tied one end of some conductive string to Earth on the Makey Makey and tied the other end around the handle of the mallet, taping the extra string to the mallet. I then covered the top of the mallet in foil.

Before my Cycle 3 presentation, I mapped the output of my Isadora patch onto the xylophone and I set up a “blanket fort” around my projection in the Motion Lab. I also went through my patch to make sure everything was working as expected, and I added a start screen, an end screen, and a restart. I staged the fort with stuffed animals, playing cards, and other items that might be found in a children’s bedroom.

I am extremely happy with how this project turned out and I am so proud of what I produced. If I were to do more cycles, I would paint the shoebox to look more like a toy box, secure the bottom sheet to the floor, and I would figure out a way to hold a high score or display a scoreboard. I could also figure out a way to introduce game difficulties.

Cycle 2: Keeping Score

Posted: December 14, 2023 Filed under: Uncategorized

In between Cycle 1 and Cycle 2, I decided that my input device would be a children’s xylophone, which I would make work using the Makey Makey.

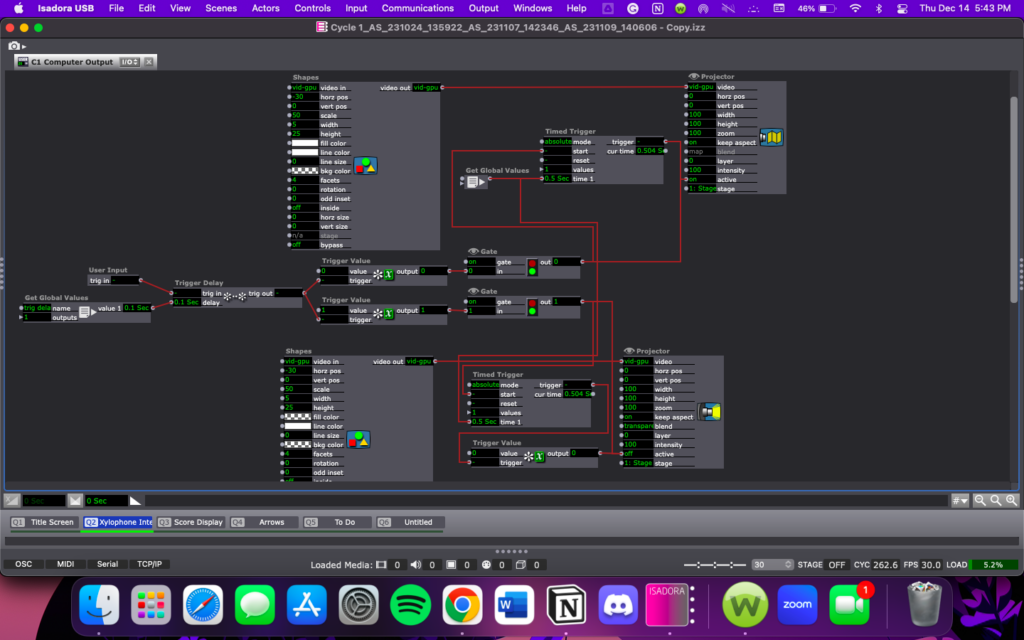

During Cycle 2, I spent most of my time working in Isadora. I wanted to make sure I had my Isadora patch working the way I wanted before I started any of the physical presentation elements. To start, I turned my four arrow shapes into rectangles, then added four more to simulate a xylophone.

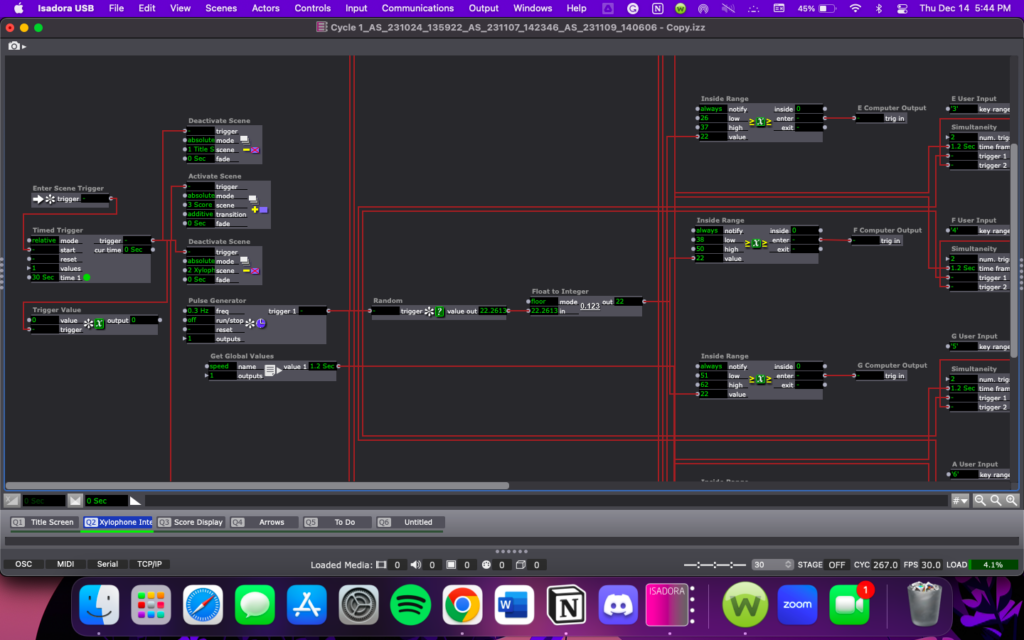

Next, I added a way for the computer to tell the player which key to hit. To do this, I started by duplicating the User Actors I had made for the previous rectangles. Each User Actor is almost identical to the blinking arrows in Cycle 1, but it takes input from a Get Global Values Actor inside the User Actor and from an Inside Range Actor outside of it. The outside mechanism uses an Enter Scene Trigger, Timed Trigger, Trigger Value, Pulse Generator, Activate and Deactivate Scene Actors, the Random Actor, and the Float to Integer Actor, as well as an Inside Range Actor connected to each of the duplicated User Actors. Both patch snippets are pictured below.

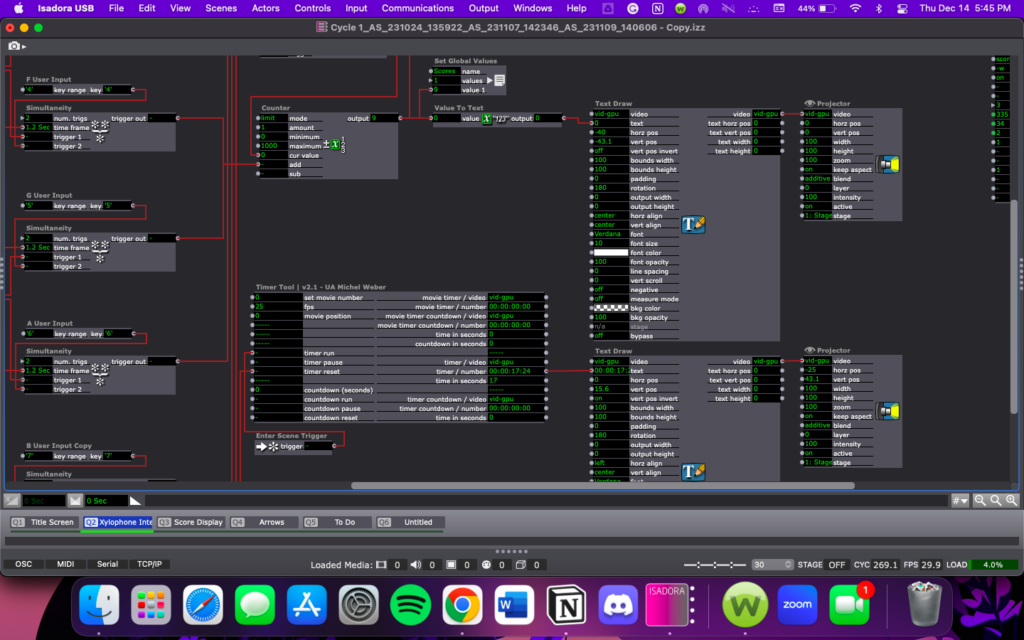
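The random-target logic in the patch above can be sketched outside Isadora to show how the pieces fit together. This is a minimal Python sketch, not the actual patch: it assumes a random value between 0 and 100 that gets scaled to the eight keys and truncated to an integer (the role the Float to Integer Actor plays), after which each key runs its own Inside Range check so exactly one bar lights up. The function names are hypothetical.

```python
import random

NUM_KEYS = 8  # the xylophone bars, mapped to keys 1-8 on the Makey Makey

def pick_target(rng: random.Random) -> int:
    """Scale a 0-100 random float to a key number from 1 to NUM_KEYS."""
    value = rng.uniform(0, 100)
    # Float-to-integer step: truncate, then clamp so 100.0 still maps to key 8
    return min(int(value / 100 * NUM_KEYS) + 1, NUM_KEYS)

def inside_range(value: float, low: float, high: float) -> bool:
    """True when value lies within [low, high], like an Inside Range check."""
    return low <= value <= high

def lit_keys(target: int) -> list[int]:
    """Each key's own range check (low = high = key) decides whether it blinks."""
    return [key for key in range(1, NUM_KEYS + 1) if inside_range(target, key, key)]

rng = random.Random(7)
target = pick_target(rng)
print(target, lit_keys(target))
```

Giving every key its own range check, rather than one central switch, mirrors the patch's layout of one Inside Range Actor per duplicated User Actor.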

I added a goal for the game: to score as many points as possible in one minute. First, I added a way for Isadora to track the player’s score using the Counter Actor. Then I added a game timer using the TimerTool Actor, downloaded from the Add-Ons page of the TroikaTronix website.
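The scoring rule (count correct hits until a one-minute timer runs out) can be sketched the same way. This assumes a list of timestamped key presses and a known sequence of targets; it only loosely mirrors what the Counter and TimerTool Actors do, and the names are hypothetical.

```python
from dataclasses import dataclass

GAME_LENGTH_S = 60.0  # one-minute round

@dataclass
class Press:
    time_s: float  # seconds since the round started
    key: int       # which bar was struck (1-8)

def score_round(presses: list[Press], targets: list[int],
                game_length_s: float = GAME_LENGTH_S) -> int:
    """Count correct hits before the timer expires.

    A correct hit increments the score (the counter's job) and advances
    to the next target; wrong keys are simply ignored in this sketch.
    """
    score = 0
    target_index = 0
    for press in sorted(presses, key=lambda p: p.time_s):
        if press.time_s >= game_length_s:
            break  # timer has run out; later presses don't count
        if target_index >= len(targets):
            break  # no more targets queued
        if press.key == targets[target_index]:
            score += 1
            target_index += 1
    return score

presses = [Press(1.0, 3), Press(2.5, 5), Press(3.0, 6), Press(61.0, 2)]
print(score_round(presses, targets=[3, 6, 2]))  # 2
```

The press at 61 seconds would have matched the third target, but it lands after the timer, so the score stays at 2.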

I also tried to add in a scoreboard feature, but it turned out to be much more difficult than I had anticipated, so a scoreboard is likely a Cycle 4 type of addition.

In Cycle 3 I am going to spend more time focusing on the physical presentation of my project. My goals are to get the xylophone connected to my Isadora patch, figure out how I want to set up in the Motion Lab, and projection map my Stage onto the xylophone.

Lawson: Cycle 3 “Wash Me Away and Birth Me Again”

Posted: December 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: Au23, Cycle 3, dance, Digital Performance, Isadora

Changes to the Physical Set Up

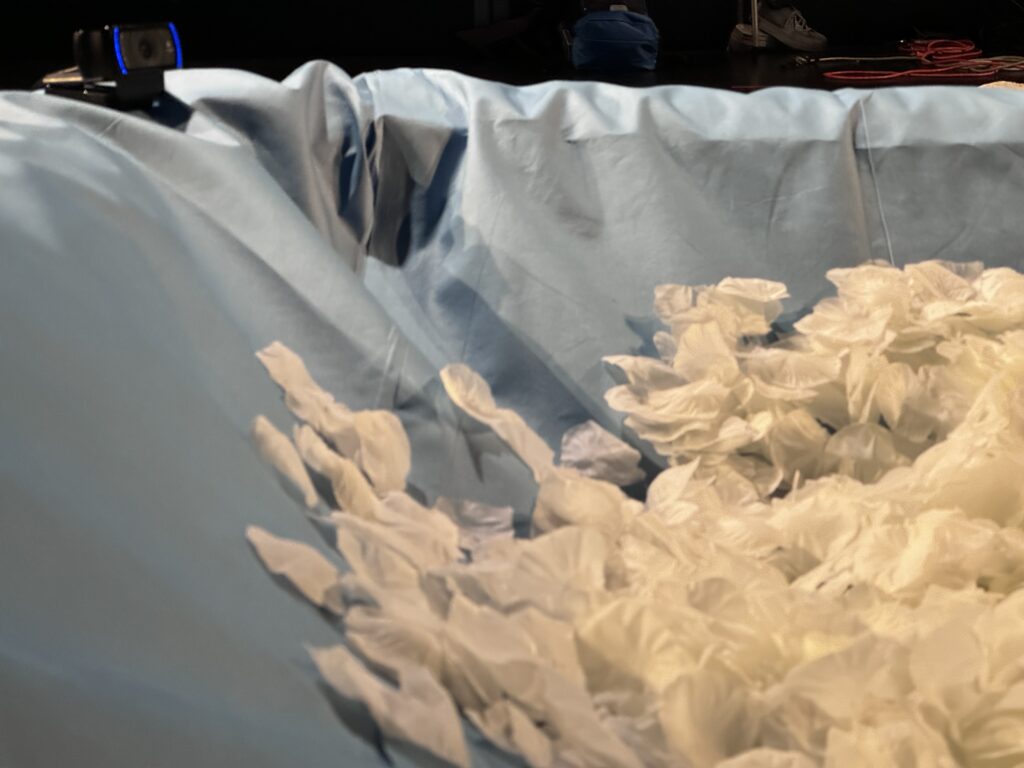

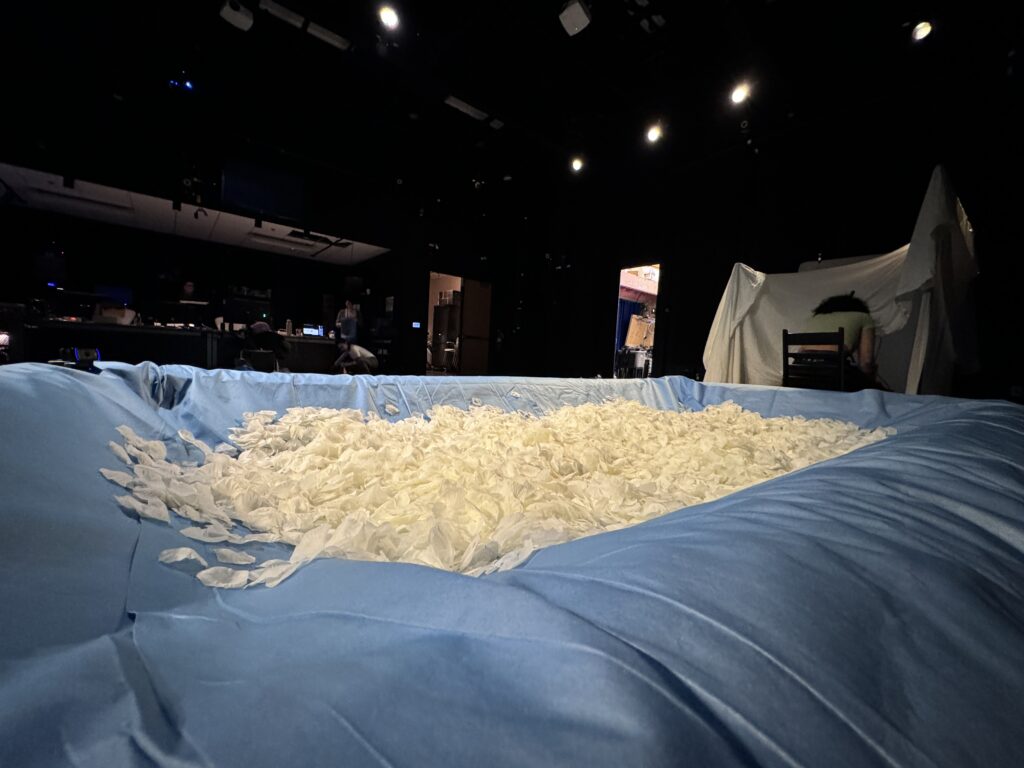

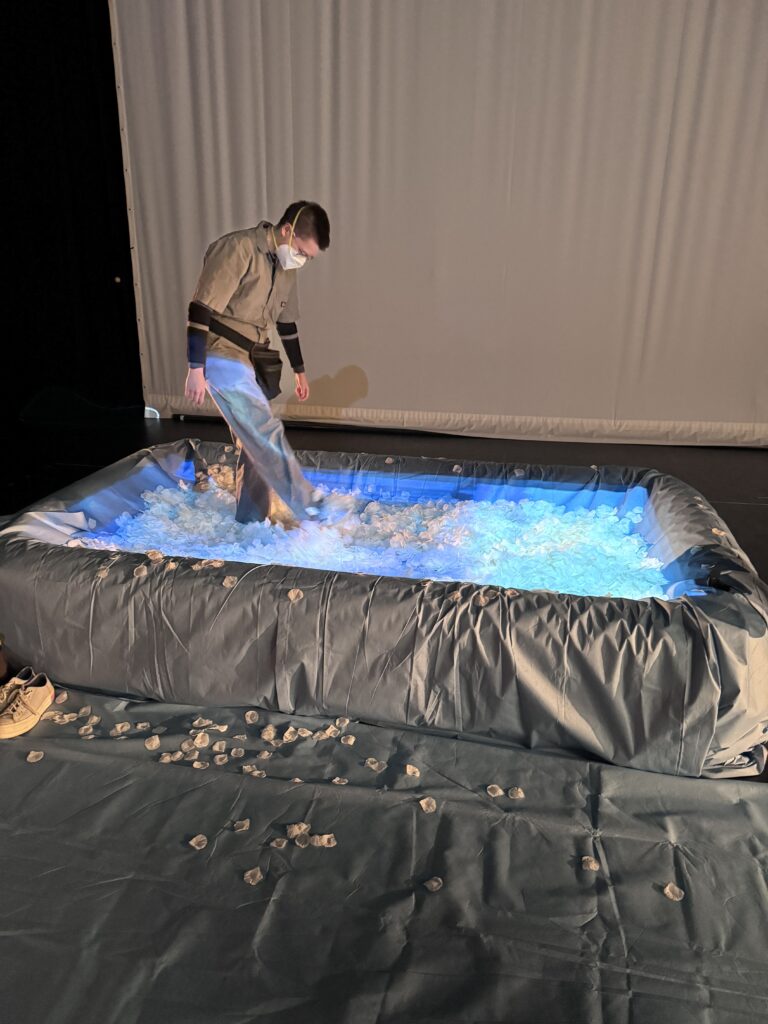

For cycle 3, knowing that I wanted to encourage people to physically engage with my installation, I replaced the bunched-up canvas drop cloths with a 6 ft x 10 ft inflatable pool. I built up the bottom of the pool with two folded wrestling mats, which made the pool more stable and reduced the volume of silk rose petals I needed to fill it. Additionally, I wrapped the pool with a layer of blue drop cloths. This reduced the kitschy, flimsy look of the pool, increased the contrast of the rose petals, and allowed the blue of the projection to “feather” at the edges so the water projection appeared more realistic. To further encourage the audience to physically engage with the pool, I placed an extra strip of drop cloth on one side of the pool and set my own shoes on the mat as a visual indicator of how people should engage: take your shoes off and get in. This also served as a place to brush the rose petals off your clothes if they stuck to you.

In addition to the pool, I also made slight adjustments to the lighting of the installation. I tilted and shutter-cut three mid, incandescent lights. One light bounced off of the petals; because the petals were asymmetrically mounded, this light gave them a wave-like appearance as the animation moved over them. The other two shins were shutter-cut just above the pool to light the participant’s body from stage left and stage right.

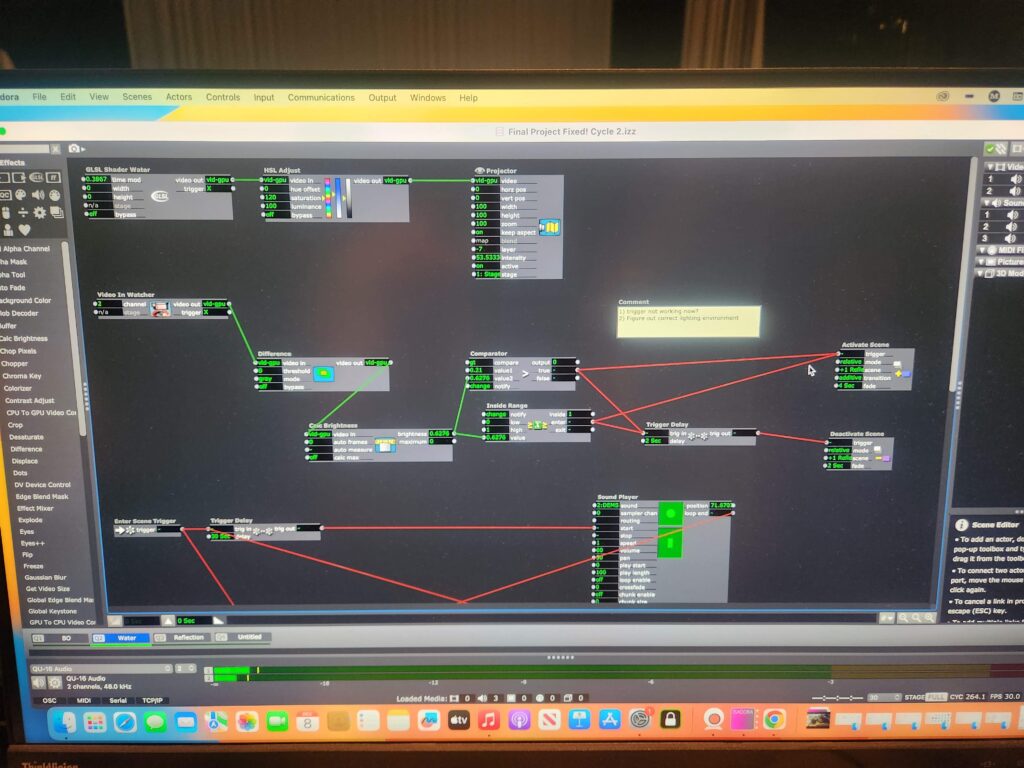

Changes to the Isadora Patch

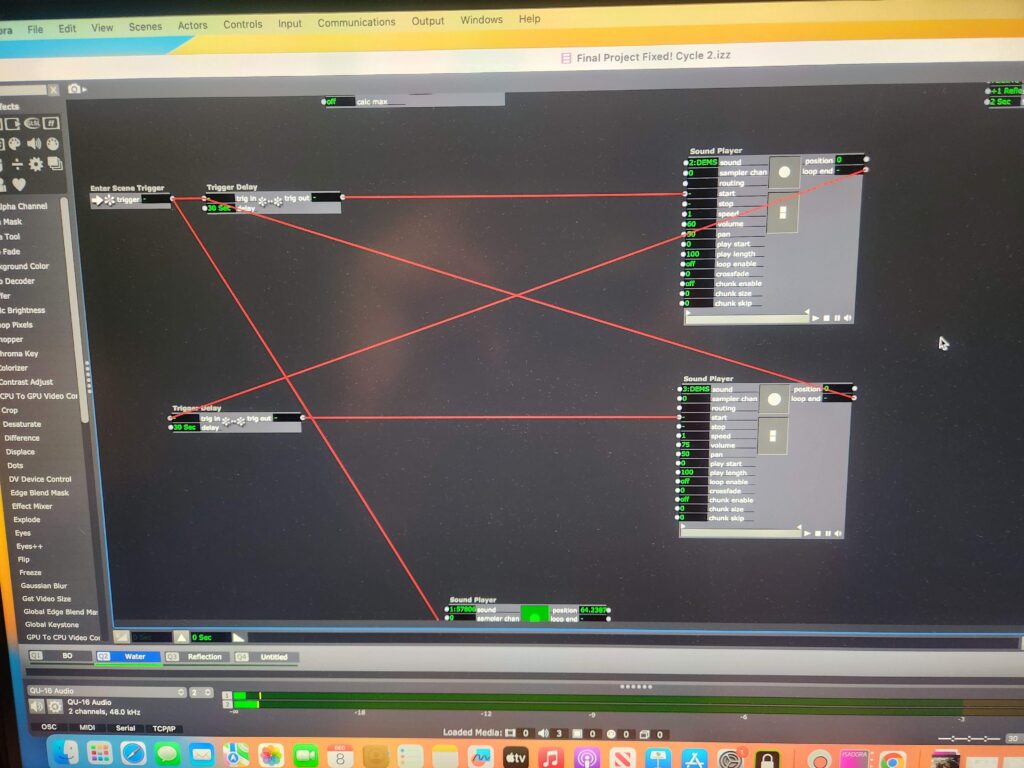

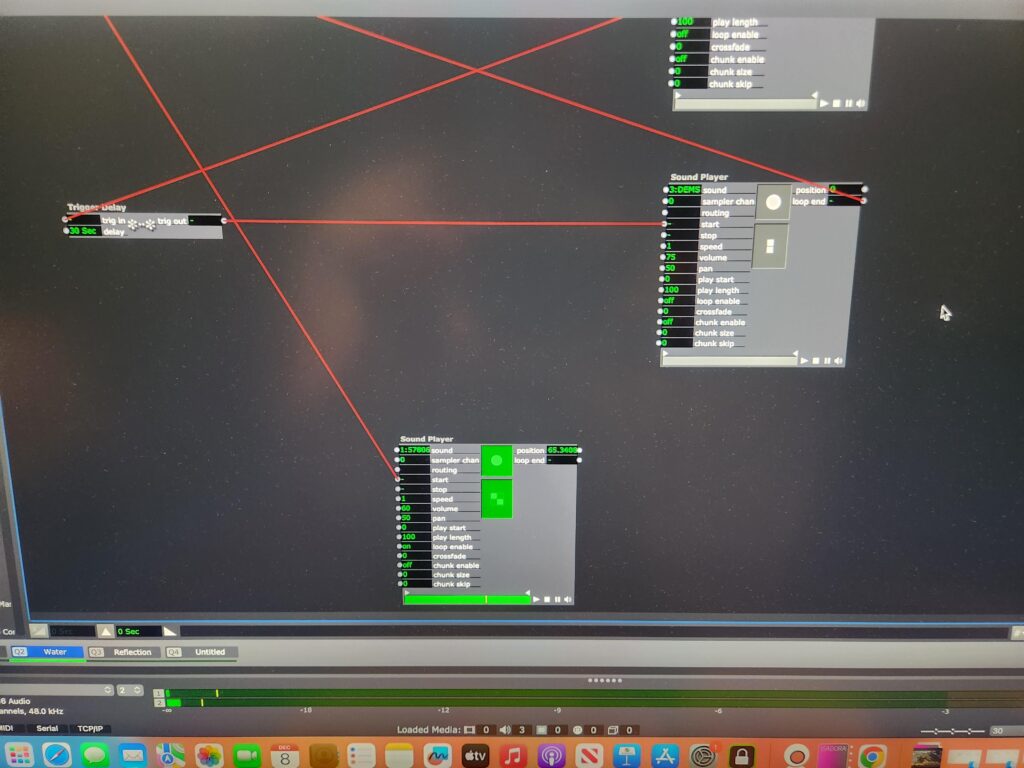

During cycle 2, it was suggested that I add auditory elements to my project to support participant engagement with the installation. For this cycle, I added 3 elements to my project: a recording of running water, a recording of the poem that I read live during cycle 2, and a recording of an invitation to the audience.

The words of the poem can be found in my cycle 2 post.

The invitation:

“Welcome in. Take a rest. What can you release? What can the water carry away?”

I set the water recording to play upon opening the patch and to continue running as long as the patch was open. I set the recordings of the poem and the invitation to alternate continuously, with a 30-second pause between each loop.
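The playback scheme above is essentially two layers: a water loop that runs for as long as the patch is open, and a poem/invitation loop that alternates with a 30-second gap. A minimal sketch of the second layer follows; the clip lengths are placeholders, since the real recording lengths aren't given here.

```python
from itertools import cycle

PAUSE_S = 30.0  # silence between the poem and the invitation

def voice_schedule(clips: dict[str, float], total_s: float) -> list[tuple[float, str]]:
    """Return (start_time, clip_name) pairs, alternating clips with a pause.

    The water loop is not modeled: it simply plays while the patch is open.
    """
    schedule = []
    t = 0.0
    for name in cycle(clips):
        if t >= total_s:
            break
        schedule.append((t, name))
        t += clips[name] + PAUSE_S
    return schedule

# Placeholder durations in seconds; the actual recordings may differ.
clips = {"poem": 90.0, "invitation": 12.0}
for start, name in voice_schedule(clips, total_s=300):
    print(f"{start:6.1f}s  {name}")
```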

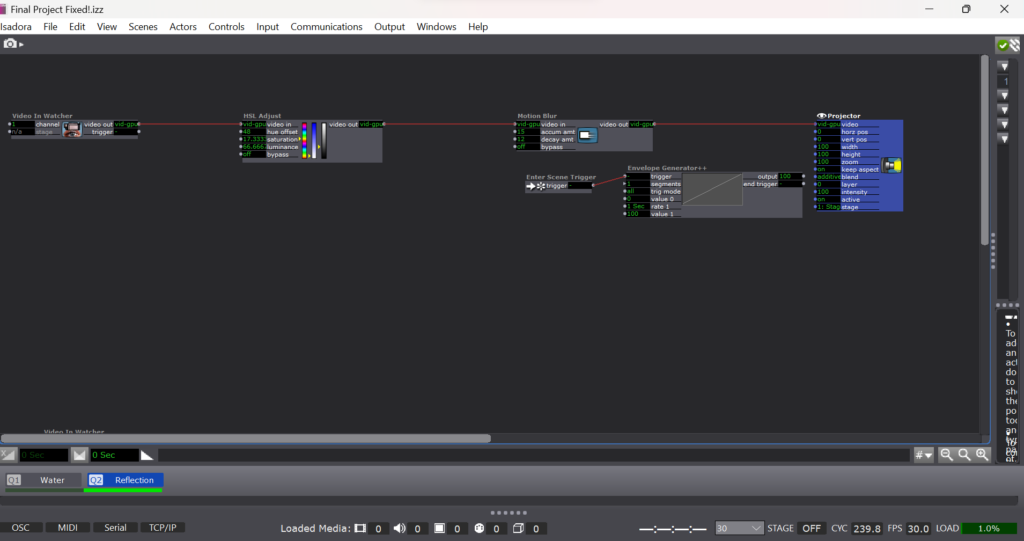

Additionally, I made changes to the reflection scene of the patch. First, I redesigned the reflection. Rather than using the rotation feature of the projection to rotate the projected image from the webcam, I used the spinner actor and then zoomed in the projection map so it would fit into the pool. Rather than trying to make the image hyper-realistic, I decided to amplify the distortion of the reflection by desaturating it and then using a colorizer actor to give the edges of the moving image a purple hue. I also made minor adjustments to the motion blur to play up the ghostliness of the emanation.
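To make the “desaturate, then tint” idea concrete, here is a per-pixel sketch. It assumes RGB values from 0 to 255, uses Rec. 601 luma weights for the desaturation, and blends the gray toward a purple-tinted version of itself. This is not how Isadora’s colorizer actor is implemented; the tint color and strength are arbitrary, hypothetical choices.

```python
Pixel = tuple[int, int, int]

PURPLE: Pixel = (170, 90, 255)  # hypothetical tint color

def desaturate(pixel: Pixel) -> int:
    """Gray level from Rec. 601 luma weights."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def colorize(pixel: Pixel, tint: Pixel = PURPLE, strength: float = 0.6) -> Pixel:
    """Desaturate, then blend the gray toward a tinted version of itself."""
    gray = desaturate(pixel)
    tinted = [gray * c / 255 for c in tint]  # gray multiplied by the tint
    return tuple(round((1 - strength) * gray + strength * t) for t in tinted)

print(colorize((255, 255, 255)))  # (204, 156, 255)
```

Because the tint multiplies the gray value, dark areas stay dark and only the brighter parts of the reflection pick up the purple cast.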

Second, I sped up the trigger delay to 3 seconds and the deactivate scene trigger to 2 seconds. I made this change as a result of feedback from a peer who assisted me with my adjustments to the projection mapping. She said that because the reflection scene took so long to fade up and down, and the reflection itself was so subtle, it was difficult to tell how her presence in the pool was triggering any change. I found the ghostliness of the final reflection to be incredibly satisfying.
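One way to think about the timing change is as a small state machine with two delays: motion has to persist before the reflection turns on, and stillness has to persist before it turns off. This sketch assumes the 3-second and 2-second values map onto those two delays and that the motion detector reports a yes/no once per frame. It approximates the patch’s behavior and is not a transcription of it.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ReflectionGate:
    """Turn the reflection on/off only after motion (or stillness) persists."""
    on_delay_s: float = 3.0   # assumed: the trigger delay before fading in
    off_delay_s: float = 2.0  # assumed: the delay before the deactivate trigger
    active: bool = False
    _since: Optional[float] = None  # when the contrary condition started

    def update(self, t: float, motion: bool) -> bool:
        if motion == self.active:
            self._since = None  # input agrees with current state: reset the timer
            return self.active
        if self._since is None:
            self._since = t  # contrary condition just began
        delay = self.on_delay_s if motion else self.off_delay_s
        if t - self._since >= delay:
            self.active = motion  # condition held long enough: switch
            self._since = None
        return self.active

gate = ReflectionGate()
frames = [(0, True), (1, True), (2, True), (3, True), (4, False), (5, False), (6, False)]
print([gate.update(t, m) for t, m in frames])
# [False, False, False, True, True, True, False]
```

Shorter delays make the cause and effect easier to read for a participant, at the cost of the reflection flickering more readily when someone pauses briefly.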

Impact of Motion Lab Set Up

On the day of our class showing, I found that the presence of my installation in the context of other tactile and movement-driven exhibits in the Motion Lab helped the handful of context-less visitors figure out how to engage with my space. When people entered the Motion Lab, they first encountered Natasha’s “Xylophone Hero,” followed by Amy’s “seance” of voices and lightbulbs. I found that moving through these exhibits established an expectation that people could touch and manipulate my project and encouraged them to engage more fully with it.

I also observed that the presence of the pool itself, and the mat in front of it, encouraged full-body engagement with the project. I watched people “swim” and dance in the petals and describe a desire to lie down or make snow angels in them. Placing the petals in a physical object that visitors recognized appeared to frame and suggest the possibilities for interacting with the exhibit, making it clear that it was something they could enter that would support their weight and movement. I also observed that hearing the water sounds in conjunction with my poem suggested how participants could interact with my work. Natasha observed that the descriptions of movement in my poem helped her create her own dance in the pool, sprinkling the rose petals and spinning around with them as she would in a pool.

The main hiccup that I observed was that viewers often would not stay very long in the pool once they realized that the petals were clinging to their clothes because of static electricity. This is something that I think I can overcome through the use of static guard or another measure to prevent static electricity from building up on the surface of the petals.

A note about sound…

My intention for this project is for it to serve as a space of quiet meditation through a pleasant sensory experience. However, as a person on the autism spectrum who is easily overwhelmed by a lot of light and noise, I found that I was overwhelmed by my own auditory components in conjunction with those of the three other projects. For the purpose of a group showing, I wish that I had added only the water sound to my project and let viewers take in the sounds of Amy’s and CG’s works from my exhibit. I ended up severely overstimulated as the day went on, and I wonder whether other people with similar sensory disorders were affected the same way. This is something that I am taking into consideration as I think about my installation in January.

What would a cycle 4 look like?

I feel incredibly fortunate that this project will get a “cycle 4” as part of my MFA graduation project.

Two of my main considerations for the analog setup at Urban Arts Space are disguising and securing the web camera and creating lighting that will support the project using the gallery’s track system. My plan for hiding the web camera is to tape it to the side of the pool and then wrap it in the drop cloth. This will not make the camera completely invisible to the audience, but it will minimize its presence and make it less likely that the web cam could be knocked off or into the pool. As for the lighting, I intend to make the back room dim and possibly use amber gels to create a warmer lighting environment that at least approximates the warmth of theatrical lighting. I may need to obtain floor lamps to get more side light without over-brightening the space.

Arcvuken posed the question of how I will communicate to visitors how to interact with the exhibit while I am not present in the gallery. For this, I am going to turn to my experience as a neurodivergent person and as an educator of neurodivergent students. I am going to explicitly state on placards on the walls that visitors can touch and get into the pool, and provide some suggested meditation practices they can do while in the pool. Common sense isn’t common; sometimes it is better for everyone if you just say what you mean and want. For this reason, I will be placing placards like this throughout the entire gallery to ensure that visitors, who are generally socialized not to touch anything in a gallery, know that they are indeed permitted to physically interact with the space.

To address the overstimulation that I experienced in Motion Lab, I am also going to reduce the auditory components of my installation. I will definitely keep the water sound and play it through a sound shower, as I found that to be soothing. However, I think that I will provide a QR code link to recordings of the poems so that people can choose whether or not they want to listen and have more agency over their sensory experience.

Cycle 3

Posted: December 14, 2023 Filed under: Uncategorized

After studying how to connect Isadora to Arduino and experimenting with its potential in Cycles 1 and 2, I focused in Cycle 3 on what type of experience I could create with that technology.

Feedback from everyone in Cycle 2, that even the tiny motion of small servo motors and their fuzzy structure could have a quality of their own, really inspired me.

I decided not to make a “well-designed” machine, but instead collected found objects and combined them with mechanics.

It was like installing electric life in dead objects: static objects get another life as interactive/kinetic machines.

Reactions from viewers (not only in our class; I also showed it to Art grad students and faculty) exceeded my expectations. The interactive motion, which responds to viewers but still keeps a certain unpredictability, gave each viewer a sense of life (that this object is living its own life).

While its rule of interaction is very, very, very simple (just chasing and responding to a viewer in front of a webcam), viewers grow the sense and meaning of the object in their own way. That was my discovery in Cycle 3.

This may be a powerful potential of an interactive project like this: the creator doesn’t need to fill in all of the concept and meaning, because viewers find and grow it through their relationship with the object.

Body as Reservoir of Secrets, DEMS Cycle 3

Posted: December 12, 2023 Filed under: Uncategorized

In cycle 3, the three things I focused on were:

- Refining the composition of the generated graphics. I included more Gaussian blur effects to reduce the number of hard edges in the work. Reducing hard edges was an intentional way to soften the “digital” nature of the graphics.

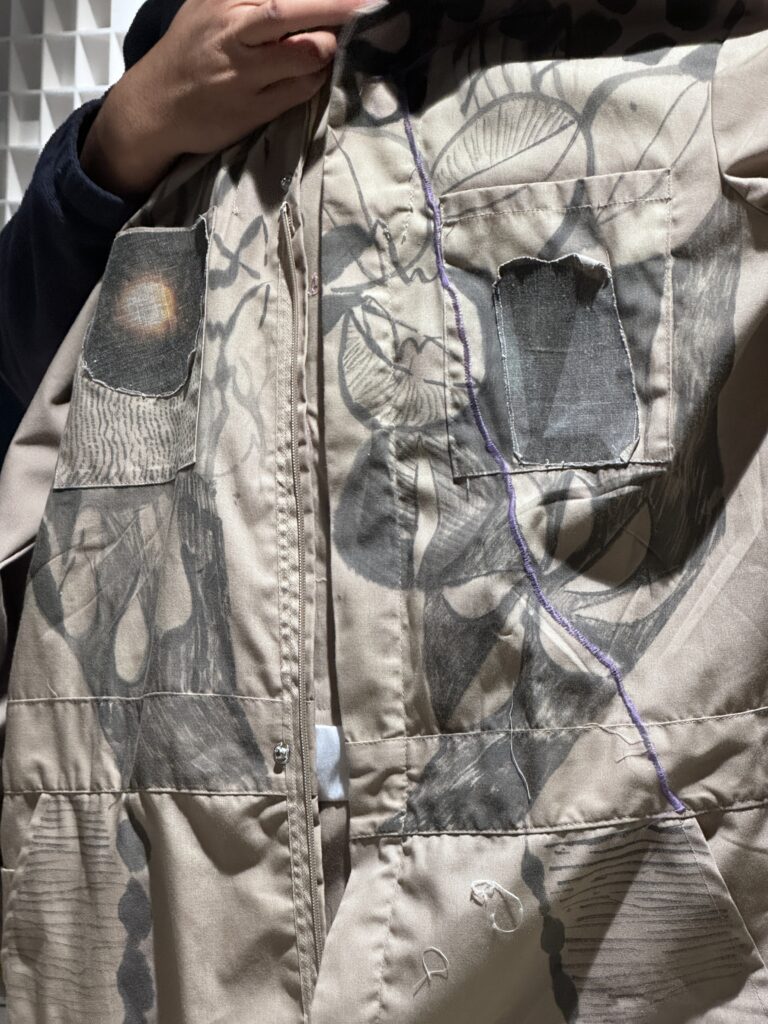

- Continuing to develop the costume. I drew, painted, and stitched on the costume to give it a more shamanistic leaning, hoping to steer away from an immediate aviation or space-travel reference.

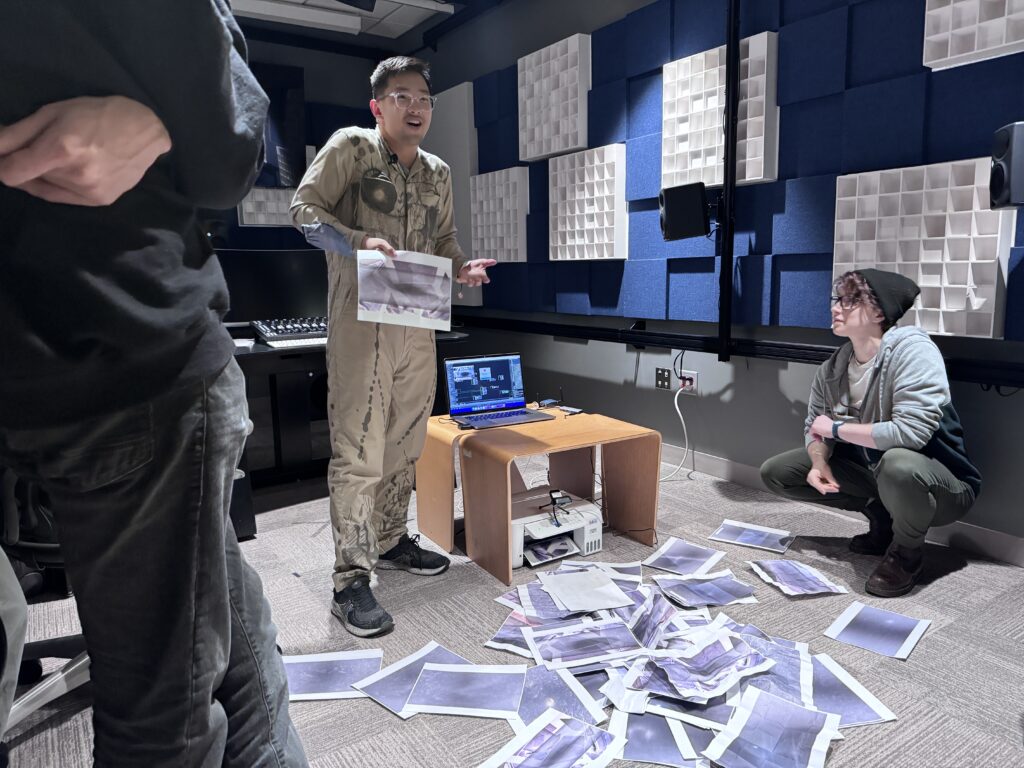

- Programming a Hot Folder on my computer that syncs up with the stage to image actor in Isadora, so that whatever Isadora exports to the Capture folder is automatically printed.
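A hot folder like the one above is essentially a watched directory: anything new that appears in it gets sent to the printer. This polling sketch assumes a folder path and a `send_to_printer` callback, both hypothetical; on a real machine that callback would hand the file to the system’s print command, which is left out here. It records which files it has already handled, so each file is printed once.

```python
import os
import time
from typing import Callable

def poll_hot_folder(folder: str, seen: set[str],
                    send_to_printer: Callable[[str], None]) -> list[str]:
    """Print every file in `folder` that has not been handled yet.

    Returns the paths sent to the printer on this pass.
    """
    new_paths = []
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        if name.startswith(".") or path in seen or not os.path.isfile(path):
            continue  # skip hidden files, subfolders, and anything already printed
        send_to_printer(path)
        seen.add(path)
        new_paths.append(path)
    return new_paths

def watch(folder: str, send_to_printer: Callable[[str], None],
          interval_s: float = 1.0) -> None:
    """Poll forever; stop with Ctrl+C."""
    seen: set[str] = set()
    while True:
        poll_hot_folder(folder, seen, send_to_printer)
        time.sleep(interval_s)
```

A sturdier version would also wait until Isadora has finished writing each image before printing it. Note that a watcher like this prints whatever lands in the folder, so a second program writing to the same folder will also produce prints.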

Decisions made:

- I decided to include an additional webcam to live-capture the pile of printed matter that was generated. The pile would then appear in some printouts.

- I moved the pulse sensing contact mic to my wrist due to the headache I experienced after the cycle 2 presentation.

- The score of the piece was supposed to be live painting/collaging, but there was not enough space or time for that at ACCAD for the Dec 8th critique. So I devised a score about folding each sheet of paper 8 times and being unable to fold it a 9th time, as a parallel to the irretrievable nature of ancestry.

Challenges/Feedback:

- My patch got very complicated, and towards the end I had some difficulty figuring out how everything was wired, even though I did make an effort to be organized.

- Isadora crashed from time to time. I will look into buying a high-capacity Windows laptop next year.

- The Hot Folder needed some troubleshooting, and it took a while to figure out why I was generating so many prints. It turned out that an earlier version of the Isadora file was running in the background and feeding the hot folder a bunch of images.

- The prints generated had a general purple hue to them. It seems like I needed to vary the colors more in the graphics generated, so that this purple hue is counteracted/balanced.

Observations/ Ongoing curiosities:

- The working printer added an unexpected mechanical soundscape to the piece. The rhythmic, hasty machine sound creates a sense of urgency in the work; I found myself feeling anxious and pressured to work at that rhythm and pace. I felt “chased” by the printer.

- A classmate asked me about generative art, and how generative art tends not to have any material cost. She asked how I felt about the paper and ink expended for this performance. I actually welcome the creation of these materials: as an artist working in collage, I hardly throw anything away, because I save everything in flat files. These materials will eventually find their way into another piece of work in the future.

- I intend to stage a cycle 4 in the Sherman Studio Arts Center after the semester quiets down. Cycle 4 will have the live-painting aspect, and I will be exploring using what I have created in this class as a means to create a video work. I am also curious about how feature extraction can influence sound and be used for composing.

Body as Reservoir of Secrets, DEMS Cycle 2

Posted: December 12, 2023 Filed under: Uncategorized

For cycle 2, the idea started to take shape in a more concrete manner. I started to develop the costume using coveralls bought on Amazon, which I tailored myself for a better fit.

The costume included the following feature-extraction functions:

- On the coveralls, I stitched patches of dark, digitally printed fabric. This increases the overall contrast of the figure, which helps the Eyes++ actor and webcam track object movements better.

- A contact microphone was housed in the chest pocket, running up to my neck. An additional elastic band was devised to apply pressure and hold the contact microphone in place.

- Two iPhone gyroscopes were housed within blue/purple pockets with elastic openings on the arm and calf of the costume.

- A microphone was blown into whenever a scene change was desired, as a means of activating breath.

Challenges/Feedback

- The composition looked too busy, given that more than 10 variables were being fed into Isadora.

- My computer’s graphics card seems to be struggling, and Isadora crashes easily.

- A classmate mentioned that the costume looked like an astronaut suit, which is not what I intended.

- I had a headache after wearing the neck piece with the contact mic for 20 minutes because it was restricting blood flow.

Observations/On-going curiosities

- The class seemed very interested in mapping/figuring out which visual element was coupled with which sensor.

- The class seemed interested in viewing the live-generated graphics as a video piece, when I had intended the graphics primarily to be viewed as prints. I am open to the possibility of the video being screened alongside the painting performance.

Cycle 2

Posted: November 28, 2023 Filed under: Uncategorized

In Cycle 1, I succeeded in connecting Isadora and Arduino and made a simple Arduino project: blinking LEDs.

So my goal for Cycle 2 was to operate motor(s) from Isadora, using a webcam as a motion sensor.

I first tried a servo motor. Fortunately, the Arduino actor in Isadora already had a feature for controlling servos, so I could connect them easily.

I set up a webcam as a motion sensor, as we had done in PP3, and made an instant “interactive servo robot” whose arm chases a viewer’s motion.
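The “arm chases the viewer” behavior comes down to mapping where motion appears in the webcam frame onto a servo angle. A minimal sketch, assuming the horizontal position of the motion is already known in pixels and a standard 0 to 180 degree hobby servo. The smoothing factor is an arbitrary choice to keep the arm from jittering, and none of this is taken from the actual patch.

```python
def position_to_angle(x: float, frame_width: float,
                      min_angle: float = 0.0, max_angle: float = 180.0) -> float:
    """Linearly map a horizontal pixel position to a servo angle, clamped."""
    x = max(0.0, min(x, frame_width))
    return min_angle + (x / frame_width) * (max_angle - min_angle)

def smooth(previous: float, target: float, alpha: float = 0.3) -> float:
    """Move part of the way toward the target each frame (exponential smoothing)."""
    return previous + alpha * (target - previous)

angle = 90.0
for x in [640, 640, 640]:  # viewer standing at the right edge of a 640 px frame
    angle = smooth(angle, position_to_angle(x, 640))
    print(round(angle, 1))  # 117.0, then 135.9, then 149.1
```

The smoothing is also one way to keep some of the “living” quality: the arm eases toward the viewer instead of snapping to them.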

I also tried a stepper motor. It was a little tricky for me, as the Arduino actor in Isadora doesn’t have a feature for operating stepper motors.

So I re-checked the Arduino code from a stepper motor tutorial and rebuilt the same workflow in Isadora (toggling Low/High to make the magnetic motor spin).

Its motion was not smooth, but it worked!

I probably need to adjust the interval between Low and High, or some other settings, to operate a stepper motor smoothly. Either way, I decided to use servo motors for Cycle 3, as I should focus on building a final output now (and servos are easier to control for my project).
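The Low/High workflow described above is a coil-switching sequence: a fixed pattern of pin states stepped through at a set interval, where the interval sets the speed. This sketch assumes a 4-wire stepper driven in full-step mode with two coils on at a time, and a motor with 2048 steps per revolution (commonly quoted for the small 28BYJ-48, but an assumption here). It only generates the pin pattern and the speed math; it does not talk to hardware.

```python
from itertools import cycle, islice

# One common full-step sequence for a 4-wire stepper: two coils on at a time.
# Which physical pin is which depends on the wiring and driver board.
FULL_STEP = [
    (1, 1, 0, 0),
    (0, 1, 1, 0),
    (0, 0, 1, 1),
    (1, 0, 0, 1),
]

STEPS_PER_REV = 2048  # assumed; check the motor's datasheet

def pin_states(num_steps: int) -> list[tuple[int, int, int, int]]:
    """The High/Low pattern to write to the four pins, one tuple per step."""
    return list(islice(cycle(FULL_STEP), num_steps))

def rpm(step_interval_s: float, steps_per_rev: int = STEPS_PER_REV) -> float:
    """Speed that results from waiting step_interval_s between steps."""
    return 60.0 / (steps_per_rev * step_interval_s)

print(pin_states(5))
print(round(rpm(0.002), 2))  # 2 ms between steps gives 14.65 rpm
```

If the steps arrive unevenly (for example, if the timing jitters from frame to frame), the rotation is likely to stutter, and an interval that is too short can make the motor skip steps or stall.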

Still, operating a stepper motor from Isadora taught me many things, such as the idea of translating written code into an Isadora patch.

My Cycle 3, the final output, will definitely be a small interactive robot using a webcam, Arduino, servos, and Isadora.

Feedback from the class during Cycle 2 inspired me to make a small but very organism-like interactive robot. As I usually make spatial or sculptural-scale works, I considered my small Arduino test project (like a tiny servo) just a mock-up, but everyone’s reactions suggested that a small-scale thing could have its own communicativeness, which a large sculpture doesn’t have. This will be my direction for Cycle 3.

Lawson: Cycle 1

Posted: November 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: cycle 1, dance, Interactive Media, Isadora

My final project is as yet untitled. This project will also be part of my master’s thesis, “Grieving Landscapes,” which I will present in January. The intention is for this project to be part of the exhibit installation that audience members can interact with and that I will also dance in/with during the performances. My goal is to create a digital interpretation of “water” projected into a pool of silk flower petals that participants can then interact with, casting shadows and seeing a reflection of whoever enters the pool.

In my research into the performance of grief, water and washing have come up often. Water holds significant symbolism as a spirit world, a passage into the spirit world, the passing of time, change and transition, and cleansing. Water and washing also hold significance in my personal life. I was raised as an Evangelical Christian, so baptism was a significant part of my emotional and spiritual formation. In thinking about how I grieve my own experiences, baptism has reemerged as a means of taking back control over my life and how I engage with the changes I have experienced over the last several years.

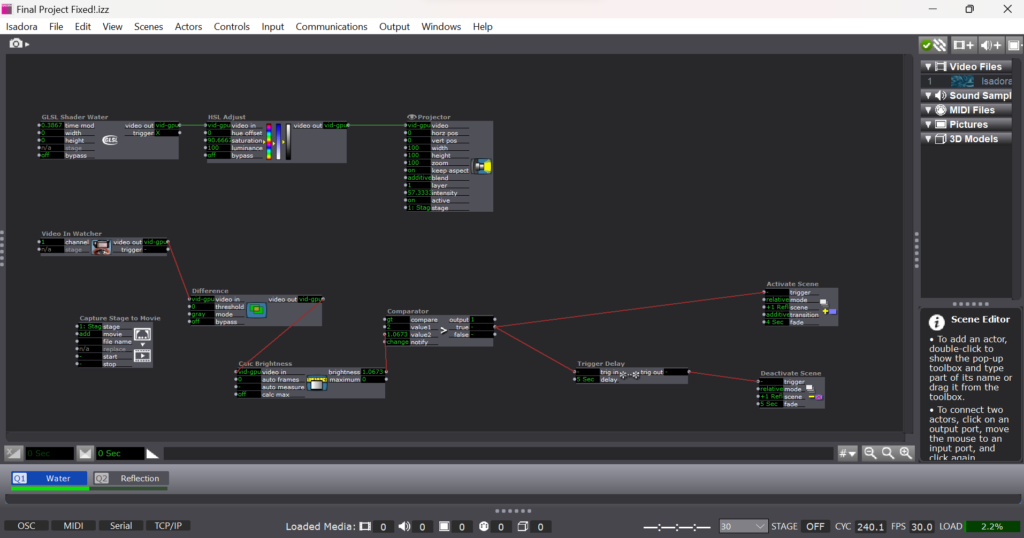

For cycle 1, I created the Isadora patch that will act as my “water.” Rather than attempting to create an exact replica of physical water, I want to emphasize the spiritual quality of water: unpredictable and mysterious.

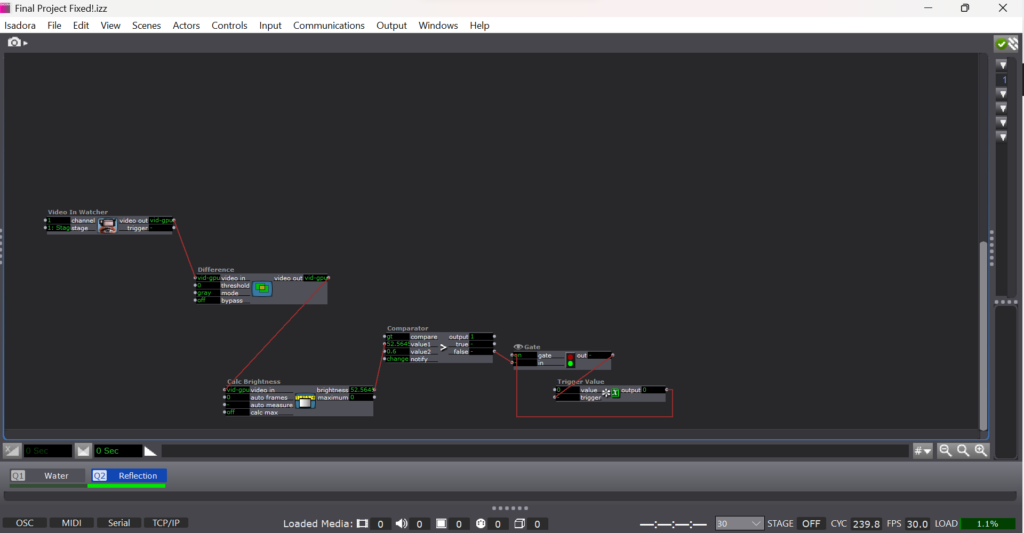

To create the shiny, flowing surface of water, I found a water GLSL shader online and adjusted its color until it felt suitably blue: ghostly but bright, but not so bright as to outshine the reflection generated by the web cam. To emphasize the spiritual quality of the digital emanation, I decided that I did not want the patch to be constantly projecting the web cam’s image, so the GLSL shader became the “passive” state of the patch. I used difference, calculate brightness, and comparator actors with activate and deactivate scene actors to form a motion sensor that detects movement in front of the camera. When movement is detected, the scene with the web cam projection is activated, projecting the participant’s image over the GLSL shader.
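The difference, brightness, and comparator chain above is a classic frame-differencing motion detector. Here is a minimal sketch on tiny grayscale frames (lists of 0 to 255 values), assuming that “calculate brightness” amounts to the average of the difference image and that the threshold is a number tuned by hand; it is not a value taken from the patch.

```python
Frame = list[list[int]]  # grayscale pixels, 0-255

def difference(a: Frame, b: Frame) -> Frame:
    """Absolute per-pixel difference between two frames (the difference step)."""
    return [[abs(pa - pb) for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]

def brightness(frame: Frame) -> float:
    """Mean pixel value, 0-255 (the brightness step)."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)

def motion_detected(prev: Frame, curr: Frame, threshold: float = 10.0) -> bool:
    """Comparator step: enough change between frames counts as movement."""
    return brightness(difference(prev, curr)) > threshold

still = [[50, 50], [50, 50]]
moved = [[50, 200], [50, 50]]
print(motion_detected(still, still), motion_detected(still, moved))  # False True
```

In a darker room, the same movement produces smaller pixel differences, so the threshold has to come down for the detector to keep firing.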

To imitate the instability of reflections in water I applied a motion blur to the reflection video. I also wanted to imitate the ghostliness of reflections in water, so I desaturated the image from the camera as well.
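One simple way to get a motion-blur-like trail is to blend each new frame into an accumulated image instead of replacing it. This is a sketch of that idea (an exponential moving average over frames), not of Isadora’s own motion blur; the blend amount is an arbitrary choice and the frames are tiny grayscale lists.

```python
Frame = list[list[float]]

def accumulate(trail: Frame, frame: Frame, amount: float = 0.25) -> Frame:
    """Blend `amount` of the new frame into the trail; lower means a longer, ghostlier trail."""
    return [[(1 - amount) * t + amount * f for t, f in zip(trail_row, frame_row)]
            for trail_row, frame_row in zip(trail, frame)]

trail = [[0.0, 0.0]]
for frame in ([[255.0, 0.0]], [[0.0, 255.0]]):  # a bright spot moving right
    trail = accumulate(trail, frame)
print(trail)  # [[47.8125, 63.75]]
```

The bright spot has already moved on, but a fainter copy lingers where it used to be, which is the smeared, unstable look a reflection in moving water has.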

To emphasize the mysterious quality of my digital water, I used an additional motion sensor to deactivate the reflection scene. If the participant stops moving or moves out of the range of the camera, the reflection image fades away like the closing of a portal.

The patch itself is very simple: two layers of projection and a simple motion detector. What matters to me is the way this patch will eventually interact with the materials, and how the materials will influence the way the participant then engages with the patch.

For cycle 2, I will projection-map the patch to the size of the pool, calibrating it for an uneven surface. I will determine what type of lighting I need to support the web camera and the appropriate placement of the web camera for a recognizable reflection. I will also need to recalibrate the comparator for a darker environment to keep the motion sensor functioning.

Lawson: PP3 “Melting Point”

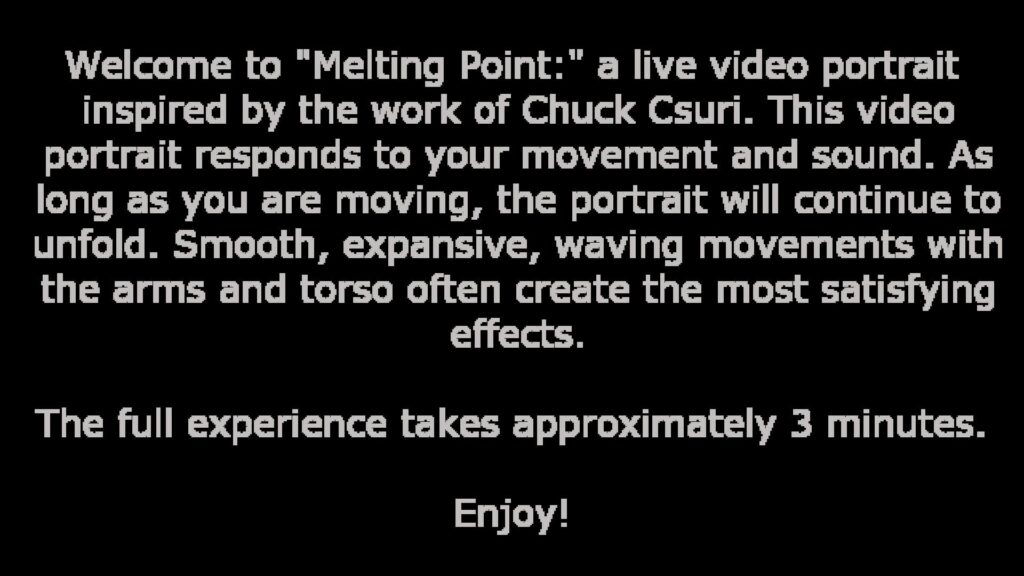

Posted: November 14, 2023 Filed under: Nico Lawson, Uncategorized | Tags: Interactive Media, Isadora, Pressure Project

For Pressure Project 3, we were tasked with improving upon our previous project inspired by the work of Chuck Csuri to make it suitable for exhibition in a “gallery setting” at the ACCAD Open House on November 3, 2023. I was really happy with the way that my first iteration played with the melting and whimsical qualities of Csuri’s work, so I wanted to turn my attention to the way that my patch could also act as its own “docent” to encourage viewer engagement.

First, rather than wait until the end of my patch to feature the two works that inspired my project, I decided to make my inspiration photos the “passive” state of the patch. Before approaching the web camera and triggering the start of the patch, my hope was that the audience would be curious and approach the screen. I improved the sensitivity of the motion sensor aspect of the patch so that as soon as a person began moving in front of the camera, the patch would begin running.

When the patch begins running, the first scene that the audience sees is this explanation. Because I am a dancer and the creator of the patch, I am intimately familiar with the types of actions that make the patch more interesting. However, audience members, especially those without movement experience, might not know how to move with the patch from the on-screen effects alone. My hope was that including instructions for the type of movement that best interacted with the patch would increase the likelihood that a viewer would stay and engage with the patch for its full duration. For this reason, I also told the audience the length of the patch so they would know what to expect. Another improvement was shortening the scenes to keep viewers from getting bored.

Update upon further reflection:

I wish that I had removed or altered the final scene, in which the facets of the kaleidoscope actor were controlled by the sound level watcher. After observing visitors to the open house and using the patch at home, where I had control over my own sound levels, I found that it was difficult to raise the volume enough for the facets to change frequently. As a result, the actor failed to attract audience members’ attention by letting them intuit that their volume affected what they saw on screen. For this reason, people would leave my project before the loop was complete, seeming confused or bored. For simplicity, I could have removed the scene. I also could have used an inside range actor to lower the threshold for increasing the facets and spark audience attention.
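Lowering the threshold so that quieter voices still change the kaleidoscope amounts to rescaling the input range. A sketch, assuming sound levels normalized to 0-100 and a facet count between 2 and 12. Both ranges, and the “speaking voice” level, are hypothetical; the patch’s actual values aren’t given here.

```python
def level_to_facets(level: float, low: float, high: float,
                    min_facets: int = 2, max_facets: int = 12) -> int:
    """Map a sound level within [low, high] onto a facet count, clamping outside it."""
    if level <= low:
        return min_facets
    if level >= high:
        return max_facets
    fraction = (level - low) / (high - low)
    return min_facets + round(fraction * (max_facets - min_facets))

speaking_voice = 30  # hypothetical normalized level of ordinary talking
print(level_to_facets(speaking_voice, low=40, high=100))  # 2: a high range barely reacts
print(level_to_facets(speaking_voice, low=5, high=45))    # 8: a lowered range reacts visibly
```

The trade-off is that a low range also reacts to room noise, so in a busy space like an open house the lower bound likely needs to sit just above the ambient level.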