Pressure Project 1 (Sean)

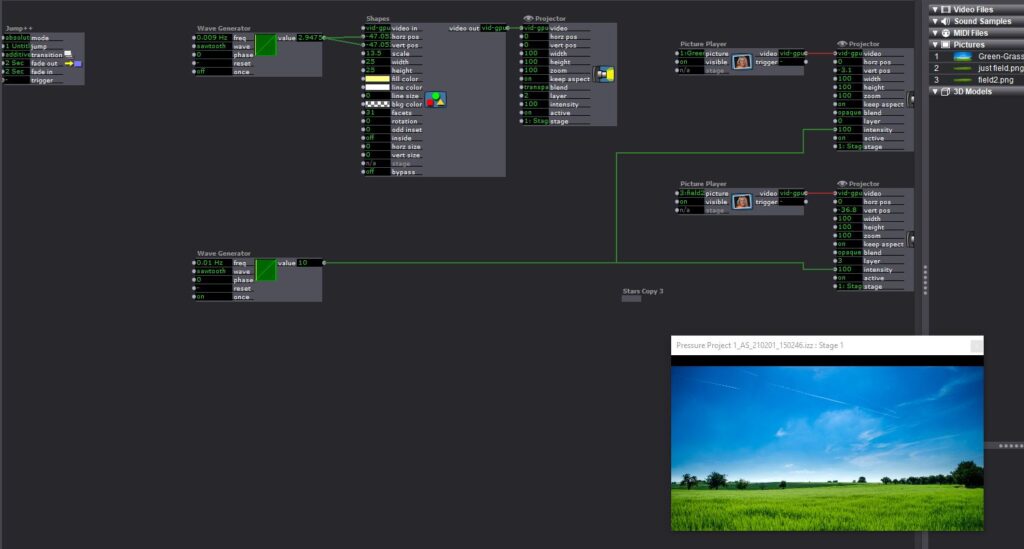

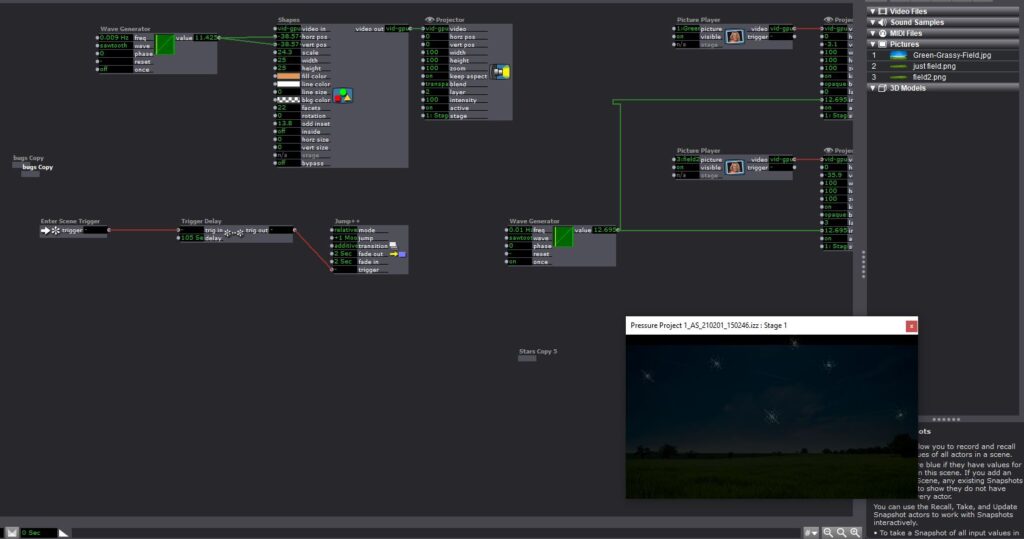

Posted: February 3, 2021 Filed under: Uncategorized

I used my time-based pressure project to create a scene of a sunrise fading into a moonrise. I found an image of a big grass field with a few scattered trees to use as a background. I had to do some Photoshop work to layer the image so that the sun would appear to come up over the horizon and move across the sky. I played with the transparency and opacity of the image to try to make the layers read as one.

This was really an opportunity to test what I thought I knew about Isadora and to try to recreate some of the actors we had covered in class. I began by playing with the Shapes actor to create the two celestial bodies that would move across the sky: the sun and the moon. I started by trying to figure out how to have them in the same scene and just follow each other, but then I was eating lunch and had the idea that I could make DIFFERENT scenes, and that might make the project cleaner.

So I made two scenes: a sunrise and a moonrise. I adjusted the facets and odd inset settings on the Shapes actor to create the sun and the moon in their separate scenes. I knew that I wanted them to move with a deliberate slowness over an extended duration. I experimented quite a bit with the timing of the Wave Generators to make them move across the sky at the tempo I wanted.

The next step was to synchronize the increasing and decreasing intensity of the background image to make it appear as though the sun and the moon were rising. I found myself working again with the Wave Generator, learning how to change the min/max output and initialize the settings so that the values went up or down the way I wanted.
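For anyone who thinks better in code than in patch cords, the min/max idea can be sketched outside Isadora. This is not Isadora's own implementation, just a rough Python illustration of rescaling a repeating wave into a chosen output range. The function name `wave`, its parameters, and the 0 to 100 default range are assumptions made for the example.

```python
import math

def wave(t, period, out_min=0.0, out_max=100.0):
    """Value of a sine wave at time t (seconds), rescaled to [out_min, out_max].

    Hypothetical helper for illustration; not an Isadora API.
    """
    raw = math.sin(2 * math.pi * t / period)   # raw sine, between -1 and 1
    unit = (raw + 1) / 2                       # shifted into 0..1
    return out_min + unit * (out_max - out_min)

# Sample a slow 60-second cycle, limited to a 20..80 intensity range.
for seconds in (0, 15, 30, 45):
    print(seconds, round(wave(seconds, 60, out_min=20, out_max=80), 1))
```

Narrowing the range (for example 20 to 80 instead of 0 to 100) keeps the background from ever going fully dark or fully bright, which is the same effect as adjusting the output limits on the actor.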

Next, I wanted to have some overlapping stars in the sky to create the illusion that the two scenes were contiguous. I used the Shapes actor again, this time with the facets and odd inset set high. I used a random wave generator to give the stars a twinkling quality, and I used what I had learned about inverting the intensity value to make them appear and disappear as the sun rose.

I was trying to create the effect of ladybugs in my daytime scene that would disappear as it got dark, but I couldn’t get them where I wanted them. We adjusted these settings in class, and now I think I have a better understanding of how those actors were talking to each other and how to address that in the future.

Project Bump – Sean

Posted: January 28, 2021 Filed under: Uncategorized

Project Bump – Maria

Posted: January 27, 2021 Filed under: Uncategorized

I really enjoyed the task of creating a “fortune teller machine” for this pressure project. I have no idea how I would make it myself (although I think I could probably figure it out if I spent some time playing around), but I thought it was a really cool application of the interactivity that Isadora allows!

Reading this student’s reflection revealed a variety of issues that I will probably run into in my own pressure projects, like spending several hours trying to find a specific actor, or making a patch much more complex than it actually needs to be. It’s good to be reminded that these are part of everyone’s learning process and that I shouldn’t let them get me down if I run into them myself 🙂

Project Bump (Sara)

Posted: January 26, 2021 Filed under: Uncategorized

I scrolled backwards through time to find a project that caught my eye, but I found myself somewhat overwhelmed by the myriad techniques and technologies on display that we have not yet discussed in class. For my own peace of mind (and, admittedly, to satisfy my curiosity), I then clicked on my former peer Taylor’s subsection to scope out his very first Pressure Project in the course.

As is to be expected from one of the most cerebral people I have known, even Taylor’s first foray into Isadora was technically challenging and highly interactive. What most struck me about the work, though, was his writing in relation to it. He speaks frankly about frustrations, fiddling, and failures even in the midst of what evolved into a successful work. And honestly, I found this to be encouraging. Yes, I will undoubtedly, most assuredly bump into any number of walls as I try to cobble something “delightful” together in five hours. But, given that hard and fast time constraint, rather than try to punch the wall down with my fists, I’ll need to circumnavigate these barriers to keep moving forward. Taylor mentioned that the time limitation led him to make “simpler choices.” I’ll keep that in mind and try to do the same.

MByrne Bump

Posted: January 26, 2021 Filed under: Uncategorized

https://dems.asc.ohio-state.edu/?p=2597

Here we have an interactive photo-booth design that allows the user to place themselves in a setting and costume of their choosing. Matt’s project resonated with me since I am interested in bringing XR elements into live music performance, especially with the sudden prevalence of virtual events. Infected Mushroom did a tour with XR and projection mapping at least 5 years ago, but they did a one-off virtual event more in line with this project back in November.

https://www.youtube.com/watch?v=ElFL6JswhIQ

Project Bump

Posted: January 25, 2021 Filed under: Uncategorized

I selected Emily Pickens’s third pressure project. The concept and execution are very simple, but I found it to be a very fun interactive object. It is a box with several touch-sensitive spots on it that each play a different sound when touched. It uses the Makey Makey and has the user keep one hand on ground while exploring the box with the other. The Isadora setup seems quite straightforward, but I think there are so many ways one could take this idea further and have different touchpoints trigger a variety of feedback. Maybe the box vibrates when you touch one area, or flashes a color from the inside at another.

Cycle 3 – Stanford

Posted: December 14, 2020 Filed under: Uncategorized

For Cycle 3 I had to rethink the platform I was using to transport my SketchUp model from my computer to VR. I was having issues getting all of the shapes to load in SentioVR, and the base subscription didn’t allow me to use VR at all. This made me look into an extension called VR Sketch.

This ended up being a way better program. You are able to make edits while in VR and even have multiple people in the model at the same time. The render quality is not as great though.

I think my favorite thing about the program was the workflow. I used it a few times over the past couple of weeks while working on other class projects. I was able to make quick sketch models for a design I was working on and put myself in them in VR to see if the design worked the way I thought it did. Then I could make changes and go back into VR within minutes. It changed the way I design quite a bit. I now have a practical way to see how an audience is going to experience something before it is built and to make changes accordingly.

Cycle 3 – Final Project

Posted: December 13, 2020 Filed under: Uncategorized

In this iteration of the project, I completed a more robust prototype of the box, which allows a more usable interface while calling cues.

The box evolved between cycles two and three by adding all of the wires and the grounding option. In cycle two, the box mainly just had the spot outlined for the Makey Makey, and the top of the box had numbers painted on. I improved it by adding all of the wires, cutting the holes in the box, and securing everything inside the box.

The patch did not change from cycle 2.

This project could also work off of just the Izzy patch, but you would then need to memorize which letter corresponds to which number, whereas the box allows you to just think of the numbers.
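The letter-to-number translation that the box handles physically can be pictured as a simple lookup table. The sketch below is only an illustration in Python: the post does not say which letters were wired to which numbers, so the mapping and the names `KEY_TO_NUMBER` and `number_for_key` are hypothetical.

```python
# Hypothetical mapping: the actual letters used in the patch are not given.
KEY_TO_NUMBER = {"w": 1, "a": 2, "s": 3, "d": 4, "f": 5, "g": 6}

def number_for_key(key):
    """Return the number assigned to a key press, or None if the key is unmapped."""
    return KEY_TO_NUMBER.get(key.lower())

# Without the box, the operator has to remember this table themselves.
for pressed in ("w", "D", "z"):
    print(pressed, "->", number_for_key(pressed))
```

With the painted numbers on the box, the table lives in the hardware layout instead of in the operator's head.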

Cycle 2 – Final Project

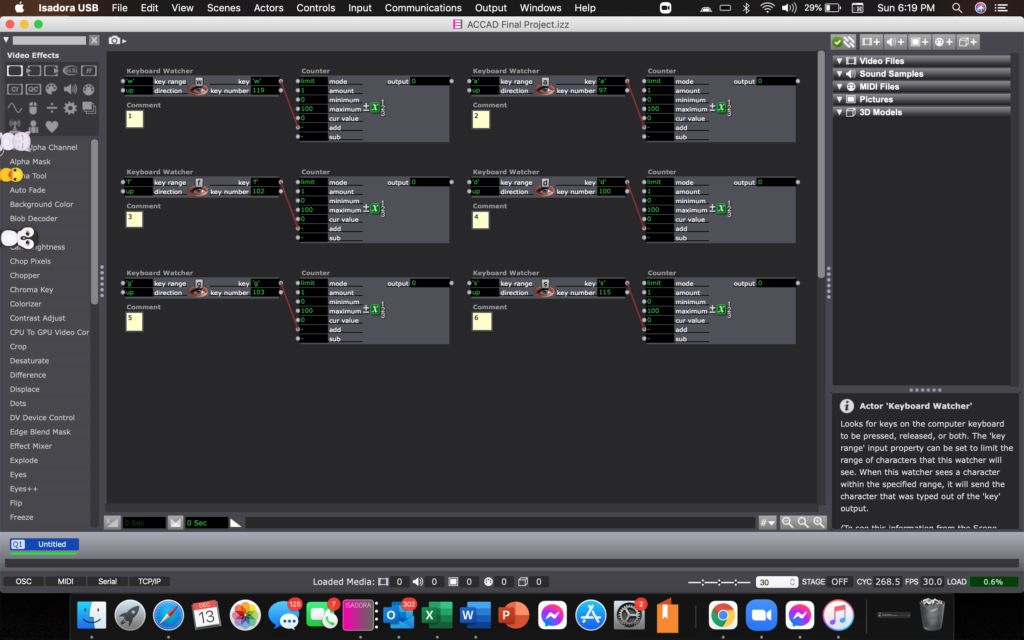

Posted: December 13, 2020 Filed under: Uncategorized

In the second cycle of my final project, I focused on the Isadora patch. I knew I didn’t want to make it overly complicated. I did consider trying to find a way to create a more visual output of the data, but decided not to go that far.

Above is the Isadora patch itself.

I do not have any photos of the box during this cycle, as I forgot to photograph it before moving on to the third cycle. The cycle three post describes the detailed changes to the box between cycles two and three.

Cycle 1 – Final Project

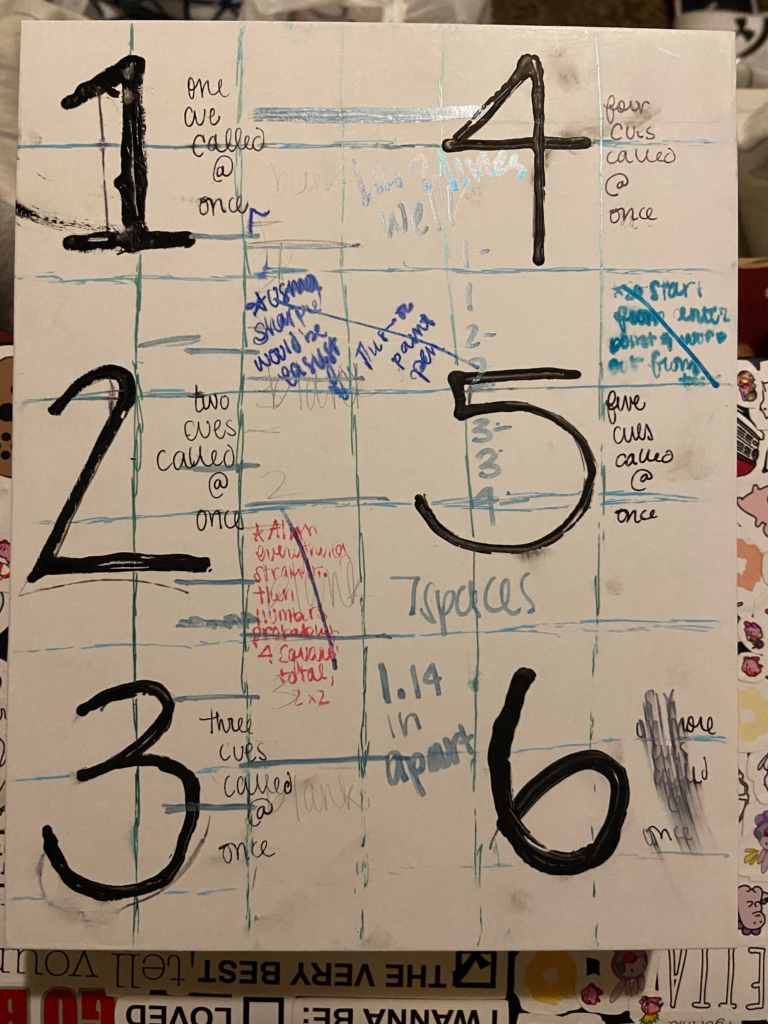

Posted: December 13, 2020 Filed under: Uncategorized

With my final project, I wanted to create a box that a stage manager could use to track the number of cues called at once.

To do so, I would need a box, electrical paint, conductive thread, wires, and a Makey Makey. Once I had everything I needed, I began working out the measurements so that everything would line up on the box, with comfortably sized numbers and a layout organized for ease of use.

These photos document the original concept for the box, along with my added notes on improvements for the next version.