Project Bump

Posted: September 8, 2020 Filed under: Uncategorized

I found Aaron Cochran’s cycle project to have an interesting trajectory. https://dems.asc.ohio-state.edu/?p=2281

I like how Aaron progressed from working with the Kinect sensor and projector to creating the interactive game. I thought this kind of augmented reality game was executed well, and the environment seemed very responsive to the player’s movements. Throughout all three of his cycles, Aaron followed a logical process that arrived at a good result.

Project Bump

Posted: September 7, 2020 Filed under: Uncategorized

I really enjoyed reading about Parisa Ahmadi’s final project “Nostalgia” (https://dems.asc.ohio-state.edu/?p=1743). The way the visuals overlapped on various fabrics created a full world of ideas, just like how I imagine my memories swirling around in my mind. The softness of the fabric and the genuine content of the visuals create a more intimate space and allow audience members to feel comfortable experiencing whatever they end up experiencing. Since the visuals were connected to specific triggers on objects, the audience could interact with them directly and get a sense of how they affect their environment. Overall, it seemed like a really thoughtful project, and the result surrounded the viewer with activity that engaged all of the senses.

Cycle 1

Posted: December 13, 2019 Filed under: Uncategorized

I changed my project idea many times leading up to Cycle 1, so for Cycle 1 I essentially pitched to the class the project I had finally decided to work on.

I chose to make a Naruto-inspired “game” that tracks the position of a user’s hands and then triggers an effect when the correct sequence of hand signals has been “recognized”.

I originally wanted to use Google’s hand-tracking library, but at the class’s suggestion I opted for the Leap Motion controller, which is much better suited to hand tracking. The Leap Motion controller tracks at 60 frames per second, which makes it ideal for my use case.

Second, I planned to use OSC to send messages to the lighting board whenever a jutsu was successfully completed.
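For reference, an OSC message is just a small binary packet. Here is a minimal plain-JavaScript sketch of the OSC 1.0 message layout for a single integer argument. The address `/jutsu/fireball` used below is a hypothetical example, not an address any particular lighting board is known to expect, and sending the bytes over UDP is left out.

```javascript
// Encode a string as an OSC-string: ASCII bytes followed by at least one
// null terminator, padded with nulls to a multiple of 4 bytes.
function oscString(str) {
  const len = Math.ceil((str.length + 1) / 4) * 4;
  const bytes = new Uint8Array(len); // zero-filled, so padding is already null
  for (let i = 0; i < str.length; i++) {
    bytes[i] = str.charCodeAt(i) & 0x7f; // assumes plain ASCII input
  }
  return bytes;
}

// Build an OSC message carrying one int32 argument:
// address pattern, type tag string ",i", then the big-endian integer.
function oscIntMessage(address, value) {
  const addr = oscString(address);
  const tags = oscString(",i");
  const out = new Uint8Array(addr.length + tags.length + 4);
  out.set(addr, 0);
  out.set(tags, addr.length);
  new DataView(out.buffer).setInt32(addr.length + tags.length, value, false); // false = big-endian
  return out;
}

// Hypothetical usage: announce that the fireball jutsu was completed.
const packet = oscIntMessage("/jutsu/fireball", 1);
```

The resulting bytes could then be sent with any UDP socket (for example Node's `dgram` module) to whatever host and port the receiving software listens on.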


Cycle 3 “Lighting Dance Challenge”

Posted: December 13, 2019 Filed under: Uncategorized

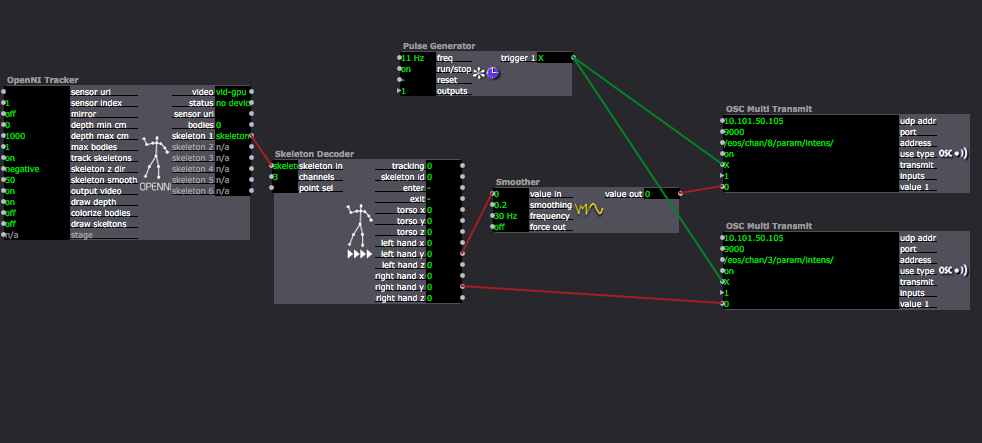

For the final performance, I made a lot of changes so that I could work with the “OpenNI” actor. It really helped me design various simple movements that the Kinect can recognize while still presenting a strong “dance composition” on stage. In addition, the “OSC Multi Transmit” actor gave me the chance to connect body-tracking data directly to lighting intensity and color. Instead of only triggering the cues I had programmed into the lighting board, this opened up more possibilities for having audience members control individual lights directly.
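Connecting body data straight to a light is essentially a linear mapping from one range to another. In Isadora this is done with actors rather than code; the function below is only a hypothetical plain-JavaScript equivalent, assuming the tracker reports a joint height somewhere between a known "arm down" and "arm raised" value and that the light takes an intensity from 0 to 100.

```javascript
// Map a tracked joint value onto a light intensity from 0 to 100.
// `low` is the value with the arm down, `high` with the arm fully raised.
// Works even when low > high (for example, if the tracker's y axis points down).
function jointToIntensity(value, low, high) {
  if (high === low) return 0; // degenerate range: avoid dividing by zero
  const t = (value - low) / (high - low); // normalize to 0..1
  const clamped = Math.min(1, Math.max(0, t)); // ignore overshoot outside the range
  return Math.round(clamped * 100);
}
```

Clamping matters on stage: tracking jitter past the calibrated range should leave the light at full or off rather than sending out-of-range values.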

This is the structure I finally settled on for my final:

Introduction part:

People: myself

Position: Red Spot

Movement: Raise/lower arms (at varying speeds).

Isadora: the Kinect reads the arm position data, which is connected to the lighting intensity.

Audience Participation Part:

People: three audience members

Position: one person each on the “red spot”, “yellow spot”, and “blue spot”

Movement: red: raise/lower arms (at a tempo I give).

yellow: kneel down and stand up (at a tempo I give).

blue: jump into a set area and back out (at a tempo I give).

Isadora: red: arms position data

yellow: torso position data

blue: body brightness data

When all the audience members perform the right movements at the right tempo on stage, they trigger the lights at different tempos, creating a dance piece full of varied movement and lighting changes. If they all do it correctly, I step in front of Kinect sensor 2 to add intensity, which triggers strobing lights and a sudden silence. It seems as if something has gone wrong, but after 5 seconds an automatic lighting dance is triggered, which means everyone did a good job; it is the symbol of success.
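The “right movement at the right tempo” check could be sketched like this. It is a hypothetical plain-JavaScript version, not how the Isadora patch was built: it assumes each tracked movement (arm raise, kneel, jump into the blue area) has already been turned into a timestamp in milliseconds, and that a movement counts as on tempo when each recent interval is within a tolerance of the beat I give.

```javascript
// One participant is "on tempo" when each of the last `count` intervals
// between movements is within `toleranceMs` of `targetMs`.
// `times` are movement timestamps in milliseconds, oldest first.
function onTempo(times, targetMs, toleranceMs, count) {
  if (times.length < count + 1) return false; // not enough movements yet
  for (let i = times.length - count; i < times.length; i++) {
    const interval = times[i] - times[i - 1];
    if (Math.abs(interval - targetMs) > toleranceMs) return false;
  }
  return true;
}

// Every participant must be on their own tempo at the same time.
// Each entry: { times, targetMs, toleranceMs, count } (hypothetical shape).
function everyoneOnTempo(participants) {
  return participants.every(p => onTempo(p.times, p.targetMs, p.toleranceMs, p.count));
}
```

When `everyoneOnTempo` first returns true, the show could move on to the next stage; the 5-second pause before the automatic lighting dance would then just be a timer.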

However, in the final show the Kinect did not work as well as I had hoped, so my design was not fully shown. The introduction part, though, went really well.

Thank you to Alex and Oded, who really helped me work through every technical and artistic problem I had. This project kept changing until the end, and without everyone’s help I could not have finished it! This was a really great course!

Cycle 2 – Hand tracking algo

Posted: December 13, 2019 Filed under: Uncategorized

I spent most of the time leading up to this cycle devising a hand-tracking algorithm that could efficiently and robustly track the positions of the fingers on one hand. I tried three approaches:

Approach 1: Poll the Leap Motion controller for the position of the palm, then subtract the palm position from each finger position to get the finger’s position relative to the palm.

Pros: Consistent; handles hands held far from the Leap Motion controller well

Cons: Gives bad data at certain orientations
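Approach 1 boils down to a vector subtraction. Below is a minimal plain-JavaScript sketch, assuming positions arrive as `{x, y, z}` objects; it does not call the Leap Motion API, and the function names are hypothetical.

```javascript
// Position of a fingertip relative to the palm center.
function relativeToPalm(palm, fingertip) {
  return {
    x: fingertip.x - palm.x,
    y: fingertip.y - palm.y,
    z: fingertip.z - palm.z,
  };
}

// Length of a relative vector, e.g. how far a fingertip sits from the palm.
function vectorLength(v) {
  return Math.sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}
```

Because the subtraction removes only the palm’s position and not its rotation, the same pose produces different offsets when the hand is tilted, which may be one reason this approach gave bad data at certain orientations.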

Approach 2: Compute the differences between the finger positions. These deltas give each finger’s position relative to the other fingers.

Pros: Works well at most hand orientations

Cons: Less reliable when the fingers are close together
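A hedged sketch of Approach 2 in plain JavaScript. One variation is swapped in here: each delta is reduced to a distance, which (unlike a raw difference vector) does not change when the whole hand rotates. The input shape, an array of `{x, y, z}` fingertip positions ordered thumb to pinky, is an assumption.

```javascript
// Distances between every pair of fingertips (10 values for 5 fingers).
// Order: (0,1), (0,2), (0,3), (0,4), (1,2), ... (3,4).
function pairwiseDistances(tips) {
  const out = [];
  for (let i = 0; i < tips.length; i++) {
    for (let j = i + 1; j < tips.length; j++) {
      const dx = tips[i].x - tips[j].x;
      const dy = tips[i].y - tips[j].y;
      const dz = tips[i].z - tips[j].z;
      out.push(Math.sqrt(dx * dx + dy * dy + dz * dz));
    }
  }
  return out;
}
```

When fingers are held together, these distances shrink toward the tracker’s noise level, which is consistent with the weakness noted for this approach.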

Approach 3: Check whether each finger is extended and, for each frame, generate a unique code from the combination of extended fingers. This is the approach I went with. It makes programming much easier because it abstracts away many of the calculations involved. It also ended up being arguably the most robust of the three, since it relies only on Leap Motion’s API.

Pros: Very robust, easy to program, works well at most orientations

Cons: Hand signals have to be simpler for this approach to work effectively.
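Approach 3 can be sketched as packing five yes/no flags into a single number, so each combination of extended fingers gets its own code from 0 to 31. This assumes the per-finger “extended” flags have already been read from the tracking data; the bit ordering (thumb first) and all names are hypothetical.

```javascript
// Bit value for each finger (hypothetical ordering, thumb first).
const THUMB = 1, INDEX = 2, MIDDLE = 4, RING = 8, PINKY = 16;

// `extended` is an array of 5 booleans: thumb, index, middle, ring, pinky.
// Returns a code from 0 (no fingers extended) to 31 (all five extended).
function fingerCode(extended) {
  let code = 0;
  for (let i = 0; i < 5; i++) {
    if (extended[i]) code |= 1 << i; // set the bit for this finger
  }
  return code;
}
```

Comparing one small integer per frame is cheap, which suits a game running at the controller’s frame rate.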

Cycle 2 “Tech Rehearsal”

Posted: December 13, 2019 Filed under: Uncategorized

In my cycle 2 showing, I removed the fun part from cycle 1 where audience members danced to the music on stage and reacted to the lighting. In this cycle, I tried a somewhat more serious run in which people became my crew members and helped me trigger the lighting with their movements.

In this run, I called them “helpers” instead of “lighting designers,” which made more sense to me. Still, I don’t think audience members hold only one title when they join my project. They can be performers moving on stage while also being crew members who trigger the lighting through their movement tasks. I think I should blur their titles rather than give them one fixed position that contains various jobs.

Also, with Alex’s help, I added an OpenNI actor so that the Kinect could recognize people’s body shapes and see where their arms, legs, and torso were moving. Using this, I designed an overhead clapping movement: when the Kinect sees both hands reach the same height, it triggers the cue. This really helped me develop more movements for audience members, rather than just having them appear in or disappear from the area the Kinect can see.
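The overhead clap trigger can be sketched as a small detector that fires once per clap. This is a hypothetical plain-JavaScript version, not the Isadora patch: it assumes each frame supplies left hand, right hand, and head heights in the same units (larger means higher), and the tolerance value is made up.

```javascript
// Returns an update function to call once per frame.
// It returns true on the single frame where both hands first meet above the head,
// and will not fire again until the hands separate or drop.
function makeClapDetector(tolerance) {
  let armed = true;
  return function update(leftY, rightY, headY) {
    const together = Math.abs(leftY - rightY) <= tolerance; // "same height"
    const overhead = leftY > headY && rightY > headY;
    if (together && overhead) {
      if (armed) {
        armed = false;
        return true; // trigger the lighting cue on this frame only
      }
    } else {
      armed = true; // hands apart or lowered: ready for the next clap
    }
    return false;
  };
}
```

The re-arming step matters because the tracker reports the same pose on many consecutive frames; without it, one held clap would fire the cue over and over.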

After this cycle, I will develop more movements and further blur the audience members’ roles, so that each one is a lighting helper and a performer at the same time.

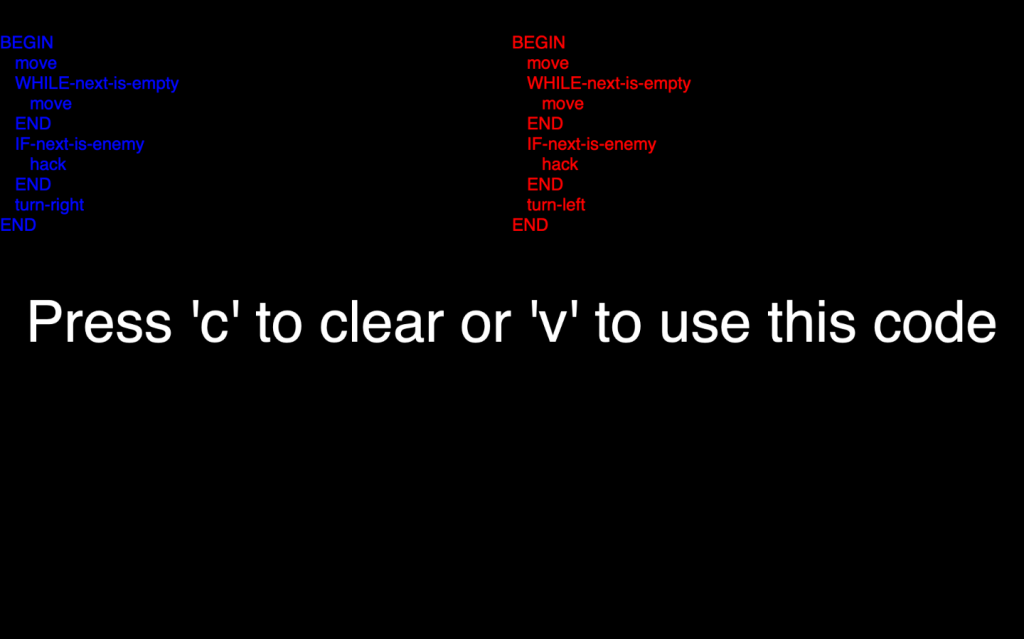

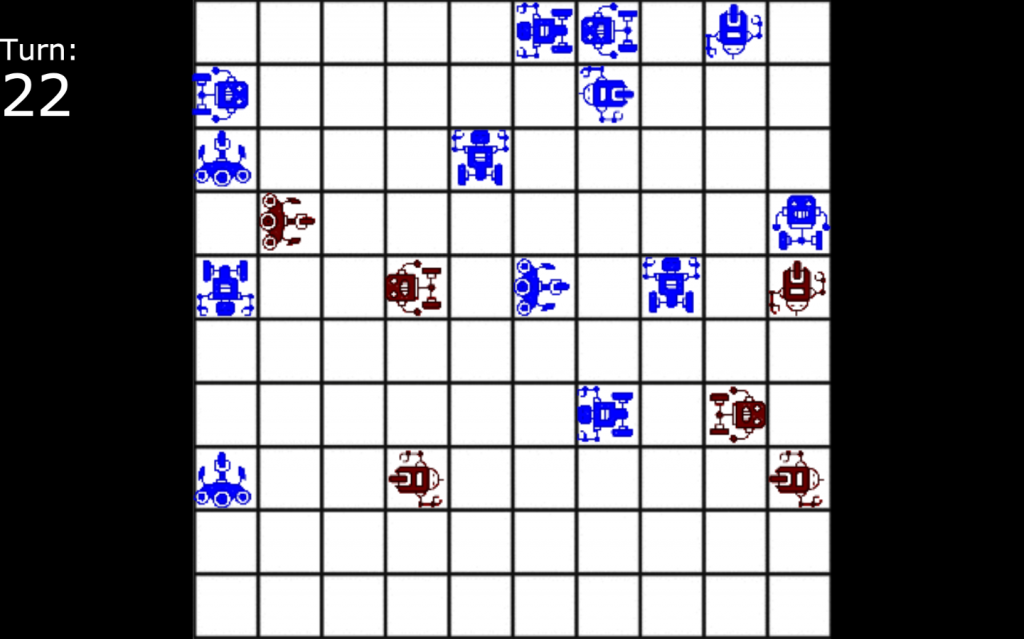

DroidCo. Final

Posted: December 12, 2019 Filed under: Uncategorized

For the final stage of my project, I finished the display and game logic of DroidCo. In its final form, DroidCo. lets two players use OSC to “write code” (by pressing a predetermined set of buttons) that their droids then execute to compete. The goal of the game is to convert every droid on the grid to your team.

```javascript
// Droid parser (Isadora JavaScript actor).
// Input 1: the world state as a JSON array string. Input 2: the droid index.
// Each droid's data is a block of 6 entries beginning at index droid * 6 + 2.
function main() {
    var worldState = JSON.parse(arguments[0]);
    var droid = arguments[1];
    var startIndex = (droid * 6) + 2;

    var team = worldState[startIndex + 1];
    var x = worldState[startIndex + 2];
    var y = worldState[startIndex + 3];
    var dir = worldState[startIndex + 4];

    // Grid column 0-9 -> horizontal position (left to right).
    var HOR = [-45, -35, -25, -15, -5, 5, 15, 25, 35, 45];
    // Grid row 0-9 -> vertical position (top to bottom).
    var VER = [45, 35, 25, 15, 5, -5, -15, -25, -35, -45];
    // Droid heading in degrees -> display rotation.
    var ROT = { 0: 180, 90: 270, 180: 0, 270: 90 };

    // Team -> color channel offsets.
    var red, blue;
    switch (team) {
        case 1: blue = 50; red = -100; break;
        case 2: blue = -100; red = 50; break;
    }

    // Outputs: red, blue, horizontal, vertical, rotation.
    return [red, blue, HOR[x], VER[y], ROT[dir]];
}
```

Above is the code for the “droid parser”, which converts each droid’s worldState data into values that Isadora can use to display the droid.

Overall, the game was a major success. It ran with only minor bugs, and I accomplished most of what I wanted to do with this project. Still, the code was buggier than I would have liked (I underestimated the strain new users could put on it). I also ran into several problems with players’ code not executing exactly as they wrote it. These bugs are definitely due to the String Constructor (Isadora does not handle strings very well), but I think I can work out the kinks.

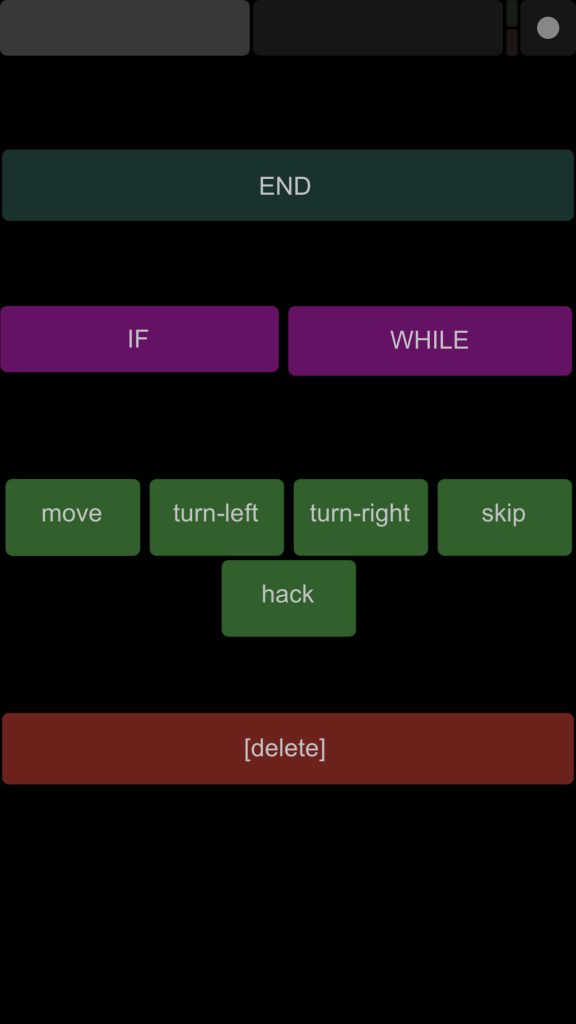

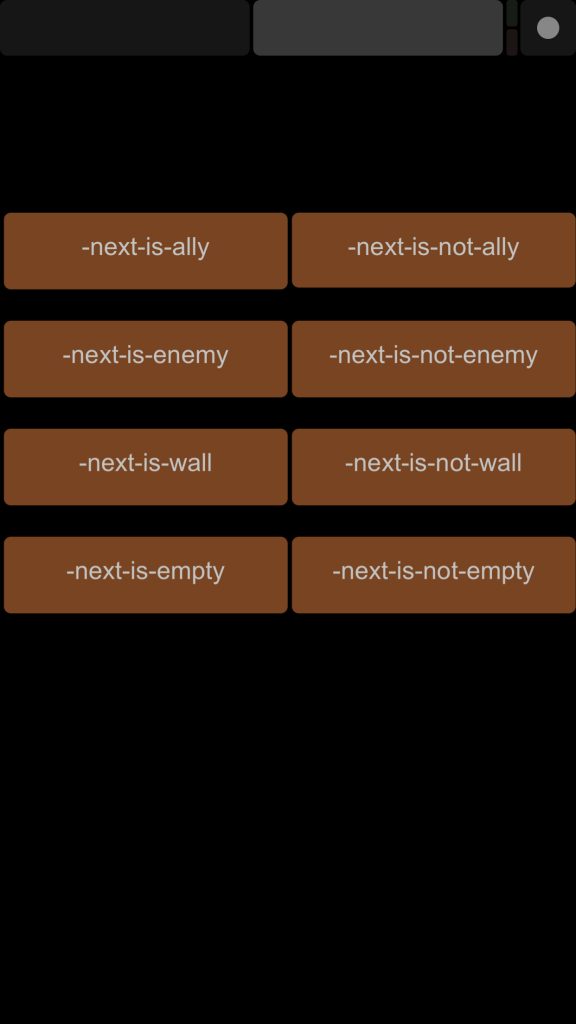

Cycle 2 – DroidCo. Interface Development

Posted: December 12, 2019 Filed under: Uncategorized

For cycle two, I finished developing the OSC interface for DroidCo. The interface consisted of a set of buttons that sent keywords to Isadora, which then used them to generate the virtual code for each team’s droid to run.

The interface (available in both iPhone/iPod and iPad versions) consisted of two pages. The first contained the END command to complete code blocks, IF and WHILE to create conditional blocks, action keywords to trigger turn actions, and a delete button to remove lines of code. The second page contained all the conditionals used with the IF and WHILE blocks.

To process these OSC inputs, I created a string-builder JavaScript program. It takes the next OSC input and appends it both to a code string (the string the compiler from cycle 1 uses to create the virtual code) and to a display string that shows users their code with proper indentation. Below is the code for this program.

function main(){

var codeString = arguments[0];

var displayString = arguments[1];

var next = arguments[2];

var ends = arguments[3];

var i;

switch(next){

case "END ":

codeString = codeString.concat(next);

ends = ends - 1;

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "IF":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

ends = ends + 1;

break;

case "WHILE":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

ends = ends + 1;

break;

case "move ":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "turn-left ":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "turn-right ":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "skip ":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "hack ":

codeString = codeString.concat(next);

for (i = 0; i < ends; i++){

displayString = displayString.concat("\t");

}

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

case "back":

if(codeString != "BEGIN "){

if(codeString.substring(codeString.length - 4) == "END "){

ends = ends + 1;

}

codeString = codeString.substring(0, codeString.lastIndexOf(" "));

codeString = codeString.substring(0, codeString.lastIndexOf(" ")+1);

displayString = displayString.substring(0, displayString.lastIndexOf(" "));

displayString = displayString.substring(0, displayString.lastIndexOf(" ")+2);

}

break;

default:

codeString = codeString.concat(next);

displayString = displayString.concat(next);

displayString = displayString.concat("\n");

break;

}

var ret = new Array(codeString, displayString, ends);

return ret;

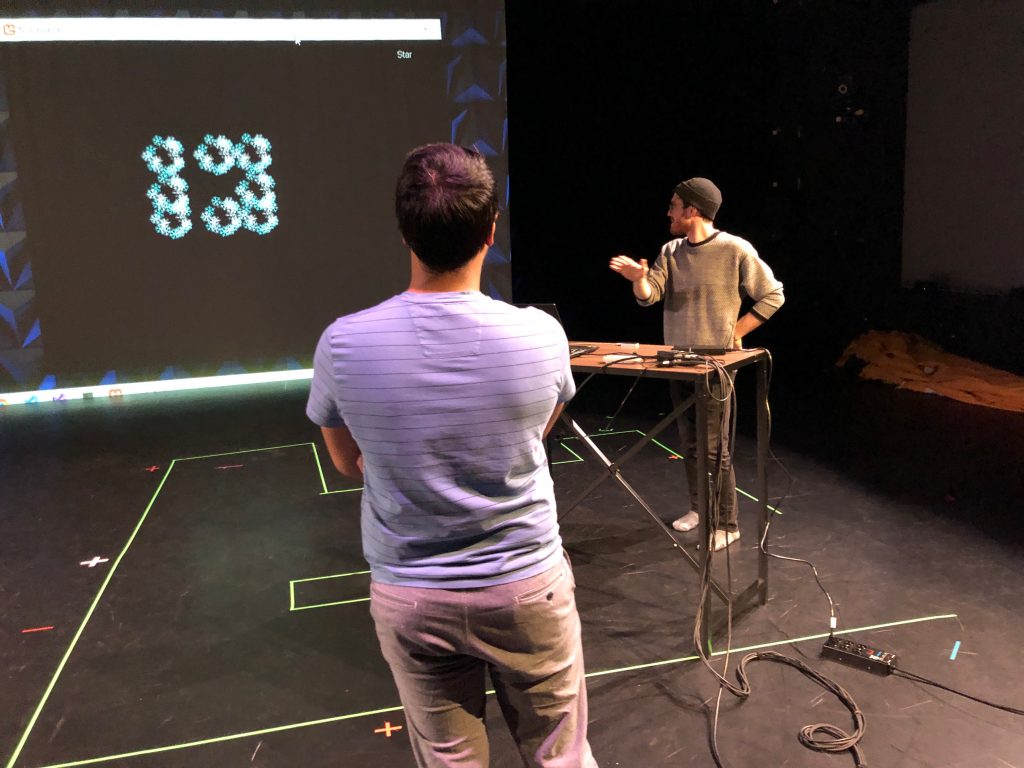

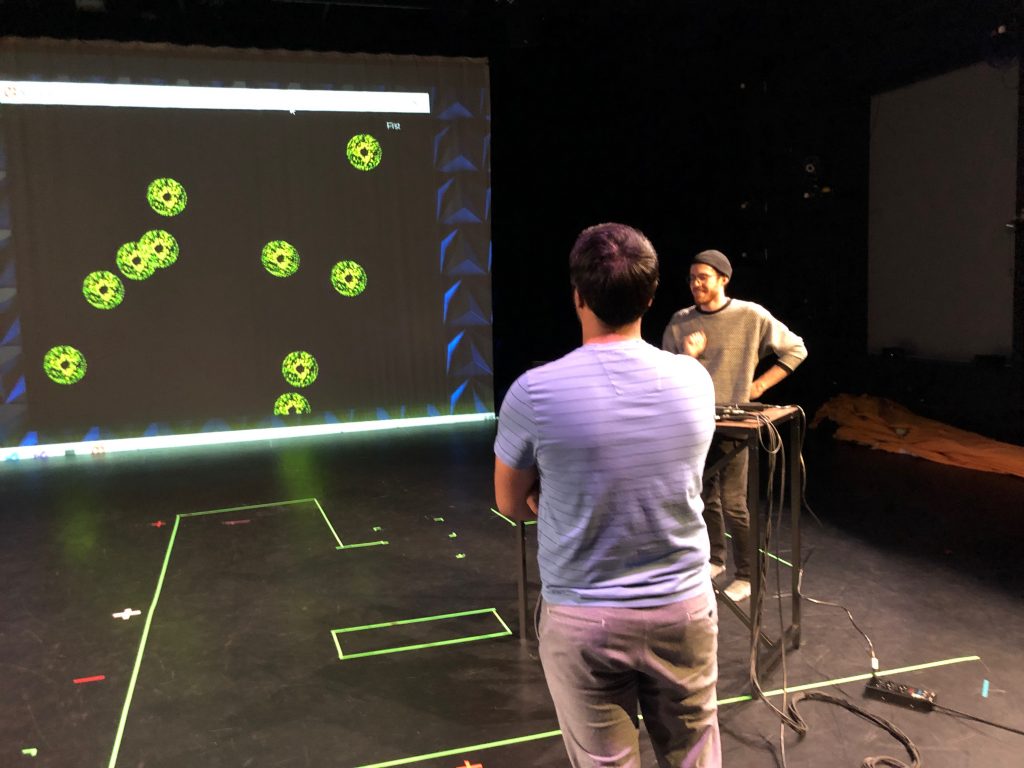

}Cycle 3- One-Handed Ninjutsu

Posted: December 12, 2019 Filed under: Uncategorized

This project was inspired by an animated TV show that I used to watch in middle school: Naruto. In Naruto, the protagonists have special abilities that they activate by making certain hand signals in quick succession.

Almost all of the abilities in the show require two hands. Unfortunately, the Leap Motion controller I used for this project did not perform well with two hands in view, and it would have been extremely difficult for it to distinguish between two-handed signals. Even so, I feel the Leap Motion was still the best tool for hand tracking because of its impressive 60-frames-per-second tracking, which was quite robust with a single hand.


I managed to program 6 different hand signals for the project:

Star, Fist, Stag, Trident, Crescent, and Uno

Star – All five fingers extended

Fist – No fingers extended (like a fist)

Uno – Index finger extended

Trident – Index, Middle, Ring extended

Crescent – Thumb and pinky extended

Stag – Index and pinky extended
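If each frame’s hand pose is packed into a 5-bit code (one bit per extended finger), the six signs above become a small lookup table. This is a hedged plain-JavaScript sketch; the bit ordering (thumb = 1 up to pinky = 16) and the names are assumptions, not taken from the project’s source.

```javascript
// Finger bits (hypothetical ordering): thumb = 1, index = 2, middle = 4,
// ring = 8, pinky = 16. A pose's code is the sum of its extended fingers.
const SIGNS = {
  31: "star",     // all five fingers
  0: "fist",      // no fingers
  2: "uno",       // index
  14: "trident",  // index + middle + ring
  17: "crescent", // thumb + pinky
  18: "stag",     // index + pinky
};

function codeFromFingers(thumb, index, middle, ring, pinky) {
  return (thumb ? 1 : 0) | (index ? 2 : 0) | (middle ? 4 : 0) | (ring ? 8 : 0) | (pinky ? 16 : 0);
}

// Sign name for a code, or null when the pose is not one of the six signs.
function signFromCode(code) {
  return Object.prototype.hasOwnProperty.call(SIGNS, code) ? SIGNS[code] : null;
}
```

Returning null for unknown poses lets the in-between frames, while the hand moves from one sign to the next, be ignored instead of misread.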

The Jutsu that I programmed are as follows:

Fireball Jutsu – star, fist, trident, fist

Ice Storm Jutsu – uno, stag, fist, trident

Lightning Jutsu – stag, crescent, fist, star

Dark Jutsu – trident, fist, stag, uno

Poison Jutsu – uno, trident, crescent, star
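A jutsu is then a sequence of four signs. The hypothetical plain-JavaScript matcher below is one way to recognize them, assuming it receives one recognized sign name (or null) per frame. At 60 frames per second a held sign appears on many consecutive frames, so a sign is only recorded when it differs from the previous recognized sign.

```javascript
const JUTSU = {
  "Fireball Jutsu": ["star", "fist", "trident", "fist"],
  "Ice Storm Jutsu": ["uno", "stag", "fist", "trident"],
  "Lightning Jutsu": ["stag", "crescent", "fist", "star"],
  "Dark Jutsu": ["trident", "fist", "stag", "uno"],
  "Poison Jutsu": ["uno", "trident", "crescent", "star"],
};

// Returns a per-frame function that yields a jutsu name when one completes,
// otherwise null.
function makeJutsuMatcher() {
  let history = []; // last 4 distinct signs (every jutsu above has 4)
  let last = null;  // previous recognized sign, to skip repeated frames
  return function onFrame(sign) {
    if (sign === null || sign === last) return null; // unknown pose or held sign
    last = sign;
    history.push(sign);
    if (history.length > 4) history.shift(); // sliding window of 4
    for (const name of Object.keys(JUTSU)) {
      const seq = JUTSU[name];
      if (history.length === seq.length && seq.every((s, i) => s === history[i])) {
        history = []; // start fresh after a successful jutsu
        return name;
      }
    }
    return null;
  };
}
```

Each frame does at most a handful of comparisons, so the check should not threaten the frame rate.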

The biggest challenges for this project were, first, devising a hand-signal algorithm that was robust enough yet efficient enough not to interfere with the program’s high frame rate.

Second, animations and art. Until you start working on a game, I don’t think you realize how much work goes into animation and how laborious a process it is. It was a big time sink for me, and if I had had more time, I definitely would have improved the animation quality.

Here it is in action: https://dems.asc.ohio-state.edu/wp-content/uploads/2019/12/IMG_8437.mov


You can find the source code here: https://github.com/Harmanjit759/ninjaGame

NOTE: You must have MonoGame, the Leap Motion SDK, and the C++ 2011 redistributable installed on your machine to test the program.

Cycle 3 – Aaron Cochran

Posted: December 12, 2019 Filed under: Uncategorized

For my final project, I achieved blob detection with a projection-mapped response on a grid.

I set out to create a prototype that combines the games checkers and minesweeper. Scott Swearingen often talks about the concepts of public and private information in gameplay. In poker, for example, how much you are betting is public information, while each player has private information about what is in their hand and on the board. These factors influence the players’ decision making.

This prototype is a proof of concept for a game that uses projection mapping to hold “private information” known only to the game, which in turn changes the players’ strategy. Combining checkers and minesweeper added a level of randomness that disrupts strategy: pieces can be randomly “blown up” by the game rather than “captured” by the players.
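The game’s “private information” can be sketched as a set of hidden mines that only the program knows about. This is a hypothetical plain-JavaScript illustration, not the prototype’s code: the board size, the number of mines, and the rule that each mine goes off once are all assumptions. The random source is passed in so the placement can be tested.

```javascript
// Secretly pick `count` distinct cells on a rows x cols board.
// Cells are numbered row * cols + col. `random` returns a number in [0, 1).
function placeMines(count, rows, cols, random) {
  if (count > rows * cols) throw new RangeError("more mines than cells");
  const cells = [];
  for (let r = 0; r < rows; r++) for (let c = 0; c < cols; c++) cells.push(r * cols + c);
  // Partial Fisher-Yates shuffle: the first `count` cells become a random sample.
  for (let i = 0; i < count; i++) {
    const j = i + Math.floor(random() * (cells.length - i));
    [cells[i], cells[j]] = [cells[j], cells[i]];
  }
  return new Set(cells.slice(0, count));
}

// Resolve a piece landing on a cell: the game, not the opponent, removes it.
function landOn(mines, row, col, cols) {
  const key = row * cols + col;
  if (mines.has(key)) {
    mines.delete(key); // assumed: each mine goes off only once
    return "blown up";
  }
  return "safe";
}

// Example: 6 hidden mines on a checkers-sized 8x8 board.
const hidden = placeMines(6, 8, 8, Math.random);
```

Because the mine set never leaves the program, the projection is the only way its effects become visible to the players.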