Final Project: Looking Across, Moving Inside

Posted: December 10, 2017 Filed under: Final Project, Uncategorized

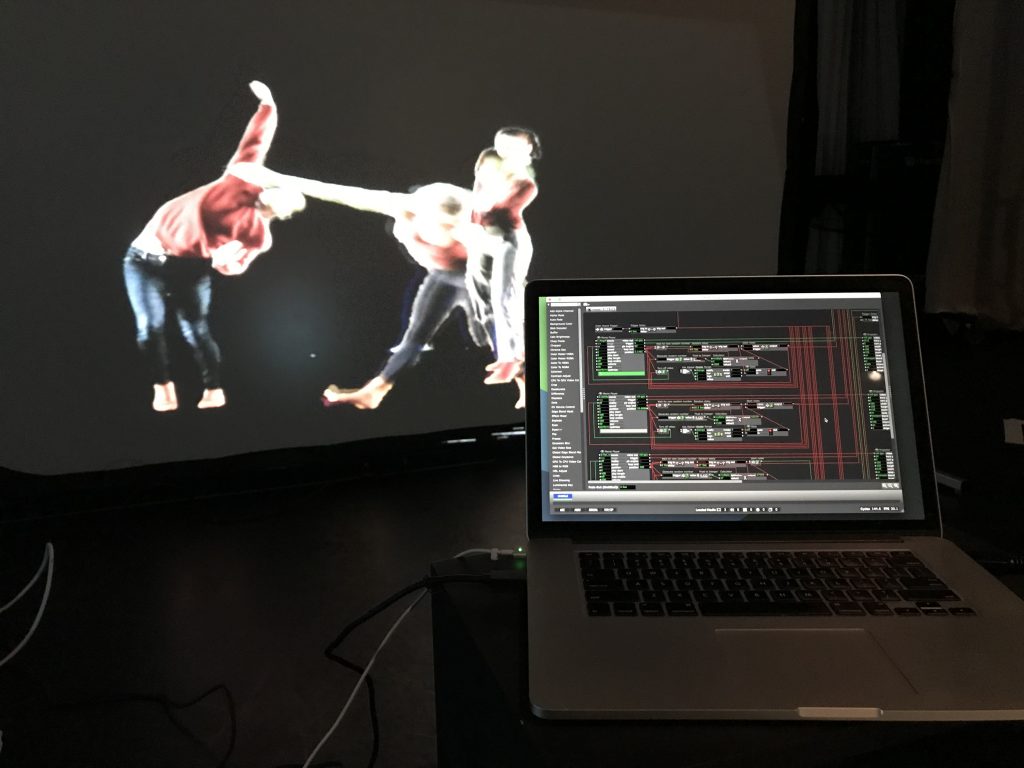

What are different ways that we can experience a performance? Erin Manning suggests that topologies of experience, or relationscapes, reveal the relationships between making, performing, and witnessing. Consider, for example, the relationships among writing a script or score, assembling performers, rehearsing, performing, and engaging the audience. Understanding these associations is an interactive and potentially immersive process that allows one to look across and move inside a work in a way that witnessing alone does not. For this project, I created an immersive and interactive installation that allows an audience member to look across and move inside a dance. This installation considers the potential for reintroducing the dimension of depth to pre-recorded video on a flat screen.

Hardware and Software

The hardware and software required include a large screen that supports rear projection, a digital projector, an Xbox Kinect 2, a flat-panel display with speakers, and two laptops, one running Isadora and the other running PowerPoint. These technologies are organized as follows:

A screen is set up in the center of the space with the projector and one laptop behind it. The Kinect 2 sensor is slipped under the screen, pointing at an approximately six-foot by six-foot space delineated by tape on the floor. The flat-panel screen and second laptop are on a stand next to the screen, angled toward the taped area.

The laptop behind the screen is running the Isadora patch and the second laptop simply displays a PowerPoint slide that instructs the participant to “Step inside the box, move inside the dance.”

Media

Generally, projection in dance performance places the live dancers in front of or behind the projected image. One cannot move to the foreground or background at will without predetermining when and where the live dancer will move. In this installation, the live dancer can move upstage or downstage at will. To achieve this, the pre-recorded dancers must be filmed singly with an alpha channel and then composited together.

I first attempted to rotoscope individual dancers out from their backgrounds in prerecorded dance videos. Despite helpful tools in After Effects designed to speed up this process, each frame of video must still be manually corrected, and when two dancers overlap the process becomes extremely time consuming. One minute of rotoscoped video takes approximately four hours of work. This is an initial test using a dancer rotoscoped from a video shot in a dance studio:

Abandoning that approach, and with the help of Sarah Lawler, I recorded ten dancers moving individually in front of a green screen. This process, while still requiring post-processing in After Effects, was significantly faster. These ten alpha-channeled videos then comprised the pre-recorded media necessary for the work. An audio track was added for background music. Here is an example of a green-screen dancer:

Programming

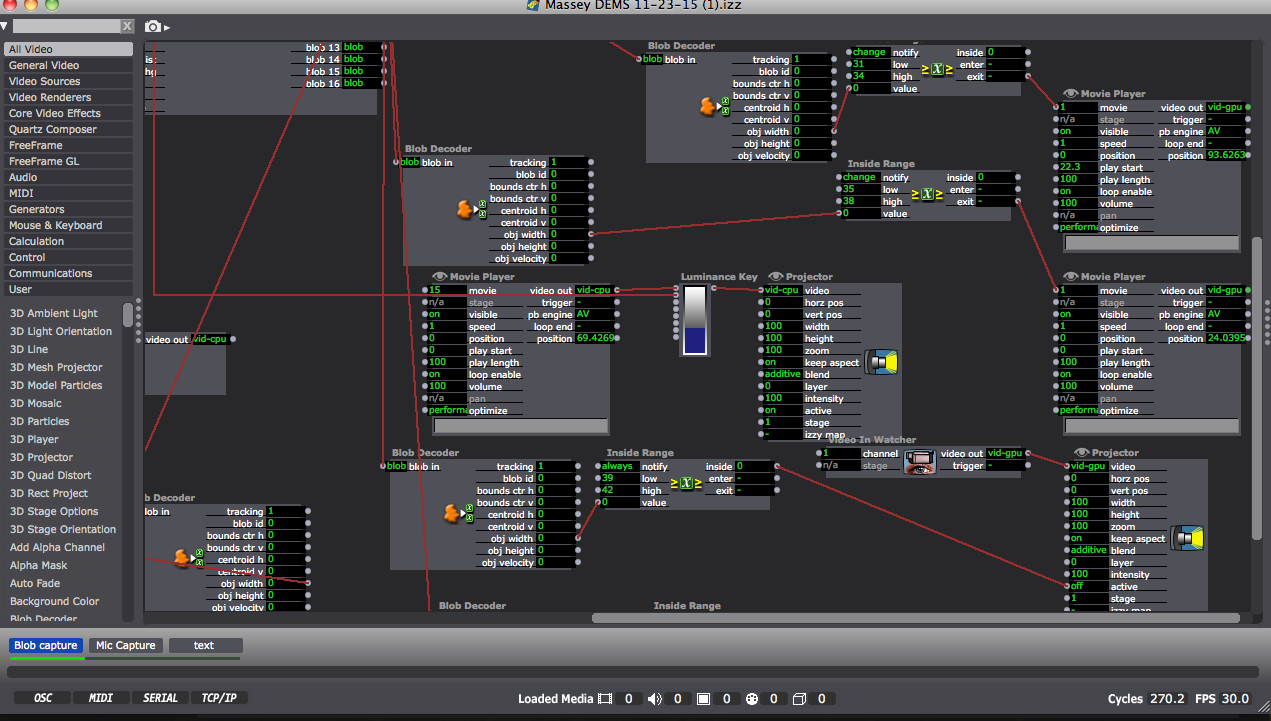

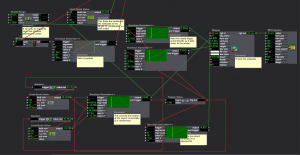

The Isadora patch was divided into three main functions:

Videos

The Projector for each prerecorded video was placed on an odd-numbered layer. When a video ends, a random number of seconds passes before that dancer reenters the stage. A Gate actor prevents more than three dancers from being on stage at once by keeping track of how many videos are currently playing.
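The gating logic can be sketched outside Isadora in a few lines of Python. This is only an illustration of the idea, not the patch itself: the class name, method names, and the 20-second maximum wait are assumptions made for the example, while the limit of three dancers comes from the description above.

```python
import random

MAX_ON_STAGE = 3  # limit described in the post


class StageGate:
    """Counts playing videos and admits a new one only below the limit."""

    def __init__(self, limit=MAX_ON_STAGE):
        self.limit = limit
        self.playing = set()

    def try_enter(self, dancer_id):
        """Start a dancer's video if the stage has room; return True on success."""
        if dancer_id in self.playing or len(self.playing) >= self.limit:
            return False
        self.playing.add(dancer_id)
        return True

    def video_ended(self, dancer_id, rng=random, max_wait=20.0):
        """Mark a video as finished and return a random delay in seconds.

        max_wait is a hypothetical value chosen for this sketch.
        """
        self.playing.discard(dancer_id)
        return rng.uniform(0.0, max_wait)
```

A caller would wait for the returned delay and then call `try_enter` again, retrying later if the stage is still full.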

The Live Dancer

Brightness data was captured from the Kinect 2 (upgraded from the original Kinect for greater resolution and depth of field) via Syphon and fed through several filters in order to isolate the body of the participant.

Calculating Depth

Isadora logic was set up such that as the participant moved forward (increased brightness), the layer number on which they were projected increased by even numbers. As they moved backwards, the layer number decreased. In other words, the live dancer might be on layer 2, behind the prerecorded dancers on layers 3, 5, 7, and 9. As the live dancer moves forward to layer 6, they are now in front of the prerecorded dancers on layers 3 and 5, but behind those on layers 7 and 9.
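As a rough sketch of that mapping, the following Python turns a brightness reading into an even layer number and reports which prerecorded layers end up behind the live dancer. The 0 to 100 brightness range, the function names, and the rounding scheme are assumptions for illustration; the actual patch may scale its values differently.

```python
def live_layer(brightness, num_recorded=10, max_brightness=100.0):
    """Map a brightness reading to an even layer number.

    Prerecorded dancers occupy odd layers 1, 3, ..., so the live dancer
    is placed on one of the even layers 0, 2, ..., 2 * num_recorded.
    Brighter (closer) readings give higher layers.
    """
    fraction = min(max(brightness / max_brightness, 0.0), 1.0)
    slot = round(fraction * num_recorded)
    return 2 * slot


def behind_live_dancer(live, recorded_layers):
    """Return the prerecorded layers that the live dancer appears in front of."""
    return [layer for layer in recorded_layers if layer < live]
```

With prerecorded dancers on layers 3, 5, 7, and 9, `behind_live_dancer(6, [3, 5, 7, 9])` gives `[3, 5]`, matching the example in the text.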

Download the patch here: https://www.dropbox.com/s/6anzklc0z80k7z4/Depth%20Study-5-KinectV2.izz?dl=0

In Practice

Watching people interact with the installation was extremely satisfying. There is a moment of “oh!” when they realize that they can move in and around the dancers on the screen. People experimented with jumping forward and back, getting low to the floor, mimicking the movement of the dancers, leaving the stage and coming back on, and more. Here are some examples of people interacting with the dancers:

Devising Experiential Media from Benny Simon on Vimeo.

Future Questions

Is it possible for the live participant to be on more than one layer at a time? In other words, could they curve their body around a prerecorded dancer’s body? This would require a more complex method of capturing movement in real-time than the Kinect can provide.

What else can happen in a virtual environment when dancers move in and around each other? What configurations of movement can trigger effects or behaviors that are not possible in a physical world?

Final Project – here4u

Posted: December 9, 2017 Filed under: Calder White, Final Project

There’s something about opening myself up and exposing vulnerability that is important to my creative work and to my life. In laying myself bare, I hope that it encourages others to do the same and maybe we connect in the process. I tell people about me in the hopes that they’ll tell me about them.

You can read in depth about where I started with this project in this post, but to put it as concisely as I can: I was working to tell a non-linear narrative about a platonic, long-distance relationship between a mother and son. Using journal entries, film photographs, newspapers, daily planners, notes to self, and random memorabilia from myself and my mother, I scattered QR codes throughout an installation of two desks with a trail of printed text messages between them. In order to enhance the interactivity of my project, the QR codes led to voicemails and text messages between a mother and a son, digital photos showing silhouetted figures and miscellaneous homes, and footage from anti-Trump protests. I worked to emphasize feelings of distance, loneliness, belonging, and relationship that I have experienced and continue to experience during my time in Columbus.

This final project, here4u, really took it out of me. Working from such a personal place was in some ways freeing, but constantly stressful. I spent a lot of time with this project worrying about whether people would connect with the work, whether they would believe the authenticity of it, and whether I was oversharing to the point of discomfort (for both the audience and for myself). The worry was balanced equally with excitement and anticipation about how it would all be received. To amplify some rawness that I was only beginning to develop after the first showing, I generated much more digital material and matched that with an increase in tangible items in the space. Laying out journals and planners that I carry with me every day (some even including notes and plans for the work), screenshotting and printing out text conversations between my mom and me to create a trail between the two desks, and even brewing the tea that reminds me of home were my ways of leaning into opening myself up for the audience. Besides the lights that Oded designed for me, I wanted to strip this installation of all extraneous theatricality in order to get at the personal nature of the work.

The “son” space

After some recommendations from Alex after the first cycle presentation, I began to think more choreographically about this project, shifting my mindset around it from the creation of a static installation into the curation of a museum of moments to be experienced. Between the first cycle and the final showing, I invested much more time and effort into crafting the viewer’s journey through the space. Oded’s lighting certainly helped this, and with it I thought critically about which QR codes should be placed where in order to enhance a tangible object, a written note, etc. This felt like mental prototyping and it helped me to conceptualize what I wanted for my final product.

I’m thankful for this final project (and this course) for giving me an outlet to investigate the concepts I’m researching in my dance-making through other disciplines. Taking themes I work with in the dance studio and translating them into photography, audio/visual art, and digital media design has given me a new perspective on the topics I am diving into for my senior project and beyond. DEMS was a real treat, and I’m glad to have been a part of it.

Cycle 1 Presentation – here4u

Posted: November 28, 2017 Filed under: Calder White, Final Project, Uncategorized

For my final project in DEMS, titled here4u, I’m working to tell a non-linear narrative about a long-distance platonic relationship between a mother and a son. The story is told mainly through text messages, voicemails, journal entries, and photographs (both physical and digital), unlocked via QR codes scattered throughout the installation. My inspiration comes from an extremely personal place, as I have been in this very situation for the past four years of my undergraduate career, and my drive for this project stems from an artistic desire for transparency between creator/performer and the audience. Through this project, I am opening myself up to the audience (using actual voicemails and texts between my mother and me and actual entries from my journal) to tell a story about distance, loneliness, belonging, misinformation, struggle, and coming of age. I am doing all of this in the hopes that my “baring it all” can open a dialogue about connection and relation between myself and the people experiencing this installation, as well as between the visitors to the space.

In the first cycle presentation, I organized two desk spaces diagonal to each other in the Motion Lab, one being the “mother” space and the other being the “son” space. The mother space has various artifacts that would be found on my mom’s desk at home: note pads with hand-written reminders, prayer cards and rosaries from my Baba, and a film photograph from the cottage that my mom and I used to live in. Spread within the artifacts are QR codes that include worried voicemails from my mom about suspicious activity on campus, texts from her to me asking where I’ve been, and links to screen recordings of our longer text conversations.

Across the room stands the son space, a much less organized desk with worn and filled daily planners, books dealing with citizenship theory, journals open to personal entries, and crumpled to-do lists. The QR codes here lead to first-person videos of protests, an online gallery of silhouetted selfies, and voicemails apologizing for taking so long to respond to my mom.

In the creation of this project, I am dredging up a wealth of emotions from my time in Columbus and pinning it to a presentation that I hope won’t feel like a performance. That being said, I realize that in its presentational nature, there are certain theatrical elements that need to be considered and addressed. With the first cycle showing, I learned very quickly how individual an experience this is. In order to get the most out of the QR codes, particularly the voicemails, the audience members must wear headphones, which immediately isolates them in their own world and deters connection with other audience members during their experience. As an attempt at creating a feeling of loneliness, I would say this was a success.

I received a wealth of feedback on which aspects of both spaces worked and which didn’t, and everyone agreed that the most powerful aspects of the storytelling were the voicemails and texts, because of the emotion heard in the voice. At the suggestion of Ashlee Daniels Taylor, I will be working to generally increase the amount of material in the space so that there is more to look through and find in the QR codes. This works towards another one of my goals with the narrative, which is to engender the sense of a story without the need for the audience member to find every single piece of the puzzle, amplifying individual experience within the installation.

For my second cycle, I have been working towards generating more material to be found and directing the experience more so than in my first presentation. Without any sort of guidance using vocal, lighting, or other cues, the first presentation invited viewers to find their own way through the installation. Although it was fun for me to watch and hear how different people pieced together the experience for themselves, this led to awkward successive QR codes (being too similar or repetitive), a desire for more direction, and an undefined ending to the experience. I will be working with some theatrical lighting to guide the viewers along a more predetermined path through the space.

The emotional feedback that I received after the first presentation has inspired me to push forward with this project’s main intentions and to develop this experience as honestly and authentically as possible. The tears and personal relations shared in our post-presentation discussion proved to me the value in making oneself vulnerable to one’s audience, and I intend to lean into this for the second cycle presentation.

Virtual Reality Storytelling & Mixed Reality

Posted: December 18, 2016 Filed under: Final Project

For my final project, I jumped into working with VR to begin creating an interactive narrative. The title of this experience is “Somniat,” and it aims to reawaken feelings of childhood innocence and imagination in its users.

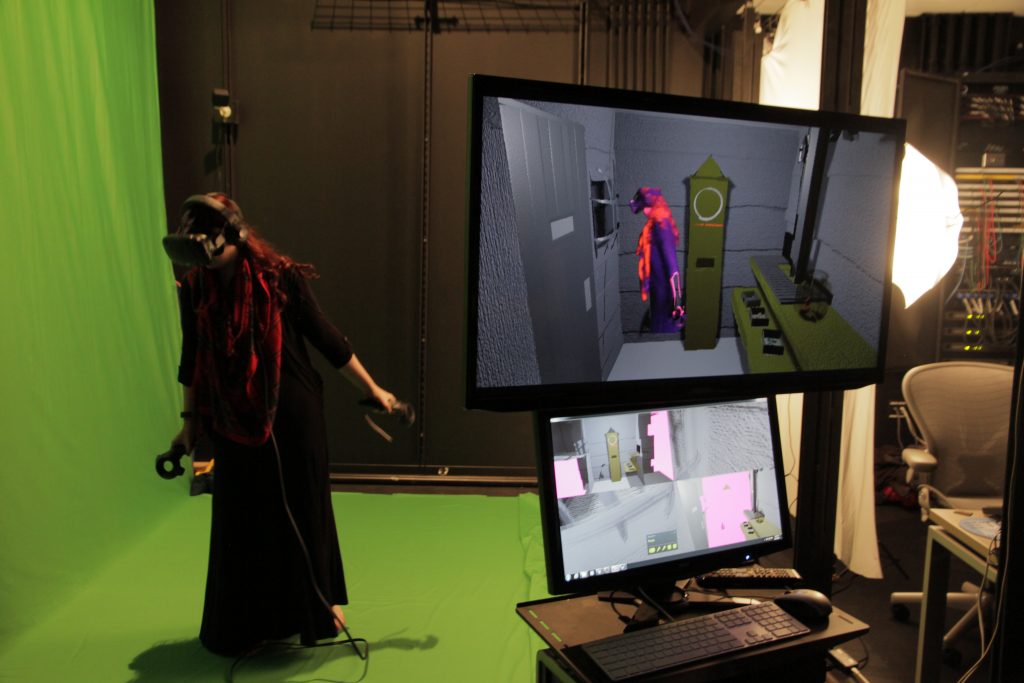

This project was built up across three separate ACCAD courses: “Concept Development for Time-Based Media,” “Game Art and Design I,” and “Devising Experiential Media Systems.” In Concept Development, I created a storyboard, which eventually became an animatic of the story I hoped to create. Then in Game Design, I hashed out all the interactive objectives, challenges, and user cues, building them into a functional virtual reality experience. And finally, in DEMS, I brought all previous ideas together and built the entire experience, both inside and out of VR, into a complete presentation! A large focus of this presentation was the mixed reality greenscreen. Mixed reality is where you record the user in the virtual world as well as the real world, and then blend both realities together! An example of this mixed reality from my project can be seen below:

I had a great time working on this project. I’d have to say my biggest takeaway from our class has been to start thinking of everything that goes into a user’s experience: from first hearing about the content, to using it for themselves, to telling others about it after. My perspective on creating experiential content has been widened, and I look forward to using this view on work in the future!

‘You make me feel like…’ Taylor – Cycle 3, Claire Broke it!

Posted: December 14, 2016 Filed under: Final Project, Isadora, Taylor

towards Interactive Installation,

the name of my patch: ‘You make me feel like…’

Okay, I have determined how/why Claire broke it and what I could have done to fix this problem. And I am kidding when I say she broke it. I think she and Peter had a lot of fun, which is the point!

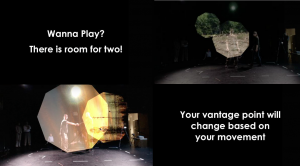

So, all in all, I think everyone that participated with my system had a good time with another person, moved around a bit, then watched themselves and most got even more excited by that! I would say that in these ways the performances of my patch were very successful, but I still see areas of the system that I could fine tune to make it more robust.

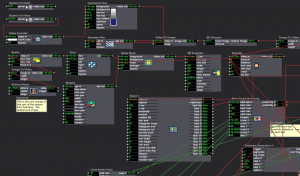

My system: Two nine-sided shapes tracked two participants through space, showing off the images/videos that are alpha masked onto the shapes as the participants move. It gives the appearance that you are peering through some frame or scope, and you can explore your vantage point based on your movement. Once participants are moving around, they are instructed to connect to see more of the images and to come closer to see a new perspective. This last cue uses a brightness trigger to switch to projectors with live feed and video-delayed footage that plays back the performers’ movement choices, allowing them to watch themselves watching, or to watch themselves dancing with their previous selves.

The day before we presented, with Alex’s guidance, I decided to switch to the Kinect in the grid and do my tracking from the top down for an interaction that was more 1:1. Unfortunately, this Kinect is not hung center/center of the space, but I set everything else center/center of the space and used Luminance Keying and Crop to match the space to what the Kinect saw. However, I based the switch from shapes following you to live feed on brightness. When the depth was tracked at the side furthest from the Kinect, the color of the participant was darker (a video inverter was used), and the brightness window that triggered the switch was already narrow. To fix this, I think shifting the position of the Kinect or changing how the space was laid out could have helped. Also, adding a third participant to the mix could have made the brightness window greater and increased the trigger range (and made it even more fun), so that the far side would no longer be a problem.

I wonder if I should have left the text running for longer bouts of time, but coming in quicker succession? I kept cutting the time back thinking people would read it straight away, but it took people longer to pay attention to everything. I think this is because they are put on display a bit as performers and they are trying to read and decipher/remember instructions.

The bout that ended up working the best, or going in order all the way through as planned, was the third go-round. I’ll post a video of this one on my blog, which I can link to once I can get things loaded. This tells me my system needs to accommodate more movement, because there was a wide range of movement between the performers (maybe with more space, or more clearly defined space). It also needs to account for the time taken exploring that I mentioned above.

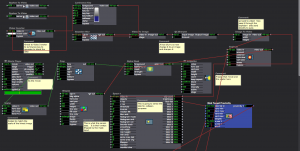

Study in Movement – Final Project

Posted: December 14, 2016 Filed under: Final Project, James MacDonald

For my final project, I wanted to create an installation that detected people moving in a space and used that information to compose music in real time. I also wanted to create a work that was not overly dependent on the resources of the Motion Lab; I wanted to be able to take my work and present it in other environments. I knew what I would need for this project: a camera of sorts, a computer, a projector, and a sound system. I had messed around in the past with a real-time composition library by Karlheinz Essl, and decided to explore it once again. After a few hours of experimenting with the modules in his library, I combined two of them (Super-rhythm and Scale Changer) for this work. I ended up deciding to use two Kinect cameras (model 1414) as opposed to a higher resolution video camera, as the Kinect is light-invariant. One Kinect did not cover enough of the room, so I decided to use two cameras. To capture the data of movement in the space I used a piece of software called TSPS. For a while, I was planning on using only one computer, and had developed a method of using both Kinect cameras with the multi-camera version of TSPS (one camera was being read directly by TSPS, and the other was sent into TSPS via Syphon by an application created in Max/MSP).

This is where I began running into some mild problems. Because of the audio interface I was using (MOTU mk3), the largest buffer size I was allowed to use was 1024. This became an issue as my Syphon application, created with Max, utilized a large amount of my CPU, using even more than the Max patch, Ableton, TSPS, or Jack. In the first two cycle performances, this led to CPU-overload clicks and pops, so I had to explore other options.

I decided that I should use another computer to read the kinect images. I also realized this would be necessary as I wanted to have two different projections. I placed TSPS on the Mini Mac I wanted to use, along with a Max patch to receive OSC messages from my MacBook to create the visual I wanted to display on the top-down projector. This is where my problems began.

At first, I tried sending messages between the two computers over OSC by creating a network between the two computers, connected by ethernet. I had done this before, and a lot of sources stated this was possible to do. However, this time, for reasons beyond my understanding, I was only able to send information from one machine to the other, but not to and from both of them. I then explored creating an ad-hoc wireless network, which also failed. Lastly, I tried connecting to the Netgear router over wi-fi in the Motion Lab, which also proved unsuccessful.
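For readers unfamiliar with OSC, here is a minimal sketch of what one message looks like on the wire, written in Python with only the standard library. It is not the code used in this project (the work was done in TSPS and Max/MSP); the address `/person/x`, the host, and the port in the usage note are hypothetical. Since OSC is typically sent over UDP, each direction needs its own listener, so two-way traffic means a receiving port must be open and reachable on both machines.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(host, port, address, *floats):
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *floats), (host, port))
```

A call such as `send_osc("192.168.1.20", 9000, "/person/x", 0.5)` would send one position value to a listener on the other machine, assuming that address and port are where it is listening.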

This led me to one last option: I needed to network the two computers together using MIDI. I had a MIDI to USB interface, and decided I would connect it to the MIDI output on the back of the audio interface. This is when I learned that the MOTU interface does not have MIDI ports. Thankfully, I was able to borrow another one from the Motion Lab. I was able to add some of the real-time composition modules to the Max patch on the Mini Mac, so that TSPS on the Mini Mac would generate the MIDI information that would be sent to my MacBook, where the instruments receiving the MIDI data were hosted. This was apparently easier said than done. I was unable to set my USB-MIDI interface as the default MIDI output in the Max patch on the Mini Mac, then ran into an issue where something would freeze up the MIDI output from the patch. Then, half an hour prior to the performance on Friday, my main Max patch on my MacBook completely froze; it was as if I had paused all of the data processing in Max (which, while possible, is seldom done). This Max patch crashed, so I reloaded it, then reopened the one on the Mini Mac and adjusted some settings for MIDI CCs that I thought were causing errors. Ten minutes after that, we opened the doors and everything worked fine without errors for two and a half hours straight.

Here is a simple flowchart of the technology utilized for the work:

MacBook Pro: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

Mini Mac: Kinect -> TSPS (via OSC) -> Max/MSP (via MIDI) -> MacBook Pro Max Patch -> Ableton Live (audio via Jack) -> Max/MSP -> Audio/Visual output.

When we first opened the doors, people walked across the room, heard sound as they walked in front of the Kinects, and were caught off guard; they then stood still out of range of the Kinects, as they weren’t sure what had just happened. I explained the nature of the work to them, and they stood there for another few minutes contemplating whether or not to run in front of the cameras, and who would do so first. After a while, they all ended up in front of the cameras, and I began explaining more of the technical aspects of the work to a faculty member.

One of the things I was asked about a lot was the staff paper on the floor where the top-down projector was displaying a visual. Some people at first thought it was a maze, or that it would cause a specific effect. I explained to a few people that the paper was there because the black floor of the Motion Lab sucks up a lot of the light from the projector, and the white paper helped make the floor visuals stand out. In a future version of this work, I think it would be interesting to connect some of the staff paper to sensors (maybe pressure sensors or capacitive touch sensors) to trigger fixed MIDI files. Several people also were curious about what the music on the floor projection represented, as the main projector had staves with instrument names and music that was automatically transcribed after being heard. As I’ve spent most of my academic life in music, I sometimes forget that people don’t understand concepts like partial-tracking analysis, and since apparently the audio for this effect wasn’t working, it was difficult for me to effectively get across the idea of what was happening.

During the second half of the performance, I spoke with some other people about the work, and they were much more eager to jump in and start running around, and even experimented with freezing in place to see if the system would respond. They spent several minutes running around in the space, trying to see if they could get any of the instruments to play beyond just the piano, violin, and flute. In doing so, they heard bassoon and tuba once or twice. One person asked me why they were seeing so many impossibly low notes transcribed for the violin, which allowed me to explain the concept of key-switching in sample libraries (key-switching is when you change the playing technique of the instrument by playing notes that lie outside that instrument’s range).
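To make the key-switching point concrete, here is a small Python sketch that separates sounding violin notes from likely key-switch notes in a list of MIDI note numbers. It assumes the key switches sit below the instrument's range, which is common but not universal across sample libraries; the function names are made up for the example. The violin's lowest note is its open G string, G3, which is MIDI note 55 when middle C is note 60.

```python
VIOLIN_LOWEST_MIDI = 55  # G3, the violin's open G string (middle C = 60)

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_name(midi_note):
    """Return a name such as 'G3' for a MIDI note number (middle C = C4 = 60)."""
    octave = midi_note // 12 - 1
    return f"{NOTE_NAMES[midi_note % 12]}{octave}"


def split_key_switches(midi_notes, lowest_playable=VIOLIN_LOWEST_MIDI):
    """Split notes into (sounding, key_switches).

    Assumption: anything below the instrument's lowest pitch is a key switch.
    """
    sounding = [n for n in midi_notes if n >= lowest_playable]
    switches = [n for n in midi_notes if n < lowest_playable]
    return sounding, switches
```

A transcription step that filtered out the second list before drawing the staff would avoid showing those impossibly low notes.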

One reaction I received from Ashley was that I should set up this system for children to play with, perhaps with a modification of the visuals (showing a picture of what instrument is playing, for example), and my fiance, who works with children, overheard this and agreed. I have never worked with children before, but I agree that this would be interesting to try and I think that children would probably enjoy this system.

For any future performances of this work, I will probably alter some aspects (such as projections and things that didn’t work) to work with the space it would be featured in. I plan on submitting this work to various music conferences as an installation, but I would also like to explore showing this work in more of a flash-mob context. I’m unsure when or where I would do it, but I think it would be interesting.

Here are some images from working on this piece. I’m not sure why WordPress decided it needed to rotate them.

And here are some videos that exceed the WordPress file size limit:

Third and Last time Around with Tea

Posted: December 13, 2016 Filed under: Final Project, Robin Ediger-Seto

For my final project I wanted to take the working elements of the two earlier projects and simplify them whilst creating a more complex work. The two earlier projects contained tea in them. During deeper explorations of my relationship with tea, I realized that it’s a symbol that is tied to many facets of my identity. It is an important part of almost all the cultures I was brought up in: Indian, American, Japanese, and Iranian. This brought me back to the key premise of this journey, the exploration of time in story. I am very much a product of the times and would not exist anytime before this. I was also interested in the way that we tell the story that got us here, and in how, as audience members, we are able to imagine alternate pasts while being given facts. From these ponderings I came up with a new score.

In the final performance I would make tea while explaining what I believe to be true about tea. To get this material I free wrote a list of every fact that I know. I then measured how long it took me to make tea and recorded myself reading the list. This portion would be filmed in chunks and played back in eight tiles, much like the last performance. During the brewing of the tea I talked about the inspiration for the work. I did this into a microphone and made a patch that listened to the decibels I was producing and produced a light whenever they got loud enough. My meanderings on the microphone ended with me presenting the tiles, and then I poured tea for people.
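The loudness-triggered light can be sketched roughly as follows. This is a generic illustration rather than the patch itself: it assumes audio samples normalized to the range -1.0 to 1.0, and the -20 dB threshold and function names are arbitrary choices for the example.

```python
import math


def level_db(samples):
    """Return the RMS level of a block of samples in dB relative to full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def light_on(samples, threshold_db=-20.0):
    """Return True when the block is loud enough to turn the light on."""
    return level_db(samples) >= threshold_db
```

Calling `light_on` on each incoming block of microphone samples gives an on/off signal that follows the speaker's volume.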

I did not get a lot of feedback after the show, but I did get a lot while rehearsing it. For instance, I was told that during the performance I needed to assert my role during the making of the tea by looking at the audience, and that I needed to put a little more attention into the composition of my set. These pieces of advice really helped the final performance, and I wish I had made better use of my lab time as a space for rehearsals instead of doing so much work at home.

My constant worry through the process of the three performances was that I was not keeping in the spirit of the class by making simple systems. During the final performance I realized that what I was working on was highly applicable to the class. I was creating systems where the user could express themselves and have complete control over the performance. I was designing a cockpit for a performance with a singular user, me. The designers, stage manager, and performer only had to be one person. The triggers for actions played into the narrative of the work. In the second performance cycle, the cup of tea attached to the Makey Makey integrated a prop into the system that controlled the performance. In the final performance I ditched the cup, instead opting for a more covert controlling method. I placed two aluminum foil strips on the table and attached the Makey Makey so that I could covertly control the system by simply touching both strips. The other cue was controlled by a brightness calculator that was triggered by turning off the lamp after I made the tea.

This performance will not be a final product, and I will continue to work on it for my senior project, to be performed in March at MINT gallery, here in Columbus. I plan on integrating the Kinect that I just bought to create motion triggers, so that even less hardware will be seen on stage. I believe that I’m going in a good direction with the technological aspect of the work and now need to focus on the content. This class allowed me to think about systems in theater and ways to give the performer more freedom in the performance. The entire theater process could benefit from a holistic design process, where even the means of turning on a light must be taken into consideration.

Final Project

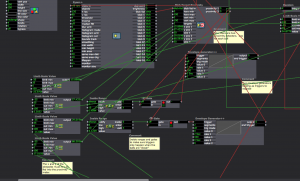

Posted: December 17, 2015 Filed under: Final Project, Josh Poston

Well, I had a huge final patch and, because of the resource limitations of the MOLA, finished the product on my own computer, which then crashed, so goodbye to that patch. Here is something close to what my final patch was. Enjoy.

This is the opening scene. It is the welcome message and instructions.

This is scene 2, the Snowboard scene. It is triggered by a timer at the end of the welcome screen. The first picture shows the Kinect input and the movie/mask making, along with the proximity detector and exploder actor.

This image is of the obstacle generation and the detection system.

This is the static background image, along with the Kinect inputs and the double proximity detector for dodgeball.

This image is of one of the dodgeball actors. The other is exactly the same, just with a slightly different shape color.

This image shows the four inputs necessary to track the proximity of the dodgeballs (obstacles) to the avatar when both x and y are variables of object movement. Notice that because there are two balls, there are two gates and two triggers.
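Since the original patch was lost, the four-input proximity check can be sketched in Python as a rough stand-in. The names `Point`, `is_hit`, and `hits`, and the tolerance values, are assumptions made for illustration; they are not Isadora actors or values from the patch.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Point:
    x: float
    y: float


def is_hit(avatar: Point, ball: Point, max_dx: float, max_dy: float) -> bool:
    """Four inputs (avatar x/y, ball x/y): a hit needs both axes to be close."""
    return abs(avatar.x - ball.x) <= max_dx and abs(avatar.y - ball.y) <= max_dy


def hits(avatar: Point, balls: List[Point],
         max_dx: float = 5.0, max_dy: float = 5.0) -> List[bool]:
    """One independent check (gate and trigger) per ball."""
    return [is_hit(avatar, ball, max_dx, max_dy) for ball in balls]


avatar = Point(50.0, 50.0)
balls = [Point(52.0, 48.0), Point(90.0, 50.0)]  # first ball is close, second is not
print(hits(avatar, balls))
```

Each ball gets its own check, which mirrors why two balls require two gates and two triggers: the avatar can be hit by either one independently.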

How To Make An Imaginary Friend

Posted: December 13, 2015 Filed under: Anna Brown Massey, Final Project

An audience of six walk into a room. They crowd the door. Observing their attachment to remaining where they’ve arrived, I am concerned the lights are set too dark, indicating they should remain where they are, safe by a wall, when my project will soon require the audience to station themselves within the Kinect sensor zone at the room’s center. I lift the lights in the space, both on them and into the Motion Lab. Alex introduces the concepts behind the course, and welcomes me to introduce myself and my project.

In this prototype, I am interested in extracting my live self from the system so that I may see what interaction develops without verbal directives. I say as much, which means I say little, but worried they are still stuck by the entrance (that happens to host a table of donuts), I say something to the effect of “Come into the space. Get into the living room, and escape the party you think is happening by the kitchen.” Alex says a few more words about self-running systems–perhaps he is concerned I have left them too undirected–and I return to station myself behind the console. Our six audience members, now transformed into participants, walk into the space.

I designed How to make an imaginary friend as a schema to compel audience members, now rendered participants, to respond interactively through touching each other. Having achieved a learned response to the system and gathered themselves in a large single group because of the cumulative audio and visual rewards, the system would independently switch over to a live video feed as output, and their own audio dynamics as input, inspiring them to experiment with dynamic sound.

System as Descriptive Flow Chart

Front projection and Kinect are on, microphone and video camera are set on a podium, and Anna triggers Isadora to start scene.

> Audience enters MOLA

“Enter the action zone” is projected on the upstage giant scrim

> Intrigued and reading a command, they walk through the space until “Enter the action zone” disappears, indicating they have entered.

Further indication of being where there is opportunity for an event is the appearance of each individual’s outline of their body projected on the blank scrim. Within the contours of their projected self appears that slice of a movie, unmasked within their body’s appearance on the scrim when they are standing within the action zone.

> Inspired to see more of the movie, they realize that if they touch or overlap their bodies in space, they will be able to see more of the movie.

In attempts at overlapping, they touch, and Isadora reads a defined bigger blob, and sets off a sound.

> Intrigued by this sound trigger, more people overlap or touch simultaneously, triggering more sounds.

> They sense that as the group grows, different sounds will come out, and they continue to connect more.

Reaching the greatest size possible, the screen is suddenly triggered away from the projected appearance, and instead projects a live video feed.

They exclaim audibly, and notice that the live feed video projection changes.

> The louder they are, the more it zooms into a projection of themselves.

They come to a natural ending of seeing the next point of attention, and I respond to any questions.
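The last interactive step, where louder sound produces a tighter zoom, amounts to a clamped linear mapping. A minimal sketch in Python follows; the function name `zoom_for_level`, the normalized 0.0 to 1.0 input level, and the 100 to 300 percent zoom range are assumptions for the example, not values taken from the patch.

```python
def zoom_for_level(level: float, min_zoom: float = 100.0, max_zoom: float = 300.0) -> float:
    """Map a normalized sound level (0.0 quiet, 1.0 loud) to a zoom percentage.

    Levels outside 0.0-1.0 are clamped so a sudden shout cannot push the
    zoom past the chosen maximum. All ranges here are hypothetical.
    """
    clamped = max(0.0, min(1.0, level))
    return min_zoom + clamped * (max_zoom - min_zoom)


for level in (0.0, 0.5, 1.0, 2.0):
    print(level, zoom_for_level(level))
```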

Analysis of Prototype Experiment 12/11/15

As I worked over the last number of weeks I recognized that shaping an experiential media system renders the designer a Director of Audience. I newly acknowledged once the participants were in the MOLA and responding to my design, I had become a Catalyzer of Decisions. And I had a social agenda.

There is something about the simple phrase “I want people to touch each other nicely” that seems correct, but also sounds reductive–and creepily problematic. I sought to trigger people to move and even touch strangers without verbal or text direction. My system worked in this capacity. I achieved my main goal, but the cumulative triggers and experiences were limited by an all-MOLA sound system failure after the first few minutes. The triggered output of sound-as-reward-for-touch worked only for the first few minutes, and then the participants were left with a what-next sensibility sans sound. Without a working sound system, the only feedback was the further discovery of unmasking chunks of the film.

Because my further triggers were unavailable, I got up and joined them. We talked as we moved, and that itself interested me–they wanted to continue to experiment with their avatars on screen despite a lack of audio trigger and likely a growing sense that they may have run out of triggers. Had the masked movie been more engaging (this one was of a looped train rounding a station), they might have been further engaged even without the audio triggers. In developing this work, I had considered designing a system in which there was no audio output, but instead the movement of the participants would trigger changes in the film–to fast forward, stop, alter the image. This might be a later project, and would be based in the Kinect patch and dimension data. Further questions arise: What does a human body do in response to their projected self? What is the poetic nature of space? How does the nature of looking at a screen affect the experience and action of touch?

Plans for Following Iteration

- “Action zone” text: need to dial down sensitivity so that it appears only when all objects are outside of the Kinect sensor area.

- Not have the sound system for the MOLA fail, or if this happens again, pause the action, set up a stopgap of a set of portable speakers to attach to the laptop running Isadora.

- Have a group of people with which to experiment to more closely set the dimensions of the “objects” so that the data of their touch sets off a more precisely linked sound.

- Imagine a different movie and related sound score.

- Consider an opening/continuous soundtrack “background” as scene-setting material.

- Consider the integrative relationship between the two “scenes”: create a satisfying narrative relating the projected film/touch experience to the shift to the audio input into projected screen.

- Relocate the podium with microphone and videocamera to the center front of the action zone.

- Examine why the larger dimension of the group did not fire the trigger in the user actor that switches to the microphone input and live video feed output.

- Consider: what was the impetus relationship between the audio output and the projected images? Did participants touch and overlap out of desire to see their bodies unmask the film, or were they relating to the sound trigger that followed their movement? Should these two triggers be isolated, or integrated in a greater narrative?

Video available here: https://vimeo.com/abmassey/imaginaryfriend

Password: imaginary

All photos above by Alex Oliszewski.

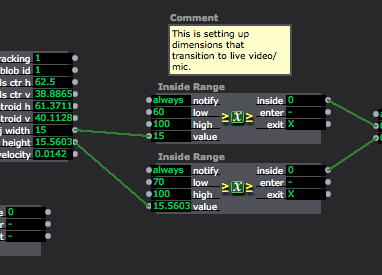

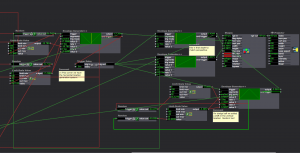

Software Screen Shots

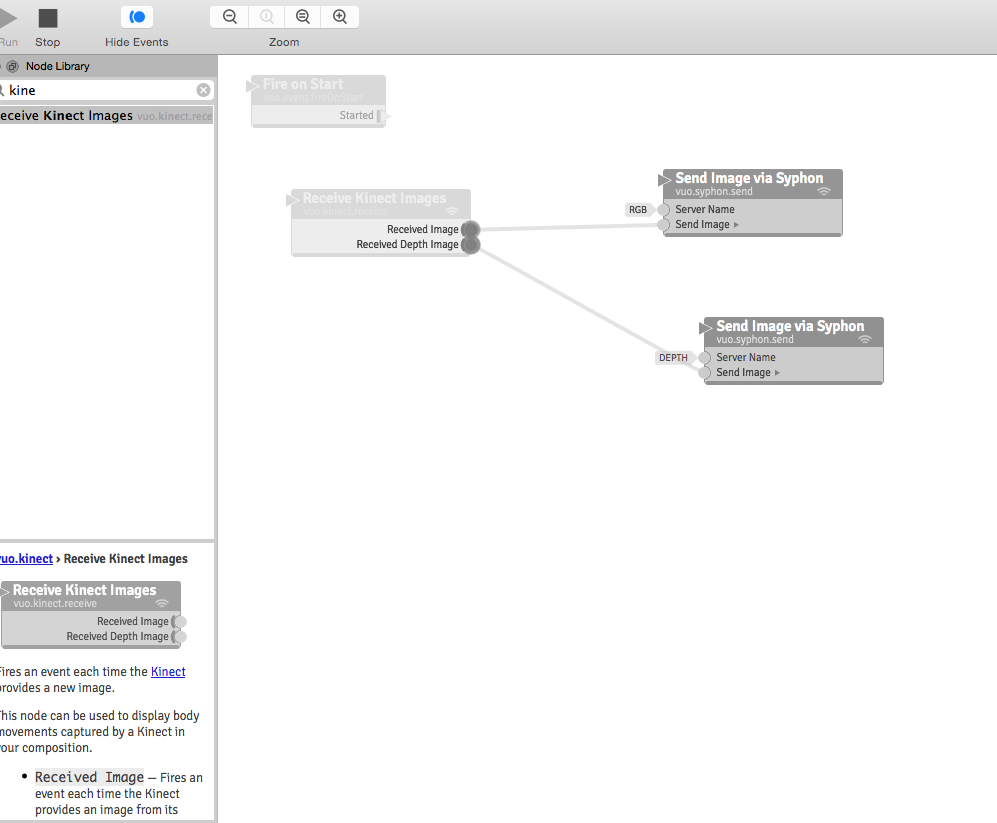

Vuo (Demo) Software connecting the Kinect through Syphon so that Isadora may intake the Kinect data through a Syphon actor:

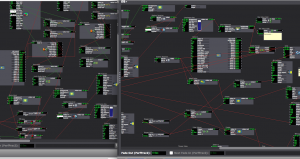

Partial shot of the Isadora patch:

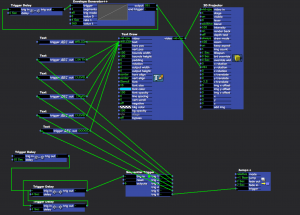

Close up of “blob” data or object dimensions coming through on the left, mediated through the Inside range actors as a way of triggering the Audio scene only when the blobs (participants) reach a certain size by joining their bodies:
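The range check described in this caption can be approximated in Python. This is a sketch under stated assumptions, not a reproduction of the Isadora actors: the names `inside_range` and `audio_scene_triggers`, the use of blob width as the measured dimension, and the 40 to 100 range are all invented for illustration.

```python
from typing import Iterable, List


def inside_range(value: float, low: float, high: float) -> bool:
    """True when the value sits within the inclusive range."""
    return low <= value <= high


def audio_scene_triggers(blob_widths: Iterable[float],
                         low: float = 40.0, high: float = 100.0) -> List[int]:
    """Return frame indices where the blob width enters the range.

    Separate participants read as small blobs; when they join bodies the
    blob widens. The trigger fires once on entry rather than on every
    frame spent inside the range. The range values are hypothetical.
    """
    triggers: List[int] = []
    was_inside = False
    for index, width in enumerate(blob_widths):
        now_inside = inside_range(width, low, high)
        if now_inside and not was_inside:
            triggers.append(index)
        was_inside = now_inside
    return triggers


# Two people apart (small blobs), touching, separating, then touching again.
print(audio_scene_triggers([10.0, 15.0, 45.0, 50.0, 20.0, 60.0]))
```

Tuning `low` and `high` with a test group is the kind of calibration the plans for the following iteration call for, since the right range depends on how large joined bodies actually appear to the sensor.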

Final Project Showing

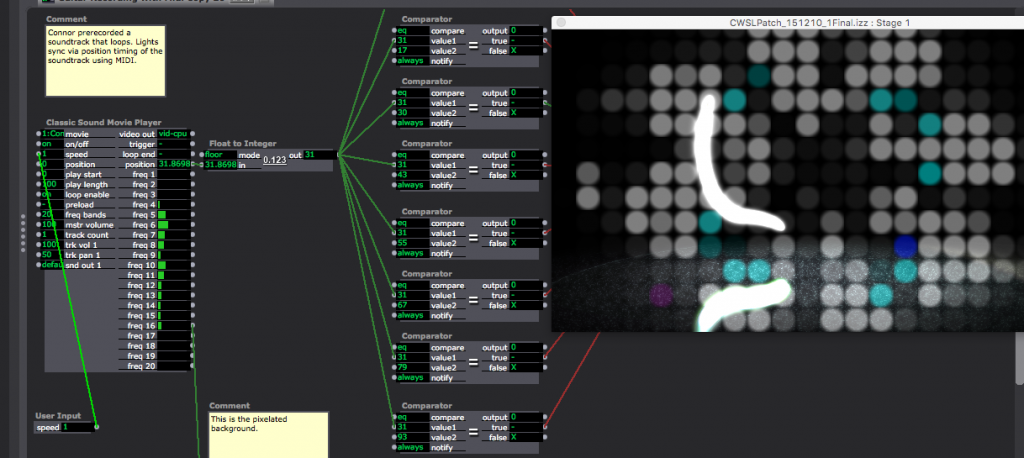

Posted: December 12, 2015 Filed under: Connor Wescoat, Final Project

I have done a lot of final presentations during the course of my college career, but never one quite like this. Although the theme of the event was “soundboard mishaps”, I was still relatively satisfied with the way everything turned out. The big takeaway from my presentation was that some people are intimidated when put on the spot. I truly believe that any one of the audience members could have come up with something creative if they had 10 minutes to themselves to just play around with my showing. While I did not foresee the result, I was satisfied that one of the 6 members actually got into the project, and I could visually see that he was having fun. In conclusion, I enjoyed the event and the class. I met a lot of great people and I look forward to seeing and working with any one of my classmates in the future. Thank you for a great class experience! Attached are screenshots of the patch. The top is the high/mid/low frequency analysis, while the bottom is the prerecorded guitar piece that is synced up to the lights and background.

![20171205_161939[1]](http://recluse.accad.ohio-state.edu/ems/wp-content/uploads/2017/12/20171205_1619391-e1512876401400-300x169.jpg)

![20171205_162905[1]](http://recluse.accad.ohio-state.edu/ems/wp-content/uploads/2017/12/20171205_1629051-300x169.jpg)

![20171205_170446[1]](http://recluse.accad.ohio-state.edu/ems/wp-content/uploads/2017/12/20171205_1704461-1-169x300.jpg)

![20171205_170102_HDR[2]](http://recluse.accad.ohio-state.edu/ems/wp-content/uploads/2017/12/20171205_170102_HDR2-169x300.jpg)